
2.160 Equation Sheet

Uploaded by mcx_123


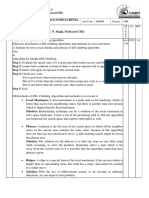

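Linear Discrete KF

Variables: state $x_t$, measurement $y_t$, process noise $w_t$ with covariance $Q_t$, measurement noise $v_t$ with covariance $R_t$:
$$x_t = A_{t-1} x_{t-1} + G_{t-1} w_{t-1}, \qquad y_t = H_t x_t + v_t$$

Initial Conditions:
$$\hat{x}_{0|0} = E[x_0], \qquad P_{0|0} = E\left[(x_0 - \hat{x}_{0|0})(x_0 - \hat{x}_{0|0})^T\right]$$

Kalman Gain:
$$K_t = P_{t|t-1} H_t^T \left(H_t P_{t|t-1} H_t^T + R_t\right)^{-1}$$

State Transition Propagation:
$$\hat{x}_{t|t-1} = A_{t-1} \hat{x}_{t-1|t-1}, \qquad P_{t|t-1} = A_{t-1} P_{t-1|t-1} A_{t-1}^T + G_{t-1} Q_{t-1} G_{t-1}^T$$

State Transition Update:
$$\hat{x}_{t|t} = \hat{x}_{t|t-1} + K_t \left(y_t - H_t \hat{x}_{t|t-1}\right)$$

Observation Error:
$$e_t = y_t - H_t \hat{x}_{t|t-1}$$

Covariance Update:
$$P_{t|t} = (I - K_t H_t) P_{t|t-1}$$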
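The Linear Discrete KF cycle (propagation, Kalman gain, observation error, state and covariance update) can be sketched for a scalar state as follows. This is a minimal illustration, not code from the sheet: the class name `ScalarKalmanFilter`, the scalar parameters `a`, `g`, `h`, `q`, `r`, and the measurement values are hypothetical, and a scalar state is assumed so that the matrix inverse in the gain reduces to a division.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalmanFilter:
    """Linear discrete KF for a scalar state (illustrative sketch).

    Assumed model: x_t = a*x_{t-1} + g*w_{t-1},  y_t = h*x_t + v_t,
    with Var(w) = q and Var(v) = r.
    """

    a: float
    g: float
    h: float
    q: float
    r: float
    x: float  # current state estimate (starts at the initial condition x_hat_0)
    p: float  # current error variance (starts at the initial condition P_0)

    def propagate(self) -> None:
        # State transition propagation: x_hat_{t|t-1} = a * x_hat_{t-1|t-1}
        self.x = self.a * self.x
        # Error covariance propagation: P_{t|t-1} = a P a + g q g
        self.p = self.a * self.p * self.a + self.g * self.q * self.g

    def update(self, y: float) -> float:
        # Kalman gain: K_t = P h / (h P h + r)
        k = self.p * self.h / (self.h * self.p * self.h + self.r)
        # Observation error (innovation): e_t = y_t - h * x_hat_{t|t-1}
        e = y - self.h * self.x
        # State update, then covariance update P_{t|t} = (1 - K h) P_{t|t-1}
        self.x += k * e
        self.p = (1.0 - k * self.h) * self.p
        return k


kf = ScalarKalmanFilter(a=1.0, g=1.0, h=1.0, q=0.01, r=0.25, x=0.0, p=1.0)
for y in [1.1, 0.9, 1.05, 0.98]:  # made-up measurements
    kf.propagate()
    kf.update(y)
```

With these made-up numbers the error variance `p` shrinks well below its initial value of 1.0 as measurements arrive, and the estimate settles near the level of the measurements. For vector states the same steps apply with matrix products and a matrix inverse in the gain.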

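Continuous KF

$$\dot{x} = A x + G w, \qquad y = H x + v$$
$$\dot{\hat{x}} = A \hat{x} + K \left(y - H \hat{x}\right), \qquad K = P H^T R^{-1}$$
$$\dot{P} = A P + P A^T + G Q G^T - P H^T R^{-1} H P \quad \text{(Riccati Differential Equation, RDE)}$$

Linearized KF / Extended KF

Non-linear model:
$$x_t = f(x_{t-1}) + w_{t-1}, \qquad y_t = h(x_t) + v_t$$

Linearized KF: $A_{t-1}$ and $H_t$ are the Jacobians of $f$ and $h$ evaluated along a nominal trajectory chosen in advance.

Extended KF: the Jacobians are evaluated at the latest estimates,
$$A_{t-1} = \left.\frac{\partial f}{\partial x}\right|_{\hat{x}_{t-1|t-1}}, \qquad H_t = \left.\frac{\partial h}{\partial x}\right|_{\hat{x}_{t|t-1}}$$
and the state estimate is propagated and updated through the non-linear functions themselves:
$$\hat{x}_{t|t-1} = f(\hat{x}_{t-1|t-1}), \qquad \hat{x}_{t|t} = \hat{x}_{t|t-1} + K_t \left(y_t - h(\hat{x}_{t|t-1})\right)$$
$K_t$ and $P$ follow the Linear Discrete KF equations with these $A_{t-1}$, $H_t$ and $G = I$.

Distribution: Gaussian for all KF variants. Non-linear: handled by the Linearized KF and the Extended KF through first-order linearization.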
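A single Extended KF cycle can be sketched in the same scalar setting. The model functions `f` and `h` (and their derivatives `df_dx`, `dh_dx`) are hypothetical choices made only so that the Jacobians are easy to write by hand; the point illustrated is that the Jacobians are re-evaluated at the latest estimate, while the state itself is propagated and compared against the measurement through the non-linear `f` and `h`.

```python
import math
from typing import Tuple


# Hypothetical scalar model, chosen only for illustration:
#   x_t = f(x_{t-1}) + w_{t-1},   y_t = h(x_t) + v_t
def f(x: float) -> float:
    return 0.9 * x + 0.2 * math.sin(x)


def df_dx(x: float) -> float:
    return 0.9 + 0.2 * math.cos(x)


def h(x: float) -> float:
    return x + 0.1 * x**3


def dh_dx(x: float) -> float:
    return 1.0 + 0.3 * x**2


def ekf_step(x: float, p: float, y: float, q: float, r: float) -> Tuple[float, float]:
    """One EKF cycle for the scalar model above; returns (x_hat_{t|t}, P_{t|t})."""
    a = df_dx(x)            # Jacobian of f at x_hat_{t-1|t-1}
    x_pred = f(x)           # propagate the estimate with the non-linear f
    p_pred = a * p * a + q  # covariance propagation with the linearized A
    hh = dh_dx(x_pred)      # Jacobian of h at x_hat_{t|t-1}
    k = p_pred * hh / (hh * p_pred * hh + r)
    x_new = x_pred + k * (y - h(x_pred))  # residual uses the non-linear h
    p_new = (1.0 - k * hh) * p_pred
    return x_new, p_new


x_true, x_est, p = 1.0, 0.0, 1.0
for _ in range(20):
    x_true = f(x_true)  # noise-free "truth", for illustration only
    x_est, p = ekf_step(x_est, p, h(x_true), q=0.01, r=0.01)
```

The loop feeds noise-free measurements of a simulated state, so the estimate should converge toward `x_true` despite starting at 0. A Linearized KF would instead evaluate `df_dx` and `dh_dx` along a nominal trajectory fixed in advance.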

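Bayes Filter

Belief: $bel(x_t) = p(x_t \mid y_{1:t})$

Chapman-Kolmogorov Equation (prediction):
$$\overline{bel}(x_t) = p(x_t \mid y_{1:t-1}) = \int p(x_t \mid x_{t-1})\, bel(x_{t-1})\, dx_{t-1}$$

Measurement update (Bayes' rule, $\eta$ a normalizing constant):
$$bel(x_t) = \eta\, p(y_t \mid x_t)\, \overline{bel}(x_t)$$

Gaussian KF

$\overline{bel}(x_t)$ is Gaussian with mean $\hat{x}_{t|t-1}$ and covariance $P_{t|t-1}$; $bel(x_t)$ is Gaussian with mean $\hat{x}_{t|t}$ and covariance $P_{t|t}$.

Error Covariance Propagation:
$$P_{t|t-1} = A_{t-1} P_{t-1|t-1} A_{t-1}^T + G_{t-1} Q_{t-1} G_{t-1}^T$$

Covariance update (Joseph form):
$$P_{t|t} = (I - K_t H_t) P_{t|t-1} (I - K_t H_t)^T + K_t R_t K_t^T$$

Particle Filters

The belief is represented with M particles/samples $\mathcal{X}_t = \{x_t^{[1]}, \dots, x_t^{[M]}\}$.

Initial Conditions: draw M samples from $p(x_0)$ to get $\mathcal{X}_0 = \{x_0^{[m]}\}_{m=1}^{M}$.

Generate $x_t^{[m]} \sim p(x_t \mid x_{t-1}^{[m]})$ from each $x_{t-1}^{[m]} \in \mathcal{X}_{t-1}$ to get $\bar{\mathcal{X}}_t$, which represents $\overline{bel}(x_t)$.

Measure $w_t^{[m]} = p(y_t \mid x_t^{[m]})$ to get the weighted set $\{\langle x_t^{[m]}, w_t^{[m]} \rangle\}$, which represents $bel(x_t)$.

Importance Sampling: samples drawn from a proposal density $\rho(x)$ represent a target density $\pi(x)$ when each is weighted by $w^{[m]} = \pi(x^{[m]}) / \rho(x^{[m]})$. Here the proposal is $\overline{bel}(x_t)$ and the target is $bel(x_t)$, so $w_t^{[m]} \propto p(y_t \mid x_t^{[m]})$.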
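The Generate and Measure steps of a particle filter can be sketched in plain Python. This assumes a hypothetical scalar random-walk model with Gaussian process and measurement noise (`sigma_w`, `sigma_v`); the function names `gaussian_pdf` and `generate_and_measure` are illustrative. The weights are normalized so that the weighted particles approximate $bel(x_t)$.

```python
import math
import random
from typing import List, Tuple


def gaussian_pdf(z: float, mean: float, std: float) -> float:
    return math.exp(-0.5 * ((z - mean) / std) ** 2) / (std * math.sqrt(2.0 * math.pi))


def generate_and_measure(
    particles: List[float], y: float, sigma_w: float, sigma_v: float, rng: random.Random
) -> Tuple[List[float], List[float]]:
    """Generate x_t^[m] ~ p(x_t | x_{t-1}^[m]), then measure w_t^[m] = p(y_t | x_t^[m]).

    Assumes a hypothetical random-walk model x_t = x_{t-1} + w, y_t = x_t + v
    with w ~ N(0, sigma_w^2) and v ~ N(0, sigma_v^2).
    """
    predicted = [x + rng.gauss(0.0, sigma_w) for x in particles]
    weights = [gaussian_pdf(y, x, sigma_v) for x in predicted]
    total = sum(weights)
    return predicted, [w / total for w in weights]  # normalized importance weights


rng = random.Random(0)
M = 1000
prior = [rng.gauss(0.0, 1.0) for _ in range(M)]  # samples of bel(x_{t-1}) = N(0, 1)
particles, weights = generate_and_measure(prior, y=2.0, sigma_w=0.5, sigma_v=0.3, rng=rng)
estimate = sum(w * x for w, x in zip(weights, particles))  # weighted posterior mean
```

Because this toy model is linear and Gaussian, the exact answer is available as a sanity check: the predicted variance is 1 + 0.5^2 = 1.25, so the posterior mean is 2.0 * 1.25 / (1.25 + 0.09), about 1.87, and the weighted particle mean should land close to it.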

Cost Function

$$J = E\left[(x_t - \hat{x}_{t|t})^T (x_t - \hat{x}_{t|t})\right]$$

Advantages

- Assuming a linear filter (and Gaussian noise), the Kalman filter is the optimal minimum-variance estimator among all linear (and non-linear) filters.
- Continuous KF: if the pair $(A, H)$ is observable, the Riccati Differential Equation has a positive-definite, symmetric solution for an arbitrary initial condition $P(0)$. A numerical solver is used to obtain the solution of the RDE.
- Gaussian KF: a special case of the Bayes filter that assumes Gaussian distributions but not a linear filter form; it is optimal among linear and non-linear filters.
- Particle filters: handle non-Gaussian, arbitrary distributions. Importance sampling can be replaced with resampling: sample an integer $m$, M times, with probability proportional to $w_t^{[m]}$, to get $\mathcal{X}_t$ directly.

Caveats

- Poor observability, numerical instability, blind spot.
- Extended KF: accurate only up to first order, because $K_t$ and $P$ are updated from the linearized $A_{t-1}$ and $H_t$, which are themselves only first-order accurate.
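Resampling, listed above as a replacement for importance sampling in particle filters, can be sketched as multinomial resampling: draw an index m, M times, with probability proportional to its weight. The function name `resample` is hypothetical; `random.Random.choices` is the Python standard-library weighted sampler it relies on.

```python
import random
from typing import List, Sequence


def resample(particles: Sequence[float], weights: Sequence[float], rng: random.Random) -> List[float]:
    """Multinomial resampling: draw an index m, M times, with probability
    proportional to weights[m]; the returned set is equally weighted."""
    num = len(particles)
    indices = rng.choices(range(num), weights=weights, k=num)
    return [particles[m] for m in indices]


rng = random.Random(1)
new_set = resample([0.0, 1.0, 2.0, 3.0], [0.1, 0.2, 0.6, 0.1], rng)
```

Multinomial resampling is the simplest variant; low-variance (systematic) resampling, which uses a single random offset for all M draws, is a common alternative with less sampling noise.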
