Uploaded by Mohammad Zaheer


[root@host01 oracle]# cd /u01/app/11.2.0/grid/bin/
[root@host01 bin]# ld
ld: no input files
[root@host01 bin]# ls
acfsdriverstate cluvfy dbstart evmpost hsotsO netca oradaemonagent sclsspawn acfsload cluvfyrac.sh dbv evmpost.bin imp netca_deinst.sh oradism scrctl acfsregistrymount commonenv dbvO evmshow impdp netmgr oradnssd scriptagent acfsrepl_apply commonenv.template deploync evmshow.bin impdpO nfsPatchPlugin.pm oradnssd.bin scriptagent.bin acfsrepl_apply.bin CompEMagent.pm dg4pwd evmsort impO nid oraenv SecureAgentCmds.pm acfsreplcrs CompEMcentral.pm dg4pwdO evmsort.bin jssu nidO orajaxb SecureOMSCmds.pm acfsreplcrs.pl CompEMcore.pm dgmgrl evmwatch kfed oc4jctl oranetmonitor SecureUtil.pm acfsrepl_initializer coraenv dgmgrlO evmwatch.bin kfedO oc4jctl.pl oranetmonitor.bin setasmgid acfsrepl_monitor crfsetenv diagcollection.pl evt.sh kfod oclskd orapki setasmgid0 acfsrepl_preapply crsctl diagcollection.sh exp kfodO oclskd.bin orapwd setasmgidwrap acfsrepl_transport crsctl.bin diagsetup expdp kgmgr oclsomon orapwdO skgxpinfo acfsroot crsd Directory.pm expdpO kgmgrO oclsvmon orarootagent skgxpinfoO acfssinglefsmount crsd.bin diskmon expO LaunchEMagent.pm oclumon orarootagent.bin sqlldr adapters crsdiag.pl diskmon.bin extjob lbuilder oclumon.bin oraxml sqlldrO adrci crs_getperm dropjava extjobo lcsscan oclumon.pl oraxsl sqlplus adrciO crs_getperm.bin dsml2ldif extjobO ldapadd ocrcheck orion srvconfig AgentLifeCycle.pm crs_profile dumpsga extjoboO ldapaddmt ocrcheck.bin orionO srvctl AgentMisc.pm crs_profile.bin dumpsga0 extproc ldapbind ocrconfig osdbagrp StartAgent.pl AgentStatus.pm crs_register echodo extprocO ldapcompare ocrconfig.bin osdbagrp0 statusnc AgentSubAgent.pm crs_register.bin EMAgentPatch.pm extusrupgrade ldapdelete ocrdump osh symfind agtctl crs_relocate EMAgent.pm fmputl ldapmoddn ocrdump.bin osysmond sysresv agtctlO crs_relocate.bin emca fmputlhp ldapmodify ocrpatch osysmond.bin sysresv0 amdu crs_setperm EmCommonCmdDriver.pm genagtsh ldapmodifymt ocssd owm targetdeploy.pl amduO
crs_setperm.bin EMconnectorCmds.pm genclntsh ldapsearch ocssd.bin patchAgtStPlugin.pm tkprof appagent crs_start emcrsp genclntst ldifmigrator octssd Path.pm tkprofO appagent.bin crs_start.bin emcrsp.bin genezi linkshlib octssd.bin platform_common tnslsnr appvipcfg crs_stat emctl geneziO lmsgen odisrvreg plshprof tnslsnr0 appvipcfg.pl crs_stat.bin EmctlCommon.pm genksms loadjava odnsd plshprofO tnsping
aqxmlctl crs_stop emctl.pl gennfgt loadpsp odnsd.bin qosctl tnsping0 aqxmlctl.pl crs_stop.bin emctl.template gennttab loadpspO oerr racgeut trcasst asmca crstmpl.scr EMDeploy.pm genoccish lsdb ohasd racgevtf trcldr asmcmd crs_unregister emdfail.command genorasdksh lsdb.bin ohasd.bin racgmain trcroute asmcmdcore crs_unregister.bin EMDiag.pm gensyslib lsnodes oidca racgvip trcroute0 asmproxy crswrapexece.pl EmKeyCmds.pm gipcd lsnodes.bin oidprovtool racgwrap trcsess bndlchk csscan EMomsCmds.pm gipcd.bin lsnrctl oifcfg racgwrap.sbs tstshm cemutlo cssdagent EMSAConsoleCommon.pm gnsd lsnrctl0 oifcfg.bin rawutl tstshmO cemutlo.bin cssdagent.bin emutil gnsd.bin lxchknlb ojvmjava rawutl0 uidrvci cemutls cssdmonitor emutil.bat.template gpnpd lxegen ojvmtc rconfig uidrvciO cemutls.bin cssdmonitor.bin emwd.pl gpnpd.bin lxinst ologdbg rdtool umu clscfg cssvfupgd eusm gpnptool mapsga ologdbg.pl RegisterTType.pm unzip clscfg.bin cssvfupgd.bin evmd gpnptool.bin mapsga0 ologgerd relink usrvip clsecho cursize evmd.bin grdcscan maxmem olsnodes renamedg vipca clsecho.bin cursizeO evminfo gsd maxmemO olsnodes.bin renamedg0 wrap clsfmt cvures evminfo.bin gsdctl mdnsd onsctl rman wrapO clsfmt.bin dbfs_client evmlogger gsd.sh mdnsd.bin oprocd rmanO wrc clsid dbfsize evmlogger.bin hsalloci mkpatch oraagent sAgentUtils.pm wrcO clsid.bin dbfsizeO evmmkbin hsallociO mkpatchO oraagent.bin sbttest xml clssproxy dbgeu_run_action.pl evmmkbin.bin hsdepxa mkstore orabase sbttestO xmlwf clssproxy.bin dbhome evmmklib hsdepxaO ncomp oracle schema zip cluutil dbshut evmmklib.bin hsots ndfnceca oracleO schemasync
[root@host01 bin]# ./crsctl status res -t
--------------------------------------------------------------------------------
NAME           TARGET  STATE        SERVER                   STATE_DETAILS
--------------------------------------------------------------------------------
Local Resources
--------------------------------------------------------------------------------
ora.DATA.dg
               ONLINE  ONLINE       host01
               ONLINE  ONLINE       host02
ora.LISTENER.lsnr
               ONLINE  ONLINE       host01
               ONLINE  ONLINE       host02
ora.asm
               ONLINE  ONLINE       host01                   Started
               ONLINE  ONLINE       host02                   Started
ora.gsd
               OFFLINE OFFLINE      host01
               OFFLINE OFFLINE      host02
ora.net1.network
               ONLINE  ONLINE       host01
               ONLINE  ONLINE       host02
ora.ons
               ONLINE  ONLINE       host01
               ONLINE  ONLINE       host02
ora.registry.acfs
               ONLINE  ONLINE       host01
               ONLINE  ONLINE       host02
--------------------------------------------------------------------------------
Cluster Resources
--------------------------------------------------------------------------------
ora.LISTENER_SCAN1.lsnr
      1        ONLINE  ONLINE       host02
ora.LISTENER_SCAN2.lsnr
      1        ONLINE  ONLINE       host01
ora.LISTENER_SCAN3.lsnr
      1        ONLINE  ONLINE       host01
ora.cvu
      1        ONLINE  ONLINE       host01
ora.host01.vip
      1        ONLINE  ONLINE       host01
ora.host02.vip
      1        ONLINE  ONLINE       host02
ora.oc4j
      1        ONLINE  ONLINE       host01
ora.scan1.vip
      1        ONLINE  ONLINE       host02
ora.scan2.vip
      1        ONLINE  ONLINE       host01
ora.scan3.vip
      1        ONLINE  ONLINE       host01
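On a larger cluster, a table like this is easier to check with a filter than by eye. The following is a minimal sketch that assumes the 11.2 layout shown above. A line starting with "ora." names a resource. The indented lines under it hold TARGET STATE SERVER, and for cluster resources they begin with an instance number. crs_mismatches is a hypothetical helper, not an Oracle tool. It prints every resource and server whose STATE differs from its TARGET.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not an Oracle tool): read `crsctl status res -t`
# output on stdin and print "<resource> <server> <target> <state>" for
# every state line whose STATE differs from its TARGET.
# Assumes the 11.2 layout: a line starting with "ora." names the resource;
# the indented lines after it hold TARGET STATE SERVER [STATE_DETAILS],
# optionally preceded by an instance number (cluster resources).
crs_mismatches() {
  awk '
    /^ora\./ { res = $1; next }              # start of a new resource block
    res == "" { next }                        # still in the table header
    {
      i = ($1 ~ /^[0-9]+$/) ? 2 : 1           # skip the instance-number column
      target = $i; state = $(i + 1); server = $(i + 2)
      if (target != "ONLINE" && target != "OFFLINE") next  # dashes, titles
      if (server == "") server = "-"          # OFFLINE entries may lack a server
      if (target != state) print res, server, target, state
    }
  '
}
```

Usage: ./crsctl status res -t | crs_mismatches prints nothing for the output above. ora.gsd is OFFLINE with target OFFLINE, so it counts as matching.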

[root@host01 bin]# ./srvctl status asm
ASM is running on host01,host02
[root@host01 bin]# ./srvctl status lsnr
Listener LISTENER is enabled
Listener LISTENER is running on node(s): host01,host02
[root@host01 bin]# ./srvctl status scan
SCAN VIP scan1 is enabled
SCAN VIP scan1 is running on node host02
SCAN VIP scan2 is enabled
SCAN VIP scan2 is running on node host01
SCAN VIP scan3 is enabled
SCAN VIP scan3 is running on node host01
[root@host01 bin]# ./srvctl status scan_listener
SCAN Listener LISTENER_SCAN1 is enabled
SCAN listener LISTENER_SCAN1 is running on node host02
SCAN Listener LISTENER_SCAN2 is enabled
SCAN listener LISTENER_SCAN2 is running on node host01
SCAN Listener LISTENER_SCAN3 is enabled
SCAN listener LISTENER_SCAN3 is running on node host01
[root@host01 bin]# ./crsctl check cluster
CRS-4537: Cluster Ready Services is online
CRS-4529: Cluster Synchronization Services is online
CRS-4533: Event Manager is online
[root@host01 bin]# ./srvctl status scan
SCAN VIP scan1 is enabled
SCAN VIP scan1 is running on node host02
SCAN VIP scan2 is enabled
SCAN VIP scan2 is running on node host01
SCAN VIP scan3 is enabled
SCAN VIP scan3 is running on node host01
[root@host01 bin]# ./srvctl config scan
SCAN name: cluster01-scan, Network: 1/192.0.2.0/255.255.255.0/eth0
SCAN VIP name: scan1, IP: /cluster01-scan/192.0.2.113
SCAN VIP name: scan2, IP: /cluster01-scan/192.0.2.111
SCAN VIP name: scan3, IP: /cluster01-scan/192.0.2.112
[root@host01 bin]# nslookup cluster01-scan
Server:         192.0.2.1
Address:        192.0.2.1#53

Name:    cluster01-scan.example.com
Address: 192.0.2.112
Name:    cluster01-scan.example.com
Address: 192.0.2.113
Name:    cluster01-scan.example.com
Address: 192.0.2.111

[root@host01 bin]# ./srvctl config nodeapps -a
Network exists: 1/192.0.2.0/255.255.255.0/eth0, type static
VIP exists: /host01-vip/192.0.2.201/192.0.2.0/255.255.255.0/eth0, hosting node host01
VIP exists: /host02-vip/192.0.2.202/192.0.2.0/255.255.255.0/eth0, hosting node host02
[root@host01 bin]# ./oifcfg getif
eth0  192.0.2.0    global  public
eth1  192.168.1.0  global  cluster_interconnect
eth2  192.168.1.0  global  cluster_interconnect
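The SCAN name must resolve in DNS to exactly the SCAN VIP addresses that srvctl config scan reports, here 192.0.2.111 to 192.0.2.113. The following is a minimal sketch of that comparison. It assumes the 11.2.0.3 output formats shown above, and all function names are hypothetical.

```shell
#!/usr/bin/env bash
# Print the SCAN VIP addresses from `srvctl config scan` output (stdin).
# Assumes lines like "SCAN VIP name: scan1, IP: /cluster01-scan/192.0.2.113".
scan_vips_from_config() {
  grep -o 'IP: /[^ ]*' | awk -F/ '{ print $NF }' | LC_ALL=C sort
}

# Print the answer addresses from `nslookup` output (stdin), skipping the
# "Address: <dns-server>#53" line that identifies the DNS server itself.
scan_ips_from_nslookup() {
  awk '$1 == "Address:" && $2 !~ /#/ { print $2 }' | LC_ALL=C sort
}

# Hypothetical check: succeed only if DNS returns exactly the SCAN VIPs.
#   $1 = srvctl config scan output, $2 = nslookup <scan-name> output
check_scan_dns() {
  local vips dns
  vips=$(printf '%s\n' "$1" | scan_vips_from_config)
  dns=$(printf '%s\n' "$2" | scan_ips_from_nslookup)
  [ -n "$vips" ] && [ "$vips" = "$dns" ]
}
```

Usage: check_scan_dns "$(./srvctl config scan)" "$(nslookup cluster01-scan)" && echo "SCAN DNS OK".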

[root@EDB2R1P6 ~]# cat /etc/named.conf
//
// Enterprise Linux BIND Configuration Tool
//
// Default initial "Caching Only" name server configuration
//
include "/etc/rndc.key";
controls {
        inet 127.0.0.1 allow { localhost; } keys { "rndckey"; };
};
options {
        directory "/var/named";
        pid-file "/var/named/named.pid";
        dump-file "/var/named/data/cache_dump.db";
        statistics-file "/var/named/data/named_stats.txt";
        listen-on { 127.0.0.1; };
        listen-on { 192.0.2.1; };
        recursion no;
[root@EDB2R1P6 ~]# cd /var/named
[root@EDB2R1P6 named]# ls
bind.log  data  db.127.0.0  db.cache  named.pid  slaves
[root@EDB2R1P6 named]# cd data
[root@EDB2R1P6 data]# ls
master-example.com  reverse-192.0.2
[root@EDB2R1P6 data]# vi master-example.com
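The session does not show the contents of master-example.com. Assume it is an ordinary BIND zone file for example.com. The nslookup result above then implies one name, cluster01-scan, with three A records, one per SCAN VIP. The following hypothetical helper only prints records in that shape. The tab-separated "name IN A address" layout is standard BIND syntax and is not copied from the original file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one BIND A record per SCAN VIP address.
#   $1 = SCAN host label (relative to the zone origin), remaining args = IPs
scan_a_records() {
  local name=$1 ip
  shift
  for ip in "$@"; do
    printf '%s\tIN\tA\t%s\n' "$name" "$ip"
  done
}
```

Usage: scan_a_records cluster01-scan 192.0.2.111 192.0.2.112 192.0.2.113 prints three lines you could compare with the zone file.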

[root@host01 bin]# ./crsctl stat res -init -t
--------------------------------------------------------------------------------
NAME           TARGET  STATE        SERVER                   STATE_DETAILS
--------------------------------------------------------------------------------
Cluster Resources
--------------------------------------------------------------------------------
ora.asm
      1        ONLINE  ONLINE       host01                   Started
ora.cluster_interconnect.haip
      1        ONLINE  ONLINE       host01
ora.crf
      1        ONLINE  ONLINE       host01
ora.crsd
      1        ONLINE  ONLINE       host01
ora.cssd
      1        ONLINE  ONLINE       host01
ora.cssdmonitor
      1        ONLINE  ONLINE       host01
ora.ctssd
      1        ONLINE  ONLINE       host01                   OBSERVER
ora.diskmon
      1        OFFLINE OFFLINE
ora.drivers.acfs
      1        ONLINE  ONLINE       host01
ora.evmd
      1        ONLINE  ONLINE       host01
ora.gipcd
      1        ONLINE  ONLINE       host01
ora.gpnpd
      1        ONLINE  ONLINE       host01
ora.mdnsd
      1        ONLINE  ONLINE       host01

gpnpd
*********
1) gpnpd (Grid Plug and Play daemon) runs on every node and maintains the GPnP profile. The profile records the cluster network configuration and the ASM settings (discovery string and SPFILE location), as shown below.
2) It makes adding and deleting nodes easier.

[root@host01 bin]# ./gpnptool get
Warning: some command line parameters were defaulted. Resulting command line:
         ./gpnptool.bin get -o
<?xml version="1.0" encoding="UTF-8"?><gpnp:GPnP-Profile Version="1.0" xmlns="http://www.grid-pnp.org/2005/11/gpnp-profile" xmlns:gpnp="http://www.grid-pnp.org/2005/11/gpnp-profile" xmlns:orcl="http://www.oracle.com/gpnp/2005/11/gpnp-profile" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://www.grid-pnp.org/2005/11/gpnp-profile gpnp-profile.xsd" ProfileSequence="6" ClusterUId="a161b7f2f466ff74bf99af778117533f" ClusterName="cluster01" PALocation="">
<gpnp:Network-Profile><gpnp:HostNetwork id="gen" HostName="*">
<gpnp:Network id="net1" IP="192.0.2.0" Adapter="eth0" Use="public"/>
<gpnp:Network id="net2" IP="192.168.1.0" Adapter="eth1" Use="cluster_interconnect"/>
<gpnp:Network id="net3" IP="192.168.1.0" Adapter="eth2" Use="cluster_interconnect"/>
</gpnp:HostNetwork></gpnp:Network-Profile>
<orcl:CSS-Profile id="css" DiscoveryString="+asm" LeaseDuration="400"/>
<orcl:ASM-Profile id="asm" DiscoveryString="" SPFile="+DATA/cluster01/asmparameterfile/registry.253.807124429"/>
<ds:Signature xmlns:ds="http://www.w3.org/2000/09/xmldsig#"><ds:SignedInfo><ds:CanonicalizationMethod Algorithm="http://www.w3.org/2001/10/xml-exc-c14n#"/><ds:SignatureMethod Algorithm="http://www.w3.org/2000/09/xmldsig#rsa-sha1"/><ds:Reference URI=""><ds:Transforms><ds:Transform Algorithm="http://www.w3.org/2000/09/xmldsig#enveloped-signature"/><ds:Transform Algorithm="http://www.w3.org/2001/10/xml-exc-c14n#"><InclusiveNamespaces xmlns="http://www.w3.org/2001/10/xml-exc-c14n#" PrefixList="gpnp orcl xsi"/></ds:Transform></ds:Transforms><ds:DigestMethod Algorithm="http://www.w3.org/2000/09/xmldsig#sha1"/><ds:DigestValue>CiSWNSk0nZrt2qgkK+gttVxAJGs=</ds:DigestValue></ds:Reference></ds:SignedInfo><ds:SignatureValue>JIXdW1L6sJuV2y+zTAOLGZLxBPD2dQW8YMOHnwJy3hZL37egCPb/fKn5LUODHOnu8MdxSzXiUc/auGBdSxMbTAWGsKGbH6CE3GYxySAoyn2WLXQ8xVwV5B+O2zNGLgXosTKA3WkNGLQysSmNegOxJkM9MjrzKHodgZCCtGSoR3w=</ds:SignatureValue></ds:Signature></gpnp:GPnP-Profile>
Success.
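The network section of the profile should agree with oifcfg getif, which reports eth0 as public and eth1/eth2 as cluster_interconnect. The following is a minimal sketch that extracts the adapter, subnet and usage from the profile. It assumes the attribute order id, IP, Adapter, Use seen in this profile. gpnp_networks is a hypothetical helper, not part of gpnptool.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read a GPnP profile (XML) on stdin and print
# "<adapter> <subnet> <use>" for each <gpnp:Network .../> element.
# Assumes the attribute order id, IP, Adapter, Use shown in this profile.
gpnp_networks() {
  grep -o '<gpnp:Network id="[^"]*" IP="[^"]*" Adapter="[^"]*" Use="[^"]*"' |
    sed 's/.* IP="\([^"]*\)" Adapter="\([^"]*\)" Use="\([^"]*\)"$/\2 \1 \3/'
}
```

Usage: ./gpnptool get | gpnp_networks should print the same three interfaces as ./oifcfg getif, without the "global" column.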

[root@host01 bin]# pwd
/u01/app/11.2.0/grid/bin

[root@host01 bin]# ./crsctl query css votedisk
##  STATE    File Universal Id                File Name Disk group
--  -----    -----------------                --------- ---------
 1. ONLINE   73e24900d09b4fdcbf95c3c654b41544 (ORCL:ASMDISK01) [DATA]
 2. ONLINE   660f91f261a44f26bf530986f64615b0 (ORCL:ASMDISK02) [DATA]
 3. ONLINE   1f5238cfd81f4fc0bf78f3cbf7751e27 (ORCL:ASMDISK03) [DATA]
Located 3 voting disk(s).
[root@host01 bin]# ./ocrcheck
Status of Oracle Cluster Registry is as follows :
         Version                  :          3
         Total space (kbytes)     :     262120
         Used space (kbytes)      :       2888
         Available space (kbytes) :     259232
         ID                       : 1034439597
         Device/File Name         :      +DATA
                                    Device/File integrity check succeeded
                                    Device/File not configured
                                    Device/File not configured
                                    Device/File not configured
                                    Device/File not configured
         Cluster registry integrity check succeeded
         Logical corruption check succeeded
[root@host01 bin]# ./crsctl get css disktimeout
CRS-4678: Successful get disktimeout 200 for Cluster Synchronization Services.
[root@host01 bin]# ./crsctl get css misscount
CRS-4678: Successful get misscount 30 for Cluster Synchronization Services.

MISSCOUNT
**********
misscount is the CSS network heartbeat timeout, 30 seconds here. If a node misses network heartbeats over the interconnect for longer than misscount, CSS evicts it from the cluster. disktimeout (200 seconds here) is the corresponding limit for voting disk I/O.
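A node must be able to access more than half of the voting files at all times, which means 2 of the 3 listed above. The following is a minimal sketch that checks this from crsctl query css votedisk output. votedisk_quorum is a hypothetical name. The parsing assumes the numbered "N. STATE ..." lines shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `crsctl query css votedisk` output on stdin,
# print how many voting files are ONLINE and the majority required, and
# exit 0 only if the ONLINE count reaches that majority.
votedisk_quorum() {
  awk '
    $1 ~ /^[0-9]+\.$/ { total++; if ($2 == "ONLINE") online++ }  # "1. ONLINE ..."
    END {
      need = int(total / 2) + 1                 # strict majority of all files
      printf "%d of %d voting files online, majority needed: %d\n", online, total, need
      if (total > 0 && online >= need) exit 0
      exit 1
    }
  '
}
```

Usage: ./crsctl query css votedisk | votedisk_quorum would report "3 of 3 voting files online, majority needed: 2" for the output above.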

[root@host01 bin]# ./olsnodes -n
host01  1
host02  2
host03  3

Locating the OCR file
*********************

[root@host02 bin]# pwd
/u01/app/11.2.0/grid/bin
[root@host02 bin]# ./ocrconfig -showbackup

host01     2013/02/12 13:32:05     /u01/app/11.2.0/grid/cdata/cluster01/backup00.ocr
host01     2013/02/12 09:32:03     /u01/app/11.2.0/grid/cdata/cluster01/backup01.ocr
host01     2013/02/12 05:32:01     /u01/app/11.2.0/grid/cdata/cluster01/backup02.ocr
host01     2013/02/11 21:31:58     /u01/app/11.2.0/grid/cdata/cluster01/day.ocr
host01     2013/02/11 21:31:58     /u01/app/11.2.0/grid/cdata/cluster01/week.ocr
PROT-25: Manual backups for the Oracle Cluster Registry are not available

To restore one of these backups: ./ocrconfig -restore <path>, then verify the OCR with ocrcheck.

[root@host02 bin]# ./ocrcheck
Status of Oracle Cluster Registry is as follows :
         Version                  :          3
         Total space (kbytes)     :     262120
         Used space (kbytes)      :       2888
         Available space (kbytes) :     259232
         ID                       : 1034439597
         Device/File Name         :      +DATA
                                    Device/File integrity check succeeded
                                    Device/File not configured
                                    Device/File not configured
                                    Device/File not configured
                                    Device/File not configured
         Cluster registry integrity check succeeded
         Logical corruption check succeeded
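The automatic backups above are four hours apart (backup00 to backup02), with separate daily and weekly copies. To pick the newest one for ocrconfig -restore, the following minimal sketch sorts the ocrconfig -showbackup lines by timestamp. latest_ocr_backup is hypothetical. It assumes the "node date time path" layout shown and paths without spaces.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ocrconfig -showbackup` output on stdin and
# print the path of the most recent backup.
# Assumes lines of the form: <node> YYYY/MM/DD HH:MM:SS <path>
latest_ocr_backup() {
  awk '$2 ~ /^[0-9][0-9][0-9][0-9]\/[0-9][0-9]\/[0-9][0-9]$/ { print $2, $3, $4 }' |
    LC_ALL=C sort |      # fixed-width timestamps sort chronologically
    tail -n 1 |
    awk '{ print $3 }'
}
```

Usage: ./ocrconfig -showbackup | latest_ocr_backup would print /u01/app/11.2.0/grid/cdata/cluster01/backup00.ocr for the output above.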

Take a manual OCR backup: ./ocrconfig -manualbackup
Change the OCR backup location: ./ocrconfig -backuploc <location>

[root@host01 bin]# ./ocrconfig -manualbackup

host01     2013/02/12 15:44:50     /u01/app/11.2.0/grid/cdata/cluster01/backup_20130212_154450.ocr

Cluster health check
*********************
[grid@host01 bin]$ ./cluvfy comp crs -n all -verbose

Verifying CRS integrity

Checking CRS integrity...

Clusterware version consistency passed
The Oracle Clusterware is healthy on node "host03"
The Oracle Clusterware is healthy on node "host02"
The Oracle Clusterware is healthy on node "host01"

CRS integrity check passed

Verification of CRS integrity was successful.

ASM instance
******
SQL> select instance_name from v$instance;

INSTANCE_NAME
----------------
+ASM1

SQL> select paddr,name from v$bgprocess where name like 'RBAL';

PADDR    NAME
-------- -----
30F5D2BC RBAL

DB instance
***
SQL> select instance_name from v$instance;

INSTANCE_NAME
----------------
orcl1

SQL> select paddr,name from v$bgprocess where name like 'ASMB';

PADDR    NAME
-------- -----
48BE1698 ASMB

SQL> desc v$asm_client;
 Name                      Type
 ------------------------- ------------
 GROUP_NUMBER              NUMBER
 INSTANCE_NAME             VARCHAR2(64)
 DB_NAME                   VARCHAR2(8)
 STATUS                    VARCHAR2(12)
 SOFTWARE_VERSION          VARCHAR2(60)
 COMPATIBLE_VERSION        VARCHAR2(60)

SQL> select INSTANCE_NAME,GROUP_NUMBER, STATUS from v$asm_client;

INSTANCE_NAME    GROUP_NUMBER STATUS
---------------- ------------ ------------
+ASM1                       1 CONNECTED
orcl1                       1 CONNECTED
orcl1                       2 CONNECTED

SQL> select paddr,name from v$bgprocess where name like '%ARB%';

PADDR    NAME
-------- -----
00       ARB0
00       ARB1
00       ARB2
00       ARB3
00       ARB4
00       ARB5
00       ARB6
00       ARB7
00       ARB8
00       ARB9
00       ARBA

11 rows selected.

SQL> select GROUP_NUMBER,DISK_NUMBER,name,PATH,BYTES_WRITTEN from v$asm_disk;
SQL> /

GROUP_NUMBER DISK_NUMBER NAME               PATH             BYTES_WRITTEN
------------ ----------- ------------------ ---------------- -------------
           0           8                    ORCL:ASMDISK09
           0           9                    ORCL:ASMDISK10
           0          10                    ORCL:ASMDISK11
           0          11                    ORCL:ASMDISK12
           0          12                    ORCL:ASMDISK13
           0          13                    ORCL:ASMDISK14
           1           0 ASMDISK01          ORCL:ASMDISK01      1244211200
           1           1 ASMDISK02          ORCL:ASMDISK02      1263197184
           1           2 ASMDISK03          ORCL:ASMDISK03      1153472000
           1           3 ASMDISK04          ORCL:ASMDISK04      1275191808
           2           0 ASMDISK05          ORCL:ASMDISK05        82460672
           2           1 ASMDISK06          ORCL:ASMDISK06        91708928
           2           2 ASMDISK07          ORCL:ASMDISK07        86306304
           2           3 ASMDISK08          ORCL:ASMDISK08       189382144

14 rows selected.

SQL> select GROUP_NUMBER,DISK_NUMBER,name,PATH,BYTES_WRITTEN from v$asm_disk where GROUP_NUMBER=1;

GROUP_NUMBER DISK_NUMBER NAME               PATH             BYTES_WRITTEN
------------ ----------- ------------------ ---------------- -------------
           1           0 ASMDISK01          ORCL:ASMDISK01      1245331456
           1           1 ASMDISK02          ORCL:ASMDISK02      1264745472
           1           2 ASMDISK03          ORCL:ASMDISK03      1153783296
           1           3 ASMDISK04          ORCL:ASMDISK04      1276680704

SQL> desc v$asm_attribute;
 Name                      Type
 ------------------------- -------------
 NAME                      VARCHAR2(256)
 VALUE                     VARCHAR2(256)
 GROUP_NUMBER              NUMBER
 ATTRIBUTE_INDEX           NUMBER
 ATTRIBUTE_INCARNATION     NUMBER
 READ_ONLY                 VARCHAR2(7)
 SYSTEM_CREATED            VARCHAR2(7)

SQL> alter diskgroup data set attribute 'compatible.asm'='11.2';
Diskgroup altered.
SQL> alter diskgroup data set attribute 'compatible.rdbms'='11.2';
Diskgroup altered.
SQL> alter diskgroup data set attribute 'disk_repair_time'='4m';
Diskgroup altered.

(disk_repair_time, used by ASM fast mirror resync, requires compatible.asm and compatible.rdbms of at least 11.1. That is why both attributes are raised first.)

SQL> select GROUP_NUMBER,DISK_NUMBER,name,PATH,BYTES_WRITTEN from v$asm_disk where GROUP_NUMBER=1;

GROUP_NUMBER DISK_NUMBER NAME               PATH             BYTES_WRITTEN
------------ ----------- ------------------ ---------------- -------------
           1           0 ASMDISK01          ORCL:ASMDISK01      1262760448
           1           1 ASMDISK02          ORCL:ASMDISK02      1282714112
           1           2 ASMDISK03          ORCL:ASMDISK03      1164706304
           1           3 ASMDISK04          ORCL:ASMDISK04      1297487360

SQL> alter diskgroup data offline disk ASMDISK04;
Diskgroup altered.

SQL> select GROUP_NUMBER,DISK_NUMBER,name,PATH,BYTES_WRITTEN from v$asm_disk where GROUP_NUMBER=1;
SQL> /

GROUP_NUMBER DISK_NUMBER NAME               PATH             BYTES_WRITTEN
------------ ----------- ------------------ ---------------- -------------
           1           3 _DROPPED_0003_DATA
           1           0 ASMDISK01          ORCL:ASMDISK01      1271535104
           1           1 ASMDISK02          ORCL:ASMDISK02      1292986880
           1           2 ASMDISK03          ORCL:ASMDISK03      1168803328

SQL> /

GROUP_NUMBER DISK_NUMBER NAME               PATH             BYTES_WRITTEN
------------ ----------- ------------------ ---------------- -------------
           1           0 ASMDISK01          ORCL:ASMDISK01      1297424384
           1           1 ASMDISK02          ORCL:ASMDISK02      1319025664
           1           2 ASMDISK03          ORCL:ASMDISK03      1194385920

(Once the 4-minute disk_repair_time expired, ASM dropped the offline disk. It first shows as _DROPPED_0003_DATA and then disappears after the rebalance.)

SQL> alter diskgroup data add disk 'ORCL:ASMDISK09';
Diskgroup altered.

SQL> select GROUP_NUMBER,DISK_NUMBER,name,PATH,BYTES_WRITTEN from v$asm_disk where GROUP_NUMBER=1;

GROUP_NUMBER DISK_NUMBER NAME               PATH             BYTES_WRITTEN
------------ ----------- ------------------ ---------------- -------------
           1           0 ASMDISK01          ORCL:ASMDISK01      1306331136
           1           1 ASMDISK02          ORCL:ASMDISK02      1327957504
           1           2 ASMDISK03          ORCL:ASMDISK03      1202017280
           1           3 ASMDISK09          ORCL:ASMDISK09        74764288

SQL> select GROUP_NUMBER,DISK_NUMBER,name,PATH,BYTES_WRITTEN from v$asm_disk where GROUP_NUMBER=1;

GROUP_NUMBER DISK_NUMBER NAME               PATH             BYTES_WRITTEN
------------ ----------- ------------------ ---------------- -------------
           1           0 ASMDISK01          ORCL:ASMDISK01      1310812160
           1           1 ASMDISK02          ORCL:ASMDISK02      1329797632
           1           2 ASMDISK03          ORCL:ASMDISK03      1206904832
           1           3 ASMDISK09          ORCL:ASMDISK09       189181952

SQL> desc v$asm_file;
 Name                      Type
 ------------------------- ------------
 GROUP_NUMBER              NUMBER
 FILE_NUMBER               NUMBER
 COMPOUND_INDEX            NUMBER
 INCARNATION               NUMBER
 BLOCK_SIZE                NUMBER
 BLOCKS                    NUMBER
 BYTES                     NUMBER
 SPACE                     NUMBER
 TYPE                      VARCHAR2(64)
 REDUNDANCY                VARCHAR2(6)
 STRIPED                   VARCHAR2(6)
 CREATION_DATE             DATE
 MODIFICATION_DATE         DATE
 REDUNDANCY_LOWERED        VARCHAR2(1)
 PERMISSIONS               VARCHAR2(16)
 USER_NUMBER               NUMBER
 USER_INCARNATION          NUMBER
 USERGROUP_NUMBER          NUMBER
 USERGROUP_INCARNATION     NUMBER
 PRIMARY_REGION            VARCHAR2(4)
 MIRROR_REGION             VARCHAR2(4)
 HOT_READS                 NUMBER
 HOT_WRITES                NUMBER
 HOT_BYTES_READ            NUMBER
 HOT_BYTES_WRITTEN         NUMBER
 COLD_READS                NUMBER
 COLD_WRITES               NUMBER
 COLD_BYTES_READ           NUMBER
 COLD_BYTES_WRITTEN        NUMBER

SQL> select GROUP_NUMBER,file_number,PRIMARY_REGION,MIRROR_REGION from v$asm_file where group_number=1;

GROUP_NUMBER FILE_NUMBER PRIM MIRR
------------ ----------- ---- ----
           1         253 COLD COLD
           1         255 COLD COLD
           1         256 COLD COLD
           1         257 COLD COLD
           1         258 COLD COLD
           1         259 COLD COLD
           1         260 COLD COLD
           1         261 COLD COLD
           1         262 COLD COLD
           1         263 COLD COLD
           1         264 COLD COLD
           1         265 COLD COLD
           1         266 COLD COLD
           1         267 COLD COLD
           1         268 COLD COLD
           1         269 COLD COLD
           1         270 COLD COLD
           1         271 COLD COLD
           1         272 COLD COLD

19 rows selected.

SQL> alter diskgroup data add template temp1 attributes(HOT MIRRORCOLD);
Diskgroup altered.
SQL> alter diskgroup data add template temp2 attributes(HOT MIRRORCOLD);
Diskgroup altered.

SQL> create tablespace test100 datafile '+DATA(temp1)' size 10m;
Tablespace created.

SQL> select name from v$datafile;

NAME
--------------------------------------------------------------------------------
+DATA/orcl/datafile/system.256.807272123
+DATA/orcl/datafile/sysaux.257.807272125
+DATA/orcl/datafile/undotbs1.258.807272125
+DATA/orcl/datafile/users.259.807272125
+DATA/orcl/datafile/example.264.807272285
+DATA/orcl/datafile/undotbs2.265.807272441
+DATA/orcl/datafile/undotbs3.266.807272449
+DATA/orcl/datafile/test123.272.807290811
+DATA/orcl/datafile/test100.273.807294103

9 rows selected.

SQL> select GROUP_NUMBER,file_number,PRIMARY_REGION,MIRROR_REGION from v$asm_file where group_number=1;

GROUP_NUMBER FILE_NUMBER PRIM MIRR
------------ ----------- ---- ----
           1         253 COLD COLD
           1         255 COLD COLD
           1         256 COLD COLD
           1         257 COLD COLD
           1         258 COLD COLD
           1         259 COLD COLD
           1         260 COLD COLD
           1         261 COLD COLD
           1         262 COLD COLD
           1         263 COLD COLD
           1         264 COLD COLD
           1         265 COLD COLD
           1         266 COLD COLD
           1         267 COLD COLD
           1         268 COLD COLD
           1         269 COLD COLD
           1         270 COLD COLD
           1         271 COLD COLD
           1         272 COLD COLD
           1         273 HOT  COLD

20 rows selected.

SQL> alter diskgroup data modify file '+DATA/orcl/datafile/users.259.807272125' attributes(HOT MIRRORCOLD);
Diskgroup altered.

SQL> select GROUP_NUMBER,file_number,PRIMARY_REGION,MIRROR_REGION from v$asm_file where group_number=1;

GROUP_NUMBER FILE_NUMBER PRIM MIRR
------------ ----------- ---- ----
           1         253 COLD COLD
           1         255 COLD COLD
           1         256 COLD COLD
           1         257 COLD COLD
           1         258 COLD COLD
           1         259 HOT  COLD
           1         260 COLD COLD
           1         261 COLD COLD
           1         262 COLD COLD
           1         263 COLD COLD
           1         264 COLD COLD
           1         265 COLD COLD
           1         266 COLD COLD
           1         267 COLD COLD
           1         268 COLD COLD
           1         269 COLD COLD
           1         270 COLD COLD
           1         271 COLD COLD
           1         272 COLD COLD
           1         273 HOT  COLD

20 rows selected.
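To confirm which files ended up in the hot region, you can filter the region query output. Here those are file 273 (created through template temp1) and file 259 (users, changed with MODIFY FILE). The following is a minimal sketch that assumes the four-column GROUP_NUMBER FILE_NUMBER PRIM MIRR layout above. hot_files is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read the spooled output of the
# PRIMARY_REGION/MIRROR_REGION query on stdin and print the file numbers
# whose primary extents are in the HOT region.
hot_files() {
  awk '$1 ~ /^[0-9]+$/ && $2 ~ /^[0-9]+$/ && $3 == "HOT" { print $2 }'
}
```

Usage: feed it the spooled query output. For the last result above it prints 259 and 273.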

Adding 3rd Party
******************
SQL> alter diskgroup data add volume vol1 size 1G;
Diskgroup altered.

SQL> select GROUP_NUMBER,file_number,PRIMARY_REGION,MIRROR_REGION from v$asm_file where group_number=1;

GROUP_NUMBER FILE_NUMBER PRIM MIRR
------------ ----------- ---- ----
           1         253 COLD COLD
           1         255 COLD COLD
           1         256 COLD COLD
           1         257 COLD COLD
           1         258 COLD COLD
           1         259 HOT  COLD
           1         260 COLD COLD
           1         261 COLD COLD
           1         262 COLD COLD
           1         263 COLD COLD
           1         264 COLD COLD
           1         265 COLD COLD
           1         266 COLD COLD
           1         267 COLD COLD
           1         268 COLD COLD
           1         269 COLD COLD
           1         270 COLD COLD
           1         271 COLD COLD
           1         272 COLD COLD
           1         273 HOT  COLD

20 rows selected.

SQL> alter diskgroup data add volume vol1 size 1G;
Diskgroup altered.

SQL> desc v$asm_volume;
 Name                      Type
 ------------------------- --------------
 GROUP_NUMBER              NUMBER
 VOLUME_NAME               VARCHAR2(30)
 COMPOUND_INDEX            NUMBER
 SIZE_MB                   NUMBER
 VOLUME_NUMBER             NUMBER
 REDUNDANCY                VARCHAR2(6)
 STRIPE_COLUMNS            NUMBER
 STRIPE_WIDTH_K            NUMBER
 STATE                     VARCHAR2(8)
 FILE_NUMBER               NUMBER
 INCARNATION               NUMBER
 DRL_FILE_NUMBER           NUMBER
 RESIZE_UNIT_MB            NUMBER
 USAGE                     VARCHAR2(30)
 VOLUME_DEVICE             VARCHAR2(256)
 MOUNTPATH                 VARCHAR2(1024)

SQL> select group_number,volume_name,SIZE_MB,USAGE,MOUNTPATH,VOLUME_DEVICE from v$asm_volume;

GROUP_NUMBER VOLUME_NAME SIZE_MB USAGE MOUNTPATH VOLUME_DEVICE
------------ ----------- ------- ----- --------- -----------------
           1 VOL1           1024                 /dev/asm/vol1-175

[root@host01 bin]# mkdir -p /u01/app/asm
[root@host01 bin]# ls -ld /u01/app/asm/
drwxr-xr-x 2 root root 4096 Feb 13 16:29 /u01/app/asm/
[root@host01 bin]# acfsutil registry -a -f /dev/asm/vol1-175 /u01/app/asm
acfsutil registry: mount point /u01/app/asm successfully added to Oracle Registry
[root@host01 bin]# mkfs.acfs -f /dev/asm/vol1-175
mkfs.acfs: version                   = 11.2.0.3.0
mkfs.acfs: on-disk version           = 39.0
mkfs.acfs: volume                    = /dev/asm/vol1-175
mkfs.acfs: volume size               = 1073741824
mkfs.acfs: Format complete.
[root@host01 bin]# mount.acfs -o all
[root@host01 bin]# cd /u01/app/asm
[root@host01 asm]# ls
lost+found
[root@host01 asm]# vi abc.txt
[root@host01 asm]# ls
abc.txt  lost+found
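The ACFS steps above can be replayed as a single function. The following is a minimal sketch that runs the same commands, in the same order as the session, and stops at the first failure. create_acfs and the DRY_RUN switch are hypothetical additions. With DRY_RUN=1 the commands are only printed. Note that mkfs.acfs -f forces formatting even if the volume already holds a file system.

```shell
#!/usr/bin/env bash
# Run a command, or just print it when DRY_RUN=1 (hypothetical switch).
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"          # dry run: show the command only
  else
    "$@"               # real run: execute it
  fi
}

# Hypothetical wrapper replaying the session's ACFS steps in order.
#   $1 = ADVM volume device (e.g. /dev/asm/vol1-175), $2 = mount point
create_acfs() {
  local dev=$1 mnt=$2
  run mkdir -p "$mnt" || return
  run acfsutil registry -a -f "$dev" "$mnt" || return
  run mkfs.acfs -f "$dev" || return
  run mount.acfs -o all          # mount every registered ACFS file system
}
```

Usage: as root, (DRY_RUN=1; create_acfs /dev/asm/vol1-175 /u01/app/asm) lists the four commands without running them.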

Creating an Alias for a Datafile in ASM
*********************************
SQL> create tablespace test600 datafile '+DATA/orcl/datafile/test600.dbf' size 5m;
Tablespace created.

Because a user-defined file name was given, ASM created the file under its system-generated name (TEST600.277.807295547) plus an alias, test600.dbf, that points to it:

ASMCMD> ls -l
Type      Redund  Striped  Time             Sys  Name
DATAFILE  MIRROR  COARSE   FEB 13 10:00:00  Y    EXAMPLE.264.807272285
DATAFILE  MIRROR  COARSE   FEB 13 16:00:00  Y    SYSAUX.257.807272125
DATAFILE  MIRROR  COARSE   FEB 13 10:00:00  Y    SYSTEM.256.807272123
DATAFILE  MIRROR  COARSE   FEB 13 16:00:00  Y    TEST100.273.807294103
DATAFILE  MIRROR  COARSE   FEB 13 15:00:00  Y    TEST123.272.807290811
DATAFILE  MIRROR  COARSE   FEB 13 16:00:00  Y    TEST500.276.807295467
DATAFILE  MIRROR  COARSE   FEB 13 16:00:00  Y    TEST600.277.807295547
DATAFILE  MIRROR  COARSE   FEB 13 10:00:00  Y    UNDOTBS1.258.807272125
DATAFILE  MIRROR  COARSE   FEB 13 10:00:00  Y    UNDOTBS2.265.807272441
DATAFILE  MIRROR  COARSE   FEB 13 10:00:00  Y    UNDOTBS3.266.807272449
DATAFILE  MIRROR  COARSE   FEB 13 10:00:00  Y    USERS.259.807272125
                                            N    test600.dbf => +DATA/ORCL/DATAFILE/TEST600.277.807295547
ASMCMD>
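In ASMCMD ls -l output, aliases appear as Sys = N entries of the form "alias => target". The following is a minimal sketch that lists them. asm_aliases is a hypothetical helper. It assumes the " => " separator shown above and names without spaces.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `ASMCMD> ls -l` output on stdin and print
# "<alias> <target>" for every alias line ("name => +DG/path").
asm_aliases() {
  awk -F' => ' 'NF == 2 {
    n = split($1, f, " ")     # last word before " => " is the alias name
    print f[n], $2
  }'
}
```

Usage: run asmcmd ls -l in the directory and pipe the output to asm_aliases. For the listing above it would print "test600.dbf +DATA/ORCL/DATAFILE/TEST600.277.807295547".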

[root@host01 bin]# ./srvctl status service -d orcl -s erp
Service erp is running on instance(s) orcl1
[root@host01 bin]# ./srvctl status service -d orcl -s payroll
Service payroll is running on instance(s) orcl1
[root@host01 bin]# ./srvctl config service -d orcl -s erp
Service name: erp
Service is enabled
Server pool: orcl_erp
Cardinality: 1
Disconnect: false
Service role: PRIMARY
Management policy: AUTOMATIC
DTP transaction: false
AQ HA notifications: false
Failover type: NONE
Failover method: NONE
TAF failover retries: 0
TAF failover delay: 0
Connection Load Balancing Goal: LONG
Runtime Load Balancing Goal: NONE
TAF policy specification: NONE
Edition:
Preferred instances: orcl1
Available instances: orcl2
[root@host01 bin]# ./srvctl config service -d orcl -s payroll
Service name: payroll
Service is enabled
Server pool: orcl_payroll
Cardinality: 1
Disconnect: false
Service role: PRIMARY
Management policy: AUTOMATIC
DTP transaction: false
AQ HA notifications: false
Failover type: NONE
Failover method: NONE
TAF failover retries: 0
TAF failover delay: 0
Connection Load Balancing Goal: LONG
Runtime Load Balancing Goal: NONE
TAF policy specification: BASIC
Edition:
Preferred instances: orcl1
Available instances: orcl2

[root@host01 bin]# ./srvctl config database -d orcl
Database unique name: orcl
Database name: orcl
Oracle home: /u01/app/oracle/product/11.2.0/dbhome_1
Oracle user: oracle
Spfile: +DATA/orcl/spfileorcl.ora
Domain:
Start options: open
Stop options: immediate
Database role: PRIMARY
Management policy: AUTOMATIC
Server pools: orcl
Database instances: orcl1,orcl2,orcl3
Disk Groups: DATA,FRA
Mount point paths:
Services: erp,payroll
Type: RAC
Database is administrator managed

[root@host01 bin]# ./srvctl status service -d orcl -s erp
Service erp is running on instance(s) orcl2
[root@host01 bin]# ./srvctl status service -d orcl -s erp
Service erp is running on instance(s) orcl2
[root@host01 bin]# ./srvctl status service -d orcl -s payroll
Service payroll is running on instance(s) orcl2
[root@host01 bin]# ./srvctl stop service -d orcl -s payroll
[root@host01 bin]# ./srvctl start service -d orcl -s payroll
[root@host01 bin]# ./srvctl stop service -d orcl -s erp
[root@host01 bin]# ./srvctl status service -d orcl -s erp
Service erp is not running.
[root@host01 bin]# ./srvctl start service -d orcl -s erp
[root@host01 bin]# ./srvctl status service -d orcl -s erp
Service erp is running on instance(s) orcl1
[root@host01 bin]# ./srvctl status service -d orcl -s payroll
Service payroll is running on instance(s) orcl1

[root@host01 bin]# ./srvctl config database -d testdb
Database unique name: testdb
Database name: testdb
Oracle home: /u01/app/oracle/product/11.2.0/dbhome_1
Oracle user: oracle
Spfile:
Domain:
Start options: open
Stop options: immediate
Database role: PRIMARY
Management policy: AUTOMATIC
Server pools: testdb
Database instance: testdb
Disk Groups:
Mount point paths:
Services:
Type: SINGLE
Database is administrator managed
[root@host01 bin]# ./srvctl config database -d orcl
Database unique name: orcl
Database name: orcl
Oracle home: /u01/app/oracle/product/11.2.0/dbhome_1
Oracle user: oracle
Spfile: +DATA/orcl/spfileorcl.ora
Domain:
Start options: open
Stop options: immediate
Database role: PRIMARY
Management policy: AUTOMATIC
Server pools: orcl
Database instances: orcl1,orcl2,orcl3
Disk Groups: DATA,FRA
Mount point paths:
Services: erp,payroll
Type: RAC
Database is administrator managed
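Both services have preferred instance orcl1 and available instance orcl2. The status checks above show them on orcl2, and after a stop/start they are back on orcl1. The following minimal sketch flags the first situation. It compares srvctl status service with the "Preferred instances" line of srvctl config service. The function name is hypothetical, and the parsing assumes the 11.2 message formats shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the service is running and every
# instance it runs on is listed under "Preferred instances".
#   $1 = output of: srvctl status service -d <db> -s <service>
#   $2 = output of: srvctl config service -d <db> -s <service>
service_on_preferred() {
  local running preferred inst
  running=$(printf '%s\n' "$1" | sed -n 's/.*is running on instance(s) //p' | tr ',' ' ')
  preferred=$(printf '%s\n' "$2" | sed -n 's/^Preferred instances: //p' | tr ',' ' ')
  [ -n "$running" ] || return 1        # "Service ... is not running."
  for inst in $running; do             # word-split the instance list
    case " $preferred " in
      *" $inst "*) ;;                  # running on a preferred instance
      *) return 1 ;;                   # running on an available instance only
    esac
  done
  return 0
}
```

Usage: service_on_preferred "$(./srvctl status service -d orcl -s erp)" "$(./srvctl config service -d orcl -s erp)" || echo "erp is not on its preferred instance".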

[oracle@host01 trace]$ pwd
/u01/app/oracle/diag/rdbms/orcl/orcl1/trace
[oracle@host01 trace]$ trcsess output=mytrace.trc service=erp *.trc
[oracle@host01 trace]$ tkprof mytrace.trc xyzsuri.txt

TKPROF: Release 11.2.0.3.0 - Development on Thu Feb 14 16:49:21 2013

Copyright (c) 1982, 2011, Oracle and/or its affiliates.  All rights reserved.

[oracle@host01 trace]$ vi xyzsuri.txt
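trcsess consolidates the trace data of every session that ran under one service into a single file, and tkprof then formats that file into a readable report. The following is a minimal sketch wrapping the two commands as used above. service_trace_report, the "<service>_combined.trc" file name and the DRY_RUN switch are hypothetical.

```shell
#!/usr/bin/env bash
# Run a command, or just print it when DRY_RUN=1 (hypothetical switch).
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Hypothetical wrapper: merge all trace data for one service, then format it.
#   $1 = service name, $2 = tkprof report file, remaining args = trace files
service_trace_report() {
  local svc=$1 report=$2 combined
  shift 2
  combined="${svc}_combined.trc"       # hypothetical name for the merged trace
  run trcsess output="$combined" service="$svc" "$@" || return
  run tkprof "$combined" "$report"
}
```

Usage: from the trace directory, service_trace_report erp erp_report.txt *.trc.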

SQL> select RESOURCE_CONSUMER_GROUP from v$session where USERNAME='U1';

RESOURCE_CONSUMER_GROUP
--------------------------------
HR_GROUP

Cluster Health check
[root@host01 bin]# ./crsctl lsmodules crs
List CRSD Debug Module: AGENT
List CRSD Debug Module: AGFW
List CRSD Debug Module: CLSFRAME
List CRSD Debug Module: CLSVER
List CRSD Debug Module: CLUCLS
List CRSD Debug Module: COMMCRS
List CRSD Debug Module: COMMNS
List CRSD Debug Module: CRSAPP
List CRSD Debug Module: CRSCCL
List CRSD Debug Module: CRSCEVT
List CRSD Debug Module: CRSCOMM
List CRSD Debug Module: CRSD
List CRSD Debug Module: CRSEVT
List CRSD Debug Module: CRSMAIN
List CRSD Debug Module: CRSOCR
List CRSD Debug Module: CRSPE
List CRSD Debug Module: CRSPLACE
List CRSD Debug Module: CRSRES
List CRSD Debug Module: CRSRPT
List CRSD Debug Module: CRSRTI
List CRSD Debug Module: CRSSE
List CRSD Debug Module: CRSSEC
List CRSD Debug Module: CRSTIMER
List CRSD Debug Module: CRSUI
List CRSD Debug Module: CSSCLNT
List CRSD Debug Module: OCRAPI
List CRSD Debug Module: OCRASM
List CRSD Debug Module: OCRCAC
List CRSD Debug Module: OCRCLI
List CRSD Debug Module: OCRMAS
List CRSD Debug Module: OCRMSG
List CRSD Debug Module: OCROSD
List CRSD Debug Module: OCRRAW
List CRSD Debug Module: OCRSRV
List CRSD Debug Module: OCRUTL
List CRSD Debug Module: SuiteTes
List CRSD Debug Module: UiServer
[root@host01 bin]# ./crsctl lsmodules css
List CSSD Debug Module: CLSF
List CSSD Debug Module: CSSD
List CSSD Debug Module: GIPCCM
List CSSD Debug Module: GIPCGM
List CSSD Debug Module: GIPCNM
List CSSD Debug Module: GPNP
List CSSD Debug Module: OLR
List CSSD Debug Module: SKGFD

[root@host01 bin]# ./crsctl lsmodules evm
List EVMD Debug Module: CLSVER
List EVMD Debug Module: CLUCLS
List EVMD Debug Module: COMMCRS
List EVMD Debug Module: COMMNS
List EVMD Debug Module: CRSCCL
List EVMD Debug Module: CRSOCR
List EVMD Debug Module: CSSCLNT
List EVMD Debug Module: EVMAGENT
List EVMD Debug Module: EVMAPP
List EVMD Debug Module: EVMCOMM
List EVMD Debug Module: EVMD
List EVMD Debug Module: EVMDMAIN
List EVMD Debug Module: EVMEVT
List EVMD Debug Module: OCRAPI
List EVMD Debug Module: OCRCLI
List EVMD Debug Module: OCRMSG
[root@host01 bin]#

[root@host01 bin]# ./crsctl -h
Usage: crsctl add       - add a resource, type or other entity
       crsctl check     - check a service, resource or other entity
       crsctl config    - output autostart configuration
       crsctl debug     - obtain or modify debug state
       crsctl delete    - delete a resource, type or other entity
       crsctl disable   - disable autostart
       crsctl discover  - discover DHCP server
       crsctl enable    - enable autostart
       crsctl get       - get an entity value
       crsctl getperm   - get entity permissions
       crsctl lsmodules - list debug modules
       crsctl modify    - modify a resource, type or other entity
       crsctl query     - query service state
       crsctl pin       - pin the nodes in the node list
       crsctl relocate  - relocate a resource, server or other entity
       crsctl replace   - replaces the location of voting files
       crsctl release   - release a DHCP lease
       crsctl request   - request a DHCP lease
       crsctl setperm   - set entity permissions
       crsctl set       - set an entity value
       crsctl start     - start a resource, server or other entity
       crsctl status    - get status of a resource or other entity
       crsctl stop      - stop a resource, server or other entity
       crsctl unpin     - unpin the nodes in the node list
       crsctl unset     - unset an entity value, restoring its default
[root@host01 bin]#

Cluster Stock
[root@host01 bin]# ./crsctl lsmodules
Usage:
  crsctl lsmodules {mdns|gpnp|css|crf|crs|ctss|evm|gipc}
 where
   mdns  multicast Domain Name Server
   gpnp  Grid Plug-n-Play Service
   css   Cluster Synchronization Services
   crf   Cluster Health Monitor
   crs   Cluster Ready Services
   ctss  Cluster Time Synchronization Service
   evm   EventManager
   gipc  Grid Interprocess Communications
[root@host01 bin]#
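The usage text names the eight components that accept `lsmodules`. To collect every component's debug modules in one pass, instead of running `lsmodules crs`, `css`, `evm` and so on separately, a loop over that list can strip the `List ... Debug Module:` prefix. A sketch; `module_names` and `list_all_modules` are hypothetical helpers, and the crsctl path defaults to `./crsctl` as used above.

```shell
# module_names: turn "List CRSD Debug Module: AGENT" lines into "AGENT".
module_names() {
  sed -n 's/^List [^ ]* Debug Module: *//p'
}

# list_all_modules [CRSCTL]: print "component module" pairs for every
# component named in the "crsctl lsmodules" usage text (hypothetical helper).
list_all_modules() {
  crsctl_bin=${1:-./crsctl}
  for comp in mdns gpnp css crf crs ctss evm gipc; do
    "$crsctl_bin" lsmodules "$comp" | module_names | sed "s/^/$comp /"
  done
}

# Example, as root in /u01/app/11.2.0/grid/bin:
#   list_all_modules | grep '^css '
```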

#########@@@@@@@@@@@@@**********************************
[root@host01 bin]# ./oclumon
query> dumpnodeview
dumpnodeview: Node name not given. Querying for the local host
---------------------------------------
Node: host01 Clock: 02-15-13 10.31.01 SerialNo:46654
---------------------------------------

SYSTEM:
#cpus: 1 cpu: 4.38 cpuq: 43 physmemfree: 41184 physmemtotal: 2425008 mcache: 989816 swapfree: 3749236 swaptotal: 4200988 ior: 39 iow: 98 ios: 12 swpin: 0 swpout: 0 pgin: 39 pgout: 98 netr: 40.365 netw: 29.547 procs: 310 rtprocs: 12 #fds: 7109 #sysfdlimit: 6815744 #disks: 7 #nics: 4 nicErrors: 0

TOP CONSUMERS:
topcpu: oraagent.bin(8618) 0.19 topprivmem: java(4293) 185988 topshm: oracle(9437) 188572 topfd: ohasd.bin(2964) 706 topthread: java(11282) 48

---------------------------------------
Node: host01 Clock: 02-15-13 10.31.06 SerialNo:46655
---------------------------------------

SYSTEM:
#cpus: 1 cpu: 3.81 cpuq: 2 physmemfree: 47944 physmemtotal: 2425008 mcache: 990196 swapfree: 3749236 swaptotal: 4200988 ior: 42 iow: 631 ios: 97 swpin: 0 swpout: 0 pgin: 42 pgout: 631 netr: 48.907 netw: 37.366 procs: 310 rtprocs: 12 #fds: 7121 #sysfdlimit: 6815744 #disks: 7 #nics: 4 nicErrors: 0

TOP CONSUMERS:
topcpu: orarootagent.bi(3458) 0.40 topprivmem: java(4293) 185988 topshm: oracle(9437) 188572 topfd: ohasd.bin(2964) 706 topthread: java(11282) 48

---------------------------------------
Node: host01 Clock: 02-15-13 10.31.12 SerialNo:46656
---------------------------------------

SYSTEM:
#cpus: 1 cpu: 1.19 cpuq: 2 physmemfree: 47944 physmemtotal: 2425008 mcache: 990216 swapfree: 3749236 swaptotal: 4200988 ior: 42 iow: 66 ios: 10 swpin: 0 swpout: 0 pgin: 42 pgout: 66 netr: 29.595 netw: 26.000 procs: 310 rtprocs: 12 #fds: 7119 #sysfdlimit: 6815744 #disks: 7 #nics: 4 nicErrors: 0

TOP CONSUMERS:
topcpu: oracle(9210) 0.19 topprivmem: java(4293) 185988 topshm: oracle(9437) 188572 topfd: ohasd.bin(2964) 706 topthread: java(11282) 48

---------------------------------------
Node: host01 Clock: 02-15-13 10.31.17 SerialNo:46657
---------------------------------------

SYSTEM:
#cpus: 1 cpu: 38.50 cpuq: 20 physmemfree: 23420 physmemtotal: 2425008 mcache: 995600 swapfree: 3749556 swaptotal: 4200988 ior: 1294 iow: 255 ios: 91 swpin: 97 swpout: 0 pgin: 439 pgout: 255 netr: 62.888 netw: 44.058 procs: 312 rtprocs: 12 #fds: 7137 #sysfdlimit: 6815744 #disks: 7 #nics: 4 nicErrors: 0

TOP CONSUMERS:
topcpu: oracle(9911) 6.36 topprivmem: java(4293) 185988 topshm: oracle(9437) 188700 topfd: ohasd.bin(2964) 706 topthread: java(11282) 48

---------------------------------------
Node: host01 Clock: 02-15-13 10.31.27 SerialNo:46659
---------------------------------------

SYSTEM:
#cpus: 1 cpu: 47.51 cpuq: 4 physmemfree: 56476 physmemtotal: 2425008 mcache: 981168 swapfree: 3749540 swaptotal: 4200988 ior: 1825 iow: 482 ios: 221 swpin: 0 swpout: 30 pgin: 109 pgout: 482 netr: 92.251 netw: 132.754 procs: 310 rtprocs: 12 #fds: 7120 #sysfdlimit: 6815744 #disks: 7 #nics: 4 nicErrors: 0

TOP CONSUMERS:
topcpu: ocssd.bin(3519) 1.39 topprivmem: java(4293) 185988 topshm: oracle(9437) 188700 topfd: ohasd.bin(2964) 706 topthread: java(11282) 48

Waiting upto 300 secs for backend...
query>
query> manage -get reppath

CHM Repository Path = /u01/app/11.2.0/grid/crf/db/host01
Done

query> version

Cluster Health Monitor (OS), Version 11.2.0.3.0 - Production Copyright 2011 Oracle. All rights reserved.

query> showobjects

Following nodes are attached to the loggerd
host01
host02
host03

query>
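Each `dumpnodeview` sample carries its timestamp in the `Node:` header and the overall CPU figure in the `cpu:` field of the SYSTEM block; in the run above it climbs from below 5 to 38.50 and 47.51 at 10.31.17 and 10.31.27. A sketch that reduces saved dumpnodeview output to one `clock cpu` line per sample, optionally keeping only samples at or above a threshold. `chm_cpu` is a hypothetical helper; it strips single quotes in case the Clock value is printed quoted. The `-n` / `-last "HH24:MI:SS"` options in the comment follow the 11.2 oclumon reference; confirm them with `oclumon dumpnodeview -h` on your release.

```shell
# chm_cpu [MIN]: read oclumon dumpnodeview output on stdin and print
# "<clock> <cpu>" for each sample whose cpu value is >= MIN (default 0).
# Hypothetical helper, not part of Cluster Health Monitor.
chm_cpu() {
  tr -d "'" | awk -v min="${1:-0}" '
    $1 == "Node:" {                     # sample header: remember its clock
      for (i = 1; i < NF - 1; i++)
        if ($i == "Clock:") clock = $(i + 1) " " $(i + 2)
    }
    {
      for (i = 1; i < NF; i++)          # "cpu:" only, not "#cpus:"/"topcpu:"
        if ($i == "cpu:" && $(i + 1) + 0 >= min + 0) print clock, $(i + 1)
    }'
}

# Example: samples with cpu >= 30 over the last five minutes
#   ./oclumon dumpnodeview -n host01 -last "00:05:00" | chm_cpu 30
```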

$$$$$#####################%%%%%%%%%%%*********************###33
[grid@host01 bin]$ pwd
/u01/app/11.2.0/grid/bin
[grid@host01 bin]$ cluvfy comp crs -n all

Verifying CRS integrity

Checking CRS integrity...

Clusterware version consistency passed

CRS integrity check passed

Verification of CRS integrity was successful.
[grid@host01 bin]$ cluvfy comp crs -n -verbose
ERROR:
A value is required for "-n <node_list>". See usage for details.

USAGE:
cluvfy comp crs [-n <node_list>] [-verbose]

<node_list> is the comma separated list of non-domain qualified nodenames, on which the test should be conducted. If "all" is specified, then all the nodes in the cluster will be used for verification.

DESCRIPTION:
Checks the integrity of Oracle Cluster Ready Services(CRS) on all the nodes in the nodelist. If no -n option is provided, local node is used for this check.

[grid@host01 bin]$
####################################4444
[grid@host01 bin]$ cluvfy comp clocksync -n all

Verifying Clock Synchronization across the cluster nodes

Checking if Clusterware is installed on all nodes...
Check of Clusterware install passed

Checking if CTSS Resource is running on all nodes...
CTSS resource check passed

Querying CTSS for time offset on all nodes...
Query of CTSS for time offset passed

Check CTSS state started...
CTSS is in Observer state. Switching over to clock synchronization checks using NTP

Starting Clock synchronization checks using Network Time Protocol(NTP)...

NTP Configuration file check started...
NTP Configuration file check passed

Checking daemon liveness...
Liveness check passed for "ntpd"
Check for NTP daemon or service alive passed on all nodes

NTP daemon slewing option check passed

NTP daemons boot time configuration check for slewing option passed

NTP common Time Server Check started...
Check of common NTP Time Server passed

Clock time offset check from NTP Time Server started...
Clock time offset check passed

Clock synchronization check using Network Time Protocol(NTP) passed

Oracle Cluster Time Synchronization Services check passed

Verification of Clock Synchronization across the cluster nodes was successful.
[grid@host01 bin]$
[grid@host01 bin]$ cluvfy comp gpnp -n all -verbose

Verifying GPNP integrity

Checking GPNP integrity...

  Node Name                             Status
  ------------------------------------  ------------------------
  host03                                passed
  host02                                passed
  host01                                passed

GPNP integrity check passed

Verification of GPNP integrity was successful.
[grid@host01 bin]$
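Each of the `cluvfy comp` checks above ends with a `Verification of ... was successful.` line. A sketch that runs the same three checks in sequence and reports one PASS/FAIL line per component by looking for that sentence; `run_cluvfy_checks` is a hypothetical helper, and matching on the message text rather than on cluvfy's exit status is an assumption made for this sketch.

```shell
# run_cluvfy_checks [CLUVFY]: run selected "cluvfy comp" checks on all nodes
# and print "<component>: PASS" or "<component>: FAIL" for each.
# Hypothetical helper; run as the grid user, as in the session above.
run_cluvfy_checks() {
  cluvfy_bin=${1:-cluvfy}
  for comp in crs clocksync gpnp; do
    # "was unsuccessful" does not contain "was successful", so failures fall through
    if "$cluvfy_bin" comp "$comp" -n all | grep -q "was successful"; then
      echo "$comp: PASS"
    else
      echo "$comp: FAIL"
    fi
  done
}

# Example:
#   run_cluvfy_checks
```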

########*************************************************888888888888 checking the profile
[root@host01 bin]# ./crsctl stat res ora.testdb.db
NAME=ora.testdb.db
TYPE=ora.database.type
TARGET=ONLINE
STATE=ONLINE on host01
[root@host01 bin]#
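`crsctl stat res` prints one `ATTRIBUTE=value` pair per line, both in the short form above and in the full `-p` profile listing. A sketch of a helper that returns a single attribute's value; `res_attr` is a hypothetical name. Values can themselves contain `=` (for example `DEFAULT_TEMPLATE`), so it strips only the leading `ATTRIBUTE=`.

```shell
# res_attr ATTR: read "crsctl stat res" output on stdin and print the value of
# the first line that starts with "ATTR=" (hypothetical helper).
res_attr() {
  awk -v key="$1" '
    index($0, key "=") == 1 {
      print substr($0, length(key) + 2)   # everything after "ATTR="
      exit
    }'
}

# Examples, as root in /u01/app/11.2.0/grid/bin:
#   ./crsctl stat res ora.testdb.db | res_attr STATE
#   ./crsctl stat res ora.orcl.db -p | res_attr START_DEPENDENCIES
```

Requiring the `=` right after the name keeps `TYPE` from matching `TYPE_VERSION` and `STATE` from matching `STATE_CHANGE_TEMPLATE`.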

For Testdb ********* 6
[root@host01 bin]# ./crsctl stat res ora.testdb.db -p
NAME=ora.testdb.db
TYPE=ora.database.type
ACL=owner:oracle:rwx,pgrp:oinstall:rwx,other::r--
ACTION_FAILURE_TEMPLATE=
ACTION_SCRIPT=
ACTIVE_PLACEMENT=1
AGENT_FILENAME=%CRS_HOME%/bin/oraagent%CRS_EXE_SUFFIX%
AUTO_START=restore
CARDINALITY=1
CHECK_INTERVAL=1
CHECK_TIMEOUT=30
CLUSTER_DATABASE=false
DATABASE_TYPE=SINGLE
DB_UNIQUE_NAME=testdb
DEFAULT_TEMPLATE=PROPERTY(RESOURCE_CLASS=database) PROPERTY(DB_UNIQUE_NAME= CONCAT(PARSE(%NAME%, ., 2), %USR_ORA_DOMAIN%, .)) ELEMENT(INSTANCE_NAME= %GEN_USR_ORA_INST_NAME%) ELEMENT(DATABASE_TYPE= %DATABASE_TYPE%)
DEGREE=1
DESCRIPTION=Oracle Database resource
ENABLED=1
FAILOVER_DELAY=0
FAILURE_INTERVAL=60
FAILURE_THRESHOLD=1
GEN_AUDIT_FILE_DEST=/u01/app/oracle/admin/testdb/adump
GEN_START_OPTIONS=
GEN_START_OPTIONS@SERVERNAME(host01)=open
GEN_USR_ORA_INST_NAME=
GEN_USR_ORA_INST_NAME@SERVERNAME(host01)=testdb
HOSTING_MEMBERS=
INSTANCE_FAILOVER=1
LOAD=1
LOGGING_LEVEL=1
MANAGEMENT_POLICY=AUTOMATIC
NLS_LANG=
NOT_RESTARTING_TEMPLATE=
OFFLINE_CHECK_INTERVAL=0
ONLINE_RELOCATION_TIMEOUT=0
ORACLE_HOME=/u01/app/oracle/product/11.2.0/dbhome_1
ORACLE_HOME_OLD=
PLACEMENT=restricted
PROFILE_CHANGE_TEMPLATE=
RESTART_ATTEMPTS=2
ROLE=PRIMARY
SCRIPT_TIMEOUT=60
SERVER_POOLS=ora.testdb
SPFILE=
START_DEPENDENCIES=weak(type:ora.listener.type,uniform:ora.ons)
START_TIMEOUT=600
STATE_CHANGE_TEMPLATE=
STOP_DEPENDENCIES=
STOP_TIMEOUT=600
TYPE_VERSION=3.2
UPTIME_THRESHOLD=1h
USR_ORA_DB_NAME=testdb
USR_ORA_DOMAIN=
USR_ORA_ENV=
USR_ORA_FLAGS=
USR_ORA_INST_NAME=testdb
USR_ORA_OPEN_MODE=open
USR_ORA_OPI=false
USR_ORA_STOP_MODE=immediate
VERSION=11.2.0.3.0
[root@host01 bin]#
For ORCL *********** 6
[root@host01 bin]# ./crsctl stat res ora.orcl.db -p
NAME=ora.orcl.db
TYPE=ora.database.type
ACL=owner:oracle:rwx,pgrp:oinstall:rwx,other::r--
ACTION_FAILURE_TEMPLATE=
ACTION_SCRIPT=
ACTIVE_PLACEMENT=1
AGENT_FILENAME=%CRS_HOME%/bin/oraagent%CRS_EXE_SUFFIX%
AUTO_START=restore
CARDINALITY=3
CHECK_INTERVAL=1
CHECK_TIMEOUT=30
CLUSTER_DATABASE=true
DATABASE_TYPE=RAC
DB_UNIQUE_NAME=orcl
DEFAULT_TEMPLATE=PROPERTY(RESOURCE_CLASS=database) PROPERTY(DB_UNIQUE_NAME= CONCAT(PARSE(%NAME%, ., 2), %USR_ORA_DOMAIN%, .)) ELEMENT(INSTANCE_NAME= %GEN_USR_ORA_INST_NAME%) ELEMENT(DATABASE_TYPE= %DATABASE_TYPE%)
DEGREE=1
DESCRIPTION=Oracle Database resource
ENABLED=1
FAILOVER_DELAY=0
FAILURE_INTERVAL=60
FAILURE_THRESHOLD=1
GEN_AUDIT_FILE_DEST=/u01/app/oracle/admin/orcl/adump
GEN_START_OPTIONS=
GEN_START_OPTIONS@SERVERNAME(host01)=open
GEN_START_OPTIONS@SERVERNAME(host02)=open
GEN_START_OPTIONS@SERVERNAME(host03)=open
GEN_USR_ORA_INST_NAME=
GEN_USR_ORA_INST_NAME@SERVERNAME(host01)=orcl1
GEN_USR_ORA_INST_NAME@SERVERNAME(host02)=orcl2
GEN_USR_ORA_INST_NAME@SERVERNAME(host03)=orcl3
HOSTING_MEMBERS=
INSTANCE_FAILOVER=0
LOAD=1
LOGGING_LEVEL=1
MANAGEMENT_POLICY=AUTOMATIC
NLS_LANG=
NOT_RESTARTING_TEMPLATE=
OFFLINE_CHECK_INTERVAL=0
ONLINE_RELOCATION_TIMEOUT=0
ORACLE_HOME=/u01/app/oracle/product/11.2.0/dbhome_1
ORACLE_HOME_OLD=
PLACEMENT=restricted
PROFILE_CHANGE_TEMPLATE=
RESTART_ATTEMPTS=2
ROLE=PRIMARY
SCRIPT_TIMEOUT=60
SERVER_POOLS=ora.orcl
SPFILE=+DATA/orcl/spfileorcl.ora
START_DEPENDENCIES=hard(ora.DATA.dg,ora.FRA.dg) weak(type:ora.listener.type,global:type:ora.scan_listener.type,uniform:ora.ons,global:ora.gns) pullup(ora.DATA.dg,ora.FRA.dg)
START_TIMEOUT=600
STATE_CHANGE_TEMPLATE=
STOP_DEPENDENCIES=hard(intermediate:ora.asm,shutdown:ora.DATA.dg,shutdown:ora.FRA.dg)
STOP_TIMEOUT=600
TYPE_VERSION=3.2
UPTIME_THRESHOLD=1h
USR_ORA_DB_NAME=orcl
USR_ORA_DOMAIN=
USR_ORA_ENV=
USR_ORA_FLAGS=
USR_ORA_INST_NAME=
USR_ORA_INST_NAME@SERVERNAME(host01)=orcl1
USR_ORA_INST_NAME@SERVERNAME(host02)=orcl2
USR_ORA_INST_NAME@SERVERNAME(host03)=orcl3
USR_ORA_OPEN_MODE=open
USR_ORA_OPI=false
USR_ORA_STOP_MODE=immediate
VERSION=11.2.0.3.0
[root@host01 bin]#

RMAN> show all
2> ;

RMAN configuration parameters for database with db_unique_name ORCL are:
CONFIGURE RETENTION POLICY TO REDUNDANCY 1; # default
CONFIGURE BACKUP OPTIMIZATION OFF; # default
CONFIGURE DEFAULT DEVICE TYPE TO DISK; # default
CONFIGURE CONTROLFILE AUTOBACKUP OFF; # default
CONFIGURE CONTROLFILE AUTOBACKUP FORMAT FOR DEVICE TYPE DISK TO '%F'; # default
CONFIGURE DEVICE TYPE DISK PARALLELISM 1 BACKUP TYPE TO BACKUPSET; # default
CONFIGURE DATAFILE BACKUP COPIES FOR DEVICE TYPE DISK TO 1; # default
CONFIGURE ARCHIVELOG BACKUP COPIES FOR DEVICE TYPE DISK TO 1; # default
CONFIGURE MAXSETSIZE TO UNLIMITED; # default
CONFIGURE ENCRYPTION FOR DATABASE OFF; # default
CONFIGURE ENCRYPTION ALGORITHM 'AES128'; # default
CONFIGURE COMPRESSION ALGORITHM 'BASIC' AS OF RELEASE 'DEFAULT' OPTIMIZE FOR LOAD TRUE ; # default
CONFIGURE ARCHIVELOG DELETION POLICY TO NONE; # default
CONFIGURE SNAPSHOT CONTROLFILE NAME TO '/u01/app/oracle/product/11.2.0/dbhome_1/dbs/snapcf_orcl1.f'; # default

RMAN>
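In `SHOW ALL` output RMAN marks every setting still at its default with `# default`, so filtering those lines leaves only what has been explicitly configured (in the listing above, nothing). A sketch that runs `SHOW ALL` through a here-document and applies that filter; `rman_show_all` and `rman_nondefault` are hypothetical helper names, and `rman target /` assumes OS authentication for the instance named by `ORACLE_SID`.

```shell
# rman_nondefault: keep only CONFIGURE lines that lack the "# default" marker.
rman_nondefault() {
  grep '^CONFIGURE' | grep -v '# default *$'
}

# rman_show_all: run SHOW ALL against the local target database.
rman_show_all() {
  rman target / <<'EOF'
show all;
exit
EOF
}

# Example (as oracle, with ORACLE_SID=orcl1):
#   rman_show_all | rman_nondefault
```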

ONE NODE RAC *****************11

Vous aimerez peut-être aussi

- Shoe Dog: A Memoir by the Creator of NikeD'EverandShoe Dog: A Memoir by the Creator of NikeÉvaluation : 4.5 sur 5 étoiles4.5/5 (537)

- ReadmeDocument1 pageReadmeMohammad ZaheerPas encore d'évaluation

- Grit: The Power of Passion and PerseveranceD'EverandGrit: The Power of Passion and PerseveranceÉvaluation : 4 sur 5 étoiles4/5 (587)

- 2013 10 14.141338+0530istDocument1 page2013 10 14.141338+0530istMohammad ZaheerPas encore d'évaluation

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceD'EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceÉvaluation : 4 sur 5 étoiles4/5 (890)

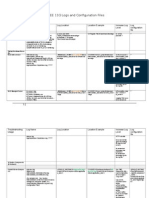

- OBIEE 11G Logs and Configuration FilesDocument7 pagesOBIEE 11G Logs and Configuration FilesMohammad ZaheerPas encore d'évaluation

- The Yellow House: A Memoir (2019 National Book Award Winner)D'EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Évaluation : 4 sur 5 étoiles4/5 (98)

- SOA Integration Using Oracle ISGDocument48 pagesSOA Integration Using Oracle ISGjeffeli100% (2)

- The Little Book of Hygge: Danish Secrets to Happy LivingD'EverandThe Little Book of Hygge: Danish Secrets to Happy LivingÉvaluation : 3.5 sur 5 étoiles3.5/5 (399)

- 2013 10 14.141338+0530istDocument1 page2013 10 14.141338+0530istMohammad ZaheerPas encore d'évaluation

- On Fire: The (Burning) Case for a Green New DealD'EverandOn Fire: The (Burning) Case for a Green New DealÉvaluation : 4 sur 5 étoiles4/5 (73)

- 2013 09 19.235744+0530istDocument1 page2013 09 19.235744+0530istMohammad ZaheerPas encore d'évaluation

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeD'EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeÉvaluation : 4 sur 5 étoiles4/5 (5794)

- 2013 10 04.011110+0530istDocument1 page2013 10 04.011110+0530istMohammad ZaheerPas encore d'évaluation

- Never Split the Difference: Negotiating As If Your Life Depended On ItD'EverandNever Split the Difference: Negotiating As If Your Life Depended On ItÉvaluation : 4.5 sur 5 étoiles4.5/5 (838)

- 1Document1 page1Mohammad ZaheerPas encore d'évaluation

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureD'EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureÉvaluation : 4.5 sur 5 étoiles4.5/5 (474)

- 2013 12 09.194331+0530istDocument1 page2013 12 09.194331+0530istMohammad ZaheerPas encore d'évaluation

- 2013 09 19.233345+0530istDocument1 page2013 09 19.233345+0530istMohammad ZaheerPas encore d'évaluation

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryD'EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryÉvaluation : 3.5 sur 5 étoiles3.5/5 (231)

- 2013 09 22.222802+0530istDocument1 page2013 09 22.222802+0530istMohammad ZaheerPas encore d'évaluation

- 2013 11 07.142236+0530istDocument1 page2013 11 07.142236+0530istMohammad ZaheerPas encore d'évaluation

- The Emperor of All Maladies: A Biography of CancerD'EverandThe Emperor of All Maladies: A Biography of CancerÉvaluation : 4.5 sur 5 étoiles4.5/5 (271)

- 2013 09 28.220952+0530istDocument2 pages2013 09 28.220952+0530istMohammad ZaheerPas encore d'évaluation

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreD'EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreÉvaluation : 4 sur 5 étoiles4/5 (1090)

- 2013 09 21.144726+0530istDocument1 page2013 09 21.144726+0530istMohammad ZaheerPas encore d'évaluation

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyD'EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyÉvaluation : 3.5 sur 5 étoiles3.5/5 (2219)

- 2013 09 18.224237+0530istDocument1 page2013 09 18.224237+0530istMohammad ZaheerPas encore d'évaluation

- Team of Rivals: The Political Genius of Abraham LincolnD'EverandTeam of Rivals: The Political Genius of Abraham LincolnÉvaluation : 4.5 sur 5 étoiles4.5/5 (234)

- 2013 10 04.011110+0530istDocument1 page2013 10 04.011110+0530istMohammad ZaheerPas encore d'évaluation

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersD'EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersÉvaluation : 4.5 sur 5 étoiles4.5/5 (344)

- 2014 01 13.020312+0530istDocument1 page2014 01 13.020312+0530istMohammad ZaheerPas encore d'évaluation

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaD'EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaÉvaluation : 4.5 sur 5 étoiles4.5/5 (265)

- 2013 10 13.141338+0530istDocument1 page2013 10 13.141338+0530istMohammad ZaheerPas encore d'évaluation

- 2014 01 23.232730+0530istDocument1 page2014 01 23.232730+0530istMohammad ZaheerPas encore d'évaluation

- 2014 01 18.222700+0530istDocument1 page2014 01 18.222700+0530istMohammad ZaheerPas encore d'évaluation

- 2013 11 22.180611+0530istDocument1 page2013 11 22.180611+0530istMohammad ZaheerPas encore d'évaluation

- 2013 11 16.142455+0530istDocument1 page2013 11 16.142455+0530istMohammad ZaheerPas encore d'évaluation

- 2014 01 13.010901+0530istDocument1 page2014 01 13.010901+0530istMohammad ZaheerPas encore d'évaluation

- The Unwinding: An Inner History of the New AmericaD'EverandThe Unwinding: An Inner History of the New AmericaÉvaluation : 4 sur 5 étoiles4/5 (45)

- 2014 01 24.101050+0530istDocument1 page2014 01 24.101050+0530istMohammad ZaheerPas encore d'évaluation

- 2014 01 27.015226+0530istDocument1 page2014 01 27.015226+0530istMohammad ZaheerPas encore d'évaluation

- 2014 01 25.222903+0530istDocument1 page2014 01 25.222903+0530istMohammad ZaheerPas encore d'évaluation

- 2014 01 27.015304+0530istDocument1 page2014 01 27.015304+0530istMohammad ZaheerPas encore d'évaluation

- 2014 02 10.110415+0530istDocument1 page2014 02 10.110415+0530istMohammad ZaheerPas encore d'évaluation

- 2014 01 27.015405+0530istDocument1 page2014 01 27.015405+0530istMohammad ZaheerPas encore d'évaluation

- 2014 02 23.103028+0530istDocument1 page2014 02 23.103028+0530istMohammad ZaheerPas encore d'évaluation

- 2014 05 07 Effective Log Management BookletDocument10 pages2014 05 07 Effective Log Management BookletDarren Yuen Tse HouPas encore d'évaluation

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)D'EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Évaluation : 4.5 sur 5 étoiles4.5/5 (119)

- External Escalation Matrix - 2021Document1 pageExternal Escalation Matrix - 2021sreePas encore d'évaluation

- Tata HPC AmanDocument34 pagesTata HPC Amantemp mailPas encore d'évaluation

- Capitulo 3 - NIST SP 800-63 Digital Identity GuidelinesDocument3 pagesCapitulo 3 - NIST SP 800-63 Digital Identity GuidelinesCristiane LimaPas encore d'évaluation

- SAFE Poster PDFDocument1 pageSAFE Poster PDFEdison HaiPas encore d'évaluation

- Architecture of AI Systems - Engineering For Big Data and AI (Grokking)Document60 pagesArchitecture of AI Systems - Engineering For Big Data and AI (Grokking)Herve RousselPas encore d'évaluation

- Data Center Best Practice Security by Palo AltoDocument94 pagesData Center Best Practice Security by Palo AltocakrajutsuPas encore d'évaluation

- How Do I Prevent Someone From Forwarding A PDFDocument8 pagesHow Do I Prevent Someone From Forwarding A PDFSomblemPas encore d'évaluation

- SAP HANA Cockpit Installation Guide enDocument54 pagesSAP HANA Cockpit Installation Guide enneoPas encore d'évaluation

- WAHA User GuideDocument30 pagesWAHA User GuidewahacmsPas encore d'évaluation

- Her Body and Other Parties: StoriesD'EverandHer Body and Other Parties: StoriesÉvaluation : 4 sur 5 étoiles4/5 (821)

- GBI S4H Part 00 IntroductionDocument168 pagesGBI S4H Part 00 IntroductionmfakhrurizapradanaPas encore d'évaluation

- Manage Engine NETFLOWANALYZERDocument3 pagesManage Engine NETFLOWANALYZERAvotra RasolomalalaPas encore d'évaluation

- Mule3user 120911 0919 968Document881 pagesMule3user 120911 0919 968Christian Bremer0% (1)

- Oracle Data Guard With TSMDocument9 pagesOracle Data Guard With TSMDens Can't Be Perfect100% (1)

- Bugreport Vayu - Global TKQ1.221013.002 2023 11 27 22 33 32 Dumpstate - Log 31440Document30 pagesBugreport Vayu - Global TKQ1.221013.002 2023 11 27 22 33 32 Dumpstate - Log 31440pepiño :bPas encore d'évaluation

- ODI XML IntegrationDocument20 pagesODI XML IntegrationAmit Sharma100% (6)

- TAPA Tutorial 1119163.14Document124 pagesTAPA Tutorial 1119163.14manojsingh474Pas encore d'évaluation

- Information SystemDocument27 pagesInformation SystemDevilPas encore d'évaluation

- S2350 - S5300 - S6300 V200R003C00 Upgrade GuideDocument59 pagesS2350 - S5300 - S6300 V200R003C00 Upgrade GuideMartin PelusoPas encore d'évaluation

- SRS Sample (New Capstone - IS)Document188 pagesSRS Sample (New Capstone - IS)Mở Thế GiớiPas encore d'évaluation

- Data Recovery & Backups - CourseraDocument1 pageData Recovery & Backups - CourseraIván Barra50% (2)

- Digital Manufacturing: History, Perspectives, and Outlook: Special Issue Paper 451Document1 pageDigital Manufacturing: History, Perspectives, and Outlook: Special Issue Paper 451Avik K DuttaPas encore d'évaluation

- RMMMINIT - Protection Against Unintended Execution PDFDocument2 pagesRMMMINIT - Protection Against Unintended Execution PDFlovely lovelyPas encore d'évaluation

- dc1500 dc1550: Control Series 221/321 + 222/322 + AB425SDocument16 pagesdc1500 dc1550: Control Series 221/321 + 222/322 + AB425SЦанко МарковскиPas encore d'évaluation

- Sap MM Manual PDFDocument2 pagesSap MM Manual PDFOm Prakash Dubey25% (4)

- DBA Database Management Checklist Written byDocument3 pagesDBA Database Management Checklist Written bydivandannPas encore d'évaluation

- Chapter 2.3 - Configuring Floating Static Routes InstructionsDocument3 pagesChapter 2.3 - Configuring Floating Static Routes InstructionsPao Renz OngPas encore d'évaluation

- Week 1 - Data Visualization and SummarizationDocument8 pagesWeek 1 - Data Visualization and SummarizationJadee BuenaflorPas encore d'évaluation

- AZ 104 August 2023Document5 pagesAZ 104 August 2023munshiabuPas encore d'évaluation

- ESG200 - Barracuda Email Security Gateway Product SpecialistDocument13 pagesESG200 - Barracuda Email Security Gateway Product SpecialistAlberto Huamani CanchizPas encore d'évaluation