
On the Importance of Sorting in "Neural Gas" Training of Vector Quantizers

Fabio Ancona, Sandro Ridella, Stefano Rovetta, and Rodolfo Zunino

DIBE - Department of Biophysical and Electronic Engineering - University of Genoa

Via all’Opera Pia 11a - 16145 Genova - Italy - Email: zunino@dibe.unige.it

Abstract - The paper considers the role of the sorting process in the well-known "Neural Gas" model for Vector Quantization. Theoretical derivations and experimental evidence show that complete sorting is not required for effective training, since limiting the sorted list to even a few top units performs effectively. This property has a significant impact on the implementation of the overall neural model at the local level.

1. Introduction

Vector Quantization (VQ) represents an important paradigm for information representation from both a theoretical point of view [1] and an applicative perspective [2]. This is mainly due to the possibility of easy interpretation of training outcomes and to the remarkable performances attained by VQ-based compression.

The "Neural Gas" (NG) model [3] provides an effective algorithm for adaptive training of vector quantizers, by a simple principle of operation: neurons are iteratively adjusted, and each unit moves proportionally to its similarity to training samples. As compared to k-means clustering [4], NG seems to be less subject to getting trapped in local minima. Theory proves [3] that the iterative NG algorithm implements a stochastic gradient descent of an analytical cost function, as opposed to Kohonen's Self-Organizing Maps [5], for which no such property has been established. Moreover, NG involves a matching-based rather than a topology-driven ordering of neurons: although a dynamic version of NG has been proposed for flexible-topology networks [6,7], the basic NG model does not assume any interconnection schema among neuron units. This simplifies hardware implementations, and motivates the interest in the NG algorithm, as the research presented here aims at hardware support of VQ training: both a digital [8] and an analog [9] approach yield a "VQ-coprocessor" for reducing huge computational training loads.

Most of the neural model can be supported at the local level, that is, each neuron operates independently of the others; network-wide inter-neuron communications only take place in ordering the matching scores of neurons. The analysis presented here addresses the relevance of sorting to the algorithm's performance, with a twofold goal: from a theoretical point of view, the investigation clarifies important features of the basic training model; from a practical perspective, simplifying sorting without affecting overall performance can reduce computational costs dramatically and facilitate hardware realizations as well. A formal analysis first characterizes the sorting process by measuring the relevance of each position in the list. Relative importances decrease monotonically from "top" positions in the list to "bottom" ones: in other words, identifying the list head accurately is more important than the tail. Moreover, as a result of the annealing process, the gap in importance between non-top list positions progressively shrinks during training, and eventually only the best-matching neuron is really important. Complete, exact sorting is a basic condition for the validity of the original NG theoretical model; nevertheless, the present analysis shows that in practical implementations complete sorting may be avoided, and determining only a few "top" positions in the neuron list is sufficient.

0-7803-4122-8/97 $10.00 © 1997 IEEE

The practical impact of such derivations is greatest on large codebooks, since detecting the best k-out-of-N units is simpler than sorting a long list completely; this is true both in software (by cutting computational costs) and in hardware, where the circuitry for exhaustive sorting may be quite complex [10-12]. When considering that NG optimization supports a fuzzy clustering strategy [3], partial sorting can be regarded as implementing a sort of constrained version of k-means clustering, in which only k units are active at any iteration.

A set of experimental results supports theoretical predictions. Experiments include tests on the synthetic data set used by Fritzke [6] to demonstrate VQ algorithms, and tests on a real application involving VQ-based image compression [2,13]. Experiments aim to assess the importance of the sorting process quantitatively, by measuring the effect of incomplete or incorrect sorting on the algorithm's distortion performance; the statistical validation of obtained results required a massive amount of independent runs. Experimental evidence yields two conclusions: first, partial, exact sorting performs better than complete but noisy sorting; second, even a few units in partial sorting are sufficient to attain a final distortion equivalent to that attained by ideal NG.

2. Sorting in “Neural Gas” training

“Neural Gas” iteratively adjusts neurons’ positions according to their similarities to input patterns. In order to coordinate adjustments without a fixed topological schema, the training process uses Euclidean distances from training samples for sorting neurons. A nonlinear time-controlled scheduling weights the reward for each neuron’s ranking; thus training evolves from a distributed-activation pattern, in which several neurons are adjusted at the same time, to a truly Winner-Take-All (WTA) schema, in which only one neuron is affected by a training sample. Theory [3] shows that the asymptotic positions of neurons in the data space span a uniform coverage over data. In the following, a set of neurons $\{w_i,\ i = 1, \dots, N\}$ are positioned in a d-dimensional domain space, $X$; the terms 'neuron' and 'codevector' will be used as synonyms. The NG algorithm can be outlined as follows:

For iterations $t = 0$ to $T$:

1. Draw at random a training sample, $x \in X$;

2. $\forall$ neuron $w_i$, compute the Euclidean distance:

$$d_i = \| w_i - x \| \quad \forall i \qquad (1)$$

3. Sort neurons in order of increasing $d_i$; let $k_i = k(i) \in \{0, \dots, N-1\}$ denote the rank of the i-th neuron in the sorted list;

4. Adjust neurons according to their positions in the sorted list:

$$w_i^{(t+1)} = w_i^{(t)} - \eta(t)\, h(k_i)\, \bigl( w_i^{(t)} - x \bigr) \quad \forall i \qquad (2)$$
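The four steps above can be sketched for a single training sample as follows. This is a minimal illustration, not the authors' implementation: the function name `ng_step` is hypothetical, the learning rate `eta` and the width `lam` are passed in as plain numbers, and the reward is assumed to be the exponential form $h(k) = e^{-k/\lambda}$ described in [3].

```python
import math

def ng_step(codebook, x, eta, lam):
    """Apply one Neural Gas update in place and return each unit's rank.

    codebook: list of codevectors (lists of floats); x: one training sample.
    eta and lam are the current learning rate and reward width (assumed given).
    """
    # Step 2: Euclidean distance of every codevector from the sample.
    dists = [math.dist(w, x) for w in codebook]
    # Step 3: full sort; rank 0 goes to the best-matching unit.
    order = sorted(range(len(codebook)), key=dists.__getitem__)
    ranks = [0] * len(codebook)
    for rank, i in enumerate(order):
        ranks[i] = rank
    # Step 4: move each unit toward x by eta * h(rank), with h(k) = exp(-k / lam).
    for i, w in enumerate(codebook):
        gain = eta * math.exp(-ranks[i] / lam)
        codebook[i] = [wj - gain * (wj - xj) for wj, xj in zip(w, x)]
    return ranks
```

Only the ranking in step 3 needs information from all units; the distance and the update of each unit use that unit's own weights and the sample.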

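The rewarding function, $h$, balances the distribution of weight updates during training and drives the training process. An implementation of $h(\cdot)$ is given in [3], and the overall scheduling functions can be written as

$$h(k_i) = \exp\!\left( -\frac{k_i}{\lambda(t)} \right), \qquad \lambda(t) = \lambda_0 \left( \frac{\lambda_f}{\lambda_0} \right)^{t/T}, \qquad \eta(t) = \eta_0 \left( \frac{\eta_f}{\eta_0} \right)^{t/T} \qquad (3)$$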
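A small sketch of such a schedule is given below, assuming the exponential annealing of [3] in which both the reward width $\lambda$ and the learning rate $\eta$ decay from an initial to a final value over $T$ iterations. The helper names `annealed`, `reward` and `update_gain` are hypothetical; the constants are the annealing settings reported in the paper.

```python
import math

# Annealing settings as reported in the paper.
LAMBDA_0, LAMBDA_F = 10.0, 0.01
ETA_0, ETA_F = 0.65, 0.05

def annealed(v0, vf, t, T):
    """Exponential interpolation from v0 (at t = 0) to vf (at t = T)."""
    return v0 * (vf / v0) ** (t / T)

def reward(k, t, T):
    """Reward h for rank k at iteration t, assuming h(k) = exp(-k / lambda(t))."""
    return math.exp(-k / annealed(LAMBDA_0, LAMBDA_F, t, T))

def update_gain(k, t, T):
    """Overall factor eta(t) * h(k) that scales the weight update."""
    return annealed(ETA_0, ETA_F, t, T) * reward(k, t, T)
```

At the start of training the reward is spread over many ranks; at the end it is concentrated on rank 0, which is the winner-take-all regime described earlier.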

Due to the satisfactory results obtained, implementation (3) will be adopted as a default throughout the paper. The settings for the annealing schedule, $\lambda_0 = 10$, $\lambda_f = 0.01$, $\eta_0 = 0.65$, $\eta_f = 0.05$, proved effective in all experiments. NG as a training algorithm is interesting from several viewpoints. As to distortion performance, empirical evidence (reported in [3] and verified in the present research) indicates that, on average, NG yields smaller distortion than other algorithms; in addition, overall convergence typically requires a smaller number of iterations (training samples). As to implementation, the model's features meet hardware-related requirements very well, especially when considering that the neural model does not involve any topological structure. Most of a neuron's activity performs independently of the other units. As distance computations (1) and weight adjustments (2) only involve local information, the bulk of the computational load can be supported by neuron-embedded circuitry [9].

Sorting is the only process requiring inter-neuron circulation of information for evaluating list positions $k(i)$; in a full implementation of NG, complete, exact sorting demands network-wide communications. In training huge networks for a real application (e.g., image compression), this may prove very expensive in terms of hardware connectivity and layout design [10-12]. Thus one might wonder how accurate and complete the sorting process must be to ensure effective training; the overall goal is to reduce the information flow through the network.

Two basic questions arise. The first concerns sorting accuracy: can each unit be allowed just to estimate its own list ranking by using limited information? Conversely, the second question concerns sorting completeness: can proper training be attained by identifying (exactly) a subset of the total sorted list of neurons? Both problems lead to an analysis of the sensitivity of the training process to sorting errors. Weight update (2) evolves as $\sigma(k,t) = \eta(t)\, h(k)$, where the neuron index, $i$, is omitted for simplicity. "Sensitivity" will be expressed by

$$S(k,t) = \frac{\partial}{\partial k}\, \sigma(k,t) = -\frac{\eta_0}{\lambda_0} \left( \frac{\eta_f\, \lambda_0}{\eta_0\, \lambda_f} \right)^{t/T} \exp\!\left( -\frac{k}{\lambda(t)} \right) \qquad (4)$$

Thus sensitivity measures how much a variation from the k-th list position at time $t$ affects the weight update of the associated neuron. Normalized sensitivity, a trivial simplification of (4), better fits practical purposes:

$$S_n(k,t) = \left( \frac{\lambda_0}{\lambda_f} \right)^{t/T} \exp\!\left( -\frac{k}{\lambda(t)} \right) \qquad (5)$$
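The step from the weight update to the sensitivity and its normalized form can be sketched as follows, assuming the exponential reward $h(k) = e^{-k/\lambda(t)}$ and the exponential decay of $\lambda(t)$ and $\eta(t)$ used in [3]. The normalizing factor $\eta(t)/\lambda_0$ is an assumption made here for illustration; the text only says that the simplification is trivial.

```latex
\begin{aligned}
\sigma(k,t) &= \eta(t)\, e^{-k/\lambda(t)} \\
\frac{\partial \sigma}{\partial k} &= -\frac{\eta(t)}{\lambda(t)}\, e^{-k/\lambda(t)} \\
\frac{\lambda_0}{\eta(t)} \left| \frac{\partial \sigma}{\partial k} \right|
  &= \frac{\lambda_0}{\lambda(t)}\, e^{-k/\lambda(t)}
   = \left( \frac{\lambda_0}{\lambda_f} \right)^{t/T} e^{-k/\lambda(t)}
\end{aligned}
```

With $\lambda_0 = 10$ and $\lambda_f = 0.01$, the prefactor grows from 1 at $t = 0$ to 1000 at $t = T$, while the exponential at $t = T$ equals $e^{-100k}$, which is 1 for $k = 0$ and negligible for any $k \geq 1$.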

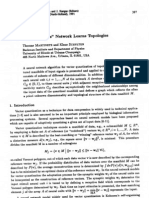

Fig.{ - Sensi

a) - Normalized sensitivity

at top position, k=0

sun [ig PBPOEENCE CD

Mes...

vaeseserpeumanas

ity analysis for different positions in the sorted list of neurons

b) - Normalized sensitivity

at general positions, ke1

¢) Overall Importance

at general positions, ket

Analyzing the family of curves (5) parameterized by $k$ points out interesting features of the training progress. First of all, the graphs in Fig. 1a and Fig. 1b show that normalized sensitivity for the top position in the list (the best-matching neuron with $k = 0$) is substantially different from all others (note the different scale on the graphs).

Second, the importance of the "winning" unit increases while training proceeds, whereas the importances of all other positions decrease. This is analytically derived by verifying that

$$\lim_{\lambda_f \to 0} S_n(k,T) = \begin{cases} \infty & k = 0 \\ 0 & k > 0 \end{cases} \qquad (6)$$

The limits (6) are well approximated in practice; with the parameter settings cited above (e.g., $\lambda_f = 0.01$), final sensitivity $S_n(k,T)$ is very large when $k = 0$ and virtually vanishes even for small positive values of $k$.

Finally, comparing curves shows that sensitivity decreases from higher to lower ranks (i.e., $k' < k'' \Rightarrow S_n(k',t) > S_n(k'',t)$). A quantitative "overall importance", $I(k)$, of the k-th list position is given by

$$I(k) = \int_0^T S_n(k,t)\, dt \qquad (7)$$

Importances (7) give relative weights of the various list positions throughout the entire training process. Integrals can be computed numerically: results for the first 15 positive values of $k$ are reported in Fig. 1c; the importance value for $k = 0$ far exceeds any other (by at least 50 times) and has not been included. Relative importance clearly drops to insignificant values even for small values of $k$. This strongly supports partial sorting.
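The numerical integration mentioned above can be sketched as follows. The sketch assumes that normalized sensitivity takes the form $S_n(k,t) = (\lambda_0/\lambda_f)^{t/T}\, e^{-k/\lambda(t)}$ with exponentially decaying $\lambda(t)$, and uses a plain trapezoidal rule; the function names, the step count and the choice $T = 1$ are illustrative assumptions, not taken from the paper.

```python
import math

def normalized_sensitivity(k, t, T, lam0=10.0, lamf=0.01):
    """Assumed form of S_n(k, t); lam0 and lamf are the paper's settings."""
    growth = (lam0 / lamf) ** (t / T)       # equals lam0 / lambda(t)
    return growth * math.exp(-k * growth / lam0)

def importance(k, T=1.0, steps=20000):
    """Trapezoidal estimate of the integral of S_n(k, t) over [0, T]."""
    dt = T / steps
    total = 0.5 * (normalized_sensitivity(k, 0.0, T)
                   + normalized_sensitivity(k, T, T))
    total += sum(normalized_sensitivity(k, i * dt, T) for i in range(1, steps))
    return total * dt

if __name__ == "__main__":
    top = importance(0)
    for k in range(1, 16):
        # Importance of rank k relative to the best-matching unit.
        print(k, importance(k) / top)
```

Under these assumptions the integral for $k = 0$ comes out roughly two orders of magnitude larger than the one for $k = 1$, and the values for $k \geq 1$ fall steadily as $k$ grows, in line with the qualitative picture described in the text.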

To sum up, the theoretical analysis of the sorting process in NG training leads to a few basic conclusions:

- Correct identification of the best-matching neuron becomes more and more important while training proceeds; conversely, sensitivity for non-top positions tends to a null value during training.

- Sensitivity is not uniformly distributed among list positions. More precisely, it decreases when $k$ increases; however, the gaps among different curves shrink when $k$ increases.

- The specific importances of positions in the list (weighted over the entire training process) indicate that a few top positions convey most of the overall importance; partial sorting is acceptable in practice.
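A partial-sorting variant of the update can be sketched as below. It is only one possible reading of "partial sorting": the `n_top` best-matching units are found exactly with a bounded selection and updated with the exponential reward of [3], while all other units are left untouched. That last choice, like the function name `partial_ng_step`, is an assumption of this sketch.

```python
import heapq
import math

def partial_ng_step(codebook, x, eta, lam, n_top):
    """Update only the n_top best-matching units; return their indices by rank."""
    dists = [math.dist(w, x) for w in codebook]
    # Selecting n_top out of N avoids ordering the whole list.
    winners = heapq.nsmallest(n_top, range(len(codebook)),
                              key=dists.__getitem__)
    for rank, i in enumerate(winners):
        gain = eta * math.exp(-rank / lam)   # assumed reward h(k) = exp(-k / lam)
        codebook[i] = [wj - gain * (wj - xj) for wj, xj in zip(codebook[i], x)]
    return winners
```

With `n_top` equal to the codebook size this reduces to the complete-sorting update; with `n_top = 1` it is a winner-take-all step.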

3. Experimental results

This section discusses practical methods to take maximum advantage of the conclusions drawn by the above theoretical analysis. In particular, two crucial issues are considered: 1) if partial sorting is supported, how many positions in the list must be computed exactly to attain an acceptable distortion? 2) what is the best processing strategy for the positions not included in the partial list? Due to the complexity of a theoretical analysis to this purpose, an empirical approach has been adopted and different domains have been considered to validate conclusions. The procedure used in all tests involves two experimental set-ups:

1. a few (top) list positions are computed exactly and the corresponding neurons adjusted accordingly;

2. uniform noise affects the sorting process for other neurons, whose list positions are somehow offset. In the simulations, noise injection is controlled by how much a rank can be misplaced
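The two set-ups might be simulated along the following lines. This is a hypothetical sketch: the text does not specify how non-top units are treated in the first set-up or how the noise is applied in the second, so marking skipped units with `None`, drawing integer offsets uniformly from `[-max_offset, max_offset]`, and clamping the result to the non-top range are all assumptions.

```python
import random

def partial_exact_ranks(ranks, n_top):
    """Set-up 1: keep exact ranks below n_top; None marks units left unranked."""
    return [r if r < n_top else None for r in ranks]

def noisy_ranks(ranks, n_top, max_offset, rng=random):
    """Set-up 2: exact ranks for the top n_top units, offset ranks elsewhere."""
    n = len(ranks)
    out = []
    for r in ranks:
        if r >= n_top:
            # Misplace the rank by a uniform integer offset (assumed noise model).
            r = r + rng.randint(-max_offset, max_offset)
            r = min(n - 1, max(n_top, r))    # keep it inside the non-top range
        out.append(r)
    return out
```

Here `max_offset` plays the role of the bound on how far a rank can be misplaced. Note that the noisy ranks are not forced to remain a permutation, which is a simplification of this sketch.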
