Digital Communication - Lecture 4 - Signal Space

Uploaded by Ron


k-D Detection Problem / M Hypotheses

Given a communication system with the following M hypotheses:

$$H_i:\ \mathbf{R} = \mathbf{S}_i + \mathbf{n}, \qquad i = 1,\dots,M$$

$\mathbf{S}_i$, $i = 1,\dots,M$, are known vectors which are transmitted by the source, with

$$P(\text{symbol } \mathbf{S}_i \text{ was transmitted}) = P(H_i), \qquad i \in \{1,\dots,M\}$$

$\mathbf{n}$ is a discrete (finite-dimensional) vector of random variables with a known probability density function. The components of the vector are independent:

$$P(x_j < n_j < x_j + dx_j,\ j = 1,\dots,k) = \prod_{j=1}^{k} f_{n_j}(x_j)\,dx_j$$

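Vector Notation

The hypothesis $H_i$ is given by

$$H_i:\ \begin{pmatrix} r_1 \\ \vdots \\ r_k \end{pmatrix} = \begin{pmatrix} s_{i1} \\ \vdots \\ s_{ik} \end{pmatrix} + \begin{pmatrix} n_1 \\ \vdots \\ n_k \end{pmatrix}$$

or in vector notation:

$$H_i:\ \mathbf{R} = \mathbf{S}_i + \mathbf{n}$$

$$\mathbf{R} = (r_1,\dots,r_k)^T; \qquad \mathbf{n} = (n_1,\dots,n_k)^T; \qquad \mathbf{S}_i = (s_{i1},\dots,s_{ik})^T$$

[Figure: the received vector R drawn as the sum of the signal vector S_1 and the noise vector n, with signal coordinates s_11 and s_12.]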

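The optimal receiver

[Block diagram: the Source output s and the Noise n enter the channel g(s, n), which produces R.]

$$H_i:\ \mathbf{R} = g(\mathbf{s}_i, \mathbf{n}) = \mathbf{s}_i + \mathbf{n}, \qquad i \in \{1,\dots,M\}$$

A solution for the M-ary decision case

The optimal receiver chooses the hypothesis $H_j$ that maximizes

$$P(H_j)\, f(\mathbf{R} \mid \mathbf{S}_j)$$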
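The decision rule above can be sketched in a few lines of Python. This is only an illustration under an added assumption: the noise is taken to be white Gaussian with variance N0/2 per component, as in the Gaussian channel example later in the lecture. The function name `map_decide` and the sample signal set are hypothetical.

```python
import numpy as np

def map_decide(r, signals, priors, n0):
    """Return the index j maximizing P(H_j) f(R | S_j).

    Assumption (not part of this slide): every noise component is
    zero-mean Gaussian with variance n0/2, independent of the others.
    """
    r = np.asarray(r, dtype=float)
    signals = np.asarray(signals, dtype=float)
    # log f(R | S_j), up to a constant that is common to all j
    log_lik = -np.sum((r - signals) ** 2, axis=1) / n0
    return int(np.argmax(np.log(priors) + log_lik))

# hypothetical signal set with M = 3 hypotheses in k = 2 dimensions
signals = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]])
priors = np.array([1 / 3, 1 / 3, 1 / 3])
decision = map_decide([0.9, 0.2], signals, priors, n0=1.0)
```

With equal priors the rule reduces to picking the signal closest to R; unequal priors shift the decision toward the more likely hypotheses.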

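Error Probability (cont.)

Assuming $P(H_i) = 1/M$, $1 \le i \le M$, the overall error probability is the average of the message error probabilities:

$$P_E = \frac{1}{M}\sum_{i=1}^{M}\sum_{\substack{j=1 \\ j \ne i}}^{M} \int_{A_j} f(\mathbf{R} \mid H_i)\, d\mathbf{R} = \frac{1}{M}\sum_{i=1}^{M}\sum_{\substack{j=1 \\ j \ne i}}^{M} \int G_j(\mathbf{R})\, f(\mathbf{R} \mid H_i)\, d\mathbf{R}$$

where $A_j$ is the region in which the receiver decides $H_j$ and

$$G_j(\mathbf{R}) = \begin{cases} 1, & \mathbf{R} \in A_j \\ 0, & \mathbf{R} \notin A_j \end{cases}$$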

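Error Probability (cont.)

The trick

a. $\mathbf{R} \in A_j \Rightarrow f(\mathbf{R} \mid H_j) \ge f(\mathbf{R} \mid H_k)$ for all $k$.

Therefore, for a given $i$,

$$\frac{f(\mathbf{R} \mid H_j)}{f(\mathbf{R} \mid H_i)} \ge 1, \qquad \mathbf{R} \in A_j$$

b.

$$\left[\frac{f(\mathbf{R} \mid H_j)}{f(\mathbf{R} \mid H_i)}\right]^{s} \ge 1, \qquad \mathbf{R} \in A_j,\ 0 \le s \le 1$$

Thus

$$G_j(\mathbf{R}) \le \left[\frac{f(\mathbf{R} \mid H_j)}{f(\mathbf{R} \mid H_i)}\right]^{s}, \qquad 0 \le s \le 1$$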


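Upper bound - Bhattacharyya bound

and for $s = 1/2$ we obtain

$$P_E = \frac{1}{M}\sum_{i=1}^{M}\sum_{\substack{j=1 \\ j \ne i}}^{M} \int G_j(\mathbf{R})\, f(\mathbf{R} \mid H_i)\, d\mathbf{R}$$

$$\le \frac{1}{M}\sum_{i=1}^{M}\sum_{\substack{j=1 \\ j \ne i}}^{M} \int \left(\frac{f(\mathbf{R} \mid H_j)}{f(\mathbf{R} \mid H_i)}\right)^{1/2} f(\mathbf{R} \mid H_i)\, d\mathbf{R}$$

$$= \frac{1}{M}\sum_{i=1}^{M}\sum_{\substack{j=1 \\ j \ne i}}^{M} \int \big(f(\mathbf{R} \mid H_j)\, f(\mathbf{R} \mid H_i)\big)^{1/2}\, d\mathbf{R}$$

Thus we get the bound

$$P_E \le (M-1)\max_{i \ne j} \int \big(f(\mathbf{R} \mid H_j)\, f(\mathbf{R} \mid H_i)\big)^{1/2}\, d\mathbf{R}$$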

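Example: Gaussian Channel, M Hypotheses, K Dimensions

$$f(\mathbf{R} \mid H_i) = \left(\frac{1}{\pi N_0}\right)^{K/2} \exp\!\left(-\frac{\|\mathbf{R}-\mathbf{S}_i\|^2}{N_0}\right) = \left(\frac{1}{\pi N_0}\right)^{K/2} \exp\!\left(-\frac{1}{N_0}\sum_{k=1}^{K}(r_k - s_{ik})^2\right)$$

Thus,

$$I = \big(f(\mathbf{R} \mid H_j)\, f(\mathbf{R} \mid H_i)\big)^{1/2} = \left(\frac{1}{\pi N_0}\right)^{K/2} \exp\!\left(-\frac{\|\mathbf{R}-\mathbf{S}_i\|^2 + \|\mathbf{R}-\mathbf{S}_j\|^2}{2N_0}\right)$$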

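It is easy to show that

$$\|\mathbf{R}-\mathbf{S}_i\|^2 + \|\mathbf{R}-\mathbf{S}_j\|^2 = 2\left\|\mathbf{R} - \frac{\mathbf{S}_i+\mathbf{S}_j}{2}\right\|^2 + \frac{1}{2}\|\mathbf{S}_i-\mathbf{S}_j\|^2$$

Substituting this we get

$$I = \left(\frac{1}{\pi N_0}\right)^{K/2} \exp\!\left(-\frac{\|\mathbf{S}_i-\mathbf{S}_j\|^2}{4N_0}\right) \exp\!\left(-\frac{1}{N_0}\left\|\mathbf{R} - \frac{\mathbf{S}_i+\mathbf{S}_j}{2}\right\|^2\right)$$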

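Integrating over all the dimensions we get

$$P_E \le (M-1)\max_{i \ne j} \exp\!\left(-\frac{\|\mathbf{S}_i-\mathbf{S}_j\|^2}{4N_0}\right) \int \left(\frac{1}{\pi N_0}\right)^{K/2} \exp\!\left(-\frac{1}{N_0}\left\|\mathbf{R} - \frac{\mathbf{S}_i+\mathbf{S}_j}{2}\right\|^2\right) d\mathbf{R}$$

Or

$$P_E \le (M-1)\max_{i \ne j} \exp\!\left(-\frac{\|\mathbf{S}_i-\mathbf{S}_j\|^2}{4N_0}\right) \prod_{k=1}^{K} \int \left(\frac{1}{\pi N_0}\right)^{1/2} \exp\!\left(-\frac{1}{N_0}\left(r_k - \frac{s_{ik}+s_{jk}}{2}\right)^2\right) dr_k$$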

Since each inner integral equals one (it is the integral of a Gaussian density), we get a simple bound:

$$P_E \le (M-1)\max_{i \ne j} \exp\!\left(-\frac{\|\mathbf{S}_i-\mathbf{S}_j\|^2}{4N_0}\right)$$
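As a rough numerical check, the bound can be evaluated for a concrete constellation and compared with a simulated error rate. The sketch below is illustrative only: the four-point constellation, the value of N0 and the function names are assumptions, and the simulation uses the minimum-distance receiver, which is the optimal one for equal priors in white Gaussian noise of variance N0/2 per component.

```python
import itertools
import numpy as np

def bhattacharyya_bound(signals, n0):
    """(M-1) * max over i != j of exp(-|S_i - S_j|^2 / (4 N0))."""
    signals = np.asarray(signals, dtype=float)
    m = len(signals)
    worst = max(
        np.exp(-np.sum((signals[i] - signals[j]) ** 2) / (4.0 * n0))
        for i, j in itertools.combinations(range(m), 2)
    )
    return (m - 1) * worst

def simulated_error_rate(signals, n0, trials=20000, seed=0):
    """Monte Carlo error rate of the minimum-distance receiver, equal priors."""
    rng = np.random.default_rng(seed)
    signals = np.asarray(signals, dtype=float)
    m, k = signals.shape
    sent = rng.integers(0, m, size=trials)
    noise = rng.normal(0.0, np.sqrt(n0 / 2.0), size=(trials, k))
    received = signals[sent] + noise
    # squared distance from every received vector to every signal
    d2 = np.sum((received[:, None, :] - signals[None, :, :]) ** 2, axis=2)
    return float(np.mean(np.argmin(d2, axis=1) != sent))

# hypothetical constellation: four points at the corners of a square
square = np.array([[1, 1], [1, -1], [-1, 1], [-1, -1]], dtype=float)
bound = bhattacharyya_bound(square, n0=0.5)
estimate = simulated_error_rate(square, n0=0.5)
```

The bound depends only on the smallest distance between signal points, so it is loose when only a few pairs are that close.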

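The Theorem of Irrelevance

Given a channel whose output $\mathbf{R} = (\mathbf{R}_1, \mathbf{R}_2)$ is composed of two sub-vectors, with pdf

$$f(\mathbf{R}_1, \mathbf{R}_2 \mid \mathbf{S}_j) = \lim_{d\mathbf{x} \to 0} \frac{\Pr[\mathbf{x} < \mathbf{R} < \mathbf{x} + d\mathbf{x} \mid \mathbf{S}_j]}{d\mathbf{x}}$$

[Block diagram: $\{\mathbf{S}_j\}$, $j = 1,\dots,M$, enters the Channel $f(\mathbf{R}_1, \mathbf{R}_2 \mid \mathbf{S}_j)$, whose outputs $\mathbf{R}_1$ and $\mathbf{R}_2$ enter the Receiver.]

An optimum receiver may disregard the vector $\mathbf{R}_2$ if and only if

$$f(\mathbf{R}_2 \mid \mathbf{S}_j, \mathbf{R}_1) = f(\mathbf{R}_2 \mid \mathbf{R}_1)$$

Proof:

In general $p(a,b) = p(a)\,p(b \mid a)$. Thus,

$$f(\mathbf{R}_1, \mathbf{R}_2 \mid \mathbf{S}_j) = f(\mathbf{R}_1 \mid \mathbf{S}_j)\, f(\mathbf{R}_2 \mid \mathbf{S}_j, \mathbf{R}_1) = f(\mathbf{R}_1 \mid \mathbf{S}_j)\, f(\mathbf{R}_2 \mid \mathbf{R}_1)$$

The factor $f(\mathbf{R}_2 \mid \mathbf{R}_1)$ does not depend on $j$, so it scales $P(H_j)\, f(\mathbf{R}_1, \mathbf{R}_2 \mid \mathbf{S}_j)$ equally for every hypothesis and does not change which $j$ attains the maximum.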
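A small simulation can make the theorem concrete. The sketch below assumes the simplest setting, in which R_1 is the signal plus Gaussian noise and R_2 is pure noise independent of both the signal and R_1; the signal set, noise level and variable names are hypothetical. The joint decision metric differs from the R_1-only metric by a term that is the same for every hypothesis, so both receivers decide identically.

```python
import numpy as np

rng = np.random.default_rng(1)
signals = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]])  # hypothetical S_j
n0 = 1.0
trials = 1000

sent = rng.integers(0, len(signals), size=trials)
r1 = signals[sent] + rng.normal(0.0, np.sqrt(n0 / 2), size=(trials, 2))
r2 = rng.normal(0.0, np.sqrt(n0 / 2), size=(trials, 2))  # noise only, independent of S_j

# log f(R1 | S_j) for every trial and hypothesis (common constants dropped)
log_f_r1 = -np.sum((r1[:, None, :] - signals[None, :, :]) ** 2, axis=2) / n0
# log f(R2 | R1): one number per trial, identical for all hypotheses
log_f_r2 = -np.sum(r2 ** 2, axis=1, keepdims=True) / n0

decide_r1_only = np.argmax(log_f_r1, axis=1)
decide_joint = np.argmax(log_f_r1 + log_f_r2, axis=1)
same = bool(np.all(decide_r1_only == decide_joint))
```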

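Examples

Case A: [Block diagram with labels $\{\mathbf{S}_j\}$, $j = 1,\dots,M$; noise $\mathbf{n}_1$; outputs $\mathbf{R}_1$ and $\mathbf{R}_2 = \mathbf{n}_2$.]

Case B: [Block diagram with labels $\{\mathbf{S}_j\}$, $j = 1,\dots,M$; noises $\mathbf{n}_1$ and $\mathbf{n}_2$; outputs $\mathbf{R}_1$ and $\mathbf{R}_2$.]

What about this case?

[Block diagram with labels $\{\mathbf{S}_j\}$, $j = 1,\dots,M$; noises $\mathbf{n}_1$ and $\mathbf{n}_2$; outputs $\mathbf{R}_1$ and $\mathbf{R}_2$.]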

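Sufficient Statistic - Definition

We say that a mapping $T: \mathbb{R}^{D} \to \mathbb{R}^{D'}$ forms a sufficient statistic for the densities $f_{\mathbf{r} \mid M=1}(\cdot), \dots, f_{\mathbf{r} \mid M=\mathsf{M}}(\cdot)$ if there are $\mathsf{M}$ functions

$$T(\mathbf{r}_{obs}) \mapsto P_m(\{\pi_m\}, T(\mathbf{r}_{obs})), \qquad m = 1,\dots,\mathsf{M}$$

from $\mathbb{R}^{D'}$ to $[0,1]$ (with $\{\pi_m\}$ taken here to denote the prior probabilities of the messages) such that the vector

$$\big(P_1(\{\pi_m\}, T(\mathbf{r}_{obs})), \dots, P_{\mathsf{M}}(\{\pi_m\}, T(\mathbf{r}_{obs}))\big)$$

is a probability vector and such that this probability vector is equal to

$$\big(\Pr(M = 1 \mid Y = \mathbf{r}_{obs}), \dots, \Pr(M = \mathsf{M} \mid Y = \mathbf{r}_{obs})\big)$$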

The objectives of the current lecture

In this lecture we will consider the following detection problem.

Given:

$$H_i:\ r(t) = s_i(t) + n(t), \qquad 0 \le t \le T,\ i = 1,\dots,M$$

- $s_i(t)$ are known to the receiver
- $n(t)$ is additive noise with known statistics
- $P(H_i)$ are known

Find an optimal receiver in the sense of minimum probability of error.

In order to solve this problem we want to make use of the results of the previous lecture. The major differences are:

1. $s_i(t)$ is a waveform
2. $n(t)$ is an additive noise process and not a random vector

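Example: Pulse Amplitude Modulation

(The signs distinguishing the two hypotheses are not legible in the source; antipodal amplitudes are assumed here.)

$$H_0:\ r(t) = -g(t) + n(t), \qquad 0 \le t \le T$$

$$H_1:\ r(t) = g(t) + n(t), \qquad 0 \le t \le T$$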

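FSK Transmitter

[Block diagram: the bitstream 1 0 1 1 0 0 0 1 triggers one of two pulse generators, $p_0(t)$ for "0" and $p_1(t)$ for "1"; the generator outputs are summed. A second panel sketches the two pulses along the frequency axis f and the time axis t.]

The transmitted waveform for this bitstream is

$$p_1(t) + p_0(t-T) + p_1(t-2T) + p_1(t-3T) + p_0(t-4T) + \dots$$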
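The transmitter structure can be imitated with a short Python sketch. The slide does not specify the pulse shapes, so the cosine bursts, the two frequencies, the bit duration and the sampling rate below are all assumptions chosen for illustration, as is the function name `fsk_waveform`.

```python
import numpy as np

def fsk_waveform(bits, f0, f1, bit_time, fs):
    """Concatenate one tone burst per bit: frequency f0 for '0', f1 for '1'."""
    samples_per_bit = int(round(bit_time * fs))
    t = np.arange(samples_per_bit) / fs      # time axis inside one bit interval
    p0 = np.cos(2 * np.pi * f0 * t)          # assumed pulse p_0(t)
    p1 = np.cos(2 * np.pi * f1 * t)          # assumed pulse p_1(t)
    return np.concatenate([p1 if b else p0 for b in bits])

bits = [1, 0, 1, 1, 0, 0, 0, 1]              # bitstream shown on the slide
wave = fsk_waveform(bits, f0=1000.0, f1=2000.0, bit_time=1e-3, fs=48000.0)
```

Each bit selects which pulse occupies its interval, which mirrors the two triggered pulse generators feeding the adder.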

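Signal Space Representation

Euclidean vector space

Let $\mathbb{R}^k$ be the set of all ordered k-tuples $\mathbf{x} = (x_1, x_2, \dots, x_k)$, where the $x_i$ are real numbers known as the coordinates of $\mathbf{x}$. The elements of $\mathbb{R}^k$ are known as points or vectors. We define the following operations:

(I) Addition: $\mathbf{x} + \mathbf{y} = (x_1 + y_1, x_2 + y_2, \dots, x_k + y_k)$

(II) Multiplication by a scalar: $\alpha\mathbf{x} = (\alpha x_1, \alpha x_2, \dots, \alpha x_k)$

(III) Inner product (or scalar product): $(\mathbf{x}, \mathbf{y}) = \sum_{i=1}^{k} x_i y_i$

(IV) Norm of $\mathbf{x}$: $\|\mathbf{x}\| = (\mathbf{x}, \mathbf{x})^{1/2} = \left(\sum_{i=1}^{k} x_i x_i^{*}\right)^{1/2}$, with $\mathbf{x} \in L^2$, i.e. $\|\mathbf{x}\|^2 < \infty$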

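Inner Product - Scalar Product

I. $(\mathbf{x}, \mathbf{y}) = (\mathbf{y}, \mathbf{x})^{*}$

II. $(\alpha\mathbf{x}, \mathbf{y}) = \alpha(\mathbf{x}, \mathbf{y})$

III. $(\mathbf{x}_1 + \mathbf{x}_2, \mathbf{y}) = (\mathbf{x}_1, \mathbf{y}) + (\mathbf{x}_2, \mathbf{y})$

IV. $(\mathbf{x}, \mathbf{x}) = 0$ iff $\mathbf{x} = (0, \dots, 0)$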

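Example - 3-D

Let $\mathbf{a}$, $\mathbf{b}$ be vectors in 3-D space, and let $\mathbf{e}_x = (1,0,0)$, $\mathbf{e}_y = (0,1,0)$, $\mathbf{e}_z = (0,0,1)$ be unit vectors. Therefore,

$$\mathbf{a} = (4,1,2) = (\mathbf{a}, \mathbf{e}_x)\mathbf{e}_x + (\mathbf{a}, \mathbf{e}_y)\mathbf{e}_y + (\mathbf{a}, \mathbf{e}_z)\mathbf{e}_z$$

$$(\mathbf{a}, \mathbf{e}_x) = ((4,1,2),(1,0,0)) = 4$$

$$(\mathbf{a}, \mathbf{e}_y) = ((4,1,2),(0,1,0)) = 1$$

$$(\mathbf{a}, \mathbf{e}_z) = ((4,1,2),(0,0,1)) = 2$$

$$\mathbf{a} = 4(1,0,0) + 1(0,1,0) + 2(0,0,1)$$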

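Example - 3-D

If

$$\mathbf{a} = (4,1,2) = 4\mathbf{e}_x + \mathbf{e}_y + 2\mathbf{e}_z$$

$$\mathbf{b} = (-2,1,-3) = -2\mathbf{e}_x + \mathbf{e}_y - 3\mathbf{e}_z$$

then

$$\mathbf{a} + \mathbf{b} = 2\mathbf{e}_x + 2\mathbf{e}_y - \mathbf{e}_z$$

$$(\mathbf{a}, \mathbf{b}) = 4\cdot(-2) + 1\cdot 1 + 2\cdot(-3) = -13$$

$$(\mathbf{a}, \mathbf{a}) = 4^2 + 1^2 + 2^2 = 21 \ \Rightarrow\ \|\mathbf{a}\| = \sqrt{21}$$

$$(\mathbf{b}, \mathbf{b}) = 2^2 + 1^2 + 3^2 = 14 \ \Rightarrow\ \|\mathbf{b}\| = \sqrt{14}$$

$$\cos(\theta) = \frac{(\mathbf{a}, \mathbf{b})}{\|\mathbf{a}\|\,\|\mathbf{b}\|} = \frac{-13}{\sqrt{14 \cdot 21}} = \frac{-13}{7\sqrt{6}}$$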
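The arithmetic of this example is easy to confirm numerically. The snippet below is a minimal check using NumPy; the variable names are chosen here for illustration.

```python
import numpy as np

a = np.array([4.0, 1.0, 2.0])
b = np.array([-2.0, 1.0, -3.0])

inner = float(np.dot(a, b))            # inner product (a, b)
norm_a = float(np.linalg.norm(a))      # square root of (a, a)
norm_b = float(np.linalg.norm(b))      # square root of (b, b)
cos_theta = inner / (norm_a * norm_b)  # cosine of the angle between a and b
```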

The Fourier Series

We also know about the Fourier series of an integrable function over the interval [0,T]:

$$f(t) = \sum_{n=-\infty}^{\infty} f_n \frac{1}{\sqrt{T}} \exp\!\left(j\frac{2\pi n t}{T}\right); \qquad f_n = \int_0^T f(t)\, \frac{1}{\sqrt{T}} \exp\!\left(-j\frac{2\pi n t}{T}\right) dt$$

and $f(t)$ is equivalent to $\{f_n\}$.

Can we find a different way to represent a set of waveforms for detection?

Hilbert Space: Definition

A sequence $\{h_n\}$ in a linear vector space is said to be a Cauchy sequence if for every $\varepsilon > 0$ there is an integer $N$ such that

$$\|h_n - h_m\| < \varepsilon \quad \text{if } n, m > N$$

A vector space with a norm is complete if every Cauchy sequence converges to an element of the space.

A Hilbert space is a complete linear vector space with a scalar product.

Hilbert Space for real (complex) functions

H is the collection of all real (complex) functions on the interval [0,T] such that

$$\int_0^T f(t)\, f^{*}(t)\, dt < \infty, \qquad (f \in L^2)$$

The inner product (scalar product) is defined as

$$(f, g) = \int_0^T f(t)\, g^{*}(t)\, dt < \infty, \qquad (f, g \in L^2)$$

Definitions

1. A family of functions $\{\varphi_k\}_{k=1}^{\infty}$ is called an orthogonal family if $(\varphi_i, \varphi_j) = 0$ for $i \ne j$.

2. A family of functions $\{\varphi_k\}_{k=1}^{\infty}$ is called an orthonormal family if

$$(\varphi_i, \varphi_j) = \begin{cases} 1, & i = j \\ 0, & i \ne j \end{cases}$$

3. A family of functions $\{\varphi_k\}_{k=1}^{\infty}$ is called a complete family if for every $f(t)$ that has energy different from zero, $\int_0^T f(t)\, f^{*}(t)\, dt = (f, f) \ne 0$, there is at least one $k$ such that

$$\int_0^T f(t)\, \varphi_k^{*}(t)\, dt \ne 0$$

Riesz-Fischer Theorem

A complete orthonormal family of functions includes all possible orthonormal vectors in the space. No other vector outside this set can possibly be orthogonal to all the vectors of the family.

The Riesz-Fischer Theorem

Let $\{\varphi_k\}_{k=1}^{\infty}$ be orthonormal and complete on [0,T], and $f(t) \in L^2$. Then

$$\lim_{N \to \infty} \int_0^T \Big| f(t) - \sum_{i=1}^{N} f_i \varphi_i(t) \Big|^2 dt = 0, \qquad f_i = (f, \varphi_i(t))$$

An orthonormal family of functions is called complete in the vector space $L^2[0,T]$ iff, for $f(t) \in L^2[0,T]$,

$$(f, \varphi_k) = 0 \ \ \forall k \ \Rightarrow\ \|f\| = 0$$

This may be stated as follows: the only function orthogonal to all the members of the complete set is the zero function, in the sense of the norm.

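Example

The Fourier series can be written as

$$f(t) = \sum_{n} f_n \frac{1}{\sqrt{T}} \exp\!\left(j\frac{2\pi n t}{T}\right) = \sum_{n} f_n \varphi_n(t)$$

$$f_n = \int_0^T f(t)\, \frac{1}{\sqrt{T}} \exp\!\left(-j\frac{2\pi n t}{T}\right) dt = (f, \varphi_n)$$

Thus we can express the Fourier representation as

$$f(t) = \sum_{i} f_i \varphi_i(t); \qquad \varphi_i(t) = \frac{1}{\sqrt{T}} \exp\!\left(j\frac{2\pi i t}{T}\right)$$

The above function series satisfies

$$(\varphi_i(t), \varphi_j(t)) = \delta_{ij} = \begin{cases} 1, & i = j \\ 0, & i \ne j \end{cases}$$

so the $\varphi_i(t)$ form an orthonormal base. In a similar way we have

$$\|f(t)\|^2 = \sum_{i} |f_i|^2 = E \quad \text{(energy)}$$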

Parseval's theorem:

Let $f(t)$ and $g(t)$ be real functions which can be represented by a function series,

$$f(t) = \sum_{i=1}^{\infty} f_i \varphi_i(t) \quad \text{and} \quad g(t) = \sum_{i=1}^{\infty} g_i \varphi_i(t)$$

where $f_i = (f(t), \varphi_i(t))$ and $g_i = (g(t), \varphi_i(t))$. Then

a. $\displaystyle \int_0^T f(t)\, g^{*}(t)\, dt = (f, g) = \sum_{i=1}^{\infty} f_i g_i^{*}$

b. $\displaystyle \int_0^T f(t)\, f^{*}(t)\, dt = (f, f) = \sum_{i=1}^{\infty} |f_i|^2 = E$ (energy)
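Both the orthonormality of the exponential family and part b of Parseval's theorem can be checked numerically. The following sketch approximates the integrals over [0, T] by Riemann sums; the test signal, the number of samples and the range of harmonics are assumptions made for illustration.

```python
import numpy as np

T = 1.0
n_samples = 4096
t = np.arange(n_samples) * (T / n_samples)
dt = T / n_samples

def phi(n):
    """Orthonormal exponential phi_n(t) = exp(j 2 pi n t / T) / sqrt(T)."""
    return np.exp(2j * np.pi * n * t / T) / np.sqrt(T)

def inner(f, g):
    """(f, g) = integral over [0, T] of f(t) g*(t) dt, as a Riemann sum."""
    return np.sum(f * np.conj(g)) * dt

# hypothetical test signal containing harmonics 0, 3 and 5 only
f = 1.0 + 2.0 * np.cos(2 * np.pi * 3 * t / T) + np.sin(2 * np.pi * 5 * t / T)

orders = range(-8, 9)
coeffs = np.array([inner(f, phi(n)) for n in orders])   # f_n = (f, phi_n)
energy_time = inner(f, f).real                          # integral of |f(t)|^2
energy_coeffs = float(np.sum(np.abs(coeffs) ** 2))      # sum of |f_n|^2
```

The two energies agree here because the test signal has no harmonics outside the range that was projected; a signal with higher harmonics would need more coefficients.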

Signal Space Representation

Any signal $s_i(t)$ in a set of M waveforms $\{s_i(t),\ 1 \le i \le M\}$ can be represented by a linear combination of a set of N orthonormal functions $\{\varphi_j(t),\ 1 \le j \le N\}$, where $N \le M$:

$$s_i(t) = \sum_{k=1}^{N} s_{ik}\, \varphi_k(t), \qquad i = 1, 2, \dots, M,\ 0 \le t \le T \qquad (1)$$

$$s_{ik} = (s_i, \varphi_k(t)) = \int_0^T s_i(t)\, \varphi_k^{*}(t)\, dt$$

$s_{ik}$ is the projection of $s_i(t)$ on the direction of $\varphi_k(t)$.

Signal Space Representation

The representation (1) has the following matrix form:

$$s_i(t) = (s_{i1}, \dots, s_{iN}) \begin{pmatrix} \varphi_1(t) \\ \vdots \\ \varphi_N(t) \end{pmatrix}$$

Thus, using the set of basis functions, each signal $s_i(t)$ maps to a set of N real numbers, which is an N-dimensional real-valued vector

$$\mathbf{s}_i = (s_{i1}, \dots, s_{iN})$$

This is a 1-1 correspondence between the signal set (or equivalently, the message symbol set) and the N-dimensional vector space.
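The mapping from waveforms to coordinate vectors can be sketched numerically by sampling the waveforms and replacing the integrals with sums. Everything concrete below is an assumption made for illustration: the two rectangular basis functions, the three piecewise-constant signals and the sampling rate.

```python
import numpy as np

T = 2.0
fs = 1000                                   # samples per unit time (assumed)
t = np.arange(int(T * fs)) / fs
dt = 1.0 / fs

# hypothetical orthonormal basis: two unit-energy rectangular pulses
phi = np.array([
    np.where(t < 1.0, 1.0, 0.0),
    np.where(t >= 1.0, 1.0, 0.0),
])

# hypothetical signal set of piecewise-constant waveforms
signals = np.array([
    np.ones_like(t),
    np.where(t < 1.0, 1.0, -1.0),
    np.where(t < 1.0, 2.0, 0.0),
])

# s_ik = integral of s_i(t) phi_k(t) dt, one row of coordinates per signal
coords = signals @ phi.T * dt
# resynthesis: s_i(t) = sum over k of s_ik phi_k(t)
rebuilt = coords @ phi
```

Here each row of `coords` is the vector s_i, and `rebuilt` recovers the sampled waveforms because every signal lies in the span of the chosen basis.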

Gram-Schmidt Orthogonalization

Step 1:

$$g_1(t) = s_1(t)$$

$$\varphi_1(t) = \frac{g_1(t)}{\|g_1(t)\|} \ \text{(unit length)} = \frac{s_1(t)}{\sqrt{E_1}}, \qquad E_1 = \int_0^T s_1^2(t)\, dt$$

Note:

$$s_1(t) = \sqrt{E_1}\, \varphi_1(t) = s_{11}\, \varphi_1(t)$$

$\varphi_1(t)$ has unit energy, and $s_{11} = \sqrt{E_1}$.
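The full procedure repeats this step for each remaining signal after removing its projections on the basis functions already found. A minimal sketch on sampled waveforms follows; the four piecewise-constant signals are a hypothetical set chosen for illustration (they are not taken from the lecture's own example), and the tolerance used to detect linearly dependent signals is an assumption.

```python
import numpy as np

def gram_schmidt(signals, dt, tol=1e-9):
    """Return (basis, coords) with orthonormal basis rows and signals ~= coords @ basis."""
    basis = []
    for s in signals:
        g = s.astype(float).copy()
        for phi in basis:
            g -= np.sum(s * phi) * dt * phi   # subtract the projection s_ik * phi_k
        energy = np.sum(g ** 2) * dt
        if energy > tol:                      # skip signals that add no new dimension
            basis.append(g / np.sqrt(energy))
    basis = np.array(basis)
    coords = signals @ basis.T * dt           # s_ik = (s_i, phi_k)
    return basis, coords

fs = 1000
t = np.arange(3 * fs) / fs
# hypothetical piecewise-constant signals on [0, 3)
signals = np.array([
    np.where(t < 2.0, 1.0, 0.0),
    np.where(t < 1.0, 1.0, np.where(t < 2.0, -1.0, 0.0)),
    np.where(t < 2.0, 1.0, -1.0),
    -np.ones_like(t),
])
basis, coords = gram_schmidt(signals, dt=1.0 / fs)
```

For this set the fourth signal is a linear combination of the first three basis functions, so only N = 3 basis functions are produced for M = 4 signals.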


Example: 4 Signals - Gram-Schmidt procedure


The Procedure for the example


The final results

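The set of signals is equivalent to the vectors

$$s_1(t) \leftrightarrow \mathbf{s}_1 = (\sqrt{2}, 0, 0)$$

$$s_2(t) \leftrightarrow \mathbf{s}_2 = (0, \sqrt{2}, 0)$$

$$s_3(t) \leftrightarrow \mathbf{s}_3 = (0, \sqrt{2}, 1)$$

$$s_4(t) \leftrightarrow \mathbf{s}_4 = (\sqrt{2}, 0, 1)$$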

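Another Example

Gram-Schmidt procedure for the set of functions $\{1, t, t^2, t^3, t^4\}$, $t \in [-1, 1]$. The resulting orthonormal functions are

$$\frac{1}{\sqrt{2}},\quad \sqrt{\frac{3}{2}}\, t,\quad \frac{1}{2}\sqrt{\frac{5}{2}}\,(-1 + 3t^2),\quad \frac{1}{2}\sqrt{\frac{7}{2}}\, t\,(-3 + 5t^2),\quad \frac{3}{8\sqrt{2}}\,(3 - 30t^2 + 35t^4)$$

[Plot of the five functions for t from -1 to 1.]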
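This example can be reproduced with exact polynomial arithmetic. The sketch below uses NumPy's `Polynomial` class and computes the inner product on [-1, 1] from the antiderivative; the helper name `inner` and the loop structure are choices made here, not part of the lecture.

```python
import numpy as np
from numpy.polynomial import Polynomial

def inner(p, q):
    """(p, q) = integral of p(t) q(t) over [-1, 1], via the antiderivative."""
    antiderivative = (p * q).integ()
    return antiderivative(1.0) - antiderivative(-1.0)

basis = []
for power in range(5):
    g = Polynomial([0.0] * power + [1.0])        # the monomial t**power
    for phi in basis:
        g = g - phi * inner(g, phi)              # remove the projection on phi
    basis.append(g * (1.0 / np.sqrt(inner(g, g))))

# coefficient arrays, lowest power first
coefficients = [phi.coef for phi in basis]
```

The resulting functions are the Legendre polynomials scaled to unit energy on [-1, 1].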

Conclusions

Any set of M signals can be represented by a finite set of N basis functions, with N <= M.

The basis functions can be used to generate the signals.

The main issue is how to treat the noise process.

Revisit of Random Process

A stochastic process X(t) is defined as an ensemble of sample functions. The values of the process at time instants $t_1 < t_2 < t_3 < \dots < t_n$ are given by the random variables $x(t_1), x(t_2), \dots, x(t_n)$.

The random variables $x(t_1), x(t_2), \dots, x(t_n)$ are characterized statistically by their joint pdf

$$\text{pdf}(x(t_1), x(t_2), \dots, x(t_n))$$

A stochastic process that is stationary in the narrow sense satisfies that the probability law of the vector is invariant to a time shift:

$$\text{pdf}(x(t_1), x(t_2), \dots, x(t_n)) = \text{pdf}(x(t_1 + a), x(t_2 + a), \dots, x(t_n + a))$$

Autocorrelation - Wide Sense Stationary Process

A stochastic process that is stationary in the wide sense satisfies that the mean of the process is constant and the covariance is a function of the time difference.

The mean of X(t):

$$E(X(t_1)) = \int x_1\, p(X(t_1) = x_1)\, dx_1$$

The autocorrelation function of X(t):

$$R_x(t, s) = E(X(t) X(s)) = \iint x_1 x_2\, p(X(t) = x_1, X(s) = x_2)\, dx_1\, dx_2$$

The covariance function of X(t):

$$K_x(t, s) = E(X(t) X(s)) - E(X(t))\, E(X(s)) = R_x(t, s) - E(X(t))\, E(X(s))$$

A wide sense stationary process:

$$E(X(t)) = \text{constant}; \qquad R_x(t, s) = R_x(|t - s|)$$
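To illustrate the wide-sense stationarity conditions, the sketch below estimates the mean and the autocorrelation of a random-phase sinusoid by averaging over many sample functions. The choice of process, its frequency and the ensemble size are assumptions made here; for this process the theoretical values are a zero mean and R_x(t, s) = 0.5 cos(2 pi f0 (t - s)).

```python
import numpy as np

rng = np.random.default_rng(0)
f0 = 2.0                                             # hypothetical frequency
theta = rng.uniform(0.0, 2 * np.pi, size=200_000)    # one random phase per sample function

def x(t):
    """Values of all sample functions at time t (random-phase sinusoid)."""
    return np.cos(2 * np.pi * f0 * t + theta)

def autocorr(t, s):
    """Ensemble estimate of R_x(t, s) = E[X(t) X(s)]."""
    return float(np.mean(x(t) * x(s)))

mean_at_t = float(np.mean(x(0.3)))
r_a = autocorr(0.10, 0.15)                 # time difference 0.05
r_b = autocorr(0.70, 0.75)                 # same difference, shifted pair of instants
r_theory = 0.5 * np.cos(2 * np.pi * f0 * 0.05)
```

The two estimates agree up to sampling error because the autocorrelation depends only on the time difference.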

Reminder: Circular Gaussian random noise vector

[Surface plot of $p(\mathbf{n})$ over the $(n_1, n_2)$ plane.]

A circular Gaussian real random k-dimensional vector is defined as a zero-mean i.i.d. (independent and identically distributed) Gaussian vector with probability density function

$$p(\mathbf{n}) = p(n_1, n_2, \dots, n_k) = \frac{1}{(2\pi\sigma^2)^{k/2}} \exp\!\left(-\frac{n_1^2 + n_2^2 + \dots + n_k^2}{2\sigma^2}\right) = \frac{1}{(2\pi\sigma^2)^{k/2}} \exp\!\left(-\frac{\|\mathbf{n}\|^2}{2\sigma^2}\right)$$
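Because the components are i.i.d., the joint density equals the product of k identical one-dimensional Gaussian densities. A minimal numerical check follows; the function names, the sample vector and the variance are chosen here for illustration.

```python
import numpy as np

def circular_gaussian_pdf(n, sigma2):
    """p(n) = (2 pi sigma^2)^(-k/2) * exp(-|n|^2 / (2 sigma^2))."""
    n = np.asarray(n, dtype=float)
    k = n.shape[-1]
    return (2 * np.pi * sigma2) ** (-k / 2) * np.exp(-np.sum(n ** 2, axis=-1) / (2 * sigma2))

def scalar_gaussian_pdf(x, sigma2):
    """Zero-mean one-dimensional Gaussian density with variance sigma2."""
    return np.exp(-x ** 2 / (2 * sigma2)) / np.sqrt(2 * np.pi * sigma2)

n = np.array([0.3, -1.2, 0.7])
joint = circular_gaussian_pdf(n, sigma2=0.5)
product = np.prod(scalar_gaussian_pdf(n, sigma2=0.5))
```

Setting sigma2 = N0/2 gives the noise density used in the Gaussian channel example earlier in the lecture.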


Vector Space-Revisit


Normed Linear Space

Vous aimerez peut-être aussi

- Shoe Dog: A Memoir by the Creator of NikeD'EverandShoe Dog: A Memoir by the Creator of NikeÉvaluation : 4.5 sur 5 étoiles4.5/5 (537)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeD'EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeÉvaluation : 4 sur 5 étoiles4/5 (5794)

- מבוא לחישוב- תרגיל כיתה 14 - קריאה וכתיבה לקובץDocument2 pagesמבוא לחישוב- תרגיל כיתה 14 - קריאה וכתיבה לקובץRonPas encore d'évaluation

- מבוא לחישוב- הצעת פתרון לתרגיל בית 6 - 2010Document12 pagesמבוא לחישוב- הצעת פתרון לתרגיל בית 6 - 2010RonPas encore d'évaluation

- מבוא לחישוב- תרגיל כיתה 5 - לולאות, ENUM, CASTINGDocument3 pagesמבוא לחישוב- תרגיל כיתה 5 - לולאות, ENUM, CASTINGRonPas encore d'évaluation

- מבוא לחישוב- הצעת פתרון לתרגיל בית 5 - 2010Document20 pagesמבוא לחישוב- הצעת פתרון לתרגיל בית 5 - 2010RonPas encore d'évaluation

- מבוא לחישוב- הצעת פתרון לתרגיל בית 3 - 2010Document3 pagesמבוא לחישוב- הצעת פתרון לתרגיל בית 3 - 2010RonPas encore d'évaluation

- מערכות הפעלה- תרגיל בית 1 - 2013Document2 pagesמערכות הפעלה- תרגיל בית 1 - 2013RonPas encore d'évaluation

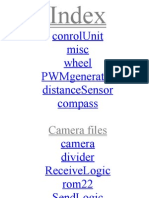

- משובצות- קוד רפרנסDocument29 pagesמשובצות- קוד רפרנסRonPas encore d'évaluation

- שפות סימולציה- הצעת פתרון למעבדה 1Document5 pagesשפות סימולציה- הצעת פתרון למעבדה 1RonPas encore d'évaluation

- VLSI- תרגול 0 - Mosfet CapacitanceDocument2 pagesVLSI- תרגול 0 - Mosfet CapacitanceRonPas encore d'évaluation

- VLSI- הרצאה 1 - מבואDocument51 pagesVLSI- הרצאה 1 - מבואRonPas encore d'évaluation

- סיכום תמציתי של פקודות ותחביר ב-VHDLDocument4 pagesסיכום תמציתי של פקודות ותחביר ב-VHDLRonPas encore d'évaluation

- Quick Start Guide: For Quartus II SoftwareDocument6 pagesQuick Start Guide: For Quartus II SoftwareRonPas encore d'évaluation

- מדריך modelsim למתחיליםDocument22 pagesמדריך modelsim למתחיליםRonPas encore d'évaluation

- עיבוד תמונה- תרגיל כיתה 10 - morphology, חלק 1Document31 pagesעיבוד תמונה- תרגיל כיתה 10 - morphology, חלק 1RonPas encore d'évaluation

- עיבוד תמונה- תרגיל כיתה 4 - sobel and harrisDocument15 pagesעיבוד תמונה- תרגיל כיתה 4 - sobel and harrisRonPas encore d'évaluation

- עיבוד תמונה- הרצאות - Canny Edge DetectionDocument24 pagesעיבוד תמונה- הרצאות - Canny Edge DetectionRonPas encore d'évaluation

- תכנון מיקרו מעבדים- הספר המלא של בראנובDocument267 pagesתכנון מיקרו מעבדים- הספר המלא של בראנובRonPas encore d'évaluation

- שפות סימולציה- חומר נלווה - Tips for Successful Practice of SimulationDocument7 pagesשפות סימולציה- חומר נלווה - Tips for Successful Practice of SimulationRonPas encore d'évaluation

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceD'EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceÉvaluation : 4 sur 5 étoiles4/5 (895)

- The Yellow House: A Memoir (2019 National Book Award Winner)D'EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Évaluation : 4 sur 5 étoiles4/5 (98)

- Grit: The Power of Passion and PerseveranceD'EverandGrit: The Power of Passion and PerseveranceÉvaluation : 4 sur 5 étoiles4/5 (588)

- The Little Book of Hygge: Danish Secrets to Happy LivingD'EverandThe Little Book of Hygge: Danish Secrets to Happy LivingÉvaluation : 3.5 sur 5 étoiles3.5/5 (400)

- The Emperor of All Maladies: A Biography of CancerD'EverandThe Emperor of All Maladies: A Biography of CancerÉvaluation : 4.5 sur 5 étoiles4.5/5 (271)

- Never Split the Difference: Negotiating As If Your Life Depended On ItD'EverandNever Split the Difference: Negotiating As If Your Life Depended On ItÉvaluation : 4.5 sur 5 étoiles4.5/5 (838)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyD'EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyÉvaluation : 3.5 sur 5 étoiles3.5/5 (2259)

- On Fire: The (Burning) Case for a Green New DealD'EverandOn Fire: The (Burning) Case for a Green New DealÉvaluation : 4 sur 5 étoiles4/5 (74)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureD'EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureÉvaluation : 4.5 sur 5 étoiles4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryD'EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryÉvaluation : 3.5 sur 5 étoiles3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnD'EverandTeam of Rivals: The Political Genius of Abraham LincolnÉvaluation : 4.5 sur 5 étoiles4.5/5 (234)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaD'EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaÉvaluation : 4.5 sur 5 étoiles4.5/5 (266)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersD'EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersÉvaluation : 4.5 sur 5 étoiles4.5/5 (345)

- The Unwinding: An Inner History of the New AmericaD'EverandThe Unwinding: An Inner History of the New AmericaÉvaluation : 4 sur 5 étoiles4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreD'EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreÉvaluation : 4 sur 5 étoiles4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)D'EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Évaluation : 4.5 sur 5 étoiles4.5/5 (121)

- Her Body and Other Parties: StoriesD'EverandHer Body and Other Parties: StoriesÉvaluation : 4 sur 5 étoiles4/5 (821)

- Module 2 ML Mumbai UniversityDocument39 pagesModule 2 ML Mumbai University2021.shreya.pawaskarPas encore d'évaluation

- Finite Element Programming With MATLAB: 12.1 Using MATLAB For FEMDocument33 pagesFinite Element Programming With MATLAB: 12.1 Using MATLAB For FEM436MD siribindooPas encore d'évaluation

- ICSE Class 10 Physics Chapter 02 Work Energy and PowerDocument43 pagesICSE Class 10 Physics Chapter 02 Work Energy and PowerNo Charge GamesPas encore d'évaluation

- BYUOpticsBook 2013Document344 pagesBYUOpticsBook 2013geopaok165Pas encore d'évaluation

- Lecture-1 Slides PDFDocument47 pagesLecture-1 Slides PDFSarit BurmanPas encore d'évaluation

- Book EDocument184 pagesBook EIain DoranPas encore d'évaluation

- Vector Calculus BookDocument258 pagesVector Calculus Bookunbeatableamrut100% (2)

- Notes On QMDocument226 pagesNotes On QMJoshT75580Pas encore d'évaluation

- Puri Tensor 2Document38 pagesPuri Tensor 2uday singhPas encore d'évaluation

- Zerna 11-StemDocument42 pagesZerna 11-StemJohn Ricman ZernaPas encore d'évaluation

- Motion in A Straight Line - CeLOEDocument31 pagesMotion in A Straight Line - CeLOEMuhammad GustianPas encore d'évaluation

- XI Physcis MCQSDocument10 pagesXI Physcis MCQSerum shomailPas encore d'évaluation

- A Viable Alternative For Shell Model For Spherical NucleiDocument60 pagesA Viable Alternative For Shell Model For Spherical NucleiRishi SankerPas encore d'évaluation

- Notes On Quantum ChemistryDocument61 pagesNotes On Quantum ChemistryLina Silaban100% (1)

- TensoresDocument96 pagesTensoresJhonnes ToledoPas encore d'évaluation

- P5 Box2D Physics Tutorial: Foreword: The Tutorial Is The Physics Chapter From Introduction To Programming WithDocument74 pagesP5 Box2D Physics Tutorial: Foreword: The Tutorial Is The Physics Chapter From Introduction To Programming WithgmaPas encore d'évaluation

- Differential Geometry M Usman HamidDocument125 pagesDifferential Geometry M Usman HamidKalaiPas encore d'évaluation

- Knocking On The Devil's DoorDocument38 pagesKnocking On The Devil's DoorIvan PezPas encore d'évaluation

- Em NoteDocument131 pagesEm NotepremPas encore d'évaluation

- Calculus II - Dot ProductDocument9 pagesCalculus II - Dot ProductMustafaMahdi100% (1)

- Skills in Mathematics Vectors and 3D Geometry For JEE Main and Advanced 2022Document289 pagesSkills in Mathematics Vectors and 3D Geometry For JEE Main and Advanced 2022Harshil Nagwani100% (2)

- Engineering Mathematics - I Semester - 1 by DR N V Nagendram UNIT - V Vector Differential Calculus Gradient, Divergence and CurlDocument34 pagesEngineering Mathematics - I Semester - 1 by DR N V Nagendram UNIT - V Vector Differential Calculus Gradient, Divergence and CurlRajarshi DeyPas encore d'évaluation

- UNIT - 7.PDF Engg MathDocument99 pagesUNIT - 7.PDF Engg MathsudersanaviswanathanPas encore d'évaluation

- Linear Algebra QuizDocument2 pagesLinear Algebra QuizKai YangPas encore d'évaluation

- MathematicsNumericsDerivationsAndOpenFOAM PDFDocument144 pagesMathematicsNumericsDerivationsAndOpenFOAM PDFKarlaHolzmeisterPas encore d'évaluation

- Vector OperationDocument34 pagesVector OperationLancel AlcantaraPas encore d'évaluation

- Dr. Firas K. AL-Zuhairi E-Mail: 150009@uotechnology - Edu.iq: Engineering MechanicsDocument28 pagesDr. Firas K. AL-Zuhairi E-Mail: 150009@uotechnology - Edu.iq: Engineering Mechanicsحسين راشد عيسى كريمPas encore d'évaluation

- Project Report 2004 Solar: A Solar System Simulator Author: Sam Morris Supervisor: Dr. Richard BanachDocument56 pagesProject Report 2004 Solar: A Solar System Simulator Author: Sam Morris Supervisor: Dr. Richard BanachAnjali M GowdaPas encore d'évaluation

- Fourier 12Document13 pagesFourier 12Dushyant SinghPas encore d'évaluation