
Gain-scheduling MPC of nonlinear systems

L. Chisci, P. Falugi and G. Zappa

Dipartimento di Sistemi e Informatica, Università di Firenze,

via Santa Marta 3, 50139 Firenze, Italy

e-mail: {chisci,falugi,zappa}@dsi.unifi.it

Abstract

Predictive control of nonlinear systems is addressed by embedding the dynamics into an LPV system and by computing robust invariant sets. This mitigates the on-line computational burden by transferring most of the computations off-line. Benefits and conservatism of this approach are discussed in relation to the control of a critical mechanical system.

keywords: Predictive control, constraints, nonlinear systems, LPV systems, invariant sets, gain-scheduling control.

1 INTRODUCTION

Model Predictive Control (MPC) of nonlinear systems has recently attracted great interest in the control literature [1]. In particular, several receding-horizon control schemes [2]-[7] successfully address the issues of stability, constraint satisfaction and performance optimization for nonlinear systems. The common idea of these approaches is to enforce stability by introducing, in the control optimization problem, suitable terminal constraints and penalties derived from an auxiliary, usually linear, control law.

Despite the remarkable performance and nice theoretical properties of such predictive controllers, they suffer from a major computational drawback: the optimization problem is non-convex as a consequence of the system nonlinearity. Recently, the authors of [9] have advocated the use of linear embedding techniques [8] in the context of nonlinear MPC by virtue of their computational advantages over direct nonlinear MPC methods. In particular, the algorithm in [9] relies on the embedding of a nonlinear system into a polytopic Linear Time Varying (LTV) uncertain system and requires a convex LMI optimization to be solved on-line. In this paper, we adopt a less conservative embedding into an LPV (Linear Parameter Varying) model [10] and derive an MPC algorithm which involves a simpler convex Quadratic Programming (QP) problem to be solved on-line. LPV models have already been employed successfully in the context of robust linear MPC [11, 12]; here they are considered in the context of nonlinear MPC.

Following the paradigm, frequently adopted in gain-scheduling control design [13, 14], of embedding the original nonlinear system into an LPV model, it is possible, by means of the techniques in [15], to design a gain-scheduling feedback which stabilizes the system and also to compute, under certain conditions, an associated admissible set. Within this set of simple structure, the gain-scheduling feedback guarantees asymptotic stability and constraint satisfaction. In these computations the key point is the decoupling of the parameter dynamics from the state dynamics. Subsequently, following the closed-loop MPC paradigm [16] and adopting the gain-scheduling policy as auxiliary control law, we propose a novel MPC algorithm for nonlinear systems based on a robust MPC approach to LPV systems previously presented in [11]. It is proved that the proposed algorithm, named Gain-Scheduling MPC (GS-MPC), guarantees asymptotic regulation (at the origin) of the constrained nonlinear system, and its domain of attraction is evaluated.

Stabilization of nonlinear systems under input constraints has been extensively studied in the literature [17]-[20] from a different control design perspective based on control Lyapunov functions (CLFs). As opposed to MPC, the CLF approach does not involve on-line computations but suffers from two main drawbacks: (1) it cannot deal with state constraints; and (2) it requires the construction of a CLF, which is not an easy task in general.

2 LPV embedding of nonlinear systems

In this section we shall address the embedding of nonlinear dynamics into an LPV representation [14]. The LPV model will subsequently be adopted for designing MPC algorithms. Consider the discrete-time nonlinear system

$$x(t+1) = f\big(x(t), u(t)\big) \tag{1}$$

where $x(t) \in \mathbb{R}^n$ and $u(t) \in \mathbb{R}^m$ are respectively the state and the control input at sample time $t$, and $f(0,0) = 0$. Moreover, $x(t)$ is available for feedback and the system is subject to the constraints

$$u(t) \in \mathcal{U}, \qquad x(t) \in \mathcal{X} \tag{2}$$

where $\mathcal{U}$ is a convex polytope and $\mathcal{X}$ a polyhedron, both containing the origin in their interior. The control objective is to regulate the system to the origin while satisfying the constraints (2). It will be convenient (even if not necessary) for the subsequent developments to transform the system (1) into the following quasi-linear form:

$$x(t+1) = A\big(x_1(t)\big)\,x(t) + B\big(x_1(t)\big)\,v(t) \tag{3}$$

for a suitable partitioning $x = [x_1^\top, x_2^\top]^\top$ of the state vector and possibly with the aid of a suitable pre-compensation feedback

$$u(t) = g\big(x(t), v(t)\big). \tag{4}$$

Remark 1 (Quasi-linearization) - It is clear that quasi-linear representations like (3), if they exist, are highly non-unique. Since the subsequent step will be to embed (3) into a Linear Difference Inclusion (LDI), a sensible criterion for reducing the conservatism of the embedding is to make (3) as simple as possible, in the sense that: (1) $x_1$ has low dimension; (2) few entries of $A(x_1)$ and $B(x_1)$ depend on $x_1$. To this end, a feedback pre-compensation (4) can be exploited in order to cancel part of the nonlinearities prior to embedding. On the other hand, feedback pre-compensation may require large control efforts to cancel nonlinearities and may, in turn, imply conservatism due to the presence of constraints on $u$. Hence, the extent to which feedback linearization [22] and embedding techniques should be combined is very much system-dependent, and no systematic procedure to transform (1) into (3) can be given. It must be pointed out, however, that it is not convenient, in general, to fully exploit feedback linearization due to its lack of robustness, but it can be useful to cancel some precisely known nonlinearities so as to simplify the embedding procedure. As will be seen later, the quasi-linearization step is optional, in that the embedding techniques described in the sequel can be applied directly to the nonlinear dynamics (1).

Then, as customary in gain-scheduling control design [13, 14], we impose the rate constraint

$$|x_1(t+1) - x_1(t)| \le \rho, \tag{5}$$

where $\rho > 0$ is a given bound, i.e. the control signal $v$ must guarantee that the time evolution of the $x_1$ component is sufficiently slow. Adopting an LPV paradigm, the dynamics (3) can be embedded into

$$x(t+1) = A\big(p(t)\big)\,x(t) + B\big(p(t)\big)\,v(t) \tag{6}$$

where $p(t)$ is a measurable, time-varying parameter satisfying

$$|p(t+1) - p(t)| \le \rho \tag{7}$$

and the control signal $v$, according to (4), is constrained by

$$g\big(x(t), v(t)\big) \in \mathcal{U} \tag{8}$$

and, from (5), must guarantee

$$\big|A_1\big(p(t)\big)\,x(t) + B_1\big(p(t)\big)\,v(t) - x_1(t)\big| \le \rho \tag{9}$$

where $A_1$, $B_1$ are the upper submatrices of $A$, $B$ corresponding to the partitioning $x = [x_1^\top, x_2^\top]^\top$. Next, for computational reasons, it is assumed, without loss of generality, that $p(t)$ evolves in a compact set $\mathcal{P}$ which has a finite partition

$$\mathcal{P} = \bigcup_{i=1}^{\ell} \mathcal{P}_i . \tag{10}$$

For each subset $\mathcal{P}_i$, the LPV dynamics (6) is embedded into a polytopic dynamics

$$x(t+1) \in \mathcal{F}(i)\begin{bmatrix} x(t)\\ v(t)\end{bmatrix} \tag{11}$$

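where $\mathcal{F}(i) \subset \mathbb{R}^{n\times(n+m)}$ is a polytope of matrices $[A, B]$ such that $[A(p), B(p)] \in \mathcal{F}(i)$ for all $p \in \mathcal{P}_i$. Hereafter the time-varying index $i(t)$ will denote the subset $\mathcal{P}_i$ to which $p(t)$ belongs. Accordingly, the bound (7) on the parameter variations is transformed into the difference inclusion $i(t+1) \in \mathcal{Q}(i(t))$, where $\mathcal{Q}(i)$ is a suitable subset of the index set $\mathcal{I} := \{1, 2, \dots, \ell\}$. Subsequently, the velocity constraint (9) is transformed into

$$\left|[A_1,\ B_1]\begin{bmatrix} x(t)\\ v(t)\end{bmatrix} - x_1(t)\right| \le \rho \quad \text{for all } [A_1, B_1] \in \mathcal{F}_1(i) \tag{12}$$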

where $\mathcal{F}_1(i)$ is the polytope of submatrices $[A_1, B_1]$ of the matrices $[A, B] \in \mathcal{F}(i)$. Finally, the nonlinear control constraints (8) are approximated by linear constraints

$$L\,x(t) + M\,v(t) \le \mathbf{1} \tag{13}$$

where $L$ and $M$ are matrices of appropriate dimensions and $\mathbf{1}$ denotes a vector of ones. Each row of $L$, $M$ defines a scalar constraint, and the matrices are chosen so that the polytope of vectors $[x^\top, v^\top]^\top$ satisfying (13) is an inner approximation of the set of vectors satisfying (8).

To summarize, the original nonlinear dynamics (1) has been embedded into the LPV dynamics

$$\begin{cases} i(t+1) \in \mathcal{Q}\big(i(t)\big)\\[2pt] x(t+1) \in \mathcal{F}\big(i(t)\big)\begin{bmatrix} x(t)\\ v(t)\end{bmatrix}\end{cases} \tag{14}$$

Further, (14) is subject to the rate constraints (12), the control constraints (13) and the state constraints $x(t) \in \mathcal{X}$ which, combined together, take the form

$$L_{i(t)}\,x(t) + M_{i(t)}\,v(t) \le \mathbf{1} \tag{15}$$

for suitable matrices $L_i$ and $M_i$ depending on the parameter index $i$.
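As an illustration of how (12), (13) and the state constraints can be stacked into the single form (15), the following Python/NumPy sketch assumes that the vertices of $\mathcal{F}_1(i)$ are available and that the state constraints are normalized as $H_x x \le \mathbf{1}$; the helper name `stack_constraints` and all numbers in the usage lines are hypothetical. Since the left-hand side of (12) is affine in $[A_1, B_1]$, imposing it at the vertices of $\mathcal{F}_1(i)$ is sufficient, and each absolute value becomes two one-sided rows scaled by $1/\rho$.

```python
import numpy as np


def stack_constraints(rate_vertices, rho, n1, H_x, L_u, M_u):
    """Hypothetical helper: build L_i, M_i such that L_i x + M_i v <= 1 gathers
    the rate constraint (12), the control constraint (13) and H_x x <= 1.

    rate_vertices : list of (A1, B1) vertex pairs of the polytope F_1(i)
    rho           : parameter rate bound (> 0)
    n1            : dimension of the scheduling component x_1
    """
    n, m = H_x.shape[1], M_u.shape[1]
    E1 = np.hstack([np.eye(n1), np.zeros((n1, n - n1))])  # x_1 = E1 x
    L_rows, M_rows = [], []
    for A1, B1 in rate_vertices:
        # |(A1 - E1) x + B1 v| <= rho  ->  two one-sided rows, scaled by 1/rho
        L_rows += [(A1 - E1) / rho, -(A1 - E1) / rho]
        M_rows += [B1 / rho, -B1 / rho]
    L_rows.append(L_u)                          # control constraints (13)
    M_rows.append(M_u)
    L_rows.append(H_x)                          # state constraints x in X
    M_rows.append(np.zeros((H_x.shape[0], m)))
    return np.vstack(L_rows), np.vstack(M_rows)


# Illustrative data: x_1(t+1) = x_1(t) + Ts v(t) (no uncertainty in that row),
# |v| <= 100 and box bounds on the three states.
Ts, rho = 0.01, 1.0
A1, B1 = np.array([[1.0, 0.0, 0.0]]), np.array([[Ts]])
bounds = np.array([10.0, 0.7, 10.0])
H_x = np.vstack([np.diag(1 / bounds), -np.diag(1 / bounds)])
L_u, M_u = np.zeros((2, 3)), np.array([[1 / 100.0], [-1 / 100.0]])
Li, Mi = stack_constraints([(A1, B1)], rho, 1, H_x, L_u, M_u)
print(Li.shape, Mi.shape)  # (10, 3) (10, 1)
```

A point $(x, v)$ is admissible for index $i$ exactly when every entry of `Li @ x + Mi @ v` is at most one.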

Remark 2 (Direct LPV embedding of the nonlinear dynamics) - It is worth pointing out that the LPV dynamics (14) can be obtained directly from (1), without the intermediate quasi-linear description (3) and, hence, without the pre-compensation (4). In fact, assume that $f(x,u)$ is $C^1$ over the domain $\mathcal{X}\times\mathcal{U}$ and let

$$J(x,u) := \left[\frac{\partial f}{\partial x},\ \frac{\partial f}{\partial u}\right] \in \mathbb{R}^{n\times(n+m)}$$

denote the Jacobian matrix. Then it has been shown (see e.g. [23, p. 55]) that the trajectories of the nonlinear system can be embedded in the trajectories of the LDI (14) provided that

$$\mathcal{F}(i) = \mathrm{Co}\,\mathcal{J}_i, \qquad \mathcal{J}_i = \{J(x,u) : (x,u) \in \mathcal{X}_i\times\mathcal{U}\} \subset \mathbb{R}^{n\times(n+m)},$$

where $\mathcal{X}_i := \{x \in \mathcal{X} : x_1 \in \mathcal{P}_i\}$ and $\mathrm{Co}$ denotes the convex hull. Clearly, this approach does not require feedback pre-compensation. On the other hand, if $J(x,u)$ depends on several state and/or input variables, the embedding can be very complicated and/or conservative. For this reason, the quasi-linearization (3) can be beneficial.
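A crude way to approximate, numerically, the set of Jacobians $\{J(x,u) : (x,u) \in \mathcal{X}_i\times\mathcal{U}\}$ of Remark 2 is to sample $J$ on a grid and take elementwise bounds, which yields a box of matrices whose vertices generate a candidate $\mathcal{F}(i)$. The Python/NumPy sketch below assumes a hypothetical two-state plant $f(x,u) = [x_1 + 0.1x_2,\ x_2 + 0.1(\sin x_1 + u)]^\top$ with $x_1 \in \mathcal{P}_i = [0, 0.5]$; the function names are illustrative. A finite grid can miss extrema between samples, so a rigorous embedding would need analytic or interval bounds on each entry.

```python
import itertools

import numpy as np


def jacobian(x, u):
    """Jacobian [df/dx, df/du] of the hypothetical plant
    f(x, u) = [x1 + 0.1 x2, x2 + 0.1 (sin x1 + u)]."""
    return np.array([[1.0, 0.1, 0.0],
                     [0.1 * np.cos(x[0]), 1.0, 0.1]])


def box_embedding(jac, samples):
    """Elementwise bounds of J over sampled (x, u) and the vertices of the box."""
    Js = np.array([jac(x, u) for x, u in samples])
    lo, hi = Js.min(axis=0), Js.max(axis=0)
    varying = [idx for idx in np.ndindex(*lo.shape) if hi[idx] > lo[idx]]
    vertices = []
    for choice in itertools.product((False, True), repeat=len(varying)):
        V = lo.copy()
        for idx, use_hi in zip(varying, choice):
            if use_hi:
                V[idx] = hi[idx]
        vertices.append(V)
    return lo, hi, vertices


# Region X_i: x1 in [0, 0.5], |x2| <= 1; input set |u| <= 1
samples = [(np.array([a, b]), c)
           for a in np.linspace(0.0, 0.5, 11)
           for b in np.linspace(-1.0, 1.0, 5)
           for c in np.linspace(-1.0, 1.0, 5)]
lo, hi, verts = box_embedding(jacobian, samples)
print(len(verts))  # 2: only the (1, 0) entry (0-based) varies
```

The number of vertices grows as $2^q$ with the number $q$ of varying entries, which is one concrete reason why Remark 2 favours representations in which few entries depend on the state.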

Remark 3 (LPV embedding vs. LTV embedding) - Embedding techniques were first considered in the context of nonlinear MPC in [9], where the nonlinear dynamics $x(t+1) = f(x(t), u(t))$ is approximated by a single polytopic LTV model $x(t+1) \in \mathcal{F}\,[x(t)^\top, u(t)^\top]^\top$. Here, we approximate the same dynamics with the LPV model (14), which consists of a bank of polytopic LTV models $\mathcal{F}(i)$ indexed by the discrete parameter $i$, along with a set-valued model $i(t+1) \in \mathcal{Q}(i(t))$ of the discrete parameter dynamics. Clearly, the adoption of multiple local embeddings should yield a much better approximation of the nonlinear dynamics than a single global embedding. The optional quasi-linearization step can give a further reduction of conservatism with respect to the approach in [9].

Remark 4 (Choice of the parameter rate bound $\rho$) - The bound $\rho$ in (7) is dictated by the speed of variation of the state variable $x_1$, chosen as parameter in the LPV representation. A large $\rho$ clearly implies a more conservative LPV embedding, so that performance may deteriorate due to lack of information on the real plant dynamics. On the other hand, a small $\rho$ can imply tighter constraints which, in turn, may cause infeasibility. A limitation on the rate of variation $|x_1(t+1) - x_1(t)|$ may arise from the constraints on $x$ and $u$. From a designer's point of view, $\rho$ should be chosen as close as possible to such a limitation, in order to avoid both unnecessarily stringent constraints and an unnecessarily conservative LPV embedding.

3 Invariant sets for LPV systems

This section discusses the properties of invariant sets in the context of LPV systems [14]. In particular, we shall refer to the LPV system (14) subject to the linear constraints (15). Recall that the discrete parameter $i(t)$ is actually related to the state $x(t)$, i.e. $i(t) = i(x_1(t))$. Hence, according to (10), the state space is partitioned as $\mathcal{X} = \bigcup_i \mathcal{X}_i$. Moreover, the dynamics (11) can be regarded as piecewise polytopic, i.e.

$$x(t+1) \in \mathcal{F}(i)\begin{bmatrix} x(t)\\ v(t)\end{bmatrix} \quad \text{if } x(t) \in \mathcal{X}_i . \tag{16}$$

Nevertheless, for ease of computation of invariant sets, this relationship will be disregarded and the extended state $s(t) := [i(t),\ x(t)^\top]^\top \in \mathcal{I}\times\mathbb{R}^n$ will be considered hereafter, assuming that $i(t)$ evolves independently of $x(t)$. The relation between $x(t)$ and $i(t)$ will be reconsidered only at the end of the set computations. Notice that a set $\Sigma \subset \mathcal{I}\times\mathbb{R}^n$ in the extended state space is just an $\ell$-tuple $\{\Sigma^1, \Sigma^2, \dots, \Sigma^\ell\}$ of subsets of $\mathbb{R}^n$.

Assumption 1 - For any value $i \in \mathcal{I}$ of the discrete parameter, there exists a linear feedback control law $v(t) = F_i\,x(t)$ such that the closed-loop polytopic system $x(t+1) \in \mathcal{F}(i)\begin{bmatrix} x(t)\\ F_i\,x(t)\end{bmatrix}$ is exponentially stable.

A necessary condition for the existence of such a feedback gain $F_i$ is clearly exponential stabilizability of the nonlinear system (1) around the origin. A sufficient condition is quadratic stabilizability [24, 25] of the vertices of $\mathcal{F}(i)$. Such a condition is, however, not necessary: one could use gains $F_i$ that are not quadratically (but, for instance, poly-quadratically, polytopically or polynomially) stabilizing. Notice that Assumption 1 can be made easier to satisfy by considering a finer partitioning of the parameter space, at the expense of a larger number of polytopic models. The key issue is whether the linear gain-scheduling control law

$$v(t) = F_{i(t)}\,x(t) \tag{17}$$

stabilizes the LPV system (14) and satisfies the constraints (15) in a suitable neighborhood of the origin since, in such a case, the same stability and constraint satisfaction requirements are also guaranteed for the original nonlinear system (1). In order to use (17) as auxiliary control law [7] in an MPC scheme, it is necessary to determine a, possibly maximal, admissible set [26, 27] associated with the resulting closed-loop dynamics

$$\begin{cases} i(t+1) \in \mathcal{Q}\big(i(t)\big)\\[2pt] x(t+1) \in \mathcal{F}\big(i(t)\big)\begin{bmatrix} x(t)\\ F_{i(t)}\,x(t)\end{bmatrix}\end{cases} \tag{18}$$
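Assumption 1 can be probed numerically for a candidate gain. The Python/NumPy sketch below first checks the necessary condition that every closed-loop vertex $A + BF_i$ of $\mathcal{F}(i)$ is Schur, and then tries a common quadratic Lyapunov function $x^\top P x$, with $P$ taken, as a heuristic, from the Lyapunov equation of the average closed-loop vertex. A positive answer certifies quadratic stability of the whole polytope (the set of matrices $A$ with $A^\top P A - P \prec 0$ is convex), whereas a negative one is inconclusive; an LMI-based design such as the one in [25] would instead search over $P$ and $F_i$ jointly. The two-vertex system, the gain and the function names are hypothetical.

```python
import numpy as np


def spectral_radius(M):
    return float(max(abs(np.linalg.eigvals(M))))


def dlyap(A, Q, iters=2000):
    """Solve P = A' P A + Q by fixed-point iteration (valid if A is Schur)."""
    P = Q.copy()
    for _ in range(iters):
        P = A.T @ P @ A + Q
    return P


def check_assumption_1(vertices, F):
    """Return (all vertices Schur, common quadratic Lyapunov certificate found)."""
    Acls = [A + B @ F for A, B in vertices]
    if not all(spectral_radius(Acl) < 1 for Acl in Acls):
        return False, False
    P = dlyap(sum(Acls) / len(Acls), np.eye(Acls[0].shape[0]))  # heuristic P
    certified = all(np.max(np.linalg.eigvalsh(Acl.T @ P @ Acl - P)) < 0
                    for Acl in Acls)
    return True, certified


# Hypothetical vertices of F(i): A = [[1, 0.1], [a, 1]] with a in {0.1, 0.2}
B = np.array([[0.0], [0.1]])
vertices = [(np.array([[1.0, 0.1], [a, 1.0]]), B) for a in (0.1, 0.2)]
F_i = np.array([[-12.0, -8.0]])
print(check_assumption_1(vertices, F_i))  # (True, True)
```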

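Definition 1 - Given $\lambda$, $0 < \lambda \le 1$, a set $\Sigma \subset \mathcal{I}\times\mathbb{R}^n$ of the extended state space is $\lambda$-contractive under the closed-loop dynamics (18) if

$$s(t) = \begin{bmatrix} i(t)\\ x(t)\end{bmatrix} \in \Sigma \;\Longrightarrow\; \begin{bmatrix} i(t+1)\\ \lambda^{-1}x(t+1)\end{bmatrix} \in \Sigma$$

for all $i(t+1)$ and $x(t+1)$ compatible with (18). In particular, if $\lambda = 1$, $\Sigma$ is called invariant. If, in addition, all states $s = [i,\ x^\top]^\top \in \Sigma$ satisfy the constraints (15), $\Sigma$ is called $\lambda$-admissible (admissible if $\lambda = 1$).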

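Next we would like to find, for a fixed $\lambda \in (0,1]$, the largest $\lambda$-admissible set $\Omega_0 = \{\Omega_0^1, \Omega_0^2, \dots, \Omega_0^\ell\}$. Let $\mathcal{X}_c^i := \{x : (L_i + M_iF_i)\,x \le \mathbf{1}\}$ denote the set of states which satisfy the constraints (15) under the linear feedback $v = F_i x$, and let $\mathcal{X}_c := \{\mathcal{X}_c^1, \mathcal{X}_c^2, \dots, \mathcal{X}_c^\ell\}$. Then the sets $\Omega_0^i$, $i \in \mathcal{I}$, are computed with the following set recursion:

$$\begin{aligned} \mathcal{O}^i_0 &= \mathcal{X}^i_c, && i = 1,2,\dots,\ell,\\ \mathcal{O}^i_k &= \mathcal{X}^i_c \cap \left\{ x : \mathcal{F}(i)\begin{bmatrix} x\\ F_i\,x\end{bmatrix} \subseteq \lambda \bigcap_{j\in\mathcal{Q}(i)} \mathcal{O}^j_{k-1}\right\}, && k = 1,2,\dots,\quad i = 1,2,\dots,\ell. \end{aligned} \tag{19}$$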

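For $\lambda = 1$, $\mathcal{O}^i_k$ represents the set of initial states $x(0)$ for which, under the closed-loop dynamics (18), the constraints (15) are satisfied up to time $k$, assuming that $i(0) = i$. For $\lambda < 1$, $\mathcal{O}^i_k$ is just a subset of the previous one. Since $\mathcal{O}^i_k \subseteq \mathcal{O}^i_{k-1}$, $\mathcal{O}^i_k$ converges to a limit set $\mathcal{O}^i_\infty$. Let $\mathcal{O}_\infty := \{\mathcal{O}^1_\infty, \dots, \mathcal{O}^\ell_\infty\}$; then the following result holds.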
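The recursion (19) can be carried out directly on H-representations: if $\mathcal{O}^j_{k-1} = \{x : H_j x \le \mathbf{1}\}$, the inclusion $\mathcal{F}(i)[x^\top, (F_ix)^\top]^\top \subseteq \lambda\mathcal{O}^j_{k-1}$ holds if and only if $\lambda^{-1}H_j(A + BF_i)\,x \le \mathbf{1}$ at every vertex $[A, B]$ of $\mathcal{F}(i)$, because the image of $x$ under the polytope is the convex hull of the vertex images. The Python/NumPy sketch below (function names hypothetical, indices 0-based) applies this for a fixed number of steps on a scalar example with two parameter indices. It keeps redundant inequalities, so the row count grows quickly; a practical implementation would prune rows by linear programming and stop when $\mathcal{O}_k = \mathcal{O}_{k+1}$.

```python
import numpy as np


def recursion_19(Hc, vertices, F, Q, lam, k_max):
    """H-representations {x : H x <= 1} of O^i_k after k_max steps of (19).

    Hc[i]       : rows of X_c^i, i.e. (L_i + M_i F_i)
    vertices[i] : list of vertex pairs (A, B) of the polytope F(i)
    F[i], Q[i]  : gain F_i and successor index list Q(i) (0-based here)
    Redundant rows are kept; no pruning or finite-determination test is done.
    """
    O = [np.asarray(H, dtype=float) for H in Hc]           # O^i_0 = X_c^i
    for _ in range(k_max):
        new_O = []
        for i in range(len(Hc)):
            rows = [np.asarray(Hc[i], dtype=float)]
            for A, B in vertices[i]:
                Acl = A + B @ F[i]
                for j in Q[i]:
                    # vertex image must lie in lam * O^j_{k-1}
                    rows.append(O[j] @ Acl / lam)
            new_O.append(np.vstack(rows))
        O = new_O
    return O


def contains(H, x, tol=1e-9):
    return bool(np.all(H @ x <= 1 + tol))


# Scalar example: closed loops {0.5, 0.6} for index 0 and {0.4, 0.7} for
# index 1, with X_c^0 = [-1, 1] and X_c^1 = [-2, 2].
Hc = [np.array([[1.0], [-1.0]]), np.array([[0.5], [-0.5]])]
Bv = np.array([[1.0]])
vertices = [[(np.array([[1.5]]), Bv), (np.array([[1.6]]), Bv)],
            [(np.array([[1.4]]), Bv), (np.array([[1.7]]), Bv)]]
F = [np.array([[-1.0]]), np.array([[-1.0]])]
Q = [[0, 1], [0, 1]]
O = recursion_19(Hc, vertices, F, Q, lam=1.0, k_max=3)
# Index 1 only keeps states with 0.7 |x| <= 1, i.e. |x| <= 1/0.7
print(contains(O[1], np.array([1.4])), contains(O[1], np.array([1.45])))  # True False
```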

Theorem 1 [15, 28, 29] - $\mathcal{O}_\infty$ is the maximal $\lambda$-admissible set (i.e. the maximal $\lambda$-contractive subset of $\mathcal{X}_c$). Moreover, the system (18) is exponentially stable with exponential convergence rate $\lambda < 1$ if and only if the set $\mathcal{O}_\infty$ provided by (19) has non-empty interior.

Notice that, since the uncertain dynamics $\mathcal{F}(i)$ is polytopic and the constraints (15) are linear, all the sets $\mathcal{O}^i_k$ are described by a finite number of linear inequalities. It is therefore important to ascertain whether the set $\mathcal{O}_\infty$ is finitely determined, i.e. whether there exists a finite $\bar k$ such that $\mathcal{O}_{\bar k} = \mathcal{O}_\infty$. In this respect, the following result can be proved.

Theorem 2 - Assume that the system (18) is exponentially stable with convergence rate $\gamma \in (0,1)$ and let $\lambda \ge \gamma$. Then, if $\mathcal{O}_k$ is bounded for some $k$, $\mathcal{O}_\infty$ is finitely determined.

Proof: see the Appendix.

Let $\lambda$ be a contraction factor which guarantees that $\mathcal{O}_\infty$ has non-empty interior, and set $\Omega_0 := \mathcal{O}_\infty$. The set $\Omega_0$ represents a set of initial extended states for which the plant state is asymptotically steered to the origin under the gain-scheduling linear feedback (17) without violating the constraints. More precisely, $\Omega_0$ is a collection of convex sets $\Omega_0^i \subset \mathbb{R}^n$, $1 \le i \le \ell$, such that if $x(0) \in \Omega_0^i$ when $i(0) = i$, then $x(t) \to 0$ without violating the constraints. Since $i(0)$ is related to $x_1(0)$, it turns out that $x(0)$ will be driven to the origin if $x(0) \in \mathcal{X}_i \cap \Omega_0^i$ for some $i \in \mathcal{I}$. Hence the relevant invariant set in the plant state space $\mathbb{R}^n$ is $\bar\Omega_0 := \bigcup_{i=1}^{\ell}\big(\mathcal{X}_i \cap \Omega_0^i\big)$. The set $\bar\Omega_0$, a union of convex sets, is invariant under the auxiliary gain-scheduling control law (17). However, since additional uncertainty is inherently introduced in the extended dynamics (14) with respect to (16), $\bar\Omega_0$ is not the largest admissible set for (16). The latter has a much more complex structure and will not be considered. Instead, we shall investigate whether it is possible to enlarge $\bar\Omega_0$ by making use of a nonlinear feedback $v(t) = h(x(t))$. Once again, for computational reasons, we shall consider the LPV dynamics (14) and the extended state $s(t)$. This motivates the following definition of controlled invariant set.

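Definition 2 - $\Sigma$ is a controlled invariant set for the LPV constrained system (14)-(15) if for any $s = [i,\ x^\top]^\top \in \Sigma$ there exists $v$, depending on $x$ and $i$, such that

(i) $\begin{bmatrix} j\\ y\end{bmatrix} \in \Sigma$ for all $j \in \mathcal{Q}(i)$ and all $y \in \mathcal{F}(i)\begin{bmatrix} x\\ v\end{bmatrix}$ (controlled invariance);

(ii) $L_i\,x + M_i\,v \le \mathbf{1}$ (constraint satisfaction).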

Special controlled invariant sets are the sets of all initial states $s(0)$ which can be steered into $\Omega_0$ by an $N$-step feedback control sequence $\{v(0), v(1), \dots, v(N-1)\}$, where each $v(k)$ is allowed to depend on the current state $x(k)$ and parameter $i(k)$, and such that $x(k)$ and $v(k)$ satisfy (15). Notice, however, that given a feasible $s(0)$ it is not possible, in general, to pre-compute at time $t = 0$ the control sequence $\{v(0), v(1), \dots, v(N-1)\}$ (open-loop control) which robustly steers $s(0)$ into $\Omega_0$ in $N$ steps. For receding-horizon operation it is convenient to resort to the so-called closed-loop paradigm [16] by considering a semi-feedback control sequence

$$v(k) = F_{i(k)}\,x(k) + c(k), \qquad k = 0, 1, \dots, N-1 \tag{20}$$

which combines the auxiliary control law (17) with an open-loop correction component $c$. In order to define the MPC algorithm presented in the next section, it is necessary to compute off-line the set of initial extended states $s(0)$, together with the corresponding correction sequences $\{c(0), c(1), \dots, c(N-1)\}$, such that the semi-feedback control sequence (20) steers $s(0)$ into $\Omega_0$ in $N$ steps while satisfying (15). This set, denoted by $\mathcal{S}_N \subset \mathcal{I}\times\mathbb{R}^{n+mN}$ and represented by $\mathcal{S}_N = \{\mathcal{S}^1_N, \mathcal{S}^2_N, \dots, \mathcal{S}^\ell_N\}$, is obtained recursively as follows:

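$$\begin{aligned} \mathcal{S}^i_0 &= \Omega^i_0, && i = 1,2,\dots,\ell,\\ \mathcal{S}^i_k &= \left\{ \begin{bmatrix} x\\ c\\ z\end{bmatrix} : \begin{bmatrix} y\\ z\end{bmatrix} \in \bigcap_{j\in\mathcal{Q}(i)} \mathcal{S}^j_{k-1}\ \ \forall\, y \in \mathcal{F}(i)\begin{bmatrix} x\\ F_i\,x + c\end{bmatrix},\ \ (L_i + M_iF_i)\,x + M_i\,c \le \mathbf{1} \right\}, && k = 1,2,\dots,\quad i = 1,2,\dots,\ell. \end{aligned} \tag{21}$$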

At each stage $k$, $c = c(0)$ and $z = [c(1)^\top, c(2)^\top, \dots, c(k-1)^\top]^\top$ provide a valid $k$-step open-loop $c$-sequence that steers the extended state $s(0) = [i,\ x^\top]^\top$ to $s(k) \in \Omega_0$. Also notice that, by the above mechanism, the sequence tail $z$ will also be valid at the subsequent time step to steer the future state to $\Omega_0$ in $k-1$ steps. The set of extended states $s$ which can be steered to $\Omega_0$ by an $N$-step semi-feedback control sequence of the form (20), denoted hereafter by $\Omega_N = \{\Omega_N^1, \Omega_N^2, \dots, \Omega_N^\ell\}$, is clearly the projection of $\mathcal{S}_N$ onto $\mathcal{I}\times\mathbb{R}^n$. In turn, this implies that the set of initial states $x$ which can be steered to $\Omega_0$ is $\bar\Omega_N := \bigcup_{i=1}^{\ell}\big(\mathcal{X}_i \cap \Omega_N^i\big)$. Again, since the sets of matrices $\mathcal{F}(i)$ are polytopic, the sets $\mathcal{S}^i_N$ and $\Omega^i_N$ are polytopic as well; hence, $\bar\Omega_N$ is a union of polytopic sets.
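The recursion (21) can likewise be carried out on H-representations. Writing $\mathcal{S}^j_{k-1} = \{[x^\top, z^\top]^\top : G_x x + G_z z \le \mathbf{1}\}$, each vertex $[A, B]$ of $\mathcal{F}(i)$ and each $j \in \mathcal{Q}(i)$ contributes the rows $[\,G_x(A + BF_i),\ G_xB,\ G_z\,]$ acting on $[x^\top, c^\top, z^\top]^\top$, to which the constraint rows $[\,L_i + M_iF_i,\ M_i,\ 0\,]$ obtained from (15) with $v = F_ix + c$ are added. The Python/NumPy sketch below follows this construction without redundancy removal; the function name and the scalar plant $x^+ = 2x + v$ with $|v| \le 1$, $F = -1.5$ and $\Omega_0 = \{|x| \le 0.1\}$ are hypothetical.

```python
import numpy as np


def recursion_21(Omega0, L, M, vertices, F, Q, N):
    """H-representations {w : G w <= 1} of S^i_N with w = [x; c(0); ...; c(N-1)].

    Omega0[i]   : rows H with Omega_0^i = {x : H x <= 1}
    L[i], M[i]  : constraint matrices of (15) for index i
    vertices[i] : vertex pairs (A, B) of F(i); F[i] gain; Q[i] successors (0-based)
    """
    n = Omega0[0].shape[1]
    S = [np.asarray(H, dtype=float) for H in Omega0]       # S^i_0 = Omega_0^i
    for _ in range(N):
        zdim = S[0].shape[1] - n                            # length of the tail z
        new_S = []
        for i in range(len(S)):
            Li, Mi, Fi = L[i], M[i], F[i]
            # (15) with v = F_i x + c:  (L_i + M_i F_i) x + M_i c <= 1
            rows = [np.hstack([Li + Mi @ Fi, Mi, np.zeros((Li.shape[0], zdim))])]
            for A, B in vertices[i]:
                Acl = A + B @ Fi
                for j in Q[i]:
                    Gx, Gz = S[j][:, :n], S[j][:, n:]
                    # successor [A x + B (F_i x + c); z] must lie in S^j_{k-1}
                    rows.append(np.hstack([Gx @ Acl, Gx @ B, Gz]))
            new_S.append(np.vstack(rows))
        S = new_S
    return S


def feasible(G, w, tol=1e-9):
    return bool(np.all(G @ w <= 1 + tol))


Omega0 = [np.array([[10.0], [-10.0]])]
L, M = [np.zeros((2, 1))], [np.array([[1.0], [-1.0]])]
vertices = [[(np.array([[2.0]]), np.array([[1.0]]))]]
F, Q = [np.array([[-1.5]])], [[0]]
S1 = recursion_21(Omega0, L, M, vertices, F, Q, N=1)[0]
S2 = recursion_21(Omega0, L, M, vertices, F, Q, N=2)[0]
print(feasible(S1, np.array([0.5, -0.2])), feasible(S1, np.array([0.5, 0.0])))  # True False
```

In the two-step set, the tail of a feasible sequence at the next state reappears as the variable $z$, which is the mechanism exploited by the receding-horizon algorithm.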

4 MPC algorithm

Let us assume that:

(i) the gain-scheduling feedback (17) has been designed off-line so as to stabilize the LPV model (14);

(ii) the admissible set $\Omega_0$ has been computed off-line by iterating (19) until $\mathcal{O}_k = \mathcal{O}_{k+1}$;

(iii) for a given control horizon $N$, the set $\mathcal{S}_N$ has been computed off-line via (21).

Then, MPC can be exploited in order to improve the performance of the auxiliary gain-scheduling controller by means of the degrees of freedom $c(k)$ and the receding-horizon control strategy. To this end, the following algorithm is introduced.

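Gain-Scheduling MPC (GS-MPC) algorithm. - At each sample time $t$, given the state $x(t) = [x_1(t)^\top, x_2(t)^\top]^\top$, let $i(t) = i(x_1(t))$ be the index such that $x_1(t) \in \mathcal{P}_{i(t)}$ (i.e. $x(t) \in \mathcal{X}_{i(t)}$), and

$$\mathbf{c}^*(t) = \arg\min_{\mathbf{c}(t)} \|\mathbf{c}(t)\|^2 \quad \text{subject to} \quad \begin{bmatrix} x(t)\\ \mathbf{c}(t)\end{bmatrix} \in \mathcal{S}^{i(t)}_N . \tag{22}$$

Then apply to the plant the control signal

$$u(t) = g\big(x(t), v(t)\big), \qquad v(t) = F_{i(t)}\,x(t) + c^*(t|t) \tag{23}$$

where $\mathbf{c}^*(t) = [c^*(t|t)^\top, c^*(t+1|t)^\top, \dots, c^*(t+N-1|t)^\top]^\top$.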

The above algorithm selects, at time $t$, among all admissible sequences $\mathbf{c}(t)$ the one with minimum Euclidean norm. Since the sets $\mathcal{S}^i_N$, $1 \le i \le \ell$, are convex polytopes, (22) amounts to a QP problem.
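For small problems, the QP (22) can be solved with a few lines of code. The sketch below uses Hildreth's dual coordinate-ascent method, chosen here only because it needs nothing beyond NumPy (a dedicated QP solver, e.g. an interior-point one as in [30], would be the practical choice). It assumes $\mathcal{S}^{i(t)}_N$ is given as $\{w : Gw \le g\}$ with $w = [x^\top, \mathbf{c}^\top]^\top$; the function name and the one-step example data (a hypothetical set for the scalar plant $x^+ = 2x + v$) are illustrative. Infeasibility of (22), i.e. a state outside the domain of attraction, is reported through the returned flag.

```python
import numpy as np


def min_norm_correction(G, g, x, n, iters=5000, tol=1e-10):
    """Solve (22): min ||c||^2 s.t. G [x; c] <= g, by Hildreth's method.

    Returns (c, feasible). Rows of G that do not involve c are only checked.
    """
    Gx, Gc = G[:, :n], G[:, n:]
    h = g - Gx @ x                      # constraints on c alone: Gc c <= h
    norms = np.einsum("ij,ij->i", Gc, Gc)
    lam = np.zeros(len(h))              # dual variables, lam >= 0
    c = np.zeros(Gc.shape[1])           # primal iterate c = -Gc' lam
    for _ in range(iters):
        max_step = 0.0
        for k in np.flatnonzero(norms > 0):
            new = max(0.0, lam[k] + (Gc[k] @ c - h[k]) / norms[k])
            step = new - lam[k]
            c -= step * Gc[k]
            lam[k] = new
            max_step = max(max_step, abs(step))
        if max_step < tol:
            break
    feasible = bool(np.all(Gc @ c <= h + 1e-7))
    return c, feasible


# One-step set {[x; c]} for x+ = 2x + v, v = -1.5 x + c, |v| <= 1, |x+| <= 0.1
G = np.array([[-1.5, 1.0], [1.5, -1.0], [5.0, 10.0], [-5.0, -10.0]])
g = np.ones(4)
c, ok = min_norm_correction(G, g, np.array([0.5]), n=1)
print(ok, np.round(c, 6))  # True [-0.15]
```

For $x = 0.5$ the admissible corrections form the interval $[-0.25, -0.15]$, and the minimum-norm element is its endpoint closest to zero.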

Remark 6 (Performance criterion) - Recalling the definition of the correction sequence $c(\cdot)$ in (20), the performance criterion minimized in (22) is

$$J = \sum_{k=0}^{\infty}\left\| v(t+k|t) - F_{i(x(t+k|t))}\,x(t+k|t)\right\|^2,$$

where the summation is actually truncated at $k = N-1$, since the auxiliary control law $v(t+k|t) = F_{i(x(t+k|t))}\,x(t+k|t)$ is hypothesized for $k \ge N$ and the corresponding terms vanish. Notice that the above cost penalizes the deviation between the actual control sequence and the one provided by the auxiliary gain-scheduling controller. This choice of optimization policy only aims at avoiding a cumbersome non-convex optimization problem. From a performance point of view, a criterion which takes into account the mismatch in closed-loop behaviour, such as

$$J = \sum_{k=0}^{\infty}\|x(t+k|t)\|_Q^2 + \left\|v(t+k|t) - F_{i(x(t+k|t))}\,x(t+k|t)\right\|_R^2, \qquad \|x\|_Q^2 := x^\top Q\,x,$$

or

$$J = \sum_{k=0}^{\infty}\|x(t+k|t)\|_Q^2 + \|u(t+k|t)\|_R^2 \tag{24}$$

would be more appropriate. In such cases, however, the optimization would be non-convex due to the highly nonlinear and complicated dependence of $x(t+k|t)$ and $u(t+k|t)$ on the degrees of freedom $c(t+k|t)$.

As far as stability is concerned, the following result holds.

Theorem 3 - Provided that $x(0) \in \bigcup_{i=1}^{\ell}\big(\mathcal{X}_i \cap \Omega_N^i\big)$, the receding-horizon control (22)-(23) guarantees that: (i) the constraints (15) are satisfied and (ii) $\lim_{t\to\infty} x(t) = 0$.

Proof: see the Appendix.

The GS-MPC algorithm therefore ensures asymptotic stability of the origin with domain of attraction $\bigcup_{i=1}^{\ell}\big(\mathcal{X}_i \cap \Omega_N^i\big)$.

Remark 7 (Nonlinear MPC vs. min-norm pointwise control) - Stabilization of constrained nonlinear systems can also be tackled via control Lyapunov functions (CLFs) [17]-[20]. Compared to the CLF approach, our GS-MPC algorithm: (1) can deal with state (in addition to control) constraints; (2) does not require the determination of a CLF. As a matter of fact, the off-line determination of the controlled invariant set $\Omega_N$ discussed in Section 3 provides, in a systematic way, a polytopic CLF for the nonlinear system (1). In fact, let $\Psi_\Sigma(x) := \min\{\mu \ge 0 : x \in \mu\Sigma\}$ denote the norm (gauge function) induced by the polytope $\Sigma$, and assume that the set $\mathcal{S}_N$ in (21) has been computed with a contraction factor $\lambda \in (0,1)$. Then $V(x) := \Psi_{\Omega_N^{i(x)}}(x)$, induced by the $\lambda$-contractive controlled invariant polytope $\Omega_N^{i(x)}$, turns out to be a CLF for the plant (1), which can be used in the pointwise min-norm controller [19] defined as follows:

$$u(x) = \arg\min_u \left\{ \|u\|^2 \ \middle|\ V\big(f(x,u)\big) < V(x) \right\}.$$
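For a polytope written as $\Sigma = \{y : Hy \le \mathbf{1}\}$ with the origin in its interior, the induced norm $\min\{\mu \ge 0 : x \in \mu\Sigma\}$ has the closed form $\max\{0, \max_k H_k x\}$, since $x \in \mu\Sigma$ if and only if $Hx \le \mu\mathbf{1}$. The Python/NumPy sketch below uses it to evaluate $V$ and replaces the exact min-norm optimization by a search over a finite grid of scalar inputs; the grid search, the function names and the scalar example plant $x^+ = 1.2x + u$ with $\Sigma = [-1, 1]$ are illustrative assumptions, not the controller of [19].

```python
import numpy as np


def gauge(H, x):
    """Minkowski functional of {y : H y <= 1} (origin in the interior):
    min{mu >= 0 : H x <= mu * 1} = max(0, max_k H_k x)."""
    return max(0.0, float(np.max(H @ x)))


def pointwise_min_norm(f, V, x, u_grid):
    """Grid-search stand-in for argmin{|u|^2 : V(f(x, u)) < V(x)}, scalar u.
    Returns None when no grid point achieves a strict decrease."""
    candidates = [u for u in u_grid if V(f(x, u)) < V(x)]
    return min(candidates, key=abs) if candidates else None


H = np.array([[1.0], [-1.0]])       # Sigma = [-1, 1]


def V(y):
    return gauge(H, y)


def f(y, u):
    return 1.2 * y + u              # illustrative open-loop unstable plant


u_star = pointwise_min_norm(f, V, np.array([1.0]), np.linspace(-1.0, 1.0, 201))
print(u_star)  # close to -0.2 (grid resolution 0.01)
```

Only the current decrease of $V$ is considered here, which is the one-step (myopic) character contrasted with GS-MPC in the next paragraph.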

Another advantage of using MPC is the possibility of optimizing a performance criterion. Admittedly, the peculiar criterion adopted in GS-MPC for the sake of computational simplicity does not guarantee that the real system performance under GS-MPC is better than under pointwise min-norm control. In GS-MPC, however, optimization is based on predictions several steps ahead, while pointwise min-norm control uses one-step-ahead optimization, which may lead to myopic choices. In this respect, GS-MPC seems to provide a good tradeoff between computational and performance requirements. This fact will be confirmed by the simulation results reported in the next section.

5 Simulation results

In this section, the previously introduced LPV embedding and MPC techniques are applied to the highly nonlinear model of the Furuta pendulum [21]. The Furuta pendulum is attached to an arm, driven by a dc motor, which rotates in the horizontal plane. The control objective is to balance the pendulum in the upward vertical position. The state-space model, derived by the Lagrange method, is

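$$\begin{gathered} \dot x_2 = x_3, \qquad J(x_2)\begin{bmatrix}\dot x_1\\ \dot x_3\end{bmatrix} + C(x)\begin{bmatrix}x_1\\ x_3\end{bmatrix} = \begin{bmatrix} t_3\,u\\ t_6\sin(x_2)\end{bmatrix},\\ J(x_2) = \begin{bmatrix} 1 + t_7\sin^2(x_2) & -t_1\cos(x_2)\\ -t_4\cos(x_2) & 1\end{bmatrix}, \qquad C(x) = \begin{bmatrix} t_2 + \tfrac12 t_7\,\dot x_2\sin(2x_2) & t_1x_3\sin(x_2) + \tfrac12 t_7x_1\sin(2x_2)\\ -\tfrac12 t_8x_1\sin(2x_2) & t_5\end{bmatrix} \end{gathered} \tag{25}$$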

where $x_1 = \dot\varphi$ is the angular speed of the rotating arm; $x_2 = \theta$ and $x_3 = \dot\theta$ are, respectively, the angular position and velocity of the pendulum ($\theta = 0$ is the vertical upward position); $u$ is the input voltage of the dc motor, subject to the actuator limits $|u| \le u_{\max}$; $t_i$, $1 \le i \le 8$, are suitable coefficients depending on the physical parameters of the system. Via a suitable pre-compensation $u = g(x,v)$, it is possible to transform the dynamics into the simpler form

$$\dot x_1 = v, \qquad \dot x_2 = x_3, \qquad \dot x_3 = f_3(x,v).$$

In fact, this is achieved by choosing $g(x,v) = \frac{1}{t_3}\left[g_1(x) + g_2(x_2)\,v\right]$, with

$$\begin{aligned} g_1(x) &= -t_1t_6\cos(x_2)\sin(x_2) - \tfrac12 t_1t_8x_1^2\sin(2x_2)\cos(x_2) + t_1t_5x_3\cos(x_2) + t_2x_1 + t_7x_1x_3\sin(2x_2) + t_1x_3^2\sin(x_2),\\ g_2(x_2) &= 1 - t_1t_4 + (t_7 + t_1t_4)\sin^2(x_2), \end{aligned}$$

from which it turns out that

$$f_3(x,v) = t_6\sin(x_2) + t_4\cos(x_2)\,v + \tfrac12 t_8x_1^2\sin(2x_2) - t_5x_3 .$$
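The pre-compensation can be checked numerically. The following Python/NumPy sketch assumes the model (25) with the coefficient values of Table 1 as printed; it solves (25) for $(\dot x_1, \dot x_3)$ with $u = g(x,v)$ and verifies that $\dot x_1 = v$ and $\dot x_3 = f_3(x,v)$. The test point and the function names are arbitrary, illustrative choices.

```python
import numpy as np

# Coefficients t_1..t_8 of Table 1, keyed by their index in the text
t = {1: 0.0265, 2: 1.0524, 3: 0.4549, 4: 0.6777,
     5: 0.6058, 6: 48.5267, 7: 0.0304, 8: 0.7776}


def furuta_rhs(x, u):
    """Continuous-time model (25): returns [dx1, dx2, dx3] for input u."""
    x1, x2, x3 = x
    s, co, s2 = np.sin(x2), np.cos(x2), np.sin(2 * x2)
    J = np.array([[1 + t[7] * s**2, -t[1] * co],
                  [-t[4] * co, 1.0]])
    C = np.array([[t[2] + 0.5 * t[7] * x3 * s2, t[1] * x3 * s + 0.5 * t[7] * x1 * s2],
                  [-0.5 * t[8] * x1 * s2, t[5]]])
    rhs = np.array([t[3] * u, t[6] * s]) - C @ np.array([x1, x3])
    dx1, dx3 = np.linalg.solve(J, rhs)
    return np.array([dx1, x3, dx3])


def g(x, v):
    """Pre-compensation u = (g1(x) + g2(x2) v) / t3."""
    x1, x2, x3 = x
    s, co, s2 = np.sin(x2), np.cos(x2), np.sin(2 * x2)
    g1 = (-t[1] * t[6] * co * s - 0.5 * t[1] * t[8] * x1**2 * s2 * co
          + t[1] * t[5] * x3 * co + t[2] * x1 + t[7] * x1 * x3 * s2
          + t[1] * x3**2 * s)
    g2 = 1 - t[1] * t[4] + (t[7] + t[1] * t[4]) * s**2
    return (g1 + g2 * v) / t[3]


def f3(x, v):
    x1, x2, x3 = x
    return (t[6] * np.sin(x2) + t[4] * np.cos(x2) * v
            + 0.5 * t[8] * x1**2 * np.sin(2 * x2) - t[5] * x3)


x, v = np.array([3.0, 0.3, -2.0]), 20.0
print(np.allclose(furuta_rhs(x, g(x, v)), [v, x[2], f3(x, v)]))  # True
```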

Next, we applied Euler discretization with sampling period $T_s$ in order to get a discrete-time model

$$\begin{gathered} x(t+1) = A(x_1,x_2)\,x(t) + B(x_2)\,v(t),\\ A(x_1,x_2) = \begin{bmatrix} 1 & 0 & 0\\ 0 & 1 & T_s\\ 0 & a(x_1,x_2) & 1 - T_st_5\end{bmatrix}, \qquad B(x_2) = \begin{bmatrix} T_s\\ 0\\ b(x_2)\end{bmatrix},\\ a(x_1,x_2) = T_s\big(t_6 + t_8x_1^2\cos(x_2)\big)\frac{\sin(x_2)}{x_2}, \qquad b(x_2) = T_st_4\cos(x_2). \end{gathered} \tag{26}$$
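A quick consistency check of (26): the quasi-linear form must reproduce exactly one explicit Euler step of $\dot x_1 = v$, $\dot x_2 = x_3$, $\dot x_3 = f_3(x,v)$, because $a(x_1,x_2)\,x_2 = T_s\big(t_6\sin x_2 + \tfrac12 t_8x_1^2\sin(2x_2)\big)$. The Python/NumPy sketch below (Table 1 values assumed; function names hypothetical) compares the two and writes $\sin(x_2)/x_2$ as `np.sinc(x2 / np.pi)`, so that $a$ remains well defined at $x_2 = 0$.

```python
import numpy as np

t4, t5, t6, t8 = 0.6777, 0.6058, 48.5267, 0.7776   # Table 1
Ts = 0.01                                          # sampling period


def AB(x1, x2):
    """Matrices A(x1, x2), B(x2) of the quasi-linear model (26)."""
    # sin(x2)/x2 written as np.sinc(x2/pi), which equals 1 at x2 = 0
    a = Ts * (t6 + t8 * x1**2 * np.cos(x2)) * np.sinc(x2 / np.pi)
    b = Ts * t4 * np.cos(x2)
    A = np.array([[1.0, 0.0, 0.0],
                  [0.0, 1.0, Ts],
                  [0.0, a, 1.0 - Ts * t5]])
    B = np.array([[Ts], [0.0], [b]])
    return A, B


def euler_step(x, v):
    """One explicit Euler step of dx1 = v, dx2 = x3, dx3 = f3(x, v)."""
    x1, x2, x3 = x
    f3 = (t6 * np.sin(x2) + t4 * np.cos(x2) * v
          + 0.5 * t8 * x1**2 * np.sin(2 * x2) - t5 * x3)
    return x + Ts * np.array([v, x3, f3])


x, v = np.array([3.0, 0.3, -2.0]), 20.0
A, B = AB(x[0], x[1])
print(np.allclose(A @ x + B[:, 0] * v, euler_step(x, v)))  # True
```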

As a final step, $p = x_1$ has been selected as gain-scheduling parameter. Further, in order to embed (26) into a family of polytopic systems, we also assumed bounds $|x_2| \le x_{2,\max}$ and $|x_3| \le x_{3,\max}$ on the other two state variables. These bounds, along with the bounds $p = x_1 \in \mathcal{P}_i$, in turn imply bounds on the entries $a(x_1,x_2)$ and $b(x_2)$ in (26), namely

$$a_{i,\min} \le a(x_1,x_2) \le a_{i,\max} \ \text{ for } x_1 = p \in \mathcal{P}_i, \qquad b_{\min} \le b(x_2) \le b_{\max},$$

from which it is possible to obtain a polytopic inclusion $\mathcal{F}(i)$ for each discrete parameter $i$ as the convex hull of the four matrix vertices characterized by $a_{i,\min}$, $a_{i,\max}$, $b_{\min}$, $b_{\max}$. Further, the following set-valued map $\mathcal{Q}(i)$ has been assumed for the time evolution of the discrete parameter $i$:

$$\mathcal{Q}(i) = \big\{\max\{1, i-1\},\ i,\ \min\{i+1, \ell\}\big\}, \qquad 1 \le i \le \ell .$$
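The partition, the index map $i(p)$, the map $\mathcal{Q}(i)$ and the four vertices of $\mathcal{F}(i)$ can be computed as in the Python/NumPy sketch below. It assumes the Table 1 values (including $x_{2,\max} = 2\pi/9$) and 1-based indices as in the text; the function names are hypothetical. The extrema of $a(x_1,x_2)$ over $\mathcal{P}_i\times[-x_{2,\max}, x_{2,\max}]$ are only estimated on a grid, whereas those of $b(x_2)$ follow from the fact that $\cos$ decreases in $|x_2|$ on this range.

```python
import numpy as np

t4, t5, t6, t8, Ts = 0.6777, 0.6058, 48.5267, 0.7776, 0.01   # Table 1
x1_max, x2_max, ell = 10.0, 2 * np.pi / 9, 11

edges = np.linspace(-x1_max, x1_max, ell + 1)   # P_i = [edges[i-1], edges[i]]


def index_of(p):
    """1-based index i with p in P_i (shared endpoints go to the upper subrange)."""
    return int(np.clip(np.searchsorted(edges, p, side="right"), 1, ell))


def Q(i):
    """Set-valued map Q(i) = {max(1, i-1), i, min(i+1, ell)} as a sorted list."""
    return sorted({max(1, i - 1), i, min(i + 1, ell)})


def a_fun(x1, x2):
    return Ts * (t6 + t8 * x1**2 * np.cos(x2)) * np.sinc(x2 / np.pi)


def vertex_params(i, grid=201):
    """Four (a, b) pairs defining the vertices of F(i)."""
    X1, X2 = np.meshgrid(np.linspace(edges[i - 1], edges[i], grid),
                         np.linspace(-x2_max, x2_max, grid))
    a = a_fun(X1, X2)                                # grid estimate of the range
    b_lo, b_hi = Ts * t4 * np.cos(x2_max), Ts * t4   # cos decreases in |x2|
    return [(av, bv) for av in (a.min(), a.max()) for bv in (b_lo, b_hi)]


def vertex_matrices(i):
    """[A, B] vertex pairs of (26) for index i."""
    out = []
    for av, bv in vertex_params(i):
        A = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, Ts], [0.0, av, 1.0 - Ts * t5]])
        B = np.array([[Ts], [0.0], [bv]])
        out.append((A, B))
    return out


print(index_of(0.0), Q(6), len(vertex_matrices(6)))  # 6 [5, 6, 7] 4
```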

The control constraints $|g(x(t), v(t))| \le u_{\max}$ have been approximated by

$$v^{i(t)}_{\min} \le v(t) \le v^{i(t)}_{\max},$$

where the bounds $v^i_{\min}$, $v^i_{\max}$ have been obtained by considering the bounds $x_1 \in \mathcal{P}_i$ as well as $|x_2| \le x_{2,\max}$ and $|x_3| \le x_{3,\max}$.
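One way to obtain such bounds is sketched below. Assuming positive coefficients as printed in Table 1, $g_2(x_2) \ge 1 - t_1t_4 > 0$, so for each fixed $x$ the constraint $|g_1(x) + g_2(x_2)v| \le t_3u_{\max}$ is an interval in $v$; intersecting these intervals over the box $\mathcal{P}_i\times[-x_{2,\max}, x_{2,\max}]\times[-x_{3,\max}, x_{3,\max}]$ gives bounds valid in the whole region. The Python/NumPy code approximates the intersection on a finite grid, so it is not a rigorous inner approximation, and it is not claimed to reproduce the bounds used in the paper; names are hypothetical.

```python
import numpy as np

t = {1: 0.0265, 2: 1.0524, 3: 0.4549, 4: 0.6777,
     5: 0.6058, 6: 48.5267, 7: 0.0304, 8: 0.7776}          # Table 1
u_max, x1_max, x2_max, x3_max, ell = 200.0, 10.0, 2 * np.pi / 9, 10.0, 11


def g1(x1, x2, x3):
    s, co, s2 = np.sin(x2), np.cos(x2), np.sin(2 * x2)
    return (-t[1] * t[6] * co * s - 0.5 * t[1] * t[8] * x1**2 * s2 * co
            + t[1] * t[5] * x3 * co + t[2] * x1 + t[7] * x1 * x3 * s2
            + t[1] * x3**2 * s)


def g2(x2):
    return 1 - t[1] * t[4] + (t[7] + t[1] * t[4]) * np.sin(x2)**2


def v_bounds(i, grid=41):
    """Grid estimate of [v_min^i, v_max^i] such that |g(x, v)| <= u_max in region i."""
    edges = np.linspace(-x1_max, x1_max, ell + 1)
    X1, X2, X3 = np.meshgrid(np.linspace(edges[i - 1], edges[i], grid),
                             np.linspace(-x2_max, x2_max, grid),
                             np.linspace(-x3_max, x3_max, grid), indexing="ij")
    G1, G2 = g1(X1, X2, X3), g2(X2)                  # G2 > 0 on the region
    # |G1 + G2 v| <= t3 u_max  <=>  (-t3 u_max - G1)/G2 <= v <= (t3 u_max - G1)/G2
    v_min = np.max((-t[3] * u_max - G1) / G2)
    v_max = np.min((t[3] * u_max - G1) / G2)
    return float(v_min), float(v_max)


for i in (1, 6, 11):
    print(i, v_bounds(i))
```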

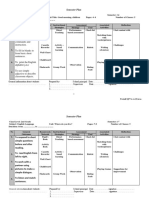

The numeric values of all the model parameters are reported in Table 1. A comment on the specific choice of the parameter rate bound $\rho$ is in order. Since, in this case, $x_1(t+1) - x_1(t) = T_s\,v(t)$, the parameter rate constraint (9) yields $|v(t)| \le \rho/T_s$; we therefore selected $\rho = T_s\bar v$, with $\bar v := \max_{i\in\mathcal{I}}\max\{|v^i_{\min}|, |v^i_{\max}|\} = 100$, in order to avoid tightening of constraints.
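The arithmetic behind this choice is small enough to spell out: in (26) the first state obeys $x_1(t+1) - x_1(t) = T_s v(t)$, so (9) reduces to $|v(t)| \le \rho/T_s$, and setting the rate bound (denoted $\rho$ here) to $T_s\bar v$ makes this limit coincide with $\bar v$. A minimal Python check using the values quoted in the text; the helper name `rate_ok` is hypothetical.

```python
Ts = 0.01        # sampling period (Table 1)
v_bar = 100.0    # max_i max(|v_min^i|, |v_max^i|), as quoted in the text
rho = Ts * v_bar


def rate_ok(v):
    """Rate constraint (9) for the first row of (26): |Ts * v| <= rho."""
    return abs(Ts * v) <= rho + 1e-12


print(rho, rate_ok(v_bar), rate_ok(1.01 * v_bar))  # 1.0 True False
```

Hence any $v$ satisfying the control bounds automatically satisfies the rate bound, which is why no constraint tightening occurs.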

The auxiliary gain-scheduling controller has been designed off-line using the LMI-based design procedure of [25]; the various gains $F_i$ are reported in Table 2. Fig. 1 shows the projections of the 3-dimensional sets $\Omega_0^i$, $1 \le i \le 11$, on the coordinate planes. A control horizon $N = 3$ has been selected and the corresponding polytopes $\mathcal{S}^i_3$ have been computed. To give an idea of the complexity of such polytopes, the number of linear inequalities is also reported in Table 2. It is worth pointing out, however, that the computational load required for the solution of the QP is quite insensitive to the number of constraints if interior-point algorithms [30] are used.

Fig. 1 also shows the behaviour of GS-MPC starting from the initial state $x(0) = [10,\ 0.11,\ 3.44]^\top$, which turns out to be infeasible for the gain-scheduling controller. It can be seen that, under GS-MPC, the state is steered to the origin without constraint violations. We compared our GS-MPC algorithm, based on embedding, with the direct Nonlinear MPC (NMPC) algorithm of Magni et al. [7]. For the sake of comparison, the cost adopted for NMPC is (24), where the weight matrices $Q$ and $R$ have been selected by LQ inverse-optimality arguments so as to give (for the unconstrained model linearized around the origin) the same feedback gain $F_6$ (see Table 2) associated with the subset $\mathcal{X}_6$ of $\mathbb{R}^3$ containing the origin. Fig. 2 compares the behaviours of GS-MPC and NMPC. We found that the number of flops required by NMPC was about $10^3$ times the flop count of GS-MPC. On the other hand, notice that, despite the much higher on-line computational load of NMPC, the two algorithms give a similar response.

Table 1: Model parameters

| Parameter | Value |
|---|---|
| model coefficients | $t_1 = 0.0265$, $t_2 = 1.0524$, $t_3 = 0.4549$, $t_4 = 0.6777$, $t_5 = 0.6058$, $t_6 = 48.5267$, $t_7 = 0.0304$, $t_8 = 0.7776$ |
| sampling period | $T_s = 0.01$ |
| control bound | $u_{\max} = 200$ |
| state bounds | $x_{1,\max} = 10$, $x_{2,\max} = 2\pi/9$, $x_{3,\max} = 10$ |
| parameter rate bound | $\rho = 1$ |
| no. of parameter subranges | $\ell = 11$ |
| parameter subranges | $\mathcal{P}_i = \left[-x_{1,\max} + \frac{2x_{1,\max}}{\ell}(i-1),\ -x_{1,\max} + \frac{2x_{1,\max}}{\ell}\,i\right]$, $i = 1, \dots, \ell$ |

6 Conclusions

In this paper a novel MPC algorithm for nonlinear systems has been pro-

posed. It is based on the embedding of the nonlinear dynamics into an LPV

system and the construction of (controlled) invariant sets with a simple poly-

topic structure. The advantages of the approach lie in transferring most of

the computations o-line. In fact, at each sampling instant, only a QP

problem must be solved, while the computations of invariant polytopic sets,

carried out o-line, may require a remarkable time. As many other MPC

schemes, this approach exploits an auxiliary control law within a safe admissible set and an optimization-based controller within a larger controlled invariant set. In particular, the cost functional penalizes only the difference between the actual control sequence and the control sequence provided by the auxiliary gain-scheduling controller. Finally, it should be emphasized that this MPC scheme could naturally encompass additive bounded disturbances and parametric uncertainties.

Table 2: Gain-scheduling auxiliary feedback and number of constraints

i     F_i                                  Number of constraints
1     [0.0323  0.2159  2.0086] × 10^3      1945
2     [0.0310  0.2079  1.7448] × 10^3      2215
3     [0.0314  0.2053  1.5633] × 10^3      2201
4     [0.0309  0.2016  1.4040] × 10^3      2219
5     [0.0307  0.1985  1.3104] × 10^3      2530
6     [0.0310  0.2002  1.2935] × 10^3      2621
7     [0.0313  0.2026  1.3468] × 10^3      2668
8     [0.0313  0.2017  1.4376] × 10^3      2404
9     [0.0321  0.2058  1.5957] × 10^3      2465
10    [0.0331  0.2133  1.8563] × 10^3      2358
11    [0.0351  0.2226  2.1732] × 10^3      2041

Figure 1: Set with subscript 0 (solid) and state trajectory (solid-star), plotted in the state coordinates x1, x2, x3.

Figure 2: Time-domain responses under the GS-MPC (solid) and NMPC (dashed) algorithms. Panels: arm speed x1, pendulum angle x2, pendulum speed x3, and control input u, over the time interval 0 to 1.5.
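The proof of Theorem 3 writes the closed loop as x(k+1) = (A(k) + B(k)F_i(k)) x(k) + B(k) c(k), so the applied input is the auxiliary gain-scheduling feedback plus the perturbation c chosen by the on-line QP. The following is a minimal sketch of how the gains of Table 2 could be used at run time. It assumes, purely for illustration, that the scheduling index i(x1) is obtained by splitting x1 in [-10, 10] into eleven equal cells (the actual scheduling map is not given in this section), and it reads the gains exactly as extracted, scaled by 10^3; minus signs may have been lost in extraction. The names `schedule_index` and `control_input` are hypothetical.

```python
# Gain rows F_i from Table 2, as extracted (each row is multiplied by 10^3).
# NOTE: minus signs may have been lost in extraction; treat these values as illustrative.
TABLE2_GAINS = [
    [0.0323, 0.2159, 2.0086],
    [0.0310, 0.2079, 1.7448],
    [0.0314, 0.2053, 1.5633],
    [0.0309, 0.2016, 1.4040],
    [0.0307, 0.1985, 1.3104],
    [0.0310, 0.2002, 1.2935],
    [0.0313, 0.2026, 1.3468],
    [0.0313, 0.2017, 1.4376],
    [0.0321, 0.2058, 1.5957],
    [0.0331, 0.2133, 1.8563],
    [0.0351, 0.2226, 2.1732],
]
GAIN_SCALE = 1e3


def schedule_index(x1, lo=-10.0, hi=10.0, n_cells=len(TABLE2_GAINS)):
    """Hypothetical scheduling map i(x1): equal-width cells on [lo, hi], 1-based, clamped."""
    width = (hi - lo) / n_cells
    i = int((x1 - lo) // width) + 1
    return min(max(i, 1), n_cells)


def control_input(x, c=0.0):
    """u = F_{i(x1)} x + c: auxiliary gain-scheduling feedback plus the QP perturbation c."""
    i = schedule_index(x[0])
    gain = [GAIN_SCALE * f for f in TABLE2_GAINS[i - 1]]
    u = sum(g * xj for g, xj in zip(gain, x)) + c
    return u, i


if __name__ == "__main__":
    # x1 = 0 lies in the middle cell, i = 6
    u, i = control_input([0.0, 0.01, 0.0])
    print(i, u)
```

Keeping c as an additive correction on top of the scheduled gain mirrors the cost described above: when c = 0 the controller reduces to the auxiliary gain-scheduling law.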

References

[1] Allgöwer F, Zheng A (Eds.). Nonlinear Model Predictive Control 2000, Progress in Systems and Control Theory 26, Birkhäuser, Basel, Switzerland.

[2] Mayne DQ, Michalska H. Receding-horizon control of nonlinear systems, IEEE Transactions on Automatic Control 1990; 35, 814-824.

[3] Parisini T, Zoppoli R. A receding-horizon regulator for nonlinear systems and a neural approximation, Automatica 1995; 31, 1443-1451.

[4] Chen H, Allgöwer F. A quasi-infinite horizon nonlinear predictive control scheme with guaranteed stability, Automatica 1998; 34, 1205-1217.

[5] De Nicolao G, Magni L, Scattolini R. Stabilizing receding-horizon control of nonlinear time-varying systems, IEEE Transactions on Automatic Control 1998; 43, 1030-1036.

[6] Mayne DQ, Rawlings JB, Rao CV, Scokaert POM. Constrained model predictive control: stability and optimality, Automatica 2000; 36, 789-814.

[7] Magni L, De Nicolao G, Magnani L, Scattolini R. A stabilizing model-based predictive control algorithm for nonlinear systems, Automatica 2001; 37, 1351-1362.

[8] Liu RW. Convergent systems, IEEE Transactions on Automatic Control 1968; 13, 384-391.

[9] Angeli D, Casavola A, Mosca E. Constrained predictive control of nonlinear plants via polytopic linear system embedding, International Journal of Robust and Nonlinear Control 2000; 10, 1091-1103.

[10] Sznaier M. Receding horizon: an easy way to improve performance in LPV systems, Proc. American Control Conference 1999; San Diego, USA, 4, 2262-2266.

[11] Chisci L, Falugi P, Zappa G. Predictive control for constrained LPV systems, Proc. European Control Conference 2001; Porto, Portugal, 3074-3079.

[12] Casavola A, Famularo D, Franzè G. A scheduling min-max predictive control algorithm for LPV systems subject to bounded rate parameters, Proc. 40th IEEE Conference on Decision and Control 2001; Orlando, USA, 2372-2377.

[13] Shamma JS, Athans M. Gain scheduling: potential hazards and remedies, IEEE Control Systems Magazine 1992; 12, 101-107.

[14] Rugh WJ, Shamma JS. Research on gain scheduling, Automatica 2000; 36, 1401-1426.

[15] Shamma JS, Xiong D. Set-valued methods for linear parameter varying systems, Automatica 1999; 35, 1081-1089.

[16] Rossiter JA, Kouvaritakis B, Rice MJ. A numerically robust state-space approach to stable predictive control strategies, Automatica 1998; 34, 65-73.

[17] Artstein Z. Stabilization with relaxed control, Nonlinear Analysis 1983; 7, 1163-1173.

[18] Lin Y, Sontag ED. A universal formula for stabilization with bounded controls, Systems and Control Letters 1991; 16, 393-397.

[19] Freeman RA, Kokotović PV. Inverse optimality in robust stabilization, SIAM Journal on Control and Optimization 1996; 34, 1365-1391.

[20] Freeman RA, Primbs JA. Control Lyapunov functions: new ideas from an old source, Proc. 35th Conference on Decision and Control 1996; Kobe, Japan, 3926-3931.

[21] Åström KJ, Furuta K. Swinging up a pendulum by energy control, Automatica 2000; 36, 287-295.

[22] Isidori A. Nonlinear Control Systems, 3rd Edition 1995, Springer, London, UK.

[23] Boyd S, El Ghaoui L, Feron E, Balakrishnan V. Linear Matrix Inequalities in System and Control Theory 1994, SIAM, Philadelphia, USA.

[24] Barmish BR. Necessary and sufficient conditions for quadratic stabilizability of an uncertain system, Journal of Optimization Theory and Applications 1985; 46, 399-408.

[25] Geromel JC, Peres PLD, Bernussou J. On a convex parameter space method for linear control design of uncertain systems, SIAM Journal on Control and Optimization 1991; 29, 381-402.

[26] Gilbert EG, Tan KT. Linear systems with state and control constraints: the theory and application of maximal output admissible sets, IEEE Transactions on Automatic Control 1991; 36, 1008-1021.

[27] Kolmanovsky IV, Gilbert EG. Theory and computation of disturbance invariant sets for discrete-time linear systems, Mathematical Problems in Engineering: Theory, Methods and Applications 1998; 4, 317-367.

[28] Blanchini F. Ultimate boundedness control for uncertain discrete-time systems via set-induced Lyapunov functions, IEEE Transactions on Automatic Control 1994; 39, 428-433.

[29] Blanchini F. Set invariance in control, Automatica 1999; 35, 1747-1767.

[30] Rao C, Rawlings JB, Wright S. Application of interior point methods to model predictive control, Journal of Optimization Theory and Applications 1998; 99, 723-757.

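Proof of Theorem 2 - Given $S \subset \mathbb{R}^n$, let us denote by $\Phi(t, S) \subset \mathbb{R}^n$ the set of states $x(t)$ originated from $s(0) \in S$ for the LPV system (18). If the sets $O^i_k$, $i = 1, \dots, \nu$, are bounded, there exists a ball $\mathbb{B} \subset \mathbb{R}^n$, containing the origin, such that $O^i_k \subseteq \mathbb{B}$. By the exponential stability assumption, $\Phi(t, \mathbb{B}) \to \{0\}$ as $t \to \infty$. Hence there exists an index $h > k$ for which

$$x \in \Phi(h+1, \mathbb{B}) \;\Rightarrow\; \|(L_i + M_i F_i)\,x\|_\infty \le 1, \qquad i = 1, 2, \dots, \nu.$$

Moreover, $O_h \subseteq O_k \subseteq \mathbb{B}$ and, therefore,

$$x \in \Phi(h+1, O_h) \;\Rightarrow\; \|(L_i + M_i F_i)\,x\|_\infty \le 1, \qquad i = 1, 2, \dots, \nu.$$

By definition, this implies that $O_h = O_{h+1}$, and hence $O_\infty = O_h$ is finitely determined.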
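The key step in this proof is the existence of a step count after which every state reachable from the ball satisfies the constraints. A minimal sketch of that check follows, under simplifying assumptions: a single gain, hypothetical closed-loop vertex matrices Phi_j = A_j + B_j F of a polytopic LPV model, a single hypothetical constraint row H = L + M F, and a Euclidean ball of radius r. Because the reachable states depend multi-affinely on the vertex weights, the worst case of |H x| over the polytope is attained at products of vertex matrices, and over the ball it equals r times the Euclidean norm of the corresponding row. The first step count t that passes plays the role of h + 1. The enumeration grows as (number of vertices)^t, so this is only an illustration; practical computations of maximal admissible sets typically rely on linear-programming redundancy checks instead.

```python
import itertools
import math


def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def worst_constraint_value(vertices, H, radius, t):
    """Max of |h_r^T x| over all rows h_r of H and all x reachable in t steps from the ball.

    Over the polytope, the maximum is attained at products of vertex matrices; over the
    Euclidean ball of the given radius, max |h^T P x0| equals radius * ||h^T P||_2.
    """
    n = len(H[0])
    worst = 0.0
    for seq in itertools.product(vertices, repeat=t):
        P = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
        for A in seq:
            P = matmul(A, P)  # x(k+1) = A x(k): later factors multiply on the left
        for row in matmul(H, P):
            worst = max(worst, radius * math.sqrt(sum(v * v for v in row)))
    return worst


def first_admissible_step(vertices, H, radius, max_steps=12):
    """Smallest t whose reachable set satisfies ||H x||_inf <= 1 (t plays the role of h + 1).

    The enumeration costs len(vertices)**t products, so keep max_steps small.
    """
    for t in range(max_steps + 1):
        if worst_constraint_value(vertices, H, radius, t) <= 1.0:
            return t
    return None


# Hypothetical closed-loop vertices Phi_j = A_j + B_j F and constraint row H = L + M F.
PHI_1 = [[0.5, 0.2], [0.0, 0.6]]
PHI_2 = [[0.6, 0.0], [0.1, 0.5]]
H = [[1.0, 0.0]]

if __name__ == "__main__":
    t = first_admissible_step([PHI_1, PHI_2], H, radius=4.0)
    print(t, worst_constraint_value([PHI_1, PHI_2], H, 4.0, t))
```

With these numbers the ball itself violates the constraint (worst value 4.0 at t = 0), but after a few steps the contraction of both vertices pulls the whole reachable set inside it, which is exactly the mechanism the proof uses.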

Proof of Theorem 3 - The hypothesis $x(t) \in \mathcal{X}_N$ implies that $x(t) \in \mathcal{X}^{i(t)}_N$ with $i(t) = i(x_1(t))$, i.e. $s(t) = [i(t), x'(t)]' \in \Sigma_N$. Therefore there exists a control sequence $\mathbf{c}(t)$ such that $[s'(t), \mathbf{c}'(t)]' \in S_N$. Consequently, $x(t) \in \mathcal{X}_N$ implies that $s(t+1) \in \Sigma_{N-1} \subseteq \Sigma_N$. Hence, by induction, $s(t) \in \Sigma_N$ for all $t \ge 0$, and satisfaction of the constraints (15) is guaranteed.

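Next, consider the cost function $V_t \triangleq V(s(t)) \triangleq \|\mathbf{c}(t)\|^2$. Since the sequence $\{c(t+1|t), c(t+2|t), \dots, c(t+N-1|t), 0\}$ is feasible at time $t+1$,

$$V_t - V_{t+1} \ge \|c(t)\|^2 \ge 0 \tag{27}$$

where $c(t) \triangleq c(t|t)$. Hence $\{V_t\}_{t \ge 0}$ is a nonnegative, monotonically non-increasing scalar sequence and, as $t \to \infty$, it must converge to some $V_\infty < \infty$. Summing the differences $V_t - V_{t+1}$ in (27) for $t$ from $0$ to $\infty$, we have

$$\infty > V_0 - V_\infty \ge \sum_{t=0}^{\infty} \|c(t)\|^2 \ge 0 \;\Rightarrow\; \lim_{t \to \infty} \|c(t)\|^2 = 0,$$

which proves that $\lim_{t \to \infty} c(t) = 0$.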
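The decrease condition (27) rests on the shifted candidate {c(t+1|t), ..., c(t+N-1|t), 0}: its cost is exactly V_t minus the squared first element, so the optimal cost at t+1 can only be lower. The sketch below assumes a scalar perturbation and a hypothetical initial optimal sequence, and considers the worst case in which the optimizer simply keeps the shifted candidate at every step (so every inequality in (27) holds with equality). It checks the telescoping identity used above. The helper names are illustrative.

```python
def shifted_candidate(c_seq):
    """Tail of the sequence with a zero appended: {c(t+1|t), ..., c(t+N-1|t), 0}."""
    return list(c_seq[1:]) + [0.0]


def cost(c_seq):
    """V = ||c||^2 for a scalar perturbation sequence."""
    return sum(v * v for v in c_seq)


def run_shift_only(c0, steps):
    """Apply c(t) = c(t|t) and reuse the shifted candidate at every step.

    Returns the costs V_0, ..., V_steps and the applied perturbations c(0), ..., c(steps-1).
    """
    seq = list(c0)
    costs, applied = [cost(seq)], []
    for _ in range(steps):
        applied.append(seq[0])
        seq = shifted_candidate(seq)
        costs.append(cost(seq))
    return costs, applied


if __name__ == "__main__":
    costs, applied = run_shift_only([3.0, -1.0, 2.0, 0.5], steps=6)
    print(costs)    # non-increasing, reaches 0 after N = 4 steps
    print(applied)
```

In the actual scheme the QP may find a cheaper sequence than the shifted one, which only makes V_t decrease faster; the bound V_0 - V_inf >= sum of c(t)^2 still holds.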

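Any state $x(t)$ reachable from $x(0)$ under the nonlinear feedback (23) can be expressed as

$$x(t) = \Phi(t, 0)\,x(0) + \sum_{k=0}^{t-1} \Phi(t, k+1)\,B(k)\,c(k) \tag{28}$$

where $\Phi(t, k) \triangleq \Phi(t-1) \cdots \Phi(k+1)\,\Phi(k)$, with $\Phi(t, t) \triangleq I$, and $\Phi(k) = A(k) + B(k)F_{i(k)}$, $[A(k), B(k)] \in \mathcal{F}(i(k))$, for any admissible sequence $i(0), i(1), \dots, i(k-1)$. The contractivity of $\mathcal{X}_0$ implies that there exist constants $M$ and $\lambda \in [0, 1)$ such that

$$|\Phi(t, k)| \le M \lambda^{t-k} \tag{29}$$

From (28) and (29), it follows that $\lim_{t \to \infty} c(t) = 0$ implies $\lim_{t \to \infty} x(t) = 0$.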
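Formula (28) is the usual variation-of-constants expression for the time-varying closed loop x(k+1) = Phi(k) x(k) + B(k) c(k). The small numerical check below assumes two hypothetical closed-loop matrices that alternate in time, both with induced infinity-norm 0.8 (so (29) holds in that norm with M = 1 and lambda = 0.8), a constant hypothetical input vector B and a decaying perturbation c(k) = 0.5^k. It confirms that the recursion and (28) give the same state, and that x(t) goes to zero as c(t) does.

```python
def matvec(A, x):
    """Matrix-vector product with A stored as a list of rows."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def transition(phis, t, k):
    """Phi(t, k) = Phi(t-1) ... Phi(k), with Phi(t, t) = I."""
    n = len(phis[0])
    P = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for j in range(k, t):
        P = matmul(phis[j], P)
    return P


def simulate(phis, bs, cs, x0):
    """Recursion x(k+1) = Phi(k) x(k) + B(k) c(k); returns x(0), ..., x(T)."""
    xs = [list(x0)]
    for k, c in enumerate(cs):
        xs.append([p + b * c for p, b in zip(matvec(phis[k], xs[-1]), bs[k])])
    return xs


def superposition(phis, bs, cs, x0, t):
    """Right-hand side of (28): Phi(t, 0) x(0) + sum_k Phi(t, k+1) B(k) c(k)."""
    x = matvec(transition(phis, t, 0), x0)
    for k in range(t):
        term = matvec(transition(phis, t, k + 1), bs[k])
        x = [xi + ti * cs[k] for xi, ti in zip(x, term)]
    return x


# Hypothetical data: two closed-loop matrices with maximum row-sum (infinity) norm 0.8.
PHI_A = [[0.6, 0.2], [0.0, 0.5]]
PHI_B = [[0.5, 0.0], [0.2, 0.6]]
T = 60
phis = [PHI_A if k % 2 == 0 else PHI_B for k in range(T)]
bs = [[0.0, 1.0]] * T
cs = [0.5 ** k for k in range(T)]
x0 = [1.0, -1.0]

if __name__ == "__main__":
    xs = simulate(phis, bs, cs, x0)
    # gap between the recursion and formula (28), then the size of x(T)
    print(max(abs(a - b) for a, b in zip(xs[T], superposition(phis, bs, cs, x0, T))))
    print(max(abs(v) for v in xs[T]))
```

With the norm bound, each term of (28) is at most 0.8^(t-1-k) times |c(k)|, so a decaying c forces the whole sum, and hence x(t), to decay as well.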
