
Ammy

Uploaded by Sk Sharma

Cache Memory

Computer memory is organized into a hierarchy. At the highest level (closest to the processor) are the processor registers. Next come one or more levels of cache; when multiple levels are used, they are denoted L1, L2, etc. Next comes main memory, which is usually made out of dynamic random-access memory (DRAM). All of these are considered internal to the computer system. The hierarchy continues with external memory, with the next level typically being a fixed hard disk, and one or more levels below that consisting of removable media such as ZIP cartridges, optical disks, and tape. As one goes down the memory hierarchy, one finds decreasing cost/bit, increasing capacity, and slower access time. It would be nice to use only the fastest memory, but because that is the most expensive memory, we trade off access time for cost by using more of the slower memory. The trick is to organize the data and programs in memory so that the memory words needed are usually in the fastest memory. In general, it is likely that most future accesses to main memory by the processor will be to locations recently accessed. So the cache automatically retains a copy of some of the recently used words from the DRAM. If the cache is designed properly, then most of the time the processor will request memory words that are already in the cache.
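The idea that a cache "automatically retains a copy of some of the recently used words" can be sketched as a toy model. This is a minimal illustration under stated assumptions, not how real hardware is built: main memory is a Python list indexed by address, the cache holds individual words, and when it is full it evicts the least-recently-used word (the notes do not name a replacement policy). `WordCache` and its parameters are hypothetical names.

```python
from collections import OrderedDict


class WordCache:
    """Toy cache keeping copies of recently used words from a slower 'DRAM'.

    Assumes least-recently-used (LRU) replacement; the policy is an illustration only.
    """

    def __init__(self, dram, capacity):
        self.dram = dram              # backing store: list of words indexed by address
        self.capacity = capacity      # maximum number of words kept in the cache
        self.lines = OrderedDict()    # address -> cached copy of the word, oldest first
        self.hits = 0
        self.misses = 0

    def read(self, addr):
        if addr in self.lines:
            self.hits += 1
            self.lines.move_to_end(addr)       # mark as most recently used
            return self.lines[addr]
        self.misses += 1
        word = self.dram[addr]                 # slow path: fetch from main memory
        self.lines[addr] = word                # keep a copy for future accesses
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)     # evict the least recently used word
        return word


dram = [a * 10 for a in range(1024)]
cache = WordCache(dram, capacity=4)
for _ in range(100):              # a loop that keeps touching the same few words
    for addr in (0, 1, 2, 3):
        cache.read(addr)
print(cache.hits, cache.misses)   # 396 4
```

Only the first pass over the four words misses; every later access is served from the cache, which is the behavior the paragraph relies on.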

Computer Memory System Overview

Characteristics of Memory Systems

Location
* Processor
* Internal (primary) memory
* External (secondary) memory

Capacity
* Word size: the natural unit of organization
* Number of words or number of bytes

Unit of Transfer
* Internal: usually governed by the data bus width
* External: usually a block, which is much larger than a word
* Addressable unit: the smallest location that can be uniquely addressed (a cluster, on an external disk)

Access Methods
* Sequential (tape): start at the beginning and read through in order; access time depends on the location of the data and on the previous location
* Direct (disk): individual blocks have a unique address; access is by jumping to the vicinity plus a sequential search; access time depends on the location of the data and on the previous location
* Random (RAM): individual addresses identify locations exactly; access time is independent of the location and of the previous access
* Associative (cache): data is located by a comparison with the contents of a portion of the store; access time is independent of the location and of the previous access

Performance
* Access time (latency): the time between presenting an address and getting access to valid data
* Memory cycle time (primarily for random-access memory): the memory may need time to recover before the next access, so cycle time = access time + recovery time
* Transfer rate: the rate at which data can be moved into or out of a memory unit

Physical Types
* Semiconductor: RAM
* Magnetic: disk and tape
* Optical: CD and DVD
* Magneto-optical

Physical Characteristics
* Volatile/non-volatile
* Erasable/non-erasable
* Power requirements

Organization
* The physical arrangement of bits to form words
* The obvious arrangement is not always used

The Memory Hierarchy

How much?
* If the capacity is there, applications will be developed to use it.

How fast?
* To achieve performance, the memory must be able to keep up with the processor.

How expensive?
* For a practical system, the cost of memory must be reasonable in relationship to other components.

There is a trade-off among the three key characteristics of memory: cost, capacity, and access time.
* Faster access time: greater cost per bit
* Greater capacity: smaller cost per bit
* Greater capacity: slower access time

The way out of this dilemma is not to rely on a single memory component or technology, but to employ a memory hierarchy.
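Why a hierarchy pays off can be sketched with a simple two-level model (an assumption for illustration, not stated above). If a fraction H of accesses (the hit ratio) is satisfied by the fast level with access time T1, and the rest must also go to the slow level with access time T2, the average access time is Ts = H * T1 + (1 - H) * (T1 + T2). The function name and the timing values below are hypothetical.

```python
def average_access_time(hit_ratio, t1, t2):
    """Average access time of a two-level memory.

    A hit costs t1 (fast level only); a miss costs t1 + t2, because the word
    is first brought in from the slow level and then accessed in the fast one.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be in [0, 1]")
    return hit_ratio * t1 + (1.0 - hit_ratio) * (t1 + t2)


# Illustrative numbers in microseconds: fast level 0.01 us, slow level 0.1 us.
for h in (0.5, 0.9, 0.95, 0.99):
    print(f"H={h:.2f}  Ts={average_access_time(h, 0.01, 0.1):.4f} us")
```

With these numbers Ts drops from 0.06 us at H = 0.5 to 0.015 us at H = 0.95, approaching T1 as H approaches 1: the hierarchy only works if the faster level is accessed far more often than the slower one.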

As one goes down the hierarchy:
(a) decreasing cost per bit;
(b) increasing capacity;
(c) increasing access time;
(d) decreasing frequency of access of the memory by the processor.

Thus smaller, more expensive, faster memories are supplemented by larger, cheaper, slower memories. The key to the success of this organization is item (d).

Locality of Reference Principle
* Memory references by the processor, for both data and instructions, tend to cluster.
* Programs contain iterative loops and subroutines: once a loop or subroutine is entered, there are repeated references to a small set of instructions.
* Operations on tables and arrays involve access to a clustered set of data words.

Cache Memory Principles
* Cache memory is a small amount of fast memory.
* It is placed between the processor and main memory.
* It is located either on the processor chip or on a separate module.
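The effect of locality on a small cache can be sketched with a simulation. Assumptions for illustration: 4-byte blocks, a cache of 16 block-sized lines where any block can go in any line, least-recently-used replacement, and made-up access patterns; `hit_ratio` is a hypothetical helper.

```python
from collections import OrderedDict

BLOCK_SIZE = 4  # bytes per block, matching the 4-byte blocks in the mapping example


def hit_ratio(addresses, num_lines=16):
    """Fraction of byte accesses that hit in a small LRU cache of whole blocks."""
    cache = OrderedDict()              # block number -> present, least recent first
    hits = 0
    for addr in addresses:
        block = addr // BLOCK_SIZE     # a miss brings in the whole block
        if block in cache:
            hits += 1
            cache.move_to_end(block)
        else:
            cache[block] = True
            if len(cache) > num_lines:
                cache.popitem(last=False)
    return hits / len(addresses)


sequential = list(range(4096))                 # walk an array in order
strided = list(range(0, 4096, BLOCK_SIZE))     # touch one byte per block, never reuse it
loop_body = list(range(64, 96)) * 50           # a small loop re-executed 50 times

print(hit_ratio(sequential))   # 0.75: one miss, then three hits per 4-byte block
print(hit_ratio(strided))      # 0.0: no reuse within a block
print(hit_ratio(loop_body))    # 0.995: the loop's 8 blocks stay in the cache
```

The array walk benefits from clustering within a block, and the loop benefits from repeated references to a small set of addresses; the strided pattern has neither, so the cache does not help it.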

Cache Operation Overview
* The processor requests the contents of some memory location.
* The cache is checked for the requested data.
  * If it is found, the requested word is delivered to the processor.
  * If it is not found, a block of main memory is first read into the cache, then the requested word is delivered to the processor.

When a block of data is fetched into the cache to satisfy a single memory reference, it is likely that there will be future references to that same memory location or to other words in the block (the locality of reference principle). Each block has a tag added to identify it.
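The read steps above can be sketched in a few lines. This is a minimal sketch under stated assumptions: 4-byte blocks, a byte-addressable main memory modeled as a `bytes` object, the block number used as the tag, and a cache large enough that no block ever has to be replaced (replacement is left out). `SimpleCache` is a hypothetical name.

```python
BLOCK_SIZE = 4  # bytes per block (assumption, matching the example used later)


class SimpleCache:
    """Checks the cache first; on a miss, loads the whole block, then delivers the byte."""

    def __init__(self, main_memory):
        self.main_memory = main_memory   # bytes-like main memory
        self.blocks = {}                 # tag (block number) -> copy of that block
        self.hits = 0
        self.misses = 0

    def read(self, address):
        tag = address // BLOCK_SIZE      # identifies which main-memory block this is
        offset = address % BLOCK_SIZE    # byte within the block
        if tag in self.blocks:
            self.hits += 1
        else:
            self.misses += 1             # read the whole block into the cache first
            start = tag * BLOCK_SIZE
            self.blocks[tag] = bytes(self.main_memory[start:start + BLOCK_SIZE])
        return self.blocks[tag][offset]  # deliver the requested byte to the processor


memory = bytes(range(256))
cache = SimpleCache(memory)
values = [cache.read(a) for a in (8, 9, 10, 11, 8)]
print(values, cache.hits, cache.misses)   # [8, 9, 10, 11, 8] 4 1
```

Only the first access to block 2 (addresses 8 to 11) misses; the rest are served from the copy fetched on that miss.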

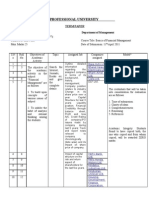

An example of a typical cache organization is shown in the figure.

Elements of Cache Design

Cache Size
* Small enough that the overall average cost/bit is close to that of main memory alone
* Large enough that the overall average access time is close to that of the cache alone
  * On a miss, access time = main memory access time plus cache access time
* Large caches tend to be slightly slower than small ones

Mapping Function
An algorithm is needed to map main memory blocks into cache lines, and a method is needed to determine which main memory block currently occupies a cache line. Three techniques are used: direct, associative, and set associative. The examples assume the following:
* A cache of 64 KBytes
* Data is transferred between main memory and the cache in blocks of 4 bytes each, so the cache is organized as 16K = 2^14 lines of 4 bytes each
* Main memory of 16 MBytes, directly addressable by a 24-bit address (2^24 = 16M), so main memory consists of 4M blocks of 4 bytes each

Direct Mapping
* Each block of main memory maps to only one cache line: cache line # = main memory block # mod number of lines in cache
* Main memory addresses are viewed as three fields:
  * The least significant w bits identify a unique word or byte within a block
  * The most significant s bits specify one of the 2^s blocks of main memory; they are divided into a tag field of s - r bits (most significant) and a line field of r bits, which identifies one of the m = 2^r lines of the cache

| Tag (s - r) | Line or slot (r) | Word (w) |
| --- | --- | --- |
| 8 bits | 14 bits | 2 bits |

* 24-bit address
* 2-bit word identifier (4-byte block)
* 22-bit block identifier, split into an 8-bit tag (22 - 14) and a 14-bit slot or line
* No two blocks that map to the same line have the same tag field
* The contents of the cache are checked by finding the line and checking the tag
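The field widths above can be checked with a short sketch that splits a 24-bit address into tag, line, and word. The addresses 16339C and 00339C (hex) are illustrative values, and `split_direct` is a hypothetical helper. Taking bits 2 to 15 as the line number is the same as computing block number mod 2^14, and the two addresses differ only in their tag, so their blocks compete for the same cache line.

```python
WORD_BITS = 2    # w: selects a byte within a 4-byte block
LINE_BITS = 14   # r: selects one of 2^14 = 16K cache lines
TAG_BITS = 8     # s - r = 22 - 14
ADDRESS_BITS = TAG_BITS + LINE_BITS + WORD_BITS   # 24


def split_direct(address):
    """Split a 24-bit address into (tag, line, word) fields for the direct-mapped example."""
    if not 0 <= address < (1 << ADDRESS_BITS):
        raise ValueError("address must fit in 24 bits")
    word = address & ((1 << WORD_BITS) - 1)
    line = (address >> WORD_BITS) & ((1 << LINE_BITS) - 1)
    tag = address >> (WORD_BITS + LINE_BITS)
    return tag, line, word


for addr in (0x16339C, 0x00339C):
    tag, line, word = split_direct(addr)
    print(f"{addr:06X}: tag={tag:02X} line={line:04X} word={word}")
# 16339C: tag=16 line=0CE7 word=0
# 00339C: tag=00 line=0CE7 word=0
```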

Direct Mapping Cache Organization

Direct Mapping Summary
* Address length = (s + w) bits
* Number of addressable units = 2^(s+w) words or bytes
* Block size = line size = 2^w words or bytes
* Number of blocks in main memory = 2^(s+w) / 2^w = 2^s
* Number of lines in cache = m = 2^r
* Size of tag = (s - r) bits

Direct Mapping Pros and Cons
* Simple
* Inexpensive
* Fixed location for a given block: if a program repeatedly accesses two blocks that map to the same line, the cache miss rate is very high

Associative Mapping
* A main memory block can be loaded into any line of the cache
* A memory address is interpreted as a tag field and a word field
* The tag field uniquely identifies a block of main memory
* To determine whether a block is in the cache, every line's tag is examined simultaneously

Associative Mapping Cache Organization
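The cost of the fixed location noted under direct mapping, and how associative mapping avoids it, can be sketched by counting misses on a pattern that alternates between two blocks. Assumptions for illustration: a tiny cache of 4 lines, blocks identified by block number, and least-recently-used replacement for the associative cache (the notes do not name a replacement policy). The function names are hypothetical.

```python
from collections import OrderedDict


def direct_mapped_misses(blocks, num_lines):
    # Each line remembers which block it holds; since the line index is fixed
    # by the block number, comparing block numbers is equivalent to comparing tags.
    lines = [None] * num_lines
    misses = 0
    for b in blocks:
        i = b % num_lines                    # the only line block b may occupy
        if lines[i] != b:
            misses += 1
            lines[i] = b                     # evict whatever was there
    return misses


def associative_misses(blocks, num_lines):
    lines = OrderedDict()                    # any block may occupy any line
    misses = 0
    for b in blocks:
        if b in lines:
            lines.move_to_end(b)
        else:
            misses += 1
            lines[b] = True
            if len(lines) > num_lines:
                lines.popitem(last=False)    # LRU replacement (an assumption)
    return misses


NUM_LINES = 4
pattern = [1, 5] * 10        # blocks 1 and 5 both map to line 1 when there are 4 lines
print(direct_mapped_misses(pattern, NUM_LINES))   # 20
print(associative_misses(pattern, NUM_LINES))     # 2
```

Under direct mapping every access evicts the other block, so all 20 accesses miss; with associative mapping both blocks stay resident after their first misses.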

Example of Associative Mapping
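For the same 24-bit example, associative mapping has no line field, so an address splits into a 22-bit tag (the whole block number) and a 2-bit word field. The sketch below shows that split and models a lookup; real hardware compares all tags in parallel, whereas the loop here only reproduces the result. The helper names and the contents of `line_tags` are hypothetical.

```python
WORD_BITS = 2                    # 4-byte blocks, as in the running example
TAG_BITS = 24 - WORD_BITS        # 22-bit tag: the whole block number


def split_associative(address):
    """Split a 24-bit address into (tag, word) for a fully associative cache."""
    if not 0 <= address < (1 << (TAG_BITS + WORD_BITS)):
        raise ValueError("address must fit in 24 bits")
    return address >> WORD_BITS, address & ((1 << WORD_BITS) - 1)


def lookup(line_tags, address):
    """Return the index of the line whose tag matches the address, or None on a miss.

    Hardware compares every line's tag simultaneously; this loop only models the outcome.
    """
    tag, _word = split_associative(address)
    for index, line_tag in enumerate(line_tags):
        if line_tag == tag:
            return index
    return None


tag, word = split_associative(0x16339C)
print(f"tag={tag:06X} word={word}")        # tag=058CE7 word=0

line_tags = [None, 0x058CE7, 0x000001]     # hypothetical cache contents (None = empty line)
print(lookup(line_tags, 0x16339E))         # 1: same block as 16339C, different byte
print(lookup(line_tags, 0x000000))         # None: block 0 is not cached
```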

Pentium 4 and PowerPC Cache Organizations

Pentium 4 Cache Organization
* 80386: no on-chip cache
* 80486: 8 KBytes, using 16 bytes/line and a 4-way set-associative organization
* Pentium (all versions): two on-chip L1 caches, one for data and one for instructions
* Pentium 4
  * L1 caches: 8 KBytes, 64 bytes/line, 4-way set associative
  * L2 cache: feeds both L1 caches; 256 KBytes, 128 bytes/line, 8-way set associative
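The line and set counts behind the set-associative figures above can be worked out with a short sketch. In a k-way set-associative cache the lines are grouped into sets of k, and a block maps to set number (block number mod number of sets). The 32-bit address width is an assumption chosen for illustration (the notes do not give one), and `geometry` and `log2_exact` are hypothetical helpers.

```python
def log2_exact(n):
    """Return k such that n == 2**k; cache parameters here are powers of two."""
    if n <= 0 or n & (n - 1):
        raise ValueError(f"{n} is not a power of two")
    return n.bit_length() - 1


def geometry(cache_bytes, line_bytes, ways, address_bits=32):
    """(lines, sets, offset_bits, set_bits, tag_bits) of a k-way set-associative cache.

    address_bits=32 is an assumption for illustration only.
    """
    lines = cache_bytes // line_bytes
    sets = lines // ways                      # a block maps to set (block number mod sets)
    offset_bits = log2_exact(line_bytes)      # selects the byte within a line
    set_bits = log2_exact(sets)               # selects the set
    tag_bits = address_bits - set_bits - offset_bits
    return lines, sets, offset_bits, set_bits, tag_bits


caches = {
    "80486": (8 * 1024, 16, 4),
    "Pentium 4 L1": (8 * 1024, 64, 4),
    "Pentium 4 L2": (256 * 1024, 128, 8),
}
for name, params in caches.items():
    lines, sets, off, idx, tag = geometry(*params)
    print(f"{name:13} lines={lines:5} sets={sets:4} offset={off} set={idx} tag={tag}")
```

With these parameters the 80486 cache has 512 lines in 128 sets, the Pentium 4 L1 cache 128 lines in 32 sets, and the Pentium 4 L2 cache 2048 lines in 256 sets; only the tag widths depend on the assumed address size.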

Pentium 4 Block Diagram
