
2009 International Conference on Signals, Circuits and Systems

Particle Filtering for Bearing-Only Audio-Visual Speaker Detection and Tracking

Andrew Rae, Member, IEEE, Alaa Khamis, Member, IEEE, Otman Basir, Member, IEEE, and Mohamed Kamel, Fellow, IEEE

Abstract: We present a method for audio-visual speaker detection and tracking in a smart meeting room environment based on bearing measurements and particle filtering. Bearing measurements are determined using the Time Difference of Arrival (TDOA) of the acoustic signal reaching a pair of microphones, and by tracking facial regions in images from monocular cameras. A particle filter is used to sample the space of possible speaker locations within the meeting room, and to fuse the bearing measurements from auditory and visual sources. The proposed system was tested in a video messaging scenario, using a single participant seated in front of a screen to which a camera and microphone pair are attached. The experimental results show that the accuracy of speaker tracking using bearing measurements is related to the location of the speaker relative to the locations of the camera and microphones, which can be quantified using a parameter known as Dilution of Precision.

Index Terms: Direction of arrival estimation, acoustic arrays, machine vision, position measurement, Monte Carlo methods

I. INTRODUCTION

SPEAKER tracking has received attention in recent years for its use in smart meeting room and classroom environments to identify and track the active participant. This information is applicable to distributed meetings, where the location of the speaker can be used to zoom in on the speaking person or to select the camera that provides the best view of the speaker [1]. This allows remote meeting participants to observe the facial expressions and gestures of the speaker, thereby facilitating natural human interaction.

Speaker tracking is a relevant problem in the field of multimodal target tracking, as it cannot be addressed using only the audio or only the visual sensing modality [2]. Audio-visual speaker tracking takes advantage of the complementary nature of the audio and visual modalities to track the location of and/or identify a speaking person. For example, the location of the speaker can be determined by TDOA or steered beamforming methods, provided an array of microphones is available [3]. However, sound source localization is degraded in reverberant environments, and is not applicable in the absence of speech. Machine vision can be used to track people in the environment (e.g., [1], [2]); however, it may not be possible to determine decisively which person is speaking at a given time. Fusing auditory and visual measurements should thus enable the speaker to be located with greater reliability than either modality alone.

We propose a method of audio-visual speaker tracking using bearing measurements provided by audio and video subsystems. By positioning cameras and microphone arrays at separate locations throughout the meeting room environment, bearing measurements determined from the data of each sensor are used to estimate the two-dimensional (x-y) position of the speaker in the room. Particle filtering is used to consider multiple location hypotheses for the speaker throughout the environment, which are weighted by the bearing measurements from the audio and visual sources.

The remainder of the paper is organized as follows. Section II describes the bearing-only tracking method based on a particle filtering framework. Section III presents an application of the method to video messaging, Section IV summarizes the preliminary experimental results, and Section V gives conclusions and future research directions.

A. Rae was formerly with the Pattern Analysis and Machine Intelligence (PAMI) research group, Department of Electrical and Computer Engineering, University of Waterloo, Canada. A. Khamis is a Research Assistant Professor with the Department of Electrical and Computer Engineering, University of Waterloo, Canada. O. Basir is Associate Director of the PAMI research group and an Associate Professor in the Department of Electrical and Computer Engineering, University of Waterloo, Canada. M. Kamel is Director of the PAMI research group and a Professor in the Department of Electrical and Computer Engineering, University of Waterloo, Canada.

II. BEARING-ONLY TRACKING METHOD

Our approach to audio-visual speaker tracking is to use bearing measurements to locate and track the speaker in a meeting room environment. The problem is formulated as a two-dimensional tracking problem, in which the x-y position of the speaker in the horizontal plane is desired. Tracking the vertical position of the speaker's face would provide useful information for automatically zooming onto the speaker for more natural remote interaction; however, the vertical position is ignored for simplicity at this time.

A. Notation

In this section we define the symbols and notation used in the audio-visual speaker tracking approach. The horizontal position of the speaker is expressed in a modified polar coordinate system by the state vector $\mathbf{x}_t$, defined in (1).
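$$ \mathbf{x}_t = \begin{bmatrix} \rho_t \\ \theta_t \end{bmatrix} = \begin{bmatrix} 1/r_t \\ \theta_t \end{bmatrix} \qquad (1) $$

Here $\rho_t = 1/r_t$ is the reciprocal of the distance $r_t$ from the origin of the coordinate system to the speaker, and $\theta_t$ is the angular position of the speaker. A standard polar coordinate system would use the distance $r_t$ rather than its reciprocal. The subscript $t$ represents the time index.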


978-1-4244-4398-7/09/$25.00 ©2009 IEEE


The particle filter uses a set of $N$ samples $\{\mathbf{x}_t^i\}_{i=1}^N$ to approximate the posterior probability distribution of the state vector $\mathbf{x}_t$ conditioned on a sequence of measurements $\mathbf{z}_{1:t} = \{\mathbf{z}_1, \ldots, \mathbf{z}_t\}$ [4], as shown in (2). Each particle $i \in [1, N]$ has a weight $w_t^i$ that represents its relative importance within the particle set.

$$ p(\mathbf{x}_t \mid \mathbf{z}_{1:t}) \approx \sum_{i=1}^{N} w_t^i \, \delta(\mathbf{x}_t - \mathbf{x}_t^i) \qquad (2) $$
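The paper reports an average estimated speaker location but does not say how a point estimate is read off the weighted set in (2). A common choice, assumed here, is the weighted mean of the particle positions. The sketch below converts each particle from the modified polar coordinates of (1) to rectangular coordinates before averaging, and assumes (hypothetically) that the polar origin coincides with the origin of the room frame.

```python
import math
from typing import Sequence, Tuple

# A particle in the modified polar coordinates of (1): (rho, theta) with rho = 1 / r.
Particle = Tuple[float, float]


def weighted_mean_position(particles: Sequence[Particle],
                           weights: Sequence[float]) -> Tuple[float, float]:
    """Point estimate of the speaker's (x, y) position from the weighted set in (2).

    Each particle is converted to rectangular coordinates before averaging, so the
    estimate is a weighted mean of positions rather than of inverse ranges.
    Assumes the polar origin is the origin of the rectangular frame.
    """
    total = sum(weights)
    if total <= 0.0:
        raise ValueError("weights must have a positive sum")
    x_sum = y_sum = 0.0
    for (rho, theta), w in zip(particles, weights):
        r = 1.0 / rho                      # recover range from inverse range
        x_sum += w * r * math.cos(theta)
        y_sum += w * r * math.sin(theta)
    return x_sum / total, y_sum / total


# Two equally weighted particles at ranges 1 m and 2 m along theta = 0.
print(weighted_mean_position([(1.0, 0.0), (0.5, 0.0)], [0.5, 0.5]))  # (1.5, 0.0)
```

Averaging $\rho$ and $\theta$ directly would instead average inverse ranges, which biases the range estimate toward nearby particles; converting first avoids that.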

The measurement vector $\mathbf{z}_t$ contains the bearing measurements from both the audio and video modalities at time instant $t$. Let $M$ be the number of microphone pairs, and let $K$ be the number of cameras. The locations of the microphones and cameras are assumed to be known a priori. An estimate of the bearing to the sound source is made for each microphone pair $m \in [1, M]$ based on the time difference of arrival of the sound signal at each microphone in the pair. This bearing estimate is relative to the midpoint between the two microphones in the pair [2]. We denote this bearing estimate as $\phi_t^m$.

Bearing estimates to the various meeting participants are made by tracking the participants visually in the images provided by each camera. Each camera system $k \in [1, K]$ tracks multiple participants independently of the other camera systems, providing $L_t^k$ bearing estimates, one for each tracked participant. The set of bearing estimates from camera $k$ is denoted $\Psi_t^k = \{\psi_t^{k,\ell}\}_{\ell=1}^{L_t^k}$.

The measurement vector $\mathbf{z}_t$ is defined in (3) as the set of bearing measurements from all $M$ microphone pairs and all $K$ camera systems.

$$ \mathbf{z}_t = \left[ \phi_t^1, \ldots, \phi_t^M, \Psi_t^1, \ldots, \Psi_t^K \right]^T \qquad (3) $$

Fig. 1 shows an example meeting room configuration. One pair of microphones ($M = 1$) provides a bearing measurement $\phi_t$ to the speaker using TDOA, while two camera systems ($K = 2$) give bearing estimates $\Psi_t^1$ and $\Psi_t^2$ to the tracked participants.

B. Particle Filter Tracker

A particle filtering approach is used to track the location of the speaking participant by fusing the bearing measurements from the auditory and visual modalities. The following discusses the proposed method, including models for the bearing measurements and the system state dynamics, as well as the use of boundary constraints imposed by the restricted meeting room space.

A Sampling Importance Resampling (SIR) particle filter is used. The importance density from which the particles are sampled is chosen as the state transition probability $p(\mathbf{x}_t \mid \mathbf{x}_{t-1})$, shown in (4).
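Due to this choice of importance density, the weight of each particle $i \in [1, N]$ can be updated recursively using the measurement probability $p(\mathbf{z}_t \mid \mathbf{x}_t)$ [4], as shown in (5).

$$ \mathbf{x}_t^i \sim p(\mathbf{x}_t \mid \mathbf{x}_{t-1}^i) \qquad (4) $$

$$ w_t^i \propto w_{t-1}^i \, p(\mathbf{z}_t \mid \mathbf{x}_t^i) \qquad (5) $$

[Figure: meeting room layout with Camera 1, Camera 2, the microphone pair, the meeting table, the speaker and a non-speaker; bearing measurements $\phi_t$, $\psi_t^{1,1}$, $\psi_t^{1,2}$, $\psi_t^{2,1}$ and $\psi_t^{2,2}$ are drawn from the sensors.]

Fig. 1. An example meeting room configuration with one microphone pair and two camera systems. Bearing measurements for both auditory and visual modalities are shown.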
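The weight update (5) and the resampling step of an SIR filter can be sketched as follows. This is a minimal sketch, not the authors' code: the paper names the SIR filter but not a resampling scheme, so systematic resampling is an assumed choice here, as is the uniform-weight fallback when every likelihood is zero.

```python
import random
from typing import List, Sequence


def update_weights(prev_weights: Sequence[float],
                   likelihoods: Sequence[float]) -> List[float]:
    """Weight update (5): w_t^i proportional to w_{t-1}^i * p(z_t | x_t^i), then normalized."""
    unnormalized = [w * l for w, l in zip(prev_weights, likelihoods)]
    total = sum(unnormalized)
    if total <= 0.0:
        # Every particle received zero likelihood; reset to uniform weights.
        # This fallback is an assumption, not part of the original method.
        n = len(unnormalized)
        return [1.0 / n] * n
    return [u / total for u in unnormalized]


def systematic_resample(weights: Sequence[float], rng: random.Random) -> List[int]:
    """Return N ancestor indices drawn by systematic resampling.

    One uniform offset is drawn and N evenly spaced points are compared against the
    cumulative weights, so a particle with weight w is copied about N * w times.
    """
    n = len(weights)
    offset = rng.random() / n
    indices: List[int] = []
    cumulative = weights[0]
    j = 0
    for i in range(n):
        u = offset + i / n
        # Advance to the first particle whose cumulative weight reaches u;
        # the j < n - 1 guard absorbs floating-point round-off in the sum.
        while u > cumulative and j < n - 1:
            j += 1
            cumulative += weights[j]
        indices.append(j)
    return indices


rng = random.Random(0)
w = update_weights([0.25] * 4, [0.0, 3.0, 1.0, 0.0])   # -> [0.0, 0.75, 0.25, 0.0]
idx = systematic_resample(w, rng)
# After resampling, every weight is reset to 1 / N for the next iteration.
```

Systematic resampling needs only one random draw per step and has lower variance than drawing $N$ independent multinomial samples, which is why it is a common default; any unbiased scheme would fit the SIR framework.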

Implementing this particle filter thus requires that the two probability densities $p(\mathbf{x}_t \mid \mathbf{x}_{t-1})$ and $p(\mathbf{z}_t \mid \mathbf{x}_t)$ be defined. A state transition model and a measurement model are used to define these probabilities.

In modeling the state transition, we assume that the speaker position remains constant between filter iterations and that any change in position is the result of additive noise $\boldsymbol{\nu}_t$. The noise term $\boldsymbol{\nu}_t$ is assumed to have a zero-mean Gaussian distribution with covariance matrix $Q_t$, and is defined in the modified polar coordinate system. The value of each particle $i \in [1, N]$ is updated as in (6) with a sample $\boldsymbol{\nu}_t^i$ drawn from the Gaussian distribution shown in (7). The components of $\boldsymbol{\nu}_t$ are assumed to be independent, as indicated by the covariance matrix $Q_t$ being chosen as diagonal in (8).

$$ \mathbf{x}_t^i = \mathbf{x}_{t-1}^i + \boldsymbol{\nu}_t^i \qquad (6) $$

$$ \boldsymbol{\nu}_t^i \sim \mathcal{N}(\boldsymbol{\nu}_t; \mathbf{0}, Q_t) \qquad (7) $$

$$ Q_t = \begin{bmatrix} \sigma_\rho^2 & 0 \\ 0 & \sigma_\theta^2 \end{bmatrix} \qquad (8) $$
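The random-walk transition (6)-(8), combined with the room-boundary rule described in the next paragraph (redraw the noise until the particle lies inside the room), can be sketched as below. The rectangular room bounds, the noise levels and the `max_tries` safety cap are hypothetical; the paper simply repeats the draw until it succeeds.

```python
import math
import random
from typing import Tuple

State = Tuple[float, float]               # (rho, theta) as in (1), rho = 1 / r
Room = Tuple[float, float, float, float]  # hypothetical (x_min, x_max, y_min, y_max) in metres


def to_rect(state: State) -> Tuple[float, float]:
    """Convert (rho, theta) to (x, y), assuming the polar origin is the room frame origin."""
    rho, theta = state
    r = 1.0 / rho
    return r * math.cos(theta), r * math.sin(theta)


def inside_room(state: State, room: Room) -> bool:
    rho, _ = state
    if rho <= 0.0:            # a non-positive inverse range is not a valid position
        return False
    x, y = to_rect(state)
    x_min, x_max, y_min, y_max = room
    return x_min <= x <= x_max and y_min <= y <= y_max


def propagate(state: State, sigma_rho: float, sigma_theta: float,
              room: Room, rng: random.Random, max_tries: int = 1000) -> State:
    """Random-walk transition (6)-(8) with diagonal Q_t, redrawn until inside the room."""
    for _ in range(max_tries):
        # Independent Gaussian noise per component, matching the diagonal Q_t in (8).
        candidate = (state[0] + rng.gauss(0.0, sigma_rho),
                     state[1] + rng.gauss(0.0, sigma_theta))
        if inside_room(candidate, room):
            return candidate
    # Safety cap (an assumption): keep the previous state if no valid draw was found.
    return state


room = (-2.0, 2.0, 0.2, 3.0)
rng = random.Random(1)
particle = (1.0, math.pi / 2)   # 1 m straight ahead of the origin
moved = propagate(particle, sigma_rho=0.05, sigma_theta=0.05, room=room, rng=rng)
print(inside_room(moved, room))  # True
```

Because the noise is added in $(\rho, \theta)$, a fixed $\sigma_\rho$ produces larger metric jumps for distant particles than for nearby ones.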

As the meeting room is a known environment with known dimensions, the particle set is constrained to lie within the boundaries of the room. This is achieved by testing each sample $\boldsymbol{\nu}_t^i$ drawn from the noise distribution in (7) to ensure that the new particle location $\mathbf{x}_t^i$ is within the room boundaries. If $\mathbf{x}_t^i$ is outside the room boundaries, a new sample $\boldsymbol{\nu}_t^i$ is drawn. This process repeats until $\mathbf{x}_t^i$ is within the room boundaries.

The bearing measurements $\phi_t$ and $\psi_t$ from the audio and video systems, respectively, are modeled as functions of the state vector $\mathbf{x}_t$. The bearing measurements are relative to the positions and orientations of the microphone pairs and cameras. For each such sensor, a reference point and a unit vector are needed to define, respectively, the position of the sensor and the direction of a measured angle of zero. This reference point and unit vector are defined in the rectangular coordinate system. The modeling of a bearing measurement from each of these sensors to each particle is simplified by also converting the particle set $\{\mathbf{x}_t^i\}_{i=1}^N$ to the rectangular coordinate system. This conversion is necessary since the origin of the polar coordinate system used to model the system dynamics is in general different from the reference point of the bearing measurement sensors. For a sensor with reference point $p = \{p_x, p_y\}$ and unit vector $u = \{u_x, u_y\}$, $h(\mathbf{x}_t^i, p, u)$ is the model of the bearing estimate to particle $\mathbf{x}_t^i$, with rectangular coordinates $(x_t^i, y_t^i)$, and is given by (9).

$$ h(\mathbf{x}_t^i, p, u) = \operatorname{atan2}\left(x_t^i - p_x,\; y_t^i - p_y\right) - \operatorname{atan2}\left(u_x, u_y\right) \qquad (9) $$
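The bearing model (9), together with the conversion of a particle from modified polar to rectangular coordinates, can be sketched as follows. The location of the polar origin in the room frame is a hypothetical parameter; the paper only states that it generally differs from the sensor reference points.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def polar_to_rect(rho: float, theta: float, origin: Point = (0.0, 0.0)) -> Point:
    """Map a particle (rho, theta) of (1) to rectangular (x, y).

    `origin` is the (hypothetical) location of the polar origin in the room frame.
    """
    r = 1.0 / rho
    return origin[0] + r * math.cos(theta), origin[1] + r * math.sin(theta)


def predicted_bearing(xy: Point, p: Point, u: Point) -> float:
    """Bearing model h of (9) for a sensor at reference point p with zero-angle direction u.

    atan2 is called as atan2(dx, dy), exactly as written in (9), so both angles are
    measured from the +y axis toward +x; their difference is the bearing relative to u.
    The result is not wrapped and may fall outside (-pi, pi].
    """
    return (math.atan2(xy[0] - p[0], xy[1] - p[1])
            - math.atan2(u[0], u[1]))


# A sensor at the origin whose zero-angle direction is +x sees a point on the +x axis
# at 0 rad and a point on the +y axis at -pi/2 rad.
sensor, u_dir = (0.0, 0.0), (1.0, 0.0)
print(predicted_bearing((2.0, 0.0), sensor, u_dir))
```

Each microphone pair and each camera gets its own `p` and `u`, so the same particle yields a different predicted bearing for every sensor.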


The bearing measurements provided by audio and video have different probabilistic models, but use the same prediction given by (9). Bearing measurements from a microphone pair are modeled as Gaussian in (10), which gives more emphasis to particles located near the measured bearing vector. As a camera system can provide bearing measurements to multiple targets, a sum-of-Gaussians model is used, shown in (11). Particles that are located near any of the measured bearing vectors will be emphasized.

$$ p(\phi_t \mid \mathbf{x}_t^i) = \mathcal{N}\left(\phi_t - h(\mathbf{x}_t^i, p, u);\, 0, \sigma_\phi^2\right) \qquad (10) $$

$$ p(\Psi_t \mid \mathbf{x}_t^i) = \sum_{\ell=1}^{L_t} \mathcal{N}\left(\psi_t^{\ell} - h(\mathbf{x}_t^i, p, u);\, 0, \sigma_\psi^2\right) \qquad (11) $$

By assuming independence of the various bearing measurements, the full measurement probability is given by the product in (12). This expression provides the means of updating the weight of each particle using (5).

$$ p(\mathbf{z}_t \mid \mathbf{x}_t^i) = \prod_{m=1}^{M} p(\phi_t^m \mid \mathbf{x}_t^i) \prod_{k=1}^{K} p(\Psi_t^k \mid \mathbf{x}_t^i) \qquad (12) $$
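The measurement model (10)-(12) for a single particle can be sketched as below. Two details are assumptions not stated in the paper: angle differences are wrapped to $[-\pi, \pi)$ before evaluating the Gaussian, and a camera that currently reports no face candidates is skipped, since its empty sum in (11) would otherwise set every particle's weight to zero. The noise levels used in the example are hypothetical.

```python
import math
from typing import Sequence, Tuple


def wrap_angle(a: float) -> float:
    """Wrap an angle difference to [-pi, pi). Wrapping is an added assumption."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def normal_pdf(x: float, sigma: float) -> float:
    """Zero-mean Gaussian density N(x; 0, sigma^2)."""
    return math.exp(-0.5 * (x / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))


def audio_likelihood(phi: float, h_pred: float, sigma_phi: float) -> float:
    """(10): one Gaussian around the measured microphone-pair bearing."""
    return normal_pdf(wrap_angle(phi - h_pred), sigma_phi)


def video_likelihood(psis: Sequence[float], h_pred: float, sigma_psi: float) -> float:
    """(11): unnormalized sum of Gaussians, one per tracked face candidate."""
    return sum(normal_pdf(wrap_angle(psi - h_pred), sigma_psi) for psi in psis)


def measurement_likelihood(audio: Sequence[Tuple[float, float]],
                           video: Sequence[Tuple[Sequence[float], float]],
                           sigma_phi: float, sigma_psi: float) -> float:
    """(12): product over microphone pairs and cameras, assuming independent measurements.

    audio: (phi_m, h_m) per microphone pair; video: (candidate bearings, h_k) per camera,
    where h_m and h_k are the predicted bearings (9) of one particle for that sensor.
    """
    p = 1.0
    for phi, h_pred in audio:
        p *= audio_likelihood(phi, h_pred, sigma_phi)
    for psis, h_pred in video:
        if not psis:
            # Assumption: skip a camera with no face candidates instead of zeroing p.
            continue
        p *= video_likelihood(psis, h_pred, sigma_psi)
    return p


# A particle whose predicted bearings match the measurements outweighs one that does not.
good = measurement_likelihood([(1.2, 1.2)], [([0.4, 1.6], 1.6)], 0.1, 0.1)
bad = measurement_likelihood([(1.2, 0.6)], [([0.4, 1.6], 1.0)], 0.1, 0.1)
```

The product in (12) is what makes particles near intersections of bearing lines dominate: a particle must agree with every sensor at once to keep a high weight.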

Fig. 2. Illustration of the convergence of the particle set to the intersection points of the bearing measurement vectors.

Particles that lie near the intersection of two or more bearing measurement vectors from two or more sensors receive high weights, and therefore have a high probability of being resampled. The particle set thus converges to a region where multiple bearing measurement vectors intersect, which should correspond to the location of the speaker. Fig. 2 illustrates this convergence. Fig. 2a shows an initial particle set uniformly distributed over the meeting room environment. After five iterations of the filter, the particle set converges to the area shown in Fig. 2b. The particle set is now concentrated around the intersection of the audio bearing measurement and one of the visual bearing measurements, providing an estimate of the speaker position using only bearing measurements.

III. A VIDEO MESSAGING APPLICATION

In this section we present an application of the proposed tracking method in a reduced-scope situation involving video messaging. One microphone pair ($M = 1$) and one camera ($K = 1$) are used to detect and track the speaker in an area in front of a computer display. The microphones and camera are attached to the display. This situation is common to video messaging applications such as Skype. This section is organized as follows. First, the experimental setup is described in Section III-A. Second, bearing measurements from audio and video are discussed in Section III-B and Section III-C, respectively.

A. Equipment Setup

We use two Logitech QuickCam Fusion web cameras with integrated microphones to provide sensory data. These are attached to a computer monitor. Audio signals from the two microphones are used to determine the angular position of the speaker relative to a reference point equidistant from the two microphones. Images from the camera on the left are used to measure the angular location of the meeting participants. Images from the camera are acquired at 30 frames per second with a resolution of 320 × 240 pixels, and the microphone audio signals are sampled at 44.1 kHz. The Image Acquisition Toolbox and Data Acquisition Toolbox in MATLAB are used for this purpose. Video and audio are acquired simultaneously. Data sets are collected and processed offline in order to precisely synchronize the audio channels, as discussed in the following section.

B. Audio Bearing Measurements

Estimating the bearing to the speaker using audio data is performed in three stages: synchronizing the audio channels, measuring the Time Difference of Arrival (TDOA) of the sound signal between channels, and finally calculating the bearing estimate from this TDOA measurement.

Samples acquired from the two microphones at the same time instant are not synchronized, as each audio channel has a unique, unknown time delay. To synchronize the audio channels, a signal with a known frequency is used. At the beginning of each data set, a 2 kHz tone is output for 0.1 seconds by a computer speaker equidistant from the two microphones. The acquired audio signals are time-shifted to synchronize with the beginning of this pulse. The need to synchronize with an external signal is the principal reason for performing testing offline using stored data.

The next step is to measure the TDOA of speech signals reaching the two microphones. The cross-correlation of the two audio signals within a fixed time window is used to estimate this time difference. The window size is set to $T = 33.3$ ms, equal to the time between video frames, so that bearing estimates from both audio and video are available 30 times per second. The audio signal $s$ is recorded by both microphones $m_1$ and $m_2$, with a time delay $D$ in $m_2$ compared with $m_1$. The signals $s_1$ and $s_2$ from the two microphones contain additive noise terms $n_1$ and $n_2$, as shown in (13)-(14). The time delay is estimated from the peak in the cross-correlation of $s_1$ and $s_2$ using (15); the cross-correlation $R_{12}(\tau)$ is estimated using (16) [5].

$$ s_1(t) = s(t) + n_1(t) \qquad (13) $$

$$ s_2(t) = s(t + D) + n_2(t) \qquad (14) $$

$$ \hat{D} = \arg\max_{\tau} R_{12}(\tau) \qquad (15) $$

$$ R_{12}(\tau) = \frac{1}{T} \int_{t_0 - T}^{t_0} s_1(t)\, s_2(t - \tau)\, dt \qquad (16) $$

Having estimated the time delay between the audio channels, the bearing to the sound source (the speaker) can be calculated. The bearing estimate is relative to the point $p_m$ midway between the two microphones, whose positions are given by $m_1$ and $m_2$. The bearing angle to the speaker is given by (17), where $v$ is the speed of sound [2]. This expression is an approximation, valid when the separation between the microphones is small compared with the distance $r$ to the sound source, $r \gg |m_1 - m_2|$ [2].

$$ \phi = \arccos \frac{\hat{D}\, v}{|m_1 - m_2|} \qquad (17) $$

C. Video Bearing Measurements

Bearing measurements from video are made in two stages: first, by detecting or tracking the location of meeting participants in the image, and second, by converting this image location into a bearing estimate relative to the camera location. The heads of the participants are detected using skin-region segmentation. This detection is performed once per second (every 30 frames) to reinitialize the candidates. Between detections, the candidate regions are tracked using the mean shift method [6]. The horizontal image position of the center of each candidate region is transformed into a bearing estimate to that candidate in the world coordinate system relative to the camera.

1) Face Candidate Detection: Skin region segmentation is performed in the YCbCr color space. Skin color has been shown to cluster more tightly in chrominance color spaces such as YCbCr, simplifying skin region detection for a wide array of individuals and illumination conditions [7]. A multivariate Gaussian model of skin color is created in the Cb and Cr image channels with mean $\mu_s$ and covariance matrix $\Sigma_s$, given in (18)-(19). A binary skin image $B$ is created by classifying each pixel $(i, j)$ as skin (1) or non-skin (0) using the Mahalanobis distance criterion in (20). Fig. 3b shows the result of this segmentation on an example image.

$$ \mu_s = \begin{bmatrix} \mu_{Cb} \\ \mu_{Cr} \end{bmatrix} \qquad (18) $$

$$ \Sigma_s = \begin{bmatrix} \sigma_{Cb}^2 & 0 \\ 0 & \sigma_{Cr}^2 \end{bmatrix} \qquad (19) $$

$$ B(i, j) = \begin{cases} 1 & \text{if } D_M\left( \begin{bmatrix} Cb(i,j) \\ Cr(i,j) \end{bmatrix}; \mu_s, \Sigma_s \right) < 3 \\ 0 & \text{otherwise} \end{cases} \qquad (20) $$

A morphological opening operation followed by a closing operation is used to remove spurious pixels from the binary image and to fill in gaps in detected regions, respectively. The remaining connected components in the binary image are tested against size and aspect ratio criteria to filter out regions unlikely to be face regions. Components must have a height and width of at least 20 pixels, and an aspect ratio (ratio of component width to height) between 0.5 and 1. The remaining components represent the face candidates, as shown in Fig. 3c. An ellipse is defined for each candidate with major axis equal to the height of the component, minor axis equal to the width of the component, and centered at the centroid of the component. The final candidate ellipse regions are illustrated in Fig. 3d. The number of face candidates for a given camera $k \in [1, K]$ is denoted $L_t^k$.

TABLE I
VALUE OF BEARING MEASUREMENT FOR CERTAIN KEY HORIZONTAL IMAGE POSITIONS

Horizontal image position (pixels) | Bearing measurement (radians)
$0$ | $\pi/2 - \alpha/2$
$W/2$ | $\pi/2$
$W$ | $\pi/2 + \alpha/2$

Fig. 3. Face region detection. (a) Original image. (b) Skin pixel segmentation result. (c) Skin regions after morphological filtering. (d) Elliptical face candidates overlaid on original image.

[Figure: (a) two face candidates, labeled Candidate 1 and Candidate 2; (b) the corresponding bearing measurements $\psi_t^1$ and $\psi_t^2$ within the camera's field of view.]

Fig. 4. Visual bearing measurements. (a) Elliptical face candidates overlaid on original image. (b) Bearing measurements resulting from these face candidates.

2) Mean Shift Tracking of Face Candidates: Face candidate detection is performed using only one frame per second. For the remaining 29 frames acquired during that second, the detected face candidates are tracked using the mean shift technique [6]. This technique uses a candidate region from the previous frame as a model, and searches the new frame for a region matching the model. In our implementation, the model of face candidate $\ell \in [1, L_t^k]$ is the color histogram $q^{k,\ell}$ of the pixels within the elliptical region defined by the segmentation method described in Section III-C1. These regions are also illustrated in Fig. 3d. The model $q^{k,\ell}$ is kept constant between frames; it is not updated in each successive frame. Updating the model can improve tracking robustness if the appearance of the tracked object changes over time. However, it can also allow the tracker to diverge to another object. In our system, the participants being tracked will generally be looking at the screen, and thus their facial appearance will not change significantly. Keeping the model $q^{k,\ell}$ constant ensures that the image region most similar to the detected face region will be tracked in each frame.

To accelerate the convergence of the mean shift tracker, a crude motion model is used to predict the position of the face region in the new frame. Denoting the center of elliptical face candidate $\ell$ of camera $k$ at time $t$ by $c_t^{k,\ell} = \{c_{i,t}^{k,\ell}, c_{j,t}^{k,\ell}\}$, the predicted location of this center point in the frame at time $t + 1$ is given by (21). This equation assumes the center point changes by the same amount as in the previous frame; this change is given by (22).

$$ \hat{c}_{t+1}^{k,\ell} = c_t^{k,\ell} + \Delta c_t^{k,\ell} \qquad (21) $$

$$ \Delta c_t^{k,\ell} = c_t^{k,\ell} - c_{t-1}^{k,\ell} \qquad (22) $$
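Returning to the audio bearing measurements of Section III-B, the delay estimate (15)-(16) and the bearing formula (17) can be sketched in discrete time as follows. This is a minimal sketch using the plain cross-correlation of (16) over one window (not a generalized cross-correlation weighting); the microphone separation, the 343 m/s speed of sound and the clamping of the `acos` argument are assumptions. The search range `max_lag` can be bounded by $|m_1 - m_2| f_s / v$ samples, since no physical delay can exceed that.

```python
import math
import random
from typing import Sequence


def cross_correlation(s1: Sequence[float], s2: Sequence[float], lag: int) -> float:
    """Discrete version of (16): R12(k) = (1/N) * sum_n s1[n] * s2[n - k].

    Terms where n - k falls outside the window are skipped (treated as zero).
    """
    n = len(s1)
    total = 0.0
    for i in range(n):
        j = i - lag
        if 0 <= j < len(s2):
            total += s1[i] * s2[j]
    return total / n


def estimate_delay(s1: Sequence[float], s2: Sequence[float], max_lag: int) -> int:
    """(15): the lag, in samples, that maximizes the cross-correlation over |k| <= max_lag."""
    return max(range(-max_lag, max_lag + 1),
               key=lambda k: cross_correlation(s1, s2, k))


def tdoa_bearing(delay_s: float, mic_separation_m: float,
                 speed_of_sound: float = 343.0) -> float:
    """(17): far-field bearing in radians, measured from the microphone axis."""
    c = delay_s * speed_of_sound / mic_separation_m
    # Noise can push |c| slightly past 1; clamping keeps acos defined (an assumption).
    c = max(-1.0, min(1.0, c))
    return math.acos(c)


# Synthetic check: s2 is s shifted by 3 samples, i.e. s2[n] = s[n + 3] as in (14).
rng = random.Random(1)
s = [rng.gauss(0.0, 1.0) for _ in range(203)]
s1, s2 = s[:200], s[3:203]
d = estimate_delay(s1, s2, max_lag=10)
bearing = tdoa_bearing(d / 44100.0, mic_separation_m=0.2)
```

At 44.1 kHz one sample corresponds to about 7.8 mm of path difference, so the bearing resolution of this integer-lag estimate is coarse near endfire and finest near broadside ($\phi = \pi/2$).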
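The skin classification of Section III-C1, (18)-(20), can be sketched per pixel as below. The paper does not state its RGB-to-YCbCr conversion, so the full-range (JPEG/JFIF) BT.601 coefficients are an assumption, and the skin mean and variances in the example are hypothetical placeholders; real values would be fitted from labeled skin pixels.

```python
import math
from typing import List, Sequence, Tuple

RGB = Tuple[float, float, float]


def rgb_to_cbcr(r: float, g: float, b: float) -> Tuple[float, float]:
    """Full-range (JPEG/JFIF) BT.601 chrominance; an assumed conversion."""
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return cb, cr


def mahalanobis_diag(cb: float, cr: float, mu: Tuple[float, float],
                     var: Tuple[float, float]) -> float:
    """D_M of (20) for the diagonal covariance of (19)."""
    return math.sqrt((cb - mu[0]) ** 2 / var[0] + (cr - mu[1]) ** 2 / var[1])


def skin_mask(image: Sequence[Sequence[RGB]], mu: Tuple[float, float],
              var: Tuple[float, float], threshold: float = 3.0) -> List[List[int]]:
    """Binary skin image B of (20): 1 where D_M < threshold, 0 otherwise."""
    return [[1 if mahalanobis_diag(*rgb_to_cbcr(*px), mu, var) < threshold else 0
             for px in row]
            for row in image]


# Hypothetical skin model: mean (Cb, Cr) = (105, 155), variances 50 in each channel.
mask = skin_mask([[(200, 150, 120), (0, 0, 255)]], (105.0, 155.0), (50.0, 50.0))
```

With a diagonal $\Sigma_s$, the threshold of 3 in (20) accepts pixels inside an axis-aligned ellipse in the Cb-Cr plane; the morphological filtering and size tests described in Section III-C1 then remove isolated false positives.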

3) Bearing Estimation: The image locations of the face candidates are converted to bearing estimates relative to the position of the camera in the world coordinate system. As we are only interested in tracking the speaker in the horizontal plane, only the horizontal image position $c_{j,t}^{k,\ell}$ is required. This image position is transformed into an angular measurement by (23), where $W = 320$ pixels is the width of the image and $\alpha = 78°$ is the field of view of the camera.

$$ \psi_t^{k,\ell} = \arctan\left( \frac{1}{\left(1 - \dfrac{c_{j,t}^{k,\ell}}{0.5W}\right)\tan\dfrac{\alpha}{2}} \right) \qquad (23) $$

Here the arctangent is taken in the range $(0, \pi)$, so (23) is equivalent to $\psi_t^{k,\ell} = \pi/2 - \arctan\left((1 - c_{j,t}^{k,\ell}/0.5W)\tan(\alpha/2)\right)$ and is continuous at $c_{j,t}^{k,\ell} = W/2$.

Equation (23) maps the horizontal image position of a face candidate to an angular measurement of bearing. It is similar to an equation given in [2] used to map a bearing estimate from TDOA to an image coordinate. The range of bearing measurements possible using this method is limited by the field of view $\alpha$ of the camera. Table I gives the value of the bearing measurement for certain horizontal image positions. Fig. 4a shows two face candidates, which result in the bearing estimates $\psi_t^1$ and $\psi_t^2$ shown in Fig. 4b; also shown is the field of view of the camera.

IV. EXPERIMENTAL RESULTS

Results for audio-visual tracking of the active speaker in a video messaging application are provided in this section. At this time, experimental results are available only for the single-participant case. This work will be extended to the multiple-speaker case in the future.

Audio and video experimental data were collected while the speaker was positioned at a set of known positions relative to the screen on which the camera and microphones are mounted. The test positions can be separated into two groups. Group 1 has a fixed lateral position of x = 0 m, while the distance from the monitor is varied from y = 0.5 m to y = 2.0 m. Group 2 is at a fixed distance of y = 1.0 m from the monitor, while the lateral position is varied from x = -0.4 m to x = 0.4 m. While the speaker was sitting at each test position, 10 seconds of audio and video data were recorded. The average estimated speaker location was determined for each data set using offline processing. Knowing the ground-truth speaker position for each data set, the average localization error for each data set was calculated.

The average errors for the positions in Group 1 are shown in Fig. 5a. Shown in Fig. 5b is a parameter called Dilution of Precision (DOP), calculated for each of the speaker locations. This parameter indicates how the geometry of the sensors affects localization accuracy. DOP is used by Global Positioning System (GPS) receivers to describe the effect that satellite geometry has on the accuracy of the computed receiver position [8]. A low DOP value indicates that the satellite geometry is favorable, while a high DOP value indicates that the geometry is poor.
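To make the DOP idea concrete for bearing sensors, here is a minimal sketch that assumes the GPS-style definition $\text{DOP} = \sqrt{\operatorname{tr}((G^T G)^{-1})}$, with one line-of-sight direction cosine pair per sensor (the form the paper uses appears in (24)-(25)). The sensor layout in the example is hypothetical and is not the geometry of the reported experiments.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def bearing_dop(sensors: Sequence[Point], target: Point) -> float:
    """GPS-style DOP = sqrt(tr((G^T G)^-1)) for bearing sensors.

    Each row of G is the unit line-of-sight vector [cos(beta_j), sin(beta_j)] from
    sensor j to the target. For two sensors G is square, and stacking these vectors as
    rows or as columns gives the same value, since tr((G^T G)^-1) = tr((G G^T)^-1)
    for any invertible square G.
    """
    a = b = c = 0.0  # entries of the symmetric 2x2 matrix G^T G = [[a, b], [b, c]]
    for sx, sy in sensors:
        beta = math.atan2(target[1] - sy, target[0] - sx)
        cos_b, sin_b = math.cos(beta), math.sin(beta)
        a += cos_b * cos_b
        b += cos_b * sin_b
        c += sin_b * sin_b
    det = a * c - b * b
    if det <= 1e-12:
        # Collinear lines of sight: the bearings do not fix a unique position.
        return math.inf
    # The trace of the inverse of [[a, b], [b, c]] is (a + c) / det.
    return math.sqrt((a + c) / det)


# Hypothetical layout: camera and microphone midpoint 0.2 m apart on a monitor at y = 0.
sensors = [(-0.1, 0.0), (0.1, 0.0)]
near = bearing_dop(sensors, (0.0, 1.0))
far = bearing_dop(sensors, (0.0, 2.0))
```

As the speaker moves away from closely spaced sensors, the two lines of sight become nearly parallel, $\det(G^T G)$ shrinks and DOP grows, which is the qualitative trend the experiments relate to localization error.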


[Figure 5: (a) localization error for Group 1 test positions, average x and y error (meters) vs. distance from monitor (meters); (b) dilution of precision for Group 1 test positions vs. distance from monitor (meters).]

[Figure 6: (a) localization error for Group 2 test positions, average x and y error (meters) vs. lateral position (meters); (b) dilution of precision for Group 2 test positions vs. lateral position (meters).]

Fig. 5. (a) Position estimation error for Group 1 positions, where distance from the monitor was varied. (b) Dilution of precision for Group 1 positions.

Fig. 6. (a) Position estimation error for Group 2 positions, where lateral position was varied. (b) Dilution of precision for Group 2 positions.

To calculate DOP, we must first calculate the direction cosine matrix $G$ for the current sensor configuration, as shown in (24), where $\beta_1$ and $\beta_2$ denote the directions of the lines of sight from the two sensors (microphone pair and camera) to the speaker. DOP is calculated from $G$ as shown in (25). Further information on the calculation of DOP in the context of GPS can be found in [8].

$$ G = \begin{bmatrix} \cos\beta_1 & \cos\beta_2 \\ \sin\beta_1 & \sin\beta_2 \end{bmatrix} \qquad (24) $$

$$ \text{DOP} = \sqrt{\operatorname{tr}\left( (G^T G)^{-1} \right)} \qquad (25) $$

It can be seen in Fig. 5b that DOP increases as the speaker is located farther from the screen. Localization error also increases, particularly in the y direction. The average errors for the positions in Group 2 are shown in Fig. 6a, and the DOP values in Fig. 6b. The minimum values of both localization error and DOP occur when the speaker is at a lateral position of x = 0 m. Error and DOP both tend to increase as the speaker moves laterally in either the positive or negative x direction. The apparent relationship between DOP and localization error suggests that a better arrangement of sensors could be found that improves localization accuracy.

V. CONCLUSION

This paper has presented a novel approach to audio-visual speaker tracking in a smart meeting room environment that uses bearing measurements from audio and video to triangulate the position of the speaker. Bearing measurements from audio are calculated using the Time Difference of Arrival (TDOA) of speech signals reaching each pair of microphones in a microphone array. A machine vision algorithm detects participants' faces using skin color segmentation and tracks the segmented regions using mean shift kernel tracking. The tracked skin regions are transformed into bearing measurements relative to the location of the camera in the world coordinate system. A particle filter uses the bearing measurements from audio and video to weight the particles spread around the meeting room environment. The particle set converges to the position in the meeting room where the bearing measurements intersect, thus triangulating the position of the speaker.

Future extensions to this work will test how well the proposed method tracks multiple participants. Eventually, we would like to scale this approach up to a meeting room environment using multiple cameras and microphone pairs to detect and track multiple meeting participants.

ACKNOWLEDGMENT

This work has been supported by the Ontario Research Fund - Research Excellence (ORE-RE) program through the project MUltimodal SurvEillance System for SECurity-RElaTed applications (MUSES SECRET), funded by the Government of Ontario, Canada.

REFERENCES

[1] D. Gatica-Perez, G. Lathoud, J.-M. Odobez, and I. McCowan, "Audiovisual Probabilistic Tracking of Multiple Speakers in Meetings," IEEE Trans. Audio, Speech and Language Processing, vol. 15, no. 2, pp. 601-616, 2007.
[2] Y. Chen and Y. Rui, "Real-Time Speaker Tracking Using Particle Filter Sensor Fusion," Proceedings of the IEEE, vol. 92, no. 3, pp. 485-494, 2004.
[3] D. B. Ward, E. A. Lehmann, and R. C. Williamson, "Particle Filtering Algorithms for Tracking an Acoustic Source in a Reverberant Environment," IEEE Trans. Speech Audio Process., vol. 11, no. 6, pp. 826-836, 2003.
[4] M. S. Arulampalam, S. Maskell, N. Gordon, and T. Clapp, "A Tutorial on Particle Filters for Online Nonlinear/Non-Gaussian Bayesian Tracking," IEEE Trans. Signal Process., vol. 50, no. 2, pp. 174-188, 2002.
[5] C. H. Knapp and G. C. Carter, "The Generalized Correlation Method for Estimation of Time Delay," IEEE Trans. Acoust., Speech, Signal Process., vol. ASSP-24, no. 4, pp. 320-327, 1976.
[6] D. Comaniciu and V. Ramesh, "Mean Shift and Optimal Prediction for Efficient Object Tracking," in Proc. 2000 Int. Conf. Image Processing, vol. 3, 2000, pp. 70-73.
[7] S. L. Phung, A. Bouzerdoum, and D. Chai, "A Novel Skin Color Model in YCbCr Color Space and its Application to Human Face Detection," in Proc. 2002 Int. Conf. Image Processing, vol. 1, 2002, pp. 289-292.
[8] J. J. Spilker, "Satellite Constellation and Geometric Dilution of Precision," in The Global Positioning System: Theory and Applications, B. W. Parkinson and J. J. Spilker, Eds. American Institute of Aeronautics and Astronautics, 1994, vol. I, pp. 177-208.



- Proc SPIE 11169-13 ValentinBaron FinalDocument9 pagesProc SPIE 11169-13 ValentinBaron Finalbarbara nicolasPas encore d'évaluation

- Audio Analysis Using The Discrete Wavelet Transform: 1 2 Related WorkDocument6 pagesAudio Analysis Using The Discrete Wavelet Transform: 1 2 Related WorkAlexandro NababanPas encore d'évaluation

- Paper Velocimeter SPIE RS07Document12 pagesPaper Velocimeter SPIE RS07Mounika ReddyPas encore d'évaluation

- A Comparison - Of.acoustic - Absortion.coefficient - Measuring.in - Situ.method (Andrew.R.barnard)Document8 pagesA Comparison - Of.acoustic - Absortion.coefficient - Measuring.in - Situ.method (Andrew.R.barnard)Raphael LemosPas encore d'évaluation

- GCCDocument14 pagesGCCAlif Bayu WindrawanPas encore d'évaluation

- A Design of An Acousto-Optical SpectrometerDocument4 pagesA Design of An Acousto-Optical Spectrometeranasrl2006Pas encore d'évaluation

- Acoustic Scene ClassificationDocument6 pagesAcoustic Scene ClassificationArunkumar KanthiPas encore d'évaluation

- Designing The Wiener Post-Filter For Diffuse Noise Suppression Using Imaginary Parts of Inter-Channel Cross-SpectraDocument5 pagesDesigning The Wiener Post-Filter For Diffuse Noise Suppression Using Imaginary Parts of Inter-Channel Cross-SpectraPPas encore d'évaluation

- DLR Research Activities For Structural Health Monitoring in Aerospace StructuresDocument7 pagesDLR Research Activities For Structural Health Monitoring in Aerospace StructuresccoyurePas encore d'évaluation

- Spread Spectrum Ultrasonic Positioning SystemDocument6 pagesSpread Spectrum Ultrasonic Positioning SystemTuấn DũngPas encore d'évaluation

- Digital Signal Processing System of Ultrasonic SigDocument7 pagesDigital Signal Processing System of Ultrasonic Sigkeltoma.boutaPas encore d'évaluation

- OSP Marano - Et - Al-2011-The - Structural - Design - of - Tall - and - Special - BuildingsDocument9 pagesOSP Marano - Et - Al-2011-The - Structural - Design - of - Tall - and - Special - Buildingsthomas rojasPas encore d'évaluation

- Detection of Interface Failure in A Composite Structural T - Joint Using Time of FlightDocument3 pagesDetection of Interface Failure in A Composite Structural T - Joint Using Time of Flightedcam13Pas encore d'évaluation

- 4 Al Haddad UPMDocument5 pages4 Al Haddad UPMCarl IskPas encore d'évaluation

- Beamforming Techniques For Multichannel Audio Signal SeparationDocument9 pagesBeamforming Techniques For Multichannel Audio Signal Separation呂文祺Pas encore d'évaluation

- Optical Measurement of Acoustic Drum Strike Locations: Janis Sokolovskis Andrew P. McphersonDocument4 pagesOptical Measurement of Acoustic Drum Strike Locations: Janis Sokolovskis Andrew P. McphersonEvilásio SouzaPas encore d'évaluation

- Aes2001 Bonada PDFDocument10 pagesAes2001 Bonada PDFjfkPas encore d'évaluation

- Ultrasonic Signal De-Noising Using Dual Filtering AlgorithmDocument8 pagesUltrasonic Signal De-Noising Using Dual Filtering Algorithmvhito619Pas encore d'évaluation

- Feature Extraction For Speech Recogniton: Manish P. Kesarkar (Roll No: 03307003) Supervisor: Prof. PreetiDocument12 pagesFeature Extraction For Speech Recogniton: Manish P. Kesarkar (Roll No: 03307003) Supervisor: Prof. Preetinonenonenone212Pas encore d'évaluation

- Speaker Enclosure Design Using Modal AnalysisDocument4 pagesSpeaker Enclosure Design Using Modal Analysismax_gasthaus0% (1)

- Cap1 PDFDocument27 pagesCap1 PDFJose ArellanoPas encore d'évaluation

- Eco Localization by The Analysis of The Characteristics of The Reflected Waves in Audible FrequenciesDocument6 pagesEco Localization by The Analysis of The Characteristics of The Reflected Waves in Audible FrequenciesJosue Manuel Pareja ContrerasPas encore d'évaluation

- HMM Adaptation Using Vector Taylor Series For Noisy Speech RecognitionDocument4 pagesHMM Adaptation Using Vector Taylor Series For Noisy Speech RecognitionjimakosjpPas encore d'évaluation

- Glotin H. (Ed.) - Soundscape Semiotics. Localization and Categorization PDFDocument193 pagesGlotin H. (Ed.) - Soundscape Semiotics. Localization and Categorization PDFFarhad BahramPas encore d'évaluation

- A Prototype Compton Camera Array For Localization and Identification of Remote Radiation SourcesDocument6 pagesA Prototype Compton Camera Array For Localization and Identification of Remote Radiation SourcesMAILMEUSPas encore d'évaluation

- Novel Approach For Detecting Applause in Continuous Meeting SpeechDocument5 pagesNovel Approach For Detecting Applause in Continuous Meeting SpeechManjeet SinghPas encore d'évaluation

- FMC PDFDocument11 pagesFMC PDFJoseph JohnsonPas encore d'évaluation

- A Computationally Efficient Speech/music Discriminator For Radio RecordingsDocument4 pagesA Computationally Efficient Speech/music Discriminator For Radio RecordingsPablo Loste RamosPas encore d'évaluation

- Spectral Correlation Based Signal Detection MethodDocument6 pagesSpectral Correlation Based Signal Detection Methodlogu_thalirPas encore d'évaluation

- Reliable Fusion of Tof and Stereo Depth Driven by Confidence MeasuresDocument16 pagesReliable Fusion of Tof and Stereo Depth Driven by Confidence MeasuresHanz Cuevas VelásquezPas encore d'évaluation

- Analysis and Synthesis of Speech Using MatlabDocument10 pagesAnalysis and Synthesis of Speech Using Matlabps1188Pas encore d'évaluation

- Adaptive Wiener Filtering Approach For Speech EnhancementDocument9 pagesAdaptive Wiener Filtering Approach For Speech EnhancementUbiquitous Computing and Communication JournalPas encore d'évaluation

- 3 Deec 51 Ae 28 Ba 013 A 4Document5 pages3 Deec 51 Ae 28 Ba 013 A 4Carrot ItCsPas encore d'évaluation

- Acoustic Predictions of High Power Sound SystemsDocument7 pagesAcoustic Predictions of High Power Sound Systemsdub6kOPas encore d'évaluation

- Orenda ValveDocument26 pagesOrenda Valveyasser_nasef5399Pas encore d'évaluation

- RB211 Bleed ValvDocument10 pagesRB211 Bleed Valvyasser_nasef5399100% (1)

- E530 Hardware Service ManualDocument118 pagesE530 Hardware Service Manualyasser_nasef5399Pas encore d'évaluation

- SCH VsdSpeedstar2000 UmDocument93 pagesSCH VsdSpeedstar2000 Umyasser_nasef5399Pas encore d'évaluation

- Fisher-Paykel DW BulletinsDocument119 pagesFisher-Paykel DW BulletinsrosieherbPas encore d'évaluation

- 06-901 Keyed Input SwitchesDocument4 pages06-901 Keyed Input Switchesmajed al.madhajiPas encore d'évaluation

- Feasibility Study of Solar Photovoltaic (PV) Energy Systems For Rural Villages of Ethiopian Somali Region (A Case Study of Jigjiga Zone)Document7 pagesFeasibility Study of Solar Photovoltaic (PV) Energy Systems For Rural Villages of Ethiopian Somali Region (A Case Study of Jigjiga Zone)ollata kalanoPas encore d'évaluation

- Optimasi Blending Pertalite Dengan Komponen Reformate Di PT. XYZ BalikpapanDocument7 pagesOptimasi Blending Pertalite Dengan Komponen Reformate Di PT. XYZ BalikpapanFrizki AkbarPas encore d'évaluation

- WDU 2.5 enDocument14 pagesWDU 2.5 enAhmadBintangNegoroPas encore d'évaluation

- Ball Mill SizingDocument10 pagesBall Mill Sizingvvananth100% (1)

- Measurement Advisory Committee Summary - Attachment 3Document70 pagesMeasurement Advisory Committee Summary - Attachment 3MauricioICQPas encore d'évaluation

- IBM System Storage DS8000 - A QuickDocument10 pagesIBM System Storage DS8000 - A Quickmuruggan_aPas encore d'évaluation

- An 80-Mg Railroad Engine A Coasting at 6.5 KM - H Strikes A 20Document4 pagesAn 80-Mg Railroad Engine A Coasting at 6.5 KM - H Strikes A 20Aura Milena Martinez ChavarroPas encore d'évaluation

- Behringer UB2222FX PRODocument5 pagesBehringer UB2222FX PROmtlcaqc97 mtlcaqc97Pas encore d'évaluation

- EMOC 208 Installation of VITT For N2 Cylinder FillingDocument12 pagesEMOC 208 Installation of VITT For N2 Cylinder Fillingtejcd1234Pas encore d'évaluation

- TDS Sadechaf UVACRYL 2151 - v9Document5 pagesTDS Sadechaf UVACRYL 2151 - v9Alex MacabuPas encore d'évaluation

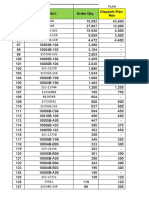

- Order Qty Vs Dispatch Plan - 04 11 20Document13 pagesOrder Qty Vs Dispatch Plan - 04 11 20NPD1 JAKAPPas encore d'évaluation

- Ecc Mech Sharq 22 016 Rev 01Document6 pagesEcc Mech Sharq 22 016 Rev 01Muthu SaravananPas encore d'évaluation

- Le22a1321 AocDocument130 pagesLe22a1321 AocEchefisEchefisPas encore d'évaluation

- Using Dapper Asynchronously inDocument1 pageUsing Dapper Asynchronously inGiovani BrondaniPas encore d'évaluation

- Cisco Network DiagramDocument1 pageCisco Network DiagramĐỗ DuyPas encore d'évaluation

- TechSpec MistralDocument4 pagesTechSpec MistralScarab SweepersPas encore d'évaluation

- A9K CatalogueDocument152 pagesA9K CatalogueMohamed SaffiqPas encore d'évaluation

- Schematic Lenovo ThinkPad T410 NOZOMI-1Document99 pagesSchematic Lenovo ThinkPad T410 NOZOMI-1borneocampPas encore d'évaluation

- PT14 Engine Monitor 1Document2 pagesPT14 Engine Monitor 1BJ DixPas encore d'évaluation

- Idlers - Medium To Heavy Duty PDFDocument28 pagesIdlers - Medium To Heavy Duty PDFEd Ace100% (1)

- 1Document100 pages1Niomi GolraiPas encore d'évaluation

- RequirementsDocument18 pagesRequirementsmpedraza-1Pas encore d'évaluation

- Cosben e Brochure PDFDocument28 pagesCosben e Brochure PDFsmw maintancePas encore d'évaluation

- Turbin 1Document27 pagesTurbin 1Durjoy Chakraborty100% (1)

- 100ah - 12V - 6FM100 VISIONDocument2 pages100ah - 12V - 6FM100 VISIONBashar SalahPas encore d'évaluation

- Nuevo CvuDocument1 pageNuevo CvuJesús GonzálezPas encore d'évaluation

- Transmisor HarrisDocument195 pagesTransmisor HarrisJose Juan Gutierrez Sanchez100% (1)

- Lecture10 Combined FootingsDocument31 pagesLecture10 Combined FootingsGopalram Sudhirkumar100% (3)

- Section 05120 Structural Steel Part 1Document43 pagesSection 05120 Structural Steel Part 1jacksondcplPas encore d'évaluation