Time Series Lecture Notes

Uploaded by Apam Benjamin

97 pages

Copyright

© Attribution Non-Commercial (BY-NC)


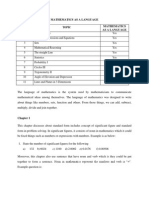

V.I.1.

a. Basic Definitions and Theorems about ARIMA models

First we define some important concepts. A stochastic process (i.e. a probabilistic process) is defined by a T-dimensional distribution function.

(V.I.1-1)

Before analyzing the structure of a time series model one must make sure that the time series is stationary with respect to both the variance and the mean. For now we assume statistical stationarity of all time series (this restriction will be relaxed later on).

Statistical stationarity of a time series implies that the marginal probability distribution is time-independent, which means that:

the expected values and variances are constant

(V.I.1-2)

where T is the number of observations in the time series;

the autocovariances (and autocorrelations) must be constant

(V.I.1-3)

where k is an integer time-lag;

the variable has a joint normal distribution $f(X_1, X_2, \ldots, X_T)$ with a marginal normal distribution in each dimension

(V.I.1-4)

If only this last condition is not met, the series is called weakly stationary.

Now it is possible to define white noise as a (statistically stationary) stochastic process defined by a marginal distribution function (V.I.1-1), where all $X_t$ are independent variables (with zero covariances), with a joint normal distribution $f(X_1, X_2, \ldots, X_T)$, and with

(V.I.1-5)

It is obvious from this definition that for any white noise process the probability function can be written as

(V.I.1-6)

Define the autocovariance as

(V.I.1-7)

or

(V.I.1-8)

whereas the autocorrelation is defined as

(V.I.1-9)

In practice, however, we only have the sample observations at our disposal. Therefore we use the sample autocorrelations

(V.I.1-10)

for any integer k.
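As a minimal sketch of the sample autocorrelation, the function below divides the lag-k sample autocovariance by the lag-0 sample autocovariance. The name `sample_acf` and the 1/T normalisation of the autocovariances are assumptions, since equation (V.I.1-10) is not reproduced in this text.

```python
def sample_acf(x, max_lag):
    """Return the sample autocorrelations r_0 .. r_max_lag of the sequence x."""
    n = len(x)
    mean = sum(x) / n
    dev = [v - mean for v in x]
    # Lag-0 sample autocovariance (assumed 1/T normalisation).
    c0 = sum(d * d for d in dev) / n
    acf = []
    for k in range(max_lag + 1):
        # Lag-k sample autocovariance, using the same 1/T normalisation.
        ck = sum(dev[t] * dev[t + k] for t in range(n - k)) / n
        acf.append(ck / c0)
    return acf
```

Because the same normalisation is used in numerator and denominator, the result does not depend on whether 1/T or 1/(T-k) scaling is chosen for the lag-0 term, only on the choice made for the lag-k term.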

Note that the autocovariance matrix and the autocorrelation matrix associated with a stationary stochastic process

(V.I.1-11)

(V.I.1-12)

are always positive definite, which is easily shown, since a linear combination of the stochastic variables

(V.I.1-13)

has a variance of

(V.I.1-14)

which is always positive.

This implies for instance for T=3 that

(V.I.1-15)

or

(V.I.1-16)

Bartlett proved that the variance of the autocorrelation coefficient of a stationary normal stochastic process can be formulated as

(V.I.1-17)

This expression can be shown to be reduced to

(V.I.1-18)

if the autocorrelation coefficients decrease exponentially like

(V.I.1-19)

If the autocorrelations for i > q (a natural number) are equal to zero, expression (V.I.1-17) can be reformulated as

(V.I.1-20)

which is the so-called large-lag variance. Now it is possible to vary q from 1 to any desired integer number of autocorrelations, replace the theoretical autocorrelations by their sample estimates, and compute the square root of (V.I.1-20) to find the standard deviation of the sample autocorrelation.
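The procedure just described can be sketched as follows, assuming the usual form of Bartlett's large-lag variance, Var(r_k) ≈ (1 + 2 Σ_{i=1..q} r_i²) / T for k > q. The function name `large_lag_se` and its arguments are illustrative, and the formula is the standard textbook one rather than a transcription of (V.I.1-20).

```python
import math

def large_lag_se(r, q, n_obs):
    """Large-lag standard error of r_k for k > q.

    r     : sample autocorrelations with r[0] == 1
    q     : lag beyond which the autocorrelations are assumed to be zero
    n_obs : number of observations T
    """
    variance = (1.0 + 2.0 * sum(r[i] ** 2 for i in range(1, q + 1))) / n_obs
    return math.sqrt(variance)
```

With q = 0 the expression reduces to 1/√T, the familiar rough standard error of a single autocorrelation coefficient.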

Note that the standard deviation of one autocorrelation coefficient is almost always approximated by

(V.I.1-21)

The covariances between autocorrelation coefficients have also been deduced by Bartlett

(V.I.1-22)

which is a good indicator for dependencies between autocorrelations. Keep in mind that inter-correlated autocorrelations can seriously distort the picture of the autocorrelation function (ACF, i.e. the autocorrelations as a function of the time-lag).

It is, however, possible to remove the intervening correlations between $X_t$ and $X_{t-k}$ by defining a partial autocorrelation function (PACF).

The partial autocorrelation coefficients are defined as the last coefficient of a partial autoregression equation of order k

(V.I.1-23)

It is obvious that there exists a relationship between the PACF and the ACF since (V.I.1-23) can be rewritten as

(V.I.1-24)

or (on taking expectations and dividing by the variance)

(V.I.1-25)

Sometimes (V.I.1-25) is written in matrix formulation according to the Yule-Walker relations

(V.I.1-26)

or simply

(V.I.1-27)

Solving (V.I.1-27) according to Cramer's Rule yields

(V.I.1-28)

Note that the determinant in the numerator contains the same elements as the determinant in the denominator, except for the last column, which has been replaced.

A practical numerical estimation algorithm for the PACF is given by Durbin

(V.I.1-29)

with

(V.I.1-30)
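A minimal sketch of such a recursion is given below, assuming the standard Durbin (Levinson-Durbin) form: φ_kk = (ρ_k − Σ_j φ_{k−1,j} ρ_{k−j}) / (1 − Σ_j φ_{k−1,j} ρ_j), followed by φ_{k,j} = φ_{k−1,j} − φ_kk φ_{k−1,k−j}. The function name `pacf_durbin` is illustrative, and the formulas are the textbook ones rather than a transcription of (V.I.1-29) and (V.I.1-30).

```python
def pacf_durbin(rho, max_lag):
    """Partial autocorrelations phi_11 .. phi_KK from autocorrelations.

    rho[k] is the lag-k autocorrelation, with rho[0] == 1.
    """
    pacf = []
    prev = []  # prev[j] holds phi_{k-1, j+1}
    for k in range(1, max_lag + 1):
        num = rho[k] - sum(prev[j] * rho[k - 1 - j] for j in range(k - 1))
        den = 1.0 - sum(prev[j] * rho[j + 1] for j in range(k - 1))
        phi_kk = num / den
        # Update the lower-order coefficients, then append the new last one.
        cur = [prev[j] - phi_kk * prev[k - 2 - j] for j in range(k - 1)]
        cur.append(phi_kk)
        pacf.append(phi_kk)
        prev = cur
    return pacf
```

Feeding in the autocorrelations of an AR(1) process, ρ_k = φ^k, returns φ at lag 1 and (numerically) zero at all higher lags, which is the cut-off pattern used for identification.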

The standard error of a partial autocorrelation coefficient for k > p (where p is the order of the autoregressive data generating process; see later) is given by

(V.I.1-31)

Finally, we define the following polynomial lag-processes

(V.I.1-32)

where B is the backshift operator (i.e. $B^i Y_t = Y_{t-i}$) and where

(V.I.1-33)

These polynomial expressions are used to define linear filters. By definition a linear filter

(V.I.1-34)

generates a stochastic process

(V.I.1-35)

where $a_t$ is a white noise variable.

(V.I.1-36)

for which the following is obvious

(V.I.1-37)

We call eq. (V.I.1-36) the random-walk model: a model that describes time series that fluctuate around $X_0$ in the short and in the long run (since $a_t$ is white noise).

It is interesting to note that a random walk is normally distributed. This can be proved by using the definition of white noise and computing the moment generating function of the random walk

(V.I.1-38)

(V.I.1-39)

from which we deduce

(V.I.1-40)

(Q.E.D.).

A deterministic trend is generated by a random-walk model with an added constant

(V.I.1-41)

The trend can be illustrated by re-expressing (V.I.1-41) as

(V.I.1-42)

where ct is a linear deterministic trend (as a function of time).
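To illustrate the trend term, the sketch below builds a path recursively, assuming the random walk with constant has the form X_t = X_{t−1} + c + a_t. The function name and the explicit `shocks` argument are illustrative choices. After t steps the path equals X_0 + c·t plus the accumulated shocks, which is the decomposition described above.

```python
def random_walk_with_drift(x0, c, shocks):
    """Return X_1 .. X_T for the assumed recursion X_t = X_{t-1} + c + a_t."""
    path = []
    x = x0
    for a in shocks:
        x = x + c + a  # previous level + constant + current shock
        path.append(x)
    return path
```

In a simulation the shocks would typically be drawn from a normal distribution, for example with `random.gauss(0.0, sigma)` for some chosen `sigma`; passing them in explicitly keeps the recursion itself deterministic.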

The linear filter (V.I.1-35) is normally distributed with

(V.I.1-43)

due to the additivity property of eq. (I.III-33), (I.III-34), and (I.III-35) applied to $a_t$.

Now the autocorrelation of a linear filter can be quite easily computed as

(V.I.1-44)

since

(V.I.1-45)

and

(V.I.1-46)

Now it is quite evident that, if the linear filter (V.I.1-35) generates the variable $X_t$, then $X_t$ is a stationary stochastic process ((V.I.1-1) - (V.I.1-3)) defined by a normal distribution (V.I.1-4) (and therefore strongly stationary), and an autocovariance function (V.I.1-45) which depends only on the time-lag k.

The set of equations resulting from a linear filter (V.I.1-35) with ACF (V.I.1-44) are sometimes called stochastic difference equations. These stochastic difference equations can be used in practice to forecast (economic) time series. The forecasting function is given by

(V.I.1-47)

On using (V.I.1-35), the density of the forecasting function (V.I.1-47) is

(V.I.1-48)

where

(V.I.1-49)

is known, and therefore equal to a constant term. Therefore it is obvious that

(V.I.1-50)

(V.I.1-51)

The concepts defined and described above are all time-related. This implies, for instance, that autocorrelations are defined as a function of time. Historically, this time-domain viewpoint was preceded by the frequency-domain viewpoint, where it is assumed that time series consist of sine and cosine waves at different frequencies.

In practice there are advantages and disadvantages to both viewpoints. Nevertheless, they should be seen as complementary to each other.

(V.I.1-52)

for the Fourier series model

(V.I.1-53)

In (V.I.1-53) we define

(V.I.1-54)

The least squares estimates of the parameters in (V.I.1-52) are computed by

(V.I.1-55)

In case of a time series with an even number of observations T = 2q the same definitions are applicable except for

(V.I.1-56)

It can furthermore be shown that

(V.I.1-57)

(V.I.1-58)

such that

(V.I.1-59)

(V.I.1-60)

Obviously

(V.I.1-61)

It is also possible to show that

(V.I.1-62)

If

(V.I.1-63)

then

(V.I.1-64)

and

(V.I.1-65)

and

(V.I.1-66)

and

(V.I.1-67)

and

(V.I.1-68)

which state the orthogonality properties of sinusoids and which can be proved. Note that (V.I.1-67) is a special case of (V.I.1-64) and (V.I.1-68) is a special case of (V.I.1-66). Eq. (V.I.1-66) is particularly interesting for our discussion in regard to (V.I.1-60) and (V.I.1-53), since it states that sinusoids are independent.

If (V.I.1-52) is redefined as

(V.I.1-69)

then I(f) is called the sample spectrum.
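As a rough sketch of how such a spectrum can be computed at the harmonic frequencies, the code below assumes the common textbook definitions: a_i = (2/T) Σ z_t cos(2π f_i t), b_i = (2/T) Σ z_t sin(2π f_i t) with f_i = i/T, and I(f_i) = (T/2)(a_i² + b_i²). These formulas, the function name `periodogram`, and the choice to skip the special frequency f = 1/2 for even T are assumptions, since equations (V.I.1-52) to (V.I.1-56) are not reproduced in this text.

```python
import math

def periodogram(z):
    """Return [(f_i, I(f_i))] for harmonic frequencies f_i = i/T with 0 < f_i < 1/2."""
    n = len(z)
    out = []
    for i in range(1, (n - 1) // 2 + 1):
        f = i / n
        # Least squares estimates of the cosine and sine coefficients at f.
        a = 2.0 / n * sum(z[t - 1] * math.cos(2 * math.pi * f * t) for t in range(1, n + 1))
        b = 2.0 / n * sum(z[t - 1] * math.sin(2 * math.pi * f * t) for t in range(1, n + 1))
        out.append((f, n / 2.0 * (a * a + b * b)))
    return out
```

For a series that is a pure cosine wave at one harmonic frequency, the orthogonality of the sinusoids makes the intensity vanish at every other harmonic frequency.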

The sample spectrum is in fact a Fourier cosine transformation of the autocovariance function estimate. Denote the estimate of the covariance (V.I.1-7) by the sample covariance (i.e. the numerator of (V.I.1-10)), the imaginary unit by i, and the frequency by f; then

(V.I.1-70)

On using (V.I.1-55) and (V.I.1-70) it follows that

(V.I.1-71)

which can be substituted into (V.I.1-70) yielding

(V.I.1-72)

Now from (V.I.1-10) it follows

(V.I.1-73)

and if (t - t') is substituted by k then (V.I.1-72) becomes

(V.I.1-74)

which proves the link between the sample spectrum and the estimated autocovariance function.

On taking expectations of the spectrum we obtain

(V.I.1-75)

for which it can be shown that

(V.I.1-76)

On combining (V.I.1-75) and (V.I.1-76) and on defining the power spectrum as p(f) we find

(V.I.1-77)

It is quite obvious that

(V.I.1-78)

so that it follows that the power spectrum converges if the covariance decreases rather quickly. The power spectrum is a Fourier cosine transformation of the (population) autocovariance function. This implies that for any theoretical autocovariance function (cf. the following sections) a respective theoretical power spectrum can be formulated.

Of course the power spectrum can be reformulated with respect to autocorrelations instead of autocovariances

(V.I.1-79)

which is the so-called spectral density function.

Since

(V.I.1-80)

it follows that

(V.I.1-81)

and since g(f) > 0 the properties of g(f) are quite similar to those of a frequency distribution function.

Since it can be shown that the sample spectrum fluctuates wildly around the theoretical power spectrum, a modified (i.e. smoothed) estimate of the power spectrum is suggested as

(V.I.1-82)

b. The AR(1) process

The AR(1) process is defined as

(V.I.1-83)

where $W_t$ is a stationary time series, $e_t$ is a white noise error term, and $F_t$ is called the forecasting function. Now we derive the theoretical pattern of the ACF of an AR(1) process for identification purposes.

First, we note that (V.I.1-83) may be alternatively written in the form

(V.I.1-84)

Second, we multiply the AR(1) process in (V.I.1-83) by $W_{t-k}$ and take expectations

(V.I.1-85)

Since we know that for k = 0 the RHS of eq. (V.I.1-85) may be rewritten as

(V.I.1-86)

and that for k > 0 the RHS of eq. (V.I.1-85) is

(V.I.1-87)

we may write the LHS of (V.I.1-85) as

(V.I.1-88)

From (V.I.1-88) we deduce

(V.I.1-89)

and

(V.I.1-90)

(figure V.I.1-1)

We can now easily observe what the theoretical ACF of an AR(1) process should look like. Note that we have already added the theoretical PACF of the AR(1) process, since the first partial autocorrelation coefficient is exactly equivalent to the first autocorrelation coefficient.
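The derivation above can be summarised in a few lines, assuming the AR(1) process is written as $W_t = \phi W_{t-1} + e_t$ with $\mathrm{Var}(e_t) = \sigma_e^2$ (the symbols $\gamma_k$, $\rho_k$ and $\sigma_e^2$ are the usual textbook notation and may differ from the notation of the lost equations):

```latex
\begin{aligned}
E[W_t W_{t-k}] &= \phi\,E[W_{t-1} W_{t-k}] + E[e_t W_{t-k}] \\
\gamma_0 &= \phi\,\gamma_1 + \sigma_e^2, \qquad \gamma_k = \phi\,\gamma_{k-1} \quad (k > 0) \\
\gamma_0 &= \frac{\sigma_e^2}{1-\phi^2}, \qquad \rho_k = \frac{\gamma_k}{\gamma_0} = \phi^k
\end{aligned}
```

The ACF therefore decays exponentially for $0 < \phi < 1$ and alternates in sign while decaying for $-1 < \phi < 0$.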

In general, a linear filter process is stationary if the $\psi(B)$ polynomial converges.

Note that the AR(1) process is stationary if the solution of $(1 - \phi B) = 0$ is larger than 1 in absolute value (i.e. the root in terms of $B^{-1}$ is less than 1 in absolute value).

This solution is $\phi^{-1}$. Hence, if the absolute value of the AR(1) parameter is less than 1, then the model is stationary, which can be illustrated by the fact that

(V.I.1-91)

For a general AR(p) model the solutions of

(V.I.1-92)

for which

(V.I.1-93)

must be satisfied in order to obtain stationarity.

c. The AR(2) process

The AR(2) process is defined as

(V.I.1-94)

where $W_t$ is a stationary time series, $e_t$ is a white noise error term, and $F_t$ is the forecasting function.

The process defined in (V.I.1-94) can be written in the form

(V.I.1-95)

and therefore

(V.I.1-96)

Now, for (V.I.1-96) to be valid, it easily follows that

(V.I.1-97)

and that

(V.I.1-98)

and that

(V.I.1-99)

and finally that

(V.I.1-100)

The model is stationary if the $\psi_i$ weights converge. This is the case when some conditions on $\phi_1$ and $\phi_2$ are imposed. These conditions can be found on using the solutions of the polynomial of the AR(2) model. The so-called characteristic equation is used to find these solutions

(V.I.1-101)

The two solutions of (V.I.1-101) are

(V.I.1-102)

which can be either real or complex. Notice that the roots are complex if $\phi_1^2 + 4\phi_2 < 0$.

When these solutions, in absolute value, are smaller than 1, the AR(2) model is stationary.

Later, it will be shown that these conditions are satisfied if $\phi_1$ and $\phi_2$ lie in a (Stralkowski) triangular region restricted by

(V.I.1-103)
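The equivalence of the two formulations can be checked numerically. The sketch below assumes the triangular region is the standard one (φ₁ + φ₂ < 1, φ₂ − φ₁ < 1, |φ₂| < 1) and that the characteristic equation has the form x² − φ₁x − φ₂ = 0, so that stationarity requires both roots to be smaller than 1 in absolute value. Both forms are assumptions, since (V.I.1-101) and (V.I.1-103) are not reproduced here, and the function name is illustrative.

```python
import cmath

def ar2_is_stationary(phi1, phi2):
    """Return (in_triangle, roots_inside) for an AR(2) parameter pair."""
    # Assumed triangular stationarity region.
    in_triangle = (phi1 + phi2 < 1) and (phi2 - phi1 < 1) and (abs(phi2) < 1)
    # Roots of the assumed characteristic equation x^2 - phi1*x - phi2 = 0.
    disc = cmath.sqrt(phi1 * phi1 + 4 * phi2)
    roots = ((phi1 + disc) / 2, (phi1 - disc) / 2)
    roots_inside = all(abs(r) < 1 for r in roots)
    return in_triangle, roots_inside
```

For example, `ar2_is_stationary(1.0, -0.5)` has complex roots with modulus below 1 and lies inside the triangle, whereas `ar2_is_stationary(0.5, 0.6)` fails both checks.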

The derivation of the theoretical ACF and PACF for an AR(2) model is described below.

On multiplying the AR(2) model by $W_{t-k}$, and taking expectations we obtain

(V.I.1-104)

From (V.I.1-97) and (V.I.1-98) it follows that

(V.I.1-105)

Now it is possible to combine (V.I.1-104) with (V.I.1-105) such that

(V.I.1-106)

from which it follows that

(V.I.1-107)

Therefore

(V.I.1-108)

Eq. (V.I.1-106) can be rewritten as

(V.I.1-109)

such that on using (V.I.1-108) it is obvious that

(V.I.1-110)

According to (V.I.1-107) the ACF satisfies a second-order difference equation of the form

(V.I.1-111)

where (due to (V.I.1-108))

(V.I.1-112)

are starting values of the difference equation.
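Rather than solving the difference equation analytically, the theoretical ACF can also be generated by iterating it. The sketch below assumes the standard AR(2) results ρ_k = φ₁ρ_{k−1} + φ₂ρ_{k−2} with starting values ρ₀ = 1 and ρ₁ = φ₁/(1 − φ₂). These are the usual textbook forms, not a transcription of (V.I.1-111) and (V.I.1-112), and the function name is illustrative.

```python
def ar2_acf(phi1, phi2, max_lag):
    """Theoretical autocorrelations rho_0 .. rho_max_lag of a stationary AR(2)."""
    rho = [1.0, phi1 / (1.0 - phi2)]  # assumed starting values
    for k in range(2, max_lag + 1):
        rho.append(phi1 * rho[k - 1] + phi2 * rho[k - 2])
    return rho[: max_lag + 1]
```

Setting `phi2 = 0` reproduces the exponential decay of the AR(1) case, while parameter pairs with complex roots (for instance `phi1 = 1.0`, `phi2 = -0.5`) produce a damped oscillation.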

In general, the solution to the difference equation is, according to Box and Jenkins (1976), given by

(V.I.1-113)

In particular, three different cases can be worked out for the solutions of the difference equation

(V.I.1-114)

of (V.I.1-102). The general solution of eq. (V.I.1-113) can be written in the form

(V.I.1-115)

(V.I.1-116)

Remark that for the case the following stationarity conditions

(V.I.1-117)

(V.I.1-118)

has two solutions

due to (V.I.1-114) and

due to

(V.I.1-119)

Hence we find the general solution to the difference equation

(V.I.1-120)

In order to impose convergence the following must hold

(V.I.1-121)

Hence two conditions have to be satisfied

(V.I.1-122)

which describes the part of a parabola consisting of acceptable parameter values for $(\phi_1, \phi_2)$.

Remark that this parabola is the frontier between acceptable real-valued and acceptable complex roots (cfr.

Triangle of Stralkowski).

(V.I.1-123)

in goniometric notation.

The general solution for the second-order difference equation can be found by

(V.I.1-124)

On defining

(V.I.1-125)

the ACF can be shown to be real-valued since

(V.I.1-126)

On using the property

(V.I.1-127)

eq. (V.I.1-126) becomes

(V.I.1-128)

with

(V.I.1-129)

Eq. (V.I.1-128) shows that the ACF oscillates with period $2\pi/\theta$ and a variable amplitude of

(V.I.1-130)

as a function of k.

A useful equation can be found to compute the period of the pseudo-periodic behavior of the time series as

(V.I.1-131)

which must satisfy the convergence condition (i.e. the amplitude is exponentially decreasing)

(V.I.1-132)

The pattern of the theoretical PACF can be deduced from relations (V.I.1-25) - (V.I.1-28).

The theoretical ACF and PACF are illustrated below. Figure (V.I.1-2) contains two possible ACF and PACF patterns for real roots, while figure (V.I.1-3) shows the ACF and PACF patterns when the roots are complex.

(figure V.I.1-2)

(figure V.I.1-3)

d. The AR(p) process

An AR(p) process is defined by

(V.I.1-133)

where $W_t$ is a stationary time series, $e_t$ is a white noise error component, and $F_t$ is the forecasting function.

As described above, the AR(p) process can be written

(V.I.1-134)

Hence

(V.I.1-135)

The weights converge if the stationarity conditions are satisfied by the roots of the characteristic equation

(V.I.1-136)

The variance can be shown to be

(V.I.1-137)

(V.I.1-138)

which can be used to study the behavior of the theoretical ACF pattern.

Remember that the Yule-Walker relations (V.I.1-26) and (V.I.1-27) hold for all AR(p) models. These can be used (together with the application of Cramer's Rule (V.I.1-28)) to derive the theoretical PACF pattern from the theoretical ACF.

e. The MA(1) process

The definition of the MA(1) process is given by

(V.I.1-139)

where $W_t$ is a stationary time series, $e_t$ is a white noise error component, and $F_t$ is the forecasting function.

On substituting the weights of this process into eq. (V.I.1-46) and (V.I.1-45) we obtain

(V.I.1-140)

Therefore the pattern of the theoretical ACF is

(V.I.1-141)

Note that from eq. (V.I.1-141) it follows that

(V.I.1-142)

This implies that there exist at least two MA(1) processes which generate the same theoretical ACF.
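A small numerical check makes this concrete. The sketch assumes the Box-Jenkins sign convention W_t = e_t − θ e_{t−1}, for which the lag-1 autocorrelation is ρ₁ = −θ/(1 + θ²). The convention and the function name are assumptions, since (V.I.1-139) and (V.I.1-141) are not reproduced here.

```python
def ma1_rho1(theta):
    """Lag-1 autocorrelation of the assumed MA(1) form W_t = e_t - theta * e_{t-1}."""
    return -theta / (1.0 + theta * theta)
```

Under this convention `ma1_rho1(0.5)` and `ma1_rho1(2.0)` coincide, because replacing θ by 1/θ leaves ρ₁ unchanged. Only one of the two parameter values has an absolute value below 1, which is the one singled out by the invertibility restriction.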

Since an MA process consists of a finite number of $\psi$-weights it follows that the process is always stationary. However, it is necessary to impose the so-called invertibility restrictions such that the MA(q) process can be rewritten into an AR($\infty$) model.

(V.I.1-143)

converges.

On using the Yule-Walker equations and eq. (V.I.1-141) it can be shown that the theoretical PACF is

(V.I.1-144)

Hence the theoretical PACF is dominated by a decreasing exponential function.

The theoretical ACF and PACF for the MA(1) are illustrated in figure (V.I.1-4).

(figure V.I.1-4)

f. The MA(2) process

By definition the MA(2) process is

(V.I.1-145)

which can be rewritten on using (V.I.1-139)

(V.I.1-146)

where $W_t$ is a stationary time series, $e_t$ is a white noise error component, and $F_t$ is the forecasting function.

On substituting the weights of this process into eq. (V.I.1-46) and (V.I.1-45) we obtain

(V.I.1-147)

Hence the theoretical ACF can be deduced

(V.I.1-148)

The invertibility conditions can be shown to be

(V.I.1-149)

(compare with the stationarity conditions of the AR(2) process).

The deduction of the theoretical PACF is rather complicated but can be shown to be dominated by the sum of

two exponentials (in case of real roots), or by decreasing sine waves (in case the roots are complex).

These two possible cases are shown in figures (V.I.1-5) and (V.I.1-6).

(figure V.I.1-5)

(figure V.I.1-6)

g. The MA(q) process

The MA(q) process is defined by

(V.I.1-150)

where $W_t$ is a stationary time series, $e_t$ is a white noise error component, and $F_t$ is the forecasting function.

Note that this description of the MA(q) process is not straightforward to use for forecasting purposes due to its recursive character.

On substituting the weights of this process into (V.I.1-46) and (V.I.1-45), the following autocovariances can be deduced

(V.I.1-151)

Hence the theoretical ACF is

(V.I.1-152)
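The cut-off pattern of the MA(q) ACF can be sketched numerically. The code assumes the Box-Jenkins convention W_t = e_t − θ₁e_{t−1} − … − θ_q e_{t−q}, so that the weights are ψ₀ = 1, ψ_j = −θ_j, and the autocovariance at lag k is proportional to Σ_j ψ_j ψ_{j+k}. The sign convention and the function name are assumptions rather than a transcription of (V.I.1-150) to (V.I.1-152).

```python
def ma_acf(thetas, max_lag):
    """Theoretical autocorrelations rho_0 .. rho_max_lag of an MA(q) process."""
    psi = [1.0] + [-t for t in thetas]  # assumed weights psi_0 = 1, psi_j = -theta_j
    q = len(thetas)
    gamma0 = sum(p * p for p in psi)  # variance up to the factor sigma_e^2
    acf = []
    for k in range(max_lag + 1):
        if k > q:
            acf.append(0.0)  # the ACF cuts off after lag q
        else:
            acf.append(sum(psi[j] * psi[j + k] for j in range(q - k + 1)) / gamma0)
    return acf
```

The white noise variance cancels in the ratio, so only the θ parameters are needed.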

The theoretical PACF of higher-order MA(q) processes is extremely complicated and not extensively discussed in the literature.

(V.I.1-153)

h. The ARMA(1,1) process

On combining an AR(1) and a MA(1) process one obtains an ARMA(1,1) model which is defined as

(V.I.1-154)

where $W_t$ is a stationary time series, $e_t$ is a white noise error component, and $F_t$ is the forecasting function.

Note that the model of (V.I.1-154) may alternatively be written as

(V.I.1-155)

such that

(V.I.1-156)

in $\psi$-weight notation.

The $\psi$-weights can be related to the ARMA parameters on using

(V.I.1-157)

such that the following is obtained

(V.I.1-158)

Also the $\pi$-weights can be related to the ARMA parameters on using

(V.I.1-159)

such that the following is obtained

(V.I.1-160)

From (V.I.1-158) and (V.I.1-160) it can be clearly seen that an ARMA(1,1) is in fact a parsimonious description of either an AR or an MA process with an infinite number of weights. This does not imply that all higher order AR(p) or MA(q) processes may be written as an ARMA(1,1). In practice, though, an ARMA process (i.e. a mixed model) is quite frequently capable of capturing higher order pure-AR $\pi$-weights or pure-MA $\psi$-weights.

On writing the ARMA(1,1) process as

(V.I.1-161)

(which is a difference equation) we may multiply by $W_{t-k}$ and take expectations. This gives

(V.I.1-162)

In case k > 1 the RHS of (V.I.1-162) is zero thus

(V.I.1-163)

If k = 0 or if k = 1 then

(V.I.1-164)

Hence we obtain

(V.I.1-165)

The theoretical ACF is therefore

(V.I.1-166)
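As a sketch of the resulting pattern, the code below assumes the Box-Jenkins form W_t = φW_{t−1} + e_t − θe_{t−1}, for which ρ₁ = (1 − φθ)(φ − θ)/(1 + θ² − 2φθ) and ρ_k = φρ_{k−1} for k ≥ 2. The sign convention and the function name are assumptions rather than a transcription of (V.I.1-166).

```python
def arma11_acf(phi, theta, max_lag):
    """Theoretical autocorrelations rho_0 .. rho_max_lag of an ARMA(1,1) process."""
    rho1 = (1 - phi * theta) * (phi - theta) / (1 + theta * theta - 2 * phi * theta)
    acf = [1.0]
    for k in range(1, max_lag + 1):
        # Lag 1 depends on both parameters; later lags decay at rate phi.
        acf.append(rho1 if k == 1 else phi * acf[-1])
    return acf
```

Setting `theta = 0` recovers the AR(1) pattern φ^k, and setting `phi = 0` recovers the MA(1) pattern with a single non-zero autocorrelation.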

The theoretical ACF and PACF patterns for the ARMA(1,1) are illustrated in figures (V.I.1-7), (V.I.1-8), and (V.I.1-9).

(figure V.I.1-7)

(figure V.I.1-8)

(figure V.I.1-9)

i. The ARMA(p,q) process

The general ARMA(p,q) can be defined by

(V.I.1-167)

or alternatively in MA($\infty$) notation

(V.I.1-168)

or in AR($\infty$) notation

(V.I.1-169)

where $\pi(B) = 1/\psi(B)$.

The stationarity conditions depend on the AR part: the roots of $\phi(B) = 0$ must be larger than 1 in absolute value.

The invertibility conditions only depend on the MA part: the roots of $\theta(B) = 0$ must also be larger than 1 in absolute value.

The theoretical ACF and PACF patterns are deduced from the so-called difference equations

(V.I.1-170)

k. Non stationary time series

Most economic (and also many other) time series do not satisfy the stationarity conditions stated earlier, for which ARMA models have been derived. These time series are called non stationary and should be re-expressed such that they become stationary with respect to the variance and the mean.

It is not suggested that the description of the following re-expression tools is exhaustive! Rather, they form a set of tools which have proven useful in practice. Many extensions are possible with respect to re-expression tools: these are discussed in the literature, for example in JENKINS (1976 and 1978), MILLS (1990), MCLEOD (1983), etc.

Transformation of time series

If we write a time series as the sum of a deterministic mean and a disturbance term

(V.I.1-193)

(V.I.1-194)

where h is an arbitrary function.

(V.I.1-195)

This can be used to obtain the variance of the transformed series

(V.I.1-196)

which implies that the variance can be stabilized by imposing

(V.I.1-197)

Accordingly, if the standard deviation of the series is proportional to the mean level

(V.I.1-198)

then

(V.I.1-199)

from which it follows that

(V.I.1-200)

In case the variance of the series is proportional to the mean level, then

(V.I.1-201)

from which it follows that

(V.I.1-202)

With the Standard Deviation / Mean Procedure (SMP) we are able to detect heteroskedasticity in the time series. Moreover, with the help of the SMP it is quite often possible to find an appropriate transformation that makes the time series homoskedastic. In fact, it is assumed that there exists a relationship between the mean level of the time series and the variance or standard deviation, as in

(V.I.1-203)

which is an explicitly assumed relationship, in contrast to (V.I.1-194).

The SMP is generated by a three step process:

the time series is split into equal (chronological) segments;

for each segment the arithmetic mean and standard deviation are computed;

the means and S.D.s of the segments are plotted or regressed against each other.

By selecting the length of the segments equal to the seasonal period one ensures that the S.D. and the mean are independent of the seasonal period.
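The first two steps of the procedure can be sketched as follows. The function name `sd_mean_pairs`, the use of the sample standard deviation (divisor n − 1), and the decision to drop an incomplete final segment are illustrative assumptions.

```python
import math

def sd_mean_pairs(series, seg_len):
    """Split series into consecutive segments of seg_len observations.

    Returns one (mean, standard deviation) pair per complete segment;
    seg_len must be at least 2.
    """
    pairs = []
    for start in range(0, len(series) - seg_len + 1, seg_len):
        seg = series[start:start + seg_len]
        m = sum(seg) / seg_len
        sd = math.sqrt(sum((v - m) ** 2 for v in seg) / (seg_len - 1))
        pairs.append((m, sd))
    return pairs
```

The resulting pairs are what would be plotted or regressed against each other in the third step. For monthly data, `seg_len = 12` would correspond to the choice of the seasonal period as segment length.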

In practice one of the following patterns will be recognized (as summarized in the graph). Note that the lambda parameter should take a value of zero when a linearly proportional association between the S.D. and the mean is recognized.

The value of lambda is in fact the transformation parameter which implies the following:

(V.I.1-204)

(Figure V.I.1-10)

Differencing of time series

With the use of the Autocorrelation Function (ACF) (with autocorrelations on the y axis and the different time lags on the x axis) it is possible to detect non-stationarity of the time series with respect to the mean level.

(figure V.I.1-11)

When the ACF of the time series is slowly decreasing, this is an indication that the mean is not stationary. An example of such an ACF is given in figure (V.I.1-11).

The differencing operator (nabla) is used to make the time series stationary.

l. Differencing (Nabla and B operator)

We have already used the back shift operator in previous sections. As we know, the back shift operator (B-operator) transforms an observation of a time series into the previous one

(V.I.1-205)

Also, it can easily be shown that

(V.I.1-206)

Note that if we back shift k times seasonally we get

(V.I.1-207)

The back shift operator can be useful in defining the nabla operator which is

(V.I.1-208)

or in general

(V.I.1-209)

which is sometimes also called the differencing operator.

As stated before, a time series which is not stationary with respect to the mean can be made stationary by differencing. How can this be interpreted?

(figure V.I.1-12)

In figure (V.I.1-12) a function is displayed with two points on a graph (a, f(a)), and (b, f(b)).

Assume that a time series is generated by the function f(x). Then the derivative of the function gives the slope of the line tangent to the graph at every point of the function's domain.

The derivative of a function is defined as

(V.I.1-210)

If we compute the slope of the chord in figure (V.I.1-12), this is in fact the same as the derivative of f(x) between a and b with a discrete step instead of an infinitesimally small step.

This results in computing

(V.I.1-211)

Although we have assumed the time series to be generated by f(x), in practice we only observe sample values at discrete time intervals. Therefore the best approximation of f(x) between two known points (a, f(a)) and (b, f(b)) is a straight line with slope given by (V.I.1-211).

If this approximation is to be optimal, the distance between a and b should be as small as possible. Since the smallest difference between observations of equally spaced time series is the time lag itself, the smallest value of h in eq. (V.I.1-211) is in fact equal to 1.

Therefore (V.I.1-211) reduces to

(V.I.1-212)

which is nothing else but the differencing operator.

We conclude that by differencing a time series we 'differentiate' the function by which it is generated, and therefore reduce the function's degree by 1. If, for example, a time series were generated by a quadratic function, we could make it stationary by differencing the series twice.
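The quadratic example can be verified with a short sketch; the helper name `difference` is illustrative. Each pass replaces the series by the differences of consecutive observations, so the result is one observation shorter per pass.

```python
def difference(series, d=1):
    """Apply the differencing operator d times to a list of observations."""
    for _ in range(d):
        series = [b - a for a, b in zip(series, series[1:])]
    return series

quadratic = [t * t for t in range(6)]   # 0, 1, 4, 9, 16, 25
once = difference(quadratic)            # still trending: 1, 3, 5, 7, 9
twice = difference(quadratic, d=2)      # constant level: 2, 2, 2, 2
```

After one difference the series is still a linear function of time; after the second it is constant, so no trend in the mean remains.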

Furthermore it should be noted that if a time series is non stationary, and must therefore be differenced to induce stationarity, the series is often said to be generated by an integrated process. Now the ARMA models described before can be extended to the class of ARIMA (i.e. Autoregressive Integrated Moving Average) models.

m. The behavior of non stationary time series

In the previous subsections, non stationarity has been discussed at a rather intuitive level. Now we will discuss some more fundamental properties of the behavior of non stationary time series.

A time series that is generated by

(V.I.1-213)

with $\varphi(B)$ a nonstationary AR operator: $\varphi(B)$ has d roots equal to 1; all other roots lie outside the unit circle. Thus eq. (V.I.1-213) can be written by factoring out the unit roots

(V.I.1-214)

where $\phi(B)$ is stationary.

In general a univariate stochastic process such as (V.I.1-214) is called an ARIMA(p,d,q) model, where p is the autoregressive order, d is the number of non-seasonal differences, and q is the order of the moving average components.

Quite evidently, time series exhibiting non stationarity in both variance and mean are first to be transformed in order to induce a stable variance, and then to be differenced to obtain stationarity with respect to the mean level. The reason for this is that power and logarithmic transformations are not always defined for negative (real) numbers.

The ARIMA(p,d,q) model can be expanded by introducing deterministic d-order polynomial trends.

This is simply achieved by adding a parameter constant to (V.I.1-214), expressed in terms of a (non-seasonal) non-stationary time series $Z_t$

(V.I.1-215)

The same properties can be achieved by writing (V.I.1-215) as an invertible ARMA process

(V.I.1-216)

where c is a parameter-constant. This is because

(V.I.1-217)

Also note that the p AR parameters must not add up to unity, since this would, according to (V.I.1-217), imply (in the limit) an infinite mean level, which is obviously nonsensical.

An ARIMA model can generally be written as a difference equation. For instance, the ARIMA(1,1,1) can be formulated as

(V.I.1-218)

which illustrates the postulated fact. This form of the ARIMA model is used for recursive forecasting purposes.
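The expansion behind this difference equation can be written out as follows, assuming the Box-Jenkins sign convention and no constant term (the exact form of (V.I.1-218) is not reproduced in this text, so this is a sketch under those assumptions):

```latex
\begin{aligned}
(1-\phi B)(1-B)\,Z_t &= (1-\theta B)\,e_t \\
\bigl(1-(1+\phi)B+\phi B^2\bigr)\,Z_t &= e_t-\theta e_{t-1} \\
Z_t &= (1+\phi)\,Z_{t-1}-\phi\,Z_{t-2}+e_t-\theta\,e_{t-1}
\end{aligned}
```

The last line expresses $Z_t$ in terms of past observations and shocks, which is why this form lends itself to recursive forecasting.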

The ARIMA model can also generally be written as a random shock model (i.e. a model in terms of the $\psi$-weights and the white noise error components) since

(V.I.1-219)

it follows that

(V.I.1-220)

Hence, if j is the maximum of (p + d - 1, q)

(V.I.1-221)

it follows that the $\psi$-weights satisfy

(V.I.1-222)

which implies that large-lagged $\psi$-weights are composed of polynomials, (damped) exponentials, and (damped) sinusoids with respect to index j.
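The $\psi$-weights can be computed recursively by equating coefficients of powers of B. The sketch below assumes the model has the form φ(B)(1 − B)^d Z_t = θ(B)e_t with φ(B) = 1 − φ₁B − … and θ(B) = 1 − θ₁B − …, so that the weights follow from φ(B)(1 − B)^d ψ(B) = θ(B). The function name and argument layout are illustrative.

```python
def psi_weights(phi, theta, d, n):
    """First n+1 psi-weights of phi(B)(1-B)^d Z_t = theta(B) e_t."""
    # Coefficients of phi(B) in increasing powers of B.
    ar = [1.0] + [-p for p in phi]
    for _ in range(d):
        # Multiply the polynomial by (1 - B).
        ar = [a - b for a, b in zip(ar + [0.0], [0.0] + ar)]
    ma = [1.0] + [-t for t in theta]
    psi = []
    for j in range(n + 1):
        # Coefficient of B^j: sum_i ar[i] * psi[j-i] = ma[j], solved for psi[j].
        val = ma[j] if j < len(ma) else 0.0
        val -= sum(ar[i] * psi[j - i] for i in range(1, min(j, len(ar) - 1) + 1))
        psi.append(val)
    return psi
```

For a pure random walk (`phi=[]`, `theta=[]`, `d=1`) every weight equals 1, so shocks never die out, whereas for a stationary AR(1) the weights decay as φ^j.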

This form of the ARIMA model (i.e. eq. (V.I.1-219)) is used to compute the forecast confidence intervals.

A third way of writing an ARIMA model is the truncated random shock model form.

The parameter k may be interpreted as the time origin of the observable data. First, we observe that if $Y_t'$ is a particular solution of (V.I.1-213), thus if

(V.I.1-223)

then it follows from (V.I.1-213), and (V.I.1-223) that

(V.I.1-224)

Hence, the general solution of (V.I.1-213) is the sum of $Y_t''$ (i.e. a complementary function which is the solution of (V.I.1-224)), and $Y_t'$ (i.e. a particular integral which is a particular solution of (V.I.1-213)).

(V.I.1-225)

and that the general solution of the homogeneous difference equation with respect to time origin k < t is given by

(V.I.1-226)

(V.I.1-227)

(V.I.1-228)

since

(V.I.1-229)

see also (V.I.1-227).

The general complementary function for

(V.I.1-230)

is

(V.I.1-231)

with D

i

described in

(V.I.1-232)

From (V.I.1-231) it can be concluded that the complementary function involves a mixture of:

(V.I.1-233)

(with $\psi$-weights of the random shock model form) satisfying the ARIMA model structure (where B operates on t, not on k)

(V.I.1-234)

which can be easily proved on noting that

(V.I.1-235)

such that

(V.I.1-236)

Hence, if t - k > q eq. (V.I.1-233) is the particular integral of (V.I.1-234).

If in an extreme case $k = -\infty$ then

(V.I.1-237)

called the nontruncated random shock form of the ARIMA model.

(V.I.1-238)

(compare this result with (V.I.1-237)).

Also remark that it is evident that

(V.I.1-239)

This implies that when using the complementary function for forecasting purposes, it is advisable to update the forecast as new observations become available.

o. Unit root tests

There are d unit roots in a non-stationary time series (with respect to the mean) if $\phi(B)$ is stationary and $\theta(B)$ invertible in

(V.I.1-252)

The most frequently used test for unit roots is the augmented Dickey-Fuller regression (ADF)

(V.I.1-253)

(V.I.1-254)

An example of the use of the ADF is the following LR-test

(V.I.1-255)

where

(V.I.1-256)

(V.I.1-257)

Some critical 95% values for this LR-test (K > 1) are: 7.24 (for T > 24), 6.73 (for T > 50), 6.49 (for T > 100), and 6.25 (for T > 120). It is also possible to perform an Engle-Granger cointegration test between the variables $X_{t,i}$.

This test estimates the cointegrating regression in a first step

(V.I.1-258)
