AOT - Lecture Notes V1

Uploaded by S Deva Prasad

M. Tech.

ED II SEMESTER Course Code: CED11T13 ADVANCED OPTIMIZATION TECHNIQUES LPC 3 - 3

SYLLABUS

UNIT I LINEAR PROGRAMMING: Two-phase simplex method, Big-M method, duality, interpretation, applications.

UNIT II ASSIGNMENT PROBLEM: Hungarian algorithm, degeneracy, applications, unbalanced problems, traveling salesman problem.

UNIT III CLASSICAL OPTIMIZATION TECHNIQUES: Single-variable optimization with and without constraints, multi-variable optimization without constraints, multi-variable optimization with constraints, method of Lagrange multipliers, Kuhn-Tucker conditions.

UNIT IV NUMERICAL METHODS FOR OPTIMIZATION: Nelder-Mead simplex search method, gradient of a function, steepest descent method, Newton's method, types of penalty methods for handling constraints.

UNIT V GENETIC ALGORITHM (GA): Differences and similarities between conventional and evolutionary algorithms, working principle, reproduction, crossover, mutation, termination criteria, different reproduction and crossover operators, GA for constrained optimization, drawbacks of GA.

UNIT VI GENETIC PROGRAMMING (GP): Principles of genetic programming, terminal sets, functional sets, differences between GA and GP, random population generation, solving differential equations using GP.

UNIT VII MULTI-OBJECTIVE GA: Pareto analysis, non-dominated front, multi-objective GA, non-dominated sorted GA, convergence criterion, applications of multi-objective problems.

UNIT VIII APPLICATIONS OF OPTIMIZATION IN DESIGN AND MANUFACTURING SYSTEMS: Some typical applications such as optimization of path synthesis of a four-bar mechanism, minimization of weight of a cantilever beam, optimization of springs and gears, general optimization model of a machining process, optimization of arc welding parameters, and general procedure in optimizing machining operations sequence.

TEXT BOOKS:
1. Jasbir S. Arora (2007), Optimization of Structural and Mechanical Systems, 1st Edition, World Scientific, Singapore.
2. Kalyanmoy Deb (2009), Optimization for Engineering Design: Algorithms and Examples, 1st Edition, Prentice Hall of India, New Delhi, India.
3. Singiresu S. Rao (2009), Engineering Optimization: Theory and Practice, 4th Edition, John Wiley & Sons, New Delhi, India.

REFERENCE BOOKS:
1. D. E. Goldberg (2006), Genetic Algorithms in Search, Optimization, and Machine Learning, 28th Print, Addison-Wesley Publishers, Boston, USA.
2. W. B. Langdon, Riccardo Poli (2010), Foundations of Genetic Programming, 1st Edition, Springer, New York.
3. R. Venkata Rao, Vimal J. Savsani (2012), Mechanical Design Optimization Using Advanced Optimization Techniques, 1st Edition, Springer, New York.

OTHER TITLES:
1. Hamdy A. Taha (2007), Operations Research: An Introduction, 8th Edition, Pearson.

UNIT I

LINEAR PROGRAMMING

Two-phase simplex method, Big-M method, duality, interpretation, applications.

Introduction

The first formal application of Operations Research (OR) was initiated in England during World War II, when a team of British scientists set out to make scientifically based decisions regarding the best utilization of war material. After the war, the ideas advanced in military operations were adapted to improve efficiency and productivity in the civilian sector (Taha 2007).

"Operations Research (OR) is the representation of real-world systems/problems/decisions by mathematical models together with the use of quantitative methods (algorithms) for solving such models, with a view to optimizing."

A mathematical model of OR consists of:
1. Decision variables, which are the unknowns to be determined by the solution to the model.
2. Constraints, which represent the physical limitations of the system.
3. An objective function.

An optimal solution to the model is a set of variable values that is feasible (satisfies all the constraints) and leads to the optimal value of the objective function.

In general terms, one can regard OR as the application of scientific methods and thinking to decision making. Underlying OR is the philosophy that decisions have to be made, and that using a quantitative (explicit, articulated) approach will lead to better decisions than using non-quantitative (implicit, unarticulated) approaches. Indeed, it can be argued that although OR is imperfect, in many instances it offers the best available approach to making a particular decision (which is not to say that using OR will produce the right decision).

Linear Programming (LP) is an optimization method applicable to problems in which the objective function and the constraints appear as linear functions of the decision variables. The constraint equations in a linear programming problem may be in the form of equalities or inequalities (S. S. Rao 2009).

LP is a mathematical technique designed to aid managers in allocating scarce resources (such as labor, capital, or energy) among competing activities. It reflects, in the form of a model, the organization's attempt to achieve some objective (frequently maximizing profit or rate of return, or minimizing costs) in view of limited or constrained resources (available capital or labor, service levels, available machine time).

The objective of a decision maker in a linear programming problem is to maximize or minimize an objective function subject to constraints on the available resources. The objective function and the constraints are linear in the decision variables (the constraints being linear equalities or inequalities), hence the name "linear" for the problem under analysis.

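The general linear programming problem can be stated in the following standard forms:

SCALAR FORM

$$\text{Minimize } f(x_1, x_2, \ldots, x_n) = c_1 x_1 + c_2 x_2 + \cdots + c_n x_n \tag{1}$$

subject to the constraints

$$\begin{aligned}
a_{11} x_1 + a_{12} x_2 + \cdots + a_{1n} x_n &= b_1 \\
a_{21} x_1 + a_{22} x_2 + \cdots + a_{2n} x_n &= b_2 \\
&\;\;\vdots \\
a_{m1} x_1 + a_{m2} x_2 + \cdots + a_{mn} x_n &= b_m
\end{aligned} \tag{2}$$

$$x_1 \ge 0, \quad x_2 \ge 0, \quad \ldots, \quad x_n \ge 0 \tag{3}$$

where $c_j$, $b_i$, and $a_{ij}$ ($i = 1, 2, \ldots, m$; $j = 1, 2, \ldots, n$) are known constants, and $x_j$ are the decision variables.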

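The characteristics of a linear programming problem, stated in standard form, are:
1. The objective function is of the minimization type.
2. All the constraints are of the equality type.
3. All the decision variables are nonnegative.

MATRIX FORM

$$\text{Minimize } f(X) = c^T X \tag{4}$$

subject to the constraints

$$aX = b \tag{5}$$

$$X \ge 0 \tag{6}$$

where

$$X = \begin{Bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{Bmatrix}, \quad
b = \begin{Bmatrix} b_1 \\ b_2 \\ \vdots \\ b_m \end{Bmatrix}, \quad
c = \begin{Bmatrix} c_1 \\ c_2 \\ \vdots \\ c_n \end{Bmatrix}, \quad
a = \begin{bmatrix}
a_{11} & a_{12} & \cdots & a_{1n} \\
a_{21} & a_{22} & \cdots & a_{2n} \\
\vdots & \vdots &        & \vdots \\
a_{m1} & a_{m2} & \cdots & a_{mn}
\end{bmatrix}$$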
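The matrix form can be checked numerically for a candidate point. The following is a minimal sketch, not taken from the notes: the function names `objective` and `is_feasible`, the tolerance `tol`, and the sample data are all hypothetical and chosen only for illustration.

```python
def objective(c, x):
    """Evaluate f(X) = c^T X, as in Eq. (4)."""
    return sum(cj * xj for cj, xj in zip(c, x))


def is_feasible(a, b, x, tol=1e-9):
    """Check aX = b (Eq. 5) and X >= 0 (Eq. 6) within a small tolerance."""
    # Nonnegativity of every decision variable
    if any(xj < -tol for xj in x):
        return False
    # Each equality constraint: sum_j a_ij * x_j = b_i
    for row, bi in zip(a, b):
        lhs = sum(aij * xj for aij, xj in zip(row, x))
        if abs(lhs - bi) > tol:
            return False
    return True


# Hypothetical data with m = 2 constraints and n = 3 variables
c = [1.0, 2.0, 0.0]
a = [[1.0, 1.0, 1.0],
     [1.0, -1.0, 0.0]]
b = [4.0, 0.0]
x = [1.0, 1.0, 2.0]

print(is_feasible(a, b, x), objective(c, x))
```

A point that violates either the equalities or the sign restrictions is rejected, which is the definition of feasibility used throughout these notes.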

Any linear programming problem can be expressed in standard form by using the following transformations.

1. The maximization of a function $f(x_1, x_2, \ldots, x_n)$ is equivalent to the minimization of the negative of the same function. For example, the objective function

$$\text{minimize } f = c_1 x_1 + c_2 x_2 + \cdots + c_n x_n$$

is equivalent to

$$\text{maximize } f' = -f = -c_1 x_1 - c_2 x_2 - \cdots - c_n x_n$$

Consequently, the objective function can be stated in the minimization form in any linear programming problem.

2. In most engineering optimization problems, the decision variables represent some physical dimensions, and hence the variables $x_j$ will be nonnegative. However, a variable may be unrestricted in sign in some problems. In such cases, an unrestricted variable (which can take a positive, negative, or zero value) can be written as the difference of two nonnegative variables. Thus if $x_j$ is unrestricted in sign, it can be written as

$$x_j = x_j' - x_j'', \quad \text{where } x_j' \ge 0 \text{ and } x_j'' \ge 0$$

It can be seen that $x_j$ will be negative, zero, or positive, depending on whether $x_j''$ is greater than, equal to, or less than $x_j'$.

3. If a constraint appears in the form of a "less than or equal to" type of inequality as

$$a_{k1} x_1 + a_{k2} x_2 + \cdots + a_{kn} x_n \le b_k$$

it can be converted into the equality form by adding a nonnegative slack variable $S_1$ as follows:

$$a_{k1} x_1 + a_{k2} x_2 + \cdots + a_{kn} x_n + S_1 = b_k$$

The slack variable is numerically equal to the difference between the right- and left-hand sides of the inequality. It represents the unutilized part of the resource in constraint $k$.

Similarly, if the constraint is in the form of a "greater than or equal to" type of inequality as

$$a_{k1} x_1 + a_{k2} x_2 + \cdots + a_{kn} x_n \ge b_k$$

it can be converted into the equality form by subtracting a variable as

$$a_{k1} x_1 + a_{k2} x_2 + \cdots + a_{kn} x_n - S_1 = b_k$$

where $S_1$ is a nonnegative variable known as a surplus variable. The surplus variable is numerically equal to the difference between the left- and right-hand sides of the inequality. It represents the amount by which the minimum requirement in constraint $k$ is exceeded.
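The transformations for the objective (item 1) and for inequality constraints (item 3) can be sketched in code. This is an illustrative sketch under stated assumptions, not a routine from the notes: the function name `to_standard_form`, the `(coeffs, sense, rhs)` tuple layout, and the sample problem are hypothetical. It assumes all original variables are already nonnegative, so the splitting of unrestricted variables (item 2) is not handled.

```python
def to_standard_form(c, constraints, maximize=False):
    """Convert an LP to standard form: minimize c^T x subject to a x = b, x >= 0.

    constraints is a list of (coeffs, sense, rhs) tuples, where sense is
    one of "<=", ">=", "=". Returns (c_std, a_std, b_std).
    """
    # Item 1: maximizing f is equivalent to minimizing -f
    c_std = [-cj for cj in c] if maximize else list(c)

    # One extra (slack or surplus) variable per inequality constraint
    n_extra = sum(1 for _, sense, _ in constraints if sense in ("<=", ">="))

    a_std, b_std = [], []
    k = 0  # index of the next slack/surplus column
    for coeffs, sense, rhs in constraints:
        extra = [0.0] * n_extra
        if sense == "<=":
            extra[k] = 1.0    # add a slack variable
            k += 1
        elif sense == ">=":
            extra[k] = -1.0   # subtract a surplus variable
            k += 1
        elif sense != "=":
            raise ValueError(f"unknown constraint sense: {sense!r}")
        a_std.append(list(coeffs) + extra)
        b_std.append(rhs)

    # Slack and surplus variables carry zero cost in the objective
    c_std = c_std + [0.0] * n_extra
    return c_std, a_std, b_std


# Hypothetical problem: maximize 3*x1 + 2*x2
# subject to x1 + x2 <= 4 and x1 - x2 >= 1, with x1, x2 >= 0
c_std, a_std, b_std = to_standard_form(
    [3.0, 2.0],
    [([1.0, 1.0], "<=", 4.0), ([1.0, -1.0], ">=", 1.0)],
    maximize=True,
)
print(c_std)
print(a_std)
print(b_std)
```

The slack and surplus columns get zero cost, so the optimal values of the original variables are unchanged by the conversion.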

It can be seen that there are m equations in n decision variables in a linear programming problem. We can assume that m < n; for if m > n, there would be m − n redundant equations that could be eliminated. The case n = m is of no interest, for then there is either a unique solution X that satisfies Eqs. (2) or (5) and (3) or (6), in which case there can be no optimization, or no solution, in which case the constraints are inconsistent. The case m < n corresponds to an underdetermined set of linear equations, which, if they have one solution, have an infinite number of solutions. The problem of linear programming is to find one of these solutions that satisfies Eqs. (2) or (5) and (3) or (6) and yields the minimum value of f (the objective function being optimized).
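A small worked example may make the case m < n concrete. The numbers below are illustrative and not taken from the notes; the problem has m = 1 equation in n = 2 variables.

```latex
\begin{aligned}
&\text{Minimize } f = 2x_1 + 3x_2 \\
&\text{subject to } x_1 + x_2 = 4, \quad x_1 \ge 0, \; x_2 \ge 0. \\[4pt]
&\text{Every point } (x_1, x_2) = (t,\, 4 - t), \; 0 \le t \le 4, \text{ is feasible,} \\
&\text{so there are infinitely many feasible solutions. Along this set} \\
&f = 2t + 3(4 - t) = 12 - t, \\
&\text{which is smallest at } t = 4, \text{ giving } (x_1, x_2) = (4, 0) \text{ and } f_{\min} = 8.
\end{aligned}
```

The single equation leaves one degree of freedom, and the objective function is what singles out one solution from the infinite set.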

LP Solutions:

When an LP is solved, one of the following four cases will occur:
1. The LP has a unique optimal solution.
2. The LP has alternative (multiple) optimal solutions. It has more than one (actually an infinite number of) optimal solutions.
3. The LP is infeasible. It has no feasible solutions (the feasible region contains no points).
4. The LP is unbounded. In the feasible region there are points with arbitrarily large (in a max problem) objective function values.
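The four cases can be illustrated with small two-variable problems. These examples are illustrative and not taken from the notes; each assumes $x_1 \ge 0$ and $x_2 \ge 0$.

```latex
\begin{aligned}
&\textbf{1. Unique optimum:} && \max\; x_1 + x_2 \;\text{ s.t. } x_1 \le 2,\; x_2 \le 3
  && \Rightarrow (x_1, x_2) = (2, 3),\; f = 5. \\
&\textbf{2. Alternative optima:} && \max\; x_1 + x_2 \;\text{ s.t. } x_1 + x_2 \le 4
  && \Rightarrow \text{every point on the segment from } (4, 0) \text{ to } (0, 4),\; f = 4. \\
&\textbf{3. Infeasible:} && x_1 + x_2 \le 1,\; x_1 \ge 2
  && \Rightarrow x_1 + x_2 \ge 2 > 1, \text{ so no point satisfies both.} \\
&\textbf{4. Unbounded:} && \max\; x_1 + x_2 \;\text{ s.t. } x_1 - x_2 \le 1
  && \Rightarrow (0, t) \text{ is feasible for all } t \ge 0, \text{ and } f = t \to \infty.
\end{aligned}
```

In case 2 the objective function is parallel to the binding constraint, which is why a whole edge of the feasible region is optimal.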

Note: Refer to S. S. Rao (2009) for more fundamental concepts.

Two-phase simplex method

Vous aimerez peut-être aussi

- LESSON 7 Vanjo BautistaDocument19 pagesLESSON 7 Vanjo BautistaChristian paul ArnaizPas encore d'évaluation

- Maths ProjectDocument20 pagesMaths ProjectChirag JoshiPas encore d'évaluation

- Optimization Principles: 7.1.1 The General Optimization ProblemDocument13 pagesOptimization Principles: 7.1.1 The General Optimization ProblemPrathak JienkulsawadPas encore d'évaluation

- Quntiative Techniques 2 SonuDocument11 pagesQuntiative Techniques 2 SonuSonu KumarPas encore d'évaluation

- SMU Assignment Solve Operation Research, Fall 2011Document11 pagesSMU Assignment Solve Operation Research, Fall 2011amiboi100% (1)

- Operations Research PDFDocument63 pagesOperations Research PDFHari ShankarPas encore d'évaluation

- Linear ProgrammingDocument8 pagesLinear ProgrammingbeebeePas encore d'évaluation

- Op Tim IzationDocument21 pagesOp Tim IzationJane-Josanin ElizanPas encore d'évaluation

- Scan Doc0002Document9 pagesScan Doc0002jonty777Pas encore d'évaluation

- Maths ProjectDocument23 pagesMaths ProjectAjay Vernekar100% (1)

- Nonlinear ProgrammingDocument5 pagesNonlinear Programminguser2127Pas encore d'évaluation

- Project On Linear Programming ProblemsDocument29 pagesProject On Linear Programming ProblemsVinod Bhaskar67% (129)

- ShimuraDocument18 pagesShimuraDamodharan ChandranPas encore d'évaluation

- CHAPTER 6 System Techniques in Water Resuorce PPT YadesaDocument32 pagesCHAPTER 6 System Techniques in Water Resuorce PPT YadesaGod is good tubePas encore d'évaluation

- Nonlinear Programming: Applicability Possible Types of Constraint Set Methods For Solving The Problem ExamplesDocument4 pagesNonlinear Programming: Applicability Possible Types of Constraint Set Methods For Solving The Problem ExamplesLewis SitorusPas encore d'évaluation

- Discuss The Methodology of Operations ResearchDocument5 pagesDiscuss The Methodology of Operations Researchankitoye0% (1)

- Integration and DifferentiationDocument27 pagesIntegration and DifferentiationJane-Josanin ElizanPas encore d'évaluation

- Vijay Maths ProjectDocument54 pagesVijay Maths ProjectVijay MPas encore d'évaluation

- LP Methods.S4 Interior Point MethodsDocument17 pagesLP Methods.S4 Interior Point MethodsnkaprePas encore d'évaluation

- Mathematical Optimization - WikipediaDocument16 pagesMathematical Optimization - WikipediaDAVID MURILLOPas encore d'évaluation

- Approximation Models in Optimization Functions: Alan D Iaz Manr IquezDocument25 pagesApproximation Models in Optimization Functions: Alan D Iaz Manr IquezAlan DíazPas encore d'évaluation

- 6214 Chap01Document34 pages6214 Chap01C V CHANDRASHEKARAPas encore d'évaluation

- Linear ProgrammingDocument54 pagesLinear ProgrammingkatsandePas encore d'évaluation

- Chapter 3 - Deriving Solutions From A Linedeear Optimization Model PDFDocument40 pagesChapter 3 - Deriving Solutions From A Linedeear Optimization Model PDFTanmay SharmaPas encore d'évaluation

- A. H. Land A. G. Doig: Econometrica, Vol. 28, No. 3. (Jul., 1960), Pp. 497-520Document27 pagesA. H. Land A. G. Doig: Econometrica, Vol. 28, No. 3. (Jul., 1960), Pp. 497-520john brownPas encore d'évaluation

- MGMT ScienceDocument35 pagesMGMT ScienceraghevjindalPas encore d'évaluation

- Describe The Structure of Mathematical Model in Your Own WordsDocument10 pagesDescribe The Structure of Mathematical Model in Your Own Wordskaranpuri6Pas encore d'évaluation

- MB0032 Set-1Document9 pagesMB0032 Set-1Shakeel ShahPas encore d'évaluation

- Using graphical method to solve the linear programming.:ريرقتلا نساDocument5 pagesUsing graphical method to solve the linear programming.:ريرقتلا نساQUSI E. ABDPas encore d'évaluation

- Application of A Dual Simplex Method To Transportation Problem To Minimize The CostDocument5 pagesApplication of A Dual Simplex Method To Transportation Problem To Minimize The CostInternational Journal of Innovations in Engineering and SciencePas encore d'évaluation

- Operational ResearchDocument23 pagesOperational ResearchAyesha IqbalPas encore d'évaluation

- Optimization MethodsDocument62 pagesOptimization MethodsDiego Isla-LópezPas encore d'évaluation

- Unit 4,5Document13 pagesUnit 4,5ggyutuygjPas encore d'évaluation

- ECEG-6311 Power System Optimization and AI: Linear and Non Linear Programming Yoseph Mekonnen (PH.D.)Document56 pagesECEG-6311 Power System Optimization and AI: Linear and Non Linear Programming Yoseph Mekonnen (PH.D.)Fitsum HailePas encore d'évaluation

- Power System OptimizationDocument49 pagesPower System OptimizationTesfahun GirmaPas encore d'évaluation

- Module - 3 Lecture Notes - 5 Revised Simplex Method, Duality and Sensitivity AnalysisDocument11 pagesModule - 3 Lecture Notes - 5 Revised Simplex Method, Duality and Sensitivity Analysisswapna44Pas encore d'évaluation

- A New Algorithm For Linear Programming: Dhananjay P. Mehendale Sir Parashurambhau College, Tilak Road, Pune-411030, IndiaDocument20 pagesA New Algorithm For Linear Programming: Dhananjay P. Mehendale Sir Parashurambhau College, Tilak Road, Pune-411030, IndiaKinan Abu AsiPas encore d'évaluation

- Mba Semester Ii MB0048 - Operation Research-4 Credits (Book ID: B1301) Assignment Set - 1 (60 Marks)Document18 pagesMba Semester Ii MB0048 - Operation Research-4 Credits (Book ID: B1301) Assignment Set - 1 (60 Marks)geniusgPas encore d'évaluation

- ECOM 6302: Engineering Optimization: Chapter Four: Linear ProgrammingDocument53 pagesECOM 6302: Engineering Optimization: Chapter Four: Linear ProgrammingaaqlainPas encore d'évaluation

- Advanced Linear Programming: DR R.A.Pendavingh September 6, 2004Document82 pagesAdvanced Linear Programming: DR R.A.Pendavingh September 6, 2004Ahmed GoudaPas encore d'évaluation

- LP Quantitative TechniquesDocument29 pagesLP Quantitative TechniquesPrateek LoganiPas encore d'évaluation

- Main WorkDocument60 pagesMain WorkpekkepPas encore d'évaluation

- Multiobjective Optimization Scilab PDFDocument11 pagesMultiobjective Optimization Scilab PDFNiki Veranda Agil PermadiPas encore d'évaluation

- Optimization Models HUSTDocument24 pagesOptimization Models HUSTLan - GV trường TH Trác Văn Nguyễn Thị NgọcPas encore d'évaluation

- Junal LPDocument25 pagesJunal LPGumilang Al HafizPas encore d'évaluation

- Explain The Types of Operations Research Models. Briefly Explain The Phases of Operations Research. Answer Operations ResearchDocument13 pagesExplain The Types of Operations Research Models. Briefly Explain The Phases of Operations Research. Answer Operations Researchnivinjohn1Pas encore d'évaluation

- Linear Programming - FormulationDocument27 pagesLinear Programming - FormulationTunisha BhadauriaPas encore d'évaluation

- DAA Question AnswerDocument36 pagesDAA Question AnswerPratiksha DeshmukhPas encore d'évaluation

- Linear ProgrammingDocument25 pagesLinear ProgrammingEmmanuelPas encore d'évaluation

- MB0048 Opration ResearchDocument19 pagesMB0048 Opration ResearchNeelam AswalPas encore d'évaluation

- Linear Programming - The Graphical MethodDocument6 pagesLinear Programming - The Graphical MethodRenato B. AguilarPas encore d'évaluation

- Part - Ii: Subject Statistics and Linear Programme Technique Paper - XVDocument9 pagesPart - Ii: Subject Statistics and Linear Programme Technique Paper - XVabout the bookPas encore d'évaluation

- Simplex Method - Maximisation CaseDocument12 pagesSimplex Method - Maximisation CaseJoseph George KonnullyPas encore d'évaluation

- Maher NawkhassDocument16 pagesMaher NawkhassMaher AliPas encore d'évaluation

- Optmizationtechniques 150308051251 Conversion Gate01Document32 pagesOptmizationtechniques 150308051251 Conversion Gate01dileep bangadPas encore d'évaluation

- Optimization Using Linear ProgrammingDocument31 pagesOptimization Using Linear ProgrammingkishorerocxPas encore d'évaluation

- Understanding Response Surfaces: Central Composite Designs Box-Behnken DesignsDocument3 pagesUnderstanding Response Surfaces: Central Composite Designs Box-Behnken DesignsMonir SamirPas encore d'évaluation

- Un US1 GW A0 WDWDocument6 pagesUn US1 GW A0 WDWFuturamaramaPas encore d'évaluation

- Course Description or (VCE R11)Document6 pagesCourse Description or (VCE R11)S Deva PrasadPas encore d'évaluation

- ShadnagarGC Location01 3Document1 pageShadnagarGC Location01 3S Deva PrasadPas encore d'évaluation

- SDP - Interaction With Outside WorldDocument7 pagesSDP - Interaction With Outside WorldS Deva PrasadPas encore d'évaluation

- Mechanical Course OutcomesDocument20 pagesMechanical Course OutcomesS Deva PrasadPas encore d'évaluation

- Aot Notes v1Document12 pagesAot Notes v1S Deva PrasadPas encore d'évaluation

- CE SyllabusDocument1 pageCE SyllabusS Deva PrasadPas encore d'évaluation

- SailajaDocument2 pagesSailajaS Deva PrasadPas encore d'évaluation

- OR Lecture NotesDocument1 pageOR Lecture NotesS Deva Prasad0% (1)

- Mar2013Document2 pagesMar2013S Deva PrasadPas encore d'évaluation

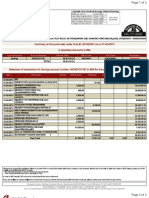

- Summary of Accounts Held Under Customer Id: 501622621 As On 28-02-2013Document2 pagesSummary of Accounts Held Under Customer Id: 501622621 As On 28-02-2013S Deva PrasadPas encore d'évaluation

- PPC Model QPDocument2 pagesPPC Model QPS Deva PrasadPas encore d'évaluation

- Well Designed Organisation Structure Enhances Decision Making - The Economic TimesDocument2 pagesWell Designed Organisation Structure Enhances Decision Making - The Economic TimesS Deva PrasadPas encore d'évaluation

- ShadnagarGC Location01 3Document1 pageShadnagarGC Location01 3S Deva PrasadPas encore d'évaluation

- Course Session 319916 Computer Programming IntroductionDocument66 pagesCourse Session 319916 Computer Programming IntroductionS Deva PrasadPas encore d'évaluation

- General Motors Weight Loss DietDocument4 pagesGeneral Motors Weight Loss DietS Deva Prasad100% (40)

- Shareholder Agreement 06Document19 pagesShareholder Agreement 06Josmar TelloPas encore d'évaluation

- SAN MIGUEL CORPORATION, ANGEL G. ROA and MELINDA MACARAIG, vs. NATIONAL LABOR RELATIONS COMMISSION (Second Division), LABOR ARBITER EDUARDO J. CARPIO, ILAW AT BUKLOD NG MANGGAGAWA (IBM), ET ALDocument6 pagesSAN MIGUEL CORPORATION, ANGEL G. ROA and MELINDA MACARAIG, vs. NATIONAL LABOR RELATIONS COMMISSION (Second Division), LABOR ARBITER EDUARDO J. CARPIO, ILAW AT BUKLOD NG MANGGAGAWA (IBM), ET ALLaila Ismael SalisaPas encore d'évaluation

- Odontogenic CystsDocument5 pagesOdontogenic CystsBH ASMRPas encore d'évaluation

- LESSON - STEM-based Research ProblemsDocument49 pagesLESSON - STEM-based Research ProblemsLee JenoPas encore d'évaluation

- BSC IT SyllabusDocument32 pagesBSC IT Syllabusஜூலியன் சத்தியதாசன்Pas encore d'évaluation

- Occupational Stress Questionnaire PDFDocument5 pagesOccupational Stress Questionnaire PDFabbaskhodaei666Pas encore d'évaluation

- DPWH Cost EstimationDocument67 pagesDPWH Cost EstimationAj Abe92% (12)

- Sampling PowerpointDocument21 pagesSampling PowerpointMuhammad Furqan Aslam AwanPas encore d'évaluation

- VOID BEQUESTS - AssignmentDocument49 pagesVOID BEQUESTS - AssignmentAkshay GaykarPas encore d'évaluation

- CH7Document34 pagesCH7Abdul AzizPas encore d'évaluation

- Chap 14Document31 pagesChap 14Dipti Bhavin DesaiPas encore d'évaluation

- Gcse Economics 8136/1: Paper 1 - How Markets WorkDocument19 pagesGcse Economics 8136/1: Paper 1 - How Markets WorkkaruneshnPas encore d'évaluation

- Choosing The Right HF Welding Process For Api Large Pipe MillsDocument5 pagesChoosing The Right HF Welding Process For Api Large Pipe MillsNia KurniaPas encore d'évaluation

- Allergies To Cross-Reactive Plant Proteins: Takeshi YagamiDocument11 pagesAllergies To Cross-Reactive Plant Proteins: Takeshi YagamisoylahijadeunvampiroPas encore d'évaluation

- Juniper M5 M10 DatasheetDocument6 pagesJuniper M5 M10 DatasheetMohammed Ali ZainPas encore d'évaluation

- CE 441 Foundation Engineering 05 07 2019Document216 pagesCE 441 Foundation Engineering 05 07 2019Md. Azizul Hakim100% (1)

- Pac All CAF Subject Referral Tests 1Document46 pagesPac All CAF Subject Referral Tests 1Shahid MahmudPas encore d'évaluation

- Class B Digital Device Part 15 of The FCC RulesDocument7 pagesClass B Digital Device Part 15 of The FCC RulesHemantkumarPas encore d'évaluation

- Magicolor2400 2430 2450FieldSvcDocument262 pagesMagicolor2400 2430 2450FieldSvcKlema HanisPas encore d'évaluation

- D.E.I Technical College, Dayalbagh Agra 5 III Semester Electrical Engg. Electrical Circuits and Measurements Question Bank Unit 1Document5 pagesD.E.I Technical College, Dayalbagh Agra 5 III Semester Electrical Engg. Electrical Circuits and Measurements Question Bank Unit 1Pritam Kumar Singh100% (1)

- Rectangular Wire Die Springs ISO-10243 Standard: Red Colour Heavy LoadDocument3 pagesRectangular Wire Die Springs ISO-10243 Standard: Red Colour Heavy LoadbashaPas encore d'évaluation

- Ra 11521 9160 9194 AmlaDocument55 pagesRa 11521 9160 9194 Amlagore.solivenPas encore d'évaluation

- Group H Macroeconomics Germany InflationDocument13 pagesGroup H Macroeconomics Germany Inflationmani kumarPas encore d'évaluation

- Consumer Research ProcessDocument78 pagesConsumer Research ProcessShikha PrasadPas encore d'évaluation

- Bsa 32 Chap 3 (Assignment) Orquia, Anndhrea S.Document3 pagesBsa 32 Chap 3 (Assignment) Orquia, Anndhrea S.Clint Agustin M. RoblesPas encore d'évaluation

- The 9 Best Reasons To Choose ZultysDocument13 pagesThe 9 Best Reasons To Choose ZultysGreg EickePas encore d'évaluation

- Audi Navigation System Plus - MMI Operating ManualDocument100 pagesAudi Navigation System Plus - MMI Operating ManualchillipaneerPas encore d'évaluation

- Job Description Examples - British GasDocument2 pagesJob Description Examples - British GasIonela IftimePas encore d'évaluation

- Section 12-22, Art. 3, 1987 Philippine ConstitutionDocument3 pagesSection 12-22, Art. 3, 1987 Philippine ConstitutionKaren LabogPas encore d'évaluation

- Aml Questionnaire For Smes: CheduleDocument5 pagesAml Questionnaire For Smes: CheduleHannah CokerPas encore d'évaluation