
Pipelining

We’ve already covered the basics of pipelining in Lecture 1. We saw that cars could be built on an assembly line, and that instructions could be executed in much the same way.

[H&P §A.1] In the ideal situation, this could give a speedup equal to the number of pipeline stages:

$$\text{Time per instruction on pipelined machine} = \frac{\text{Time to execute an instruction on unpipelined machine}}{\text{Number of pipe stages}}$$

However, this assumes “perfectly balanced” stages, in which each stage requires exactly the same amount of time.

This is rarely the case, and in any event, pipelining does involve some extra overhead.
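The effect of unbalanced stages can be sketched numerically. This minimal sketch assumes the pipelined clock cycle must be long enough for the slowest stage and ignores latch overhead; the stage delays are hypothetical numbers chosen for illustration.

```python
def ideal_speedup(stage_delays_ns):
    """Speedup of a pipeline over an unpipelined machine that runs all
    stages back to back, ignoring latch and clock-skew overhead.

    The pipelined clock cycle is set by the slowest stage, so any
    imbalance between stages pulls the speedup below the stage count.
    """
    unpipelined_time = sum(stage_delays_ns)  # one instruction, start to finish
    pipelined_cycle = max(stage_delays_ns)   # one instruction completes per cycle
    return unpipelined_time / pipelined_cycle


balanced = [10, 10, 10, 10, 10]    # hypothetical delays in ns
unbalanced = [10, 8, 12, 10, 10]   # same 50 ns total, one slow stage

print(ideal_speedup(balanced))              # 5.0
print(round(ideal_speedup(unbalanced), 2))  # 4.17
```

Both pipelines do the same 50 ns of work per instruction, but the single 12 ns stage sets the clock for every stage in the second one.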

Three aspects of RISC architectures make them easy to pipeline:

• All operations on data apply to data in registers.

• Only load and store operations move data between memory and registers.

• All instructions are the same size, and there are few instruction formats.

An unpipelined RISC

For our examples, we’ll work with a simplified RISC instruction set. In an unpipelined implementation, instructions take at most 5 clock cycles. One cycle is devoted to each of the following:

• Instruction fetch (IF).

Fetch the current instruction (the one pointed to by PC).

IR ← Mem[PC]

Compute the address of the next sequential instruction by adding 4 (the instruction length in bytes) to the PC.

NPC ← PC + 4

© 2002 Edward F. Gehringer ECE 463/521 Lecture Notes, Fall 2002 1

Based on notes from Drs. Tom Conte & Eric Rotenberg of NCSU

Figures from CAQA used with permission of Morgan Kaufmann Publishers. © 2003 Elsevier Science (USA)

• Instruction decode/register fetch (ID).

Decode the instruction.

Read the source registers from the register file.

A ← Regs[IR6..10]; B ← Regs[IR11..15]

Sign-extend the offset (displacement) field of the instruction.

Imm ← sign-extend(IR16..31)

Check for a possible branch by comparing the values read from the source registers.

Cond ← (A rel B)

Compute the branch target address by adding the sign-extended offset to the NPC.

ALU_Output ← NPC + Imm

If the branch is taken, store the branch-target address into the PC.

If (Cond) PC ← ALU_Output, else PC ← NPC

What feature of the ISA makes it possible to read the registers in this stage?

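• Execute/compute effective address (EX).

The ALU operates on the operands, performing one of three types of functions, depending on the opcode:

◦ Memory reference: the ALU adds the base register (A) and the sign-extended offset (Imm) to form the effective address.

ALU_Output ← A + Imm

◦ Register-register instruction: the ALU performs the operation specified by the opcode on the values read from the register file.

ALU_Output ← A op B

◦ Register-immediate instruction: the ALU performs the operation specified by the opcode on the value read from the first source register (A) and the sign-extended immediate (Imm).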

Lecture 14 Advanced Microprocessor Design 2

ALU_Output ← A op Imm

In a load-store architecture, execution can be done at the same time as effective-address computation because no instruction needs to both compute a data address and perform an operation on the data.

• Memory access (MEM).

Load_Mem_Data ← Mem[ALU_Output] /* Load */

Mem[ALU_Output] ← B /* Store */

• Write-back (WB). If the instruction is a register-register ALU operation, a register-immediate ALU operation, or a load, the result is written into the register file at the address specified by the destination operand.

Reg-Reg ALU Operation: Regs[IR16..20] ← ALU_Output

Reg-Immediate ALU Operation: Regs[IR11..15] ← ALU_Output

Load instruction: Regs[IR11..15] ← Load_Mem_Data
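The five stages above can be sketched as a tiny unpipelined interpreter that runs one instruction at a time. This is a minimal sketch under loudly stated assumptions: the opcode values, the `encode` helper, and the restriction to ADD, ADDI, LW, SW and BEQ are all hypothetical (the notes give field positions but no encoding); bits are numbered 0 (MSB) to 31 (LSB) to match fields such as IR6..10; and a single Python dict stands in for memory. Branches return after ID and stores return after MEM, so they use 2 and 4 of the 5 steps.

```python
# Hypothetical opcode values: the notes give field positions, not an encoding.
ADD, ADDI, LW, SW, BEQ = 0, 8, 35, 43, 4


def field(ir, first, last):
    """IR[first..last], with bits numbered 0 (MSB) to 31 (LSB) as in the notes."""
    width = last - first + 1
    return (ir >> (31 - last)) & ((1 << width) - 1)


def sign_extend(value, bits):
    """Interpret a `bits`-wide field as a two's-complement integer."""
    return value - (1 << bits) if value & (1 << (bits - 1)) else value


def encode(op, rs1, rs2, imm16):
    """Hypothetical helper packing op | IR6..10 | IR11..15 | IR16..31."""
    return (op << 26) | (rs1 << 21) | (rs2 << 16) | (imm16 & 0xFFFF)


def execute_one(pc, regs, mem):
    """Run one instruction through IF, ID, EX, MEM and WB; return the new PC."""
    # IF: IR <- Mem[PC]; NPC <- PC + 4
    ir = mem[pc]
    npc = pc + 4

    # ID: read source registers, sign-extend Imm, resolve branches
    op = field(ir, 0, 5)
    a = regs[field(ir, 6, 10)]
    b = regs[field(ir, 11, 15)]
    imm = sign_extend(field(ir, 16, 31), 16)
    if op == BEQ:                      # Cond <- (A == B); finished after ID
        return npc + imm if a == b else npc

    # EX: A op B for reg-reg; otherwise A + Imm (ALU result or effective address)
    alu_output = a + b if op == ADD else a + imm

    # MEM
    if op == SW:                       # Mem[ALU_Output] <- B; no write-back
        mem[alu_output] = b
        return npc
    lmd = mem.get(alu_output, 0) if op == LW else None

    # WB: the destination field depends on the instruction type
    if op == ADD:
        regs[field(ir, 16, 20)] = alu_output
    elif op == ADDI:
        regs[field(ir, 11, 15)] = alu_output
    elif op == LW:
        regs[field(ir, 11, 15)] = lmd
    return npc


mem = {
    0: encode(ADDI, 0, 1, 5),        # R1 <- R0 + 5
    4: encode(ADDI, 0, 2, 7),        # R2 <- R0 + 7
    8: encode(ADD, 1, 2, 3 << 11),   # R3 <- R1 + R2 (destination in IR16..20)
    12: encode(SW, 0, 3, 100),       # Mem[R0 + 100] <- R3
    16: encode(LW, 0, 4, 100),       # R4 <- Mem[R0 + 100]
}
regs = [0] * 32
pc = 0
while pc in mem:
    pc = execute_one(pc, regs, mem)
print(regs[3], regs[4], pc)  # 12 12 20
```

Keeping each stage's outputs in named variables (`ir`, `npc`, `a`, `b`, `imm`, `alu_output`, `lmd`) mirrors the notes' notation, which makes it easier to see later which values must be saved between stages once the stages overlap.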

In this implementation, some instructions require 2 cycles, some require 4, and some require 5.

• 2 cycles: branches (IF, ID).

• 4 cycles: stores (IF, ID, EX, MEM).

• 5 cycles: all other instructions (ALU operations and loads).

Assuming the instruction frequencies from the integer benchmarks mentioned in the last lecture, what’s the CPI of this architecture?

Pipelining our RISC

It’s easy to pipeline this architecture: just make each clock cycle into a pipe stage.


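| Clock # | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| Instruction i | IF | ID | EX | MEM | WB | | | | |
| Instr. i+1 | | IF | ID | EX | MEM | WB | | | |
| Instr. i+2 | | | IF | ID | EX | MEM | WB | | |
| Instr. i+3 | | | | IF | ID | EX | MEM | WB | |
| Instr. i+4 | | | | | IF | ID | EX | MEM | WB |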

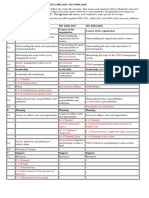

Here is a diagram of our instruction pipeline.

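(Figure: the pipelined datapath, divided into Instruction Fetch (IF), Instruction Decode (ID), Execute (EX), Memory (MEM) and Write-back (WB). Labeled components: PC, the +4 adder and NPC, the instruction cache, IR (instruction register), the register file (Regs), A, the sign-extend unit and Imm, cond, the ALUs and multiplexers (MUX), the data cache, and LMD.)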

In this pipeline, the major functional units are used in different cycles, so overlapping the execution of instructions introduces few conflicts.

• Separating the instruction and data caches eliminates a conflict that would arise in the IF and MEM stages.

Of course, we have to access these caches faster than we would in an unpipelined processor.

• The register file is used in two stages: it is read in ID and written in WB.


Thus, we need to perform two reads and one write each clock cycle.

To handle reads and writes to the same register, we write in the first half of the clock cycle and read in the second half.

• Something is incomplete about our diagram of the IF stage. What?
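The split-cycle register file described above can be modeled behaviorally: within one call, the WB write happens first and the two ID reads happen second, so an instruction reading a register in the same cycle that an older instruction writes it sees the new value. This is a software sketch only; the class and method names are hypothetical.

```python
class SplitCycleRegisterFile:
    """Behavioral model of a register file that, within one clock cycle,
    performs the WB write in the first half and the two ID reads in the
    second half."""

    def __init__(self, num_regs=32):
        self.regs = [0] * num_regs

    def clock_cycle(self, write=None, read_a=0, read_b=0):
        # First half of the cycle: WB writes its result, if there is one.
        if write is not None:
            dest, value = write
            self.regs[dest] = value
        # Second half: ID reads both source operands, seeing the new value.
        return self.regs[read_a], self.regs[read_b]


rf = SplitCycleRegisterFile()
print(rf.clock_cycle(write=(5, 42), read_a=5, read_b=6))  # (42, 0)
```

If the order inside `clock_cycle` were reversed, the read would return the stale value 0, which is exactly the conflict the half-cycle convention avoids.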

We’ve omitted one thing from the diagram above: we need a place to save values between pipeline stages. Otherwise, the different instructions in the pipeline would interfere with each other.

So we insert latches, or pipeline registers, between stages. Of course, we’d need latches even in an unpipelined multicycle implementation.
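The role of the pipeline registers can be sketched at a very abstract level by tracking only which instruction occupies each stage. This sketch assumes an ideal pipeline with no stalls; the function names are hypothetical. With pipeline registers, every stage captures its input at the same clock edge, so each instruction advances exactly one stage. The second function is a software analogy for having no registers between stages: each stage immediately sees the new output of the stage before it, and a single instruction races through the whole pipe in one cycle.

```python
STAGES = ["IF", "ID", "EX", "MEM", "WB"]


def clock_edge(in_flight, fetched):
    """Advance the pipeline one cycle. in_flight[s] is the instruction in
    stage s; all pipeline registers load simultaneously, so every
    instruction moves one stage and the one in WB retires."""
    return [fetched] + in_flight[:-1]


def clock_edge_without_latches(in_flight, fetched):
    """Analogy for no pipeline registers: stages are updated in order from
    the newest value of the previous stage, so one instruction overwrites
    every stage in the same cycle."""
    state = list(in_flight)
    state[0] = fetched
    for s in range(1, len(state)):
        state[s] = state[s - 1]
    return state


pipe = [None] * len(STAGES)
for instr in ["i", "i+1", "i+2"]:
    pipe = clock_edge(pipe, instr)
print(dict(zip(STAGES, pipe)))
# {'IF': 'i+2', 'ID': 'i+1', 'EX': 'i', 'MEM': None, 'WB': None}

print(clock_edge_without_latches([None] * 5, "i"))
# ['i', 'i', 'i', 'i', 'i']
```

Computing the whole next state from the old state, as `clock_edge` does, is the software counterpart of edge-triggered registers.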


What is our pipeline speedup, then …?

Of course, we have to allow for latch-delay time.

We also need to allow for clock skew: the maximum delay between when the clock arrives at any two registers.

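Let’s define $T_{\text{o'head}} = T_{\text{latch}} + T_{\text{skew}}$. If the unpipelined machine takes $T_{\text{unpipe}}$ per instruction and the pipeline has $n$ perfectly balanced stages, then

$$\text{Speedup} = \frac{\text{Avg. unpipelined execution time}}{\text{Avg. pipelined execution time}} = \frac{T_{\text{unpipe}}}{T_{\text{unpipe}}/n + T_{\text{o'head}}}$$

In the ideal case where $T_{\text{o'head}} = 0$, the speedup equals $n$.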
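The speedup formula above can be evaluated directly to see why adding stages has diminishing returns: as $n$ grows, $T_{\text{unpipe}}/n$ shrinks but $T_{\text{o'head}}$ does not, so the speedup approaches $T_{\text{unpipe}}/T_{\text{o'head}}$. This sketch assumes perfectly balanced stages and no hazards; the 5 ns and 0.2 ns values are hypothetical.

```python
def pipeline_speedup(t_unpipe, n, t_ohead):
    """Speedup = T_unpipe / (T_unpipe / n + T_o'head), assuming n perfectly
    balanced stages and no hazards (one instruction completes per cycle)."""
    return t_unpipe / (t_unpipe / n + t_ohead)


T_UNPIPE = 5.0  # hypothetical unpipelined time per instruction, ns
T_OHEAD = 0.2   # hypothetical latch delay + clock skew, ns

for n in (1, 5, 10, 20, 50):
    print(n, round(pipeline_speedup(T_UNPIPE, n, T_OHEAD), 2))

# However deep the pipeline, the speedup stays below T_UNPIPE / T_OHEAD = 25.
```

Note that with $n = 1$ the "pipeline" is slightly slower than the unpipelined machine, since it pays the overhead without any overlap.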

Example: Consider the unpipelined processor in the previous example. Assume that:

• Clock cycle is 1 ns.

• Branch instructions, 20% of the total, take 2 cycles.

• Store instructions, 10% of the total, take 4 cycles.

• All other instructions take 5 cycles.

• Clock skew and latch delay add 0.2 ns to the cycle time.

What is the speedup from pipelining?
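One way to work the example: the average unpipelined time is the weighted CPI times the 1 ns clock, and the pipelined time per instruction is the 1 ns clock plus 0.2 ns of overhead. This sketch assumes the pipeline achieves its ideal CPI of 1 (no hazards), which the example does not state explicitly.

```python
CLOCK_NS = 1.0     # unpipelined clock cycle
OVERHEAD_NS = 0.2  # clock skew + latch delay added to the pipelined cycle

# instruction class: (fraction of instructions, cycles on the unpipelined machine)
mix = {
    "branch": (0.20, 2),
    "store": (0.10, 4),
    "other": (0.70, 5),
}

cpi_unpipe = sum(freq * cycles for freq, cycles in mix.values())
t_unpipe = cpi_unpipe * CLOCK_NS  # average ns per instruction, unpipelined
t_pipe = CLOCK_NS + OVERHEAD_NS   # assumes ideal pipelined CPI of 1
speedup = t_unpipe / t_pipe

print(f"CPI = {cpi_unpipe:.1f}, speedup = {speedup:.2f}")
# CPI = 4.3, speedup = 3.58
```

That is, 4.3 ns / 1.2 ns ≈ 3.58: well short of 5, both because branches and stores already finish early on the unpipelined machine and because of the 0.2 ns overhead.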


How can pipelining help?

How can pipelining improve performance?

• If we keep the clock cycle time (CT) constant, by improving CPI …

(Figure: the stages IF ID EX MEM WB executed without and with pipelining; both timelines are labeled 50 ns.)

• If we keep CPI constant, by improving CT …

(Figure: a five-stage IF ID EX MEM WB timeline labeled unpipelined, 50 ns, CPI_pipe = 1, compared with a pipelined timeline in which each stage is split in two (IF1 IF2 ID1 ID2 EX1 EX2 MEM1 MEM2 WB1 WB2), 25 ns, CPI_pipe = 1.)

• Usually we improve both CT and CPI.
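Both routes come from the same identity, time per instruction = CPI × CT. The sketch below takes the figure's 50 ns and 25 ns cycle times at face value and additionally assumes, for the constant-CT case, that the unpipelined multicycle machine spends all 5 cycles on every instruction (an assumption; the earlier example shows some instructions need fewer).

```python
def time_per_instruction(cpi, cycle_ns):
    """Average execution time per instruction = CPI x clock cycle time."""
    return cpi * cycle_ns


# Keep CT constant (50 ns) and improve CPI from an assumed 5 to 1.
before = time_per_instruction(cpi=5, cycle_ns=50)
after = time_per_instruction(cpi=1, cycle_ns=50)
print("constant CT:", before / after)   # 5.0

# Keep CPI constant (1) and improve CT by splitting each stage in two.
before = time_per_instruction(cpi=1, cycle_ns=50)
after = time_per_instruction(cpi=1, cycle_ns=25)
print("constant CPI:", before / after)  # 2.0
```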

Pipeline hazards

A hazard reduces the performance of the pipeline. Hazards arise because of the program’s characteristics.

There are three kinds of hazards.

• Structural hazards: not enough hardware resources exist for all combinations of instructions.

• Data hazards: dependences between instructions prevent their overlapped execution.

• Control hazards: branches change the PC, which results in stalls while branch targets are fetched.
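To make the data-hazard definition concrete for this pipeline: a producer fetched in cycle c writes its result in WB during cycle c+4, and a consumer d instructions later reads its operands in ID during cycle c+d+1. With the write-first-half, read-second-half register file, the consumer gets the right value only if d ≥ 3. The sketch below flags closer read-after-write pairs, assuming nothing else passes results between instructions; the `Instr` representation and the example program are hypothetical.

```python
from typing import NamedTuple, Optional


class Instr(NamedTuple):
    text: str
    dest: Optional[int]       # register written, or None
    sources: tuple[int, ...]  # registers read


# Producer writes in WB (cycle c+4); consumer d instructions later reads in
# ID (cycle c+d+1). Write-then-read in one cycle makes d >= 3 safe.
SAFE_DISTANCE = 3


def raw_hazards(program):
    """Return (producer, consumer) index pairs closer than SAFE_DISTANCE."""
    hazards = []
    for i, producer in enumerate(program):
        if producer.dest is None:
            continue
        for j in range(i + 1, min(i + SAFE_DISTANCE, len(program))):
            if producer.dest in program[j].sources:
                hazards.append((i, j))
            if program[j].dest == producer.dest:
                break  # a later write supersedes this producer
    return hazards


program = [
    Instr("ADD R1, R2, R3", dest=1, sources=(2, 3)),
    Instr("SUB R4, R1, R5", dest=4, sources=(1, 5)),  # R1 produced 1 back
    Instr("AND R6, R7, R8", dest=6, sources=(7, 8)),
    Instr("OR  R9, R1, R4", dest=9, sources=(1, 4)),  # R1 3 back (safe), R4 2 back
]
print(raw_hazards(program))  # [(0, 1), (1, 3)]
```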


Structural hazards

Consider a pipeline with a unified instruction-data cache.

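| Clock # | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| Load instr. | IF | ID | EX | MEM | WB | | | | | |
| Instr. i+1 | | IF | ID | EX | MEM | WB | | | | |
| Instr. i+2 | | | IF | ID | EX | MEM | WB | | | |
| Instr. i+3 | | | | stall | IF | ID | EX | MEM | WB | |
| Instr. i+4 | | | | | | IF | ID | EX | MEM | WB |
| Instr. i+5 | | | | | | | IF | ID | EX | MEM |
| Instr. i+6 | | | | | | | | IF | ID | EX |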

Instruction i+3 has to stall, because the load instruction “steals” an instruction-fetch cycle.

In this pipeline, what kinds of instructions (what “opcodes”) cause structural hazards?
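The stall in the table can be reproduced with a small model of the unified cache. This sketch assumes the cache serves one access per cycle, that a data access in MEM wins over an instruction fetch, and that no other hazards occur; the opcode names in the example are hypothetical. An instruction fetched in cycle c reaches MEM in cycle c+3, so any fetch that wants that cycle must wait.

```python
MEMORY_OPS = {"load", "store"}  # instructions that use the cache in MEM


def fetch_cycles(ops):
    """Clock cycle in which each instruction is fetched, for a 5-stage
    pipeline with one unified instruction/data cache."""
    busy = set()  # cycles in which MEM is using the unified cache
    cycles = []
    cycle = 1
    for op in ops:
        while cycle in busy:  # structural hazard: the fetch must stall
            cycle += 1
        cycles.append(cycle)
        if op in MEMORY_OPS:
            busy.add(cycle + 3)  # this instruction's MEM cycle
        cycle += 1
    return cycles


print(fetch_cycles(["load", "add", "add", "add", "add", "add", "add"]))
# [1, 2, 3, 5, 6, 7, 8]
```

The fetch cycles match the table: instruction i+3 slips from cycle 4 to cycle 5, and every later instruction shifts with it.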

