Dynamic Programming Tutorial by Saad Ahmad

Dynamic Programming

Dynamic programming is a paradigm much like divide and conquer: the problem is split into smaller subproblems, which are solved and then combined. In divide and conquer, the subproblems are distinct and each is solved on its own. In dynamic programming, the same subproblems are shared between different larger subproblems, so each one is solved once and its result is reused. Dynamic programming is usually applied to optimization problems, e.g., maximizing or minimizing some quantity. Such problems have many possible solutions, and we want one with the optimal value.

The steps to creating a dynamic programming algorithm are:

1. Define the structure of an optimal solution
2. Define the value of the optimal solution recursively
3. Compute the optimal solution in a bottom-up manner

This is usually hard to understand until we see examples, so I will go over two examples.

Example 1 - Money-board problem

Given an N x N board, find the path from any square in the starting row (row 1) to a target square in row N that maximizes the amount of money that can be collected. The person can only move forward, either straight ahead or diagonally. Let's assume we have a 4x4 board (Figure 1).

[Figure 1: a 4x4 board with rows and columns indexed 1 to 4, showing the current square O and the squares X it can move to in the next row.]

Numbers represent the index of each row and column.
O represents our current square.
X represents the squares we can travel to.
Cash [r, c] returns the cash amount on square (r, c).
T represents our target square.

So how do we solve this? We could use brute force, generating all possible paths and picking the one that collects the most money, but there are on the order of 3^N possible paths, which is too slow when N gets large. So we will use dynamic programming.

Saad Ahmad

Step 1 - Define the optimal structure for the problem.

As shown in Figure 1, from any square we can move to the 3 squares above it. Equivalently, the maximum amount for any square is the largest of the maximum amounts of the 3 squares below it, plus the amount on the current square. Therefore the best path to (r, c) goes through one of:

The best way through (r - 1, c - 1) -- the bottom-left square
The best way through (r - 1, c) -- the bottom square
The best way through (r - 1, c + 1) -- the bottom-right square

If r = 1, we are at the start, so there is no earlier square to come from. This gives us enough information to create a recursive solution.

Step 2 - Solve the problem recursively

Let C (r, c) be a function that returns the maximum amount of cash a person can pick up on a path ending at the specified row and column. Knowing the optimal structure, we move to the second step of creating a dynamic programming algorithm: writing a recursive function that solves the problem.

$$
C(r, c) =
\begin{cases}
-\infty & \text{if } c < 1 \text{ or } c > N \\
\text{Cash}[r, c] & \text{if } r = 1 \\
\max\bigl(C(r-1, c-1),\ C(r-1, c),\ C(r-1, c+1)\bigr) + \text{Cash}[r, c] & \text{otherwise}
\end{cases}
$$

Pseudocode for the recursive algorithm:

    function C (r, c)
        if c < 1 or c > N then
            return -∞
        else if r = 1 then
            return Cash [r, c]
        else
            return max (C (r - 1, c - 1), C (r - 1, c), C (r - 1, c + 1)) + Cash [r, c]
    end
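The recursive definition of C (r, c) can be tried out directly. The following is a minimal Python sketch, not the author's code: the function name `max_cash`, the list-of-lists board representation, and the 3x3 sample board are all assumptions made for illustration.

```python
import math


def max_cash(cash, r, c):
    """Maximum cash on any path ending at square (r, c), using 1-based indices.

    `cash` is an N x N list of lists (an assumed representation);
    cash[r - 1][c - 1] plays the role of Cash[r, c].
    """
    n = len(cash)
    if c < 1 or c > n:
        return -math.inf  # off the board, so never the best choice
    if r == 1:
        return cash[0][c - 1]  # starting row: only the square's own cash
    best_below = max(
        max_cash(cash, r - 1, c - 1),
        max_cash(cash, r - 1, c),
        max_cash(cash, r - 1, c + 1),
    )
    return best_below + cash[r - 1][c - 1]


# Hypothetical 3x3 board; row 1 is the first inner list.
board = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]
print(max_cash(board, 3, 3))  # best path ending at row 3, column 3
```

On this sample board the best path to (3, 3) collects 3 + 6 + 9 = 18.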

This algorithm works, but it has a problem: many of the same subproblems are solved multiple times. Fortunately, we can avoid this by computing the values upwards, starting from row 1.
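To see how often subproblems repeat, one can count how many times the recursion visits each square. This is a small illustrative sketch; the helper name `count_calls` and the choice of a 4x4 board with target square (4, 2) are assumptions, and only the call pattern of C (r, c) is mirrored, not the cash values.

```python
from collections import Counter


def count_calls(n, r, c, calls):
    """Mirror the recursion of C(r, c) on an n x n board, counting visits per square."""
    if c < 1 or c > n:
        return  # off-board calls are ignored in the count
    calls[(r, c)] += 1
    if r == 1:
        return
    for dc in (-1, 0, 1):
        count_calls(n, r - 1, c + dc, calls)


calls = Counter()
count_calls(4, 4, 2, calls)
print(sum(calls.values()), "calls for", len(calls), "distinct squares")
print("C(1, 2) was evaluated", calls[(1, 2)], "times")
```

Even on this tiny board the recursion makes 33 on-board calls for only 12 distinct squares, and the gap widens quickly as N grows.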


Step 3 - Generating a solution bottom up

Instead of C being a function, we will use it as a 2D array that stores the maximum amount of money that can be picked up by going to (r, c). In our recursive algorithm we see that for every row r ≥ 2, we only need the values of row r - 1. This allows us to fill in row 1 first, then start at r = 2 and go up the money board.

Pseudocode for the bottom-up solution:

    function FindMaximumAmount
        for i : 1 to N
            C [1, i] = Cash [1, i]
            C [i, 0] = -∞
            C [i, N + 1] = -∞
        end
        for r : 2 to N
            for c : 1 to N
                C [r, c] = max (C [r - 1, c - 1], C [r - 1, c], C [r - 1, c + 1]) + Cash [r, c]
            end
        end
    end
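A possible Python rendering of the bottom-up table is sketched below. The name `find_maximum_amount`, the padded table layout, and the sample board are assumptions for illustration; the table keeps the 1-based indices of the pseudocode by leaving row 0 unused and using columns 0 and N + 1 as -∞ sentinels.

```python
import math


def find_maximum_amount(cash):
    """Fill C[r][c] (1-based) with the best total for any path ending at (r, c)."""
    n = len(cash)
    # n + 1 rows and n + 2 columns: row 0 is unused, and columns 0 and n + 1
    # stay at -infinity so the edge squares need no special case.
    C = [[-math.inf] * (n + 2) for _ in range(n + 1)]
    for c in range(1, n + 1):
        C[1][c] = cash[0][c - 1]  # starting row: just the square's own cash
    for r in range(2, n + 1):
        for c in range(1, n + 1):
            best_below = max(C[r - 1][c - 1], C[r - 1][c], C[r - 1][c + 1])
            C[r][c] = best_below + cash[r - 1][c - 1]
    return C


# Hypothetical 3x3 board; row 1 is the first inner list.
board = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]
table = find_maximum_amount(board)
print(table[3][1:4])  # best totals for each square in the last row
```

Each square is computed exactly once, so the work is proportional to N x N rather than growing exponentially with N.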

Example 2 - Coin Change Problem

Given N denominations d [1..N], determine the minimum number of coins required to make change for an amount A. We could use brute force and generate all possibilities, but the running time of that algorithm would be exponential. Since this problem has an optimal structure and shared subproblems, we will use dynamic programming.

Step 1 - Define the optimal structure for the problem.

In an optimal solution that makes change for A cents, for some value C ≤ A we can split the solution into a part that makes C cents and a part that makes A - C cents. The solution for C cents must itself be optimal: if there were a way to make C cents using fewer coins, we would replace that part with the better one and improve the whole solution. The same holds for the A - C cents.

Step 2 - Solve the problem recursively

Let MC (A) be a function that returns the minimum number of coins needed to make change for A cents. As we said in the optimal structure, there exists a value C which splits the coins optimally between 0..C and C+1..A. Let C be a value of d[k]. If d[k] is the optimal value, then MC (A) = 1 + MC (A - d[k]).


The problem is that we do not know which value of k gives the optimal value, so we check all possibilities for k. Also note that to make change for 0 cents we need 0 coins, so we can define the following recursive function.

$$
MC(A) =
\begin{cases}
0 & \text{if } A = 0 \\
\min_{k \,:\, d[k] \le A} \bigl(1 + MC(A - d[k])\bigr) & \text{if } A > 0
\end{cases}
$$

Pseudocode for the recursive algorithm:

    function MC (A)
        if A = 0 then
            return 0
        else
            minimum = ∞
            for k : 1 to N
                if (d[k] ≤ A) then
                    coins = 1 + MC (A - d[k])
                    if (coins < minimum) then
                        minimum = coins
                    end
                end
            end
            return minimum
        end
    end
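The recursive definition of MC (A) translates almost line for line into Python. This is a minimal sketch under assumptions: the function name `min_coins` and the sample denominations are hypothetical, and an amount that cannot be made at all is reported as infinity.

```python
import math


def min_coins(amount, denominations):
    """Minimum number of coins needed to make `amount`, by plain recursion."""
    if amount == 0:
        return 0  # zero cents needs zero coins
    minimum = math.inf
    for d in denominations:
        if d <= amount:
            # Use one coin of value d, then solve the smaller amount.
            coins = 1 + min_coins(amount - d, denominations)
            if coins < minimum:
                minimum = coins
    return minimum


print(min_coins(6, [1, 3, 4]))  # two coins: 3 + 3
```

With denominations 1, 3 and 4, always taking the largest coin first would give 4 + 1 + 1 (three coins), which is why every k has to be checked.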

Step 3 - Generating a solution bottom up

If you tried the recursive algorithm, you will have noticed that it takes a long time to solve this problem. This is because many subproblems are solved more than once. Fortunately, if we start from A = 0 and go up, we can compute the solution in O (A * N) time, where N is the number of denominations. By calculating the optimal number of coins for each integer amount in the range 1 to A, we build a table that holds the optimal solution for every amount from 1 to A. Let MC be a 1-D array which stores the minimum number of coins needed for each amount.


Pseudocode for the bottom-up solution:

    function CalculateMinimumCoins
        MC [0] = 0
        for i : 1 to A
            minimum = ∞
            for k : 1 to N
                if (d[k] ≤ i) then
                    if (1 + MC [i - d[k]] < minimum) then
                        minimum = 1 + MC [i - d[k]]
                    end
                end
            end
            MC [i] = minimum
        end
    end
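The bottom-up table could be written in Python roughly as follows. The function name `calculate_minimum_coins`, the decision to return the whole table, and the sample denominations are assumptions for illustration; amounts that cannot be made keep the value infinity.

```python
import math


def calculate_minimum_coins(amount, denominations):
    """Return a list mc where mc[i] is the minimum number of coins for i cents."""
    mc = [0] * (amount + 1)  # mc[0] = 0: zero cents needs zero coins
    for i in range(1, amount + 1):
        minimum = math.inf
        for d in denominations:
            # mc[i - d] is already final because i - d < i.
            if d <= i and 1 + mc[i - d] < minimum:
                minimum = 1 + mc[i - d]
        mc[i] = minimum
    return mc


table = calculate_minimum_coins(6, [1, 3, 4])
print(table)     # one entry per amount from 0 to 6
print(table[6])  # answer for the full amount
```

The two nested loops make the O (A * N) running time visible: one pass over the amounts, and for each amount one pass over the denominations.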

In conclusion, dynamic programming is a very effective tool for solving problems that have an optimal structure and shared subproblems. Dynamic programming can be difficult to master, but with more examples it becomes easier to see how to apply the dynamic programming steps to a problem.



Saad Ahmad

Vous aimerez peut-être aussi

- DynprogDocument18 pagesDynprogHykinel Bon GuartePas encore d'évaluation

- Dynamic Programming PDFDocument19 pagesDynamic Programming PDFSod AkaPas encore d'évaluation

- MCS 031Document15 pagesMCS 031Bageesh M BosePas encore d'évaluation

- Dynamic Programming Algorithms: Based On University of Toronto CSC 364 Notes, Original Lectures by Stephen CookDocument18 pagesDynamic Programming Algorithms: Based On University of Toronto CSC 364 Notes, Original Lectures by Stephen Cookshubham guptaPas encore d'évaluation

- Dynamic ProgrammingDocument74 pagesDynamic Programming21dce106Pas encore d'évaluation

- Algorithm Types and ClassificationDocument5 pagesAlgorithm Types and ClassificationSheraz AliPas encore d'évaluation

- Welcome To Our Presentation: Topics:Dynamic ProgrammingDocument25 pagesWelcome To Our Presentation: Topics:Dynamic ProgrammingSushanta AcharjeePas encore d'évaluation

- Chapter 12Document21 pagesChapter 12Perrioer MathewPas encore d'évaluation

- Multiplym 2Document23 pagesMultiplym 2adyant.gupta2022Pas encore d'évaluation

- Maximum profit locations along highwayDocument3 pagesMaximum profit locations along highwayAnamta KhanPas encore d'évaluation

- Dynamic ProgrammingDocument4 pagesDynamic ProgrammingAjit KushwahaPas encore d'évaluation

- DAADocument62 pagesDAAanon_385854451Pas encore d'évaluation

- Finals 2011 SolutionsDocument5 pagesFinals 2011 SolutionsRayner Harold Montes CondoriPas encore d'évaluation

- DAA MaterialDocument12 pagesDAA MaterialdagiPas encore d'évaluation

- Algorithms: A Brief Introduction Notes: Section 2.1 of RosenDocument6 pagesAlgorithms: A Brief Introduction Notes: Section 2.1 of RosenHorizon 99Pas encore d'évaluation

- Algorithms: A Brief Introduction Notes: Section 2.1 of RosenDocument6 pagesAlgorithms: A Brief Introduction Notes: Section 2.1 of RosenHorizon 99Pas encore d'évaluation

- XCS 355 Design and Analysis of Viva)Document43 pagesXCS 355 Design and Analysis of Viva)koteswarararaoPas encore d'évaluation

- 44 Dynamic ProgrammingDocument20 pages44 Dynamic ProgrammingTushar ChauhanPas encore d'évaluation

- Analysis of Algorithms NotesDocument72 pagesAnalysis of Algorithms NotesSajid UmarPas encore d'évaluation

- MGMT ScienceDocument35 pagesMGMT ScienceraghevjindalPas encore d'évaluation

- Design and Analysis of Algorithm Question BankDocument15 pagesDesign and Analysis of Algorithm Question BankGeo ThaliathPas encore d'évaluation

- Unit 3 - Analysis and Design of Algorithm - WWW - Rgpvnotes.inDocument11 pagesUnit 3 - Analysis and Design of Algorithm - WWW - Rgpvnotes.indixitraghav25Pas encore d'évaluation

- CS-DAA Two Marks QuestionDocument12 pagesCS-DAA Two Marks QuestionPankaj SainiPas encore d'évaluation

- Design and Analysis of AlgorithmDocument89 pagesDesign and Analysis of AlgorithmMuhammad KazimPas encore d'évaluation

- Graphical Method To Solve LPPDocument19 pagesGraphical Method To Solve LPPpraveenPas encore d'évaluation

- ACM ICPC Finals 2012 SolutionsDocument5 pagesACM ICPC Finals 2012 Solutionsbarunbasak1Pas encore d'évaluation

- Solutions Practice Set DP GreedyDocument6 pagesSolutions Practice Set DP GreedyNancy QPas encore d'évaluation

- Quntiative Techniques 2 SonuDocument11 pagesQuntiative Techniques 2 SonuSonu KumarPas encore d'évaluation

- CORE - 14: Algorithm Design Techniques (Unit - 1)Document7 pagesCORE - 14: Algorithm Design Techniques (Unit - 1)Priyaranjan SorenPas encore d'évaluation

- Maximum Subrectangle ProblemDocument3 pagesMaximum Subrectangle Problemzu1234567Pas encore d'évaluation

- Data Structure and AlgorithmsDocument57 pagesData Structure and AlgorithmsfNxPas encore d'évaluation

- Ones Assignment Method for Solving Traveling Salesman ProblemDocument8 pagesOnes Assignment Method for Solving Traveling Salesman Problemgopi_dey8649Pas encore d'évaluation

- Chapter 2Document29 pagesChapter 2Lyana JaafarPas encore d'évaluation

- Programacion Dinamica Sin BBDocument50 pagesProgramacion Dinamica Sin BBandresPas encore d'évaluation

- Greedy Method MaterialDocument16 pagesGreedy Method MaterialAmaranatha Reddy PPas encore d'évaluation

- Design and Analysis of Algorithm QuestionsDocument12 pagesDesign and Analysis of Algorithm QuestionskoteswararaopittalaPas encore d'évaluation

- Greedy AlgorithmDocument46 pagesGreedy AlgorithmSri DeviPas encore d'évaluation

- Unknown Document NameDocument18 pagesUnknown Document NameAshrafPas encore d'évaluation

- Daa Unit 3Document22 pagesDaa Unit 3Rahul GusainPas encore d'évaluation

- Weeks 8 9 Sessions 21 26 Slides 1 25 Algorithm 1Document26 pagesWeeks 8 9 Sessions 21 26 Slides 1 25 Algorithm 1Richelle FiestaPas encore d'évaluation

- Dynamic Programming - 1Document5 pagesDynamic Programming - 1nehruPas encore d'évaluation

- Dynamic Programming in Python: Optimizing Rod Cutting AlgorithmsDocument6 pagesDynamic Programming in Python: Optimizing Rod Cutting AlgorithmsGonzaloAlbertoGaticaOsorioPas encore d'évaluation

- Ads Unit - 4Document50 pagesAds Unit - 4MonicaPas encore d'évaluation

- Bubble Sort AlgorithmDocument57 pagesBubble Sort AlgorithmSanjeeb PradhanPas encore d'évaluation

- DAA Question AnswerDocument36 pagesDAA Question AnswerPratiksha DeshmukhPas encore d'évaluation

- HIR DA R IVE RS ITY IO T: SC Ho Ol of Me CH An Ica L ND Ust Ria L Ee Rin GDocument52 pagesHIR DA R IVE RS ITY IO T: SC Ho Ol of Me CH An Ica L ND Ust Ria L Ee Rin Ghaile mariamPas encore d'évaluation

- Dynamic ProgrammingDocument26 pagesDynamic Programmingshraddha pattnaikPas encore d'évaluation

- Lesson 03 Graphical Method For Solving L PPDocument19 pagesLesson 03 Graphical Method For Solving L PPMandeep SinghPas encore d'évaluation

- Dynamic ProgrammingDocument35 pagesDynamic ProgrammingMuhammad JunaidPas encore d'évaluation

- MollinDocument72 pagesMollinPhúc NguyễnPas encore d'évaluation

- Aiml DocxDocument11 pagesAiml DocxWbn tournamentsPas encore d'évaluation

- SE-Comps SEM4 AOA-CBCGS MAY18 SOLUTIONDocument15 pagesSE-Comps SEM4 AOA-CBCGS MAY18 SOLUTIONSagar SaklaniPas encore d'évaluation

- Lecture 35 Aproximation AlgorithmsDocument13 pagesLecture 35 Aproximation AlgorithmsRitik chaudharyPas encore d'évaluation

- Dynamic Programming1Document38 pagesDynamic Programming1Mohammad Bilal MirzaPas encore d'évaluation

- CS502 - Fundamental of Algorithm Short-Question Answer: Ref: Handouts Page No. 40Document8 pagesCS502 - Fundamental of Algorithm Short-Question Answer: Ref: Handouts Page No. 40mc190204637 AAFIAH TAJAMMALPas encore d'évaluation

- Matrix Chain MultiplicationDocument11 pagesMatrix Chain MultiplicationamukhopadhyayPas encore d'évaluation

- A Brief Introduction to MATLAB: Taken From the Book "MATLAB for Beginners: A Gentle Approach"D'EverandA Brief Introduction to MATLAB: Taken From the Book "MATLAB for Beginners: A Gentle Approach"Évaluation : 2.5 sur 5 étoiles2.5/5 (2)

- C ProgrammingDocument205 pagesC ProgrammingSrinivasan RamachandranPas encore d'évaluation

- Pathophysiology of Uremic EncephalopathyDocument5 pagesPathophysiology of Uremic EncephalopathyKristen Leigh Mariano100% (1)

- Alarm Management Second Ed - Hollifield Habibi - IntroductionDocument6 pagesAlarm Management Second Ed - Hollifield Habibi - IntroductionDavid DuranPas encore d'évaluation

- Supply Chain Management of VodafoneDocument8 pagesSupply Chain Management of VodafoneAnamika MisraPas encore d'évaluation

- Rapid ECG Interpretation Skills ChallengeDocument91 pagesRapid ECG Interpretation Skills ChallengeMiguel LizarragaPas encore d'évaluation

- Data Science From Scratch, 2nd EditionDocument72 pagesData Science From Scratch, 2nd EditionAhmed HusseinPas encore d'évaluation

- Product 243: Technical Data SheetDocument3 pagesProduct 243: Technical Data SheetRuiPas encore d'évaluation

- All Paramedical CoursesDocument23 pagesAll Paramedical CoursesdeepikaPas encore d'évaluation

- Course Code: Hrm353 L1Document26 pagesCourse Code: Hrm353 L1Jaskiran KaurPas encore d'évaluation

- Optra - NubiraDocument37 pagesOptra - NubiraDaniel Castillo PeñaPas encore d'évaluation

- P-H Agua PDFDocument1 pageP-H Agua PDFSarah B. LopesPas encore d'évaluation

- Express VPN Activation CodeDocument5 pagesExpress VPN Activation CodeButler49JuulPas encore d'évaluation

- MathsDocument27 pagesMathsBA21412Pas encore d'évaluation

- Applied SciencesDocument25 pagesApplied SciencesMario BarbarossaPas encore d'évaluation

- Vestax VCI-380 Midi Mapping v3.4Document23 pagesVestax VCI-380 Midi Mapping v3.4Matthieu TabPas encore d'évaluation

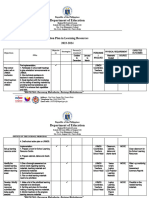

- aCTION PLAN IN HEALTHDocument13 pagesaCTION PLAN IN HEALTHCATHERINE FAJARDOPas encore d'évaluation

- Asset Valuation: Debt Investments: Analysis and Valuation: 1 2 N M 1 2 N MDocument23 pagesAsset Valuation: Debt Investments: Analysis and Valuation: 1 2 N M 1 2 N MSirSmirkPas encore d'évaluation

- Securifire 1000-ExtractedDocument2 pagesSecurifire 1000-ExtractedWilkeey EstrellanesPas encore d'évaluation

- Report On Indian Airlines Industry On Social Media, Mar 2015Document9 pagesReport On Indian Airlines Industry On Social Media, Mar 2015Vang LianPas encore d'évaluation

- List of Psychotropic Drugs Under International ControlDocument32 pagesList of Psychotropic Drugs Under International ControlRadhakrishana DuddellaPas encore d'évaluation

- Eladio Dieste's Free-Standing Barrel VaultsDocument18 pagesEladio Dieste's Free-Standing Barrel Vaultssoniamoise100% (1)

- CEA-2010 by ManishDocument10 pagesCEA-2010 by ManishShishpal Singh NegiPas encore d'évaluation

- Arsh Final Project ReportDocument65 pagesArsh Final Project Report720 Manvir SinghPas encore d'évaluation

- Ballari City Corporation: Government of KarnatakaDocument37 pagesBallari City Corporation: Government of KarnatakaManish HbPas encore d'évaluation

- VNACS Final Case ReportDocument9 pagesVNACS Final Case ReportVikram Singh TomarPas encore d'évaluation

- Regional Office X: Republic of The PhilippinesDocument2 pagesRegional Office X: Republic of The PhilippinesCoreine Imee ValledorPas encore d'évaluation

- IPIECA - IOGP - The Global Distribution and Assessment of Major Oil Spill Response ResourcesDocument40 pagesIPIECA - IOGP - The Global Distribution and Assessment of Major Oil Spill Response ResourcesОлегPas encore d'évaluation

- Teaching and Learning in the Multigrade ClassroomDocument18 pagesTeaching and Learning in the Multigrade ClassroomMasitah Binti TaibPas encore d'évaluation

- Airbus Reference Language AbbreviationsDocument66 pagesAirbus Reference Language Abbreviations862405Pas encore d'évaluation