Tips for improving INSERT performance in DB2 Universal Database

http://www.ibm.com/developerworks/data/library/tips/dm-0403wilkins/

Bill Wilkins (wilkins@ca.ibm.com), DB2 Vendor Enablement, IBM

Summary: Improving the performance of database inserts is very important to managing a database, especially in an OLTP environment. This article explains exactly what happens when an insert occurs, looks at alternatives, and examines issues that affect insert performance, such as locking, index maintenance, and constraint management. And, to back up his advice, the author includes performance measurement results.

Date: 11 Mar 2004
Level: Intermediate

Introduction

The insertion of rows is one of the most common and important tasks you will perform when using DB2 Universal Database (UDB). This article is a compilation of techniques for optimizing the performance of inserts, particularly high-volume inserts. As in almost any performance discussion, there are tradeoffs, and we'll discuss the tradeoffs that optimizing inserts can introduce. For example, a technique you use for inserts may require additional processing following the inserts, or may impact query performance.

I will provide some performance measurement results to give you an idea of the significance of many of the optimization techniques. Appendix A contains a numbered summary of the results, and throughout the article I'll refer to the results by their test numbers. The Conclusion section below summarizes the most beneficial techniques, and Appendix B lists all of the suggestions made in the paper.

Although we won't be examining complete details on how to implement the techniques, this information is available in the DB2 manuals unless otherwise indicated. Please go to the References for more details.

Overview of INSERT Processing

Let's start by taking a simplified look at the processing steps for an insert of a single row. Each of these steps has potential for optimization, which we'll discuss later in sequence.

1. The statement is prepared on the client. For dynamic SQL, this is done just before execution, and its performance is important; for static SQL the performance is virtually irrelevant since the prepare step is done in advance.
2. The column values for the row to be inserted are assembled on the client and sent to the DB2 server.
3. The DB2 server determines the page in which to insert the row.
4. DB2 reserves a slot in the buffer pool for the page. If it's using an existing page, it may be read from disk; if it's a new page, it may need to be physically allocated to the tablespace (if SMS, system managed space). Each page with inserted rows will eventually be written from the buffer pool to disk.
5. The row is formatted in the target page and an X (exclusive) row lock is obtained on it.
6. A record reflecting the insert is written to the log buffer.
7. The transaction containing the insert is (eventually) committed, at which point the log buffer record(s) are written to the log disk if they haven't already been.

There are also numerous types of additional processing that may take place, depending on the database configuration, for example, the existence of indexes or triggers. This extra processing can be highly significant to performance and will be discussed later.

Alternatives to inserts

Before we discuss optimizing inserts in detail, let's consider the alternatives to inserts: load and import. The import utility is essentially a front end for SQL INSERTs, but some of its capabilities may be useful to you. Load also has useful extra features, but the main reason for using load instead of inserts would be improved performance. We won't discuss import further here, but see the References section below for a pointer to the Data Movement Utilities Guide discussion of how import and load differ.

Load formats data pages directly, while avoiding most of the overhead of individual row processing that inserts incur (for example, logging is virtually eliminated). Also, load can better exploit parallelism on multiprocessor machines. Two new capabilities in V8 load are especially interesting in terms of being alternatives to insert: load from a cursor, and load from call level interface (CLI) applications.

Load from a cursor

This approach can be used in application programs (via the db2Load API), or DB2 scripts. As an example of the latter:

declare staffcursor cursor for select * from staff;
load from staffcursor of cursor insert into myschema.new_staff;

Those two lines could replace:

insert into myschema.new_staff select * from staff

Tests 6 and 9 in Appendix A showed that loading from a cursor provided almost 20% improvement over the equivalent INSERT ... SELECT statement.

Load from CLI

This approach is obviously limited to call level interface (CLI) programs, but it's very fast. The technique is very similar to array inserts (discussed later), and a sample is provided with DB2; see sqllib/samples/cli/tbload.c. Looking at test 79 in Appendix A, we see that using load can be over twice as fast as fairly optimized array inserts (test 71), and almost ten times faster than less optimized array inserts (such as test 69).

Areas of improvement for all inserts

Let's look at the essential steps of insert processing and the techniques you can use to optimize them.

1. Statement preparation

Being an SQL statement, an INSERT statement must be compiled by DB2 before it's executed. This can take place automatically (e.g., in CLP, or a CLI SQLExecDirect call), or explicitly (e.g., through an SQL Prepare statement, CLI SQLPrepare, or JDBC prepareStatement). This compilation process involves authorization checking, optimization, and other activities necessary to convert the statement into an executable form. When the statement is compiled, its access plan is stored in the package cache.

If the same INSERT statement is executed repeatedly, its access plan will (usually) be found in the package cache and the compilation cost is avoided. However, if the insert contains literal values that differ for each row, each statement is treated as unique and must be separately compiled. It is highly desirable to replace a repeated statement such as:

insert into mytable values (1, 'abc')
insert into mytable values (2, 'def')

etc. with one that has parameter markers and is prepared once and repeatedly executed:

insert into mytable values (?, ?)
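As a rough illustration of the two styles, the sketch below uses Python's standard-library sqlite3 module as a stand-in for DB2. That substitution is an assumption made only so the example runs without a DB2 server; SQLite happens to accept the same ? marker syntax, but its statement caching is not DB2's package cache. The column names and sample rows are hypothetical.

```python
import sqlite3

# sqlite3 is only a stand-in for a DB2 connection (assumption for runnability).
conn = sqlite3.connect(":memory:")
conn.execute("create table mytable (id integer, name text)")

rows = [(1, "abc"), (2, "def"), (3, "ghi")]

# Style to avoid: literal values make every statement text different,
# so each one has to be compiled separately.
for row_id, name in rows:
    conn.execute(f"insert into mytable values ({row_id}, '{name}')")

# Preferred style: one statement text with parameter markers,
# executed repeatedly with different values.
insert_sql = "insert into mytable values (?, ?)"
for row in rows:
    conn.execute(insert_sql, row)

conn.commit()
row_count = conn.execute("select count(*) from mytable").fetchone()[0]
```

Both loops insert the same three rows; only the second keeps the statement text constant so that a compiled plan can be reused.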

Comparing test 1 with test 2, and tests 61-64 with tests 65-68, we see that the use of parameter markers can make a series of inserts run several times faster. (Using host variables in a static SQL program would have a similar benefit.)

2. Sending column values to the Server

There are several different optimization techniques that fall into this category. The most important of them is to include more than one row per insert, which avoids costly per-row client-server communication, as well as DB2 overhead. The techniques available for doing multi-row inserts are:

- Include multiple row contents in the VALUES clause. For example, this will insert three rows:

  INSERT INTO mytable VALUES (1, 'abc'), (2, 'def'), (3, 'ghi')

- Use array insert in CLI. This involves preparing an INSERT statement with parameter markers, defining an array for storing the values to be inserted, binding the array to the parameter markers, and executing the insert once for each set of array contents. See the references in the CLI Guide and Reference for details; also, sample program sqllib/samples/cli/tbload.c has the basic framework for array inserts (but does a CLI LOAD instead). Comparing the times for tests 68 (which used 10x fewer rows), 71, 73, 74, and 78, we see that going from no array to an array of 1000 rows cut elapsed time by a factor of about 2.5. An array of at least 100 rows should be used whenever possible.
- Use batch operations in JDBC. This is based on the same concept as array inserts in CLI, but with different implementation details. After preparing the insert via the prepareStatement method, the remaining steps are to invoke the appropriate setXXXX method (for example, setString or setInt) for each column, followed by addBatch. Those steps are repeated for each row to be inserted, then executeBatch is invoked to perform the inserts. See the JDBC Tutorial in the References for an example.
- Use load to get the data into a staging table very quickly, then use INSERT ... SELECT to populate the main table. (See section "Increased parallelism through staging tables and other approaches" below.) The cost savings from this approach arise from the speed of load, coupled with the fact that INSERT ... SELECT transfers data within DB2 itself (on the server), eliminating communication costs. Usually you would not use this approach unless there's additional processing to be done in the INSERT ... SELECT that could not be done in the load itself.

If it's not possible for you to pass multiple rows in one insert, the next best thing is to combine multiple inserts into a group that all get passed together from the client to the server. (However, this implies that each insert will contain literal values and will thus be prepared, so performance will be substantially worse than if parameter markers were used, as discussed above in "Statement preparation".) Combining statements into one is done through Compound SQL:

- At the SQL level, a compound statement is created through the BEGIN ATOMIC or BEGIN COMPOUND statement.
- Within CLI, compound statements can be established through multiple SQLExecDirect and SQLExecute calls; see the CLI Guide and Reference Volume 1 for details.
- As of DB2 V8 FixPak 4, another approach to generating compound statements is by setting the statement attribute SQL_ATTR_CHAINING_BEGIN before issuing multiple SQLExecute calls (for a single prepared statement), and SQL_ATTR_CHAINING_END afterwards.

Here are some other suggestions on this topic:

- If possible, have the client running in the same code page as the database, to avoid conversion costs on the server. The database's code page can be determined by running "get db cfg for <database>".
- CLI will automatically perform data type conversions in some cases, but this comes with a (small) hidden performance penalty. Try to have the input values already in the most appropriate form for the column being inserted into.
- Minimize the insert-related setup costs in the application. For example, when using array inserts in CLI you should try to execute SQLBindParameter once per column for the entire set of inserts, not once for each set of array contents. These calls are not expensive on an individual basis, but they do add up.
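The array-insert and JDBC batch techniques above share one idea: prepare a statement with parameter markers once, then hand the database many rows per call. The sketch below shows that pattern in Python, with the standard-library sqlite3 module standing in for DB2 (an assumption made so the example is self-contained; it is not the CLI or JDBC API). The helper name insert_in_batches, the table layout, and the batch size of 1000 are illustrative choices.

```python
import sqlite3
from itertools import islice


def insert_in_batches(conn, rows, batch_size=1000):
    """Insert rows batch_size at a time; return the number of batches sent."""
    insert_sql = "insert into mytable values (?, ?)"  # one statement text, reused
    iterator = iter(rows)
    batches = 0
    while True:
        # Collect the next group of rows, analogous to filling a CLI array
        # or calling addBatch repeatedly in JDBC.
        batch = list(islice(iterator, batch_size))
        if not batch:
            break
        # One call carries the whole group, analogous to executeBatch.
        conn.executemany(insert_sql, batch)
        batches += 1
    conn.commit()
    return batches


# sqlite3 is only a stand-in for a DB2 connection (assumption for runnability).
conn = sqlite3.connect(":memory:")
conn.execute("create table mytable (id integer, name text)")

batches_sent = insert_in_batches(conn, ((i, f"row{i}") for i in range(2500)))
total_rows = conn.execute("select count(*) from mytable").fetchone()[0]
```

With 2500 rows and a batch size of 1000, the helper makes three calls instead of 2500 single-row executions.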

3. Finding where to store the row

DB2 uses one of three algorithms for deciding where to insert a row. (If Multi-dimensional Clustering (MDC) is in use, it's a different story, but we won't cover that here.) For details on the insert algorithms, see the referenced location in the DB2 V8 Administration Guide: Performance.

The default mode is for DB2 to search the Free Space Control Records (FSCRs) interspersed in a table's pages to find a page that has enough free space to hold the new row. Obviously, if there is relatively little free space on each page, much of the search time will be wasted. To counter this, DB2 provides the DB2MAXFSCRSEARCH registry variable to allow you to limit the search to fewer than the default of 5 pages.

The second algorithm is used when the table is placed in APPEND mode through ALTER TABLE. This avoids the FSCR search entirely, as rows are simply placed immediately at the end of the table.

The final algorithm is used when the table has a clustering index, in which case DB2 tries to insert each row on a page with similar key values (according to the clustering index). If there's no room there, it tries nearby pages, and if there's no room there either, it does an FSCR search.

If optimizing insert time is the only consideration, using APPEND mode is the fastest approach for bulk inserts, but its effect is not nearly as profound as that of many of the other optimizations discussed here; see tests 22 and 23. The next best approach should be the default algorithm, but changing the value of DB2MAXFSCRSEARCH had negligible impact in the test environment; perhaps in a less I/O constrained environment that change would have been significant.

Having a clustered index has a significantly negative impact on insert performance (tests 32 and 38 showed almost a 20% overhead), which is not surprising given that the purpose of such an index is to improve query (i.e., Select) performance by doing extra work at insert time.
If having a clustered index is needed, its effect on inserts can be minimized by ensuring that sufficient free space exists: use ALTER TABLE to increase PCTFREE, then REORG to reserve the free space. Allowing too much free space, however, could cause extra pages to be read for queries and largely defeat the purpose of using a clustering index. Alternatively, dropping a clustered index before bulk inserts, and recreating it afterwards, may be the optimal approach (there is no more overhead for creating a clustered index than a regular index, just extra overhead at insert time).

4. Buffer Pool, I/O, and Page Cleaning

Every insert is performed by storing the new row on a page and eventually writing that page to disk. Once the page is identified, as discussed above, it must be available in the buffer pool before rows can be added to it. For bulk inserts, most pages will be newly assigned to the table, so let's focus on the handling of new pages.

When a new page is required and the table is in a System Managed Storage (SMS) tablespace, by default each page is allocated individually from the filesystem. However, if the db2empfa command has been run against the database, each SMS tablespace has new pages allocated for it an extent at a time. Tests 11 and 82 show that running db2empfa can make inserts to SMS about twice as fast when the extent size is the default of 32 pages, because in test 82 there are 32 allocations of one page instead of the one allocation of 32 pages in test 11. Tests 11 and 83-85 show that extent sizes smaller than 32 make performance progressively worse because of the extra allocations, but suggest that extent sizes larger than 32 are unlikely to help much. The suggestion is to run db2empfa and use an extent size of 32.

With a Database Managed Storage (DMS) tablespace, space is pre-allocated when the tablespace is created, but extents of pages are assigned to tables during insert processing. The pre-allocation of space through DMS provided about a 20% benefit vs. SMS -- see tests 11 and 81. Test 81 used a DMS file tablespace, and a small additional benefit would probably have occurred if a DMS raw tablespace had been used. There was no apparent effect from varying extent size with DMS.

If there are indexes on the table, an entry is added to each index for each inserted row. This requires the appropriate index pages to be in the buffer pool. Later on we'll discuss index maintenance, but for now just keep in mind that the buffer pool and I/O considerations for inserts apply in a similar manner to index pages as they do to data pages.

As an insert progresses, more and more pages will be filled with inserted rows, but DB2 does not require any of the new or updated data or index pages to be written to disk in order for either the insert itself or the subsequent Commit to complete. (That's because of DB2's write-ahead logging algorithm. There's an exception that is covered below in the section on Logging.) However, the pages will need to be written to disk at some point, which could be as late as during database shutdown.

In general for bulk inserts, you will want asynchronous page cleaning to take place quite aggressively so that there are always free slots in the buffer pool for new pages. The rate of page cleaning, or the total absence of it, can cause large differences in timings which can make performance comparisons misleading.
For example, with a buffer pool of 100,000 pages and no page cleaning, a bulk insert may complete without any of the new or changed ("dirty") pages being written to disk, but subsequent operations (such as selects or even a database shutdown) may be greatly delayed while up to 100,000 dirty pages from the inserts are written to disk. On the other hand, if aggressive page cleaning is done in the same situation, the bulk insert may take longer but afterwards there will be fewer dirty pages in the buffer pool, resulting in better performance for subsequent tasks. It may not always be clear which of those results is truly better, but for very large bulk inserts it is usually impossible to store all of the dirty pages in the buffer pool, so effective page cleaning is a necessity for optimal performance.

For the best possible page cleaning:

- Reduce the value of the CHNGPGS_THRESH database configuration parameter from the default of 60 to a value as low as 5. This parameter determines the threshold percentage of dirty pages in the buffer pool at which page cleaning will be initiated.
- Try enabling the registry variable DB2_USE_ALTERNATE_PAGE_CLEANING (new in DB2 V8 FixPak 4). Setting this variable to ON provides a more proactive approach to page cleaning than the default approach (based on CHNGPGS_THRESH and LSN gap triggers). I have not measured its effect. See the FixPak 4 Release Notes for information.
- Ensure that the value of the NUM_IOCLEANERS database configuration parameter is set to at least the number of physical storage devices in the database.

As far as I/O itself is concerned, it can be minimized when there are indexes that need to be built, by using as large a buffer pool as possible (see the section "Index maintenance" below). If there are no indexes, a large buffer pool might not help much other than in delaying I/O; that is, it may allow all new pages to fit in the buffer pool, but eventually those pages will still need to be written to disk.
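To put the CHNGPGS_THRESH suggestion above in concrete terms, the short Python calculation below applies the percentage to the 100,000-page buffer pool from the earlier example. It is a back-of-the-envelope sketch only: the function name is hypothetical, and it ignores the other cleaning triggers (such as LSN gap) that the article mentions.

```python
def dirty_pages_before_cleaning(buffer_pool_pages, chngpgs_thresh_percent):
    """Dirty-page count at which threshold-based page cleaning would start."""
    return buffer_pool_pages * chngpgs_thresh_percent // 100


BUFFER_POOL_PAGES = 100_000  # buffer pool size used in the example above

# Default CHNGPGS_THRESH of 60 versus the suggested low value of 5.
default_trigger = dirty_pages_before_cleaning(BUFFER_POOL_PAGES, 60)
aggressive_trigger = dirty_pages_before_cleaning(BUFFER_POOL_PAGES, 5)

print(f"CHNGPGS_THRESH=60: cleaning starts near {default_trigger} dirty pages")
print(f"CHNGPGS_THRESH=5:  cleaning starts near {aggressive_trigger} dirty pages")
```

Under these assumptions the lower setting starts cleaning after 5,000 dirty pages rather than 60,000, which is what keeps free slots available during a long bulk insert.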

When I/O for writing pages does occur, it can be made faster by normal I/O tuning steps, such as:

- Spreading tablespaces over multiple containers (which map to separate disks).
- Using the fastest hardware and storage management configuration possible, which involves factors such as disk and channel speed, write caching, and parallel writes.
- Avoiding RAID5 (unless it's used with an efficient storage device such as Shark).

5. Locking

By default, each inserted row has an X lock held on it from the time the row is created until the insert is committed. There are two performance issues relating to inserts and locking:

- The CPU overhead to obtain and release locks, and
- Possible concurrency problems from lock conflicts.

For a well optimized bulk insert, the CPU cost of obtaining and later freeing an X lock on every row can be significant. The only alternative to a lock on every new row is a table lock (since there are no page locks in DB2). Tests 11 and 101 showed a 3% reduction in elapsed time when table locking was used. There are three ways to cause a table lock, which we'll now look at, deferring discussion of the disadvantages for a minute:

- Run ALTER TABLE <name> LOCKSIZE TABLE. This causes DB2 to use a table lock for all subsequent SQL statements using the table, until the locksize is changed back to ROW.
- Run LOCK TABLE <name> IN EXCLUSIVE MODE. This causes an X lock to be immediately taken on the table. Note that the lock will be freed at the next commit (or rollback), so if you're running a test where you commit every N rows, the LOCK TABLE statement will need to be re-executed after each Commit.
- Use default locking, but have small values for the LOCKLIST and MAXLOCKS database configuration parameters. After some small number of row locks are obtained, a lock escalation to a table lock will occur automatically.

The disadvantage of all of these, of course, is the concurrency impact: if an X lock exists on the table, no other application can access it unless that application uses isolation level UR (uncommitted read). If you know that exclusive access will not cause problems, by all means use table locking. However, even if you stick with row locking, keep in mind that during a bulk insert you may have thousands of new rows with X locks in the table, so there may be conflicts with any other applications using the table. These conflicts can be minimized in several ways:

- Make sure that lock escalations don't occur unintentionally. You may need to increase LOCKLIST and/or MAXLOCKS to allow enough locks for the insert application.
- Use isolation level UR for those other applications.
- With V8 FixPak 4, lock conflicts may also be reduced via the DB2_EVALUNCOMMITTED registry variable: with it set to YES, in many cases locks are obtained only on rows that qualify for a predicate, not on all rows that are examined.
- Issuing a COMMIT frees locks, so committing more frequently may provide sufficient relief from lock conflicts.

Notes

- There were concurrency issues involving inserts and next key locks in V7, but these have been virtually eliminated in V8 through type-2 indexes. If you are migrating to V8 you should be sure to use the REORG INDEXES command with the CONVERT keyword, to convert indexes from type-1 to type-2.
- W or NW locks are possible during inserts in V7, but in V8 only when type-1 indexes or isolation level RR are in use. Avoid these situations if possible.
- The lock taken by an insert (usually an X lock) is in general not affected by the isolation level; e.g., using UR will not prevent locks from being taken on the inserted rows. However, if you are using an INSERT ... SELECT, the isolation level will affect the locks obtained for the SELECT.

6. Logging

By default, each insert is logged for recovery purposes. Log records are written first to the log buffer in memory, and then to the log file, most commonly when the log buffer is full or a commit occurs. Optimizing the logging of bulk inserts is a matter of minimizing the number of log writes and making the writes as fast as possible.

The first thing to consider here is the log buffer size, controlled by the database configuration parameter LOGBUFSZ. It has a default value of 8 pages, or 32K, which is smaller than ideal for most bulk inserts. Let's take an example. For a bulk insert with 200 bytes logged per row, the log buffer will fill after about 160 rows have been inserted. If there are 1000 rows being inserted, there will be about six log writes due to the log buffer being filled, plus one for the commit. By raising the value of LOGBUFSZ to 64 (256K) or more, the log buffer will not fill, and there will only be one log write (at commit) for the bulk insert. Tests 104 and 105 showed about a 13% improvement from using a larger LOGBUFSZ. The downside of a larger log buffer is that crash recovery can take slightly longer.
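The log buffer arithmetic in the example above can be reproduced with a few lines of Python. This is a simplified model, not a description of DB2 internals: it assumes 4 KB log buffer pages (consistent with "8 pages, or 32K"), a fixed 200 bytes logged per row, and a log write only when the buffer fills or at commit. The function name is hypothetical.

```python
LOG_PAGE_BYTES = 4096  # assumption: LOGBUFSZ is counted in 4 KB pages


def estimate_log_writes(rows, bytes_logged_per_row, logbufsz_pages):
    """Return (rows that fit in the buffer, writes from fills, total writes)."""
    buffer_bytes = logbufsz_pages * LOG_PAGE_BYTES
    rows_per_buffer = buffer_bytes // bytes_logged_per_row
    writes_from_fills = rows // rows_per_buffer
    total_writes = writes_from_fills + 1  # plus one write at commit
    return rows_per_buffer, writes_from_fills, total_writes


# Default LOGBUFSZ of 8 pages versus the suggested 64 pages, 1000 rows inserted.
default_estimate = estimate_log_writes(1000, 200, 8)
larger_estimate = estimate_log_writes(1000, 200, 64)

print("LOGBUFSZ=8: ", default_estimate)
print("LOGBUFSZ=64:", larger_estimate)
```

Under this model the default buffer holds 163 rows (the "about 160" above) and yields six fill-driven writes plus the commit write, while a 64-page buffer holds 1310 rows and leaves only the write at commit.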
Another possibility for minimizing log writes is to use "ALTER TABLE <name> ACTIVATE NOT LOGGED INITIALLY" (NLI) for the table being inserted into. When that's done, none of the inserts will be logged for the duration of that same unit of work, but there are two important issues related to NLI:

- If there's a statement failure, the table will be marked as inaccessible and must be dropped. This and other recovery issues (see the SQL Reference discussion of Create Table) make NLI infeasible for many situations.
- The commit at the end of the unit of work cannot complete until all of the dirty pages involved in the unit of work have been written to disk. This means the commit can take a substantial amount of time. In fact, as shown by tests 6 and 7, if page cleaning is not done aggressively, the total elapsed time for insert plus commit can be longer with NLI. However, as test 8 showed, utilizing NLI with aggressive page cleaning can shorten the elapsed time substantially. If using NLI, keep your eye on the elapsed times of your commits.

As far as speeding up log writes is concerned, some possibilities are:

- Place the log on disk(s) that are separate from the tables being inserted into.
- Stripe the log across multiple disks at the operating system level.
- Consider a raw device for the log, but note that management is more difficult.
- Avoid RAID 5 due to its inappropriateness for write-intensive activity.

7. Committing

Commit forces log records to disk, thus ensuring that the inserts to that point are guaranteed to be in the database, and frees the locks on the new rows. Those are valuable activities, but since a Commit always involves a synchronous I/O (to the log) and insert doesn't, the overhead of a Commit can easily be higher than that of an insert. Therefore, committing after every row in a bulk insert environment is very bad for performance, so make sure you don't use autocommit (which is the default for CLI and the CLP). Committing after every 1000 rows or so is recommended: tests 61-78 show up to about a tenfold performance improvement when committing every 1000 rows instead of every one or two. Committing more than 1000 rows at a time will provide only small savings and increase recovery time if a failure occurs.

A slight amendment to the above: If the MINCOMMIT database configuration parameter has a value higher than 1 (the default), DB2 does not necessarily do a synchronous I/O for each commit, but waits and tries to share log I/O among a group of transactions. This is beneficial for some environments, but in general will have a neutral or negative effect on bulk inserts, so MINCOMMIT should be kept at 1 if bulk inserts are the key task being performed.

Optional areas of improvement

There are several types of processing that will occur automatically for an insert, when they apply. If your main objective is simply to minimize insert time, the simplest approach is to avoid all of these overheads, but that may not be desirable in the overall context. Let's discuss these in turn.

Index maintenance

For each row inserted, an entry must be added to each index (including any primary key index) on the table. There are two main costs for this process:

- The CPU cost to traverse each index tree, searching one page at each level, to find where the new entry must be stored (index entries are always stored in key sequence);
- The I/O cost to read all of the searched index pages into the buffer pool, and to eventually write each updated page to disk.

The worst-case scenario is one where there is significant random I/O during index maintenance. Suppose there are 10,000 rows being inserted, 50,000 leaf pages in the index, and 5000 pages in the index's buffer pool, and the key values for the rows being inserted are randomly spread over the entire key range. As many as 10,000 leaf pages (and possibly some non-leaf pages) will need to be brought into the buffer pool to be searched and/or updated, and there's only a 10% chance a given leaf page will already be in the buffer pool. The high probability of a disk read being necessary for each insert makes this scenario prone to poor performance.

On a per-row basis, it is much more expensive to add rows to an existing index than it is to create a new index. If you are inserting to an empty table, you should always create indexes after doing volume inserts. (Note that when you use load, indexes should be created beforehand.) If you are inserting to a populated table, dropping indexes before doing volume inserts, and recreating the indexes afterwards, can be the fastest approach, but only if a relatively large number of rows is being inserted -- probably more than about 10-20% of the table size.

You can help speed up index maintenance by using a large buffer pool for the index tablespace, and, if possible, ordering the inserts so that the key values are ordered, not random.
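The 10% figure in the worst-case scenario above comes from dividing the buffer pool size by the number of leaf pages. The Python sketch below works that out and estimates the resulting disk reads. It is a simplified model: it assumes uniformly random keys, a buffer pool holding a random 5000 of the 50,000 leaf pages at all times, and it ignores non-leaf pages. The function name is hypothetical.

```python
def estimate_leaf_page_reads(rows_inserted, leaf_pages, buffer_pool_pages):
    """Return (chance a leaf page is already buffered, expected disk reads)."""
    buffered_pages = min(buffer_pool_pages, leaf_pages)
    hit_probability = buffered_pages / leaf_pages
    # Each insert that misses the buffer pool costs one leaf-page read.
    expected_reads = rows_inserted * (leaf_pages - buffered_pages) // leaf_pages
    return hit_probability, expected_reads


# Figures from the scenario above: 10,000 rows, 50,000 leaf pages, 5000 buffered.
hit_probability, expected_reads = estimate_leaf_page_reads(10_000, 50_000, 5_000)

print(f"chance a leaf page is already in the buffer pool: {hit_probability:.0%}")
print(f"expected leaf-page disk reads for the inserts: {expected_reads}")
```

Under these assumptions roughly 9000 of the 10,000 inserts need a disk read, which is why a larger index buffer pool or ordered key values help so much.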

Tests 31-37 in Appendix A give some results for how insert time can vary with the number of indexes and when they're created. In a nutshell, inserts can take multiple times longer with four indexes than no indexes, and deferring index creation until after inserts can cut the total time (for inserts plus index creation) by from one-quarter to almost one-half. If maximizing insert performance is the key objective, increasing PCTFREE for the index may reduce the number of page splits that occur during the random insertion of index entries. This must be done carefully, however, because too much free space will mean an abundance of index pages, which could negatively impact query performance and even insert processsing itself. Constraint verification This category of overhead includes CHECK constraint verification and foreign key constraint (referential integrity (RI)) verification. CHECK constraints have quite low overhead (see tests 11-13 in Appendix A), largely because no I/O is needed for each row (since the value(s) to be checked are within the row, although there may be some computation to be done). Inserting a row when there are foreign keys is a different matter. For each foreign key, a lookup must be done in the parent table(s) to ensure that a parent row exists. Although this lookup is done via the primary key index, it does take CPU cycles to do the search, and possibly I/O to read index page(s) into the buffer pool. Tests 11, 14, and 15 show that having two foreign keys can more than double the insert time. In the same way that using CREATE INDEX after a table is populated is cheaper than building an index via individual INSERT statements, using an ALTER TABLE to create a foreign key (i.e., do the verification) as a bulk operation is cheaper than the total incremental cost of the verification during each insert. Comparing tests 14 and 16, and 15 and 17, we see that creating the foreign key(s) after inserts can reduce the total elapsed time by roughly 40%. 
If possible, before doing mass inserts into a table you should remove all constraints on it using ALTER TABLE, and recreate them afterwards (again using ALTER TABLE), unless you will be inserting fewer than about 10-20% of the rows in the table.

Some applications do their own checking to ensure that relationships between tables are valid; that is, they might read a parent row to make sure it exists before inserting a child row. If such checking is done correctly, having foreign key constraints defined in the database adds extra overhead. However, there are at least three reasons why defining the constraints in the database is better:

1. Applications are simpler without the checking.
2. Performance is slightly better if DB2 does the checking.
3. Having the constraints defined in the database lets DB2 know the relationships between tables, and in some cases allows it to select better access plans based on that knowledge.

If application checking can't be removed, the best alternative is to define the foreign keys in DB2, but with the NOT ENFORCED clause in CREATE TABLE or ALTER TABLE, so that the checking overhead is avoided but the optimizer can still use the relationship knowledge.

Trigger execution

If one or more insert triggers are defined on a table, each insert results in the execution of the actions prescribed in the trigger definition. Since those triggered actions are typically one or more INSERT, UPDATE, or DELETE statements, the overhead of triggers can be substantial in a mass insert operation. As shown by the results of tests 18-21 in Appendix A, adding triggers can make inserts several times slower. Unlike the temporary removal of indexes and constraints, however, application knowledge is required to know when it is acceptable to avoid trigger execution; that is, when it will not cause data integrity issues. Where it is acceptable, you may wish to temporarily disable triggers using one of the techniques described in the article listed in the References section.

Identity columns and sequence objects

These are two approaches to having DB2 automatically generate integer column values, typically during inserts. The main performance issue to be aware of for identity and sequence is that, for recoverability reasons, generated values must be logged. To reduce the log overhead, the values can be pre-reserved (cached), and one log record is written each time the cache is exhausted. The default is to cache 20 values. As the results of tests 41-51 in Appendix A show, not having a cache is extremely costly (about nine times slower than the default), while using a cache size larger than the default can cut the time by more than half and reduce the overhead of using Identity or Sequence to less than 20%. If you have a choice to make between using Identity or Sequence, Identity performs up to a few percent better.

Generated columns

When inserting rows into a table with one or more columns defined with a "generated as" clause, such as:

CREATE TABLE t1 (c1 CHAR(5), c2 CHAR(5) generated always as (UPPER(c1)))

additional overhead results from the function calls needed to establish the generated values. However, the overhead is very small and should not deter you from using this functionality.

Regeneration of "refresh immediate" materialized query tables (MQTs)

An MQT can be used to enhance query performance through the pre-computation of aggregate values. For example:

create table staffsum as (
    select count(salary) as salcount,
           sum(salary) as salsum,
           dept
    from staff
    group by dept
)
data initially deferred refresh immediate

If the MQT is defined as "refresh immediate", the aggregations in the MQT are recomputed at the time of each insert, so in general it is not a good idea to do volume inserts against a table with refresh immediate MQTs. However, DB2 optimizes the recomputation as best it can, such as by scanning the summary table instead of the entire base table. We suggest running Explain against inserts that involve MQTs, to be aware of what's happening behind the scenes.

Miscellaneous considerations

Partitioned tables (in DPF, formerly V7 EEE)

When inserting a row into a partitioned table (with the DB2 V8 Data Partitioning Feature (DPF)), the partitioning key value for the row is hashed to determine the partition that the row must be inserted into, and then DB2 sends the row to that partition. This one-row-at-a-time processing is slow, as we saw earlier for CLI when compared with array inserts, and is made worse by the need to send each row from the coordinator partition to the target partition.

To minimize the overhead you can use buffered inserts. With this approach, hashed rows destined for a given partition are placed in a buffer and sent to that partition as a group instead of one at a time. You enable buffered inserts with the "INSERT BUF" option of the Prep or Bind command. For details on buffered inserts, see the reference given for the Application Development Guide: Programming Client Applications. There is also a Developer Domain article on buffered inserts with Java (SQLJ only); see the References below.

In cases where the absolute maximum performance is required for repeated bulk inserts in a DPF environment, you may want to explore two related DB2 APIs. The first, sqlugtpi, lets your application get the partitioning information for a table. That information can then be used with the sqlugrpn API to find which partition number a row belongs to. You would use these APIs to group all data for a given partition together, then connect to that partition so that no transfer of data between partitions needs to be done, and repeat this for each partition. This approach can achieve very fast performance but does require some effort to ensure that it works correctly despite multiple data types, code pages, and so on.

Another problem that can arise with DPF inserts occurs when there are foreign key constraints on the table being inserted into (the child table). Suppose the parent and child tables have different partitioning keys.
Then each child row's parent will in general be on a different partition, so for most inserted rows, the parent verification check will need to cross from the child partition to the parent partition. Solutions for this include giving the parent and child tables the same partitioning key (which may not be the best choice for query performance), or, if multiple logical partitions are in use, setting the DB2 registry variable DB2_FORCE_FCM_BP to YES.

Increased Parallelism Through Staging Tables and Other Approaches

The use of a staging table can provide improved performance for some insert scenarios. Typically, instead of doing bulk inserts into a table, the LOAD command is used to load rows into a staging table; from there, INSERT ... SELECT can be used to insert rows into the main table. Both LOAD and INSERT ... SELECT are much faster than regular inserts, and even the two steps together are often faster than regular inserts. However, for performance alone, in V8 it's usually better to load directly into the main table, because LOAD doesn't have the concurrency restrictions it had in V7.

Aside from the massaging of data, the main reason you might still want to use a staging table in V8 is to break up the bulk insert into smaller pieces that can be run in parallel. On a system with multiple processors, each insert runs within a single DB2 agent and does not exploit more than one processor, even if the DBM INTRA_PARALLEL parameter is set to ON. For example, if you are inserting 1M rows on an 8-way machine, normal insert processing will typically not utilize much more than about 12% (100 / 8) of the CPU. (LOAD, on the other hand, automatically uses a much higher percentage of the CPU, which is another reason it is preferred over inserts.) Instead, you could load the 1M rows into a staging table, then run eight concurrent INSERT ...
SELECT statements, with predicates in the eight SELECTs chosen so that each retrieves a unique subset of about 1/8 of the rows in the staging table. Finally, you could run concurrent inserts through a multithreaded application, with each thread doing its own inserts.

For an INSERT ... SELECT, anything that makes the select faster may reduce the elapsed time of the whole statement, but it's beyond the scope of this document to go into much detail. Some possibilities are as follows. Note that these apply to the select portion only; the same factors would in general not help the insert portion.

- Adding indexes (not on the insert table!)
- Using a large buffer pool
- Using parallelism (INTRA_PARALLEL=YES and DFT_DEGREE > 1)
- Using isolation level UR (such as in a WITH UR clause)

Inserting LOB and LONG Columns

These types of columns are unique in that they are not cached in the buffer pool. Consequently, any insert that includes one or more of these columns results in them being written out to disk immediately, which in DB2 terminology is a "direct write". As you can imagine, this makes LOB/LONG inserts much slower than "normal" inserts: test 91, using a CLOB column, was over 9 times slower than the baseline test (11, with a CHAR column). Some optimization possibilities are:

- Change from LOB or LONG to VARCHAR. This allows buffer pool caching to take place. It may require putting the table in a tablespace with a large page size, such as 32K, because the page size must be large enough for all of the non-LOB and non-LONG columns to fit.
- Use SMS or DMS file tablespaces, which may allow operating system caching to negate some of the performance degradation.
- Use an optimal storage / hardware configuration for the LOB/LONG data.
- Try using the COMPACT and NOT LOGGED attributes for the LOB columns. They did not provide improvements in my small test, but may when substantial amounts of data are used.

Optimization class

For a simple insert with no constraints, changing the optimization class from the default (5) to 1 did not result in a significant change in performance, despite the fact that this changed the optimizer's algorithm to a cheaper one. There may be a benefit in using a lower optimization class when inserts are prepared frequently, involve constraints, or have selects.
Conversely, a small percentage of inserts that have a complex select in them will benefit from raising the optimization class from 5 to a higher value.

Inserting/updating a target table from source table (MERGE statement)

A fairly common database task is updating a target table from a source table. A specific case is to take each row in the source table and, if it does not already exist in the target table, insert it into the target; otherwise update the target row. In V8 you can use the MERGE statement to do this in one statement rather than many executions of separate statements, and performance should be better.

Monitoring and tuning inserts

As you attempt to monitor and tune inserts, the basic task is no different from that of most other performance analysis: identify what the bottleneck is and deal directly with it. Determining the bottleneck is first a matter of using operating system tools to look at CPU, I/O, memory, and network consumption. This should let you rule out certain areas and focus on one or two. It's beyond the scope of this paper to discuss operating system facilities further.

Try not to get sidetracked on extraneous issues. For example, if CPU utilization is at 100%, making a change to reduce I/O is probably not going to improve performance, and the lack of improvement may discourage you from making the same change later when it could be very useful, after the CPU bottleneck has been removed.

You should try to have the application report the rate of inserts at various points during the operation. For example, it is useful to know whether the number of inserts per second is the same at ten minutes into the run as it was at five minutes in. Often there is a brief initial slow period as the insert begins, then a fast period while the buffer pool fills up and no data page I/O is done. Then, when data pages start being written out, the rate can slow down somewhat, especially if page cleaning or the I/O subsystem is not optimal.

For very large bulk inserts there is typically a point at which a steady rate of inserts is reached. If this is not the case, it is typically because indexes are being built during the inserts, causing more and more index pages to be created over time and possibly requiring random I/O to read existing index pages for them to be updated. In that situation, a larger buffer pool is the best solution, but adding more free space to index pages may also help.

Now let's look at the key DB2 facilities to help you monitor and tune inserts: Snapshots, Event Monitoring, and Explain.
For more information on snapshots and event monitoring, see the System Monitor Guide and Reference; for Explain, see the Administration Guide: Performance.

Snapshot monitoring

Snapshot monitoring provides numerous pieces of information describing what's happening during insert processing. You can use the following steps to obtain all of the available information (or you can choose to get subsets of the information):

1. Use the UPDATE MONITOR SWITCHES command to turn on all of the switches.
2. Run RESET MONITOR ALL to reset counters. This makes it easier to compare different snapshots and look for differences over the course of a test.
3. Wait for a standard length of time, such as one minute or five minutes, and then issue GET SNAPSHOT FOR ALL ON <database>.
4. Repeat the previous two steps to get multiple snapshots to compare.

Most of the information relevant to inserts can be found in the database snapshot, and most of that information is also available with more granularity in the appropriate bufferpool, application, and tablespace snapshots. The following are the most pertinent lines in the database snapshot:

Buffer pool data writes                   = 500
Asynchronous pool data page writes        = 500
Buffer pool index writes                  = 0
Asynchronous pool index page writes       = 0
Total buffer pool write time (ms)         = 25000
Total elapsed asynchronous write time     = 25000
LSN Gap cleaner triggers                  = 21
Dirty page steal cleaner triggers         = 0
Dirty page threshold cleaner triggers     = 0
Update/Insert/Delete statements executed  = 100000
Rows inserted                             = 100000

Take a look at "Rows inserted" in consecutive snapshots to see if the rate of inserts varies during the course of a bulk insert. Most of the other values reflect the amount of I/O and the effectiveness of page cleaning. Regarding the latter, ideally you'll see that all of the data writes are asynchronous, and all of the buffer pool write time is asynchronous (as is the case in the above output); if not, try lowering CHNGPGS_THRESH and/or increasing NUM_IOCLEANERS.

Additional information on the insert can usually be found in the dynamic SQL snapshot. It can be very useful to look at the total elapsed time and compare that with the user and system CPU times. Most of the difference between the elapsed and CPU times should be taken up by I/O, so it may be clear where the bulk of the time is being spent and where to put your tuning effort.

Here's a subset of a dynamic SQL snapshot entry for a CLI array insert of 100,000 rows. Note that the "Number of executions" is one per row, even though the application sent only 1/10 of that number of arrays.

Number of executions            = 100000
Number of compilations          = 1
Rows written                    = 100000
Buffer pool data logical reads  = 102120
Total execution time (sec.ms)   = 13.830543
Total user cpu time (sec.ms)    = 10.290000
Total system cpu time (sec.ms)  = 0.130000
Statement text                  = INSERT into test1 values(?, ?, ?, ?, ?)

Some additional information to look at in snapshot output:

- "Lock waits" and "Time database waited on locks" -- Use these to see if the locks on inserted rows are causing concurrency issues for other applications.
- Table Snapshot -- "Rows Written" will reflect the number of rows inserted (or updated).

Event monitoring

The DB2 event monitor lets you obtain performance information on events as they occur on the server, such as statement or transaction completion. For the purpose of insert performance analysis, you may want to create an event monitor for statements and activate it during a period of insert executions. Although it may be overkill, the event monitor output will show the elapsed time of each insert statement. For OLTP-like applications, the overhead of running event monitoring for statements is quite high, and the output voluminous, so be careful not to have the monitor running for too long; even a few seconds can produce megabytes of output. You could write the monitor information to a table to facilitate analysis of the results, such as the performance trend over time.

The following is a condensed sample statement event from a series of 10,000 CLI array inserts of 10 rows each. There is one event per array, in this case per 10 rows.

17) Statement Event ...
  Appl Handle: 9
  Appl Id: *LOCAL.wilkins.0953D9033443
  ------------------------------------------
  Type     : Dynamic
  Operation: Execute
  Section  : 4
  Creator  : NULLID
  Package  : SYSSH200
  Text     : INSERT into test1 values(?, ?)
  ------------------------------------------
  Start Time: 01-28-2004 22:34:43.918444
  Stop Time:  01-28-2004 22:34:43.919763
  Exec Time:  0.001319 seconds
  Number of Agents created: 1
  User CPU: 0.000000 seconds
  System CPU: 0.000000 seconds
  Fetch Count: 0
  Rows read: 0
  Rows written: 10
  Internal rows deleted: 0
  Internal rows updated: 0
  Internal rows inserted: 0
  Bufferpool data logical reads: 10
  SQLCA:
    sqlcode: 0
    sqlstate: 00000
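An event like the one above comes from a statement event monitor. As a rough sketch of how such a monitor might be created and kept active only for a short test window, the statements below write the events to tables, as suggested earlier in this section. The monitor name insmon is hypothetical, and the names and layout of the target tables that DB2 creates for it should be checked in the System Monitor Guide and Reference.

```sql
-- Hypothetical monitor name: insmon.
-- Create a statement event monitor that writes its output to tables.
CREATE EVENT MONITOR insmon FOR STATEMENTS WRITE TO TABLE;

-- Activate the monitor just before the inserts start.
SET EVENT MONITOR insmon STATE 1;

-- ... run a short burst of the insert workload here ...

-- Deactivate the monitor promptly to limit overhead and output volume.
SET EVENT MONITOR insmon STATE 0;

-- Remove the monitor definition once the analysis is finished.
DROP EVENT MONITOR insmon;
```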

Explain

If the performance of your inserts is not as good as expected, it may be because there is "hidden" processing taking place. As discussed earlier, this processing could take such forms as index maintenance, constraint verification, or trigger execution. Running a form of Explain (such as Visual Explain, or the Explain statement plus db2exfmt) against your insert will reveal most of the extra processing (but not index maintenance). You may be able to eliminate the extra processing if it seems to be the cause of the performance problem.
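A minimal sketch of the Explain statement route is shown below. It assumes that the explain tables already exist in the database, and it uses a hypothetical two-column version of the test1 table with literal values; the captured plan would then be formatted by running db2exfmt from the operating system command line.

```sql
-- Assumes the explain tables have already been created in this database.
-- test1 is shown here as a hypothetical two-column table.
-- EXPLAIN captures the access plan; the insert itself is not executed.
EXPLAIN PLAN FOR
    INSERT INTO test1 VALUES (1, 'abc');
```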

As a simple example, the following graph (produced by db2exfmt) shows the access plan for an "Insert into Test1 values (?, ?, ...)". Can you guess what the extra processing is here? The answer is below.

                  Rows
                 RETURN
                 (   1)
                  Cost
                   I/O
                    |
                0.333333
                 TBSCAN
                 (   2)
                 28.2956
                    1
              /----+---\
           0.04           1
          FILTER    TABFNC: SYSIBM
          (   3)        GENROW
          28.2266
             1
             |
             1
          NLJOIN
          (   4)
          28.1268
             1
    /---------+--------\
    1                    1
 INSERT               IXSCAN
 (   5)               (   7)
 25.5248              2.60196
    1                    0
 /---+--\                |
1        116            120
TBSCAN  TABLE: WILKINS   INDEX: SYSIBM
(   6)  TEST1            SQL0401290925513
0.0048
   0
   |
   1
TABFNC: SYSIBM
GENROW

Before I give the answer: Explain is also very useful for INSERT ... SELECT statements. The inserts themselves could be very fast, but there may be an access plan problem for the SELECT which slows down the whole statement. This would be revealed by Explain.

Answer: The above access plan is for an insert into the child table of a foreign key relationship. There is a nested loop join (NLJOIN, step 4) of the insert (step 5) to an index scan (IXSCAN, step 7). The latter is actually a primary key lookup against the parent table and constitutes the foreign key constraint verification. In this case there's relatively little overhead from the extra processing: the cost of the index scan was estimated at 2.60196 timerons (timerons are cost units combining, primarily, CPU and I/O costs), while the insert itself had a cost of 25.5248 timerons. If there were a trigger, for example, you would see that reflected in one or more additional Insert, Update, or Delete entries in the access plan.

By the way, when you see "GENROW" in an access plan, it is a "generate row" step. It represents the creation of temporary rows for use in subsequent steps, and is not something you need to worry about.

Conclusion

In this paper we have presented numerous ways to improve insert performance. See Appendix B for a complete list of them. The following is the most important subset, and each of these can in some cases make performance over twice as fast:

- Use Load instead, if possible.
- Use parameter markers to avoid Prepare costs for each row.
- Issue a Commit every N rows, where N is a large number such as 1000. Definitely do not commit for every row, so watch out for autocommit situations.
- Insert an array of rows at a time.
- Minimize the presence of constraints, indexes, and triggers during the inserts.
- If using SMS tablespaces, run db2empfa.
- Optimize the use of "special features": buffered inserts with partitioned tables, a large cache for Identity and Sequence values.
We hope that this article has given you a good idea of what happens during DB2 insert processing, as well as how to monitor and improve its performance. References Search and download DB2 manuals DB2 V8 Data Movement Utilities Guide; Appendix B, Differences Between the Import and Load Utility. Since Import is fundamentally a front end for insert statements, this appendix can help you decide if you can use Load instead of inserts (or Import). DB2 V8 Application Development Guide: Programming Client Applications. Chapter 17 covers buffered inserts for partitioned databases. DB2 V8 Application Development Guide: Programming Server Applications. Chapter 6 covers the insertion of data to LOB columns. DB2 V8 Call Level Interface Guide and Reference (two volumes):

18 of 28

12/6/2013 4:17 PM

Tips for improving INSERT performance in DB2 Universal Database

http://www.ibm.com/developerworks/data/library/tips/dm-0403wilkins/

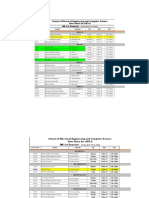

In Volume 1: Chapter 6 covers array operations, and in Chapter 7 there's a section "Importing Data with the CLI LOAD Utility in CLI Applications". Chapter 9 describes Compound SQL. Chapter 26 discusses data conversion. Volume 2 covers the CLI functions. Those that are most pertinent to optimizing inserts are SQLPrepare (for preparing an insert for execution), SQLBindParameter (for binding parameter markers to memory locations), SQLSetStmtAttr (for specifying array size), SQLExecute (for inserting an array of rows), SQLBulkOperations (an alternate method of doing array inserts), and SQLSetConnectAttr (for turning autocommit off). DB2 V8 System Monitor Guide and Reference. It describes the various monitor tools and the information they provide. DB2 V8 Administration Guide: Performance. Chapter 2 has a section called "insert process", which focuses on DB2's algorithms for deciding where to insert a row. The beginning of Chapter 3 has an extensive description of concurrency and locking. Chapter 13 covers the DBM and DB configuration parameters. DB2 V8 Command Reference. It provides details on all of the DB2 commands, such as GET SNAPSHOT and getevmon. DB2 V8 SQL Reference. Chapter 1 in Volume 2 describes the BEGIN ATOMIC, BEGIN COMPOUND, INSERT, and other statements. DB2 V8 Administrative API Reference. It provides details on the sqlugtpi and sqlugrpn API's. DB2 V8 FixPak 4 Release Notes talk about the new features in FixPak 4. Click here, choose the appropriate platform, then get to the Release.Notes directory and download the relnotes.pdf file. The process differs somewhat across the platforms. JDBC Tutorial. 
Section 3.4 discusses batch operations and gives examples for batch inserts.
- Data Management Developer Domain article on EEE buffered inserts with Java (SQLJ)
- Data Management Developer Domain article on Temporarily disabling triggers

Appendix A -- Performance measurements

This appendix illustrates the benefit of some of the optimization techniques discussed in this report (and the impact of non-optimal approaches). Each test was run at least twice and the results were consistent, but the results are not necessarily closely representative of those you would see in your environment. In particular, the results were obtained on a system that was more heavily I/O constrained than ideal, due to the user tablespace and log being in the same filesystem and on the same two disks. As a result, improvements that reduce CPU overhead would typically have more benefit than reported here.

The following apply to all of these tests, except if noted otherwise:

- DB2 V8 FixPak 4 on a small RS/6000 system.
- A local client was used.
- An SMS tablespace was used for user data, and the db2empfa command had been run against the database to establish multi-page file allocation.
- Tablespace page/extent/prefetch sizes were defaults (4K, 32, 32).
- The table being inserted into started out empty and had no indexes on it.
- All tests were done using array inserts in CLI, except for tests 1-2, 8, 61-68, and 79.
- There was a Commit for every 1000 rows.

Table 1. Effect of preparing statement and using array

| Test # | Insert method / Notes | # Rows | Elapsed secs. |
|---|---|---|---|
| 1 | CLI -- SQLExecDirect for each row | 10,000 | 180.63 |
| 2 | CLI -- Prepared statement but 1 row/insert | 10,000 | 92.05 |
| 3 | CLI -- Prepared statement and array insert (10 rows/insert) | 10,000 | 12.85 |

Tests 1-3 had autocommit on and thus had a Commit for each insert. That is, there was a Commit for each row in tests 1 and 2, and for every 10 rows in test 3. For the performance impact of Commit, see tests 61-78.

Table 2. Populating one table from another one

| Test # | Insert method / Notes | # Rows | Elapsed secs. |
|---|---|---|---|
| 6 | INSERT ... SELECT with logging | 100,000 | 15.66 |
| 7 | INSERT ... SELECT with NOT LOGGED INITIALLY | 100,000 | 16.43 |
| 8 | Same as test 7, but with CHNGPGS_THRESH = 5 | 100,000 | 11.80 |
| 9 | Load from a cursor (same Select as tests 6-8) | 100,000 | 12.95 |

Tests 6-9 timed the population of one table from another, including the time to Commit. Test 7 shows that using NOT LOGGED INITIALLY (NLI) actually caused a slowdown in performance, because of the new pages needing to be written to disk at Commit time: the Commit took over half of the time. However, with the more aggressive page cleaning in test 8, performance was improved substantially, mostly through the Commit time being reduced by over five seconds. Test 9 showed a nice 17% improvement over test 6 from using Load instead of INSERT ... SELECT, without the risk of NLI as in tests 7 and 8.

Table 3. Effects of check constraints, foreign keys and triggers

| Test # | Insert method / Notes | # Rows | Elapsed secs. |
|---|---|---|---|
| 11 | CLI -- Same as test 3, but 100K rows, commit 1000 | 100,000 | 31.51 |
| 12 | CLI -- same as test 11, but 1 check constraint | 100,000 | 33.65 |
| 13 | CLI -- same as test 11, but 2 check constraints | 100,000 | 36.63 |
| 14 | CLI -- same as test 11, but 1 foreign key (FK) | 100,000 | 55.37 |
| 15 | CLI -- same as test 11, but 2 foreign keys (FKs) | 100,000 | 72.71 |
| 16 | CLI -- same as test 14, but FK added after Inserts | 100,000 | 32.84 |
| 17 | CLI -- same as test 15, but FKs added after Inserts | 100,000 | 38.89 |
| 18 | CLI -- same as test 11, but 1 trigger doing Insert | 100,000 | 67.57 |
| 19 | CLI -- same as test 11, but 2 triggers doing Insert | 100,000 | 175.95 |
| 20 | CLI -- same as test 11, but 1 trigger doing Update | 100,000 | 54.71 |
| 21 | CLI -- same as test 11, but 2 triggers doing Update | 100,000 | 150.18 |
| 22 | CLI -- same as test 11, but with 1M rows | 100,000 | 282.02 |
| 23 | CLI -- same as test 22, but with APPEND ON | 100,000 | 281.64 |

Clearly the use of check constraints has a minor effect on performance, but foreign keys and triggers can be extremely significant. In tests 18 and 19, each trigger inserted one row into a separate table in the same tablespace as the base table, and this exposed an I/O bottleneck on the database and log disks. In tests 20 and 21, each trigger incremented an "insert count" column in a one-row table; although there were no additional rows written out as there were in tests 18 and 19, the update overhead and logging still caused the inserts to run significantly slower than without any triggers. Although standard database I/O tuning would have improved tests 18-21, the point is that having constraints in place during mass inserts is something to be avoided if possible.

Table 4. Effect of creating index before or after inserts

| Test # | Insert method / Notes | # Rows | Indexes | Elapsed secs. |
|---|---|---|---|---|
| 31 | CLI -- Same as test 3, but 100K rows, commit 1000 | 100,000 | 0 | 31.51 |
| 32 | CLI -- Same as test 31, but with 1 index | 100,000 | 1 | 53.73 |
| 33 | CLI -- Same as test 31, but with 2 indexes | 100,000 | 2 | 83.26 |
| 34 | CLI -- Same as test 31, but with 3 indexes | 100,000 | 3 | 108.21 |
| 35 | CLI -- Same as test 31, but with 4 indexes | 100,000 | 4 | 141.63 |
| 36 | CLI -- Same as test 35, but indexes created after inserts | 100,000 | 4 (*) | 73.75 |
| 37 | CLI -- Same as test 32, but index created after inserts | 100,000 | 1 | 39.44 |
| 38 | CLI -- Same as test 32, but index was clustered | 100,000 | 1 | 62.93 |

In tests 32-35 the indexes were created before the inserts, while in tests 36-37 the indexes were created after the inserts (and for the latter tests the elapsed times cover the inserts plus the CREATE INDEX statements).

Table 5. Using identity or sequence

| Test # | Insert method / Notes | # Rows | Elapsed secs. |
|---|---|---|---|
| 41 | CLI -- same as test 31 (no Identity or Sequence) | 100,000 | 31.51 |
| 42 | CLI -- same as test 41, but Identity column, no cache | 100,000 | 896.61 |
| 43 | CLI -- same as test 41, but Identity column, cache 5 | 100,000 | 212.52 |
| 44 | CLI -- same as test 41, but Identity column, cache 20 (default) | 100,000 | 99.06 |
| 45 | CLI -- same as test 41, but Identity column, cache 100 | 100,000 | 61.62 |
| 46 | CLI -- same as test 41, but Identity column, cache 1000 | 100,000 | 37.51 |
| 47 | CLI -- same as test 41, but Sequence, no cache | 100,000 | 896.92 |
| 48 | CLI -- same as test 41, but Sequence, cache 5 | 100,000 | 212.58 |
| 49 | CLI -- same as test 41, but Sequence, cache 20 (default) | 100,000 | 101.87 |
| 50 | CLI -- same as test 41, but Sequence, cache 100 | 100,000 | 66.55 |
| 51 | CLI -- same as test 41, but Sequence, cache 1000 | 100,000 | 39.55 |

Tests 41-51 show that using Identity or Sequence has a profoundly negative effect on performance if a small cache size, or no cache, is used, but using a large cache can make the overhead almost negligible.
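As a minimal sketch of the larger cache setting measured in tests 46 and 51, the statements below define an identity column and a sequence that each cache 1000 values. The table, column, and sequence names are hypothetical and are not the objects used in the tests.

```sql
-- Hypothetical table with an identity column that caches 1000 values,
-- so the generated values are logged once per 1000 inserts.
CREATE TABLE orders_id (
    order_id INTEGER GENERATED ALWAYS AS IDENTITY
             (START WITH 1, INCREMENT BY 1, CACHE 1000),
    amount   DECIMAL(9,2)
);

-- DB2 generates the identity value, so the column is not supplied.
INSERT INTO orders_id (amount) VALUES (19.99);

-- Hypothetical sequence with the same cache size.
CREATE SEQUENCE order_seq AS INTEGER
    START WITH 1 INCREMENT BY 1 CACHE 1000;

CREATE TABLE orders_seq (
    order_id INTEGER NOT NULL,
    amount   DECIMAL(9,2)
);

-- Each insert asks the sequence for its next value.
INSERT INTO orders_seq (order_id, amount)
    VALUES (NEXTVAL FOR order_seq, 19.99);
```

One tradeoff to keep in mind is that cached values that have not yet been used can be lost when the database is deactivated or fails, which leaves gaps in the generated numbers.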

Table 6. Tests showing effect of large arrays and large number of inserts per COMMIT

| Test # | Insert method / Notes | # Rows | Elapsed secs. |
|---|---|---|---|
| 1 | CLI -- SQLExecDirect for each row (repeated from above) | 10,000 | 183.55 |
| 61 | CLI -- same as test 1, but with a Commit every 5 rows | 10,000 | 118.41 |
| 62 | CLI -- same as test 1, but with a Commit every 10 rows | 10,000 | 114.23 |
| 63 | CLI -- same as test 1, but with a Commit every 100 rows | 10,000 | 103.43 |
| 64 | CLI -- same as test 1, but with a Commit every 1000 rows | 10,000 | 102.86 |
| 2 | CLI -- Prepared statement but 1 row/insert (repeated from above) | 10,000 | 92.05 |
| 65 | CLI -- same as test 2, but with a Commit every 5 rows | 10,000 | 22.34 |
| 66 | CLI -- same as test 2, but with a Commit every 10 rows | 10,000 | 20.78 |
| 67 | CLI -- same as test 2, but with a Commit every 100 rows | 10,000 | 10.11 |
| 68 | CLI -- same as test 2, but with a Commit every 1000 rows | 10,000 | 7.58 |
| 69 | CLI -- as test 3 (10x rows); array size 10, Commit per 10 rows | 10,000 | 118.18 |
| 70 | CLI -- same as test 69, but array size 10, Commit per 100 rows | 10,000 | 56.71 |
| 71 | CLI -- same as test 69, but array size 10, Commit per 1000 rows | 10,000 | 30.09 |
| 72 | CLI -- same as test 69, but array size 100, Commit 100 rows | 10,000 | 50.65 |
| 73 | CLI -- same as test 69, but array size 100, Commit 1000 rows | 10,000 | 24.27 |
| 74 | CLI -- same as test 69, but array size 1000, Commit 1000 rows | 10,000 | 23.43 |
| 75 | CLI -- same as test 69, but array size 2, Commit 2 rows | 10,000 | 471.82 |
| 76 | CLI -- same as test 69, but array size 2, Commit 10 rows | 10,000 | 155.21 |
| 77 | CLI -- same as test 69, but array size 2, Commit 100 rows | 10,000 | 74.82 |
| 78 | CLI -- same as test 69, but array size 2, Commit 1000 rows | 10,000 | 48.51 |
| 79 | CLI -- Using Load via SQLSetStmtAttr (array size 10) | 10,000 | 13.68 |

The above tests show the great benefit of using large arrays and committing a large number of rows at a time, with the latter being more important.

Table 7. Using SMS or DMS

| Test # | Insert method / Notes | # Rows | Elapsed secs. |
|---|---|---|---|
| 11 | CLI -- SMS with db2empfa run (repeated from above) | 100,000 | 31.51 |
| 81 | Same as 11 but with DMS file tablespace | 100,000 | 25.52 |
| 82 | Same as 11 but db2empfa NOT run (SMS) | 100,000 | 62.87 |
| 83 | Same as 11 but extentsize = 4 (and prefetchsize = 4) | 100,000 | 38.37 |
| 84 | Same as 11 but extentsize = 8 (and prefetchsize = 8) | 100,000 | 34.61 |
| 85 | Same as 11 but extentsize = 16 (and prefetchsize = 16) | 100,000 | 31.75 |

The above tests show that for SMS, running db2empfa is critical to good insert performance, and that using a smaller extentsize than 32 pages is detrimental. DMS is somewhat preferable to SMS.

Table 8. Impact of a CLOB column

| Test # | Insert method / Notes | # Rows | Elapsed secs. |
|---|---|---|---|
| 11 | CLI -- (repeated from above) | 100,000 | 31.51 |
| 91 | Same as 11 but with CHAR(10) column now CLOB(10) | 100,000 | 286.49 |

The above comparison shows the extreme impact of having a CLOB column, even a very short one. Variations of test 91 were tried, but the results were very similar, regardless of the choice of logged or not logged, or compact or not compact, for the CLOB column.

Table 9. Using table locks instead of row locks

| Test # | Insert method / Notes | # Rows | Elapsed secs. |
|---|---|---|---|
| 11 | CLI -- (repeated from above) | 100,000 | 31.51 |
| 101 | Same as 11 but with LOCKSIZE TABLE in use | 100,000 | 30.58 |

The above comparison shows that causing table locks to be used instead of row locks saved about 3% in elapsed time.

Table 10. Varying LOGBUFSZ

| Test # | Insert method / Notes | # Rows | Elapsed secs. |
|---|---|---|---|
| 104 | Same as test 71, but Commit 10K rows (LOGBUFSZ=8) | 100,000 | 28.53 |
| 105 | Same as 104 but with LOGBUFSZ = 256 | 100,000 | 24.91 |

Test 105 showed about a 13% improvement from raising LOGBUFSZ high enough that no log writes were forced by the log buffer filling up during the Inserts.

Table 11. Using multiple optimizations

| Test # | Insert method / Notes | # Rows | Elapsed secs. |
|---|---|---|---|
| 22 | CLI -- (repeated from above) | 1,000,000 | 282.02 |
| 111 | Same as 22 but with all optimizations (see below) | 1,000,000 | 160.45 |
| 1(*) | CLI -- (as test 1, but extrapolated to 1,000,000 rows) | 1,000,000 | ~18000.00 |
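The savings quoted for Tables 9 and 10, and the gap between the rows of Table 11, can be recomputed from the elapsed times. The sketch below is a simple arithmetic check; the helper names `pct_saved` and `extrapolate` are hypothetical, and the extrapolation assumes elapsed time scales linearly with row count, which is my assumption about how the starred figure was derived.

```python
def pct_saved(baseline_secs: float, tuned_secs: float) -> float:
    """Percentage of elapsed time saved relative to the baseline."""
    return 100.0 * (baseline_secs - tuned_secs) / baseline_secs


def extrapolate(secs: float, rows: int, target_rows: int) -> float:
    """Scale an elapsed time linearly to a different row count (assumed model)."""
    return secs * target_rows / rows


if __name__ == "__main__":
    # Elapsed times are copied from Tables 6, 9, 10, and 11.
    print(f"LOCKSIZE TABLE (11 vs 101):    {pct_saved(31.51, 30.58):.1f}% saved")
    print(f"LOGBUFSZ 256 (104 vs 105):     {pct_saved(28.53, 24.91):.1f}% saved")
    print(f"All optimizations (22 vs 111): {pct_saved(282.02, 160.45):.1f}% saved")
    t1 = extrapolate(183.55, 10_000, 1_000_000)
    print(f"Test 1 scaled to 1,000,000 rows: {t1:.0f} s, {t1 / 160.45:.0f}x test 111")
```

The first two results round to the 3% and 13% figures given in the text, and the linear scaling of test 1 lands close to the approximate 18,000 seconds shown in Table 11.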

Test 111 used all of the optimizations tried above as alternatives to the baseline: DMS instead of SMS, arrays of 1000 rows (vs. 10), committing every 10000 rows (vs. 1000), LOCKSIZE TABLE, APPEND ON, and LOGBUFSZ 256. Note that test 22 was already somewhat optimized as compared to the original baseline (test 1). The time for test 1 has been extrapolated here to 1,000,000 rows to show the vast difference in performance that is possible with only a few factors being changed.

Appendix B -- List of optimization suggestions for bulk inserts

Here are the suggestions discussed in more detail earlier in this paper. Keep in mind that these have varying degrees of impact and some will have a negative impact on tasks other than Inserts.

1. Prepare the Insert statement once, with parameter markers, and execute it multiple times. If Prepares are frequent, try a lower optimization class.
2. Have multiple rows per Insert, through CLI array or JDBC batch operations, and optimize the application by moving as much processing as possible outside of the array/batch loop.
3. Group Inserts together (Compound SQL).
4. Have the client application and the database use the same codepage.
5. Avoid data type conversions between client and database.
6. Avoid LOB and LONG columns; otherwise, see the suggestions for optimizing their use.
7. Use APPEND mode for the table or set DB2MAXFSCRSEARCH (registry) to a low value.
8. Avoid having any indexes on the table, if possible, but particularly not a clustering index.
9. Reserve an appropriate amount of free space on data pages (0 if using APPEND mode).
10. Reserve an appropriate amount of free space on index pages (more than 10% if random Inserts).
11. DMS tablespaces are best, but if using SMS, run db2empfa and use an extent size of 32 or more.
12. Use a large buffer pool, especially if indexes must be built during the Inserts.
13. Ensure effective page cleaning by lowering CHNGPGS_THRESH and increasing NUM_IOCLEANERS (DB CFG). Consider DB2_USE_ALTERNATE_PAGE_CLEANING (registry) in V8 FP4.
14. Spread data, indexes, and log over multiple disks, with the log on different disks from the others. Avoid RAID 5 for data, indexes, and log.
15. Use LOCK TABLE or ALTER TABLE to establish locking at the table level. If this has a concurrency impact, you may need to increase LOCKLIST and/or MAXLOCKS to ensure that an exclusive lock escalation does not occur.
16. Consider DB2_EVALUNCOMMITTED (registry, V8 FP4) to reduce lock impacts on other applications. Also, use isolation level UR for other applications to minimize lock impact on them.
17. Increase LOGBUFSZ (DB CFG).
18. Use ACTIVATE NOT LOGGED INITIALLY for the Insert table, but beware of recovery issues.
19. Commit after every N rows, where N is a large number such as 1000.
20. Minimize the triggers, CHECK and foreign key constraints, and generated columns for the table.
21. Avoid IDENTITY and SEQUENCE if possible, but otherwise use a large cache of values.
22. Avoid inserting to tables with "refresh immediate" MQTs based on them.
23. In a DPF environment, use buffered inserts and avoid inter-partition traffic.
24. Use Load and a staging table or a multithreaded application to achieve Insert parallelism.
25. For Insert ... Select, ensure that the Select portion is optimized.
26. Use Explain to discover "hidden" processing.
27. And, it goes without saying, use the fastest possible CPUs and disks.

About the author

Bill Wilkins is a senior technical specialist in the IBM Information Management Business Partner Enablement group in Toronto. Prior to this he worked in DB2 performance analysis in the IBM Toronto development laboratory for six years. He is an IBM Certified Advanced Technical Expert in DB2 UDB, has contributed to two books on DB2, and has over twenty-six years of experience in the IT industry.
