J Autism Dev Disord
DOI 10.1007/s10803-011-1400-0

ORIGINAL PAPER

Audiovisual Speech Perception and Eye Gaze Behavior of Adults with Asperger Syndrome

Satu Saalasti · Jari Kätsyri · Kaisa Tiippana · Mari Laine-Hernandez · Lennart von Wendt · Mikko Sams

© Springer Science+Business Media, LLC 2011

Abstract Audiovisual speech perception was studied in adults with Asperger syndrome (AS) by utilizing the McGurk effect, in which conflicting visual articulation alters the perception of heard speech. The AS group perceived the audiovisual stimuli differently from age-, sex- and IQ-matched controls. When a voice saying /p/ was presented with a face articulating /k/, the controls predominantly heard /k/. In contrast, the AS group heard /k/ and /t/ with almost equal frequency, but with large differences between individuals. There were no differences in gaze direction or unisensory perception between the AS and control participants that could have contributed to the audiovisual differences. We suggest an explanation in terms of weak support from the motor system for audiovisual speech perception in AS.

S. Saalasti (✉) · K. Tiippana
Institute of Behavioural Sciences, University of Helsinki, P.O. Box 9, 00014 Helsinki, Finland
e-mail: satu.saalasti@helsinki.fi

S. Saalasti · L. von Wendt
Department of Pediatric and Adolescent Medicine, Helsinki University Central Hospital, Helsinki, Finland

Present Address:
J. Kätsyri
Knowledge Media Laboratory, Center for Knowledge and Innovation Research (CKIR), Aalto University School of Economics, Aalto, Finland

J. Kätsyri · K. Tiippana · M. Sams
Mind and Brain Laboratory, Department of Biomedical Engineering and Computational Science (BECS), Aalto University School of Science, Aalto, Finland

M. Laine-Hernandez
Department of Media Technology, Aalto University School of Science, Aalto, Finland

Keywords Asperger syndrome · Autism spectrum disorders · Multisensory integration · Audiovisual speech · Eye gaze behavior · Perception

Introduction

Asperger Syndrome (AS) is a neurodevelopmental disorder belonging to the high-functioning end of Autism Spectrum Disorders (ASD). It manifests as impairments in social interaction and communication and as rigid or repetitive behavior (ICD-10, WHO 1992; DSM-IV, APA 1994; Klin et al. 2000). The face-to-face communication of individuals with AS is characterized by difficulties in initiating and/or sustaining a conversation, stereotyped use of language, atypical eye gaze behavior and difficulties in controlling the rate, rhythm and prosody of speech (Wing 1981; Frith 1992, 2004).

The question of whether deficient multisensory integration contributes to communication impairment in ASD has been raised in recent years (Iarocci and McDonald 2006; Oberman and Ramachandran 2008). Autobiographical reports and clinical observations have long suggested that individuals with ASD may have problems in combining information from different sense modalities (e.g. Grandin and Scariano 1992; Williams 1992; Attwood 1998). This has led to the development of intervention methods widely used in clinical practice (sensory integration theory, Ayres 1979). However, the current understanding of the nature of such problems is still limited because they have only been addressed in a few studies, as has been pointed out by Molholm and Foxe (2005) and Iacoboni and Dapretto (2006).

Multisensory perception is especially important for face-to-face communication, as we perceive speech with both our ears and our eyes. Viewing articulatory movements improves speech recognition, especially under noisy conditions (Sumby and Pollack 1954). Visual speech can also change the auditory speech percept, as it does in the McGurk effect, where a clear acoustic speech signal is combined with an incongruent visual articulation (McGurk and MacDonald 1976). In the best-known example, a voice saying /ba/ is presented with a face articulating /ga/. This combination is most commonly heard as /da/, which is taken to represent a fusion of the audio and visual components. It is also sometimes heard as /ga/, on the basis of the visual component. In both cases, vision has altered the auditory speech percept. The instances where this combination is heard as /ba/, in accordance with the audio component, are generally assumed to indicate that the components are not integrated, since vision does not seem to influence the speech percept. Consequently, it is common practice to express the strength of the McGurk effect in terms of responses in accordance with the audio component, and to interpret it as reflecting the strength of audiovisual integration. In this case, the fewer /ba/-responses an individual gives, the stronger the McGurk effect and the stronger the integration.

Several earlier studies on audiovisual speech perception in ASD have used this approach and have reported mixed results. Autistic children experience a weaker McGurk effect than do controls (De Gelder et al. 1991; Massaro and Bosseler 2003; Williams et al. 2004; Mongillo et al. 2008), which at first glance implies weaker visual influence on speech perception. However, the weaker visual influence may also be due to autistic children's poorer lip-reading skills (Massaro and Bosseler 2003; Williams et al. 2004). In other words, if their lip-reading ability is poor, it is understandable that vision should exert little influence on speech perception. Nevertheless, De Gelder et al. (1991) reported a weaker McGurk effect without any differences in lip-reading ability between autistic children and controls, suggesting that audiovisual speech perception is impaired in autistic children without any underlying unisensory deficits. It is known that high-functioning adolescents (aged 12–19 years) with ASD benefit less from congruent visual speech when listening to sentences embedded in background noise, but this too could be accounted for by poorer lip-reading skills (Smith and Bennetto 2007). In adulthood, high-functioning individuals with ASD differ from controls neither in the strength of the McGurk effect nor in their lip-reading ability (Keane et al. 2010).

Some of the differences in the results of the aforementioned studies could be explained by differences in the age of the participants. Taylor et al. (2010) studied 7–16-year-old children with ASD and found that the youngest of them were poorer at lip-reading and, additionally, displayed a weaker McGurk effect than their controls. However, there was some indication that the ASD children caught up with their control peers as they grew older, with the oldest children becoming more like the controls both in lip-reading and in the strength of the McGurk effect. In summary, previous research suggests that in ASD lip-reading and audiovisual speech perception might be more affected in childhood but improve with age.

The purpose of the present study was to investigate audiovisual perception of speech in ASD by examining the McGurk effect in adult individuals with AS. We hypothesized that the McGurk effect would be weaker for individuals with AS, as suggested by several studies (De Gelder et al. 1991; Massaro and Bosseler 2003; Williams et al. 2004; Mongillo et al. 2008; at the time when we designed the experiments, the studies by Keane et al. (2010) and Taylor et al. (2010), which would have suggested a null hypothesis, were not available). We also studied visual contributions to audiovisual speech perception by measuring lip-reading skills and by registering eye movements.

We used McGurk stimuli where an audio /apa/ was dubbed onto a visual /aka/ or a visual /ata/. We have used these stimuli in previous studies, and they elicit a McGurk effect of hearing the stimulus in accordance with the visual component in typically developed populations (Andersen et al. 2009; Tiippana et al. 2010). Therefore, we expected the control participants to hear "k" for the former stimulus (ApVk), and "t" for the latter stimulus (ApVt). Importantly, we expected the AS participants to hear these stimuli more frequently according to the audio component, so that they would give more "p"-responses than controls, demonstrating a weaker McGurk effect.

Eye movements were not recorded in any of the studies reviewed above, even though there is a known difficulty in attending to and using information from faces in ASD, and this could also affect speech perception.
The eye region is generally fixated on most when inspecting emotional faces, but adults with ASD tend to fixate more on the mouth (Baron-Cohen et al. 2001; Dalton et al. 2005; Neumann et al. 2006; Pelphrey et al. 2002; but see also Kirchner et al. 2010). Viewing more complex, dynamic social situations has revealed a similar tendency to focus on the mouth (Klin et al. 2002a) and, recently, increased fixations on the mouth have been found to be associated with communicative competence (Norbury et al. 2009). For speech stimuli, the preliminary findings of one study suggested that at least two children with ASD experienced less visual influence on heard speech even when their gaze was fixated on the talking face (Irwin 2007). It is not known whether eye gaze patterns differ between adults with ASD and controls during audiovisual speech perception. We addressed this issue by measuring eye gaze during speech perception.


Previous research on emotional and socially complex stimuli suggests that individuals with AS gaze less at the eyes and more at the mouth than do control participants (Baron-Cohen et al. 2001; Klin et al. 2002b; Pelphrey et al. 2002; Dalton et al. 2005; Neumann et al. 2006; Norbury et al. 2009). Alternatively, as the eye region is important for emotion recognition, it is possible that the previously found paucity of gaze in that region is due to a general avoidance of socially relevant stimulus features, rather than an avoidance of the eye region as such. In the case of speech perception, this same tendency to avoid socially relevant stimulation may lead participants with AS to avoid the mouth region, as it is the main source of visual communicative information for speech. If this should happen, the processing of the visual features of speech could be weaker for AS participants, since visual resolution decreases rapidly outside the point of fixation. Weaker visual processing could then be reflected as poorer lip-reading scores and diminished visual influence in audiovisual speech perception, i.e. as a weaker McGurk effect.

In summary, our main hypothesis was that participants with AS would have a weaker McGurk effect than controls. We also expected differences in the eye gaze patterns, so that the groups would differ in the amount of fixations on the mouth.

Methods

Participants

The participants were 16 adults (mean age 32, range 20–50) diagnosed with Asperger syndrome and recruited from the Hospital for Children and Adolescents at the Department of Child Neurology in Helsinki University Hospital and from NeuroMental, a private medical center. Their diagnosis was based on standard ICD-10 (World Health Organization 1992) and DSM-IV (American Psychiatric Association 1994) taxonomies. The additional methods used in the diagnostic procedure were ASSQ (Ehlers et al. 1999), ADI-R (Lord et al. 1994) and ADOS (Lord et al. 1989). The diagnoses were made by an experienced team of professionals (a child neurologist, a neuropsychologist and a registered nurse), and the diagnosis was only finalized after the team reached a consensus. The exclusion criteria in the study were schizophrenia, obsessive-compulsive disorders, ADHD and learning difficulties.

The control group consisted of 16 healthy adults (mean age 32, range 21–48) who were pair-wise matched for gender, age and IQ (WAIS-R) with the group diagnosed with AS (Table 1). The control group was screened for AS-like symptoms (ASSQ, Ehlers et al. 1999). All the participants were native speakers of Finnish who reported normal hearing and vision and were unaware of the purpose of the study. Written consent was obtained from all the participants and their costs were covered. The study was approved by the ethics committee of the Helsinki University Hospital and was performed in accordance with the ethical standards for human studies laid down in the 1964 Declaration of Helsinki.

Table 1 Demographic variables and IQ results for participants

Behavioral
                        AS (n = 16)    Controls (n = 16)    p value
                        M (SD)         M (SD)
Gender (male/female)    13/3           13/3
Age                     32 (10)        32 (8)               .93
Verbal IQ               111 (13)       118 (9)              .07
Performance IQ          114 (16)       115 (12)             .88
Full scale IQ           113 (14)       118 (9)              .29

Eye gaze
                        AS (n = 9)     Controls (n = 14)    p value
                        M (SD)         M (SD)
Gender (male/female)    7/2            12/2
Age                     33             33                   .84
Verbal IQ               104 (9)        118 (7)              .007
Performance IQ          110 (14)       116 (11)             .311
Full scale IQ           107 (8)        118 (8)              .026

The p values were obtained from the t test for independent samples

Stimuli

The stimuli were video clips of a female articulating meaningless vowel-consonant-vowel (VCV) stimuli. Unvoiced stop consonants were used, since the voiced consonants /b/ and /g/ are not original Finnish phonemes. The audio stimuli were the utterances /aka/, /apa/ and /ata/ (denoted as Ak, Ap and At). A blurred still image of the face was presented during audio-only trials. The visual stimuli were videos of a face articulating /aka/ and /ata/ (Vk and Vt). The audiovisual stimuli were created by dubbing an audio /apa/ onto either a visual /aka/ or a visual /ata/ (ApVk and ApVt), while preserving the original timing of the audio and video tracks, so that the visual articulation preceded the voice as in natural audiovisual speech, with the voice starting 200 ms after the opening of the mouth. The duration of the visual articulation was 1 s. Each stimulus presentation started with a 2 s static image of the face with the mouth closed. The final image of the video, which was a return to closed mouth, remained on the screen until the participant responded. The duration of the visual and audiovisual trials was thus approximately 4 s, depending on response time.

The experiment was run with Presentation software (Neurobehavioral Systems, version 9.70). The visual stimuli were presented on a computer screen (LG Flatron L1717S 17" LCD) where the angle subtended vertically by the face was 8 degrees. The sounds were played through loudspeakers (Roland Stereo Micro Monitor MA-8) placed symmetrically on each side of the screen. The audio, visual and audiovisual stimuli were presented in separate blocks, and the order of the blocks was pseudo-randomized.

Procedure

Before starting the experiment, the individual hearing threshold of each participant was determined by the standard staircase method (Wetherill and Levitt 1965). The audio stimuli were presented at varying sound intensity levels which changed according to the participant's responses: an incorrect response increased the sound intensity and three consecutive correct responses decreased the sound intensity. The hearing threshold was defined as the mean sound level at reversal points after eight such reversals, and the sound level used in the experiment was 10 dB above this threshold. The mean sound pressure level was 53.7 dBA (SEM 0.3 dBA) for AS participants and 54.3 dBA (SEM 0.4 dBA) for control participants; this small difference in sound levels was not significant. Visual acuity, measured with a standard near Snellen chart, was required to be at least 0.6 at 80 cm. The dominant eye was monitored with an eye tracker.

In the actual experiment, each visual, audio and audiovisual stimulus was presented 24 times in pseudo-randomized order. The participants responded by choosing "K", "P", "T" or "other" on a response box (Cedrus RB-400/RB-600), according to which consonant they heard for the audio and audiovisual stimuli, and according to what they saw the face articulating for the visual stimuli.
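The staircase rule described in the Procedure (one incorrect response raises the level, three consecutive correct responses lower it, and the threshold is the mean level at the reversal points) can be sketched as follows. This is a minimal illustration, not the software used in the study: the starting level, the step size, the reading of "after eight such reversals" as the mean over eight reversal levels, and the idealized listener in the usage lines are all assumptions.

```python
def run_staircase(respond, start_db=60.0, step_db=2.0,
                  n_reversals=8, max_trials=10_000):
    """1-up/3-down staircase; returns the mean level at the reversal points.

    respond(level) -> True for a correct response at that sound level.
    start_db and step_db are hypothetical values, not taken from the paper.
    """
    level = start_db
    correct_run = 0        # consecutive correct responses at the current level
    last_direction = 0     # +1 = level went up, -1 = level went down
    reversals = []         # levels at which the direction of change flipped

    for _ in range(max_trials):
        if len(reversals) >= n_reversals:
            break
        if respond(level):
            correct_run += 1
            if correct_run < 3:
                continue   # need three correct in a row before stepping down
            correct_run, direction = 0, -1
        else:
            correct_run, direction = 0, +1
        if last_direction != 0 and direction != last_direction:
            reversals.append(level)
        last_direction = direction
        level += direction * step_db

    if len(reversals) < n_reversals:
        raise RuntimeError("staircase did not converge within max_trials")
    return sum(reversals) / len(reversals)


def presentation_level(threshold_db, offset_db=10.0):
    """Level used in the experiment: 10 dB above the measured threshold."""
    return threshold_db + offset_db


# Idealized (hypothetical) listener who is always correct at or above 40 dB.
threshold = run_staircase(lambda level: level >= 40.0)
level_used = presentation_level(threshold)
```

With this deterministic listener the track oscillates between 38 and 40 dB, so the estimated threshold falls between the two levels; a real listener responds probabilistically and the reversal levels scatter accordingly.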
Eye gaze direction was monitored with an SMI iView X RED remote eye tracking system (www.smivision.com). The gaze position accuracy was 0.5–1°. At the beginning of each block, the eye tracker was calibrated by asking the participant to focus on nine marked screen locations. Due to technical errors, the eye gaze data of seven AS and two control participants were excluded. As a result, the analysis of the eye gaze data included 9 participants with AS and 14 control participants. Since this resulted in a low number of participants for these analyses, the eye-tracking results should be generalized with some caution.¹

¹ Note that for the behavioral results, all 16 participants of both groups were included in the analysis in order to maximize the group size. An analysis of the behavioral results of those 9 AS participants and 14 control participants who were included in the eye gaze analysis gave the same statistically significant results as the analysis with all 16 participants. The eye gaze results were similar when an analysis was conducted for the matched 9 AS and 9 control participant pairs.

Five elliptical regions of interest (ROI) were defined for eye gaze analysis. These centered on the left and right eye, the nose, the mouth and the whole face excluding the other ROIs ("other") (Fig. 1b). The mouth ROI was defined by the maximum opening of the mouth during an utterance. Fixation on these ROIs was calculated with SMI BeGaze analysis software (www.smivision.com). Fixation was defined as a continuous eye gaze position focused within an area of one degree of visual angle for a period greater than 100 ms. The eye fixation data obtained from the eye tracker were preprocessed in Matlab (www.mathworks.com). The analyses were conducted for the time period of visual articulation. Fixations outside the facial image during visual speech were rare and therefore excluded from the analyses (none in 7 AS and 13 control participants, and 1–6% in two AS and one control participant).

Fig. 1 A sample bitmap from a visual articulation video. The axes that delimit the regions of interest (ROI) ellipses used in the eye gaze analysis are also illustrated

To compare the differences in gaze behavior between the groups, we analyzed the percentage of fixations (%) and the mean amount of time spent fixating on each ROI during a repetition of the stimulus (gaze duration in ms). Because both the number of fixations and the gaze duration were non-independent and not normally distributed, their statistical significance across the predefined ROIs was tested with generalized estimating equations (GEE). The GEE model included modality (two levels: audiovisual and visual) and ROI (four levels: mouth, nose, eyes, and other) as within-subjects factors and the group (AS and control) as a between-subjects factor. The distribution of the variable was specified as the gamma distribution. A logarithmic link function was used to correct the distribution of the variable. In order to use the logarithmic transformation, the variables were shifted by adding one (1) to the original values. Because of multiple comparisons, the Bonferroni correction was applied.
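The ROI-based gaze measures described above (percentage of fixations per elliptical ROI, with fixations shorter than 100 ms or outside the face discarded) can be illustrated with a short sketch. The study itself used SMI BeGaze and Matlab; the code below is only an assumed re-implementation of the classification step, and every ellipse coordinate and sample fixation is hypothetical (units are degrees of visual angle, with the face spanning 8 degrees vertically as in the experiment).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ellipse:
    cx: float  # center x (deg)
    cy: float  # center y (deg)
    rx: float  # horizontal semi-axis (deg)
    ry: float  # vertical semi-axis (deg)

    def contains(self, x, y):
        return ((x - self.cx) / self.rx) ** 2 + ((y - self.cy) / self.ry) ** 2 <= 1.0


# Hypothetical ROI geometry; the paper does not report the ellipse parameters.
ROIS = [
    ("eyes", Ellipse(-2.0, 2.0, 1.0, 0.6)),   # left eye
    ("eyes", Ellipse(2.0, 2.0, 1.0, 0.6)),    # right eye
    ("nose", Ellipse(0.0, 0.0, 0.8, 1.2)),
    ("mouth", Ellipse(0.0, -2.5, 1.6, 0.9)),
]
FACE = Ellipse(0.0, 0.0, 3.0, 4.0)            # 8 deg tall face
LABELS = ("mouth", "nose", "eyes", "other")
MIN_FIXATION_MS = 100


def classify(x, y):
    """Return the ROI label for a fixation, or None if it is off the face."""
    for label, ellipse in ROIS:
        if ellipse.contains(x, y):
            return label
    return "other" if FACE.contains(x, y) else None


def fixation_percentages(fixations):
    """fixations: iterable of (x_deg, y_deg, duration_ms) tuples."""
    labels = [classify(x, y) for x, y, dur in fixations if dur > MIN_FIXATION_MS]
    on_face = [label for label in labels if label is not None]
    if not on_face:
        raise ValueError("no valid fixations on the face")
    return {label: 100.0 * on_face.count(label) / len(on_face) for label in LABELS}


SAMPLE = [
    (0.0, -2.5, 300), (0.5, -2.2, 250),  # mouth
    (0.0, 0.1, 200),                     # nose
    (-2.0, 2.0, 120),                    # left eye
    (0.0, 3.5, 150),                     # forehead, counted as "other"
    (10.0, 10.0, 110),                   # off the face, excluded
    (0.0, -2.5, 80),                     # too short to count as a fixation
]
percentages = fixation_percentages(SAMPLE)
```

The two eye ellipses share one label here because the GEE model treats "eyes" as a single ROI level; gaze duration per ROI could be accumulated the same way by summing the durations instead of counting fixations.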

Results

Behavioral Data

The audiovisual stimuli produced a McGurk effect, so that hearing was strongly influenced by the visual component (Fig. 2, top). The most common response was "k" to the ApVk stimulus (95% of responses in controls, 49% in AS participants) and "t" to the ApVt stimulus (100% controls, 99% AS). There were very few "p"-responses in either group (ApVk: 1% controls, 5% AS; ApVt: 0% controls, 3% AS).
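As an illustration of how such response percentages are obtained from the 24 repetitions of a stimulus, the sketch below tallies one participant's responses into the four response categories. The two response lists are invented for illustration and are not data from the study.

```python
from collections import Counter

CATEGORIES = ("k", "p", "t", "other")


def response_distribution(responses):
    """Percentage of responses in each category for one stimulus."""
    if not responses:
        raise ValueError("no responses given")
    counts = Counter(responses)
    unknown = set(counts) - set(CATEGORIES)
    if unknown:
        raise ValueError(f"unexpected response categories: {sorted(unknown)}")
    return {c: 100.0 * counts[c] / len(responses) for c in CATEGORIES}


# Two hypothetical participants responding to ApVk (24 repetitions each).
participant_a = ["k"] * 24                # hears "k" on every trial
participant_b = ["k"] * 12 + ["t"] * 12   # hears "k" and "t" equally often

dist_a = response_distribution(participant_a)
dist_b = response_distribution(participant_b)
```

Both hypothetical participants give no "p"-responses, so a measure based only on audio-consistent responses would rate them identically, even though their full response distributions differ.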

The unisensory stimuli were recognized well by both groups. The control participants recognized both visual stimuli 100% correctly; the recognition rates for the AS participants were 91% for Vk and 93% for Vt (Fig. 2, bottom). The control participants correctly recognized 100% of the audio /aka/ and /ata/ and 96% of the audio /apa/. The corresponding values for the AS participants were 99%, 94% and 98%.

There was a conspicuous difference in the perception of the ApVk stimulus between the groups. Control participants gave 95% "k"-responses, and only 4% "t"-responses. In contrast, responses in the AS group were distributed almost evenly between the categories "k" (49%) and "t" (46%). Consequently, the difference between the groups in "k"-responses [F(1,30) = 18.48, p < .001, η² = .38] and "t"-responses [F(1,30) = 19.28, p < .001, η² = .39] was statistically significant in ANOVA. However, the difference between the groups in audio-based "p"-responses was not significant (1% control, 5% AS; F(1,30) = 1.55, p = .223). Responses in the category "other" were nonexistent in the AS group and minimal in the control group (1%; not shown in Fig. 2).

Fig. 2 Mean response distributions (± SEM) of the AS and control groups for the audiovisual (ApVk, ApVt) and visual (Vk, Vt) stimuli. The asterisks denote significant differences between the groups

The response distributions of individual control subjects to the ApVk stimulus were homogeneous, consisting almost exclusively of "k"-responses. This was not true of the participants with AS, for whom the individual response distributions instead represented a continuum (Fig. 3), with some individuals with AS responding like the control participants, and others giving "t"-responses in various proportions.

Fig. 3 Response distributions of individual participants with AS for the audiovisual stimulus ApVk

In order to analyze the results for the entire set of stimuli, we used a profile analysis, because ceiling effects in the control group's performance (several cases of 100% responses in one category) precluded the use of a standard ANOVA. A difference variable was formed for each matched participant pair, where the control participant's result was subtracted from the result of the corresponding participant in the AS group. The dependent variable was the percentage value corresponding to the most common response for each stimulus. For unisensory audio and visual stimuli this was the correct response (e.g., "p" for the audio /apa/), and for audiovisual stimuli it was the response corresponding to the visual component (e.g., "k" for ApVk). The profile analysis was conducted using the SPSS function General Linear Model Repeated-Measures ANOVA, with the stimulus (ApVk, ApVt, Vk, Vt, Ak, Ap, At) as a within-subjects factor. The Bonferroni correction was used because multiple comparisons were performed. The analysis showed a statistically significant main effect for the stimulus [F(2, 32) = 11.28, p < .001, η² = .043]. Post hoc tests (contrasts) showed that this was due to the stimulus ApVk (p = .012), confirming the result described above. Post hoc tests were not significant for any other stimulus, indicating that there were no differences between the groups for the audiovisual ApVt or any unisensory stimuli.

Eye Gaze

In the preliminary analysis, the fixation patterns were found to be similar within audiovisual and visual stimulus conditions and, therefore, the eye gaze data were pooled across ApVk and ApVt as well as across Vk and Vt [i.e. there were no statistically significant effects between the stimuli: for the AV condition: Stimulus F(1,30) = .521, p = .48; Stimulus × ROI F(1,30) = 2.19, p = .11; for the V condition: Stimulus F(1,30) = .088, p = .77; Stimulus × ROI F(1,30) = 1.97, p = .90], and the pooled data were analyzed between the modalities (AV, V).

Overall gaze fixation distributions were similar in both groups (Table 2). The participants in both groups fixated most frequently on the mouth in both visual and audiovisual conditions, as shown by a significant main effect of ROI for the number of fixations [Wald χ²(3) = 165.8, p < .001]. Pair-wise comparisons confirmed that the effect was due to there being the greatest number of fixations on the mouth (p = .041) and the smallest on the eyes (p < .001) compared to the other ROIs. There were no other significant main effects or interactions.

The gaze duration analysis provided similar results. The Wald chi-square was significant for ROI [χ²(3) = 39.42, p < .001], but not for modality and group, or for their interaction. Pair-wise comparisons revealed that the significant ROI effect was due to there being the longest mean gaze time on the mouth (p = .027) and the shortest gaze time on the eyes (p < .001) compared to the other ROIs. The eye gaze analysis did not show any significant differences between the groups.

Table 2 The eye-tracking results for a subset of 9 AS and 14 control participants

Percentage of fixations (%)
          AV                      V
          AS        Controls      AS        Controls
Mouth     34 (8)    47 (10)       48 (10)   51 (10)
Nose      22 (5)    21 (6)        19 (7)    17 (6)
Eyes      15 (4)    7 (2)         5 (2)     5 (2)
Other     30 (4)    26 (6)        28 (5)    27 (7)

Gaze durations (ms)
          AV                      V
          AS        Controls      AS        Controls
Mouth     372 (118) 431 (115)     443 (140) 539 (144)
Nose      210 (66)  194 (52)      193 (61)  157 (42)
Eyes      57 (18)   44 (12)       17 (5)    34 (9)
Other     224 (71)  218 (58)      226 (72)  215 (58)

The percentage of fixations (%) and the cumulative gaze duration (ms, scaled for one stimulus presentation) on regions of interest for audiovisual (AV) and visual (V) stimuli. Standard error of the mean is presented in parentheses

Finally, due to the participant attrition in the eye gaze analyses there was a significant difference between the AS and control groups in verbal (VIQ, p = .007) and full-scale (FIQ, p = .026) intelligence (Table 1). Therefore, we investigated the association of these background factors with the gaze fixation results on the mouth and eye ROIs in a correlation analysis, and found no significant correlations. Furthermore, we conducted a correlation analysis for the behavioral results with the ApVk stimulus in order to investigate the potential association of VIQ and FIQ with the variability in perception. Again, neither factor correlated significantly with any of the response categories, with either the full or the reduced number of participants.

Discussion

The results of the present study revealed that adults with AS perceived audiovisual speech differently from typically developed matched controls. The groups did not differ in the strength of the McGurk effect, since the adults with AS did not hear the McGurk stimuli more frequently in accordance with the audio component than did the controls. Instead, there was a qualitative difference in audiovisual speech perception. When an acoustic /p/ was presented with a visual /k/, the control participants heard this McGurk stimulus predominantly in accordance with the visual component (as in our previous studies using the same stimuli; Andersen et al. 2009; Tiippana et al. 2010). In contrast, at a group level the participants with AS heard this stimulus almost equally often as /k/ and /t/. Moreover, the AS group was not homogeneous; rather, it represented a continuum in which some individuals behaved like controls but others heard an increasing proportion of /t/. There was no such difference in the perception of an acoustic /p/ presented with a visual /t/. Furthermore, no differences in unisensory perception or gaze behavior were found that could have accounted for the difference in audiovisual perception.

Our finding is similar to that of Keane et al. (2010), who reported that high-functioning adults with ASD experience a McGurk effect as strong as that of control participants. However, since their participants were asked to report whether the talker said "pa" (according to the audio stimulus) or something else, any qualitative differences in perception could not have been detected with this experimental design. Taylor et al. (2010) suggested that by adolescence, individuals with ASD have caught up with normally developing individuals in the strength of the McGurk effect. The qualitative perceptual difference in the current study could be a residual sign of more impaired audiovisual perception in childhood.

Tentatively, the results of the current study may be interpreted as a sign of weaker motor support for speech perception in AS. This interpretation may serve as an inspiration for neurophysiological studies, which can be designed to test it directly in the future. There is neurophysiological evidence that audiovisual speech perception is connected with speech production (Ojanen et al. 2005; Pekkola et al. 2006; Skipper et al. 2005), which supports motor theories of speech perception (e.g. Liberman and Mattingly 1985). According to the model of audiovisual speech perception developed by Skipper et al. (2007), the motor system influences phonetic interpretation via articulatory predictions, particularly through visual speech gestures which precede the auditory signal. Imaging studies have demonstrated deficient activation of the brain areas involved in speech production and the planning of articulation in individuals with AS (e.g. Nishitani et al. 2004). It has been suggested that the mirror neuron system (Rizzolatti et al. 1996) is impaired in ASD, so that the linkages between observed actions and the motor plans for those same actions are weaker (for reviews see Iacoboni and Dapretto 2006; Cattaneo et al. 2007). This may also result in multisensory deficiencies in ASD (Oberman and Ramachandran 2008).
We explain the current results within Skipper et al.'s (2007) model of audiovisual speech perception by making the further assumption that the motor system of some individuals with AS offers weaker support to speech perception than that of typically developing individuals. As a result of accurate articulatory predictions by the speech motor system in individuals with typical development, the evidence for the interpretation /k/ was the strongest for the ApVk stimulus in the control group and, consequently, the predominant percept was that of hearing /k/. However, for some individuals with AS the motor representations for phoneme production were poorer, so that the mapping of the sensory input to the motor commands resulted in less accurate articulatory predictions. Specifically, the visual gestures of articulating /k/ activated motor commands for both /k/ and /t/ in these individuals, giving support to the interpretations /k/ and /t/, but not to /p/. On the other hand, the audio /p/ stimulus provided the strongest evidence for the interpretation /p/, intermediate evidence for /t/, and the weakest evidence for /k/ (Smits et al. 1996). Consequently, the interpretation that was most consistent with both sources was /t/ for these individuals.

Similarly, we propose that as a result of accurate articulatory predictions, the evidence for the interpretation /t/ was strongest for the ApVt stimulus in the control group. Consequently, the predominant percept was that of hearing /t/. Again, for some participants with AS, the articulatory prediction was less clear, giving support to the interpretations /t/ and /k/, but not to /p/. As the audio signal contained strong evidence for /p/, intermediate evidence for /t/, but weak evidence for /k/, the interpretation that was most consistent with both sources was /t/, as was also the case for the control participants. This explains why the difference between the groups emerged in the case of the stimulus ApVk, but not for the stimulus ApVt.

We thus suggest that a deficient integration process involving the motor system results in perceptual differences in some individuals with AS. However, deficits are likely to also exist at other stages of processing.
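The verbal argument above can be made concrete with a toy calculation. The sketch below assumes that auditory and visual (motor-predicted) evidence are combined multiplicatively and that the percept is the interpretation with the largest product; this combination rule and all numerical values are assumptions chosen only to respect the orderings stated in the text (audio /p/: strongest for /p/, intermediate for /t/, weakest for /k/), not quantities estimated in the study or part of Skipper et al.'s model.

```python
# Hypothetical evidence values; only their rank order follows the text.
AUDIO_P = {"p": 0.6, "t": 0.3, "k": 0.1}

VISUAL = {
    # Accurate articulatory prediction: the seen consonant dominates.
    "typical": {
        "Vk": {"k": 0.9, "t": 0.1, "p": 0.0},
        "Vt": {"t": 0.9, "k": 0.1, "p": 0.0},
    },
    # Less accurate prediction: /k/ and /t/ are equally supported.
    "weak_motor": {
        "Vk": {"k": 0.5, "t": 0.5, "p": 0.0},
        "Vt": {"t": 0.5, "k": 0.5, "p": 0.0},
    },
}


def combined_support(audio, visual):
    """Normalized product of auditory and visual evidence per interpretation."""
    products = {c: audio[c] * visual[c] for c in audio}
    total = sum(products.values())
    return {c: value / total for c, value in products.items()}


def predicted_percept(listener, visual_stimulus):
    support = combined_support(AUDIO_P, VISUAL[listener][visual_stimulus])
    return max(support, key=support.get)


percepts = {
    (listener, stimulus): predicted_percept(listener, stimulus)
    for listener in VISUAL
    for stimulus in ("Vk", "Vt")
}
```

Under these assumed values the typical listener's predicted percept is /k/ for ApVk and /t/ for ApVt, whereas the listener with less accurate articulatory predictions is predicted to hear /t/ for both, which mirrors the pattern described for the subgroup of AS participants.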
What is certain is that the simplistic view outlined in the introduction (that audiovisual integration would be directly reflected in the percentage of responses in accordance with the audio component of a McGurk stimulus) is definitely incorrect. This is shown by the qualitative difference in perception in this study, and also by the work of Brancazio and colleagues (e.g. Brancazio and Miller 2005).

Individual differences between the AS participants in our study suggest that only a subgroup processes audiovisual speech differently from typically developed individuals. This heterogeneity within the AS population has been previously noticed (e.g. Kätsyri et al. 2008) and could be a sign of different phenotypes within the diagnostic group (Klin et al. 2002a). However, as the group size in the current study was relatively small, this observation should be generalized with caution.

The gaze fixation patterns were similar in both groups, with most fixations in the mouth region. Furthermore, the level of verbal ability was not associated with eye gaze behavior in AS. Previously, however, the level of verbal ability (Norbury et al. 2009) as well as communicative and social competence (Klin et al. 2002b; Norbury et al. 2009) have been found to be associated with eye gaze behavior in high-functioning individuals with ASD. Most likely, atypical viewing patterns did not become evident in the current study because the stimuli and task were simple: the participants only had to recognize consonants in short utterances (Buchan et al. 2007). Importantly, the eye gaze and lip-reading results support the view that the differences in audiovisual speech perception between the groups derive from processes other than attentional or visual perceptual processes.

In conclusion, many adults with Asperger syndrome perceive audiovisual speech in a manner that is qualitatively different from that of typically developed individuals. This may contribute to the difficulties they have in face-to-face communication, as many communicative cues are audiovisual, not only in speech perception, but also in turn-taking and understanding intentions (prosody and emotional expressions).

Acknowledgments We are obliged to the participants of the study. We would like to acknowledge the contribution of the anonymous reviewers of this paper, whose comments led to considerable improvements. We thank Tapani Suihkonen for participating in the preparation of the experiment, Tuomas Tolvanen for his help in preprocessing the eye gaze tracking data, and Jari Lipsanen for statistical advice. Eira Jansson-Verkasalo, Ph.D., and Minna Laakso, Ph.D., gave useful comments on the manuscript, and Taina Nieminen-von Wendt, MD, Ph.D., helped with her diagnostic expertise. We also thank Professor Pirkko Oittinen for providing access to the eye tracking equipment. The study was funded by Langnet, the Finnish Graduate School of Language Studies, and by the Jenny and Antti Wihuri Foundation. We dedicate this study to the memory of Professor Lennart von Wendt.

References

American Psychiatric Association. (1994). Diagnostic and Statistical Manual of Mental Disorders (DSM-IV) (4th ed.). Washington, DC: APA.
Andersen, T. S., Tiippana, K., Laarni, J., Kojo, I., & Sams, M. (2009). The role of visual spatial attention in audiovisual speech perception. Speech Communication, 51, 184–193.
Attwood, T. (1998). Asperger's syndrome: A guide for parents and professionals. London: Jessica Kingsley Publishers Ltd.
Ayres, A. J. (1979). Sensory integration and the child. Los Angeles: Western Psychological Association.
Baron-Cohen, S., Wheelwright, S., Hill, J., Raste, Y., & Plumb, I. (2001). The reading the mind in the eyes test revised version: A study with normal adults, and adults with Asperger syndrome or high-functioning autism. Journal of Child Psychology and Psychiatry, 42, 241–252.
Brancazio, L., & Miller, J. L. (2005). Use of visual information in speech perception: Evidence for a visual rate effect both with and without a McGurk effect. Perception & Psychophysics, 67(5), 759–769.
Buchan, J. N., Paré, M., & Munhall, K. G. (2007). Spatial statistics of gaze fixations during dynamic face processing. Social Neuroscience, 2(1), 1–13.
Cattaneo, L., Fabbri-Destro, M., Boria, S., Pieraccini, C., Monti, A., Cossu, G., et al. (2007). Impairment of actions chains in autism and its possible role in intention understanding. Proceedings of the National Academy of Sciences of the United States of America, 104(45), 17825–17830.
Dalton, K. M., Nacewicz, B. M., Johnstone, T., Schaefer, H. S., Gernsbacher, M. A., Goldsmith, H. H., et al. (2005). Gaze processing and neural circuitry of face processing in autism. Nature Neuroscience, 8(4), 519–526.
De Gelder, B., Vroomen, J., & van der Heide, L. (1991). Face recognition and lip-reading in autism. European Journal of Cognitive Psychology, 3, 69–86.
Ehlers, S., Gillberg, C., & Wing, L. (1999). A screening questionnaire for Asperger syndrome and other high-functioning autism spectrum disorders in school age children. Journal of Autism and Developmental Disorders, 29(2), 129–141.
Frith, U. (1992). Autism and Asperger syndrome. Cambridge: Cambridge University Press.
Frith, U. (2004). Emanuel Miller lecture: Confusions and controversies about Asperger syndrome. Journal of Child Psychology and Psychiatry, 45, 672–686.
Grandin, T., & Scariano, M. M. (1992). Minun tarinani: Ulos autismista [Emergence: Labelled autism]. Jyväskylän yliopiston täydennyskoulutuskeskus, Jyväskylä.
Iacoboni, M., & Dapretto, M. (2006). Mirror neuron system and the consequences of its dysfunction. Nature Reviews Neuroscience, 7, 942–951.
Iarocci, G., & McDonald, J. (2006). Sensory integration and the perceptual experience of persons with autism. Journal of Autism and Developmental Disorders, 36(1), 77–90.
Irwin, J. R. (2007). Auditory and audiovisual speech perception in children with autism spectrum disorders. Acoustics Today, 3(4), 8–15.
Kätsyri, J., Saalasti, S., Tiippana, K., von Wendt, L., & Sams, M. (2008). Impaired recognition of facial emotions from low-spatial frequencies in Asperger syndrome. Neuropsychologia, 46, 1888–1897.
Keane, B. P., Rosenthal, O., Chun, N., & Shams, L. (2010). Audiovisual integration in high functioning adults with autism. Research in Autism Spectrum Disorders, 4, 276–289.
Kirchner, J. C., Hatri, A., Heekeren, H., & Dziobek, I. (2010). Autistic symptomatology, face processing abilities, and eye fixation patterns. Journal of Autism and Developmental Disorders (Epub ahead of print). doi:10.1007/s10803-010-1032-9.
Klin, A., Jones, W., Schultz, R., Volkmar, F., & Cohen, D. (2002a). Defining and quantifying the social phenotype in autism. American Journal of Psychiatry, 159(6), 895–908.
Klin, A., Jones, W., Schultz, R., Volkmar, F., & Cohen, D. (2002b). Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Archives of General Psychiatry, 59, 809–816.
Klin, A., Volkmar, F. R., & Sparrow, S. S. (2000). Diagnostic issues in Asperger syndrome. In A. Klin, F. R. Volkmar, & S. S. Sparrow (Eds.), Asperger syndrome (pp. 25–71). New York: The Guilford Press.
Liberman, A. M., & Mattingly, I. G. (1985). The motor theory of speech perception revised. Cognition, 21, 1–36.
Lord, C., Rutter, M., Goode, S., Heemsbergen, J., Jordan, H., Mawhood, L., et al. (1989). Autism diagnostic observation schedule: A standardized observation of communicative and social behavior. Journal of Autism and Developmental Disorders, 19(2), 185–212.
Lord, C., Rutter, M., & Le Couteur, A. (1994). Autism diagnostic interview-revised: A revised version of a diagnostic interview for caregivers of individuals with possible pervasive developmental disorders. Journal of Autism and Developmental Disorders, 24, 659–685.
Massaro, D. W., & Bosseler, A. (2003). Perceiving speech by ear and eye: Multimodal integration by children with autism. Journal of Development and Learning Disorders, 7, 111–144.
McGurk, H., & MacDonald, J. W. (1976). Hearing lips and seeing voices. Nature, 264, 746–748.
Molholm, S., & Foxe, J. (2005). Look hear, primary auditory cortex is active during lip-reading. Neuroreport, 16, 123–124.
Mongillo, E. A., Irwin, J., Whalen, D. H., Klaiman, C., Carter, A. S., & Schultz, R. T. (2008). Audiovisual processing in children with and without autism spectrum disorders. Journal of Autism and Developmental Disorders, 38(7), 1349–1358.
Neumann, D., Spezio, M. L., Piven, J., & Adolphs, R. (2006). Looking you in the mouth: Abnormal gaze in autism resulting from impaired top-down modulation of visual attention. Scan, 1, 194–202.
Nishitani, N., Avikainen, S., & Hari, R. (2004). Abnormal imitation-related cortical activation sequences in Asperger's syndrome. Annals of Neurology, 55(4), 558–562.
Norbury, C. F., Brock, J., Cragg, L., Einav, S., Griffiths, H., & Nation, K. (2009). Eye-movement patterns are associated with communicative competence in autistic spectrum disorders. Journal of Child Psychology and Psychiatry, 50, 834–842.
Oberman, L. M., & Ramachandran, V. S. (2008). Preliminary evidence for deficits in multisensory integration in autism spectrum disorders: The mirror neuron hypothesis. Social Neuroscience, 3(3–4), 348–355.
Ojanen, V., Möttönen, R., Pekkola, J., Jääskeläinen, I. P., Joensuu, R., Autti, T., et al. (2005). Processing of audiovisual speech in Broca's area. NeuroImage, 25(2), 333–338.
Pekkola, J., Laasonen, M., Ojanen, V., Autti, T., Jääskeläinen, I. P., Kujala, T., et al. (2006). Perception of matching and conflicting audiovisual speech in dyslexic and fluent readers: An fMRI study at 3T. NeuroImage, 29(3), 797–807.
Pelphrey, K. A., Sasson, N., Reznick, J. S., Paul, G., Goldman, B., & Piven, J. (2002). Visual scanning of faces in autism. Journal of Autism and Developmental Disorders, 32, 249–261.
Rizzolatti, G., Fadiga, L., Gallese, V., & Fogassi, L. (1996). Premotor cortex and the recognition of motor actions. Cognitive Brain Research, 3, 131–141.
Skipper, J. I., Nusbaum, H. C., & Small, S. L. (2005). Listening to talking faces: Motor cortical activation during speech perception. NeuroImage, 25(1), 76–89.
Skipper, J. I., van Wassenhove, V., Nusbaum, H. C., & Small, S. L. (2007). Hearing lips and seeing voices: How cortical areas supporting speech production mediate audiovisual speech perception. Cerebral Cortex, 17(10), 2387–2399.
Smith, E. G., & Bennetto, L. (2007). Audiovisual speech integration and lipreading in autism. Journal of Child Psychology and Psychiatry, 48(8), 813–821.
Smits, R., ten Bosch, L., & Collier, R. (1996). Evaluation of various sets of acoustic cues for the perception of prevocalic stop consonants. I. Perception experiment. The Journal of the Acoustical Society of America, 100(6), 3852–3864.
Sumby, W. H., & Pollack, I. (1954). Visual contribution to speech intelligibility in noise. The Journal of the Acoustical Society of America, 26(2), 212–215.
Taylor, N., Isaac, C., & Milne, E. (2010). A comparison of the development of audiovisual integration in children with autism spectrum disorders and typically developing children. Journal of Autism and Developmental Disorders (Epub ahead of print). doi:10.1007/s10803-010-1000-4.
Tiippana, K., Hayes, E. A., Möttönen, R., Kraus, N., & Sams, M. (2010). The McGurk effect at various auditory signal-to-noise ratios in American and Finnish listeners. Proceedings of the AVSP2010, International Conference on Auditory-Visual Speech Processing (pp. 166–169).
Wetherill, G. B., & Levitt, H. (1965). Sequential estimation of points on a psychometric function. British Journal of Mathematical and Statistical Psychology, 18, 1–10.
Williams, D. (1992). Nobody nowhere. New York: Times Books.
Williams, J. H. G., Massaro, D. W., Peel, N. J., Bosseler, A., & Suddendorf, T. (2004). Visual-auditory integration during speech imitation in autism. Research in Developmental Disabilities, 25, 559–575.
Wing, L. (1981). The Asperger syndrome: A clinical account. Psychological Medicine, 11(1), 115–129.
World Health Organization. (1992). International classification of diseases, 10th revision (ICD-10). Geneva: WHO.

123

Vous aimerez peut-être aussi

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeD'EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeÉvaluation : 4 sur 5 étoiles4/5 (5794)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreD'EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreÉvaluation : 4 sur 5 étoiles4/5 (1090)

- Never Split the Difference: Negotiating As If Your Life Depended On ItD'EverandNever Split the Difference: Negotiating As If Your Life Depended On ItÉvaluation : 4.5 sur 5 étoiles4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceD'EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceÉvaluation : 4 sur 5 étoiles4/5 (894)

- Grit: The Power of Passion and PerseveranceD'EverandGrit: The Power of Passion and PerseveranceÉvaluation : 4 sur 5 étoiles4/5 (587)

- Shoe Dog: A Memoir by the Creator of NikeD'EverandShoe Dog: A Memoir by the Creator of NikeÉvaluation : 4.5 sur 5 étoiles4.5/5 (537)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureD'EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureÉvaluation : 4.5 sur 5 étoiles4.5/5 (474)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersD'EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersÉvaluation : 4.5 sur 5 étoiles4.5/5 (344)

- Her Body and Other Parties: StoriesD'EverandHer Body and Other Parties: StoriesÉvaluation : 4 sur 5 étoiles4/5 (821)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)D'EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Évaluation : 4.5 sur 5 étoiles4.5/5 (119)

- The Emperor of All Maladies: A Biography of CancerD'EverandThe Emperor of All Maladies: A Biography of CancerÉvaluation : 4.5 sur 5 étoiles4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingD'EverandThe Little Book of Hygge: Danish Secrets to Happy LivingÉvaluation : 3.5 sur 5 étoiles3.5/5 (399)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyD'EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyÉvaluation : 3.5 sur 5 étoiles3.5/5 (2219)

- The Yellow House: A Memoir (2019 National Book Award Winner)D'EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Évaluation : 4 sur 5 étoiles4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaD'EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaÉvaluation : 4.5 sur 5 étoiles4.5/5 (265)

- Cell Cycle Lesson PlanDocument4 pagesCell Cycle Lesson PlanJustine Pama94% (17)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryD'EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryÉvaluation : 3.5 sur 5 étoiles3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnD'EverandTeam of Rivals: The Political Genius of Abraham LincolnÉvaluation : 4.5 sur 5 étoiles4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealD'EverandOn Fire: The (Burning) Case for a Green New DealÉvaluation : 4 sur 5 étoiles4/5 (73)

- The Unwinding: An Inner History of the New AmericaD'EverandThe Unwinding: An Inner History of the New AmericaÉvaluation : 4 sur 5 étoiles4/5 (45)

- Mobilization NotesDocument239 pagesMobilization NotesSuganya Balachandran100% (6)

- Anesthesiology Resident Survival Guide 2014-2015Document32 pagesAnesthesiology Resident Survival Guide 2014-2015Karla Matos100% (1)

- Improving Visual Spatial Working Memory in Younger and Older Adults Effects of Cross Modal CuesDocument21 pagesImproving Visual Spatial Working Memory in Younger and Older Adults Effects of Cross Modal CuesFernando Ángel Villalva-SánchezPas encore d'évaluation

- Chun, A Taxonomy of ExteDocument33 pagesChun, A Taxonomy of ExteFernando Ángel Villalva-SánchezPas encore d'évaluation

- Executive Cognitive de Cits in Primary DystoniaDocument12 pagesExecutive Cognitive de Cits in Primary DystoniaFernando Ángel Villalva-SánchezPas encore d'évaluation

- Hagen - 8 4 2011Document439 pagesHagen - 8 4 2011Fernando Ángel Villalva-SánchezPas encore d'évaluation

- Disorder of CerebDocument12 pagesDisorder of CereblinakamargaPas encore d'évaluation

- Effect of Water Matrices On Removal of Veterinary Pharmaceuticals by Nanofiltration and Reverse Osmosis MembranesDocument9 pagesEffect of Water Matrices On Removal of Veterinary Pharmaceuticals by Nanofiltration and Reverse Osmosis MembranesFernando Ángel Villalva-SánchezPas encore d'évaluation

- Alexander Luria The Mind of A Mnemonist PDFDocument168 pagesAlexander Luria The Mind of A Mnemonist PDFDora100% (1)

- Anatomy and Aging of Amygdala and Hippocampus in ASDDocument10 pagesAnatomy and Aging of Amygdala and Hippocampus in ASDFernando Ángel Villalva-SánchezPas encore d'évaluation

- Increased Amygdala Respeose To Masked Emotional Faces in Depressed Subjects Resolves With Antidepressant Treatmen - An Fmri StudyDocument8 pagesIncreased Amygdala Respeose To Masked Emotional Faces in Depressed Subjects Resolves With Antidepressant Treatmen - An Fmri StudyFernando Ángel Villalva-SánchezPas encore d'évaluation

- Del Missier, F., Mäntylä, T. y Bruine de Bruin, W. (2010) - Executive Fuctions in Decision Making An Individual Differences Approach.Document29 pagesDel Missier, F., Mäntylä, T. y Bruine de Bruin, W. (2010) - Executive Fuctions in Decision Making An Individual Differences Approach.Fernando Ángel Villalva-SánchezPas encore d'évaluation

- Neurobiologia Del Trastorno de HiperactividadDocument11 pagesNeurobiologia Del Trastorno de HiperactividadFernando Ángel Villalva-SánchezPas encore d'évaluation

- M CHATInterviewDocument26 pagesM CHATInterviewFernando Ángel Villalva-SánchezPas encore d'évaluation

- Aging Control EjecutivoDocument25 pagesAging Control EjecutivoFernando Ángel Villalva-SánchezPas encore d'évaluation

- ADDISONS DSE - Etio Trends&Issues + DSDocument9 pagesADDISONS DSE - Etio Trends&Issues + DSgracePas encore d'évaluation

- 2018 Life Sciences Tsset (Answers With Explanation)Document24 pages2018 Life Sciences Tsset (Answers With Explanation)Swetha SrigirirajuPas encore d'évaluation

- 3 PBDocument12 pages3 PBDumitruPas encore d'évaluation

- Checklist FON 1Document27 pagesChecklist FON 1Hadan KhanPas encore d'évaluation

- Haemoglobin: DR Nilesh Kate MBBS, MD Associate ProfDocument31 pagesHaemoglobin: DR Nilesh Kate MBBS, MD Associate ProfMarcellia100% (1)

- Pathogenesis of AtherosclerosisDocument21 pagesPathogenesis of Atherosclerosishakky gamyPas encore d'évaluation

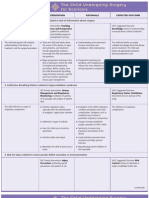

- NURSING CARE PLAN The Child Undergoing Surgery For ScoliosisDocument3 pagesNURSING CARE PLAN The Child Undergoing Surgery For ScoliosisscrewdriverPas encore d'évaluation

- GIT Physio D&R AgamDocument67 pagesGIT Physio D&R Agamvisweswar030406Pas encore d'évaluation

- CM All Compiled NotesDocument100 pagesCM All Compiled NotesKatrina Mae PatalinghugPas encore d'évaluation

- The Anterior Cranial FossaDocument4 pagesThe Anterior Cranial FossaArshad hussainPas encore d'évaluation

- Worksheet - Dna Protein SynthesisDocument2 pagesWorksheet - Dna Protein Synthesisapi-270403367100% (1)

- Nutritional Management of High Output Enterocutaneous FistulaDocument2 pagesNutritional Management of High Output Enterocutaneous FistulaAfra AmiraPas encore d'évaluation

- Animal Morphology (GeoZoo Topics)Document5 pagesAnimal Morphology (GeoZoo Topics)niravhirparaPas encore d'évaluation

- Alternative Modes of Mechanical Ventilation - A Review of The HospitalistDocument14 pagesAlternative Modes of Mechanical Ventilation - A Review of The HospitalistMichael LevitPas encore d'évaluation

- CD JMTE Vol I 2010 PDFDocument276 pagesCD JMTE Vol I 2010 PDFtoshugoPas encore d'évaluation

- Foliar FertilizerDocument7 pagesFoliar FertilizerDjugian GebhardPas encore d'évaluation

- Pharmacology Nursing ReviewDocument19 pagesPharmacology Nursing Reviewp_dawg50% (2)

- Our Senses: Seeing, Hearing, and Smelling The WorldDocument63 pagesOur Senses: Seeing, Hearing, and Smelling The WorldTyler100% (6)

- Anatomy and Physiology-REVIEWER-Practical ExamDocument12 pagesAnatomy and Physiology-REVIEWER-Practical ExamDeity Ann ReuterezPas encore d'évaluation

- Management of Clients With Disturbances in OxygenationDocument13 pagesManagement of Clients With Disturbances in OxygenationClyde CapadnganPas encore d'évaluation

- Electrolyte Imbalance 1Document3 pagesElectrolyte Imbalance 1Marius Clifford BilledoPas encore d'évaluation

- Chapter 3: Movement of Substance Across The Plasma MembraneDocument13 pagesChapter 3: Movement of Substance Across The Plasma MembraneEma FatimahPas encore d'évaluation

- Pathology - Cardiovascular SystemDocument17 pagesPathology - Cardiovascular SystemNdegwa Jesse100% (2)

- Circulatory System: Heart, Blood Vessels & Their FunctionsDocument17 pagesCirculatory System: Heart, Blood Vessels & Their FunctionskangaanushkaPas encore d'évaluation

- Physiological Changes During PregnancyDocument55 pagesPhysiological Changes During PregnancyKrishna PatelPas encore d'évaluation

- Six Elements and Chinese MedicineDocument182 pagesSix Elements and Chinese MedicinePedro Maia67% (3)