Académique Documents

Professionnel Documents

Culture Documents

Transact-SQL Programming - Sample Chapter

Transféré par

mbuyiselwaTitre original

Copyright

Formats disponibles

Partager ce document

Partager ou intégrer le document

Avez-vous trouvé ce document utile ?

Ce contenu est-il inapproprié ?

Signaler ce documentDroits d'auteur :

Formats disponibles

Transact-SQL Programming - Sample Chapter

Transféré par

mbuyiselwaDroits d'auteur :

Formats disponibles

Transact-SQL Programming -- Sample chapter

http://oreilly.com/catalog/wintrnssql/chapter/ch01.html

Transact-SQL Programming

By Kevin Kline, Lee Gould & Andrew Zanevsky 1st Edition March 1999 1-56592-401-0, Order Number: 4010 836 pages, $44.95, Includes CD-ROM

Sample Chapter 1: Introduction to Transact-SQL

In this chapter: SQL and the Introduction of Transact-SQL The Relational Database Model Normalization Row Processing Versus Set Processing The History of SQL Server What Is Transact-SQL? Summary Transact-SQL, an extension to the SQL database programming language, is a powerful language offering many features--a wide variety of datatypes, temporary objects, system and extended stored procedures, scrollable cursors, conditional processing, transaction control, exception and error handling, and much more. We'll introduce those features later in this chapter in the section "What is Transact-SQL?" Before getting to Transact-SQL specifics, however, we'll provide some background information that will help you get a feel for the overall database environment in which Transact-SQL operates. After we explain the basic differences between ANSI SQL and Transact-SQL, we'll jump back to more generalized topics. We'll cover the genesis of the relational database model and its impact on SQL programming languages. We'll talk a bit about normalizing data and introduce you to the idea of row-processing and set-processing information technology. We'll spend a little time talking about the history of SQL Server in general. Finally, we'll introduce many of the features of the Transact-SQL programming language itself.

1 of 23

9/13/2011 11:48 AM

Transact-SQL Programming -- Sample chapter

http://oreilly.com/catalog/wintrnssql/chapter/ch01.html

SQL and the Introduction of Transact-SQL

SQL, on which Transact-SQL is based, started life in the mid-1970s as an IBM product called SEQUEL. SEQUEL stood for Structured English Query Language. After a permutation or two and some legal problems, IBM changed the name to SQL--the Structured Query Language. The language was designed to provide a standard method for accessing data in a relational database. Ironically, although IBM introduced SQL, Oracle was the first to bring a SQL-using product to market. Today, many different relational database systems utilize SQL as the primary means for accessing and manipulating data. When the American National Standards Institute (ANSI) published a standard for the SQL language in 1989, they set a universal standard to which database vendors could adhere. Later, in 1992, ANSI released an update to the SQL standard, known as SQL-92. The standards helped formalize many of the behaviors and syntax structures of SQL. The ANSI standard covered lots of important details concerning the querying and manipulation of data. The syntax was formalized for many commands; some of these are SELECT, INSERT, UPDATE, DELETE, CREATE, and DROP. Unfortunately, the standards didn't address every facet of programming for a relational database. To meet the needs of their own user communities, database vendors began to extend the SQL language with capabilities that enhanced the basic functionality of SQL. The Transact-SQL language was introduced by Sybase to answer user requirements for programming extensions to SQL--extensions enabling conditional processing, error handling, declared variables, row processing, and numerous other functions. Even some of the simplest operations, like creating an index or performing a conditional operation, are extensions to the SQL language. Furthermore, many relational database products had been on the market for some time before a standard of any kind had been published. As a result, many developers began to implement their own extensions to the SQL language. In most cases, these extensions to SQL were incompatible from vendor to vendor. A program written in Oracle's dialect of SQL, for example, wouldn't run properly under Sybase or DB2 and vice versa unless it contained only the simplest ANSI-standard SQL statements.

The Relational Database Model

These days, relational database management systems (RDBMSs) like SQL Server and Sybase are the primary engines of information systems everywhere--particularly distributed client/server computing systems. Though RDBMSs are now common enough to trip over, it wasn't always that way. Not too long ago, you would probably trip over hierarchical database systems or network database systems or COBOL (heck, that still happens). Here's a quick-and-dirty definition for a relational database: a system whose users view data as a collection of tables related to one another through common data values. Perhaps you are interested in more than a quick-and-dirty definition? Here goes. The whole basis for the relational model follows this train of thought: data is stored in tables, which are composed of rows and columns. Tables of independent data can be linked, or related, to one another if all have columns of data that represent the same data value, called keys. This concept is so common as to seem trivial; however, it was not so long ago that achieving and programming a system capable of sustaining the relational model was

2 of 23

9/13/2011 11:48 AM

Transact-SQL Programming -- Sample chapter

http://oreilly.com/catalog/wintrnssql/chapter/ch01.html

considered a long shot with limited usefulness. Relational data theory was first proposed by E. F. Codd in his 1970 paper to the ACM entitled "A Relational Model of Data for Large Shared Data Banks." Soon after, Codd clarified his position in the 1974 paper to the Texas Conference on Computing Systems entitled "The Relational Approach to Data Base Management: An Overview." It was in this paper that Codd proposed the now legendary 12 Principles of Relational Databases. If a vendor's database product didn't meet Codd's 12-item litmus test, then it was not a member of the club. The good news is that rules do not apply to applications development; rather, these rules determine whether the database engine itself can be considered truly "relational." Nowadays, most RDBMSs--including both Microsoft and Sybase variants of SQL Server--pass the test. Codd's Rules for a Truly Relational Database System Are you curious about Codd's 12 Principles of Relational Databases? Don't be ashamed that you don't know them by heart; few on the SQL Server's development staff do, and no one on the marketing staff does. However, the few folks who do know these principles by heart treat them like religious doctrine and likely would be mortified by their "lightweight" treatment here: 1. 2. 3. 4. 5. 6. 7. 8. 9. 10. 11. Information is represented logically in tables. Data must be logically accessible by table, primary key, and column. Null values must be uniformly treated as "missing information," not as empty strings, blanks, or zeros. Metadata (data about the database) must be stored in the database just as regular data is. A single language must be able to define data, views, integrity constraints, authorization, transactions, and data manipulation. Views must show the updates of their base tables and vice versa. A single operation must be able to retrieve, insert, update, or delete data. Batch and end user operations are logically separate from physical storage and access methods. Batch and end user operations can change the database schema without having to re-create it or applications built upon it. Integrity constraints must be available and stored in the metadata, not in an application program. The data manipulation language of the relational system should not care where or how the physical data is distributed and should not require alteration if the physical data is centralized or distributed. 12. Any row processing done in the system must obey the same integrity rules and constraints that set-processing operations do. Transact-SQL and the SQL Server database management system accomplish all of these functions. There is some debate about why relational database systems won out over hierarchical and network database systems, but the following reasons seem self-evident: The relational high-level language interface is much simpler to learn and more intuitive than with nonrelational databases (you are, after all, reading this book as opposed to one on Xbase). Relational databases provide efficient and intuitive data structures that easily accommodate ad hoc queries. From phone books to hotel registries, relational databases (of a sort) are second nature to most people.

3 of 23

9/13/2011 11:48 AM

Transact-SQL Programming -- Sample chapter

http://oreilly.com/catalog/wintrnssql/chapter/ch01.html

Relational databases provide powerful integrity controls such as check constraints and referential integrity--thus providing higher-quality data. The RDBMS vendors combined slick marketing and adept hardware positioning to gain a market and mindshare advantage. One of the key risks you face when developing relational databases is their simplicity. They're just so easy. It is no chore to slap together a set of tables and columns for a database. Even assigning relationships between tables is not a big deal. The whole process of database creation can be accomplished so easily that many developers entirely skip the distinct and separate process of database design. There are literally volumes of work written about proper relational database design, which is beyond the scope of this text. But there are a few key concepts you must understand fully if you're going to leverage the power of relational databases and, hence, Transact-SQL. Prominent among them are the concept of normalization and the drastic difference in the set-processing behavior preferred by SQL Server versus the row-processing behavior of popular rapid application development (RAD) tools.

Normalization

There is an old urban legend that claims the term normalization entered the database design lexicon thanks to the late President Richard Nixon. It seems that the researchers developing relational theory in the early 1970s were searching for a term to describe the process of properly defining database tables and their relationships to one another. Coincidentally, the news media were full of stories about President Nixon "normalizing" the relationship between the United States and the People's Republic of China. Somewhere, over someone's head, a lightbulb went off and the term was introduced into the computer world. Don't bet any money on this story, but we have it on good authority from a friend of a friend that this is true. So what is normalization used for? Normalization is a design technique that enables database designers and programmers to develop table structures that minimize programming problems. As we discuss normalization, we'll show you each set of problems that a particular kind of normalization helps negate. The process of normalization is characterized by levels of adherence called normal form. If a table design conforms only to the lowest level of normalization, it is said to be in First Normal Form, which is abbreviated as 1NF. If a table design conforms to the next higher level, it is in Second Normal Form (2NF), and so on. It is uncommon and probably never necessary to take a table design beyond Third Normal Form (3NF). In fact, in some cases, it is actually advantageous in terms of performance to denormalize a table from a higher normal form back to a lower normal form, like from 3NF to 2NF. It is also important to remember that normalizing tables is not full database design; rather, it is an important step in the analysis process. (The database design process is described briefly in Chapter 2, Database Analysis and Design.) Assume that we are keeping a database that contains information about all of our circus animals. (What do you mean you don't own any circus animals? Well, some of you have kids, so that's pretty close.)

Unnormalized.

4 of 23

9/13/2011 11:48 AM

Transact-SQL Programming -- Sample chapter

http://oreilly.com/catalog/wintrnssql/chapter/ch01.html

The data, when completely unnormalized, looks like this:

Circus_Info Table, Unnormalized 1. animal_nbr (the primary key) 2. animal_name 3. tent_nbr 4. tent_name 5. tent_location 6. trick_nbr1 7. trick_name1 8. trick_learned_at1 9. trick_skill_level1 10. trick_nbr2...(and on through 16) 11. trick_name2...(and on through 16) 12. trick_learned_at2...(and on through 16) 13. trick_skill_level2...(and on through 16)

In this example, the animal_nbr column (in boldface) is our primary key, or the value that uniquely identifies each row. This is an unnormalized structure. But why go to the trouble of normalizing it? Well, otherwise, there would be a number of logical anomalies to deal with. In fact, there would be logic faults whenever you attempted insert, delete, or update operations on this table. (Similar problems are resolved each time you apply a higher level of normalization.) The reason there are so many problems with this table design is that much of the data is redundant and does not wholly depend on the key of the table (animal_nbr). These are the main problems: Insert problem Suppose that a chimpanzee has been acquired and has to be added to the table. It would be impossible to add the new chimp without also adding a trick. Conversely, a new trick couldn't be added to the database without also assigning the trick to a circus animal, which might not reflect the actual business rule. Delete problem What happens if a trick is deleted from the table? Unfortunately, important data about a circus animal would also be deleted. The situation also falters when an animal is deleted. This erases information about a trick that may still be used by the circus. Update problem Updates in the unnormalized table could cause actions on multiple records. Great care must be taken to properly identify the appropriate record when making a change.

First Normal Form (1NF)

To put this in First Normal Form (1NF), we must eliminate repeating groups. That means that any subgroups of data that appear within the record should be split into separate tables. In this case, we have at least two major repeating groups to split. Thus, our unnormalized structure becomes two tables in 1NF:

Circus_Animals Table in 1NF

5 of 23

9/13/2011 11:48 AM

Transact-SQL Programming -- Sample chapter

http://oreilly.com/catalog/wintrnssql/chapter/ch01.html

1. 2. 3. 4. 5.

animal_nbr (the primary key) animal_name tent_nbr tent_name tent_location

Tricks Table in 1NF 1. animal_nbr (the concatenated key) 2. trick_nbr (the concatenated key) 3. trick_name 4. trick_learned_at 5. trick_skill_level

Now that our tables are in 1NF, we have the obvious advantage of reduced space consumption. Plus, an animal that only knows a few tricks doesn't use up the space allotted for 16 tricks in the unnormalized table. On the flip side, the animal can also know more than 16 tricks. Also note that in the Tricks table, the key had to be expanded to include both animal_nbr and trick_nbr. Concatenated keys are often the byproduct of the normalization process.

Second Normal Form (2NF)

Let's take our 1NF circus tables to Second Normal Form (2NF) by eliminating partial dependencies. Say what? A partial dependency is a fancy word for data that doesn't depend on the primary key of the table to uniquely identify it. For example, the trick name appears in every animal record, but the trick_nbr should be sufficient since we've already got the name in the Tricks table. So this 1NF table:

Tricks Table 1. animal_nbr 2. trick_nbr 3. trick_name 4. trick_learned_at 5. trick_skill_level

becomes these 2NF tables:

Tricks Table 1. trick_nbr 2. trick_nam Animal_Tricks Table 1. animal_nbr 2. trick_nbr 3. trick_learned_at 4. trick_skill_level

6 of 23

9/13/2011 11:48 AM

Transact-SQL Programming -- Sample chapter

http://oreilly.com/catalog/wintrnssql/chapter/ch01.html

Animal Table in 2NF 1. animal_nbr 2. animal_name 3. tent_nbr 4. tent_name 5. tent_location

Unfortunately 2NF, like 1NF, has its own logical faults when attempting data manipulation: Insert problem Assume that we want to create a new record to represent a tiger assigned to the "Big Cats" tent. It's impossible to create an additional tiger record for the Animal table without first creating a new tent. This is due to the fact the tent_nbr column has a transitive dependency to the animal_nbr column, which more uniquely identifies its information than does the tent_nbr column. Delete problem Deleting a particular animal might result in the loss of an entire tent, even though you still want to retain information about that tent. For example, deleting the sole ostrich in the circus completely removes all traces of the "Big Birds" tent, since you only have one of these large birds. Update problem Tent information is redundant in the Animal table, so any change to tent information require a search of the entire table to locate the records needing alteration. Plus, the number of the records requiring the update may vary over time. An additional step of normalization is needed to eliminate these logic faults. This added step converts a 2NF table into a 3NF table.

Third Normal Form (3NF)

To take this motley crew of tables to Third Normal Form (3NF), we must now take 2NF tables and eliminate all transitive (i.e., hidden) dependencies. In other words, every column that isn't a part of the key must depend on the key for its informational value. Non-key columns should have no informational value if they are separated from the key of a 3NF table. Remember that the term "eliminate" doesn't mean "delete." Instead, it means to split into separate tables. Our tables in 3NF would now look like this:

Tricks Table (unchanged, it was already 3NF!) 1. trick_nbr 2. trick_name Animal_Tricks Table (unchanged, it was already 3NF!) 1. animal_nbr 2. trick_nbr 3. trick_learned_at 4. trick_skill_level

7 of 23

9/13/2011 11:48 AM

Transact-SQL Programming -- Sample chapter

http://oreilly.com/catalog/wintrnssql/chapter/ch01.html

The Animal table becomes two new 3NF tables:

Animal_Lodging Table in 3NF 1. animal_nbr 2. animal_name 3. tent_nbr Tents Table in 3NF 1. tent_nbr 2. tent_name 3. tent_location

2NF and 3NF are all about the relationship between non-key and key fields. A 3NF table should provide a single-value fact about the key and should use the whole key (if concatenated), and nothing but the key, to derive its uniqueness. By the time you get to 2NF, the columns all depend on the primary key for their identity. However, the columns may have such a distinction from other columns in the table that they should be split out into a separate table. In the Animal_Lodging table, the tent_nbr still appears as a non-key column in the table, even though it is the primary key of the Tents table. Thus, tent_nbr is a foreign key within the animal_Lodging table. Using tent_nbr as a foreign key, we are able to join the data together from the two separate tables to form a single data set. Remember, normalizing data means eliminating redundant information from a table and organizing the data so that future changes to the table are easier. A table is in First Normal Form (1NF) when each field contains the smallest meaningful data and the table contains no repeating fields. Second Normal Form (2NF) refers only to tables with a multiple-field primary key. Each non-key field should relate to all of the fields making up the primary key. Third Normal Form (3NF) refers only to tables with a single-key field and requires that each non-key field be a direct description of the primary key field. NOTE: The steps described here are merely guidelines that help you design a set of stable table structures. Beyond 3NF? There are additional steps in normalization used to solve other specific logic faults in table design; however, they are seldom practical in the use of nonacademic applications. Read on for a quick glimpse of normal forms beyond 3NF: Boyce-Codd Normal Form (BCNF) Ensures that absolutely no hidden dependencies exist by splitting off tables if determinate columns exist in a 3NF table. A determinate is a column on which some other column is fully functionally dependent. This would be akin to splitting the store_dept table into two tables: one called store_dept, which contains only store_nbr and dept_id, and the other called dept_name, which contains only dept_id and dept_name.

8 of 23

9/13/2011 11:48 AM

Transact-SQL Programming -- Sample chapter

http://oreilly.com/catalog/wintrnssql/chapter/ch01.html

Fourth Normal Form (4NF) Compensates for multivalued dependencies in a three-column table (of A, B, and C), where columns A and B have a well-defined relationship, columns A and C have a well-defined relationship, but columns B and C are completely independent. Fifth Normal Form (5NF) Almost a purely academic exercise, SNF reduces offset joins and projection dependencies that cause spurious results, hence called Join-Projection Normal Form. Domain-Key Normal Form (DK/NF) Introduced by Donald Fagin back in 1981, it solves most insertion and deletion logic faults and provides generic definitions of deriving normal forms. However, no methodology or standardized algorithm exists for deriving DK/NF.

Denormalization

You've just read all about how and why to normalize tables. Designing table structures to 3NF reduces data redundancy and provides clean, logical table structures. But now we're going to tell you all the reasons to denormalize tables. Odd as this might sound, denormalization is not diametrically opposed to normalization (although some purists might testify otherwise). The main benefit of denormalization is improved performance, although simplified data retrieval and manipulation are sometimes lesser benefits. Both of these benefits are rendered through the reduction in the number of joins needed for the proper functionality of your application. Joins (described in Chapter 3, SQL Primer ) merge the data of two related tables into a single result set, presenting a denormalized view of the data. Joins, however, are rather time consuming and utilize a lot of CPU cycles. So, to reduce the expense of joins, many developers preempt what would otherwise be a very common join with a denormalized table. The table is now exposed to the vulnerabilities inherent in its lower normal form, but considerable time is saved because the application no longer has to join multiple tables together in a temporary workspace. Denormalization can also save processing time that might have been spent on the creation of summary reports. The first thing you need to remember about denormalization is that you must thoroughly understand the needs and behavior of the data. There are some other tips to keep in mind when considering denormalizing tables: Denormalization means that more storage space will be needed for the redundant data. Redundant data usually speeds up queries but can slow down updates since multiple instances of the data item must be altered. Denormalized tables can introduce logic faults that must be compensated for in the application code. Maintaining integrity can be more difficult with redundant data. Denormalization yields the best improvements on tables that are frequently queried, such as validation tables or reporting tables. Tables in which records are frequently inserted, updated, and deleted are less viable candidates for denormalization. Denormalization is generally regarded as a tuning method of last resort by the purists. On the other hand, many database administrators feel that it is perfectly natural to denormalize a frequently-used eight-way join to create a simpler three-way join, for example.

9 of 23

9/13/2011 11:48 AM

Transact-SQL Programming -- Sample chapter

http://oreilly.com/catalog/wintrnssql/chapter/ch01.html

Denormalization is usually accomplished by adding columns and foreign keys from other tables into the denormalized table or by adding aggregate columns to the denormalized table. If every query issued against a given table commonly has to join to columns from one or more other tables, you're looking at a candidate for denormalization.

Row Processing Versus Set Processing

One of the first things an experienced third-generation language (3GL) programmer must do when learning Transact-SQL (or any SQL variant, for that matter) is toss all her programming techniques and tricks out the window. Yes, you must unlearn much of what made you a star 3GL programmer to become a star database programmer. Procedural 3GLs, like FORTRAN, COBOL, or BASIC, perform their data operations in a manner that is quite contrary to effective Transact-SQL programming. That is because the basis of 3GL data manipulation requires the programmer to actually tell the program exactly how to treat the data, one record at a time. Since the 3GL program cycles down through a list of records, performing its logic on one record after the other, this style of programming is frequently called row-processing or declarative programming. Using this style of programming in Transact-SQL programs does to SQL Server what a parade does to traffic--slows things down. On the other side of the equation, programs written for SQL-based RDBMSs prefer to operate in logical sets of data. Unlike the row-processing style, you tell SQL Server only what you want for the data, not how each individual piece of data should be handled. Sometimes you will see set processing referred to as procedural processing, since you code only what you want, as in "Give me all employees in the southern region who earn more than $70,000 per year." To use an analogy, assume that you want to buy a long list of automobile parts for the 1970 Oldsmobile 442 convertible you're restoring (well, that's the car I'd want to restore). You go to the first parts store on your list. There you have to pull out a shopping cart and wheel it up and down every single aisle searching for the parts you want. Furthermore, when you find a part you need, you have to take it out of the shipping crate and, later, you have to barscan it and bag it yourself. That is what row processing is like. To compare set processing in the analogy, you take the same list to another store, where you hand it to a clerk at a receiving window. The clerk disappears behind the window, presumably to fetch your parts. Why's it taking so long? Is he having a talk at the water cooler or getting your parts? You really can't tell, because you can't see past the partition, but soon enough he returns with all your parts, packaged up and ready to go. It seems that the clerk has been gone a long time. But, in reality, the clerk got your list ready much faster than you would have at the first store, because he was able to skip all the aisles that didn't have the parts you wanted. It's very similar with set processing.

The History of SQL Server

SQL Server, like many things related to SQL, is a story of diversity. At one time, both Sybase and Microsoft had virtually the same product. Today, the two products are growing increasingly divergent. In fact, Sybase now calls their implementation of the product Sybase Adaptive Server Enterprise. For purposes of simplicity, in this book we refer to both Microsoft and Sybase implementations as SQL Server.

10 of 23

9/13/2011 11:48 AM

Transact-SQL Programming -- Sample chapter

http://oreilly.com/catalog/wintrnssql/chapter/ch01.html

Today, major differences in the two implementations are largely a result of their most popular operating system and hardware platforms. Sybase Adaptive Server Enterprise is deployable on many operating systems, including Windows NT and stalwart Unix platforms. Adaptive Server has many features that leverage Very Large Database (VLDB) and multithreading capabilities. Sybase carries the Transact-SQL language uniformly throughout their product line. So, you're just as likely to see Transact-SQL programs on the powerful and mobile Sybase SQL Adaptive Server Anywhere (formerly known as SQL Anywhere) running on Windows 95 and Windows 98 computers. Going to the high end of the spectrum for online analytical processing (OLAP) and data warehouses, Sybase Adaptive Server IQ utilizes Transact-SQL for programmatic needs and, in fact, utilizes the same parser as Sybase Adaptive Server. Conversely, Microsoft has focused on ease of use and administration, as well as tightly integrating the product with other no-cost features like replication and alert forwarding. In any event, all of these database platforms provide their respective user groups with efficient and effective database management tools. Today, there's a good deal of discussion about encapsulating Java as the primary SQL programming extension for not only Sybase and Microsoft but also for competitors like Oracle. Even with this alternative looming in the distance, Transact-SQL will enjoy many years of active support and augmentation by the vendors. Even if you know or are learning Java, you'll still have to know Transact-SQL to excel on either Sybase or Microsoft database platforms. Heck, the backward-compatibility issues alone are enough to ensure that Transact-SQL will have many, many more years of coding ahead. But that's the present and future. Maybe you want to know about how things got to be the way they are? By reading this section, you are actually making an admission that many programmers and analysts are loath to: history is interesting or perhaps even enjoyable. This is not actually a sign of weakness as some might attest. With tongue planted firmly in cheek, please read on. Table 1-1 shows the evolution of both Microsoft and Sybase's versions of SQL Server

11 of 23

9/13/2011 11:48 AM

Transact-SQL Programming -- Sample chapter

http://oreilly.com/catalog/wintrnssql/chapter/ch01.html

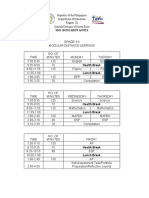

Table 1-1: The Evolution of SQL Server Time Event Microsoft and Sybase announce a technology and marketing partnership. Microsoft gets exclusive rights to market Sybase's 1987 DataServer product on OS/2 and all Microsoft-developed operating systems. Sybase gets royalties and added credibility in the Unix and VMS markets. Sybase ships its first commercial DBMS product, called DataServer, for Sun workstations running Unix. Microsoft and Ashton-Tate announce a marketing partnership. Microsoft can't put a dent in dBase's tremendous market 1988 presence. Ashton-Tate wants access to Sybase's powerful multiuser database management technology, which ships this year. Microsoft forms a three-way alliance. 1989 Ashton-Tate/Microsoft SQL Server Version 1.0 ships. Ashton-Tate dBase IV was floundering. Microsoft wanted to beef up its offerings for the new OS/2 LAN Manager product. 1990 Microsoft and Ashton-Tate quit the partnership. "Microsoft SQL Server" Version 1.1 ships by summer with support for Windows 3.0. A proliferation of Windows 3.0 front-end tools spurs the growth of Microsoft SQL Server. Later that year Microsoft and Sybase amend their contract to allow Microsoft to make actual bug fixes--all under Sybase supervision. IBM and Microsoft call off 1991 their OS/2 partnership with Microsoft to focus on Windows. Sybase surpasses $100 million in revenue and goes public on NASDAQ. Microsoft and Sybase SQL Server 4.2 ships. Microsoft diverts its attention away from OS/2 and into Windows NT. Microsoft 1992 SQL Server for Windows NT later ships its beta release while Sybase ships its much-vaunted System 10. Microsoft Windows NT 3.1 ships, closely followed by Microsoft SQL Server. By 1994, Sybase SQL Server System 10 and 1993 Microsoft SQL Server were competing unabashedly as the two formally ended their partnership. Microsoft SQL Server 6.0, a very significant release, ships in June. Sybase ships the highly lauded, technically potent Sybase 1995 SQL Server 11. Sybase and PowerSoft merge to form the seventh largest independent software house. 1996 Microsoft SQL Server 6.5 goes into production. Sybase Adaptive Server Enterprise 11.5 production ships. 1997 Microsoft SQL Server 7.0 beta ships. Sybase ships the production version of Adaptive Server Enterprise 11.5. Sybase Adaptive Server Anywhere 6.0 ships in the summer, while the Adaptive Server Enterprise 11.9.2 production release 1998 goes out the door in September. Microsoft SQL Server 7.0 ships release to manufacturing copy late in the year.

12 of 23

9/13/2011 11:48 AM

Transact-SQL Programming -- Sample chapter

http://oreilly.com/catalog/wintrnssql/chapter/ch01.html

What Is Transact-SQL?

The name Transact-SQL isn't exactly self-explanatory, but it does imply the idea of "transactional" extensions to the SQL database programming language. Transact-SQL isn't a standalone product. You can't use it to write applications in the same way you could with C++ or Java. Instead, Transact-SQL is the main enabler of programmatic functionality within the relational databases provided by Microsoft and Sybase. Transact-SQL is very closely integrated with SQL while adding programming capabilities not already standardized within the SQL database programming language. At the same time Transact-SQL extends SQL, it also integrates seamlessly with it. In the following sections, we'll show you some of the capabilities of Transact-SQL, along with some rudimentary examples. Don't worry if the examples don't immediately make sense to you. After a little study, you'll see that these are relatively simple sets of code. Here's an example of a stored procedure in which Transact-SQL statements and SQL statements are combined to provide useful functionality. The following little program allows you to selectively delete old information from the sales table of the pubs database (we'll explain this database in Chapter 2) based on the order_id. The business rule being applied here is "Don't allow a delete from the sales table unless the data is more than two years old." Sounds like a simple proposition, doesn't it? But it's not all that simple. Implementing a sensible business rule like that in a Microsoft or Sybase database requires the combination of both SQL and Transact-SQL statements:

CREATE PROCEDURE purge_sales @order_id VARCHAR(20) = NULL AS IF @order_id IS NULL RAISERROR 50001 "Error! Please supply transaction order number." ELSE DELETE temp_sales -- The datediff function tells us if the order date in the ord_date value -- is more than 2 years older than the current date stored in getdate(). WHERE ord_num = @order_id AND DATEDIFF(year, ord_date, GETDATE()) > 2 GO

Our simple task was to allow a user to purge records more than two years old from the sales table based on the ord_id stored in the table. To accomplish this task, we had to utilize the Transact-SQL components of an IF . . . ELSE construct, variables, the DATEDIFF and GETDATE functions, and finally a RAISERROR statement for error handling. To finish things off, we added a bit of commentary (as all good programmers should) using Transact-SQL extensions to explain what the heck we're doing. At the same time, we had to combine those commands with a basic SQL DELETE statement. This synergy of SQL and Transact-SQL enables you to implement powerful applications and information systems.

13 of 23

9/13/2011 11:48 AM

Transact-SQL Programming -- Sample chapter

http://oreilly.com/catalog/wintrnssql/chapter/ch01.html

Programming Concepts

When you write a program in a third-generation language (3GL) and even most other fourth-generation languages (4GLs), you're looking at a whole other animal. While programs in third-generation and fourth-generation languages can be entirely self-contained, Transact-SQL programs in a sense are merely extensions of the database environment. To explain more fully, programming in Transact-SQL is a blend of several distinct, yet integrated, components. Let's say that you're about to begin developing a new application. You'll probably start out with a period of design and analysis in which you'll map out what the database will (probably) look like. You'll build your development database using declarative SQL statements or perhaps a visual data- modeling tool. You might later implement a set of complex business rules on your database tables through triggers coded in Transact-SQL. From there, you might create reports and business processing modules that support the user interface through stored procedures coded in Transact-SQL. Meanwhile, another group of programmers may have begun building the graphic user interface via a client/server or web application development tool. They might utilize a technology that connects their Java-based application to your database backend. When the frontend graphical user interface performs certain transactions, they may actually call the stored procedures you've coded and stored on the server, or their application may initiate transactions that fire triggers on some of the database tables. This hodgepodge of technologies and programming environments may seem daunting at first. For some developers who have grown up in the structured world of third-generation languages like FORTRAN or COBOL, the comfortable and proven methodologies are no longer 100% effective. At the other end of the spectrum, novice developers may drift about without any structure, cranking out report after report and screen after screen without giving a second thought to documentation, maintainability, or code reusability. The truth is that no matter what background you come from, you must adjust to the different types and techniques of coding you'll face when developing a new application. For what this blend of technologies introduces in terms of challenge, it adds even more in terms of flexibility and power. The kernel features of Transact-SQL are described in the following sections.

A Wide Variety of Datatypes for Variables and Constants

Transact-SQL lets you declare and then utilize local variables and constants within a Transact-SQL object. These variables and constants must be of a datatype known to the database, like VARCHAR or INT. Special-purpose datatypes exist within Transact-SQL to provide a special functionality. For example, the IDENTITY datatype is used to store an automatically increasing numeric counter within the column of a given table. TIMESTAMP is another special-purpose Transact-SQL datatype that is used to stamp each record of a given table with a unique marker. Additionally, you can construct your own special-purpose user-defined datatypes on top of an existing system datatype. These user-defined datatypes enable you to constrain the use of, or data stored within, a variable or constant of your special datatype.

14 of 23

9/13/2011 11:48 AM

Transact-SQL Programming -- Sample chapter

http://oreilly.com/catalog/wintrnssql/chapter/ch01.html

Present in Sybase Adaptive Server and new to Microsoft SQL Server Version 7.0 are several new Unicode datatypes, including NCHAR, NVARCHAR, and NTEXT. These new datatypes allow data storage that is independent of the local language character set. Other new datatypes found in Microsoft SQL Server 7.0 include the UNIQUEIDENTIFIER, which uniquely distinguishes each row in a table even across multiple servers, and the CURSOR datatype, which facilitates the use of cursors within a Transact-SQL program.

Programmer-Defined Temporary Objects

Transact-SQL provides several useful temporary objects that endure only as long as the current session. These temporary objects include tables and stored procedures. The objects are stored in the tempdb database and can be used for short-term applications that need exist only while a user is connected or a system operation is executing. Temporary tables can have all the trimmings of their more permanent counterparts, including indexes, constraints, and triggers. Temporary tables are very useful as a sort of "holding tank" where data can be massaged and manipulated into a more useful format. In some cases, temporary tables are used to simulate the functionality of an array found in a third-generation language. Here's an example of the definition of a temporary table that also populates the temporary table with data:

SELECT INTO FROM WHERE * #temp_titles titles NOT EXISTS (SELECT * FROM sales WHERE title_ID = titles.title_ID)

Using the speedy SELECT . . . INTO syntax enables you to quickly create and populate a temporary (or permanent) table with data. There are good and bad times to use the SELECT . . . INTO statement. Temporary tables are designated by a single pound sign (#) as a prefix. They are usable only by the user or process that created them. Global temporary tables are designated by double pound signs (##). Global temporary tables are available to all users or processes as long as the creating user or process remains connected to the database server.

Specialized Functions and Global Variables

Unlike local variables, which are declared by the programmer in the body of a Transact-SQL program, global variables and functions are provided by Microsoft and Sybase to augment your programming toolset. Global variables enable you to immediately retrieve a particular piece of system information. For example, the global variable @@connections allows you to see how many connections have been attempted since the last time the server was started. The global variable @@error shows the last error number generated within the current user's session (or a 0 if the last command was successful). Functions, on the other hand, allow you shortcuts to manipulate and manage data. There are a large number of functions to choose from, but they fall into these basic categories:

15 of 23

9/13/2011 11:48 AM

Transact-SQL Programming -- Sample chapter

http://oreilly.com/catalog/wintrnssql/chapter/ch01.html

Aggregate functions Aggregate functions compute a single value result, such as the sum or average value of a given column in a table. Conversion functions There are a few versatile functions within Transact-SQL that allow you to convert data from one datatype to another or to reformat data. Date functions Date functions manipulate DATETIME and SMALLDATETIME values. Mathematical functions These functions provide statistical, trigonometric, logarithmic, and exponential capabilities when manipulating numbers. String functions A number of string functions allow you to analyze and modify CHAR, VARCHAR, and, in some cases, TEXT columns and variables. System (niladic) functions System functions allow you to retrieve information from system tables and parameters. In this usage, they are somewhat like global variables. Some system functions also provide a way to perform logical comparison of data. Text and image functions Manipulation of TEXT and IMAGE data differs from other types, because they have several usage restrictions. Transact-SQL provides several specialized functions to support the use and manipulation of these datatypes.

System and Extended Stored Procedures

In addition to the wide variety of system functions and global variables, Microsoft and Sybase supply system stored procedures and extended stored procedures, which provide ready-made programs and/or powerful extensions to Transact-SQL. Here are some examples of system and extended stored procedures: sp_adduser A system stored procedure that allows you to add a new user to a database sp_help A system stored procedure that shows detailed information about a wide variety of database objects sp_lock A system stored procedure that details the locking by user and system processes within the database server sp_who A system stored procedure that shows what user and system processes are active within the database server xp_cmdshell An extended stored procedure that allows you to execute any operating-system command-line executable You might, for example, use the xp_cmdshell extended stored procedure to ensure that a necessary file exists before performing a data load operation.

16 of 23

9/13/2011 11:48 AM

Transact-SQL Programming -- Sample chapter

http://oreilly.com/catalog/wintrnssql/chapter/ch01.html

Control-of-Flow Operations

Transact-SQL provides procedural extensions to SQL that provide several different control-of-flow structures: Conditional processing with IF . . . ELSE Iterative processing with WHILE Branching control with GOTO Delay control with WAITFOR Conditional processing with the IF . . . ELSE construct allows your Transact-SQL program to make choices. You can nest your IF . . . ELSE conditionals as deep as you'd like. The IF . . . ELSE construct requires the use of a BEGIN . . . END block if more than one SQL or Transact-SQL command is dependent upon the condition. The result of this coding constraint is a structured, block orientation. Here's an example of an IF . . . ELSE statement:

-- Simple Boolean comparison with ELSE clause. IF (suser_name() = 'sa') PRINT 'Congratulations. You are SA on this system.' ELSE PRINT 'You are not the SA on this system.'

The Transact-SQL WHILE extension provides iterative controls that allow a single block of Transact-SQL code to be repeated. You can even nest loops within other loops. In the simple example that follows, an output line is constructed and printed 12 times, once for each month. This example shows what a WHILE loop looks like:

DECLARE @month INT, @full_date VARCHAR(30), @line VARCHAR(255) SELECT @month=0 WHILE @month <= 12 BEGIN -- increment a variable for the month SELECT @month = @month + 1 -- build a variable for the full date SELECT @full_date = RTRIM(CONVERT(CHAR(2), @month)) + '/01/99' -- build the output line SELECT @line = 'Processing for date: ' + @full_date -- print the output line PRINT @line END

You have some other useful control-of-flow statements in the GOTO command and the WAITFOR command. GOTO transfers control

17 of 23

9/13/2011 11:48 AM

Transact-SQL Programming -- Sample chapter

http://oreilly.com/catalog/wintrnssql/chapter/ch01.html

from one executable statement to another labeled section in the current Transact-SQL program. The WAITFOR command provides Transact-SQL programs with delay controls. Here's a Transact-SQL program that illustrates both commands:

-- The line with the colon at the end is a GOTO label. start_of_routine: -- Let's check today's date. IF GETDATE() > 'Jan 1 1999 12:00 AM' BEGIN PRINT 'Ending' GOTO end_of_routine END ELSE BEGIN PRINT 'Waiting' -- The WAITFOR DELAY command will make the program wait 1 hour. WAITFOR DELAY '01:00:00' GOTO start_of_routine END end_of_routine:

Row-Based Operations Using Cursors

As we discussed earlier, one of the most potent features of relational database management systems is their ability to process data in sets of records rather than on a row-by-row basis. Set-based processing is very fast, but it is limiting in that you cannot selectively modify a single record within a given result set. Transact-SQL has extended this set-based functionality to row-based operations using cursors. Cursors allow you a high degree of control over the manipulation of data within your database. In a sense, a cursor is a SELECT statement that steps through its result set one record at a time. To properly use a cursor, you must declare, open, fetch from, and close a cursor. In many cases, you also should deallocate the cursor. Interestingly, you can use a cursor to scroll forward or backward through a result set. You also can set a cursor to step through a table one record at a time or to skip along several records per step. The following example shows you all the steps needed to properly utilize a cursor:

-- declare the cursor DECLARE titles_cursor CURSOR FOR SELECT title_id FROM titles -- declare the variables that will hold values retrieved by the cursor

18 of 23

9/13/2011 11:48 AM

Transact-SQL Programming -- Sample chapter

http://oreilly.com/catalog/wintrnssql/chapter/ch01.html

DECLARE @title_id CHAR(6) -- fetch the next set of values retrieved by the cursor FETCH titles_cursor INTO @title_id SELECT @title AS 'Name of book' -- close and deallocate the cursor CLOSE titles_cursor DEALLOCATE CURSOR titles_cursor

Error Handling

Transact-SQL allows you to detect the occurrence of errors in the course of executing a program. Errors in Transact-SQL fall into three categories: informational, warning, and fatal. Informational errors output a message but do not cause the program to abort. Warning messages output an error message and abort the currently executing SQL or Transact-SQL statement but do not abort the entire program or Transact-SQL batch. Fatal errors are baaaad. They send an error message and a notification to the operating system error log. Furthermore, fatal errors terminate the Transact-SQL program where the error occurred. Transact-SQL uses a linear code model for error handling. So, if you don't check for a particular level or type of error, Transact-SQL will not provide any special response to it. For that reason, it is very important for you to properly check and evaluate error conditions throughout the execution of your Transact-SQL programs. The following Transact-SQL block checks to see if certain data exists within the database. If the data doesn't exist, then an ad hoc error message is raised:

IF EXISTS (SELECT * FROM authors WHERE au_fname = 'Elmer' AND au_lname 'Fudd') PRINT "Happy Hunting, it's duck season!" ELSE RAISERROR('Warning! Duck hunting widout a wicense!',16,1)

Both Microsoft and Sybase also provide you with the ability to add your own user-defined error messages into the system error message table, sysmessages. This capability allows you to define a set of error messages specifically for your application and embed them in the database, rather than solely within the Transact-SQL program logic.

Transact-SQL Objects and Transaction Control

One of the key features of Microsoft SQL Server and Sybase Adaptive Server is the ability to store Transact-SQL objects (triggers,

19 of 23

9/13/2011 11:48 AM

Transact-SQL Programming -- Sample chapter

http://oreilly.com/catalog/wintrnssql/chapter/ch01.html

views, and stored procedures) within the database itself. These blocks of Transact-SQL code can then be invoked by any user or system session that has adequate privileges to perform the task. In fact, both Sybase and Microsoft support the use of remote procedure calls (RPCs), allowing a single local server to invoke a stored procedure on a remote server. Through Transact-SQL objects, the database becomes a repository for both application code and data. Transact-SQL objects are stored in their own memory area, the procedure cache, within the database's memory space. At a more elemental level, transactions can be closely controlled and monitored in a Transact-SQL program using the global variables @@transtate and @@trancount. A transaction is a SQL or Transact-SQL statement that produces a measurable unit of work by retrieving, inserting, deleting, or modifying data. You can allow Transact-SQL to use implicit transactions, or you can explicitly define a transaction using the BEGIN TRAN, COMMIT TRAN, and ROLLBACK TRAN statements. By properly gauging and constructing your transactions, you can greatly reduce or even eliminate the possibility of blocks in an application supporting multiple concurrent connections.

Tracing and Debugging

A wide variety of tracing and debugging features are supplied by Transact-SQL using the DBCC and SET commands. In addition to providing tracing and debugging capabilities, these commands allow you to control server behavior, monitor performance, and alter the session environment and behavior. The DBCC command offers functions that fall into several general categories: Database consistency checks Commands like DBCC NEWALLOC and DBCC UPDATEUSAGE check a given database for inconsistencies in the data and, in some cases, take remedial action. Database and server configuration In dire straits, you can use DBCC command to drop or shrink databases. Debugging Common debugging applications for the DBCC command include controlling tracing functions and examining the input and output buffer of various system and user sessions on the database server. Performance tuning and monitoring A wide variety of DBCC commands allow you to monitor the behavior and performance of the server. Other commands enable you to pin tables into memory, rebuild indexes, reestablish fillfactors, or display the selectivity and value of a given index. Conversely, the SET command enables you to mandate specific behaviors for a given session or user connection. You can use the SET command to enable or disable certain ANSI standards, determine deadlock resolution preference, and perform a wide range of other functionalities.

Special Aggregate Operators

20 of 23

9/13/2011 11:48 AM

Transact-SQL Programming -- Sample chapter

http://oreilly.com/catalog/wintrnssql/chapter/ch01.html

Transact-SQL, under Microsoft SQL Server, has grown to support new aggregate operators, such as ROLLUP and CUBE, that are specifically optimized for very large databases, such as those found in data marts and data warehouses. CUBE and ROLLUP are optional operators of the GROUP BY clause of a SELECT statement. A SELECT query using GROUP BY WITH CUBE returns all rows that it would return without the CUBE switch. In addition, it produces summary rows for every possible combination of GROUP BY keys. A query using WITH ROLLUP returns just a subset of the rows produced by WITH CUBE. ROLLUP is useful in computing running sums and running averages. Unlike CUBE, which computes aggregates for any possible combination of keys of GROUP BY, ROLLUP is sensitive to the order of items in the GROUP BY. Here's an example of a SELECT statement and result set with a SUM aggregation and a simple GROUP BY clause:

SELECT type, pub_id, SUM(ytd_sales) FROM titles GROUP BY type, pub_id

The query returns this result set:

type -----------business business mod_cook popular_comp psychology psychology trad_cook UNDECIDED pub_id -----0736 1389 0877 1389 0736 0877 0877 0877 Sum ----------18722 12066 24278 12875 9564 375 19566 (null)

Yet if we add one simple CUBE operator, we get this:

SELECT type, pub_id, SUM(ytd_sales) FROM titles GROUP BY type, pub_id WITH CUBE

The result set now contains extra rows with the type of NULL per pub_id and a grand total of all titles sold:

type -----------business business business mod_cook mod_cook popular_comp pub_id -----0736 1389 (null) 0877 (null) 1389 Sum ----------18722 12066 30788 24278 24278 12875

21 of 23

9/13/2011 11:48 AM

Transact-SQL Programming -- Sample chapter

http://oreilly.com/catalog/wintrnssql/chapter/ch01.html

popular_comp psychology psychology psychology trad_cook trad_cook UNDECIDED UNDECIDED (null) (null) (null) (null)

(null) 0736 0877 (null) 0877 (null) 0877 (null) (null) 0736 0877 1389

12875 9564 375 9939 19566 19566 (null) (null) 97446 28286 44219 24941

Finally, if we switch to the ROLLUP functions (CUBE and ROLLUP are mutually exclusive), we get this:

SELECT type, pub_id, SUM(ytd_sales) FROM titles GROUP BY type, pub_id WITH ROLLUP

returning this result set:

type -----------business business business mod_cook mod_cook popular_comp popular_comp psychology psychology psychology trad_cook trad_cook UNDECIDED UNDECIDED (null) pub_id -----0736 1389 (null) 0877 (null) 1389 (null) 0736 0877 (null) 0877 (null) 0877 (null) (null) Sum ----------18722 12066 30788 24278 24278 12875 12875 9564 375 9939 19566 19566 (null) (null) 97446

All of these features, and many more, are described in the following chapters of this book. Enjoy!

Summary

This chapter examined mostly background information about Transact-SQL. It described relational databases in general and looked

22 of 23

9/13/2011 11:48 AM

Transact-SQL Programming -- Sample chapter

http://oreilly.com/catalog/wintrnssql/chapter/ch01.html

specifically at how Transact-SQL got to be the way it is today. Specific points to remember: Transact-SQL in light of the ANSI standard for SQL--how they are similar and how they differ. The relational database model, how it got started, and why it took root and prospered. Benefits and techniques for normalization and the uses for denormalization. Important concepts about the differences in row and set (a.k.a., declarative versus procedural) processing; if you remember anything in this chapter, remember the difference in row versus set processing. The history of the SQL Server database engine, including details about both Microsoft and Sybase development efforts with the product. The basic features and capabilities of Transact-SQL.

oreilly.com Home | O'Reilly Bookstores | How to Order | O'Reilly Contacts International | About O'Reilly | Affiliated Companies | Privacy Policy 2001, O'Reilly & Associates, Inc.

23 of 23

9/13/2011 11:48 AM

Vous aimerez peut-être aussi

- The Cryotron Superconductive Computer ComponentDocument32 pagesThe Cryotron Superconductive Computer ComponentmbuyiselwaPas encore d'évaluation

- Solar Drying AustriaDocument110 pagesSolar Drying AustriaSafka sagkasPas encore d'évaluation

- JPMorgan Guide To Credit DerivativesDocument88 pagesJPMorgan Guide To Credit Derivativesl_lidiyaPas encore d'évaluation

- Complex Systems ScienceDocument199 pagesComplex Systems SciencembuyiselwaPas encore d'évaluation

- A Beginner's Guide To Credit Derivatives: Noel Vaillant Debt Market Exotics Nomura International November 17, 2001Document38 pagesA Beginner's Guide To Credit Derivatives: Noel Vaillant Debt Market Exotics Nomura International November 17, 2001anon-550336Pas encore d'évaluation

- Molecular MechanicsDocument43 pagesMolecular MechanicsmbuyiselwaPas encore d'évaluation

- HostBridge SOA Web Services For CA-Ideal White PaperDocument12 pagesHostBridge SOA Web Services For CA-Ideal White PapermbuyiselwaPas encore d'évaluation

- Binomial Option Pricing - f-0943Document12 pagesBinomial Option Pricing - f-0943Ashish AroraPas encore d'évaluation

- KAM TheoryDocument8 pagesKAM TheorymbuyiselwaPas encore d'évaluation

- Chapter 14 The Remote API (RAPI) PDFDocument22 pagesChapter 14 The Remote API (RAPI) PDFmbuyiselwaPas encore d'évaluation

- MQ Development CodeDocument186 pagesMQ Development CodembuyiselwaPas encore d'évaluation

- EngineeringDocument22 pagesEngineeringmbuyiselwaPas encore d'évaluation

- Statistical LearningDocument105 pagesStatistical LearningmbuyiselwaPas encore d'évaluation

- Euler Bernoulli BeamsDocument8 pagesEuler Bernoulli BeamsmbuyiselwaPas encore d'évaluation

- Axiomatic DesignDocument6 pagesAxiomatic DesignmbuyiselwaPas encore d'évaluation

- Breast Cancer PaperDocument23 pagesBreast Cancer Paperavallonia9Pas encore d'évaluation

- Using Java To Access CA-DatacomDocument36 pagesUsing Java To Access CA-DatacommbuyiselwaPas encore d'évaluation

- Coriolus EffectsDocument50 pagesCoriolus EffectsmbuyiselwaPas encore d'évaluation

- Coriolis Force PDFDocument13 pagesCoriolis Force PDFClaudio Perez100% (1)

- CoriolisDocument20 pagesCoriolisJastina NguyenPas encore d'évaluation

- Auto Cad 20042 D TutorialDocument358 pagesAuto Cad 20042 D TutorialRakman EndRo HutaBaratPas encore d'évaluation

- 30.technical Drawing ApplicationsDocument8 pages30.technical Drawing ApplicationsmbuyiselwaPas encore d'évaluation

- Engineering Drawings: The Blank Engineering Drawing FormDocument16 pagesEngineering Drawings: The Blank Engineering Drawing Formadnan100% (1)

- InTech-Thermoplastic Matrix Reinforced With Natural Fibers A Study On Interfacial BehaviorDocument27 pagesInTech-Thermoplastic Matrix Reinforced With Natural Fibers A Study On Interfacial BehaviorJose Manuel Oliveira PereiraPas encore d'évaluation

- 27 - 2003 - Gil G. C. - Operating Parameters Affecting The Performance of A Mediator-Less Microbial Fuel CellDocument8 pages27 - 2003 - Gil G. C. - Operating Parameters Affecting The Performance of A Mediator-Less Microbial Fuel CellmbuyiselwaPas encore d'évaluation

- Thermodynamics, Statmech PDFDocument201 pagesThermodynamics, Statmech PDFyomnaPas encore d'évaluation

- Detailed Engineering Design PhaseDocument8 pagesDetailed Engineering Design Phaserenjithv_494% (18)

- Using The Simplex Method To Solve LinearDocument28 pagesUsing The Simplex Method To Solve LinearmbuyiselwaPas encore d'évaluation

- 1 SensorsDocument18 pages1 SensorsTimothy LeonardPas encore d'évaluation

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeD'EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeÉvaluation : 4 sur 5 étoiles4/5 (5794)

- Shoe Dog: A Memoir by the Creator of NikeD'EverandShoe Dog: A Memoir by the Creator of NikeÉvaluation : 4.5 sur 5 étoiles4.5/5 (537)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceD'EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceÉvaluation : 4 sur 5 étoiles4/5 (895)

- The Yellow House: A Memoir (2019 National Book Award Winner)D'EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Évaluation : 4 sur 5 étoiles4/5 (98)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersD'EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersÉvaluation : 4.5 sur 5 étoiles4.5/5 (344)

- The Little Book of Hygge: Danish Secrets to Happy LivingD'EverandThe Little Book of Hygge: Danish Secrets to Happy LivingÉvaluation : 3.5 sur 5 étoiles3.5/5 (399)

- Grit: The Power of Passion and PerseveranceD'EverandGrit: The Power of Passion and PerseveranceÉvaluation : 4 sur 5 étoiles4/5 (588)

- The Emperor of All Maladies: A Biography of CancerD'EverandThe Emperor of All Maladies: A Biography of CancerÉvaluation : 4.5 sur 5 étoiles4.5/5 (271)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaD'EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaÉvaluation : 4.5 sur 5 étoiles4.5/5 (266)

- Never Split the Difference: Negotiating As If Your Life Depended On ItD'EverandNever Split the Difference: Negotiating As If Your Life Depended On ItÉvaluation : 4.5 sur 5 étoiles4.5/5 (838)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryD'EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryÉvaluation : 3.5 sur 5 étoiles3.5/5 (231)

- On Fire: The (Burning) Case for a Green New DealD'EverandOn Fire: The (Burning) Case for a Green New DealÉvaluation : 4 sur 5 étoiles4/5 (73)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureD'EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureÉvaluation : 4.5 sur 5 étoiles4.5/5 (474)

- Team of Rivals: The Political Genius of Abraham LincolnD'EverandTeam of Rivals: The Political Genius of Abraham LincolnÉvaluation : 4.5 sur 5 étoiles4.5/5 (234)

- The Unwinding: An Inner History of the New AmericaD'EverandThe Unwinding: An Inner History of the New AmericaÉvaluation : 4 sur 5 étoiles4/5 (45)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyD'EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyÉvaluation : 3.5 sur 5 étoiles3.5/5 (2259)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreD'EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreÉvaluation : 4 sur 5 étoiles4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)D'EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Évaluation : 4.5 sur 5 étoiles4.5/5 (121)

- Her Body and Other Parties: StoriesD'EverandHer Body and Other Parties: StoriesÉvaluation : 4 sur 5 étoiles4/5 (821)

- Slang Word Albiola - LaguaDocument31 pagesSlang Word Albiola - LaguaIrish Nicole AlbiolaPas encore d'évaluation

- When Rain Clouds Gather ENG1501Document13 pagesWhen Rain Clouds Gather ENG1501BeNobody2Pas encore d'évaluation

- Grammar CBSE Class 4 WorksheetDocument6 pagesGrammar CBSE Class 4 WorksheetdramiltPas encore d'évaluation

- English FinalDocument30 pagesEnglish FinalKeith KathePas encore d'évaluation

- History Chapter 6Document10 pagesHistory Chapter 6api-485766343Pas encore d'évaluation

- AloeVera - Full GuideDocument20 pagesAloeVera - Full GuideSafi KhanPas encore d'évaluation

- The Crucial Thing About Allophones Is That If You Switch These Sound Around TheDocument2 pagesThe Crucial Thing About Allophones Is That If You Switch These Sound Around TheHazel GeronimoPas encore d'évaluation

- A New Syntax of The Verb in New Testament GreekDocument222 pagesA New Syntax of The Verb in New Testament Greekimaticevic303789% (9)

- MySQL BasicDocument27 pagesMySQL BasicdharmendardPas encore d'évaluation

- Module 35 Integrating Skills in Machine ShorthandDocument8 pagesModule 35 Integrating Skills in Machine ShorthandMargie Mascariola100% (2)

- International Mother Language Day/21 FebruaryDocument13 pagesInternational Mother Language Day/21 FebruaryGolam KibriaPas encore d'évaluation

- Ios Question - CompiledDocument90 pagesIos Question - Compiledilias ahmedPas encore d'évaluation

- Syntactic Development in PsycholinguisticsDocument17 pagesSyntactic Development in PsycholinguisticsMarlina Rina Lukman100% (1)

- Guidance For Employees OromoDocument5 pagesGuidance For Employees OromoJemal San YuyaPas encore d'évaluation

- 10.1 ArrayDocument34 pages10.1 ArrayJuanPas encore d'évaluation

- Trends Test 1 Unidad 8Document2 pagesTrends Test 1 Unidad 8sesnake9983% (6)

- Sdo-Bongabon Annex: Republic of The Philippines Department of Education Region III Schools Division of Nueva EcijaDocument5 pagesSdo-Bongabon Annex: Republic of The Philippines Department of Education Region III Schools Division of Nueva EcijajonalynPas encore d'évaluation

- 5 Quick and Fun Verb Games To PDFDocument6 pages5 Quick and Fun Verb Games To PDFMariaPas encore d'évaluation

- Revision 2Nd Term 1º Bachillerato Unit 4Document9 pagesRevision 2Nd Term 1º Bachillerato Unit 4Juan PedroPas encore d'évaluation

- IMPLANDocument23 pagesIMPLANDada N. NahilPas encore d'évaluation

- Namastakam - Rupa GosvamiDocument3 pagesNamastakam - Rupa GosvamiShannan Em50% (2)

- Wyndham Lewis - Blast 1Document212 pagesWyndham Lewis - Blast 1Bertran2100% (2)

- Complete The Sentences in Past Perfect Simple (Affirmative Form.)Document4 pagesComplete The Sentences in Past Perfect Simple (Affirmative Form.)Kiane NntPas encore d'évaluation

- 4SI0 01 Rms 20170823Document13 pages4SI0 01 Rms 20170823subodya alahakoonPas encore d'évaluation

- AMERICAN INDIAN Ways of Leading and KnowingDocument21 pagesAMERICAN INDIAN Ways of Leading and Knowingtomor2Pas encore d'évaluation

- Derrida - Structure, Signs and PlayDocument17 pagesDerrida - Structure, Signs and PlayMary Mae100% (3)

- 500 Important Spoken Tamil Situations Into Spoken English Sentences - SampleDocument7 pages500 Important Spoken Tamil Situations Into Spoken English Sentences - Sampleksjayakumar89% (546)

- The Billionaire's Obsession (Vaniah)Document117 pagesThe Billionaire's Obsession (Vaniah)Josh Monday Sunday50% (2)

- Caesar Cipher Implementation in C LanguageDocument9 pagesCaesar Cipher Implementation in C LanguageBanGush KanPas encore d'évaluation

- CoherenceDocument3 pagesCoherenceRizkyPas encore d'évaluation