
Stat 5101 Notes: Brand Name Distributions

Charles J. Geyer

December 15, 2012

Contents

1 Discrete Uniform Distribution

2 General Discrete Uniform Distribution

3 Uniform Distribution

4 General Uniform Distribution

5 Bernoulli Distribution

6 Binomial Distribution

7 Hypergeometric Distribution

8 Poisson Distribution

9 Geometric Distribution

10 Negative Binomial Distribution

11 Normal Distribution

12 Exponential Distribution

13 Gamma Distribution

14 Beta Distribution

15 Multinomial Distribution

16 Bivariate Normal Distribution

17 Multivariate Normal Distribution

18 Chi-Square Distribution

19 Student's t Distribution

20 Snedecor's F Distribution

21 Cauchy Distribution

22 Laplace Distribution

1 Discrete Uniform Distribution

Abbreviation DiscUnif(n).

Type Discrete.

Rationale Equally likely outcomes.

Sample Space The interval 1, 2, . . ., n of the integers.

Probability Mass Function

\[ f(x) = \frac{1}{n}, \qquad x = 1, 2, \ldots, n \]

Moments

\begin{align*}
E(X) &= \frac{n + 1}{2} \\
\operatorname{var}(X) &= \frac{n^2 - 1}{12}
\end{align*}

2 General Discrete Uniform Distribution

Type Discrete.

Sample Space Any finite set $S$.

Probability Mass Function

\[ f(x) = \frac{1}{n}, \qquad x \in S, \]

where $n$ is the number of elements of $S$.

3 Uniform Distribution

Abbreviation Unif(a, b).

Type Continuous.

Rationale Continuous analog of the discrete uniform distribution.

Parameters Real numbers a and b with a < b.

Sample Space The interval (a, b) of the real numbers.

Probability Density Function

\[ f(x) = \frac{1}{b - a}, \qquad a < x < b \]

Moments

\begin{align*}
E(X) &= \frac{a + b}{2} \\
\operatorname{var}(X) &= \frac{(b - a)^2}{12}
\end{align*}

Relation to Other Distributions Beta(1, 1) = Unif(0, 1).

4 General Uniform Distribution

Type Continuous.

Sample Space Any open set $S$ in $\mathbb{R}^n$.

Probability Density Function

\[ f(x) = \frac{1}{c}, \qquad x \in S, \]

where $c$ is the measure (length in one dimension, area in two, volume in three, etc.) of the set $S$.

5 Bernoulli Distribution

Abbreviation Ber(p).

Type Discrete.

Rationale Any zero-or-one-valued random variable.

Parameter Real number $0 \le p \le 1$.

Sample Space The two-element set $\{0, 1\}$.

Probability Mass Function

\[ f(x) = \begin{cases} p, & x = 1 \\ 1 - p, & x = 0 \end{cases} \]

Moments

\begin{align*}
E(X) &= p \\
\operatorname{var}(X) &= p(1 - p)
\end{align*}

Addition Rule If $X_1, \ldots, X_k$ are IID $\mathrm{Ber}(p)$ random variables, then $X_1 + \cdots + X_k$ is a $\mathrm{Bin}(k, p)$ random variable.

Degeneracy If p = 0 the distribution is concentrated at 0. If p = 1 the

distribution is concentrated at 1.

Relation to Other Distributions Ber(p) = Bin(1, p).


6 Binomial Distribution

Abbreviation Bin(n, p).

Type Discrete.

Rationale Sum of n IID Bernoulli random variables.

Parameters Real number $0 \le p \le 1$. Integer $n \ge 1$.

Sample Space The interval $0, 1, \ldots, n$ of the integers.

Probability Mass Function

\[ f(x) = \binom{n}{x} p^x (1 - p)^{n - x}, \qquad x = 0, 1, \ldots, n \]

Moments

\begin{align*}
E(X) &= np \\
\operatorname{var}(X) &= np(1 - p)
\end{align*}

Addition Rule If $X_1, \ldots, X_k$ are independent random variables, $X_i$ being $\mathrm{Bin}(n_i, p)$ distributed, then $X_1 + \cdots + X_k$ is a $\mathrm{Bin}(n_1 + \cdots + n_k, p)$ random variable.

Normal Approximation If $np$ and $n(1 - p)$ are both large, then

\[ \mathrm{Bin}(n, p) \approx N\bigl(np, np(1 - p)\bigr) \]

Poisson Approximation If $n$ is large but $np$ is small, then

\[ \mathrm{Bin}(n, p) \approx \mathrm{Poi}(np) \]

Theorem The fact that the probability mass function sums to one is equivalent to the binomial theorem: for any real numbers $a$ and $b$

\[ \sum_{k=0}^{n} \binom{n}{k} a^k b^{n - k} = (a + b)^n. \]

Degeneracy If p = 0 the distribution is concentrated at 0. If p = 1 the

distribution is concentrated at n.

Relation to Other Distributions Ber(p) = Bin(1, p).
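The binomial statements above can be checked numerically. The following Python sketch tabulates the Bin(n, p) mass function, confirms that it sums to one and that its moments equal np and np(1 - p), and compares it with Poi(np) for large n and small np. The function names and the values n = 500, p = 0.004 are illustrative assumptions, not part of the notes.

```python
import math

def binom_pmf(x: int, n: int, p: float) -> float:
    """Probability mass function of Bin(n, p) evaluated at x."""
    return math.comb(n, x) * p**x * (1 - p) ** (n - x)

def poisson_pmf_table(mu: float, upto: int) -> list[float]:
    """Poi(mu) probabilities for x = 0..upto via f(x + 1) = f(x) * mu / (x + 1)."""
    probs = [math.exp(-mu)]
    for x in range(upto):
        probs.append(probs[-1] * mu / (x + 1))
    return probs

n, p = 500, 0.004  # hypothetical example values: n large, np = 2 small
pmf = [binom_pmf(x, n, p) for x in range(n + 1)]

total = sum(pmf)                                       # should be 1
mean = sum(x * f for x, f in enumerate(pmf))           # should be n p
var = sum((x - mean) ** 2 * f for x, f in enumerate(pmf))  # should be n p (1 - p)

# Half the summed absolute difference between the two mass functions on 0..n.
poi = poisson_pmf_table(n * p, n)
tv_distance = 0.5 * sum(abs(b - q) for b, q in zip(pmf, poi))
```

The total variation distance is used here only as a convenient summary of closeness; the notes themselves do not name a particular measure.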

7 Hypergeometric Distribution

Abbreviation Hypergeometric(A, B, n).

Type Discrete.

Rationale Sample of size $n$ without replacement from a finite population of $B$ zeros and $A$ ones.

Sample Space The interval $\max(0, n - B), \ldots, \min(n, A)$ of the integers.

Probability Mass Function

\[ f(x) = \frac{\binom{A}{x} \binom{B}{n - x}}{\binom{A + B}{n}}, \qquad x = \max(0, n - B), \ldots, \min(n, A) \]

Moments

\begin{align*}
E(X) &= np \\
\operatorname{var}(X) &= np(1 - p) \cdot \frac{N - n}{N - 1}
\end{align*}

where

\begin{align*}
p &= \frac{A}{A + B} \tag{7.1} \\
N &= A + B
\end{align*}

Binomial Approximation If $n$ is small compared to either $A$ or $B$, then

\[ \mathrm{Hypergeometric}(A, B, n) \approx \mathrm{Bin}(n, p) \]

where $p$ is given by (7.1).

Normal Approximation If $n$ is large, but small compared to either $A$ or $B$, then

\[ \mathrm{Hypergeometric}(A, B, n) \approx N\bigl(np, np(1 - p)\bigr) \]

where $p$ is given by (7.1).

Theorem The fact that the probability mass function sums to one is equivalent to

\[ \sum_{x = \max(0, n - B)}^{\min(A, n)} \binom{A}{x} \binom{B}{n - x} = \binom{A + B}{n} \]
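The hypergeometric formulas can be verified exactly with rational arithmetic. This Python sketch checks, for one small population, that the mass function sums to one and that the mean and variance agree with the stated moment formulas. The helper name and the values A = 7, B = 5, n = 6 are assumptions made for illustration.

```python
from fractions import Fraction
from math import comb

def hypergeom_pmf(x: int, A: int, B: int, n: int) -> Fraction:
    """Exact mass function: choose x of the A ones and n - x of the B zeros."""
    return Fraction(comb(A, x) * comb(B, n - x), comb(A + B, n))

A, B, n = 7, 5, 6  # hypothetical example values
support = range(max(0, n - B), min(n, A) + 1)

total = sum(hypergeom_pmf(x, A, B, n) for x in support)
mean = sum(x * hypergeom_pmf(x, A, B, n) for x in support)
var = sum((x - mean) ** 2 * hypergeom_pmf(x, A, B, n) for x in support)

N = A + B
p = Fraction(A, N)
expected_var = n * p * (1 - p) * Fraction(N - n, N - 1)
```

Because every quantity is a `Fraction`, the comparisons are exact identities rather than floating-point approximations.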

8 Poisson Distribution

Abbreviation $\mathrm{Poi}(\mu)$

Type Discrete.

Rationale Counts in a Poisson process.

Parameter Real number $\mu > 0$.

Sample Space The non-negative integers $0, 1, \ldots$.

Probability Mass Function

\[ f(x) = \frac{\mu^x}{x!} e^{-\mu}, \qquad x = 0, 1, \ldots \]

Moments

\begin{align*}
E(X) &= \mu \\
\operatorname{var}(X) &= \mu
\end{align*}

Addition Rule If $X_1, \ldots, X_k$ are independent random variables, $X_i$ being $\mathrm{Poi}(\mu_i)$ distributed, then $X_1 + \cdots + X_k$ is a $\mathrm{Poi}(\mu_1 + \cdots + \mu_k)$ random variable.

Normal Approximation If $\mu$ is large, then

\[ \mathrm{Poi}(\mu) \approx N(\mu, \mu) \]

Theorem The fact that the probability mass function sums to one is equivalent to the Maclaurin series for the exponential function: for any real number $x$

\[ \sum_{k=0}^{\infty} \frac{x^k}{k!} = e^x. \]
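The Poisson addition rule can be illustrated by convolving two mass functions directly. The sketch below is a numerical check rather than a proof; the function names and the means 1.3 and 2.1 are arbitrary assumptions.

```python
import math

def poisson_pmf(x: int, mu: float) -> float:
    """Probability mass function of Poi(mu) evaluated at x."""
    return mu**x / math.factorial(x) * math.exp(-mu)

def convolve(f, g, upto: int) -> list[float]:
    """Mass function on 0..upto of the sum of two independent
    nonnegative-integer random variables with mass functions f and g."""
    return [sum(f(j) * g(s - j) for j in range(s + 1)) for s in range(upto + 1)]

mu1, mu2 = 1.3, 2.1  # hypothetical example means
conv = convolve(lambda x: poisson_pmf(x, mu1), lambda x: poisson_pmf(x, mu2), 20)
direct = [poisson_pmf(s, mu1 + mu2) for s in range(21)]

# Largest pointwise gap between the convolution and Poi(mu1 + mu2).
max_gap = max(abs(a - b) for a, b in zip(conv, direct))
```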

9 Geometric Distribution

Abbreviation Geo(p).

Type Discrete.

Rationales

Discrete lifetime of object that does not age.

Waiting time or interarrival time in sequence of IID Bernoulli trials.

Inverse sampling.

Discrete analog of the exponential distribution.

Parameter Real number $0 < p \le 1$.

Sample Space The non-negative integers 0, 1, . . . .

Probability Mass Function

\[ f(x) = p(1 - p)^x, \qquad x = 0, 1, \ldots \]

Moments

\begin{align*}
E(X) &= \frac{1 - p}{p} \\
\operatorname{var}(X) &= \frac{1 - p}{p^2}
\end{align*}

Addition Rule If $X_1, \ldots, X_k$ are IID $\mathrm{Geo}(p)$ random variables, then $X_1 + \cdots + X_k$ is a $\mathrm{NegBin}(k, p)$ random variable.

Theorem The fact that the probability mass function sums to one is equivalent to the geometric series: for any real number $s$ such that $|s| < 1$

\[ \sum_{k=0}^{\infty} s^k = \frac{1}{1 - s}. \]

Degeneracy If p = 1 the distribution is concentrated at 0.
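The addition rule for geometric random variables can be checked by repeated convolution, using the NegBin(r, p) mass function from the Negative Binomial section of these notes. This is a minimal Python sketch; the function names and the values k = 4, p = 0.3 are illustrative assumptions.

```python
from math import comb

def geo_pmf(x: int, p: float) -> float:
    """Mass function of Geo(p) on the non-negative integers."""
    return p * (1 - p) ** x

def negbin_pmf(x: int, r: int, p: float) -> float:
    """Mass function of NegBin(r, p) for integer r."""
    return comb(r + x - 1, x) * p**r * (1 - p) ** x

def sum_of_geometrics_pmf(k: int, p: float, upto: int) -> list[float]:
    """Mass function on 0..upto of X_1 + ... + X_k for IID Geo(p),
    built by convolving with the geometric mass function k - 1 times."""
    pmf = [geo_pmf(x, p) for x in range(upto + 1)]
    for _ in range(k - 1):
        pmf = [sum(pmf[j] * geo_pmf(s - j, p) for j in range(s + 1))
               for s in range(upto + 1)]
    return pmf

k, p, upto = 4, 0.3, 30  # hypothetical example values
conv = sum_of_geometrics_pmf(k, p, upto)
direct = [negbin_pmf(x, k, p) for x in range(upto + 1)]
max_gap = max(abs(a - b) for a, b in zip(conv, direct))
```

Truncating at `upto` loses nothing on the range compared, since the probability that a sum of non-negative integers equals s depends only on summands no larger than s.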

10 Negative Binomial Distribution

Abbreviation NegBin(r, p).

Type Discrete.

Rationale

Sum of IID geometric random variables.

Inverse sampling.

Gamma mixture of Poisson distributions.

Parameters Real number $0 < p \le 1$. Integer $r \ge 1$.

Sample Space The non-negative integers 0, 1, . . . .

Probability Mass Function

\[ f(x) = \binom{r + x - 1}{x} p^r (1 - p)^x, \qquad x = 0, 1, \ldots \]

Moments

\begin{align*}
E(X) &= \frac{r(1 - p)}{p} \\
\operatorname{var}(X) &= \frac{r(1 - p)}{p^2}
\end{align*}

Addition Rule If $X_1, \ldots, X_k$ are independent random variables, $X_i$ being $\mathrm{NegBin}(r_i, p)$ distributed, then $X_1 + \cdots + X_k$ is a $\mathrm{NegBin}(r_1 + \cdots + r_k, p)$ random variable.

Normal Approximation If $r(1 - p)$ is large, then

\[ \mathrm{NegBin}(r, p) \approx N\left( \frac{r(1 - p)}{p}, \frac{r(1 - p)}{p^2} \right) \]

Degeneracy If p = 1 the distribution is concentrated at 0.

Extended Definition The definition makes sense for noninteger $r$ if binomial coefficients are defined by

\[ \binom{r}{k} = \frac{r \cdot (r - 1) \cdots (r - k + 1)}{k!} \]

which for integer $r$ agrees with the standard definition.

Also

\[ \binom{r + x - 1}{x} = (-1)^x \binom{-r}{x} \tag{10.1} \]

which explains the name negative binomial.

Theorem The fact that the probability mass function sums to one is equivalent to the generalized binomial theorem: for any real number $s$ such that $-1 < s < 1$ and any real number $m$

\[ \sum_{k=0}^{\infty} \binom{m}{k} s^k = (1 + s)^m. \tag{10.2} \]

If $m$ is a nonnegative integer, then $\binom{m}{k}$ is zero for $k > m$, and we get the ordinary binomial theorem.

Changing variables from $m$ to $-m$ and from $s$ to $-s$ and using (10.1) turns (10.2) into

\[ \sum_{k=0}^{\infty} \binom{m + k - 1}{k} s^k = \sum_{k=0}^{\infty} \binom{-m}{k} (-s)^k = (1 - s)^{-m} \]

which has a more obvious relationship to the negative binomial density summing to one.
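The extended definition and identity (10.1) lend themselves to an exact check with rational arithmetic. In this Python sketch the names `gen_binom` and `negbin_series_partial_sum`, and the test values r = 5/2 and s = 1/4, are assumptions introduced for illustration.

```python
from fractions import Fraction
from math import factorial

def gen_binom(r, k: int) -> Fraction:
    """Generalized binomial coefficient r (r - 1) ... (r - k + 1) / k!
    for rational r and nonnegative integer k."""
    num = Fraction(1)
    for j in range(k):
        num *= Fraction(r) - j
    return num / factorial(k)

def negbin_series_partial_sum(m, s, terms: int) -> float:
    """Partial sum of sum_k binom(m + k - 1, k) s^k, which should
    approach (1 - s)^(-m) when |s| < 1."""
    total = sum(gen_binom(Fraction(m) + k - 1, k) * Fraction(s) ** k
                for k in range(terms))
    return float(total)

r = Fraction(5, 2)   # hypothetical noninteger value of r
s = Fraction(1, 4)   # hypothetical value with |s| < 1
partial = negbin_series_partial_sum(r, s, 80)
limit = float(1 - s) ** float(-r)
```

For integer arguments `gen_binom` reduces to the ordinary binomial coefficient and returns zero when k exceeds r, which matches the remark about the ordinary binomial theorem.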

11 Normal Distribution

Abbreviation $N(\mu, \sigma^2)$.

Type Continuous.

Rationale

Limiting distribution in the central limit theorem.

Error distribution that turns the method of least squares into maximum likelihood estimation.

Parameters Real numbers $\mu$ and $\sigma^2 > 0$.

Sample Space The real numbers.

Probability Density Function

\[ f(x) = \frac{1}{\sqrt{2\pi}\, \sigma} e^{-(x - \mu)^2 / 2\sigma^2}, \qquad -\infty < x < \infty \]

Moments

\begin{align*}
E(X) &= \mu \\
\operatorname{var}(X) &= \sigma^2 \\
E\{(X - \mu)^3\} &= 0 \\
E\{(X - \mu)^4\} &= 3\sigma^4
\end{align*}

Linear Transformations If $X$ is $N(\mu, \sigma^2)$ distributed, then $aX + b$ is $N(a\mu + b, a^2\sigma^2)$ distributed.

Addition Rule If $X_1, \ldots, X_k$ are independent random variables, $X_i$ being $N(\mu_i, \sigma_i^2)$ distributed, then $X_1 + \cdots + X_k$ is a $N(\mu_1 + \cdots + \mu_k, \sigma_1^2 + \cdots + \sigma_k^2)$ random variable.

Theorem The fact that the probability density function integrates to one is equivalent to the integral

\[ \int_{-\infty}^{\infty} e^{-z^2/2} \, dz = \sqrt{2\pi} \]

Relation to Other Distributions If $Z$ is $N(0, 1)$ distributed, then $Z^2$ is $\mathrm{Gam}(\tfrac{1}{2}, \tfrac{1}{2}) = \mathrm{chi}^2(1)$ distributed. Also related to the Student t, Snedecor F, and Cauchy distributions (see those sections).
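The Gaussian integral and the fourth-moment formula can be confirmed numerically. The sketch below uses a plain midpoint rule on a truncated interval; the integration helper, the cutoff at plus or minus 12, and the step count are assumptions chosen for illustration, not part of the notes.

```python
import math

def midpoint_integral(g, a: float, b: float, steps: int) -> float:
    """Composite midpoint rule for the integral of g over (a, b)."""
    h = (b - a) / steps
    return h * sum(g(a + (i + 0.5) * h) for i in range(steps))

def std_normal_pdf(z: float) -> float:
    """Density of N(0, 1)."""
    return math.exp(-z * z / 2) / math.sqrt(2 * math.pi)

# Integral of exp(-z^2 / 2); the tails beyond |z| = 12 are negligible.
gauss_integral = midpoint_integral(lambda z: math.exp(-z * z / 2), -12.0, 12.0, 20_000)

# Fourth central moment of N(0, 1), which should equal 3 sigma^4 with sigma = 1.
fourth_moment = midpoint_integral(lambda z: z**4 * std_normal_pdf(z), -12.0, 12.0, 20_000)
```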

12 Exponential Distribution

Abbreviation $\mathrm{Exp}(\lambda)$.

Type Continuous.

Rationales

Lifetime of object that does not age.

Waiting time or interarrival time in Poisson process.

Continuous analog of the geometric distribution.

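Parameter Real number $\lambda > 0$.

Sample Space The interval $(0, \infty)$ of the real numbers.

Probability Density Function

\[ f(x) = \lambda e^{-\lambda x}, \qquad 0 < x < \infty \]

Cumulative Distribution Function

\[ F(x) = 1 - e^{-\lambda x}, \qquad 0 < x < \infty \]

Moments

\begin{align*}
E(X) &= \frac{1}{\lambda} \\
\operatorname{var}(X) &= \frac{1}{\lambda^2}
\end{align*}

Addition Rule If $X_1, \ldots, X_k$ are IID $\mathrm{Exp}(\lambda)$ random variables, then $X_1 + \cdots + X_k$ is a $\mathrm{Gam}(k, \lambda)$ random variable.

Relation to Other Distributions $\mathrm{Exp}(\lambda) = \mathrm{Gam}(1, \lambda)$.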

13 Gamma Distribution

Abbreviation $\mathrm{Gam}(\alpha, \lambda)$.

Type Continuous.

Rationales

Sum of IID exponential random variables.

Conjugate prior for exponential, Poisson, or normal precision family.

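Parameters Real numbers $\alpha > 0$ and $\lambda > 0$.

Sample Space The interval $(0, \infty)$ of the real numbers.

Probability Density Function

\[ f(x) = \frac{\lambda^\alpha}{\Gamma(\alpha)} x^{\alpha - 1} e^{-\lambda x}, \qquad 0 < x < \infty \]

where $\Gamma(\alpha)$ is defined by (13.1) below.

Moments

\begin{align*}
E(X) &= \frac{\alpha}{\lambda} \\
\operatorname{var}(X) &= \frac{\alpha}{\lambda^2}
\end{align*}

Addition Rule If $X_1, \ldots, X_k$ are independent random variables, $X_i$ being $\mathrm{Gam}(\alpha_i, \lambda)$ distributed, then $X_1 + \cdots + X_k$ is a $\mathrm{Gam}(\alpha_1 + \cdots + \alpha_k, \lambda)$ random variable.

Normal Approximation If $\alpha$ is large, then

\[ \mathrm{Gam}(\alpha, \lambda) \approx N\left( \frac{\alpha}{\lambda}, \frac{\alpha}{\lambda^2} \right) \]

Theorem The fact that the probability density function integrates to one is equivalent to the integral

\[ \int_0^\infty x^{\alpha - 1} e^{-\lambda x} \, dx = \frac{\Gamma(\alpha)}{\lambda^\alpha}; \]

the case $\lambda = 1$ is the definition of the gamma function

\[ \Gamma(\alpha) = \int_0^\infty x^{\alpha - 1} e^{-x} \, dx \tag{13.1} \]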

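Relation to Other Distributions

$\mathrm{Exp}(\lambda) = \mathrm{Gam}(1, \lambda)$.

$\mathrm{chi}^2(\nu) = \mathrm{Gam}(\tfrac{\nu}{2}, \tfrac{1}{2})$.

If $X$ and $Y$ are independent, $X$ is $\mathrm{Gam}(\alpha_1, \lambda)$ distributed and $Y$ is $\mathrm{Gam}(\alpha_2, \lambda)$ distributed, then $X / (X + Y)$ is $\mathrm{Beta}(\alpha_1, \alpha_2)$ distributed.

If $Z$ is $N(0, 1)$ distributed, then $Z^2$ is $\mathrm{Gam}(\tfrac{1}{2}, \tfrac{1}{2})$ distributed.

Facts About Gamma Functions Integration by parts in (13.1) establishes the gamma function recursion formula

\[ \Gamma(\alpha + 1) = \alpha \Gamma(\alpha), \qquad \alpha > 0 \tag{13.2} \]

The relationship between the $\mathrm{Exp}(\lambda)$ and $\mathrm{Gam}(1, \lambda)$ distributions gives

\[ \Gamma(1) = 1 \]

and the relationship between the $N(0, 1)$ and $\mathrm{Gam}(\tfrac{1}{2}, \tfrac{1}{2})$ distributions gives

\[ \Gamma(\tfrac{1}{2}) = \sqrt{\pi} \]

Together with the recursion (13.2) these give for any positive integer $n$

\[ \Gamma(n + 1) = n! \]

and

\[ \Gamma(n + \tfrac{1}{2}) = \left( n - \tfrac{1}{2} \right) \left( n - \tfrac{3}{2} \right) \cdots \tfrac{3}{2} \cdot \tfrac{1}{2} \sqrt{\pi} \]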
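The two closed forms for the gamma function at integers and half-integers can be compared against the standard library's `math.gamma`. The helper name `gamma_half_integer` is an assumption made for this sketch.

```python
import math

def gamma_half_integer(n: int) -> float:
    """Gamma(n + 1/2) computed as (n - 1/2)(n - 3/2) ... (3/2)(1/2) sqrt(pi).
    For n = 0 the product is empty and the result is sqrt(pi)."""
    value = math.sqrt(math.pi)
    for j in range(n):
        value *= j + 0.5
    return value

# Pairs (closed form, library value) for n = 1..10.
half_integer_checks = [(gamma_half_integer(n), math.gamma(n + 0.5)) for n in range(1, 11)]
factorial_checks = [(float(math.factorial(n)), math.gamma(n + 1)) for n in range(1, 11)]
```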

14 Beta Distribution

Abbreviation $\mathrm{Beta}(\alpha_1, \alpha_2)$.

Type Continuous.

Rationales

Ratio of gamma random variables.

Conjugate prior for binomial or negative binomial family.

Parameters Real numbers $\alpha_1 > 0$ and $\alpha_2 > 0$.

Sample Space The interval (0, 1) of the real numbers.

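Probability Density Function

\[ f(x) = \frac{\Gamma(\alpha_1 + \alpha_2)}{\Gamma(\alpha_1) \Gamma(\alpha_2)} x^{\alpha_1 - 1} (1 - x)^{\alpha_2 - 1}, \qquad 0 < x < 1 \]

where $\Gamma(\alpha)$ is defined by (13.1) above.

Moments

\begin{align*}
E(X) &= \frac{\alpha_1}{\alpha_1 + \alpha_2} \\
\operatorname{var}(X) &= \frac{\alpha_1 \alpha_2}{(\alpha_1 + \alpha_2)^2 (\alpha_1 + \alpha_2 + 1)}
\end{align*}

Theorem The fact that the probability density function integrates to one is equivalent to the integral

\[ \int_0^1 x^{\alpha_1 - 1} (1 - x)^{\alpha_2 - 1} \, dx = \frac{\Gamma(\alpha_1) \Gamma(\alpha_2)}{\Gamma(\alpha_1 + \alpha_2)} \]

Relation to Other Distributions

If $X$ and $Y$ are independent, $X$ is $\mathrm{Gam}(\alpha_1, \lambda)$ distributed and $Y$ is $\mathrm{Gam}(\alpha_2, \lambda)$ distributed, then $X / (X + Y)$ is $\mathrm{Beta}(\alpha_1, \alpha_2)$ distributed.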

Beta(1, 1) = Unif(0, 1).
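The beta integral in the theorem above can be checked numerically against the ratio of gamma functions. This Python sketch uses a midpoint rule; the function names, the step count, and the parameter values 2.5 and 3.5 (both at least 1, so the integrand stays bounded) are assumptions made for illustration.

```python
import math

def beta_integral(a1: float, a2: float, steps: int = 200_000) -> float:
    """Midpoint-rule approximation to the integral over (0, 1)
    of x^(a1 - 1) (1 - x)^(a2 - 1)."""
    h = 1.0 / steps
    total = 0.0
    for i in range(steps):
        x = (i + 0.5) * h
        total += x ** (a1 - 1) * (1 - x) ** (a2 - 1)
    return h * total

def beta_from_gamma(a1: float, a2: float) -> float:
    """Gamma(a1) Gamma(a2) / Gamma(a1 + a2)."""
    return math.gamma(a1) * math.gamma(a2) / math.gamma(a1 + a2)

numeric = beta_integral(2.5, 3.5)      # hypothetical parameter values
closed_form = beta_from_gamma(2.5, 3.5)
```

With both parameters equal to 1 the integrand is constant, which is the Beta(1, 1) = Unif(0, 1) case.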

15 Multinomial Distribution

Abbreviation Multi(n, p).

Type Discrete.

Rationale Multivariate analog of the binomial distribution.

Parameters Real vector $p$ in the parameter space

\[ \left\{ p \in \mathbb{R}^k : 0 \le p_i, \ i = 1, \ldots, k, \text{ and } \sum_{i=1}^{k} p_i = 1 \right\} \tag{15.1} \]

(real vectors whose components are nonnegative and sum to one).

Sample Space The set of vectors

\[ S = \left\{ x \in \mathbb{Z}^k : 0 \le x_i, \ i = 1, \ldots, k, \text{ and } \sum_{i=1}^{k} x_i = n \right\} \tag{15.2} \]

(integer vectors whose components are nonnegative and sum to $n$).

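Probability Mass Function

\[ f(x) = \binom{n}{x} \prod_{i=1}^{k} p_i^{x_i}, \qquad x \in S \]

where

\[ \binom{n}{x} = \frac{n!}{\prod_{i=1}^{k} x_i!} \]

is called a multinomial coefficient.

Moments

\begin{align*}
E(X_i) &= n p_i \\
\operatorname{var}(X_i) &= n p_i (1 - p_i) \\
\operatorname{cov}(X_i, X_j) &= -n p_i p_j, \qquad i \ne j
\end{align*}

Moments (Vector Form)

\begin{align*}
E(X) &= np \\
\operatorname{var}(X) &= nM
\end{align*}

where

\[ M = P - p p^T \]

where $P$ is the diagonal matrix whose vector of diagonal elements is $p$.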

Addition Rule If $X_1, \ldots, X_k$ are independent random vectors, $X_i$ being $\mathrm{Multi}(n_i, p)$ distributed, then $X_1 + \cdots + X_k$ is a $\mathrm{Multi}(n_1 + \cdots + n_k, p)$ random vector.

Normal Approximation If $n$ is large and $p$ is not near the boundary of the parameter space (15.1), then

\[ \mathrm{Multi}(n, p) \approx N(np, nM) \]

Theorem The fact that the probability mass function sums to one is equivalent to the multinomial theorem: for any vector $a$ of real numbers

\[ \sum_{x \in S} \binom{n}{x} \prod_{i=1}^{k} a_i^{x_i} = (a_1 + \cdots + a_k)^n \]

Degeneracy If a vector $a$ exists such that $Ma = 0$, then $\operatorname{var}(a^T X) = 0$.

In particular, the vector $u = (1, 1, \ldots, 1)$ always satisfies $Mu = 0$, so $\operatorname{var}(u^T X) = 0$. This is obvious, since $u^T X = \sum_{i=1}^{k} X_i = n$ by definition of the multinomial distribution, and the variance of a constant is zero. This means a multinomial random vector of dimension $k$ is really of dimension no more than $k - 1$ because it is concentrated on a hyperplane containing the sample space (15.2).

Marginal Distributions Every univariate marginal is binomial

\[ X_i \sim \mathrm{Bin}(n, p_i) \]

Random vectors formed by collapsing categories are not, strictly speaking, marginals, but they are also multinomial. If $A_1, \ldots, A_m$ is a partition of the set $\{1, \ldots, k\}$ and

\begin{align*}
Y_j &= \sum_{i \in A_j} X_i, \qquad j = 1, \ldots, m \\
q_j &= \sum_{i \in A_j} p_i, \qquad j = 1, \ldots, m
\end{align*}

then the random vector $Y$ has a $\mathrm{Multi}(n, q)$ distribution.

Conditional Distributions If $\{i_1, \ldots, i_m\}$ and $\{i_{m+1}, \ldots, i_k\}$ partition the set $\{1, \ldots, k\}$, then the conditional distribution of $X_{i_1}, \ldots, X_{i_m}$ given $X_{i_{m+1}}, \ldots, X_{i_k}$ is $\mathrm{Multi}(n - X_{i_{m+1}} - \cdots - X_{i_k}, q)$, where the parameter vector $q$ has components

\[ q_j = \frac{p_{i_j}}{p_{i_1} + \cdots + p_{i_m}}, \qquad j = 1, \ldots, m \]

Relation to Other Distributions

Each marginal of a multinomial is binomial.

If $X$ is $\mathrm{Bin}(n, p)$, then the vector $(X, n - X)$ is $\mathrm{Multi}\bigl(n, (p, 1 - p)\bigr)$.
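The vector-form variance and the degeneracy property can be made concrete with a small computation. The following Python sketch builds nM = n(P - pp^T) as nested lists and checks that each row sums to zero, which is the statement Mu = 0 for u = (1, ..., 1). The function name and the values n = 10, p = (0.2, 0.3, 0.5) are illustrative assumptions.

```python
def multinomial_variance(n: int, p: list[float]) -> list[list[float]]:
    """Variance matrix n M of Multi(n, p), with M = P - p p^T and P = diag(p)."""
    k = len(p)
    return [[n * ((p[i] if i == j else 0.0) - p[i] * p[j]) for j in range(k)]
            for i in range(k)]

n, p = 10, [0.2, 0.3, 0.5]  # hypothetical example values
V = multinomial_variance(n, p)

# Row sums are the components of n M u for u = (1, ..., 1).
row_sums = [sum(row) for row in V]
```

The diagonal entries reproduce the binomial variances np_i(1 - p_i) and the off-diagonal entries the covariances -np_i p_j.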

16 Bivariate Normal Distribution

Abbreviation See multivariate normal below.

Type Continuous.

Rationales See multivariate normal below.

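Parameters Real vector $\mu$ of dimension 2, real symmetric positive semi-definite matrix $M$ of dimension $2 \times 2$ having the form

\[ M = \begin{pmatrix} \sigma_1^2 & \rho \sigma_1 \sigma_2 \\ \rho \sigma_1 \sigma_2 & \sigma_2^2 \end{pmatrix} \]

where $\sigma_1 > 0$, $\sigma_2 > 0$ and $-1 < \rho < +1$.

Sample Space The Euclidean space $\mathbb{R}^2$.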

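Probability Density Function

\begin{align*}
f(x) &= \frac{1}{2\pi} \det(M)^{-1/2} \exp\left( -\tfrac{1}{2} (x - \mu)^T M^{-1} (x - \mu) \right) \\
&= \frac{1}{2\pi \sqrt{1 - \rho^2}\, \sigma_1 \sigma_2} \exp\left( -\frac{1}{2(1 - \rho^2)} \left[ \left( \frac{x_1 - \mu_1}{\sigma_1} \right)^2 - 2\rho \left( \frac{x_1 - \mu_1}{\sigma_1} \right) \left( \frac{x_2 - \mu_2}{\sigma_2} \right) + \left( \frac{x_2 - \mu_2}{\sigma_2} \right)^2 \right] \right), \qquad x \in \mathbb{R}^2
\end{align*}

Moments

\begin{align*}
E(X_i) &= \mu_i, \qquad i = 1, 2 \\
\operatorname{var}(X_i) &= \sigma_i^2, \qquad i = 1, 2 \\
\operatorname{cov}(X_1, X_2) &= \rho \sigma_1 \sigma_2 \\
\operatorname{cor}(X_1, X_2) &= \rho
\end{align*}

Moments (Vector Form)

\begin{align*}
E(X) &= \mu \\
\operatorname{var}(X) &= M
\end{align*}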

Linear Transformations See multivariate normal below.

Addition Rule See multivariate normal below.

Marginal Distributions $X_i$ is $N(\mu_i, \sigma_i^2)$ distributed, $i = 1, 2$.

Conditional Distributions The conditional distribution of $X_2$ given $X_1$ is

\[ N\left( \mu_2 + \rho \frac{\sigma_2}{\sigma_1} (x_1 - \mu_1), \ (1 - \rho^2) \sigma_2^2 \right) \]
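The conditional distribution above can be computed directly, and it agrees with the partitioned formula in the Multivariate Normal section when both blocks are scalars (with the roles of the two blocks swapped, since here the conditioning is on $X_1$). The Python sketch below compares the two; the function names and numerical values are assumptions made for illustration.

```python
def conditional_x2_given_x1(mu1, mu2, sigma1, sigma2, rho, x1):
    """Mean and variance of X2 given X1 = x1 from the bivariate formula."""
    mean = mu2 + rho * (sigma2 / sigma1) * (x1 - mu1)
    variance = (1 - rho**2) * sigma2**2
    return mean, variance

def conditional_from_matrix(mu, M, x1):
    """Same quantities from the variance matrix M, using scalar blocks:
    mean mu2 + M21 M11^{-1} (x1 - mu1), variance M22 - M21 M11^{-1} M12."""
    mean = mu[1] + M[1][0] / M[0][0] * (x1 - mu[0])
    variance = M[1][1] - M[1][0] * M[0][1] / M[0][0]
    return mean, variance

# Hypothetical example values.
mu1, mu2, sigma1, sigma2, rho, x1 = 1.0, -2.0, 2.0, 3.0, 0.5, 2.5
M = [[sigma1**2, rho * sigma1 * sigma2],
     [rho * sigma1 * sigma2, sigma2**2]]

from_formula = conditional_x2_given_x1(mu1, mu2, sigma1, sigma2, rho, x1)
from_matrix = conditional_from_matrix([mu1, mu2], M, x1)
```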

17 Multivariate Normal Distribution

Abbreviation $N(\mu, M)$

Type Continuous.

Rationales

Multivariate analog of the univariate normal distribution.

Limiting distribution in the multivariate central limit theorem.

Parameters Real vector $\mu$ of dimension $k$, real symmetric positive semi-definite matrix $M$ of dimension $k \times k$.

Sample Space The Euclidean space $\mathbb{R}^k$.

Probability Density Function If $M$ is (strictly) positive definite,

\[ f(x) = (2\pi)^{-k/2} \det(M)^{-1/2} \exp\left( -\tfrac{1}{2} (x - \mu)^T M^{-1} (x - \mu) \right), \qquad x \in \mathbb{R}^k \]

Otherwise there is no density ($X$ is concentrated on a hyperplane).

Moments (Vector Form)

\begin{align*}
E(X) &= \mu \\
\operatorname{var}(X) &= M
\end{align*}

Linear Transformations If $X$ is $N(\mu, M)$ distributed, then $a + BX$, where $a$ is a constant vector and $B$ is a constant matrix of dimensions such that the vector addition and matrix multiplication make sense, has the $N(a + B\mu, BMB^T)$ distribution.

Addition Rule If $X_1, \ldots, X_k$ are independent random vectors, $X_i$ being $N(\mu_i, M_i)$ distributed, then $X_1 + \cdots + X_k$ is a $N(\mu_1 + \cdots + \mu_k, M_1 + \cdots + M_k)$ random vector.

Degeneracy If a vector $a$ exists such that $Ma = 0$, then $\operatorname{var}(a^T X) = 0$.

Partitioned Vectors and Matrices The random vector and parameters are written in partitioned form

\begin{align*}
X &= \begin{pmatrix} X_1 \\ X_2 \end{pmatrix} \tag{17.1a} \\
\mu &= \begin{pmatrix} \mu_1 \\ \mu_2 \end{pmatrix} \tag{17.1b} \\
M &= \begin{pmatrix} M_{11} & M_{12} \\ M_{21} & M_{22} \end{pmatrix} \tag{17.1c}
\end{align*}

when $X_1$ consists of the first $r$ elements of $X$ and $X_2$ of the other $k - r$ elements and similarly for $\mu_1$ and $\mu_2$.

Marginal Distributions Every marginal of a multivariate normal is normal (univariate or multivariate as the case may be). In partitioned form, the (marginal) distribution of $X_1$ is $N(\mu_1, M_{11})$.

Conditional Distributions Every conditional of a multivariate normal is normal (univariate or multivariate as the case may be). In partitioned form, the conditional distribution of $X_1$ given $X_2$ is

\[ N\bigl( \mu_1 + M_{12} M_{22}^{-} [X_2 - \mu_2], \ M_{11} - M_{12} M_{22}^{-} M_{21} \bigr) \]

where the notation $M_{22}^{-}$ denotes the inverse of the matrix $M_{22}$ if the matrix is invertible and otherwise any generalized inverse.

18 Chi-Square Distribution

Abbreviation $\mathrm{chi}^2(\nu)$ or $\chi^2(\nu)$.

Type Continuous.

Rationales

Sum of squares of IID standard normal random variables.

Sampling distribution of sample variance when data are IID normal.

Asymptotic distribution in Pearson chi-square test.

Asymptotic distribution of log likelihood ratio.

Parameter Real number $\nu > 0$ called degrees of freedom.

Sample Space The interval $(0, \infty)$ of the real numbers.

Probability Density Function

\[ f(x) = \frac{(\tfrac{1}{2})^{\nu/2}}{\Gamma(\tfrac{\nu}{2})} x^{\nu/2 - 1} e^{-x/2}, \qquad 0 < x < \infty. \]

Moments

\begin{align*}
E(X) &= \nu \\
\operatorname{var}(X) &= 2\nu
\end{align*}

Addition Rule If $X_1, \ldots, X_k$ are independent random variables, $X_i$ being $\mathrm{chi}^2(\nu_i)$ distributed, then $X_1 + \cdots + X_k$ is a $\mathrm{chi}^2(\nu_1 + \cdots + \nu_k)$ random variable.

Normal Approximation If $\nu$ is large, then

\[ \mathrm{chi}^2(\nu) \approx N(\nu, 2\nu) \]

Relation to Other Distributions

$\mathrm{chi}^2(\nu) = \mathrm{Gam}(\tfrac{\nu}{2}, \tfrac{1}{2})$.

If $X$ is $N(0, 1)$ distributed, then $X^2$ is $\mathrm{chi}^2(1)$ distributed.

If $X$ and $Y$ are independent, $X$ is $N(0, 1)$ distributed and $Y$ is $\mathrm{chi}^2(\nu)$ distributed, then $X / \sqrt{Y / \nu}$ is $t(\nu)$ distributed.

If $X$ and $Y$ are independent and are $\mathrm{chi}^2(\mu)$ and $\mathrm{chi}^2(\nu)$ distributed, respectively, then $(X / \mu) / (Y / \nu)$ is $F(\mu, \nu)$ distributed.
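The identification of the chi-square distribution with a gamma distribution, and its moments, can be checked numerically. This Python sketch evaluates both densities and integrates with a midpoint rule; the function names, the choice of 5 degrees of freedom, and the truncation of the integral at 200 are assumptions made for illustration.

```python
import math

def gamma_pdf(x: float, alpha: float, lam: float) -> float:
    """Density of Gam(alpha, lam) with rate parameter lam."""
    return lam**alpha / math.gamma(alpha) * x ** (alpha - 1) * math.exp(-lam * x)

def chi2_pdf(x: float, nu: float) -> float:
    """Density of the chi-square distribution with nu degrees of freedom."""
    return 0.5 ** (nu / 2) / math.gamma(nu / 2) * x ** (nu / 2 - 1) * math.exp(-x / 2)

def midpoint_integral(g, a: float, b: float, steps: int) -> float:
    """Composite midpoint rule for the integral of g over (a, b)."""
    h = (b - a) / steps
    return h * sum(g(a + (i + 0.5) * h) for i in range(steps))

nu = 5.0  # hypothetical degrees of freedom
mean = midpoint_integral(lambda x: x * chi2_pdf(x, nu), 0.0, 200.0, 200_000)
var = midpoint_integral(lambda x: (x - nu) ** 2 * chi2_pdf(x, nu), 0.0, 200.0, 200_000)
```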

19 Student's t Distribution

Abbreviation $t(\nu)$.

Type Continuous.

Rationales

Sampling distribution of pivotal quantity $\sqrt{n} (\bar{X}_n - \mu) / S_n$ when data are IID normal.

Marginal for $\mu$ in conjugate prior family for two-parameter normal data.

Parameter Real number $\nu > 0$ called degrees of freedom.

Sample Space The real numbers.

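Probability Density Function

\[ f(x) = \frac{1}{\sqrt{\nu \pi}} \cdot \frac{\Gamma(\tfrac{\nu + 1}{2})}{\Gamma(\tfrac{\nu}{2})} \cdot \frac{1}{\left( 1 + \frac{x^2}{\nu} \right)^{(\nu + 1)/2}}, \qquad -\infty < x < +\infty \]

Moments If $\nu > 1$, then

\[ E(X) = 0. \]

Otherwise the mean does not exist. If $\nu > 2$, then

\[ \operatorname{var}(X) = \frac{\nu}{\nu - 2}. \]

Otherwise the variance does not exist.

Normal Approximation If $\nu$ is large, then

\[ t(\nu) \approx N(0, 1) \]

Relation to Other Distributions

If $X$ and $Y$ are independent, $X$ is $N(0, 1)$ distributed and $Y$ is $\mathrm{chi}^2(\nu)$ distributed, then $X / \sqrt{Y / \nu}$ is $t(\nu)$ distributed.

If $X$ is $t(\nu)$ distributed, then $X^2$ is $F(1, \nu)$ distributed.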

t(1) = Cauchy(0, 1).

20 Snedecor's F Distribution

Abbreviation $F(\mu, \nu)$.

Type Continuous.

Rationale

Ratio of sums of squares for normal data (test statistics in regression

and analysis of variance).

Parameters Real numbers $\mu > 0$ and $\nu > 0$ called numerator degrees of freedom and denominator degrees of freedom, respectively.

Sample Space The interval $(0, \infty)$ of the real numbers.

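Probability Density Function

\[ f(x) = \frac{\Gamma(\tfrac{\mu + \nu}{2}) \mu^{\mu/2} \nu^{\nu/2}}{\Gamma(\tfrac{\mu}{2}) \Gamma(\tfrac{\nu}{2})} \cdot \frac{x^{\mu/2 - 1}}{(\mu x + \nu)^{(\mu + \nu)/2}}, \qquad 0 < x < +\infty \]

Moments If $\nu > 2$, then

\[ E(X) = \frac{\nu}{\nu - 2}. \]

Otherwise the mean does not exist.

Relation to Other Distributions

If $X$ and $Y$ are independent and are $\mathrm{chi}^2(\mu)$ and $\mathrm{chi}^2(\nu)$ distributed, respectively, then $(X / \mu) / (Y / \nu)$ is $F(\mu, \nu)$ distributed.

If $X$ is $t(\nu)$ distributed, then $X^2$ is $F(1, \nu)$ distributed.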

21 Cauchy Distribution

Abbreviation $\mathrm{Cauchy}(\mu, \sigma)$.

Type Continuous.

Rationales

Very heavy tailed distribution.

Counterexample to law of large numbers.

Parameters Real numbers $\mu$ and $\sigma > 0$.

Sample Space The real numbers.

Probability Density Function

\[ f(x) = \frac{1}{\pi \sigma} \cdot \frac{1}{1 + \left( \frac{x - \mu}{\sigma} \right)^2}, \qquad -\infty < x < +\infty \]

Moments No moments exist.

Addition Rule If $X_1, \ldots, X_n$ are IID $\mathrm{Cauchy}(\mu, \sigma)$ random variables, then $\bar{X}_n = (X_1 + \cdots + X_n) / n$ is also $\mathrm{Cauchy}(\mu, \sigma)$.

Relation to Other Distributions

t(1) = Cauchy(0, 1).
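The addition rule is what makes the Cauchy distribution a counterexample to the law of large numbers: averaging does not reduce spread. The simulation sketch below draws Cauchy variates by the inverse-CDF method (a standard technique not stated in these notes, so treat it as an assumption) and compares the interquartile range of single draws with that of sample means of size 50. The seed, sample sizes, and helper names are arbitrary illustrative choices.

```python
import math
import random

def cauchy_draw(rng: random.Random, mu: float, sigma: float) -> float:
    """Inverse-CDF draw: mu + sigma * tan(pi * (U - 1/2)) with U uniform on (0, 1)."""
    return mu + sigma * math.tan(math.pi * (rng.random() - 0.5))

def interquartile_range(values: list[float]) -> float:
    """Difference between the empirical upper and lower quartiles."""
    ordered = sorted(values)
    m = len(ordered)
    return ordered[(3 * m) // 4] - ordered[m // 4]

rng = random.Random(5101)            # fixed seed so the run is reproducible
mu, sigma, n, reps = 0.0, 1.0, 50, 4000

singles = [cauchy_draw(rng, mu, sigma) for _ in range(reps)]
means = [sum(cauchy_draw(rng, mu, sigma) for _ in range(n)) / n for _ in range(reps)]

iqr_single = interquartile_range(singles)
iqr_mean = interquartile_range(means)
```

Both interquartile ranges should come out near 2 sigma, since the quartiles of Cauchy(mu, sigma) sit at mu minus sigma and mu plus sigma; for a distribution with finite variance the second would instead shrink roughly like one over the square root of n.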

22 Laplace Distribution

Abbreviation $\mathrm{Laplace}(\mu, \sigma)$.

Type Continuous.

Rationales The sample median is the maximum likelihood estimate of

the location parameter.

Parameters Real numbers $\mu$ and $\sigma > 0$, called the mean and standard deviation, respectively.

Sample Space The real numbers.

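Probability Density Function

\[ f(x) = \frac{\sqrt{2}}{2\sigma} \exp\left( -\sqrt{2} \left| \frac{x - \mu}{\sigma} \right| \right), \qquad -\infty < x < \infty \]

Moments

\begin{align*}
E(X) &= \mu \\
\operatorname{var}(X) &= \sigma^2
\end{align*}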

Vous aimerez peut-être aussi

- Ib History Command Term PostersDocument6 pagesIb History Command Term Postersapi-263601302100% (4)

- Existential ThreatsDocument6 pagesExistential Threatslolab_4Pas encore d'évaluation

- Elementary Properties of Cyclotomic Polynomials by Yimin GeDocument8 pagesElementary Properties of Cyclotomic Polynomials by Yimin GeGary BlakePas encore d'évaluation

- Chapter 2Document25 pagesChapter 2McNemarPas encore d'évaluation

- B671-672 Supplemental Notes 2 Hypergeometric, Binomial, Poisson and Multinomial Random Variables and Borel SetsDocument13 pagesB671-672 Supplemental Notes 2 Hypergeometric, Binomial, Poisson and Multinomial Random Variables and Borel SetsDesmond SeahPas encore d'évaluation

- My Notes For Discrete and Continuous Distributions 987654Document28 pagesMy Notes For Discrete and Continuous Distributions 987654Shah FahadPas encore d'évaluation

- Common Probability Distributionsi Math 217/218 Probability and StatisticsDocument10 pagesCommon Probability Distributionsi Math 217/218 Probability and StatisticsGrace nyangasiPas encore d'évaluation

- 5 Continuous Random VariablesDocument11 pages5 Continuous Random VariablesAaron LeãoPas encore d'évaluation

- Christian Notes For Exam PDocument9 pagesChristian Notes For Exam Proy_getty100% (1)

- Crib Sheet For Exam #1 Statistics 211 1 Chapter 1: Descriptive StatisticsDocument5 pagesCrib Sheet For Exam #1 Statistics 211 1 Chapter 1: Descriptive StatisticsVolodymyr ZavidovychPas encore d'évaluation

- Revision - Elements or Probability: Notation For EventsDocument20 pagesRevision - Elements or Probability: Notation For EventsAnthony Saracasmo GerdesPas encore d'évaluation

- Probability Theory Nate EldredgeDocument65 pagesProbability Theory Nate Eldredgejoystick2inPas encore d'évaluation

- Analytic Number Theory 1Document21 pagesAnalytic Number Theory 1Tamás TornyiPas encore d'évaluation

- Probability Laws: Complementary EventDocument23 pagesProbability Laws: Complementary EventalvinhimPas encore d'évaluation

- ChapterStat 2Document77 pagesChapterStat 2Md Aziq Md RaziPas encore d'évaluation

- Probability Distributions: 4.1. Some Special Discrete Random Variables 4.1.1. The Bernoulli and Binomial Random VariablesDocument12 pagesProbability Distributions: 4.1. Some Special Discrete Random Variables 4.1.1. The Bernoulli and Binomial Random VariablesYasso ArbidoPas encore d'évaluation

- Lecture 6. Order Statistics: 6.1 The Multinomial FormulaDocument19 pagesLecture 6. Order Statistics: 6.1 The Multinomial FormulaLya Ayu PramestiPas encore d'évaluation

- Lecture11 (Week 12) UpdatedDocument34 pagesLecture11 (Week 12) UpdatedBrian LiPas encore d'évaluation

- Asymptotic Theory For Maximum Likelihood Estimates in Reduced-Rank Multivariate Generalized Linear ModelsDocument19 pagesAsymptotic Theory For Maximum Likelihood Estimates in Reduced-Rank Multivariate Generalized Linear ModelsLiliana ForzaniPas encore d'évaluation

- NotesDocument47 pagesNotesUbanede EbenezerPas encore d'évaluation

- STAT0009 Introductory NotesDocument4 pagesSTAT0009 Introductory NotesMusa AsadPas encore d'évaluation

- 1.6. Families of DistributionsDocument9 pages1.6. Families of DistributionsNogs MujeebPas encore d'évaluation

- IMOMATH - Polynomials of One VariableDocument21 pagesIMOMATH - Polynomials of One VariableDijkschneier100% (1)

- Analytic Number Theory 1Document47 pagesAnalytic Number Theory 1Tamás TornyiPas encore d'évaluation

- MIT18.650. Statistics For Applications Fall 2016. Problem Set 3Document3 pagesMIT18.650. Statistics For Applications Fall 2016. Problem Set 3yilvasPas encore d'évaluation

- An Orthogonality Property of Legendre Polynomials: ResearchDocument13 pagesAn Orthogonality Property of Legendre Polynomials: ResearchRivaldo Cifuentes MonroyPas encore d'évaluation

- Sobolov SpacesDocument49 pagesSobolov SpacesTony StarkPas encore d'évaluation

- Continuous Distributions: Section 2Document27 pagesContinuous Distributions: Section 2eng ineera100% (1)

- SAA For JCCDocument18 pagesSAA For JCCShu-Bo YangPas encore d'évaluation

- Exam P Formula SheetDocument14 pagesExam P Formula SheetToni Thompson100% (4)

- The Polynomials: 0 1 2 2 N N 0 1 2 N NDocument29 pagesThe Polynomials: 0 1 2 2 N N 0 1 2 N NKaran100% (1)

- CPDDocument5 pagesCPDqwertyPas encore d'évaluation

- Analytic Number Theory Winter 2005 Professor C. StewartDocument35 pagesAnalytic Number Theory Winter 2005 Professor C. StewartTimothy P0% (1)

- Chapter 12Document6 pagesChapter 12masrawy eduPas encore d'évaluation

- Continuous FunctionsDocument19 pagesContinuous Functionsap021Pas encore d'évaluation

- Namma Kalvi - 12th - Business - Maths - Application - Answers - Volume - 2 - 215831Document16 pagesNamma Kalvi - 12th - Business - Maths - Application - Answers - Volume - 2 - 215831satish ThamizharPas encore d'évaluation

- Random Vectors and Multivariate Normal DistributionDocument6 pagesRandom Vectors and Multivariate Normal DistributionJohn SmithPas encore d'évaluation

- Binom HandoutDocument21 pagesBinom Handoutsmorshed03Pas encore d'évaluation

- The Uniform DistributnDocument7 pagesThe Uniform DistributnsajeerPas encore d'évaluation

- CLT PDFDocument4 pagesCLT PDFdPas encore d'évaluation

- Special Discrete Probability DistributionsDocument44 pagesSpecial Discrete Probability DistributionsRaisa RashidPas encore d'évaluation

- Common Probability Distributions: D. Joyce, Clark University Aug 2006Document9 pagesCommon Probability Distributions: D. Joyce, Clark University Aug 2006helloThar213Pas encore d'évaluation

- Slide Chap4Document19 pagesSlide Chap4dangxuanhuy3108Pas encore d'évaluation

- Shannon's Theorems: Math and Science Summer Program 2020Document28 pagesShannon's Theorems: Math and Science Summer Program 2020Anh NguyenPas encore d'évaluation

- STAT 538 Maximum Entropy Models C Marina Meil A Mmp@stat - Washington.eduDocument20 pagesSTAT 538 Maximum Entropy Models C Marina Meil A Mmp@stat - Washington.eduMatthew HagenPas encore d'évaluation

- Analytic Number Theory: Davoud Cheraghi May 13, 2016Document66 pagesAnalytic Number Theory: Davoud Cheraghi May 13, 2016Svend Erik FjordPas encore d'évaluation

- S1) Basic Probability ReviewDocument71 pagesS1) Basic Probability ReviewMAYANK HARSANIPas encore d'évaluation

- MA 2213 Numerical Analysis I: Additional TopicsDocument22 pagesMA 2213 Numerical Analysis I: Additional Topicsyewjun91Pas encore d'évaluation

- Probability Theory (MATHIAS LOWE)Document69 pagesProbability Theory (MATHIAS LOWE)Vladimir MorenoPas encore d'évaluation

- SST 204 ModuleDocument84 pagesSST 204 ModuleAtuya Jones100% (1)

- Background For Lesson 5: 1 Cumulative Distribution FunctionDocument5 pagesBackground For Lesson 5: 1 Cumulative Distribution FunctionNguyễn ĐứcPas encore d'évaluation

- MA2216 SummaryDocument1 pageMA2216 SummaryKhor Shi-Jie100% (1)

- Answer: Given Y 3x + 1, XDocument9 pagesAnswer: Given Y 3x + 1, XBALAKRISHNANPas encore d'évaluation

- Lecture Notes Week 1Document10 pagesLecture Notes Week 1tarik BenseddikPas encore d'évaluation

- Statistics Continuous DisributionDocument28 pagesStatistics Continuous Disributionmyrul_shafiqPas encore d'évaluation

- Lecture 09Document15 pagesLecture 09nandish mehtaPas encore d'évaluation

- Multi Varia Da 1Document59 pagesMulti Varia Da 1pereiraomarPas encore d'évaluation

- Maths Model Paper 2Document7 pagesMaths Model Paper 2ravibiriPas encore d'évaluation

- Green's Function Estimates for Lattice Schrödinger Operators and Applications. (AM-158)D'EverandGreen's Function Estimates for Lattice Schrödinger Operators and Applications. (AM-158)Pas encore d'évaluation

- Nonlinear Functional Analysis and Applications: Proceedings of an Advanced Seminar Conducted by the Mathematics Research Center, the University of Wisconsin, Madison, October 12-14, 1970D'EverandNonlinear Functional Analysis and Applications: Proceedings of an Advanced Seminar Conducted by the Mathematics Research Center, the University of Wisconsin, Madison, October 12-14, 1970Louis B. RallPas encore d'évaluation

- Hima OPC Server ManualDocument36 pagesHima OPC Server ManualAshkan Khajouie100% (3)

- Lithuania DalinaDocument16 pagesLithuania DalinaStunt BackPas encore d'évaluation

- Talking Art As The Spirit Moves UsDocument7 pagesTalking Art As The Spirit Moves UsUCLA_SPARCPas encore d'évaluation

- Obesity - The Health Time Bomb: ©LTPHN 2008Document36 pagesObesity - The Health Time Bomb: ©LTPHN 2008EVA PUTRANTO100% (2)

- Week 7Document24 pagesWeek 7Priyank PatelPas encore d'évaluation

- Skills Redux (10929123)Document23 pagesSkills Redux (10929123)AndrewCollas100% (1)

- Lesson 6 ComprogDocument25 pagesLesson 6 ComprogmarkvillaplazaPas encore d'évaluation

- 18 June 2020 12:03: New Section 1 Page 1Document4 pages18 June 2020 12:03: New Section 1 Page 1KarthikNayakaPas encore d'évaluation

- 2SB817 - 2SD1047 PDFDocument4 pages2SB817 - 2SD1047 PDFisaiasvaPas encore d'évaluation

- Tribal Banditry in Ottoman Ayntab (1690-1730)Document191 pagesTribal Banditry in Ottoman Ayntab (1690-1730)Mahir DemirPas encore d'évaluation

- Huawei R4815N1 DatasheetDocument2 pagesHuawei R4815N1 DatasheetBysPas encore d'évaluation

- AntibioticsDocument36 pagesAntibioticsBen Paolo Cecilia RabaraPas encore d'évaluation

- Modulo EminicDocument13 pagesModulo EminicAndreaPas encore d'évaluation

- Heterogeneity in Macroeconomics: Macroeconomic Theory II (ECO-504) - Spring 2018Document5 pagesHeterogeneity in Macroeconomics: Macroeconomic Theory II (ECO-504) - Spring 2018Gabriel RoblesPas encore d'évaluation

- Application Activity Based Costing (Abc) System As An Alternative For Improving Accuracy of Production CostDocument19 pagesApplication Activity Based Costing (Abc) System As An Alternative For Improving Accuracy of Production CostM Agus SudrajatPas encore d'évaluation

- Chapter 2 ProblemsDocument6 pagesChapter 2 ProblemsYour MaterialsPas encore d'évaluation

- Leigh Shawntel J. Nitro Bsmt-1A Biostatistics Quiz No. 3Document6 pagesLeigh Shawntel J. Nitro Bsmt-1A Biostatistics Quiz No. 3Lue SolesPas encore d'évaluation

- Dtu Placement BrouchureDocument25 pagesDtu Placement BrouchureAbhishek KumarPas encore d'évaluation

- 5c3f1a8b262ec7a Ek PDFDocument5 pages5c3f1a8b262ec7a Ek PDFIsmet HizyoluPas encore d'évaluation

- The Homework Song FunnyDocument5 pagesThe Homework Song Funnyers57e8s100% (1)

- Oracle Forms & Reports 12.2.1.2.0 - Create and Configure On The OEL 7Document50 pagesOracle Forms & Reports 12.2.1.2.0 - Create and Configure On The OEL 7Mario Vilchis Esquivel100% (1)

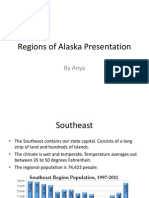

- Regions of Alaska PresentationDocument15 pagesRegions of Alaska Presentationapi-260890532Pas encore d'évaluation

- Chapter 3 - Organization Structure & CultureDocument63 pagesChapter 3 - Organization Structure & CultureDr. Shuva GhoshPas encore d'évaluation

- BNF Pos - StockmockDocument14 pagesBNF Pos - StockmockSatish KumarPas encore d'évaluation

- Revenue and Expenditure AuditDocument38 pagesRevenue and Expenditure AuditPavitra MohanPas encore d'évaluation

- Costbenefit Analysis 2015Document459 pagesCostbenefit Analysis 2015TRÂM NGUYỄN THỊ BÍCHPas encore d'évaluation

- Radiation Safety Densitometer Baker PDFDocument4 pagesRadiation Safety Densitometer Baker PDFLenis CeronPas encore d'évaluation

- DN Cross Cutting IssuesDocument22 pagesDN Cross Cutting Issuesfatmama7031Pas encore d'évaluation