Journal of Computational Information Systems 6:9 (2010) 2959-2966. Available at http://www.Jofcis.com

Ontology-based Clustering Algorithm with Feature Weights

Lei ZHANG, Zhichao WANG

School of Computer Science and Technology, China University of Mining and Technology, Xuzhou 221116, Jiangsu Province, China

Abstract

Multidimensional data has multiple features. Different features cannot be treated as equivalent, because they have different impacts on clustering results. To solve this problem, this paper proposes an ontology-based clustering algorithm with feature weights (OFW-Clustering) that reflects the different importance of different features. Prior knowledge described with an ontology is introduced into clustering. An ontology-based domain feature graph is built to calculate the feature weights used in clustering. Each feature is viewed as one ontology semantic node. The weight of a feature in the ontology tree is calculated according to the feature's overall relevancy. Under the guidance of the prior knowledge, the parameter values that give the optimal clustering results can be obtained by continuously varying α and β. Experiments show that the ontology-based clustering algorithm with feature weights does a better job of capturing domain knowledge and gives a more accurate result.

Keywords: Ontology; Cluster; Feature Weights

1. Introduction

In most clustering methods, each feature has equal importance. In most real-world problems, however, features are not equally important. Feature weighting has therefore become a hot research topic in cluster analysis. Xiao-jiang Kong brought forward an adaptive algorithm for the feature weights of feature-weighted FCM [1]. Richard J. Hathaway proposed a unified framework for performing density-weighted FCM clustering of feature and relational datasets [2]. Chenglong Tang proposed a new kind of data-weighted fuzzy c-means clustering approach [3]. Chuang-Cheng Chiu proposed a weighted feature C-means clustering algorithm (WF-Cmeans) to group all prior cases into several clusters [4]. Zhiqiang Bao proposed a new general weighted fuzzy clustering algorithm to deal with mixed data that includes different sample distributions and different features [5][6]. Eyal Krupka proposed a new learning framework that incorporates meta-features [7]. Huaiguo Fu proposed a new feature-weighted fuzzy c-means clustering algorithm that is able to obtain the importance of each feature [8].

These approaches do not consider domain knowledge when assigning feature weights. In fact, weights should be assigned to each feature according to domain knowledge. In [9], weak knowledge in the form of feature relative importance (FRI) is presented and explained. Feature relative importance is a real-valued approximation of a feature's importance provided by experts.

This paper focuses on calculating feature weights from an ontology. Different features contribute differently to clustering. Prior knowledge is introduced through the ontology. The data can be better understood at the domain

Corresponding author. Email addresses: zhanglei@cumt.edu.cn (Lei ZHANG).

1553-9105/ Copyright 2010 Binary Information Press September, 2010


L. Zhang et al. /Journal of Computational Information Systems 6:9(2010) 2959-2966

knowledge level, and the OFW-Clustering algorithm is proposed. The remainder of the paper is organized as follows. Section 2 presents the ontology-based feature weight calculation. Section 3 introduces the OFW-Clustering algorithm. Section 4 presents experiments. Our conclusions are presented in Section 5.

2. Ontology-based Feature Weighting Calculation

2.1. Clustering with Feature Definition

Suppose

$$\mathrm{Database} = \{\, t_1(f_{11}, f_{12}, \dots, f_{1n_1}),\ t_2(f_{21}, f_{22}, \dots, f_{2n_2}),\ \dots,\ t_m(f_{m1}, f_{m2}, \dots, f_{mn_m}) \,\}, \qquad \sum_{i=1}^{m} n_i = N .$$

Here $t_i \in \mathrm{Database}$ represents the i-th table, and $f_{ij} \in t_i$ represents the j-th feature of table $t_i$. The feature set S contains all of the features of Database:

$$S = \{f_{11}, f_{12}, \dots, f_{1n_1}\} \cup \{f_{21}, f_{22}, \dots, f_{2n_2}\} \cup \dots \cup \{f_{m1}, f_{m2}, \dots, f_{mn_m}\} = \{f_1, f_2, \dots, f_N\}. \qquad (1)$$

Definition 1. (Clustering with Feature Weight) For the feature set S = {f1, f2, …, fm}, there is a corresponding feature weight set Weight = {w1, w2, …, wm}. The feature weights enter the cluster distance calculation. The weighted Euclidean distance between two data records d1 and d2 is then the p = 2 case of the weighted distance given in Section 3.1:

$$d(d_1, d_2) = \Bigl( \sum_{k=1}^{m} w_k \,(d_{1k} - d_{2k})^2 \Bigr)^{1/2},$$

where $d_{1k}$ and $d_{2k}$ are the values of feature $f_k$ in the two records.

2.2. Feature Weight Calculation

Based on the features of the domain ontology and its basic ideas, the concept of a domain ontology feature and a method for calculating ontology feature weights are put forward below.

(1) Each feature is viewed as one ontology semantic node. The feature set is classified according to semantic rules. Each feature of the data belongs to exactly one semantic category.

(2) The weight of a feature in the ontology tree is calculated according to the feature's overall relevancy.

Definition 2. (Domain Ontology-feature Graph) The ontology-feature graph represents a domain knowledge model based on a semantic ontology and targets the specific field of the data. It consists of a concept layer, an attribute layer, and a data-type layer.

The concept layer consists of the ontology concept set Ontology-Concept = {Cpt.1, Cpt.2, …, Cpt.ncpt}. Each object is abstracted as a concept layer node. The attribute layer consists of the ontology feature set Ontology-Attribute = {Ab.1, Ab.2, …, Ab.nab}, which describes each concept layer node in terms of its features. The data-type layer describes the attribute layer nodes at the level of basic metadata. It consists of Ontology-Datatype = {Dt.1, Dt.2, …, Dt.ndt}.

Each semantic concept node corresponds to an abstract object concept. A concept layer node is described by attribute nodes or by other elements of the concept layer. As shown in Figure 1, solid lines connect concept layer nodes and attribute layer nodes and represent relevance between them, while dotted lines attach the data-type layer nodes, which are described independently and carry no relevance.

Fig. 1 Ontology Feature Graph

Fig. 2 Ontology Feature Tree

(Figure legend: node types are Concept and Property; link types are partOf, propertyOf, and no relativity. The data-type layer contains DataType.1, DataType.2, and DataType.3.)

In the domain ontology feature graph, the ontology nodes for data objects are constructed from the semantic features of the data source and from the logical storage model of the database. Depending on the database design paradigm, each database table is abstracted into one or more ontology concepts, which form the concept layer nodes of the graph. The relevant properties of each concept are abstracted into attribute nodes, and these nodes form the ontology attribute layer. The data types of the attributes are refined into semantically extended types, which form the data-type layer. The correlation between concept layer nodes and attribute layer nodes is defined and calculated as follows.

Definition 3. (Semantic Ontology Correlation) Two ontology concepts are related by a predicate such as part-of or is-a, written as a triple <Subject, Predicate, Object>. In this paper, the predicate set Predicate = {partOf, propertyOf} is used to describe ontology relations. The correlation between two ontology concepts is initialized from the data type of the properties. Using the predicate set, the ontology relations Concept-Concept and Concept-Attribute are defined as CC and CA:

CC = <Concept.i, partOf, Concept.j>
CA = <Concept.i, propertyOf, Attribute.k>

where Concept.i, Concept.j ∈ Ontology-Concept; Attribute.k ∈ Ontology-Attribute; i, j < ncpt; k < nab.

Assume that Concept.i has attributes (Ab.i1, Ab.i2, …, Ab.ini) and Concept.j has attributes (Ab.j1, Ab.j2, …, Ab.jnj), with ni, nj < nab. The initial correlation between Concept.i and Concept.j is the ratio of shared attributes to all attributes of the two concepts:

$$\mathrm{Correlation}(\mathrm{Concept}.i, \mathrm{Concept}.j) = \frac{\bigl|\, \mathrm{Concept}.i(Ab.i_1, Ab.i_2, \dots, Ab.i_{n_i}) \cap \mathrm{Concept}.j(Ab.j_1, Ab.j_2, \dots, Ab.j_{n_j}) \,\bigr|}{\bigl|\, \mathrm{Concept}.i(Ab.i_1, Ab.i_2, \dots, Ab.i_{n_i}) \cup \mathrm{Concept}.j(Ab.j_1, Ab.j_2, \dots, Ab.j_{n_j}) \,\bigr|}$$
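The initial concept correlation in Definition 3 is the size of the intersection of two attribute sets divided by the size of their union. A minimal Python sketch follows; the function name `concept_correlation`, the two example concepts, and the handling of empty attribute sets are illustrative assumptions, not part of the paper.

```python
def concept_correlation(attrs_i, attrs_j):
    """Initial correlation of two concepts: |intersection| / |union| of attribute sets."""
    a, b = set(attrs_i), set(attrs_j)
    union = a | b
    if not union:
        # Assumption: two concepts without attributes are treated as uncorrelated.
        return 0.0
    return len(a & b) / len(union)


# Hypothetical concepts; the attribute names are borrowed from Table 1 for illustration.
customer = {"Name", "Sex", "Birthday", "Address"}
contact = {"Name", "Address", "EMail", "QQ"}

# Two shared attributes out of six distinct ones.
print(concept_correlation(customer, contact))
```

Two concepts with identical attribute sets get correlation 1, and two concepts with no shared attribute get 0.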

Definition 4. (Domain Ontology Feature Tree) The domain ontology feature tree (Fig. 2) describes the relationship between concepts and features. It includes all nodes of the concept layer and the attribute layer of the domain ontology feature graph, and it is used to calculate the correlation of ontology concepts and ontology attributes. The tree is represented as a set of triples, Ontology-Tree = {<Cpt.root, partOf, Cpt.1>, <Cpt.root, partOf, Cpt.2>, <Cpt.1, propertyOf, Ab.1>, …}. The leaf nodes of the tree are domain ontology features and the branch nodes are ontology concepts. Based on the domain ontology feature tree, the correlation between two features is calculated as follows.

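$$\mathrm{Correlation}(Ab.i, Ab.j) = \frac{\bigl(\mathrm{Height}(Ab.i) + \mathrm{Height}(Ab.j)\bigr) \cdot \alpha + \mathrm{DataType}(Ab.i, Ab.j) \cdot \beta}{\bigl(\mathrm{Distance}(Ab.i, Ab.j) + \alpha\bigr) + 2 \cdot \mathrm{Max}\bigl(\mathrm{Height}(Ab.i), \mathrm{Height}(Ab.j)\bigr) + \beta} \qquad (2)$$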

where Height(Ab.i) and Height(Ab.j) give the level of the features Ab.i and Ab.j in the domain ontology tree. DataType(Ab.i, Ab.j) is a Boolean function that returns 1 if the data types of features Ab.i and Ab.j are the same and 0 otherwise. Distance(Ab.i, Ab.j) is the length of the shortest path between the two features in the domain ontology tree. Max(Height(Ab.i), Height(Ab.j)) is the larger of the two levels. α and β are variable parameters with values in the range (0, 1): α balances the proportion between Height and Distance, and β adjusts the contribution of the data types to the similarity calculation.

As an example, consider the correlation between Attrib.4 and Attrib.3 in Figure 2. Height(Ab.4) = 3 and Height(Ab.3) = 2. The data type of Ab.4 is not the same as that of Ab.3, so DataType(Ab.4, Ab.3) = 0. Distance(Ab.4, Ab.3) = 5. Therefore Correlation(Ab.4, Ab.3) = (5α)/(5 + α + 2·3 + β) = (5α)/(11 + α + β).

Next consider the correlation between Ab.4 and Ab.2. Height(Ab.4) = 3 and Height(Ab.2) = 2. The data type of Ab.4 is not the same as that of Ab.2, so DataType(Ab.4, Ab.2) = 0. Distance(Ab.4, Ab.2) = 3. Therefore Correlation(Ab.4, Ab.2) = (5α)/(3 + α + 2·3 + β) = (5α)/(9 + α + β).

Clearly (5α)/(9 + α + β) > (5α)/(11 + α + β), that is, Correlation(Ab.4, Ab.2) > Correlation(Ab.4, Ab.3): the similarity between Ab.4 and Ab.2 is greater than that between Ab.4 and Ab.3. Adjusting the parameters α and β changes the correlation values, but it does not change their order: regardless of the values of α and β, Correlation(Ab.4, Ab.2) > Correlation(Ab.4, Ab.3) always holds. Appropriate values of α and β are selected through multiple tests so as to obtain a better clustering result.

Given the correlation between two features as calculated above, the feature weight is calculated as follows:

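$$\mathrm{Weight}(Ab.k) = \underset{i = 1, \dots, m}{\mathrm{Average}}\ \mathrm{Correlation}(Ab.k, Ab.i) \qquad (3)$$

where m is the size of the feature set, as in Definition 1.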
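Formulas (2) and (3) can be traced on a small tree. The Python sketch below is only an illustration under stated assumptions: the triples, the data types, and all function names are hypothetical, and the tree shape is chosen so that Height and Distance reproduce the numbers of the worked example (it is not claimed to be the tree of Fig. 2). Height is taken as the number of edges from the root, and the average in Formula (3) includes the feature's correlation with itself, as the loops in the algorithm of Section 3.2 suggest.

```python
# Hypothetical feature tree as (parent, predicate, child) triples.
TRIPLES = [
    ("Cpt.root", "partOf", "Cpt.1"),
    ("Cpt.root", "partOf", "Cpt.2"),
    ("Cpt.1", "partOf", "Cpt.4"),
    ("Cpt.1", "propertyOf", "Ab.2"),
    ("Cpt.2", "propertyOf", "Ab.3"),
    ("Cpt.4", "propertyOf", "Ab.4"),
]
# Hypothetical data types; all differ, as in the worked example.
DATA_TYPE = {"Ab.2": "string", "Ab.3": "date", "Ab.4": "int"}

PARENT = {child: parent for parent, _, child in TRIPLES}


def path_to_root(node):
    """Return the list of nodes from `node` up to the root, inclusive."""
    path = [node]
    while path[-1] in PARENT:
        path.append(PARENT[path[-1]])
    return path


def height(node):
    """Number of edges between the root and `node` (assumed reading of Height)."""
    return len(path_to_root(node)) - 1


def distance(a, b):
    """Shortest path length between two nodes, via their lowest common ancestor."""
    path_a, path_b = path_to_root(a), path_to_root(b)
    ancestors_a = set(path_a)
    lca = next(n for n in path_b if n in ancestors_a)
    return path_a.index(lca) + path_b.index(lca)


def correlation(a, b, alpha, beta):
    """Formula (2): feature correlation from height, distance, and data type."""
    same_type = 1 if DATA_TYPE[a] == DATA_TYPE[b] else 0
    numerator = (height(a) + height(b)) * alpha + same_type * beta
    denominator = (distance(a, b) + alpha) + 2 * max(height(a), height(b)) + beta
    return numerator / denominator


def feature_weights(features, alpha, beta):
    """Formula (3): weight of a feature is its average correlation over the feature set."""
    return {
        f: sum(correlation(f, g, alpha, beta) for g in features) / len(features)
        for f in features
    }


alpha, beta = 0.4, 0.6
print(correlation("Ab.4", "Ab.3", alpha, beta))  # (5*alpha) / (11 + alpha + beta)
print(correlation("Ab.4", "Ab.2", alpha, beta))  # (5*alpha) / (9 + alpha + beta)
print(feature_weights(["Ab.2", "Ab.3", "Ab.4"], alpha, beta))
```

Because the two example correlations share the numerator 5α and differ only in the denominator, their order is the same for every α and β in (0, 1).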

3. OFW-Clustering Algorithm

3.1. Similarity Calculation between Data Records

Given the clustering data set CDataset(record1, record2, …, recordn)T and the feature set CFeatures{feature1, feature2, …, featurem}, each data record recordi has m fields, written recordi = (fieldi1, fieldi2, …, fieldim), where fieldij corresponds to featurej. The similarity between two data records recordi and recordj is calculated with feature weights as follows:

$$L_p = \Bigl( \sum_{k=1}^{m} \mathrm{weight}(Ab.k)\, \bigl| \mathit{field}_{ki} - \mathit{field}_{kj} \bigr|^{p} \Bigr)^{1/p}$$

where $\mathit{field}_{ki}$ and $\mathit{field}_{kj}$ denote the values of feature k in recordi and recordj.

When p = 1, L1 is the absolute distance between the data records. L2 is the Euclidean distance. When p → ∞, Lp is the Chebyshev distance. Taking the dimensionality of recordi into account, p = 2 is used: the Euclidean distance is selected to calculate the similarity between data records. When Lp → 0, the records are most similar. When Lp → ∞, the similarity is faint. Once the corresponding feature weights are available, the cluster to which each data record belongs can therefore be determined.
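The weighted distance above can be sketched in a few lines of Python. The function name `weighted_lp`, the example records, and the example weights are assumptions for illustration. The p → ∞ branch returns the largest absolute difference among features with a positive weight, which is the limit of the weighted sum as p grows.

```python
import math


def weighted_lp(record_i, record_j, weights, p=2.0):
    """Weighted L_p distance between two records of equal length."""
    diffs = [abs(a - b) for a, b in zip(record_i, record_j)]
    if math.isinf(p):
        # Limit p -> infinity: weights raised to 1/p tend to 1, so only the
        # largest difference among positively weighted features remains.
        return max((d for d, w in zip(diffs, weights) if w > 0), default=0.0)
    return sum(w * d ** p for w, d in zip(weights, diffs)) ** (1.0 / p)


# Hypothetical records and weights.
r1 = (1.0, 2.0, 3.0)
r2 = (2.0, 4.0, 3.0)
w = (0.5, 0.25, 0.25)

print(weighted_lp(r1, r2, w, p=1))         # weighted absolute distance
print(weighted_lp(r1, r2, w, p=2))         # weighted Euclidean distance
print(weighted_lp(r1, r2, w, p=math.inf))  # Chebyshev limit
```

Setting every weight to 1 recovers the ordinary unweighted distances, which is the baseline used in Experiment 2.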

3.2. OFW-Clustering Algorithm Description

In this algorithm, domain knowledge introduced through the ontology is used to calculate the weights of the clustering features. The domain ontology tree is built from expert ontology knowledge for a given data collection. Features are abstracted as leaf nodes of the ontology feature tree, and further abstraction yields the ontology concepts. The feature weights are obtained by calculation on the ontology feature tree. Under the guidance of the prior knowledge, the parameter values that give the optimal clustering results can be obtained by continuously varying α and β, so more accurate cluster results can also be obtained.

Algorithm: Ontology-based Feature Weight Clustering algorithm (OFW-Clustering).

Input: data set CDataset(record1, record2, …, recordn)T; feature set CFeatures{feature1, feature2, …, featurem}; domain ontology feature tree Ontology-Tree = {<Cpt.root, partOf, Cpt.1>, <Cpt.root, partOf, Cpt.2>, <Cpt.1, propertyOf, Ab.1>, …}.

Output: feature weight set Weight = {w1, w2, …, wm}; clustering results Clusters{C1, C2, …, Ck}.

Algorithm description:

BEGIN
  Input feature set CFeatures{feature1, feature2, …, featurem}
  Build the ontology feature tree from the features.
  For i = 1 to m
    For j = 1 to m                        // m is the size of the feature set
      Correlation(Ab.i, Ab.j)             // calculate the correlation between Ab.i and Ab.j
    EndFor
  EndFor
  For i = 1 to m
    Weight(Ab.i) = ( Σ_{j=1..m} Correlation(Ab.i, Ab.j) ) / m    // average correlation as the feature weight
  EndFor
  Initialize k clusters in CDataset
  do
  begin
    For i = 1 to n do
      L2 = ( Σ_{k=1..m} Weight(Ab.k) (field_ki − field_kj)^2 )^(1/2)    // distance between data records with feature weights
    EndFor
    Get new clusters.
  end
  while (clusters change)                 // stop when the cluster centers no longer change
  Output -> Clusters{C1, C2, …, Ck}
END
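The clustering loop of the algorithm (initialize k clusters, assign records by the weighted L2 distance, recompute the clusters until nothing changes) can be sketched as a weighted k-means. This is a minimal sketch under assumptions the paper does not state: the first k records seed the centers, a center is updated as the mean of its members, and the names `weighted_l2` and `ofw_style_kmeans` as well as the toy data are hypothetical.

```python
def weighted_l2(a, b, weights):
    """Weighted Euclidean distance between two records."""
    return sum(w * (x - y) ** 2 for w, x, y in zip(weights, a, b)) ** 0.5


def ofw_style_kmeans(records, weights, k, max_iter=100):
    """Cluster records with a feature-weighted distance; returns (labels, centers)."""
    # Assumption: the first k records seed the cluster centers.
    centers = [list(r) for r in records[:k]]
    labels = None
    for _ in range(max_iter):
        # Assign every record to its nearest center under the weighted distance.
        new_labels = [
            min(range(k), key=lambda c: weighted_l2(r, centers[c], weights))
            for r in records
        ]
        if new_labels == labels:
            break  # assignments no longer change
        labels = new_labels
        # Assumption: a center is the per-feature mean of its members.
        for c in range(k):
            members = [r for r, lab in zip(records, labels) if lab == c]
            if members:  # keep the previous center if a cluster is empty
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centers


# Toy data: feature 0 separates {0, 1} from {2, 3}; feature 1 separates {0, 2} from {1, 3}.
records = [(1.0, 10.0), (1.2, -10.0), (5.0, 9.0), (5.1, -9.0)]

labels_weighted, _ = ofw_style_kmeans(records, weights=(1.0, 0.0), k=2)
labels_equal, _ = ofw_style_kmeans(records, weights=(1.0, 1.0), k=2)
print(labels_weighted)
print(labels_equal)
```

On this toy data the two weightings group the records differently, which illustrates how the feature weights, rather than the raw scale of a feature, decide the partition.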

4. Experiments

4.1. Dataset and Environment

The experiments are based on a mobile value-added business database. The sample data is customer information. The overall data set includes 54 descriptive user-related features and 10 million records. As experimental data, 1000 records are drawn at random from the 10 million. The clustering experiments run on a 2.93 GHz PC with the Windows 7 operating system. The experiment running interface is shown in Figure 3. The mobile business customers ontology tree is shown in Figure 4. Part of the multi-relation database diagram is shown in Figure 5.

Fig.3 Experiment Running Interface

Fig.4 Mobile Business Customers Ontology Tree

4.2. Experiment Results

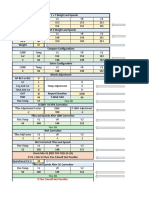

Experiment 1. In OFW-Clustering, the domain ontology feature tree is first built from the test data, as shown in Figure 4. In the next step, the feature weights are calculated using the tree. Finally, clustering with feature weights is run. Based on historical experience data, α = 0.4 and β = 0.6 are set. Part of the feature correlation and feature weight calculation results are shown in Table 1.

Table 1 Part of Feature Correlation and Feature Weight Results

| Feature | PhoneNum | IDCard | Sex | Name | Birthday | MarriageType | EMail | QQ | HouseID | Address | Height | Weight | Pulse | AverageCorr |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| School | 0.289256 | 0.289256 | 0.289256 | 0.289256 | 0.247934 | 0.247934 | 0.289256 | 0.289256 | 0.212766 | 0.212766 | 0.212766 | 0.212766 | 0.212766 | 0.260606 |
| Height | 0.263736 | 0.263736 | 0.263736 | 0.263736 | 0.263736 | 0.318681 | 0.263736 | 0.263736 | 0.318681 | 0.318681 | 0.47541 | 0.408451 | 0.408451 | 0.299112 |
| Sex | 0.408451 | 0.408451 | 0.47541 | 0.408451 | 0.338028 | 0.338028 | 0.408451 | 0.408451 | 0.263736 | 0.263736 | 0.263736 | 0.263736 | 0.263736 | 0.300094 |
| Mode | 0.263736 | 0.263736 | 0.263736 | 0.263736 | 0.263736 | 0.318681 | 0.263736 | 0.263736 | 0.318681 | 0.318681 | 0.318681 | 0.318681 | 0.318681 | 0.287189 |
| Birthday | 0.338028 | 0.338028 | 0.338028 | 0.338028 | 0.47541 | 0.338028 | 0.338028 | 0.338028 | 0.263736 | 0.263736 | 0.263736 | 0.263736 | 0.263736 | 0.278973 |
| MarriageType | 0.338028 | 0.338028 | 0.338028 | 0.338028 | 0.338028 | 0.47541 | 0.338028 | 0.338028 | 0.318681 | 0.318681 | 0.318681 | 0.318681 | 0.318681 | 0.294186 |
| Address | 0.263736 | 0.263736 | 0.263736 | 0.263736 | 0.263736 | 0.318681 | 0.263736 | 0.263736 | 0.408451 | 0.47541 | 0.318681 | 0.318681 | 0.318681 | 0.282905 |

After all correlations between one feature and the others have been calculated, the weight of that feature is obtained by averaging the correlations. Clustering with feature weights is then run on the 1000 test data records. The result of Experiment 1 is shown in Figure 6.

Experiment 2. The test data is clustered without feature pretreatment. All feature weights are set to the same value when calculating the Euclidean distance between data records during clustering. The clustering result is shown in Figure 7. In both figures, the horizontal axis shows the type of disease history and the vertical axis indicates the time of disease. The two colors represent the two clusters.

Fig.5 Part of Multi-relation Database Diagram

Fig.6 Experiment 1 Clustering Result

Fig.7 Experiment 2 Clustering Result

Fig.8 Feature Set Size and Weight Calculation Time

Experiment 3. Subsets of the data with different feature set sizes are selected in this experiment. The time needed to compute the feature weights in the ontology feature tree differs between them. The relationship between feature set size and calculation time is shown in Figure 8. The horizontal axis represents the size of the feature set, and the vertical axis indicates the calculation time.

4.3. Experiments Results Analysis

Comparing the results of Experiment 1 and Experiment 2, a clear difference in quality is visible between Figure 6 and Figure 7. Figure 6 shows two more concentrated clusters of better quality, while Figure 7 shows two scattered clusters of poor quality. Experiment 1, which clusters with feature weights, reflects the importance of the various features more accurately. Because the ontology-based feature weight calculation introduces prior knowledge and yields more accurate feature weights, the clustering result is more accurate. In Experiment 2, which clusters without feature pretreatment, all features have the same importance and the quality of the result is relatively low.

Experiment 3 relates the feature set size to the feature weight calculation time. According to the feature correlation formula, the feature weight calculation time is linear in the feature set size. With a feature set size of 54 and parameters α = 0.4, β = 0.6, Experiment 1 takes 1.364 seconds. With the parameters fixed at α = 0.4, β = 0.6 and the feature set size varied, the running times are shown in Figure 8, where the horizontal axis represents the feature set size and the vertical axis indicates the time spent on the feature weight calculation. Linear growth can be observed.

The experimental results show that introducing an ontology as prior knowledge of the field, and then calculating ontology feature weights to guide clustering, is more effective. It amounts to using expert knowledge to calculate the feature weights and extending clustering with domain knowledge, rather than relying on the dataset alone. The importance of each feature is tagged in the clustering with domain knowledge. Because the feature weights obtained with the ontology calculation formula are more accurate, the result of clustering with feature weights also improves.

5. Conclusion

The results directly reflect whether a clustering process is successful. The accuracy of the results is closely tied to the clustering features, so it is particularly important that the significance of each data feature is represented accurately. In this paper, the OFW-Clustering algorithm is proposed. The feature weights are calculated with prior knowledge in the domain ontology feature tree. This prior knowledge is based on the domain rather than on the dataset itself, so the different importance of the different features is captured more accurately, and the clustering result with feature weights is more accurate. Future work is to obtain different accurate clustering results according to the different purposes of users.

Acknowledgements

This work was financed by the post-doctor foundation of Jiangsu Province (0802023C) and the youth foundation of China University of Mining and Technology (2008A040).

References

[1] Xiao-jiang Kong, Xiao-jun Tong. Adaptive Algorithms for Weight of the Feature Weighted of FCM. 2009 Fifth International Conference on Image and Graphics, 2009: 501-505.
[2] Richard J. Hathaway, Yingkang Hu. Density-weighted fuzzy c-means clustering. IEEE Transactions on Fuzzy Systems, 2009, 17(1): 243-252.
[3] Chenglong Tang, Shigang Wang, Wei Xu. New fuzzy c-means clustering model based on the data weighted approach. Data & Knowledge Engineering, 2010, 69(9): 881-900.
[4] Chuang-Cheng Chiu, Chieh-Yuan Tsai. A Weighted Feature C-means Clustering Algorithm for Case Indexing and Retrieval in Case-Based Reasoning. 20th International Conference on Industrial, Engineering, and Other Applications of Applied Intelligent Systems, 2007: 541-551.
[5] Zhiqiang Bao, Bing Han, Shunjun Wu. A General Weighted Fuzzy Clustering Algorithm. Image Analysis and Recognition, 2006: 102-109.
[6] Bing Han, Xinbo Gao, Hongbing Ji. A Novel Feature Weighted Clustering Algorithm Based on Rough Sets for Shot Boundary Detection. Fuzzy Systems and Knowledge Discovery, 2006: 471-480.
[7] Eyal Krupka, Naftali Tishby. Incorporating Prior Knowledge on Features into Learning. Eleventh International Conference on Artificial Intelligence and Statistics, 2007.
[8] Huaiguo Fu, Ahmed M. Elmisery. A New Feature Weighted Fuzzy C-means Clustering Algorithm. IADIS European Conference on Data Mining 2009.
[9] Ridwan Al Iqbal. Empirical learning aided by weak domain knowledge in the form of feature importance. http://cogprints.org/6855/, 2010.
