Académique Documents

Professionnel Documents

Culture Documents

Parallel Skyline Method Using Two Dimensional Array

Transféré par

daskhagoTitre original

Copyright

Formats disponibles

Partager ce document

Partager ou intégrer le document

Avez-vous trouvé ce document utile ?

Ce contenu est-il inapproprié ?

Signaler ce documentDroits d'auteur :

Formats disponibles

Parallel Skyline Method Using Two Dimensional Array

Transféré par

daskhagoDroits d'auteur :

Formats disponibles

Memoirs of the Faculty of Engineering. Okayama University.VoI.24. No.2. pp.99-1I2.

March 1990

Parallel Skyline Method using Two Dimensional Array

Takeo Taniguchi* & Kohji Fujiwara**

(Received January 14 . 1990)

SYNOPSIS

This paper presents an effective solver for a large

sparse set of linear algebraic equations, which appears

at the application of the finite element and the finite

difference methods in engineering field. Proposed

method is a family of SKYLINE METHOD, and for faster

computation on the vector processors the original

skyline is modified with respect to following three

items; the use of inner products of matrix operations,

the removal of unnecessary numerical operations and the

introduction of two-dimensional array for storing the

data of coefficient matrix.

1. INTRODUCTION

In accordance with the development of supercomputer, the size of

problems to be treated becomes larger and larger, and better solvers

have been always sought by many mathematicians and engineers. Espe-

cially, the requirement became very strong for the solver of large

sparse set of linear algebraic equations which necessarily apppear at

solving 3-dimensional problems.

The conditions of "a good solver" are less memory size and fast

computation, and at present we find two selections, Le. PRECONDI-

TIONED CONJUGATE GRADIENT METHOD and SKYLINE METHOD. The former

belongs to the iterative one, and the latter the direct method.

* Department of Engineering Science

** Department of Civil Engineering

99

100

Takeo TANIGUCHI and Kohji FUJIWARA

PCG (Preconditioned Conjugate Gradient) method apparently requires

less memory-size comparing to the Skyline Method, but we are anxious

on CPU-time and also on the infeasible computation due to the precon-

ditioner used in the solver. On the contrary, the skyline method is

reliable on its computation, and it has been one of the most popular

solvers in the last decade.

Skyline method introduces one-dimensional array for storing data of

coefficient matrix of linear equations in order to remove unnecessary

zero entries which are not used for the elimination calculation. This

method is modified as Parallel Skyline Method for improving the ap-

plicability to supercomputers. The aim of this modified method is to

utilize in the matrix computations the property of the degree-of-

freedom (DOF) appeared in many engineering problems. That is, in order

to use the fast computation facility of the supercomputer in inner

products for matrices, the elimination computation is done by treating

submatrix whose size is set to be DOF as a unit.[1,2,3] But, the data

of the parallel skyline method is still stored in one-dimensional ar-

ray as the one for the original skyline method.

Another effective solver belonging to the skyline method is COL-

SOL.[4] This solver can remove unnecessary numerical operations during

the elimination process, and as a result it can save CPU-time.

The purpose of this investigation is to show the influence of fac-

tors ( the data storing method, the matrix operations, and the removal

of unnecessary numerical operations during the elimination process) to

CPU-time. Finally, we propose a kind of skyline methods which can in-

troduce all of these factors. The listing of new solver is attached in

Appendix A, which is valid for symmetric coefficient matrices. A

sample of the use of the proposed skyline method is also given in Ap-

pendix B.

2. PARALLEL SKYLINE METHOD

Let

Ax b ( 1 )

be a set of linear algebraic equations, where A is a n*n coefficient

matrix, x is the solution vector, and b is a given vector. Here, we

assume A is sparse, symmetric and possitive definite. Since the coef-

Parallel Skyline Method 101

ficient matrix A is symmetric, the matrix can be factorized into the

matrix product of the lower triangular (L), diagonal (0), and upper

triangular matrices (U) by using Modified Cholesky factorization.

Moreover, using the nature that U is equal to the transpose of the

lower triangular matrix, we obtain

( 2 )

Firstly we explain original skyline method. This method consists of

two processes; the forward elimination and the backward substitution.

From the characteriscics of the direct method the forward elimination

process mainly governs CPU-time of the modified Cholesky factorization

method, and, then, the decreasing of the execution time can be firstly

achieved when CPU-time of the forward elimination is saved. Then, our

discussion is concentrated in the treatment of the forward elimination

process.

This elimination process is expressed in eq.3, and the numerical

operation is proceeded downward from the uppermost nonzero entry to

the main diagonal columnwisely as shown in Fig.1-a.

1..

1J

1..

11

i- 1

a ii

j-l

(a .. - I l'k1'kdkk)/d ..

1J k=1 1 J JJ

( 3 )

From this computational ordering for off-diagonal entries the data

of the coefficient matrix are stored columwisely in one-dimensional

array. Since all data are stored in one dimensional array, this method

is suitable to the vector processor.

Equation 3 expressing the elimination process suggests that any

off-diagonal nonzero element can be columnwisely factorized, and,

therefore, each column can be factorized independently. That is, the

elimination process of the skyline solver can be treated in parallel.

Consideration on these characteristics of the factorization process

leads to two types of skyline methods as shown in Fig.1.[1,2,3J The

column or row indicated by arrows in the figure shows the elements to

be factorized, and the shaded zone indicates the entries which are

102

Taken TANIGUCHI and Kohji FUJIWARA

column for

factorization

(a) (b)

Fig.l Two types of Modified cholesky Factorization

used for the factorization.

In case of m-dimensional problems the degree-of-freedom of any node

is m, and the coefficient matrix can be divided into a gathering of

m*m submatrices. Then, their factorization can be proceeded by treat-

ing m*m submatrix as a unit and, m columns of each submatrix can be

treated in parall. These characteristics can be effectively introduced

into the procedure of the forward elimination. These methods are

generally called the parallel skyline method.

Eq.3 suggests that the forward elimination procedure can be im-

proved still more for saving CPU-time. For example, any factorized

lower triangular element, l(i,j), requires the multiplication and also

the subdivision by the same main diagonal entries. If these unneces-

sary operations can be removed from the elimination process by chang-

ing the ordering of the numerical operations, then the execution time

can be largely saved. A solver named COLSOL is the one which can

remove these unnecessary operations, and the method is, at present,

the fastest solver among the direct methods. [4]

In general, the skyline method uses one-dimensional array for stor-

ing the data of the coefficient matrix. But, it is obvious that the

factorization process in parallel computaion requires the searching of

data which can be treated in parallel. This indicates that CPU-time

for searching these data can be saved, if all data are stored in a

two-dimensional array of (m*mN) for the problem with N nodes and m

Parallel Skyline Method

103

degree-of-freedom.

As explained already, skyline-type solvers presently in use are the

parallel skyline method using one-dimensional array and COLSOL. But,

above considerations clarify that we can propose several new solvers

by introducing and combining items explained already. Successive sec-

tion is used for the proposal of these new solvers and their examina-

tion.

3. FAMILY OF PARALLEL SKYLINE METHODS AND THEIR EFFICIENCY

(7) 2PARSKYc

(5) 2PARSKYa

( 4) 1PARSKYb

(6) 2PARSKYb

The family of the skyline method can be classified using follow-

ing items:

a. The difference of the elimination processes (See Fig.1.)

b. The data storing method (One- or two-dimensional array)

c. Treatment of the diagonal entries (Refer to COLSOL.)

By considering these items following solvers can be proposed:

(1) SKY (Original skyline method)

(2) COLSOL

( 3) 1PARSKYa Parallel skyline method using 1-dim. array and

the elimination process of Fig.1-a

Parallel skyline method using 1-dim. array and

the elimination process of Fig.1-b

Parallel skyline method using 2-dim. array and

the elimination process of Fig.1-a

Parallel skyline method using 2-dim. array and

the elimination process of Fog.1-b

Parallel skyline method using 2-dim. array and

the technique of COLSOL

SKY and COLSOL are the original solvers presently in use, 1PARSKYa

and 1PARSKYb are developed by Miyoshi et al (see [1,2]), and the

residuals (2PARSKYa, 2PARSKYb, and 2PARSKYc) are newly developed in

this investigation which are based on the solvers proposed by Miyoshi

et al, include the techniques of COLSOL and use 2-dimensional array

for data storing.

The efficiency of these solvers are examined through the numerical

experiments for following test problems. The first one is the actual

3-dimensional structural problem as shown in Fig.2. A cube subjecting

distributed load on its upper surface and fixed at its bottom surface

is analyzed using the displacement-type finite element method. The

104

Takeo TANIGUCHI and Kohji FUJIWARA

z

x V = 0.2

Fig.2 Problem 1

y

cube is regularily divided by 8-point isoparametric elements.

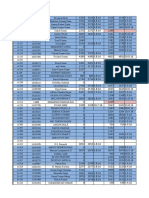

This cube is divided into several types of finite element models,

and they are solved using seven solvers mentioned above. The

execution-time required for these solvers is summarized in Table 1. In

the table N shows the number of equations, and HBW shows the half

bandwidth of coefficient matrices. These problems are 3-dimensional

ones, and the 2-dimensional array storing the data are (3,3*NODE),

where NODE shows the number of nodes which are set in the model. This

table shows CPU-time in seconds required for solving these problems.

The values in parentheses show the ratio of CPU-time between SKY and

other solvers.

The results suggest that COLSOL is the fastest solver among them,

and the 2PARSKYc comes next. That is, the removal of unnecessary

numerical operations through the factorization process is most effec-

tive for decreasing the execution-time.

The row of V-ratio shows the vectorization ratio of these solvers,

and we know that more than 90% of the procedure can be vectorized for

all of them. These values suggest that the family of the skyline

method is suitable for the vector machine.

N HBIJ SKY IPARSKYa IPARSKYb 2PARSKYa 2PARSKYb 2PARSKYc COLSOL

0.1497682 0.1028552 0.0822869 0.0862447 0.0765069 0.0762478 0.0757617

1152 (I) (1. 46) (1. 82) (1. 74) (1. 96) (1.96) (1. 98)

0.2270194 0.1567595 0.1235989 0.1293761 0.1152391 0.1146158 0.1135362

1620 33 (I) (1. 45) (1. 84) (1. 75) (1.97) (1.98) (2.00)

0.2819681 0.1960989 0.1573838 0.1646881 0.1469377 0.1460794 0.1440782

1944 (I) (1.44) (1. 79) (1.71) (1. 92) (1. 93) (1. 96)

0.6269479 0.3459422 0.2901599 0.3005155 0.2752899 0.2687449 0.2635265

1080 (I) (1.81) (2.16) (2.09) (2.28) (2.33) (2.38)

0.9746556 0.5346052 0.4485185 0.4645214 0.4274530 0.4151756 0.4073789

1620 96 (I) (1. 82) (2.17) (2.10) (2.28) (2.35) (2.39)

1.1906687 0.6320499 0.5435475 0.5629893 0.5158620 0.5031210 0.4936866

1944 (I) ( 1.88) (2.19) (2.11 ) (2.31) (2.37) (2.41)

V-RATIO(%) 98.90 95.70 98.31 96.32 94.84 98.84 98.59

Table 1 Results of Problem 1

;p

;;

q:

[

-o

CJ1

106

N

Takeo TANIGUCHI and Kohji FlJJIWARA

HBW

Fig.3 Problem 2

The second problem is an artificial one whose coefficient matrix

shows a. skyline type of nonzero zone as shown in Fig.3. This problem

is used for the examination of the influence of the use of two-

dimensional array to the execution-time, and by considering OOF of

submatrices the number of rows of the two-dimensional array is set 3,

5 and 7.

All data of the computations are summarized in Table 2 which show

only the ratio of the execution time between SKY and other solvers.

The results show that the fastest solver is 2PARSKYc, and that it

becomes fastest when OOF is set to be 5. The reason can be explained

as following: In accordance with the increase of m the computation of

the inner product of matrices becomes effective, but on the contrary,

since total number of variables is fixed, the vector length becomes

short and its computation becomes relatively long. As a result, CPU-

time becomes shortest, when OOF is equal to 5.

Moreover, we can recognize that solvers of the skyline method be-

come effective for problems with bigger bandwidth. Its reason is that

the vector length becomes longer in accordance with the increase of

HBW.

All solvers can be vectorized more than 90%, and it suggests that

the family of the skyline method is very suitable for the vector

processors.

2PARSKYa 2PARSKYb 2PARSKYc

N HBIJ SKY IPARSKYa IPARSKYb COLSOL

DOF=3 OOF=5 DOF=7 OOF=3 OOF=5 OOF=7 OOF=3 OOF=5 OOF=7

105 1 2.11 2.25 2.32 2.39 2.36 2.36 2.49 2.45 2.45 2.59 2.46 2.49

1050

210 1 2.85 3.04 3.12 3.21 2.92 3.15 3.32 3.02 3.34 3.49 3.09 3.28

105 1 2.12 2.26 2.32 2.39 2.36 2.37 2.50 2.46 2.45 2.60 2.47 2.50

1470

210 1 2.80 2.98 3.07 3.15 2.87 3.09 3.27 2.96 3.28 3.43 3.04 3.22

105 1 2.12 2.25 2.32 2.40 2.36 2.37 2.50 2.46 2.46 2.60 2.47 2.50

1995

210 1 2.80 2.99 3.07 3.16 2.87 3.10 3.28 2.97 3.29 3.44 3.04 3.22

.V-RATIO(%) 98.90 95.70 98.31 96.32 94.26 96.18 94.84 97.10 96.15 98.84 98.57 98.18 98.59

Table 2 Results of Problem 2

;p

<l

;;:

a

:::l

108

4. CONCLUDING REMARKS

Takeo TANIGUCHI and Kohji FUJIWARA

Several variations of the skyline method are newly proposed in this

paper, and their efficiency was investigated through a number of test

problems. The numerical experiments can lead to following conclusions:

(1) The skyline method is originally suitable for the vector proces-

sor, and its family also fits to the machine.

(2) In order to fasten the computation of skyline methods the intro-

duction of the inner products for matrices, 2-dimensional array for

data storing, and the removal of unnecessary numerical operations

through the factorization are necessary.

(3) In accordance with the increase of the number of equations and the

half bandwidth, the efficiency of the proposed methods become higher,

and we can fasten more than three times of the original skyline

method.

(4) Skyline methods newly proposed in this paper are expected to be

much faster when they are used on parallel computers.

All the numerical experiments in this paper are done using the su-

percomputer, SX-1E, of Data Processing Center of Okayama University.

REFERENCES

[1] Toshiro Miyoshi & Yuichiro Yoshida, "Fini te element analysis of

surface cracks by the supercomputer", Proc. of JSME, Vol. 53 (1989),

pp.255-260

[2] Toshiro Miyoshi, "The bench mark test for the three dimensional

finite element codes for a supercomputer", Proc. of Symp. on Computa-

tional Methods in Structural Engineering and Related Fields, Vol.13,

(1989), pp.129-132

[3] Toshiro Miyoshi & Naoki Takano,"General purpose high speed solver

for supercomputers", Proc. of Symp. on Computational Methods in Struc-

tural Engineering and Related Fields, Vol.13, (1989), pp.145-148

[4) K.J. Bathe & E.L. Wilson, 'Numerical Methods in Finite Element

Analysis', Prentice-Hall (1976), Chapter 7

APPENDIX A

Parallel Skyline Method

PARALLEL SKYLINE METHOD

109

C

C******************************************************************

C* Input *

C* *

C* A(3,KKl) Coefficient Matrix for Parallel Skyline *

C* IADR(NNl) Addresses of Diagonal Element Blocks *

C* B(N) Right - Hand - Side Vector *

C* *

C* N Number of Equations *

C* KKI Total Number of Elements below Block Skyline *

C* *

C* Output *

C* *

C* X(N): Vector of Solutions *

C* *

C******************************************************************

SUBROUTINE PAR5KY(A,N,IADR,B,X,KK1,IHBW)

IMPLICIT REAL*8(A-H,0-Z)

DIMENSION A(3,KK1),IADR(N+l),D(N),X(N),B(N)

IHBW3=IHBW/3

N3=N/3

C

DO 1000 I=I,N3

IDT=IADR(I+l)-IADR(I)-1

IDT=IDT*3

JI=3*IADR(I)

ID=3*I-2

C

52=0.DO

53=0.DO

56=0.DO

Bl=O.DO

B2=0.DO

B3=0.DO

K=ID

DO 10 L= I , I DT

K=K-l

Cl=A(I,Jl+L)/D(K)

C2=A(2,Jl+L)/D(K)

C3=A(3,Jl+L)/D(K)

S2=S2+Cl*A(2,Jl+L)

S3=53+Cl*A(3,Jl+L)

56=S6+C2*A(3,Jl+L)

Bl=Bl+Cl*A(I,Jl+L)

B2=B2+C2*A(2,Jl+L)

B3=B3+C3*A(3,Jl+L)

A(I,Jl+L)=CI

A(2,Jl+L)=C2

10 A(3,Jl+L)=C3

CC

D(ID)=A<l,Jl)-Bl

A(2,Jl)=A(2,Jl)-S2

A(3,Jl)=A(3,Jl)-S3

C=A(2,Jl)/D(ID)

B2=B2+C*A(2,Jl)

56=56+C*A(3,JI)

A(2,J1)=C

110

CC

C

C

C

Taken TANIGUCHI and Knhji FUjIWARA

0(IO+l)=A(2,Jl-l)-B2

A(3,Jl-l)=A(3,Jl-l)-S6

Cl=A(3,Jl)/0(IO)

C2=A(3,Jl-l)/0(IO+l)

0(IO+2)=A(3,Jl-2)-B3-Cl*A(3,Jl)-C2*A(3,Jl-l)

A(3,Jl>=Cl

A(3,J1-1)=C2

M=MINO(N3-I+1,IHBW3)

00 1100 J=2,M

K1=I+J-1

IJ=IAOR(K1)+J

ISTA=I-IAOR(Kl+1)+IJ

IF(I.LT.ISTA) GO TO 1100

IH=I-ISTA

IH=3*IH

IH=MINO<IOT,IH)

J2=3*IJ-3

Sl=O.OO

S2=0.00

S3=0.00

S4=0.00

S5=0.00

S6=0.00

S7=0.00

S8=0.00

S9=0.00

00 20 K=l,IH

Sl=Sl+A(1,J1+K)*A(1,J2+K)

S2=S2+A(1,J1+K)*A(2,J2+K)

S3=S3+A(1,J1+K)*A(3,J2+K)

S4=S4+A(2,Jl+K)*A(1,J2+K)

S5=S5+A(2,Jl+K)*A(2,J2+K)

S6=S6+A(2,J1+K)*A(3,J2+K)

S7=S7+A(3,Jl+K)*A(1,J2+K)

S8=S8+A(3,J1+K)*A(2,J2+K)

20 S9=S9+A(3,J1+K)*A(3,J2+K)

A(1,J2)=A(l,J2)-Sl

A(2,J2)=A(2,J2)-S2

A(3,J2)=A(3,J2)-S3

C

AA2=A(2,J1)

A(1,J2-1)=A(1,J2-1)-S4-AA2*A(l,J2)

A(2,J2-1)=A(2,J2-1)-S5-AA2*A(2,J2)

A(3,J2-1)=A(3,J2-1)-S6-AA2*A(3,J2)

AA3=A(3,J1-1)

AB3=A(3,Jl>

A(1,J2-2)=A(1,J2-2)-S7-AA3*A(1,J2-1)

& -AB3*A(l,J2)

A(2,J2-2)=A(2,J2-2)-S8-AA3*A(2,J2-1)

& -AB3*A(2,J2)

A(3,J2-2)=A(3,J2-2)-S9-AA3*A(3,J2-1)

& -AB3*A(3,J2)

1100 CONTINUE

1000 CONTINUE

C

Parallel Skylirw Method

C

DO 1200 1=I,N3

IH=IADR(I+l)-IADR(I)-1

IH=3*IH

Jl=3*IADR(I)

ID=3*(I-l)+1

DO 80 K=I, I H

B(ID)=B(ID)-A(I,Jl+K)*B(ID-K)

B(ID+l)=B(ID+l)-A(2,Jl+K)*B(ID-K)

80 B(ID+2)=B(ID+2)-A(3,Jl+K)*B(ID-K)

B(ID+l)=B(ID+l)-A(2,Jl)*B(ID)

B(ID+2)=B(ID+2)-A(3,Jl)*B(ID)-A(3,Jl-l)*B(ID+l)

1200 CONTINUE

C

DO 1250 I =1 , N

B(I)=B(I)/D(I)

1250 CONTINUE

C

DO 1300 I=N3,1,-1

IH=IADR(I+l)-IADR(I)-1

IH=3*IH

Jl=3*IADR(I)

ID=3*<I-l)+1

X(ID+2)=B(ID+2)+X(ID+2)

X(ID+l)=B(ID+l)-A(3,Jl-l)*X(ID+2)+X(ID+l)

X(ID)=B(ID)-A(2,Jl)*X(ID+l)-A(3,Jl)*X(ID+2)+X(ID)

DO 90 K=I,IH

11=ID-K

90 X(ll)=X(ll)-A(I,Jl+K)*X(ID)-A(2,Jl+K)*X(ID+l)

8 -A(3,Jl+K)*X(ID+2)

1300 CONTINUE

C

RETURN

END

III

112 Taken TANIGUCHI and Knhji FUJIWARA

APPENDIX B AN EXAMPLE OF INPUT AND OUTPUT DATA FOR "PARSKY"

Equation

-._._-------------*, ---------------.-

10 3 2 1 3 1 20

11 0 2 0 0 16

<D 10 a 1 3 16

---------------- .. --------_.-------

9 0 1 1 0 3 14

8 2 0 1 0 11

@

9 0 0 0

.

x = 12

.------------.---- -----._---.----

10 0 1 0 0 0 15

S y m 12 1 1 0 1 20

Q)

10 0 1 0 20

-------------- ..

9 0 1 11

10 0 11

8 10

<INPUT DATA>

N= 12

<D

@ Q)

n

[0 010

0 o 9 0 o 10 0 0 1 0 2 1 0 o 9 0 1

A= 0 11 3 0 8 0 o 12 0 0 1 0 1 0 3 o 10 0 1 0

10 0 2 9 2 1 10 1 1 0 0 3 3 0 1 8 0 1 0 1

IADR = ( 2 3 6 8

)

B=( 20 16 16 14 11 12 15 20 20 11 11 10 )

<OUTPUT DATA>

x =( 1 )

Vous aimerez peut-être aussi

- Performance Analysis of Partition Algorithms For Parallel Solution of Nonlinear Systems of EquationsDocument4 pagesPerformance Analysis of Partition Algorithms For Parallel Solution of Nonlinear Systems of EquationsBlueOneGaussPas encore d'évaluation

- Determination of Modal Residues and Residual Flexibility For Time-Domain System RealizationDocument31 pagesDetermination of Modal Residues and Residual Flexibility For Time-Domain System RealizationSamagassi SouleymanePas encore d'évaluation

- Learning Reduced-Order Models of Quadratic Dynamical Systems From Input-Output DataDocument6 pagesLearning Reduced-Order Models of Quadratic Dynamical Systems From Input-Output Datasho bhaPas encore d'évaluation

- A Sparse Matrix Approach To Reverse Mode AutomaticDocument10 pagesA Sparse Matrix Approach To Reverse Mode Automaticgorot1Pas encore d'évaluation

- Analisis Matricial de Segundo OrdenDocument21 pagesAnalisis Matricial de Segundo OrdenZulma WaraPas encore d'évaluation

- Ij N×NDocument5 pagesIj N×NLaila Azwani PanjaitanPas encore d'évaluation

- Efficient Parallel Implementation of The Fox AlgorithmDocument8 pagesEfficient Parallel Implementation of The Fox Algorithmmariame8Pas encore d'évaluation

- Dynamic Analysis of A Car Chassis Frame Using The Finite Element MethodDocument10 pagesDynamic Analysis of A Car Chassis Frame Using The Finite Element MethodNir ShoPas encore d'évaluation

- DSP Implementation of Cholesky DecompositionDocument4 pagesDSP Implementation of Cholesky DecompositionNayim MohammadPas encore d'évaluation

- DGFDFDDocument8 pagesDGFDFDjs9999Pas encore d'évaluation

- DSP Implementation of Cholesky DecompositionDocument4 pagesDSP Implementation of Cholesky DecompositionBaruch CyzsPas encore d'évaluation

- Computers and Mathematics With Applications: D. Mierke, C.F. Janßen, T. RungDocument22 pagesComputers and Mathematics With Applications: D. Mierke, C.F. Janßen, T. RungAT8iPas encore d'évaluation

- LDL FactorizacionDocument7 pagesLDL FactorizacionMiguel PerezPas encore d'évaluation

- Computer Solution: Digital Electromagnetic Transients Multiphase NetworksDocument12 pagesComputer Solution: Digital Electromagnetic Transients Multiphase NetworksObed GarcíaPas encore d'évaluation

- Domme 1967Document12 pagesDomme 1967Carlos ChávezPas encore d'évaluation

- Adaptive Refinement in Vibrational Analysis and Isogemetric AnalysisDocument22 pagesAdaptive Refinement in Vibrational Analysis and Isogemetric AnalysisAbhishek KumarPas encore d'évaluation

- Sparse Implementation of Revised Simplex Algorithms On Parallel ComputersDocument8 pagesSparse Implementation of Revised Simplex Algorithms On Parallel ComputersKaram SalehPas encore d'évaluation

- Computer Solution: Digital Electromagnetic Transients Multiphase NetworksDocument12 pagesComputer Solution: Digital Electromagnetic Transients Multiphase NetworksMuhammed Cihat AltınPas encore d'évaluation

- Latex SubmissionDocument6 pagesLatex SubmissionguluPas encore d'évaluation

- Safe and Effective Determinant Evaluation: February 25, 1994Document16 pagesSafe and Effective Determinant Evaluation: February 25, 1994David ImmanuelPas encore d'évaluation

- 3D Morphing Without User Interaction: Submitted To Eurographics Symposium On Geometry Processing (2004)Document10 pages3D Morphing Without User Interaction: Submitted To Eurographics Symposium On Geometry Processing (2004)tsigelakistisPas encore d'évaluation

- Investigation of Quantum Algorithms For Direct Numerical Simulation of The Navier-Stokes EquationsDocument17 pagesInvestigation of Quantum Algorithms For Direct Numerical Simulation of The Navier-Stokes EquationsマルワPas encore d'évaluation

- Mathematical and Computational Modeling: With Applications in Natural and Social Sciences, Engineering, and the ArtsD'EverandMathematical and Computational Modeling: With Applications in Natural and Social Sciences, Engineering, and the ArtsRoderick MelnikPas encore d'évaluation

- The Adaptive Cross-Approximation Technique For The 3-D Boundary-Element Method PDFDocument4 pagesThe Adaptive Cross-Approximation Technique For The 3-D Boundary-Element Method PDFJonathan MillerPas encore d'évaluation

- Seismic 4 425: T (C TTZ), D, NRL, S, TT, W)Document5 pagesSeismic 4 425: T (C TTZ), D, NRL, S, TT, W)SyedFaridAliPas encore d'évaluation

- A New Parallel Version of The DDSCAT Code For Electromagnetic Scattering From Big TargetsDocument5 pagesA New Parallel Version of The DDSCAT Code For Electromagnetic Scattering From Big TargetsMartín FigueroaPas encore d'évaluation

- Breaking Through Memory Limitation in GPU Parallel Processing Using Strassen AlgorithmDocument5 pagesBreaking Through Memory Limitation in GPU Parallel Processing Using Strassen AlgorithmDonkeyTankPas encore d'évaluation

- 2m PDFDocument5 pages2m PDFSAKTHI SANTHOSH BPas encore d'évaluation

- Icfd10 Eg 30i1Document8 pagesIcfd10 Eg 30i1NextScribePas encore d'évaluation

- Reddy Chap3-4Document68 pagesReddy Chap3-4Juan CarlosPas encore d'évaluation

- Profile Front MinimizationDocument12 pagesProfile Front MinimizationvttrlcPas encore d'évaluation

- Convex Hull 3dDocument12 pagesConvex Hull 3dsqc150Pas encore d'évaluation

- Numerical Accuracy in The Solution of The ShallowDocument8 pagesNumerical Accuracy in The Solution of The ShallowFernando Robles AguilarPas encore d'évaluation

- Fast Sparse Matrix MultiplicationDocument11 pagesFast Sparse Matrix MultiplicationRocky BarusPas encore d'évaluation

- Recursive and Residual Algorithms For The Efficient Numerical Integration of Multi-Body SystemsDocument31 pagesRecursive and Residual Algorithms For The Efficient Numerical Integration of Multi-Body SystemsCleves AxiomaPas encore d'évaluation

- A Practical Performance Comparison of Parallel Matrix Multiplication Algorithms On Networks of WorkstationsDocument2 pagesA Practical Performance Comparison of Parallel Matrix Multiplication Algorithms On Networks of WorkstationsPhulturoo KhanPas encore d'évaluation

- Content PDFDocument14 pagesContent PDFrobbyyuPas encore d'évaluation

- Intelligent Simulation of Multibody Dynamics: Space-State and Descriptor Methods in Sequential and Parallel Computing EnvironmentsDocument19 pagesIntelligent Simulation of Multibody Dynamics: Space-State and Descriptor Methods in Sequential and Parallel Computing EnvironmentsChernet TugePas encore d'évaluation

- Discontinuous Finite Difference and Spectral Methods For Self Force ApplicationsDocument29 pagesDiscontinuous Finite Difference and Spectral Methods For Self Force ApplicationsbabistokasPas encore d'évaluation

- Gorong - GorongDocument9 pagesGorong - GorongMuh IzdiharPas encore d'évaluation

- Modern Model Order Reduction For Industrial ApplicationsDocument6 pagesModern Model Order Reduction For Industrial ApplicationsAnonymous lEBdswQXmxPas encore d'évaluation

- Accelerating Marching Cubes With Graphics HardwareDocument6 pagesAccelerating Marching Cubes With Graphics HardwareGustavo Urquizo RodríguezPas encore d'évaluation

- A Mixed Precision Semi-Lagrangian Algorithm and Its Performance On AcceleratorsDocument7 pagesA Mixed Precision Semi-Lagrangian Algorithm and Its Performance On Acceleratorsrtfss13666Pas encore d'évaluation

- A Fast Modal Technique For The Computation of The Campbell Diagram of Multi-Degree-Of-Freedom RotorsDocument18 pagesA Fast Modal Technique For The Computation of The Campbell Diagram of Multi-Degree-Of-Freedom RotorsRafael SoaresPas encore d'évaluation

- Icase: IIII11l11ll IllDocument26 pagesIcase: IIII11l11ll IllSk SharmaPas encore d'évaluation

- Franklin Note 2Document21 pagesFranklin Note 2WyliePas encore d'évaluation

- Nelson L. Passos Robert P. Light Virgil Andronache Edwin H.-M. Sha Midwestern State UniversityDocument6 pagesNelson L. Passos Robert P. Light Virgil Andronache Edwin H.-M. Sha Midwestern State UniversityAkhil KsPas encore d'évaluation

- A Wavelet-Based Anytime Algorithm For K-Means Clustering of Time SeriesDocument12 pagesA Wavelet-Based Anytime Algorithm For K-Means Clustering of Time SeriesYaseen HussainPas encore d'évaluation

- A 2589 Line Topology Optimization Code Written For The Graphics CardDocument20 pagesA 2589 Line Topology Optimization Code Written For The Graphics Cardgorot1Pas encore d'évaluation

- Solution To Industry Benchmark Problems With The Lattice-Boltzmann Code XFlowDocument22 pagesSolution To Industry Benchmark Problems With The Lattice-Boltzmann Code XFlowCFDiran.irPas encore d'évaluation

- 6 59 1 PBDocument8 pages6 59 1 PBSaifizi SaidonPas encore d'évaluation

- Orlando Newmark Bogday 05Document15 pagesOrlando Newmark Bogday 05Alex AraújoPas encore d'évaluation

- Final Project Report Transient Stability of Power System (Programming Massively Parallel Graphics Multiprocessors Using CUDA)Document5 pagesFinal Project Report Transient Stability of Power System (Programming Massively Parallel Graphics Multiprocessors Using CUDA)shotorbariPas encore d'évaluation

- Mcgill University School of Computer Science Acaps LaboratoryDocument34 pagesMcgill University School of Computer Science Acaps Laboratorywoxawi6937Pas encore d'évaluation

- Large Scale Optimization Strategies For Zone Configuration of Simulated Moving BedsDocument18 pagesLarge Scale Optimization Strategies For Zone Configuration of Simulated Moving BedsDoctorObermanPas encore d'évaluation

- Efficient Simplificaiton of Point Sampled SurfacesDocument8 pagesEfficient Simplificaiton of Point Sampled SurfacesVivian LiPas encore d'évaluation

- Finite-Element Method For Elastic Wave PropagationDocument10 pagesFinite-Element Method For Elastic Wave Propagationbahar1234Pas encore d'évaluation

- Journal of Statistical Software: Warping Functional Data in R and C Via A Bayesian Multiresolution ApproachDocument22 pagesJournal of Statistical Software: Warping Functional Data in R and C Via A Bayesian Multiresolution Approacha a aPas encore d'évaluation

- ECSE 420 - Parallel Cholesky Algorithm - ReportDocument17 pagesECSE 420 - Parallel Cholesky Algorithm - ReportpiohmPas encore d'évaluation

- Evolutionary Algorithms for Mobile Ad Hoc NetworksD'EverandEvolutionary Algorithms for Mobile Ad Hoc NetworksPas encore d'évaluation

- Notice: Hostel in From WillDocument1 pageNotice: Hostel in From WilldaskhagoPas encore d'évaluation

- Nodal Position and Element Connectivity of 200 Element Dielectric Placed Between Two Electrodes Whose Potential Difference Is 1VDocument1 pageNodal Position and Element Connectivity of 200 Element Dielectric Placed Between Two Electrodes Whose Potential Difference Is 1VdaskhagoPas encore d'évaluation

- Pranveer Singh Group of Institutions, Nh-2, Bhauti, Kanpur Bus Route No.1 ONLY Dated .11.11.2018Document2 pagesPranveer Singh Group of Institutions, Nh-2, Bhauti, Kanpur Bus Route No.1 ONLY Dated .11.11.2018daskhagoPas encore d'évaluation

- Cug August PaymentDocument1 pageCug August PaymentdaskhagoPas encore d'évaluation

- Weibull Plot For Unstretched ConditionDocument3 pagesWeibull Plot For Unstretched ConditiondaskhagoPas encore d'évaluation

- Moonish Crazing PDFDocument1 pageMoonish Crazing PDFdaskhagoPas encore d'évaluation

- DcascascDocument16 pagesDcascascdaskhagoPas encore d'évaluation

- DVSVVDocument4 pagesDVSVVdaskhagoPas encore d'évaluation

- New Work 6Document4 pagesNew Work 6daskhagoPas encore d'évaluation

- SgfbsfbsDocument6 pagesSgfbsfbsdaskhagoPas encore d'évaluation

- SDVSZZSFBDocument10 pagesSDVSZZSFBdaskhagoPas encore d'évaluation

- ErverjvberkDocument379 pagesErverjvberkdaskhagoPas encore d'évaluation

- ErverjvberkDocument379 pagesErverjvberkdaskhagoPas encore d'évaluation

- ErverjvberkDocument379 pagesErverjvberkdaskhagoPas encore d'évaluation

- ErverjvberkDocument379 pagesErverjvberkdaskhagoPas encore d'évaluation

- ErverjvberkDocument379 pagesErverjvberkdaskhagoPas encore d'évaluation

- ErverjvberkDocument379 pagesErverjvberkdaskhagoPas encore d'évaluation

- ErverjvberkDocument379 pagesErverjvberkdaskhagoPas encore d'évaluation

- ErverjvberkDocument379 pagesErverjvberkdaskhagoPas encore d'évaluation

- Application Form Regular Faculty150816111245 PDFDocument7 pagesApplication Form Regular Faculty150816111245 PDFdaskhagoPas encore d'évaluation

- New Work 4Document3 pagesNew Work 4daskhagoPas encore d'évaluation

- Schematic Diagram Electrode Assembly PDFDocument1 pageSchematic Diagram Electrode Assembly PDFdaskhagoPas encore d'évaluation

- Mess DuesDocument1 pageMess DuesdaskhagoPas encore d'évaluation

- September Mess Bill-2016Document365 pagesSeptember Mess Bill-2016daskhagoPas encore d'évaluation

- Marc Mess PaymentDocument1 pageMarc Mess PaymentdaskhagoPas encore d'évaluation

- Freely-Jointed Chain: For Up To Date Version of This Document, See Z. SuoDocument6 pagesFreely-Jointed Chain: For Up To Date Version of This Document, See Z. SuokhayatPas encore d'évaluation

- Full Length Paper INCAM2015 1Document3 pagesFull Length Paper INCAM2015 1daskhagoPas encore d'évaluation

- Paymnet and Receipt Onward June-16..Document31 pagesPaymnet and Receipt Onward June-16..daskhagoPas encore d'évaluation

- Mess Menu 1 December 2016Document2 pagesMess Menu 1 December 2016daskhagoPas encore d'évaluation

- Me 201 Engineering Mechanics Statics3121Document19 pagesMe 201 Engineering Mechanics Statics3121tugskulPas encore d'évaluation

- VCE Maths Methods Units 1 & 2Document3 pagesVCE Maths Methods Units 1 & 2Asmaa MannasahebPas encore d'évaluation

- Mathematics 9 2ndDocument6 pagesMathematics 9 2ndAngelo Morcilla TiquioPas encore d'évaluation

- ME458 FEM - L2 - Intro To Stiffness MethodDocument90 pagesME458 FEM - L2 - Intro To Stiffness MethodHamza KhizarPas encore d'évaluation

- Emsyll 2Document4 pagesEmsyll 2Pareekshith KattiPas encore d'évaluation

- Q1 - Math 10 ReviewersDocument2 pagesQ1 - Math 10 ReviewersJerry Bhoy ReniedoPas encore d'évaluation

- Weak & Strong FormDocument23 pagesWeak & Strong FormZainuri NorliyatiPas encore d'évaluation

- Example 1: A Natural Number Is Also A Whole Number.: Classification of Real Numbers ExamplesDocument5 pagesExample 1: A Natural Number Is Also A Whole Number.: Classification of Real Numbers Examplescykool_2kPas encore d'évaluation

- Clifford AlgebraDocument22 pagesClifford AlgebraChandra Prakash100% (1)

- Maths Quiz Questions Without Ans (Class 7)Document3 pagesMaths Quiz Questions Without Ans (Class 7)Student Service CellPas encore d'évaluation

- CylinderDocument5 pagesCylinderMohammadFaizanPas encore d'évaluation

- Terminating and Non-Terminating DecimalsDocument2 pagesTerminating and Non-Terminating Decimalshasan bishPas encore d'évaluation

- Taylor Series PDFDocument16 pagesTaylor Series PDFIhsan ALrashidPas encore d'évaluation

- Adding and Subtracting PolynomialsDocument8 pagesAdding and Subtracting PolynomialsHyung BaePas encore d'évaluation

- EEP 311L Laboratory Activity 4Document1 pageEEP 311L Laboratory Activity 4Lemonade TVPas encore d'évaluation

- Sem 2 Maths Chap 2Document19 pagesSem 2 Maths Chap 2Mohammed Abdul Räfåè100% (2)

- Functions and Their RepresentationsDocument7 pagesFunctions and Their RepresentationsLuis Ignacio Lomeli GalazPas encore d'évaluation

- Vectors Tensors Complete PDFDocument198 pagesVectors Tensors Complete PDFsujayan2005Pas encore d'évaluation

- Olympiad Stuff You Should Know For International Olympiad :DDocument10 pagesOlympiad Stuff You Should Know For International Olympiad :DInkePas encore d'évaluation

- Abacus Math Worksheets FreeDocument10 pagesAbacus Math Worksheets FreeBrenda YeePas encore d'évaluation

- Semi Direct ProductDocument5 pagesSemi Direct ProductalbanianretardPas encore d'évaluation

- Ch1 - Laplace Transform L.T. - 26 Pgs PDFDocument26 pagesCh1 - Laplace Transform L.T. - 26 Pgs PDFMohamad Duhoki100% (1)

- Mac1114 m5 ws2Document3 pagesMac1114 m5 ws2api-276143540Pas encore d'évaluation

- Chap 1Document17 pagesChap 1Batma ThurgaPas encore d'évaluation

- Matlab 1Document6 pagesMatlab 1Wafaa AimanPas encore d'évaluation

- Matlab SolverDocument3 pagesMatlab SolverSudhanwa KulkarniPas encore d'évaluation

- Name: - Date: - Grade Level and Section: - ScoreDocument2 pagesName: - Date: - Grade Level and Section: - ScoreJustine Antiojo CruzPas encore d'évaluation

- Sample Quiz LMDocument5 pagesSample Quiz LMA LPas encore d'évaluation

- Generalized Inverses and Solutions of Linear Systems: John Z. HearonDocument6 pagesGeneralized Inverses and Solutions of Linear Systems: John Z. HearonvahidPas encore d'évaluation

- 121 EEE110 LabSheet01Document11 pages121 EEE110 LabSheet01bkmmizanPas encore d'évaluation