American Journal of Engineering Research (AJER) 2014


American Journal of Engineering Research (AJER)

e-ISSN : 2320-0847 p-ISSN : 2320-0936

Volume-03, Issue-03, pp-219-224

www.ajer.org

Research Paper Open Access

Face Feature Extraction for Recognition Using Radon Transform

Justice Kwame Appati¹, Gabriel Obed Fosu¹, Gideon Kwadzo Gogovi¹

¹Department of Mathematics, Kwame Nkrumah University of Science and Technology, Kumasi, Ghana

Abstract: Face recognition has long been a challenging task, especially when recognizing faces with different poses. This is perhaps due to the use of inappropriate descriptors during the feature extraction stage. In this paper, the Radon Transform is examined as a face signature descriptor on a standard database. Global features are obtained by constructing Gray-Level Co-occurrence Matrices (GLCMs). Correlation, Energy, Homogeneity and Contrast are computed from each image to form the feature vector for recognition. We show that the transformed face signatures are robust and invariant to pose. With the statistical features extracted, the face training classes are separated using a Support Vector Machine (SVM), while the recognition rate for test face images is computed based on the L1 norm.

Keywords: Radon Transform; SVM; Correlation; Face Recognition

I. INTRODUCTION

In recent years, extensive research has been conducted on face recognition systems. However, despite the excellent work achieved, some problems remain only partly solved, especially for databases in which individuals present their faces in different poses.

In 1997, Etemad and Chellappa [1], in their Linear Discriminant Analysis (LDA) algorithm, made an objective evaluation of the significance of the features extracted for identifying a unique face from a database. LDA of faces provides a small set of features that carries the most relevant information for classification purposes. These features were obtained through eigenvector analysis of scatter matrices, with the objective of maximizing between-class variation and minimizing within-class variation. The algorithm uses a projection-based feature extraction procedure and an automatic classification scheme for face recognition. A slightly different method, the evolutionary pursuit method for face recognition, was described by Liu and Wechsler [2]. Their method processes images in a lower-dimensional whitened PCA subspace. Directed but random rotations of the basis vectors in this subspace are searched by a Genetic Algorithm, where evolution is driven by a fitness function defined in terms of performance accuracy and class separation. Since then, many face representation approaches have been introduced, including subspace-based holistic features and local appearance features [3]. Typical holistic features include the well-known principal component analysis (PCA) [4-5] and independent component analysis (ICA) [6].

In 2003, Zhao et al. [7] reported considerable progress on database images with small variations in facial pose and lighting. In their study, Principal Component Analysis (PCA) was used as the face descriptor. Following their work, Moon and Phillips [8] implemented a generic modular PCA algorithm in which numerous design decisions were stated explicitly. They experimented with different approaches to illumination normalization in order to study its effect on performance, and also varied the number of eigenvectors used to represent a face image and the similarity measure used in face classification.

This study investigates the problem of feature extraction in face recognition, with the aim of achieving high recognition performance. A face recognition method is presented that uses the Radon Transform, which provides directional information in a face image, as the feature extraction descriptor and an SVM as the classifier. The method was tested on the Faces94 database.


II. PRELIMINARY DEFINITIONS

This section defines the features extracted from a face image for performance analysis and used by the proposed Radon Transform based face recognition (RTFR) model with SVM. In Eqs. (1)-(4), P(i, j) denotes the (i, j) entry of the N × N GLCM.

2.1 Energy

Energy is the sum of the squared elements of the GLCM. Its range is [0, 1], and it equals 1 for a constant image [9].

$$\mathrm{Energy} = \sum_{i=1}^{N} \sum_{j=1}^{N} \big(P(i,j)\big)^{2} \qquad (1)$$

2.2 Contrast

Contrast measures the intensity contrast between a pixel and its neighbour over the whole face image. Its range is [0, (size(GLCM, 1) - 1)^2], where size(GLCM, 1) is the number of rows of the GLCM. Contrast is zero for a constant image [9].

$$\mathrm{Contrast} = \sum_{i=1}^{N} \sum_{j=1}^{N} |i - j|^{2} \, P(i,j) \qquad (2)$$

2.3 Homogeneity

Homogeneity measures how close the distribution of elements in the GLCM is to the GLCM diagonal. Its range is [0, 1], and it equals 1 for a diagonal GLCM [9].

$$\mathrm{Homogeneity} = \sum_{i=1}^{N} \sum_{j=1}^{N} \frac{P(i,j)}{1 + |i - j|} \qquad (3)$$

2.4 Correlation

Correlation measures how correlated a pixel is to its neighbour over the whole image. Its range is [-1, 1]. Correlation is 1 or -1 for a perfectly positively or negatively correlated image, and NaN (not a number) for a constant image [9].

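$$\mathrm{Correlation} = \sum_{i=1}^{N} \sum_{j=1}^{N} \frac{(i - \mu_i)(j - \mu_j) \, P(i,j)}{\sigma_i \sigma_j} \qquad (4)$$

where $\mu_i$ and $\mu_j$ are the means and $\sigma_i$ and $\sigma_j$ are the standard deviations of the row and column marginal distributions of $P$.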
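The four definitions in Eqs. (1)-(4) can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function name `glcm_features` is hypothetical, the input is assumed to be an N × N GLCM already normalized to sum to 1, and the homogeneity denominator is assumed to use |i − j|, which is the common convention.

```python
import math

def glcm_features(P):
    """Return (energy, contrast, homogeneity, correlation) for a normalized
    N x N GLCM given as a list of lists (hypothetical helper)."""
    n = len(P)
    # (i, j, value) triples with 1-based indices, matching Eqs. (1)-(4).
    cells = [(i + 1, j + 1, P[i][j]) for i in range(n) for j in range(n)]

    energy = sum(p ** 2 for _, _, p in cells)
    contrast = sum(abs(i - j) ** 2 * p for i, j, p in cells)
    homogeneity = sum(p / (1 + abs(i - j)) for i, j, p in cells)

    # Means and standard deviations of the row and column marginals.
    mu_i = sum(i * p for i, _, p in cells)
    mu_j = sum(j * p for _, j, p in cells)
    var_i = sum((i - mu_i) ** 2 * p for i, _, p in cells)
    var_j = sum((j - mu_j) ** 2 * p for _, j, p in cells)
    sigma = math.sqrt(var_i * var_j)

    if sigma == 0:
        # Constant image: correlation is undefined (NaN), as stated in Section 2.4.
        correlation = float("nan")
    else:
        correlation = sum((i - mu_i) * (j - mu_j) * p for i, j, p in cells) / sigma
    return energy, contrast, homogeneity, correlation

# A diagonal GLCM: homogeneity 1, contrast 0, perfect positive correlation.
print(glcm_features([[0.5, 0.0], [0.0, 0.5]]))
```

A GLCM with a single non-zero entry, which is what a constant image produces, gives energy 1, contrast 0 and a NaN correlation, in line with the limiting cases quoted in the definitions.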

III. MODEL

3.1 Radon Transform

The Radon transform computed at different angles, together with the estimated facial pixel motion, gives a spatio-temporal representation of the expression. The motion vector at position (x, y) can be expressed as:

$$v_k(x, y) = a_k(x, y) \, e^{\,j \phi_k(x, y)} \qquad (5)$$

The discrete Radon transform of the motion vector magnitude at angle $\theta$ is then given by:

$$R(\theta) = \sum_{u=-\infty}^{\infty} a_k(x, y) \Big|_{\,x = t\cos\theta - u\sin\theta,\; y = t\sin\theta + u\cos\theta} \qquad (6)$$

As this equation shows, the Radon transform estimates the spatial distribution of the energy of the perturbation. Computing the direction of motion of the facial parts, although useful, would require extremely accurate optical flow estimation as well as global facial feature tracking.


Fig. 1. Concept of the Radon Transform

The projections of the Radon Transform at different angles give the face signatures, which are used to characterize the expressions.
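To make the projection idea concrete, the following is a minimal pure-Python sketch of a discrete Radon projection. It sums pixel values along the lines x = t cos θ − u sin θ, y = t sin θ + u cos θ, with coordinates measured from the image centre. The nearest-neighbour sampling, the function names `radon_projection` and `face_signature`, and the default set of angles are all assumptions made for illustration; the paper does not state how its transform was discretized.

```python
import math

def radon_projection(image, theta_deg):
    """Sum `image` (a 2-D list) along parallel lines at angle theta (degrees).

    Returns one value per detector offset t. Nearest-neighbour sampling is an
    assumption of this sketch; at oblique angles it can skip or repeat pixels.
    """
    rows, cols = len(image), len(image[0])
    cy, cx = (rows - 1) / 2.0, (cols - 1) / 2.0
    half = int(math.ceil(math.hypot(rows, cols) / 2.0))  # half the diagonal
    theta = math.radians(theta_deg)
    c, s = math.cos(theta), math.sin(theta)

    projection = []
    for t in range(-half, half + 1):
        total = 0.0
        for u in range(-half, half + 1):
            # Point on the line at offset t, position u along the line.
            x = t * c - u * s
            y = t * s + u * c
            col = int(round(x + cx))
            row = int(round(y + cy))
            if 0 <= row < rows and 0 <= col < cols:
                total += image[row][col]
        projection.append(total)
    return projection

def face_signature(image, angles=(0, 45, 90, 135)):
    """Stack projections at several angles (the angle set is an assumption)."""
    return [radon_projection(image, a) for a in angles]

# A 3 x 3 image of ones: each of the three central lines at 0 degrees sums to 3.
print(radon_projection([[1] * 3 for _ in range(3)], 0))
```

For real face images a library routine with proper interpolation would normally be preferred; the sketch is only meant to show what a single projection computes.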

3.2 Support Vector Machine Classification (SVM)

An SVM is a maximum-margin classifier for pattern recognition between two classes. It works by finding the decision surface that has the maximum distance to the closest points in the training set, which are termed support vectors. Assume a training set of points $x_i \in \mathbb{R}^n$, each belonging to one of two classes identified by the label $y_i \in \{-1, 1\}$, and assume that the data are linearly separable. The goal of maximum-margin classification is then to separate the two classes by a hyperplane such that the distance to the support vectors is maximized. This hyperplane is referred to as the optimal separating hyperplane and has the form:

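$$f(x) = \sum_{i=1}^{\ell} y_i \alpha_i \, (x_i \cdot x) + b \qquad (7)$$

Here $\ell$ is the number of training points. The coefficients $\alpha_i$ and $b$ in Eqn (7) are the solutions of a quadratic programming problem. Classification of a new data point $x$ is performed by computing the sign of the right-hand side of Eqn (7). In the following we will use

$$d(x) = \frac{\sum_{i=1}^{\ell} y_i \alpha_i \, (x_i \cdot x) + b}{\left\| \sum_{i=1}^{\ell} y_i \alpha_i x_i \right\|} \qquad (8)$$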

to perform multi-class classification. The sign of d is the classification result for x and |d| is the distance from x

to the hyper-plane. The further away the point is from the decision surface, the more reliable the classification

result.
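As a small illustration of Eqns (7) and (8), the sketch below evaluates the decision value and the signed distance for a toy two-point problem. The function names and the toy numbers are hypothetical; the coefficients are supplied by hand rather than obtained from a quadratic programming solver, which is what a real SVM library would do.

```python
import math

def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def decision_value(x, support_vectors, labels, alphas, b):
    """f(x) as in Eqn (7): sum_i y_i * alpha_i * (x_i . x) + b."""
    return sum(y * a * dot(sv, x)
               for sv, y, a in zip(support_vectors, labels, alphas)) + b

def signed_distance(x, support_vectors, labels, alphas, b):
    """d(x) as in Eqn (8): f(x) divided by the norm of sum_i y_i * alpha_i * x_i."""
    w = [sum(y * a * sv[k] for sv, y, a in zip(support_vectors, labels, alphas))
         for k in range(len(x))]
    return decision_value(x, support_vectors, labels, alphas, b) / math.sqrt(dot(w, w))

# Toy problem: one support vector per class, placed symmetrically about x = 0.
svs = [(1.0, 0.0), (-1.0, 0.0)]
ys = [1, -1]
alphas = [0.5, 0.5]   # hand-picked values that satisfy the margin conditions here
b = 0.0

d = signed_distance((2.0, 3.0), svs, ys, alphas, b)
print("class:", 1 if d > 0 else -1, "distance:", abs(d))
```

In this toy case the hyperplane is the line x = 0, so the point (2, 3) lies at distance 2 on the positive side.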

In multi-class classification, there are two basic strategies for solving q-class problems with SVMs. In the one-vs-all approach, q SVMs are trained, each separating a single class from all remaining classes. In the pairwise approach, q(q - 1)/2 machines are trained, each separating a pair of classes. Regarding training effort, the one-vs-all approach is preferable, since only q SVMs have to be trained compared with q(q - 1)/2.
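To give a sense of scale, suppose each of the 152 individuals in the database described in Section IV is treated as one class. This is an assumption for illustration, since the value of q used in the experiments is not stated. The two strategies would then require:

```latex
q = 152: \qquad
\text{one-vs-all: } q = 152 \text{ machines}, \qquad
\text{pairwise: } \frac{q(q-1)}{2} = \frac{152 \times 151}{2} = 11476 \text{ machines}.
```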

3.3 Feature Vectors

The Radon transform is used to extract the image intensity along radial lines oriented at specific angles. From this face signature, the Gray-Level Co-occurrence Matrix is computed, from which the following statistical features are extracted: Correlation, Contrast, Energy and Homogeneity. These are concatenated in a row to form the unique feature vector for a particular face image.
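The step from a face signature to a co-occurrence matrix can be sketched as follows. The paper does not state the number of gray levels or the neighbour offset used, so `levels=8` and `offset=(0, 1)` (the pixel immediately to the right) are assumptions, as are the function names. The four features of Section II would then be computed from the returned matrix.

```python
def quantize(matrix, levels):
    """Map the values of a 2-D list onto integer gray levels 0 .. levels-1."""
    lo = min(min(row) for row in matrix)
    hi = max(max(row) for row in matrix)
    if hi == lo:
        # Constant input: everything falls in level 0.
        return [[0] * len(row) for row in matrix]
    return [[min(levels - 1, int((v - lo) / (hi - lo) * levels)) for v in row]
            for row in matrix]

def glcm(matrix, levels=8, offset=(0, 1)):
    """Normalized gray-level co-occurrence matrix of a 2-D list.

    Entry [a][b] is the fraction of pixel pairs (p, p + offset) whose
    quantized levels are a and b. `levels` and `offset` are assumptions.
    """
    q = quantize(matrix, levels)
    rows, cols = len(q), len(q[0])
    dr, dc = offset
    counts = [[0] * levels for _ in range(levels)]
    total = 0
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[q[r][c]][q[r2][c2]] += 1
                total += 1
    if total == 0:
        raise ValueError("no pixel pairs exist for this offset")
    return [[n / total for n in row] for row in counts]

# Two gray levels, horizontal neighbours: pairs (0,0), (0,1), (1,1) in each row.
print(glcm([[0, 0, 1, 1], [0, 0, 1, 1]], levels=2))
```

Restricting the matrix to a handful of gray levels keeps it small and well populated, which is one reason a coarse quantization is commonly applied before counting co-occurrences.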

3.4 Matching

The final feature vector of the test face image is determined and compared with the trained feature vectors. The Euclidean distance between each trained feature vector in the face database and the test feature vector is computed. The index of the trained image with the least Euclidean distance is selected, on the assumption that it is the correct index for the test image. This is then verified against the original index of the test image: if the indices are the same, a match is recorded; otherwise, a mismatch is recorded.

$$\| X - A \|_{2} = \sqrt{\sum_{i=1}^{n} (x_i - a_i)^{2}} \qquad (9)$$

where:

$X$ is the feature vector of the trained image

$A$ is the feature vector of the test image

$n$ is the number of elements in the feature vector

$x_i$ is the element of the trained feature vector

$a_i$ is the element of the test feature vector
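The matching rule can be sketched as a nearest-neighbour search under the Euclidean distance of Eqn (9). The function names and the toy four-element vectors below are hypothetical and chosen only to show the mechanics.

```python
import math

def euclidean(x, a):
    """Euclidean distance between a trained vector x and a test vector a."""
    return math.sqrt(sum((xi - ai) ** 2 for xi, ai in zip(x, a)))

def match(test_vector, trained_vectors):
    """Return (index, distance) of the trained vector closest to the test vector."""
    distances = [euclidean(v, test_vector) for v in trained_vectors]
    best = min(range(len(distances)), key=distances.__getitem__)
    return best, distances[best]

def recognition_rate(test_vectors, test_labels, trained_vectors, trained_labels):
    """Fraction of test vectors whose nearest trained vector has the same label."""
    hits = 0
    for vec, label in zip(test_vectors, test_labels):
        index, _ = match(vec, trained_vectors)
        if trained_labels[index] == label:
            hits += 1  # indices agree: a match is recorded
    return hits / len(test_vectors)

# Toy data: two trained identities, each described by four features.
trained = [[0.0, 0.0, 0.0, 0.0], [1.0, 1.0, 1.0, 1.0]]
trained_ids = ["a", "b"]
tests = [[0.1, 0.0, 0.0, 0.0], [0.9, 1.0, 1.0, 1.0]]
print(recognition_rate(tests, ["a", "b"], trained, trained_ids))
```

With only four features per image, the exhaustive comparison against every trained vector stays cheap even for a few thousand images.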

IV. ANALYSIS AND DISCUSSION

Facial images used for this study were taken from the Faces94 database, which comprises 152 individuals (20 female, 112 male and 20 male staff) kept in separate directories. These directories were merged into one to obtain the different lighting effects. The subjects were seated at approximately the same distance from the camera and were asked to speak while a sequence of twenty images was taken, which introduced moderate and natural variation in facial expression. Each facial image was taken at a resolution of 180 × 200 pixels in portrait format against a plain green background, and was indexed from 1 to 20 and prefixed with a counter. In all, 3040 images make up the database for the study.

Fig. 2. Sample of face image from Database

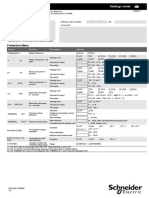

Table 1. Algorithm for the RTFR

1. Read a face image from the database.

2. Convert the image from color space to gray scale.

3. Compute the intensity map of each face image in the database by applying the Radon Transform to it.

4. For each intensity map computed, calculate the Gray-Level Co-occurrence Matrix.

5. Compute the global statistical features, that is, Correlation, Energy, Contrast and Homogeneity, from the Gray-Level Co-occurrence Matrix.

6. Concatenate these four features to form the final feature vector for each face image.

7. Divide the dataset of feature vectors into a training set and a test set for performance analysis.

8. Apply the SVM to compute the recognition rate.

[Fig. 2 panel labels: 12_1 to 12_12]


Fig. 3. Block Diagram of RTFR

The proposed algorithm accepts a face image as input and returns a verified face image from the trained dataset as output. The objective of this design is to extract the fewest features from a face image that are global and spatio-temporal in nature, so as to make face recognition invariant to pose.

The performance of the proposed method (RTFR) was evaluated on the Faces94 database. Beforehand, the feature set, containing 12,160 features (four for each of the 3040 face images), was partitioned into two disjoint sets: the trained features and the test features. After the partition, the test data had 2,432 features while the trained data had 9,728 features, corresponding to 608 and 2,432 face images for testing and training respectively. Each face feature vector in the test data is matched against all the face feature vectors in the training dataset using the Euclidean distance. Of the distances computed, the minimum is selected along with its image index. At the end of these processes, the recognition rate was computed, giving 97% accuracy.
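The feature and image counts quoted above can be cross-checked with a few lines of arithmetic. The variable names are illustrative; the numbers are those reported in this section. How the images were assigned to the two sets is not stated, so only the totals are checked.

```python
subjects = 152
images_per_subject = 20
features_per_image = 4   # Correlation, Energy, Contrast, Homogeneity

total_images = subjects * images_per_subject          # 3040
total_features = total_images * features_per_image    # 12160

test_images, train_images = 608, 2432
test_features = test_images * features_per_image      # 2432
train_features = train_images * features_per_image    # 9728

# The two sets are disjoint and together cover the whole database.
assert test_images + train_images == total_images
assert test_features + train_features == total_features

test_fraction = test_images / total_images
print(f"test share: {test_fraction:.0%}, training share: {1 - test_fraction:.0%}")
```

The split therefore amounts to 20% of the images for testing and 80% for training.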

[Fig. 3 flowchart: Start → Face Image Data → Convert to Gray scale → Radon Transform → Compute GLCM → Extract Face Features → Concatenate as feature vector → SVM Classifier → Faces index the same? (Yes: Matched; No: Mismatched) → End]


V. CONCLUSION

In this paper, we observed that our Radon Transform based feature extraction for face recognition (RTFR) yielded a good recognition rate even though only four features were extracted from each face for recognition. This may be because the Radon transform extracts features that are highly correlated, thereby discarding the less relevant intensity values. It thus acts as a feature reduction method, since only face projections are of interest for further reduction, and it makes feature storage and computation easier, in addition to the good recognition rate recorded.

REFERENCES

[1] K. Etemad and R. Chellappa, Discriminant Analysis for Recognition of Human Face Images, Proc. First Int. Conf. on Audio and Video Based Biometric Person Authentication, Crans Montana, Switzerland, Lecture Notes in Computer Science, Vol. 1206, August 1997, pp. 127-142.

[2] C. Liu and H. Wechsler, Face Recognition using Evolutionary Pursuit, Proc. Fifth European Conf. on Computer Vision (ECCV), Freiburg, Germany, Vol. II, 02-06 June 1998, pp. 596-612.

[3] S. Z. Li and A. K. Jain, Handbook of Face Recognition, New York, Springer-Verlag, 2005.

[4] M. A. Turk and A. P. Pentland, Face Recognition using Eigenfaces, Proc. IEEE Computer Society Conf. on Computer Vision and Pattern Recognition, June 1991, pp. 586-591.

[5] P. Belhumeur, J. Hespanha and D. Kriegman, Eigenfaces vs. Fisherfaces: Recognition using Class Specific Linear Projection, IEEE Trans. on Pattern Analysis and Machine Intelligence, vol. 26, no. 9, Sept. 2004, pp. 1222-1228.

[6] P. Comon, Independent Component Analysis, a New Concept, Signal Processing, vol. 36, 1994, pp. 287-314.

[7] W. Zhao, R. Chellappa, P. J. Phillips and A. Rosenfeld, Face Recognition: A Literature Survey, ACM Computing Surveys, Vol. 35, No. 4, 2003, pp. 399-458.

[8] H. Moon and P. Jonathon Phillips, Computational and Performance Aspects of PCA Based Face Recognition Algorithms, Perception 30(3), 2001, pp. 303-321.

[9] D. R. Shashi Kumar, K. B. Raja, R. K. Chhotaray and S. Pattanaik, DWT Based Fingerprint Recognition using Non Minutiae Features, International Journal of Computer Science Issues, vol. 8(2), 2011, pp. 257-265.
