Antennas and Radar - Ch. 1-2 (David Lee Hysell)

Antennas and Radar

January 15, 2010

Contents

1 Introduction

1.1 History

1.2 Motivation and outline

1.3 Conventions

1.3.1 Antenna measures

1.3.2 Phasor notation

1.4 Mathematical preliminaries

1.4.1 Fourier analysis

1.4.2 Probability theory

1.5 References

1.6 Problems

2 Theory of simple wire antennas

2.1 Hertzian dipole

2.1.1 Vector potential: phasor form

2.1.2 Electromagnetic fields

2.1.3 Radiation pattern

2.1.4 Total radiated power

2.1.5 Radiation resistance

2.1.6 Directivity

2.1.7 Beam solid angle

2.2 Reciprocity theorem

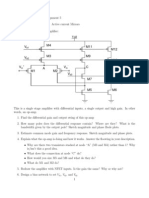

2.3 Practical wire antennas

2.3.1 Half-wave dipole

2.3.2 Antenna effective length

2.3.3 Full-wave dipole

2.3.4 Quarter-wave monopole

2.3.5 Folded dipole antenna

2.3.6 Short dipole

2.3.7 Small current loop

2.3.8 Duality

2.4 Polarization

2.4.1 Linear polarization

2.4.2 Circular polarization

2.4.3 Elliptical polarization

2.4.4 Helical antennas

2.5 Balanced and unbalanced transmission lines and balun transformers

2.6 References

2.7 Problems

3 Antenna arrays

3.1 Uniform linear arrays

3.1.1 Broadside array

3.1.2 Endfire array

3.1.3 Hansen-Woodyard array

3.1.4 Binomial arrays

3.1.5 Parasitic arrays

3.2 Uniform two-dimensional arrays

3.3 Three-dimensional arrays

3.4 Very long baseline interferometry (VLBI)

3.5 Preliminary remarks on SAR

3.6 Beam synthesis

3.7 Adaptive arrays

3.8 References

3.9 Problems

4 Aperture antennas

4.1 Simple apertures

4.1.1 Example - rectangular aperture

4.1.2 Fourier transform shortcuts

4.1.3 Example - circular aperture

4.1.4 Aperture efficiency

4.2 Reflector antennas

4.2.1 Spillover, obstruction, and cross-polarization losses

4.2.2 Surface roughness

4.2.3 Cassegrain, Gregorian, and spherical reflectors

4.3 References

4.4 Problems

5 Noise

5.1 Noise in passive linear networks

5.2 Antenna noise temperature

5.2.1 Atmospheric absorption noise and radiative transport

5.2.2 Feedline absorption

5.2.3 Cascades

5.2.4 Receiver noise temperature, noise figure

5.2.5 Receiver bandwidth

5.3 Matched filters

5.4 Two simple radar experiments

5.5 Quantization noise

5.6 References

5.7 Problems

6 Scattering

6.1 Rayleigh scatter

6.2 Mie scatter

6.3 Specular reflection

6.4 Volume scatter

6.5 References

6.6 Problems

7 Signal processing

7.1 Fundamentals

7.2 Power estimation and incoherent processing

7.3 Spectral estimation and coherent processing

7.4 Summary of findings

7.5 CW radars

7.6 FMCW radars

7.7 Pulsed radars

7.7.1 Range aliasing

7.7.2 Frequency aliasing

7.8 Example - planetary radar

7.9 Range and frequency resolution

7.10 MTI radars

7.11 References

7.12 Problems

8 Pulse compression

8.1 Phase coding

8.1.1 Complementary codes

8.1.2 Cyclic codes

8.1.3 Polyphase codes

8.2 Frequency chirping

8.3 Radar ambiguity functions

8.3.1 Costas codes revisited

8.3.2 Volume scatter revisited

8.4 References

8.5 Problems

9 Propagation

9.1 Tropospheric ducting

9.2 Ionospheric refraction

9.2.1 Maximum usable frequency (MUF)

9.3 Generalized wave propagation in inhomogeneous media

9.3.1 Stratified media

9.3.2 Radius of curvature

9.4 Birefringence (double refraction)

9.4.1 Fresnel equation of wave normals

9.4.2 Astrom's equation

9.4.3 Booker quartic

9.4.4 Appleton-Hartree equation

9.4.5 Phase and group velocity

9.4.6 Index of refraction surfaces

9.4.7 Ionospheric sounding

9.4.8 Generalized raytracing in inhomogeneous, anisotropic media

9.4.9 Attenuation and absorption

9.4.10 Faraday rotation

9.5 References

9.6 Problems

10 Overspread targets

10.1 Conventional long pulse analysis

10.2 Amplitude modulation and the multipulse

10.3 Frequency and polarization diversity

10.4 Phase modulation, coded long pulse, and alternating codes

10.5 Range-lag ambiguity functions

10.6 Error analysis

10.6.1 Noise and self clutter

10.6.2 Comparative analysis

10.6.3 Normalization errors

10.7 References

10.8 Problems

11 Radar applications

11.1 Radar interferometry

11.1.1 Ambiguity

11.1.2 Volume scatter

11.2 Acoustic arrays and non-invasive medicine

11.3 Passive radar

11.4 Inverse problems in radar signal processing

11.5 Synthetic Aperture Radar (SAR)

11.5.1 Image resolution

11.5.2 Range delay due to chirp

11.5.3 Range migration

11.5.4 InSAR

11.6 Weather radar

11.7 GPR

11.8 References

11.9 Problems

12 Appendix - review of electrodynamics

12.1 Coulomb's law

12.2 Gauss' law

12.3 Faraday's law (statics)

12.4 Biot-Savart law

12.5 Another equation

12.6 Ampere's law (statics)

12.7 Faraday's law of induction

12.8 Ampere's law (revisited)

12.9 Potentials and the wave equation

12.10 Macroscopic equations

12.11 Boundary conditions

12.12 Boundary value problems

12.13 Green's functions and the field equivalence principle

12.14 Reciprocity

Chapter 1

Introduction

This text investigates how radars can be used to explore the environment and interrogate natural and manufactured objects in it remotely. Everyone is familiar with popular portrayals of radar engineers straining to see blips on old-style radar cathode ray tubes. However, the radar art has evolved beyond this cliché. Radars are now used in geology and archeology, ornithology and entomology, oceanography, meteorology, and in studies of the Earth's upper atmosphere, ionosphere, and magnetosphere. Radars are also used routinely to construct fine-grained images of the surface of this planet and others. Astoundingly detailed information, often from otherwise inaccessible places, is being made available to wide audiences for research, exploration, security, and commercial purposes. New analysis methods are emerging all the time, and the radar art is enjoying a period of rapidly increasing sophistication.

1.1 History

Marconi (December 1901) sent radio signals from Cornwall to Newfoundland (many mishaps)

Hülsmeyer (1904) detected ships in channels

Appleton (1924) early ionospheric sounding: Kennelly-Heaviside layer, Appleton layer

Watson-Watt (1935) Death ray? No, but aircraft detection by radio possible

Chain Home stations (1939) direction and range detection, 20-30 MHz, floodlight Tx, crossed dipole Rx

Chain Home Low, 200 MHz, mechanical steering

Pearl Harbor (all WWII combatants had radar)

Magnetron (MIT Radiation Lab)

Cold war, BMEWS stations, lunar echoes

Air traffic control

Weather radar, Doppler radar, clear air turbulence and downdrafts

Planetary radar (Venus, Mercury, asteroids, Mars to some degree)

SAR and Earth surveys from aircraft, satellites, space shuttle

GPR (archeology, geology, prospecting, construction, resource management)

Ornithology and entomology

Automotive radar, land mine detection, stud finders, baseball

Sonar, Lidar, etc.


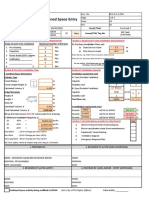

Figure 1.1: RF communication link. (Labels in the figure: Tx, Rx, range R, transmitted power P_t, received power P_r.)

1.2 Motivation and outline

Our objective is to understand how radars can be used to learn everything we can about the environment. It is useful to begin the study of radar, however, with an elementary question: how much of the power transmitted by a radar will be returned in the echoes from the target and received? The answer to this question, which culminates in the derivation of the so-called radar equation, illustrates some important aspects of radar design and application. The derivation therefore serves as a vehicle for introducing basic ideas and terminology about radars. It also illustrates the central importance of antenna performance to transmission and reception. Much of the early material in this text will therefore have to address antenna theory, analysis, and design.

We can sketch out the basic ideas behind radar systems with some common-sense reasoning. The analysis begins by considering a simple communications link involving transmitting and receiving stations and then considers what happens when a radar target is introduced to the problem. The relationships given below are defined rather than derived and serve as the basis for the material to follow in the text. More specific and detailed treatments will come later.

The power budget for the simple communication link illustrated in Figure 1.1 is governed by:

\[
P_{\mathrm{rx}} = \underbrace{P_{\mathrm{tx}} \frac{G_{\mathrm{tx}}}{4\pi R^2}}_{P_{\mathrm{inc}}} A_e
\]

with the received power $P_{\mathrm{rx}}$ being proportional to the transmitted power $P_{\mathrm{tx}}$, assuming that the medium between the stations is linear. The incident power density falling on the receiving antenna, $P_{\mathrm{inc}}$, would just be $P_{\mathrm{tx}}/4\pi R^2$ if the illumination were isotropic. However, isotropic radiation cannot be achieved by any antenna and is seldom desirable, and the transmitting antenna is usually optimized to concentrate radiation in the direction of the receiver. The transmitting antenna gain $G_{\mathrm{tx}}$ is a dimensionless quantity that represents the degree of concentration achieved. Rewriting the equation above provides a definition of gain or directivity, which we use interchangeably for the moment:

\[
G_{\mathrm{tx}} \approx D = \frac{P_{\mathrm{inc}}}{P_{\mathrm{tx}}/4\pi R^2} = \frac{P_{\mathrm{inc}}}{P_{\mathrm{iso}}} = \frac{P_{\mathrm{inc}}\,(\mathrm{W/m^2})}{P_{\mathrm{avg}}\,(\mathrm{W/m^2})}
\]

where the denominator can be interpreted as either the power density that would fall on the receiving station if transmission were isotropic or the average power density on the sphere of radius $R$ on which the receiver lies. So the gain is the ratio of the power density incident on the receiving station at a distance or range $R$ to the equivalent isotropic power density or the average power density radiated in all directions at that range.

The receiving antenna intercepts some of the power density incident upon it, producing an output signal with some amount of power. The factor that expresses how much power is its effective area, $A_e = P_{\mathrm{rx}}\,(\mathrm{W}) / P_{\mathrm{inc}}\,(\mathrm{W/m^2})$. The effective area has units of area and is sometimes (but not always) related to the physical area of the antenna.
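As a rough numerical sketch of the link budget above, the following Python snippet evaluates the incident power density and the received power for a one-way link. The function names and all numerical values are illustrative assumptions, not quantities taken from the text.

```python
import math


def incident_power_density(p_tx_w, gain_tx, range_m):
    """Power density (W/m^2) at range R: P_inc = P_tx * G_tx / (4 pi R^2)."""
    return p_tx_w * gain_tx / (4.0 * math.pi * range_m ** 2)


def received_power(p_tx_w, gain_tx, range_m, a_e_m2):
    """Received power (W): P_rx = P_inc * A_e."""
    return incident_power_density(p_tx_w, gain_tx, range_m) * a_e_m2


# Assumed example values (hypothetical, chosen only for illustration).
p_tx = 1.0e3   # transmitted power, W
g_tx = 100.0   # transmitting antenna gain, dimensionless
r = 1.0e5      # range, m
a_e = 1.0      # receiving antenna effective area, m^2

p_inc = incident_power_density(p_tx, g_tx, r)
p_rx = received_power(p_tx, g_tx, r, a_e)
print(f"P_inc = {p_inc:.3e} W/m^2, P_rx = {p_rx:.3e} W")
```

Doubling the range in this sketch reduces the received power by a factor of four, the usual inverse-square behavior of a one-way link.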

This picture is modified somewhat for a radar link, which also involves some kind of target. The transmitter and receiver are also frequently collocated in a radar, as shown in Figure 1.2. Here, the transmitter and receiver share a common antenna connected through a transmit-receive (T/R) switch.

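Figure 1.2: Radar link. (Labels in the figure: Tx, Rx, range R, G_tx, A_eff.)

\[
\begin{aligned}
P_{\mathrm{inc}}\,(\mathrm{W/m^2}) &= P_{\mathrm{tx}} \frac{G_{\mathrm{tx}}}{4\pi R^2} \\
P_{\mathrm{scat}}\,(\mathrm{W}) &= P_{\mathrm{inc}} \underbrace{\sigma_{\mathrm{radar}}}_{(\mathrm{m^2})}
\end{aligned}
\]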

The first line above is the power density incident on the radar target. We next define a radar scattering cross section as the ratio of the total power scattered by the target to the incident power density illuminating it. The cross-section has units of area and may or may not be related to the physical area of the target itself. The definition is such that we take the scattering to be isotropic, simplifying the calculation of the power received back at the radar station.

\[
P_{\mathrm{rx}}\,(\mathrm{W}) = P_{\mathrm{scat}} \frac{A_e}{4\pi R^2}
\]

All together, these expressions for the received power can be combined to form the radar equation:

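\[
P_{\mathrm{rx}}\,(\mathrm{W}) = \underbrace{\underbrace{P_{\mathrm{tx}} \frac{G_{\mathrm{tx}}}{4\pi R^2}}_{P_{\mathrm{inc}}\,(\mathrm{W/m^2})} \sigma_{\mathrm{radar}}}_{P_{\mathrm{scat}}\,(\mathrm{W})} \frac{A_e}{4\pi R^2} \propto R^{-4}
\]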

where we have neglected any losses in the system and propagation channel. The radar equation is useful in evaluating the power budget of a radar link. Notice in particular the inverse dependence on the fourth power of the range, which may make long-range detection difficult. While the equation is complete, it has a number of components that require further investigation. These will make up the body of the content of the text. They are:

1. Antenna gain $G_{\mathrm{tx}}$: What is it, and how is it calculated?

2. Antenna effective area $A_e$: What is it, how is it calculated, and how is it related to gain?

3. Scattering cross section $\sigma_{\mathrm{radar}}$: How is it defined, and how is it calculated for different kinds of scatterers?

4. Noise, statistical errors: From where does it come, how is it estimated, and how is it mitigated?

5. Propagation effects, polarization, etc.: How do radar signals propagate from place to place?

6. Signal processing: How are radar data acquired and handled?

The first two of these items concern antenna performance. More than any other characteristic of a radar, its antenna determines the kinds of applications for which it will be useful. Chapters 2-4 of the text consequently discuss antennas in detail. Estimating the cross-section of a radar target requires some understanding of the physics of scattering. This topic is addressed in chapter 6. Noise in the environment and the radar equipment itself limits the ability to detect a scattered signal. Environmental and system noise will be discussed in chapter 5. For a target to be detected, the radar signal has to propagate from the radar to the target and back, and the manner in which it does so is affected by the intervening medium. Radio wave propagation in the Earth's atmosphere and ionosphere is therefore explored in chapter 9. Perhaps most importantly, a considerable amount of data processing is usually required in order to extract meaningful information about the radar target from the received signal. Signal processing is therefore described in detail in chapters 7 and 8. The text concludes with the exploration of a few specialized radar applications in chapter 11.

Fourier analysis and probability and statistics appear as recurring themes throughout the text. Both concepts are reviewed briefly later in this chapter. The review culminates with a definition of the power spectral density of a signal sampled from a random process. The Doppler spectrum is one of the most important outcomes of a radar experiment, and its meaning (and the meaning of the corresponding autocorrelation function) warrants special examination.
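To make the range dependence of the radar equation from this section concrete, here is a minimal Python sketch that chains the three steps (incident power density, scattered power, received power) with losses neglected. The function name and the example numbers are assumptions made purely for illustration.

```python
import math


def radar_received_power(p_tx_w, gain_tx, sigma_m2, a_e_m2, range_m):
    """Radar equation with losses neglected, for a collocated Tx and Rx."""
    sphere_area = 4.0 * math.pi * range_m ** 2
    p_inc = p_tx_w * gain_tx / sphere_area   # power density at the target, W/m^2
    p_scat = p_inc * sigma_m2                # total scattered power, W
    return p_scat * a_e_m2 / sphere_area     # power received back at the radar, W


# Assumed example values (hypothetical).
p_tx = 1.0e6    # transmitted power, W
gain = 1.0e3    # antenna gain, dimensionless
sigma = 1.0     # radar scattering cross section, m^2
a_e = 10.0      # antenna effective area, m^2

p_near = radar_received_power(p_tx, gain, sigma, a_e, 1.0e5)
p_far = radar_received_power(p_tx, gain, sigma, a_e, 2.0e5)
print(f"P_rx at 100 km: {p_near:.3e} W")
print(f"P_rx at 200 km: {p_far:.3e} W")
print(f"ratio: {p_near / p_far:.1f}")
```

Doubling the range lowers the received power by a factor of sixteen here, which is the inverse fourth-power dependence noted above.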

1.3 Conventions

Like all disciplines, radar engineering is associated with specialized conventions, practices, and vernacular. Much of this concerns the language and the measures used to quantify antenna performance. In theory, there are any number of ways in which antennas could be characterized and evaluated. In practice, a system has developed over time for describing and comparing antennas on the basis of a few parameters. The system is highly intuitive and requires a minimum of computation. It can be applied in the absence of a detailed theoretical understanding of antennas and so will be described below.

Another convention in widespread use, particularly among electrical engineers, is phasor notation. Phasor notation is not universal; physics textbooks often develop antenna theory without it, at the expense of somewhat more cluttered notation. Phasors simplify problems where they can be made to apply, but one must understand what's going on to manipulate them properly. We therefore conclude this section with a review of phasor notation.

1.3.1 Antenna measures

We begin with some basic, intuitive definitions. These will be refined as the text progresses. Here, the focus is on developing an intuitive understanding of how antennas are described and their performance quantified.

In chapter 4 of the text, we will see that the gross performance of an antenna is different far away (in the so-called Fraunhofer zone) than it is up close (in the Fresnel zone). The separatrix between these zones is determined by the physical size of the antenna and by the wavelength. In most radar applications, the object being observed is safely in the Fraunhofer zone. This simplifies the interpretation of the echoes that are received considerably. Below, the Fraunhofer-zone approximation is assumed to hold. Fresnel-zone effects are discussed in chapter 4, but a comprehensive treatment of the effects on the radar equation is beyond the scope of this text.

Radiation pattern

What separates one antenna from another is the distribution of power radiated into different directions or bearings. There is no such thing as an antenna that radiates isotropically (equally in all directions). The radiation pattern of an antenna is a diagram (usually in polar coordinates) showing where the RF power is directed. It represents a surface, or sometimes planar cuts through a surface, with a radial distance proportional to radiated power density along the given bearing. By convention, radiation patterns are normalized to a maximum value of unity. They are usually drawn with linear scaling, although logarithmic scaling can be used if care is taken to avoid problems at the nulls of the pattern.

We will soon see that the radiation pattern for an elemental dipole antenna is given by $P_r \propto \sin^2\theta$, where $\theta$ is the polar angle if the antenna is aligned with the $z$ axis. This pattern forms a torus in three dimensions. Cuts through two representative planes like those shown below usually suffice in conveying an impression of the three-dimensional shape. If not, 3D rendering software may help with visualization.

Figure 1.3: Elemental dipole radiation pattern. (left) E-plane. (right) H-plane. The overall pattern is a torus. The dipole is aligned parallel to the z axis. (A dipole does not radiate off its ends, as we shall see later.) Axis labels in the figure: x, y, z.

Bulbs in the radiation pattern are referred to as beams or lobes, whereas zeros are called nulls. Some lobes have larger peak amplitudes than others. The lobes with the greatest amplitudes are called the main lobes, and smaller ones are called sidelobes. If there are several main lobes, they are referred to as grating lobes. The nomenclature can be ambiguous and subjective. In this example, there is essentially a single lobe at the equator ($\theta = \pi/2$) that wraps around all azimuths $\phi$.

Several measures of the width of the lobes in the patterns are in common use. These include the half-power full beamwidth (HPFB or HPBW), which gives the range of angles through which the radiation pattern is at least half its maximum value in some plane. Engineers often refer to the E- and H-planes, which are orthogonal planes tangential to the electric and magnetic lines of force, respectively. In this example, the E- and H-planes are shown at left and right in Figure 1.3, and the HPFB in the E-plane is 90°. Since the radiation is uniform in the H-plane here, the HPBW is not defined in that plane. Another measure is the beamwidth between first nulls (BWFN), which usually works out to be about twice the HPFB. In this case, it is 180° (exactly twice).

Directivity

A more objective measure of the degree of confinement is the directivity. The definition of directivity, $D$, can be expressed as

$$D \equiv \frac{\text{power density at center of main beam at range } R}{\text{avg. power density at range } R} = \frac{\text{power density at center of main beam at range } R}{\text{total power transmitted}/4\pi R^2}$$

where the average means an average over all directions. This definition is slightly clumsy. If we interpret power density as power per unit area, then we have to specify some range $R$ where this can be evaluated. It is more natural to calculate power densities per unit solid angle, since that quantity does not vary with range. The latter definition will have to wait until we have discussed solid angles, however.

Gain

Gain $G$ (sometimes called directive gain) and directivity differ only for antennas with losses. Whereas the directivity is defined in terms of the total power transmitted, the gain is defined in terms of the power delivered to the antenna by the transmitter. Not all the power delivered is transmitted if the antenna has significant ohmic losses.

$$G \equiv \frac{\text{power density at center of main beam at range } R}{\text{power delivered to antenna}/4\pi R^2}$$

Note that $G \le D$. In many applications, antenna losses are negligible, and the gain and directivity are used interchangeably. Both directivity and gain are dimensionless quantities, and it is often useful to represent them in decibels (dB).

$$D \approx G = 10 \log_{10} \frac{P_{\max}}{P_{\text{avg}}}$$

Note also that a high gain implies a small HPBW.

Beam solid angle

We can characterize the main beam of an antenna pattern by the solid angle it occupies. Solid angle is the generalization of an angle to a radial shape in three dimensions. Just as the arc length swept out on a circle by a differential angle $d\theta$ is $R\,d\theta$, the differential area swept out on a sphere by a differential solid angle is $dA = R^2 d\Omega$. Thus, we define $d\Omega = dA/R^2$. In spherical coordinates, we can readily see that $d\Omega = \sin\theta\, d\theta\, d\phi$. The units of solid angle are steradians (Str.). The total solid angle enclosed by any closed surface is $4\pi$ Str.

Figure 1.4: Antenna radiation pattern characterized by its solid angle $d\Omega$. (The sketch shows a transmitter Tx at the origin of the x, y, z axes illuminating an area dA at range R.)

As mentioned earlier, the definitions of directivity and gain given above are somewhat clumsy since they are stated in terms of power densities which vary with range even though the directivity and gain do not. We can now redefine the directivity more naturally in terms of solid angle with the knowledge that a per-unit-area quantity multiplied by $R^2$ becomes a per-unit-solid-angle quantity.

$$D \equiv 4\pi \frac{R^2\,(\text{power radiated per unit area at center of main beam})}{\text{total power transmitted}} = 4\pi \frac{\text{power radiated per unit solid angle at center of main beam}}{\text{total power transmitted}} = 4\pi \frac{P_\Omega}{P_t}$$

We can also characterize the main beam shape in terms of the total solid angle $\Omega$ it occupies. Imagine for example that the main beam is conical, with uniform power radiated within and no power radiated outside of that. Then $P_\Omega = P_t/\Omega$, and we find that $\Omega = 4\pi/D$. This is in fact a general result for any beam shape (i.e. not just conical). We will prove this later. Since $\Omega$ can be no more than $4\pi$, $D$ can be no less than unity. In fact, it is never less than 1.5.

Roughly speaking, the area illuminated by the antenna can be written in terms of the product of the half-power beamwidths in the E and H planes:

$$\Omega R^2 \approx (R\,\mathrm{HPBW}_E)(R\,\mathrm{HPBW}_H)$$

$$D \approx \frac{4\pi}{\mathrm{HPBW}_E\,\mathrm{HPBW}_H}$$

where the latter expression follows from the definition of $\Omega$. Note that the half-power beamwidths must be evaluated in radians here. This approximation permits estimates of the directivity knowing only something about the beamwidth of the main beam. It is not likely to give a very good approximation in most cases, however, since antenna patterns normally have tapered main beams and sidelobes. A more useful approximation is given by the rule of thumb:

$$D \approx \frac{26{,}000}{\mathrm{HPBW}_E\,\mathrm{HPBW}_H}$$

where the beamwidths are in degrees this time. Exact means of calculating directivity and gain will follow, but the approximations given here are sufficient for many purposes.
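As a numerical illustration of the relation between beam solid angle and directivity, the following Python sketch integrates the elemental dipole pattern $\sin^2\theta$ over the sphere. It is not part of the original notes; the grid size and variable names are arbitrary choices, and the integration is a simple trapezoid rule.

```python
import numpy as np

# Normalized power pattern of an elemental dipole aligned with z
theta = np.linspace(0.0, np.pi, 20001)
pattern = np.sin(theta) ** 2

# Beam solid angle: integrate the normalized pattern over the sphere.
# The pattern is azimuthally symmetric, so the phi integral contributes 2*pi.
integrand = pattern * np.sin(theta)
d_theta = theta[1] - theta[0]
omega = 2.0 * np.pi * np.sum(0.5 * (integrand[1:] + integrand[:-1])) * d_theta

directivity = 4.0 * np.pi / omega
print(f"beam solid angle = {omega:.4f} sr, D = {directivity:.4f}")

# Half-power full beamwidth in the E-plane (range of theta with pattern >= 1/2)
above = theta[pattern >= 0.5]
hpbw_deg = np.degrees(above[-1] - above[0])
print(f"E-plane HPBW = {hpbw_deg:.1f} degrees")
```

The computed directivity is 1.5, the lower bound quoted above, and the E-plane beamwidth comes out at 90°.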

Antenna effective area

We have defined the effective area of an antenna as the ratio of the power it delivers to a matched (no reflected power) receiver to the incident power density, i.e.

$$P_{rx}\,(\mathrm{W}) = P_{inc}\,(\mathrm{W/m^2})\,A_e\,(\mathrm{m^2})$$

Whereas antenna directivity and gain are more naturally discussed in terms of transmission, effective area is more of a reception concept. However, directivity and effective area are intimately related, and high gain antennas have large effective areas.

Effective area has a natural, intuitive interpretation for aperture antennas (reflectors, horns, etc.), and we will see that the effective area is always less than or equal to (less than in practice) the physical area of the aperture. The effective area of a wire antenna is unintuitive. Nevertheless, all antennas have an effective area, which is governed by the reciprocity theorem. We will see that

$$D = \frac{4\pi}{\lambda^2} A_e$$

where $\lambda$ is the wavelength of the radar signal. High gain implies a large effective area, a small HPBW, and a small solid angle. For wire antennas with lengths $L$ large compared to a wavelength, we will see that $\mathrm{HPBW} \approx \lambda/L$ generally.

Just as all antennas have an effective area, they also have an effective length. This is related to the physical length in the case of wire antennas, but there is no intuitive relationship between effective length and antenna size for aperture antennas. High gain antennas have large effective lengths.

We can consider the Arecibo S-band radar system as an extreme example. This radar operates at a frequency of 2.38 GHz or a wavelength of 12 cm. The diameter of the Arecibo reflector is 300 m. Consequently, we can estimate the directivity to be about $6 \times 10^7$ or about 78 dB, equating the effective area with the physical area of the reflector. In fact, this gives an overestimate, and the actual gain of the system is only about 73 dB (still enormous). We will see how to calculate the effective area of an antenna more accurately later in the semester.
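The Arecibo estimate can be reproduced with a few lines of Python. This is only a sketch under the assumption stated in the text (effective area equal to the physical area of a circular aperture); the "implied efficiency" is simply the ratio needed to reconcile the estimate with the quoted 73 dB and is not a figure from the original notes.

```python
import math

c = 299_792_458.0        # speed of light, m/s
frequency = 2.38e9       # Arecibo S-band frequency from the text, Hz
diameter = 300.0         # reflector diameter from the text, m

wavelength = c / frequency
physical_area = math.pi * (diameter / 2.0) ** 2

# Upper bound: assume the effective area equals the physical aperture area
directivity = 4.0 * math.pi * physical_area / wavelength ** 2
directivity_db = 10.0 * math.log10(directivity)

# Ratio of effective to physical area implied by the quoted 73 dB gain
implied_efficiency = 10.0 ** ((73.0 - directivity_db) / 10.0)

print(f"wavelength = {100.0 * wavelength:.1f} cm")
print(f"D = {directivity:.2e} ({directivity_db:.1f} dB)")
print(f"implied aperture efficiency = {implied_efficiency:.2f}")
```

With the unrounded wavelength the estimate lands slightly below the rounded 78 dB figure, and the implied ratio of effective to physical area is a bit over one third.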

1.3.2 Phasor notation

Phasor notation is a convenient way of describing linear systems which are sinusoidally forced. If we excite a linear

system with a single frequency, all components of the system will respond at that frequency (once the transients have

decayed), and we need only be concerned with the amplitude and phase of the response. The results can be extended

to multiple frequencies and generalized forcing trivially using superposition.

Let us represent signals of the form $A\cos(\omega t + \phi)$ as the real part of the complex quantity $A\exp(j(\omega t + \phi))$. Here, the frequency $\omega$ is presumed known and the amplitude $A$ and phase $\phi$ are real quantities, perhaps to be determined. The introduction of complex notation may seem like an unnecessary complication, but it simplifies things enormously. This is because differentiation and integration become algebraic operations. Henceforth, we regard the real part of the phasor quantity as being physically interesting. The last step in our calculations will usually be to evaluate the real part of some expression.

It is usually convenient to combine $\phi$ and $A$ into a complex constant representing both the amplitude and phase of the signal, i.e. $C = A\exp(j\phi)$. $C$ can be viewed as a vector in the complex plane. When dealing with waves, we expect both the amplitude and phase (the magnitude and angle of $C$) to vary in space. Our task is then usually to solve for $C$ everywhere. There is no explicit time variation; that is contained entirely in the implicit $\exp(j\omega t)$ term, which we often even neglect to write.

Note that the components of vectors too can be expressed using phasor notation. This notation can be confusing

at times but really represents no special complications. Be sure not to mistake the complex plane with the plane or

volume containing the vectors. As usual, the real part of a phasor vector is what is physically interesting.

The rules of arithmetic for complex numbers are similar to those for real numbers, although there are important differences. For example, the norm of a vector in phasor notation is defined as $|\mathbf{E}|^2 = \mathbf{E}\cdot\mathbf{E}^*$. Consider the norm of the electric field associated with a circularly polarized wave traveling in the $z$ direction such that $\mathbf{E} = (\hat{x} \pm j\hat{y})\exp(j(\omega t - kz))$. The length of the electric field vector is clearly unity, and evaluating the Euclidean length of the real part of the electric field indeed produces a value of 1. The phasor norm of the electric field meanwhile gives $\mathbf{E}\cdot\mathbf{E}^* = 2$. In fact, the real part of inner and outer conjugate products in phasor notation (e.g. $\mathbf{A}\cdot\mathbf{B}^*$) gives twice the average value of the given product. This will be important when we discuss average power flow and the time-average Poynting vector.
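The factor of two can be checked numerically. The sketch below is an illustration added here, not the author's code: it evaluates the circularly polarized field at $z = 0$ over one period (with an arbitrary choice of $\omega$), and then compares the time average of a product of two real sinusoids with half the real part of the conjugate product of their phasors. The amplitudes `A` and `B` are arbitrary test values.

```python
import numpy as np

omega = 2.0 * np.pi          # angular frequency; one period spans t in [0, 1)
t = np.linspace(0.0, 1.0, 1000, endpoint=False)

# Phasor amplitude of a circularly polarized wave at z = 0: E = x_hat + j*y_hat
E = np.array([1.0, 1.0j, 0.0])

# Physical field: real part of the phasor times exp(j*omega*t), shape (time, 3)
E_real = np.real(E[None, :] * np.exp(1j * omega * t)[:, None])

euclidean_sq = np.sum(E_real ** 2, axis=1)   # |Re(E)|^2 at each instant
phasor_norm_sq = np.real(np.vdot(E, E))      # E . E* (vdot conjugates its first argument)

# Time-average product of two real signals versus half the conjugate product
A, B = 2.0 * np.exp(0.3j), 1.5 * np.exp(-1.1j)
a_t = np.real(A * np.exp(1j * omega * t))
b_t = np.real(B * np.exp(1j * omega * t))
time_avg = np.mean(a_t * b_t)
half_conj = 0.5 * np.real(A * np.conj(B))

print(euclidean_sq.min(), euclidean_sq.max(), phasor_norm_sq)
print(time_avg, half_conj)
```

The instantaneous squared length stays at 1 while the phasor norm is 2, and the two averages agree to rounding error.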

1.4 Mathematical preliminaries

The remainder of this chapter concerns powerful mathematical concepts that will be helpful for understanding material in subsequent chapters of the text. A brief treatment of Fourier analysis is presented. After that, basic ideas in probability theory are reviewed. Fourier analysis and probability theory culminate in the definition of the power spectral density of a signal, something that plays a central role in radar signal processing.

1.4.1 Fourier analysis

Phasor notation can be viewed as a kind of poor-man's Fourier analysis, a fundamental mathematical tool used in a great many fields of science and engineering, including signal processing. The basis of Fourier analysis is the fact that a periodic function in time, for example, can be expressed as an infinite sum of sinusoidal basis functions:

$$f(t) = \frac{1}{2}a_0 + a_1\cos\omega_0 t + a_2\cos 2\omega_0 t + \cdots + b_1\sin\omega_0 t + b_2\sin 2\omega_0 t + \cdots$$

where $\tau$ is the period of $f(t)$ and $\omega_0 \equiv 2\pi/\tau$. The series converges to $f(t)$ where the function is continuous and converges to the midpoint where it has discontinuous jumps. The Fourier coefficients can be found by utilizing the orthogonality of sine and cosine functions over the period $\tau$:

$$a_n = \frac{\omega_0}{\pi}\int_{-\tau/2}^{\tau/2} f(t)\cos n\omega_0 t\,dt$$

$$b_n = \frac{\omega_0}{\pi}\int_{-\tau/2}^{\tau/2} f(t)\sin n\omega_0 t\,dt$$

where the integrals can be evaluated over any complete period of $f(t)$. Fourier decomposition applies equally well to real and complex functions. With the aid of the Euler theorem, the decomposition above can be shown to be equivalent to:

$$f(t) = \sum_{n=-\infty}^{\infty} c_n e^{jn\omega_0 t} \tag{1.1}$$

$$c_n = \frac{\omega_0}{2\pi}\int_{-\tau/2}^{\tau/2} f(t)\,e^{-jn\omega_0 t}\,dt \tag{1.2}$$

Written this way, it is clear that the Fourier coefficients $c_n$ can be interpreted as the amplitudes of the various frequency components or harmonics that compose the periodic function $f(t)$. The periodicity of the function ultimately permits its representation in terms of discrete frequencies.


It can be verified easily that the sum of the squares of the harmonic amplitudes gives the mean squared average of the function,

$$\langle |f(t)|^2 \rangle = \sum_{n=-\infty}^{\infty} |c_n|^2 \tag{1.3}$$

which is a simplified form of Parseval's theorem. This shows that the power in the function $f(t)$ is the sum of the powers in all the individual harmonics, which are the normal modes of the function. Parseval's theorem is sometimes called the completeness relation because it shows how all the harmonic terms in the Fourier expansion are required in general to completely represent the function. Other forms of Parseval's theorem are discussed below.
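To make (1.2) and (1.3) concrete, the following Python sketch evaluates the coefficients of a unit-amplitude square wave by a Riemann sum and compares a truncated sum of $|c_n|^2$ with the mean square of the function. The square wave, the period, the sample count, and the truncation at $|n| \le 501$ are all choices made for this illustration only.

```python
import numpy as np

tau = 2.0                          # period (arbitrary choice)
omega0 = 2.0 * np.pi / tau
M = 4096                           # samples per period
t = -tau / 2 + tau * np.arange(M) / M
f = np.where(t >= 0.0, 1.0, -1.0)  # square wave of unit amplitude

def c(n):
    # (1.2): c_n = (omega0 / 2 pi) * integral over one period of f(t) exp(-j n omega0 t) dt,
    # approximated by a Riemann sum; note omega0 / (2 pi) = 1 / tau
    return np.sum(f * np.exp(-1j * n * omega0 * t)) * (tau / M) / tau

harmonics = np.arange(-501, 502)
coeffs = np.array([c(n) for n in harmonics])

mean_square = np.mean(np.abs(f) ** 2)       # left side of (1.3)
partial_sum = np.sum(np.abs(coeffs) ** 2)   # right side of (1.3), truncated

print(abs(c(1)), 2.0 / np.pi)
print(mean_square, partial_sum)
```

The fundamental has magnitude $2/\pi$, the even harmonics vanish, and the truncated sum approaches the mean square from below; the slow convergence reflects the discontinuities of the square wave.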

Fourier transforms

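The question immediately arises: is there a transformation analogous to (1.1) and (1.2) that applies to functions that are not periodic? Let us regard a non-periodic function as the limit of a periodic one where the period is taken to infinity. We can write

$$c_n = \frac{\omega_0}{2\pi}\int_{-\tau/2}^{\tau/2} f(u)\,e^{-jn\omega_0 u}\,du$$

and substitute into (1.1), yielding

$$f(t) = \sum_{n=-\infty}^{\infty}\frac{\omega_0}{2\pi}\int_{-\tau/2}^{\tau/2} f(u)\,e^{jn\omega_0(t-u)}\,du = \frac{1}{2\pi}\sum_{n=-\infty}^{\infty} g(\omega_n)\,\Delta\omega$$

$$g(\omega_n) \equiv \int_{-\tau/2}^{\tau/2} f(u)\,e^{j\omega_n(t-u)}\,du$$

where $\omega_n = n\omega_0$ and $\Delta\omega = \omega_{n+1} - \omega_n = \omega_0$ have been defined. Now, in the limit that $\tau \to \infty$, the sum $\sum_{n=-\infty}^{\infty} g(\omega_n)\Delta\omega$ formally goes over to the integral $\int_{-\infty}^{\infty} g(\omega)\,d\omega$, with $\omega$ representing a continuous independent variable. The corresponding expression for $g(\omega)$ just above becomes:

$$g(\omega) = \int_{-\infty}^{\infty} f(u)\,e^{j\omega(t-u)}\,du$$

Substituting into the equation above for $f(t)$ yields:

$$f(t) = \frac{1}{2\pi}\int_{-\infty}^{\infty} e^{j\omega t}\,d\omega\int_{-\infty}^{\infty} f(u)\,e^{-j\omega u}\,du$$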

Finally, if we change variables and define $F(\omega)$ as (analogous to (1.2))

$$F(\omega) = \int_{-\infty}^{\infty} f(t)\,e^{-j\omega t}\,dt \tag{1.4}$$

we are left with (analogous to (1.1))

$$f(t) = \frac{1}{2\pi}\int_{-\infty}^{\infty} F(\omega)\,e^{j\omega t}\,d\omega \tag{1.5}$$

where the continuous function $F(\omega)$ replaces the discrete set of amplitudes $c_n$ in a Fourier series. Together, these two formulas define the Fourier transform and its inverse. Note that the placement of the factor of $2\pi$ is a matter of convention. The factor appears in the forward transform in some texts, in the inverse in others. In some texts, both integral transforms receive a factor of the square root of $2\pi$ in their denominators. What is important is consistency. Similar remarks hold for the sign convention in the exponents for forward and inverse transforms.

Now $f(t)$ and $F(\omega)$ are said to constitute a continuous Fourier transform pair, which relates the time- and frequency-domain representations of the function. Note that, by using the Euler theorem, the forward and inverse transformations can be decomposed into sinusoidal and cosinusoidal parts which can be used to represent antisymmetric and symmetric functions, respectively. Fourier transforms are often easy to calculate, and tables of the more common Fourier transform pairs are widely available. Fourier transforms are very useful for solving ordinary and partial differential equations, since calculus operations (differentiation and integration) transform to algebraic operations. The Fourier transform also exhibits numerous properties that simplify evaluation of the integrals and manipulation of the results, linearity being among them. Some differentiation, scaling, and shifting properties are listed below, where each line contains a Fourier transform pair.

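$$\frac{d}{dt}f(t) \leftrightarrow j\omega F(\omega) \tag{1.6}$$

$$-jt\,f(t) \leftrightarrow \frac{d}{d\omega}F(\omega) \tag{1.7}$$

$$f(t - t_0) \leftrightarrow e^{-j\omega t_0}F(\omega) \tag{1.8}$$

$$e^{j\omega_0 t}f(t) \leftrightarrow F(\omega - \omega_0) \tag{1.9}$$

$$f(at) \leftrightarrow \frac{1}{|a|}F(\omega/a) \tag{1.10}$$

$$f^*(-t) \leftrightarrow F^*(\omega) \tag{1.11}$$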

Convolution theorem

One of the most important properties of the Fourier transform is the way that the convolution operator transforms. The convolution of two functions $f$ and $g$ is defined by

$$f \otimes g \equiv \int_0^t f(t - \tau)\,g(\tau)\,d\tau$$

The limits shown apply to causal functions that vanish for negative arguments; in general, the integral extends over all $\tau$. Convolution appears in a number of signal processing and radar contexts, particularly where filters are concerned. While the integral can sometimes be carried out simply, it is just as often difficult to calculate analytically and computationally. The most expedient means of calculation usually involves Fourier analysis.

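Consider the product of the frequency-domain representations of $f$ and $g$:

$$F(\omega)G(\omega) = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} f(\tau')\,e^{-j\omega\tau'}\,g(\tau)\,e^{-j\omega\tau}\,d\tau'\,d\tau = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} e^{-j\omega(\tau'+\tau)}\,f(\tau')\,g(\tau)\,d\tau'\,d\tau$$

With the change of variables $t = \tau' + \tau$, $dt = d\tau'$, this becomes

$$F(\omega)G(\omega) = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} e^{-j\omega t}\,f(t-\tau)\,g(\tau)\,dt\,d\tau = \int_{-\infty}^{\infty} e^{-j\omega t}\left[\int_{-\infty}^{\infty} f(t-\tau)\,g(\tau)\,d\tau\right]dt$$

The quantity in the square brackets is clearly the convolution of $f(t)$ and $g(t)$. Evidently then, the Fourier transform of the convolution of two functions is the product of the Fourier transforms of the functions. This suggests an easy and efficient means of evaluating a convolution integral. It also suggests another way of interpreting the convolution operation, which translates into a product in the frequency domain.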
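A discrete analogue of the convolution theorem can be sketched in Python with NumPy. The sequences here are random test data, and zero-padding is used so that the circular convolution implied by the DFT reproduces the ordinary (linear) convolution sum; this is an illustration under those assumptions rather than a prescription.

```python
import numpy as np

rng = np.random.default_rng(0)
f = rng.standard_normal(64)
g = rng.standard_normal(48)

# Direct evaluation of the discrete convolution sum
direct = np.convolve(f, g)

# Frequency-domain evaluation: zero-pad both sequences to the full output
# length, multiply the transforms, and transform back
n = len(f) + len(g) - 1
via_fft = np.fft.ifft(np.fft.fft(f, n) * np.fft.fft(g, n)).real

print(np.max(np.abs(direct - via_fft)))
```

For long sequences the transform route is usually much faster, since the FFT scales as $N \log N$ while the direct sum scales as $N^2$.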


Parseval's theorem

The convolution theorem proven above can be used to reformulate Parseval's theorem for continuous functions. First, it is necessary to establish how the complex conjugate operation behaves under Fourier transformation. Let

$$f(t) = \frac{1}{2\pi}\int_{-\infty}^{\infty} F(\omega)\,e^{j\omega t}\,d\omega$$

Taking the complex conjugate of both sides gives

$$f^*(t) = \frac{1}{2\pi}\int_{-\infty}^{\infty} F^*(\omega)\,e^{-j\omega t}\,d\omega$$

Next, replace the variable $t$ with $-t$:

$$f^*(-t) = \frac{1}{2\pi}\int_{-\infty}^{\infty} F^*(\omega)\,e^{j\omega t}\,d\omega$$

which is then the inverse Fourier transform of $F^*(\omega)$.

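Let us use this information to express the product $F^*(\omega)G(\omega)$, borrowing from results obtained earlier.

$$F^*(\omega)G(\omega) = \int_{-\infty}^{\infty} e^{-j\omega t}\left[\int_{-\infty}^{\infty} f^*[-(t-\tau)]\,g(\tau)\,d\tau\right]dt$$

Taking the inverse Fourier transform gives:

$$\frac{1}{2\pi}\int_{-\infty}^{\infty} F^*(\omega)G(\omega)\,e^{j\omega t}\,d\omega = \int_{-\infty}^{\infty} f^*(\tau - t)\,g(\tau)\,d\tau$$

This expression represents a general form of Parseval's theorem. A narrower form is obtained by considering $t = 0$ and taking $f = g$, $F = G$:

$$\frac{1}{2\pi}\int_{-\infty}^{\infty} |F(\omega)|^2\,d\omega = \int_{-\infty}^{\infty} |f(t)|^2\,dt \tag{1.12}$$

where $t$ has replaced $\tau$ as the dummy variable on the right side of the last equation.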

Parseval's theorem is an intuitive statement equating the energy in a signal in its time- and frequency-domain formulations. It is a continuous generalization of (1.3). As $|f(t)|^2$ is the energy density per unit time or power, so $|F(\omega)|^2$ is the energy density per unit frequency or the energy spectral density (ESD). The ESD is a convenient quantity to consider in situations involving filters, since the output ESD is the input ESD times the frequency response function of the filter (modulus squared).

However, (1.12) as written here is problematic in the sense that the integrals as written are not guaranteed to exist. One normally differentiates at this point between so-called energy signals, where the right side of (1.12) is finite and there are no existence issues, and more practical power signals, where the average power over some time interval of interest $T$ is instead finite:

$$P = \frac{1}{T}\int_{-T/2}^{T/2} |f(t)|^2\,dt < \infty$$

Parseval's theorem can be extended to apply to power signals with the definition of the power spectral density (PSD) $S(\omega)$, which is the power density per unit frequency. Let us write an expression for the power in the limiting case of $T \to \infty$, incorporating Parseval's theorem above:

$$\lim_{T\to\infty}\frac{1}{T}\int_{-T/2}^{T/2} |f(t)|^2\,dt = \lim_{T\to\infty}\frac{1}{2\pi T}\int_{-\infty}^{\infty} |F(\omega)|^2\,d\omega = \frac{1}{2\pi}\int_{-\infty}^{\infty} S(\omega)\,d\omega$$

$$S(\omega) \equiv \lim_{T\to\infty}\frac{1}{T}|F(\omega)|^2 \tag{1.13}$$

This is the conventional definition of the PSD, the spectrum of a signal. In practice, an approximation is made where $T$ is finite but long enough to incorporate all of the behavior of interest in the signal. If the signal is stationary, the choice of the particular time interval of analysis is arbitrary.
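A finite-$T$ version of (1.13) can be sketched in Python as follows. This is an illustrative estimate, not the author's procedure: the signal is a single realization of white Gaussian noise, the sample spacing `dt` is arbitrary, and $F(\omega)$ in (1.4) is approximated by `dt` times the DFT of the samples.

```python
import numpy as np

rng = np.random.default_rng(1)
N = 1024
dt = 1.0e-3                          # sample spacing in seconds (arbitrary)
x = rng.standard_normal(N)           # one realization of a noise-like power signal

# Approximate F(omega) in (1.4) by a Riemann sum: F ~ dt * DFT
F = dt * np.fft.fft(x)
T = N * dt
S = np.abs(F) ** 2 / T               # finite-T estimate of the PSD, cf. (1.13)

# Average power computed in the time domain
power_time = np.sum(np.abs(x) ** 2) * dt / T

# (1 / 2 pi) * integral of S d(omega), with frequency spacing d(omega) = 2 pi / T
d_omega = 2.0 * np.pi / T
power_freq = np.sum(S) * d_omega / (2.0 * np.pi)

print(power_time, power_freq)
```

The two power estimates agree to rounding error, which is the discrete counterpart of Parseval's theorem.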

Sampling theorem

Using a Fourier series, we saw in (1.1) how a continuous, periodic function can be expressed in terms of a discrete set of coefficients. If the function is also bandlimited, then those coefficients form a finite set of nonzero coefficients. Only these coefficients need be known to completely specify the function. The question arises, can the function be expressed directly with a finite set of samples? This is tantamount to asking if the integral in (1.2) can be evaluated exactly using discrete mathematics. That the answer to both questions is "yes" is the foundation for the way data are acquired and processed.

Discrete sampling of a signal can be viewed as multiplication with a train of Dirac delta functions separated by an interval $T$. The sampling function in this case is

$$s(t) = T\sum_{n=-\infty}^{\infty}\delta(t - nT) = \sum_{n=-\infty}^{\infty} e^{j2\pi nt/T}$$

where the second form is the equivalent Fourier series representation. Multiplying this by the sampled function $x(t)$ yields the samples $y(t)$:

$$y(t) = \sum_{n=-\infty}^{\infty} x(t)\,e^{j2\pi nt/T}$$

$$Y(\omega) = \sum_{n=-\infty}^{\infty} X\left(\omega - \frac{2\pi n}{T}\right)$$

where the second line this time is the frequency-domain representation (see Figure 1.5). Evidently, the spectrum of the sampled signal $|Y(\omega)|^2$ is an endless succession of replicas of the spectrum of the original signal, spaced by intervals of the sampling frequency $\omega_0 = 2\pi/T$. The replicas can be removed easily by low-pass filtering. None of the original information is lost so long as the replicas do not overlap or alias onto one another. Overlap can be avoided so long as the sampling frequency is as great as or greater than the total bandwidth of the signal, a number bounded by twice the maximum frequency component. This requirement defines the Nyquist sampling frequency $\omega_s = 2|\omega|_{\max}$. In practice, ideal low-pass filtering is impossible to implement, and oversampling at a rate above the Nyquist frequency is usually performed.
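The folding of spectral replicas can be seen in a short Python experiment. The sampling rate, record length, and test frequencies below are arbitrary values chosen for illustration; the helper `peak_frequency` is a hypothetical name introduced here.

```python
import numpy as np

fs = 10.0                      # sampling rate in Hz (arbitrary)
N = 200                        # number of samples, i.e. 20 s of data
t = np.arange(N) / fs

def peak_frequency(f_signal):
    # Frequency of the largest spectral peak of a sampled real sinusoid
    x = np.cos(2.0 * np.pi * f_signal * t)
    spectrum = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(N, d=1.0 / fs)
    return freqs[np.argmax(spectrum)]

ok = peak_frequency(3.0)        # below fs/2: recovered at 3 Hz
aliased = peak_frequency(7.0)   # above fs/2: replica folds down to fs - 7 = 3 Hz

print(ok, aliased)
```

Once sampled at 10 Hz, the 7 Hz tone is indistinguishable from a 3 Hz tone, which is why the sampling rate must exceed twice the highest frequency present in a real signal.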

Figure 1.5: Illustration of the sampling theorem. The spectrum of the sampled signal $Y(\omega)$ is an endless succession of replicas of the original spectrum, each of bandwidth $B$ and spaced by $2\pi/T$. To avoid frequency aliasing, the sampling theorem requires that $B \le 2\pi/T$.

Discrete Fourier Transform

The sampling theorem implies a transformation between a discrete set of samples and a discrete set of Fourier amplitudes. The discrete Fourier transform (DFT) is defined by

$$F_m = \sum_{n=0}^{N-1} f_n\,e^{-j2\pi nm/N}, \quad m = 0, \cdots, N-1$$

$$f_n = \frac{1}{N}\sum_{m=0}^{N-1} F_m\,e^{j2\pi nm/N}, \quad n = 0, \cdots, N-1$$

where the sequences $F_m$ and $f_n$ can be viewed as frequency- and time-domain representations of a periodic, bandlimited sequence with period $N$. The two expressions follow immediately from the Fourier series formalism outlined earlier in this section, given discrete time sampling. Expedient computational means of evaluating the DFT, notably the fast Fourier transform or FFT, have existed for decades and are at the heart of many signal processing and display numerical codes. Most often, $N$ is made to be a power of 2, although this is not strictly necessary.
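The DFT pair can be written out directly in Python and compared with a library FFT. The functions `dft` and `idft` below are illustrative names for a brute-force $O(N^2)$ evaluation; NumPy's `np.fft.fft` uses the same sign and normalization convention as the definitions above, which is what the comparison relies on.

```python
import numpy as np

def dft(f):
    # Direct O(N^2) evaluation of F_m = sum_n f_n exp(-j 2 pi n m / N)
    N = len(f)
    n = np.arange(N)
    m = n.reshape(-1, 1)
    return np.exp(-2j * np.pi * m * n / N) @ f

def idft(F):
    # Inverse: f_n = (1/N) sum_m F_m exp(+j 2 pi n m / N)
    N = len(F)
    m = np.arange(N)
    n = m.reshape(-1, 1)
    return np.exp(2j * np.pi * n * m / N) @ F / N

rng = np.random.default_rng(2)
samples = rng.standard_normal(12) + 1j * rng.standard_normal(12)   # N need not be a power of 2
spectrum = dft(samples)

print(np.max(np.abs(spectrum - np.fft.fft(samples))))
print(np.max(np.abs(idft(spectrum) - samples)))
```

Both differences are at the level of rounding error; the FFT simply reaches the same result with far fewer operations.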

DFTs and FFTs in particular are in widespread use wherever spectral analysis is being performed. Offsetting their simplicity and efficiency is a serious pathology arising from the fact that time series in a great many real-world situations are not periodic. Power spectra computed using periodograms (algorithms for performing DFTs that assume the periodicity of the signal) suffer from artifacts as a result, and these may be severe and misleading. No choice of sample rate or sample length $N$ can completely remedy the problem.

The jump between the first and last sample in the sequential time series will function like a discontinuity upon analysis with a DFT, and artificial ringing will appear in the spectrum as a result. A non-periodic signal can be viewed as being excised from a longer, periodic signal by means of multiplication by a gate function. The Fourier transform of a gate function is a sinc function. Consequently, the DFT spectrum reflects something like the convolution of the true $F(\omega)$ with a sinc function, producing both ringing and broadening. Artificial sidelobes down about 13.5 dB from the main lobe will accompany features in the spectrum, and these may be mistaken for actual frequency components of the signal.

The most prevalent remedy for this so-called "spectral leakage" is windowing, whereby the sampled signal is weighted prior to Fourier analysis in a way that de-emphasizes samples taken near the start and end of the sample interval. This has the effect of attenuating the apparent discontinuity in the signal. It can also be viewed as replacing the gate function mentioned above with another function with lower sidelobes. Reducing the sidelobe level comes at the expense of broadening the main lobe and degrading the frequency resolution. Various tradeoffs have to be weighed when selecting the spectral window. Popular choices include the Hann window, which has a sinusoidal shape, and the Hamming window, which is like the Hann window except with a pedestal.
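The effect of windowing on leakage can be illustrated with a sinusoid that does not complete an integer number of cycles in the record. The Python sketch below is a minimal example with arbitrary parameters (256 samples, 10.5 cycles per record, inspection at bin 60); it uses NumPy's `np.hanning` for the Hann weights.

```python
import numpy as np

N = 256
n = np.arange(N)
x = np.cos(2.0 * np.pi * 10.5 * n / N)   # 10.5 cycles per record: not periodic in N samples

def spectrum_db(samples):
    # Magnitude spectrum in dB relative to its own peak
    mag = np.abs(np.fft.rfft(samples))
    return 20.0 * np.log10(mag / mag.max())

rect_db = spectrum_db(x)                  # no weighting (gate function)
hann_db = spectrum_db(x * np.hanning(N))  # Hann-weighted samples

far_bin = 60                              # a bin well away from the true frequency
print(f"leakage at bin {far_bin}: rectangular {rect_db[far_bin]:.1f} dB, "
      f"Hann {hann_db[far_bin]:.1f} dB")
```

Far from the spectral peak the Hann-weighted spectrum sits well below the unweighted one, at the cost of a wider main lobe around bins 10 and 11.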

1.4.2 Probability theory

Probability is a concept that governs the outcomes of experiments that are indeterministic, i.e., random or unpredictable. Noise in radar systems is an example of a random process and can only be described probabilistically. The unpredictability of noise is a consequence of the complexity of the myriad processes that contribute to it. Radar signals may reflect both random and deterministic processes, depending on the nature of the target, but the sum of signal plus noise is always a random process, and we need probability theory to understand it.

We should consider the operation of a radar as the performance of an experiment with outcomes that cannot be predicted because it is simply impossible to determine all of the conditions under which the experiment is performed. Such an experiment is called a random experiment. Although the outcomes cannot be predicted, they nevertheless exhibit statistical regularity that can be appreciated after the fact. If a large number of experiments are performed under similar conditions, a frequency distribution of all the outcomes can be constructed. Because of the statistical regularity of the experiment, the distribution will converge on a limit as the number of trials increases. We can assign a probability $P(A)$ to an outcome $A$ as the relative frequency of occurrence of that outcome in the limit of an infinite number of trials. For any $A$, $0 \le P(A) \le 1$. This is the frequentist interpretation of statistics. Other interpretations of probability related to the plausibility of events as determined using information theory also exist but are beyond the scope of this text.

Random variables

Since the outcome of a radar experiment is finally a set of numbers, we are interested in random variables that label all the possible outcomes of a random experiment numerically. A random variable is a function that maps the outcomes of a random experiment to numbers. Particular numbers emerging from an experiment are called realizations of the random variables. Random variables come in discrete and continuous forms.

Probabilities are often stated in terms of cumulative distribution functions (CDFs). The CDF $F_x(a)$ of a random variable $x$ is the probability that the random variable $x$ takes on a value less than or equal to $a$, viz.

$$F_x(a) = P(x \le a)$$

$F_x(a)$ is a nondecreasing function of its argument that takes on values between 0 and 1. Another important function is the probability density function (PDF) $f_x(x)$ of the random variable $x$, which is related to the CDF through integration/differentiation:

$$F_x(a) = P(x \le a) = \int_{-\infty}^{a} f_x(x)\,dx$$

$$\frac{dF_x(x)}{dx} = f_x(x)$$

The PDF is the probability density for the variable $x$, so that $f_x(x)\,dx$ is the probability of observing $x$ in the interval $(x, x + dx)$. Since a random variable always has some value, we must have

$$\int_{-\infty}^{\infty} f_x(x)\,dx = 1$$

Note that, for continuous random variables, a PDF of 0 (1) for some outcome does not mean that that outcome is prohibited (guaranteed). Either the PDF or the CDF completely determines the behavior of the random variable, and the two are therefore equivalent.

Random variables that are normal or Gaussian occur frequently in many contexts and have the PDF:

$$f_N(x) = \frac{1}{\sqrt{2\pi}\,\sigma}\,e^{-\frac{1}{2}(x-\mu)^2/\sigma^2}$$

which is parametrized by $\mu$ and $\sigma$. Normal distributions are often denoted by the shorthand $N(\mu, \sigma)$. Another common distribution is the Rayleigh distribution, given by

$$f_r(r) = \begin{cases} \dfrac{r}{\sigma^2}\,e^{-r^2/2\sigma^2}, & r \ge 0 \\ 0, & r < 0 \end{cases}$$

which is parametrized just by $\sigma$. It can be shown that a random variable defined as the length of a 2D Cartesian vector with component lengths given by Gaussian random variables has this distribution. In terms of radar signals, the envelope of quadrature Gaussian noise is Rayleigh distributed. Still another important random variable called $\chi^2$ has the PDF

$$f_{\chi^2}(x) = \frac{1}{2^{\nu/2}\,\Gamma(\nu/2)}\,x^{\frac{1}{2}\nu - 1}\,e^{-x/2}$$

where $\Gamma$ is the Gamma function, $\Gamma(x) = \int_0^\infty \xi^{x-1} e^{-\xi}\,d\xi$, and where the parameter $\nu$ is referred to as the number of degrees of freedom. It can be shown that if the random variable $x_i$ is $N(0, 1)$, then the random variable

$$z = \sum_{i=1}^{n} x_i^2$$

is $\chi^2$ with $n$ degrees of freedom. If $x_i$ refers to voltage samples, for example, then $z$ refers to power estimates based on $n$ voltage samples or realizations.
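Both statements can be checked by simulation. The Python sketch below draws quadrature Gaussian noise and sums of squared unit-variance samples, then compares sample moments with the standard results for the Rayleigh distribution (mean $\sigma\sqrt{\pi/2}$) and the $\chi^2$ distribution (mean $n$, variance $2n$). The values of `sigma`, `n`, and the number of trials are arbitrary, and the seed is fixed only to make the run repeatable.

```python
import numpy as np

rng = np.random.default_rng(3)
sigma = 2.0
trials = 200_000

# Quadrature Gaussian noise: independent in-phase and quadrature components
i_samples = rng.normal(0.0, sigma, trials)
q_samples = rng.normal(0.0, sigma, trials)
envelope = np.hypot(i_samples, q_samples)       # expected to be Rayleigh distributed

rayleigh_mean = sigma * np.sqrt(np.pi / 2.0)    # theoretical mean of a Rayleigh variable

# Sum of n squared N(0, 1) samples: chi-square with n degrees of freedom
n = 8
z = np.sum(rng.standard_normal((trials, n)) ** 2, axis=1)

print(envelope.mean(), rayleigh_mean)
print(z.mean(), z.var())    # theory: mean n, variance 2n
```

The agreement improves as the number of trials grows, in keeping with the frequentist picture described earlier.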


Mean and variance

It is often not the PDF that is of interest but rather just its moments. The expectation or mean of a random variable $x$, denoted $E(x)$ or $\mu_x$, is given by

$$E(x) = \int_{-\infty}^{\infty} x\,f_x(x)\,dx$$

For example, it can readily be shown that the expected value for a normal distribution $N(\mu, \sigma)$ is $E(x) = \mu$. The variance of a random variable, denoted $\mathrm{Var}(x)$ or $\sigma_x^2$, is given by

$$\mathrm{Var}(x) = E[(x - \mu_x)^2] = E(x^2) - \mu_x^2 = \int_{-\infty}^{\infty} (x - \mu_x)^2 f_x(x)\,dx$$

The standard deviation of $x$ meanwhile, which is denoted $\sigma_x$, is the square root of the variance. In the case of the same normal distribution $N(\mu, \sigma)$, the variance is given by $\sigma^2$, and the standard deviation by $\sigma$.

It is important to distinguish between the mean and variance of a random variable and the sample mean and sample variance of the realizations of a random variable. The latter are experimental quantities constructed from a finite number of realizations of the random variable $x$. They will depart from the former due to the finite sample size and perhaps because of estimator biases. The deviations constitute experimental errors, and understanding them is a substantial part of the radar art.

Joint probability distributions

Two random variables $x$ and $y$ may be described by a joint probability density function (JPD) $f(x, y)$ such that

$$P(x \le a \text{ and } y \le b) = \int_{-\infty}^{a}\int_{-\infty}^{b} f(x, y)\,dy\,dx$$

In the event the two random variables are independent, then $f(x, y) = f_x(x) f_y(y)$. More generally, we can define the covariance of $x$ and $y$ by

$$\mathrm{Cov}(x, y) = E[(x - \mu_x)(y - \mu_y)] = E(xy) - \mu_x\mu_y$$

If $x$ and $y$ are independent, their covariance will be 0. It is possible for $x$ and $y$ to be dependent and still have zero covariance. In either case, we say that the random variables are uncorrelated.

The correlation function is defined by

$$\rho(x, y) = \frac{\mathrm{Cov}(x, y)}{\sqrt{\mathrm{Var}(x)\,\mathrm{Var}(y)}}$$

The variance, covariance, and correlation function conform to the following properties (where $a$ is a scalar and $x$ and $y$ are random variables):

1. $\mathrm{Var}(x) \ge 0$

2. $\mathrm{Var}(x + a) = \mathrm{Var}(x)$

3. $\mathrm{Var}(ax) = a^2\,\mathrm{Var}(x)$

4. $\mathrm{Var}(x + y) = \mathrm{Var}(x) + 2\,\mathrm{Cov}(x, y) + \mathrm{Var}(y)$

5. $|\rho(x, y)| \le 1$

These ideas apply not only to different random variables but also to realizations of the same random variable at different times. In this case, we speak of autocovariances and autocorrelations evaluated at some time lag (see below).
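The properties listed above hold for sample moments as well, which makes them easy to check numerically. In the Python sketch below, `y` is constructed (as an assumption for this example) to have a correlation coefficient of 0.6 with `x`; the helper `cov` is an illustrative name for the sample covariance.

```python
import numpy as np

rng = np.random.default_rng(4)
x = rng.standard_normal(50_000)
y = 0.6 * x + 0.8 * rng.standard_normal(50_000)   # correlated with x by construction

def cov(u, v):
    # Sample covariance: E[(u - mean_u)(v - mean_v)] estimated from realizations
    return np.mean((u - u.mean()) * (v - v.mean()))

var_x, var_y, cov_xy = cov(x, x), cov(y, y), cov(x, y)
rho = cov_xy / np.sqrt(var_x * var_y)

lhs = cov(x + y, x + y)                  # Var(x + y)
rhs = var_x + 2.0 * cov_xy + var_y       # property 4

print(rho, lhs, rhs)
```

Property 4 is satisfied to rounding error, while the estimated correlation differs from 0.6 only by the sampling error expected for a finite number of realizations.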


Conditional probability

It is often necessary to determine the probability of an event given that some other event has already occurred. The conditional probability of event $A$ given event $B$ having occurred is

$$P(A|B) = P(A \cap B)/P(B)$$

Since $P(A \cap B) = P(B \cap A)$, the equation could equally well be written with the order of $A$ and $B$ reversed. This leads immediately to Bayes' theorem, which gives a prescription for reversing the order of conditioning:

$$P(B|A) = \frac{P(A|B)\,P(B)}{P(A)}$$

Given conditional probabilities, one can construct conditional PDFs $f_{x|y}(x)$, conditional CDFs $F_{x|y}(a)$, and also their moments.

Multivariate normal distribution

Consider now the joint probability distribution for a column vector $\mathbf{x}$ with $n$ random variable components. If the components have a multivariate normal (MVN) distribution, their joint probability density function is

$$f(\mathbf{x}) = \frac{1}{(2\pi)^{n/2}}\frac{1}{\sqrt{\det(C)}}\,e^{-\frac{1}{2}(\mathbf{x}-\mathbf{u})^t C^{-1}(\mathbf{x}-\mathbf{u})} \tag{1.14}$$

where $\mathbf{u}$ is a column vector composed of the expectations of the random variables $\mathbf{x}$ and $C$ is the covariance matrix for the random variables. This definition assumes that the covariance matrix is nonsingular.

Normally distributed (Gaussian) random variables possess a number of important properties that simplify their mathematical manipulation. One of these is that the mean vector and covariance matrix completely specify the joint probability distribution. This follows from the definition given above. In general, all of the moments and not just the first two are necessary to specify a distribution. Another property is that if $x_1, x_2, \cdots, x_n$ are uncorrelated, they are also independent. Independent random variables are always uncorrelated, but the reverse need not always be true. A third property of jointly Gaussian random variables is that the marginal densities $p_{x_i}(x_i)$ and the conditional densities $p_{x_i x_j}(x_i, x_j | x_k, x_l, \cdots, x_p)$ are also Gaussian.

A fourth property is that linear combinations of jointly Gaussian variables are also jointly Gaussian. As a consequence, the properties of the original variables specify the properties of any other random variable created by a linear operator acting on x. If y = Ax, then

$$E(y) = Au$$

$$\mathrm{Cov}(y) = ACA^t$$

Using these properties, it is straightforward to generate vectors of random variables with arbitrary means and covariances beginning with random variables that are MVN.
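One way this might be done is sketched below. It assumes the starting variables x are independent standard normals (zero mean, identity covariance), so that Cov(y) = AA^t, and it takes A to be the Cholesky factor of the desired covariance. Because E(x) = 0 here, the desired mean is added as a constant offset, which is a step beyond the y = Ax form above. The function names and the target mean and covariance are illustrative choices, not taken from the text.

```python
import random

def cholesky(c):
    """Return lower-triangular A with A A^t = c (c symmetric, positive definite)."""
    n = len(c)
    a = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(a[i][k] * a[j][k] for k in range(j))
            if i == j:
                a[i][j] = (c[i][i] - s) ** 0.5
            else:
                a[i][j] = (c[i][j] - s) / a[j][j]
    return a

def sample_mvn(mean, cov, count, rng):
    """Draw `count` vectors y = A x + mean, with x independent standard normals."""
    a = cholesky(cov)
    n = len(mean)
    samples = []
    for _ in range(count):
        x = [rng.gauss(0.0, 1.0) for _ in range(n)]
        samples.append([mean[i] + sum(a[i][k] * x[k] for k in range(i + 1))
                        for i in range(n)])
    return samples

def sample_mean_and_cov(samples):
    """Estimate the mean vector and covariance matrix from the samples."""
    count, n = len(samples), len(samples[0])
    m = [sum(s[i] for s in samples) / count for i in range(n)]
    c = [[sum((s[i] - m[i]) * (s[j] - m[j]) for s in samples) / count
          for j in range(n)] for i in range(n)]
    return m, c

# Illustrative target statistics (assumed values).
target_mean = [1.0, -2.0]
target_cov = [[4.0, 1.2], [1.2, 1.0]]

ys = sample_mvn(target_mean, target_cov, 20000, random.Random(0))
est_mean, est_cov = sample_mean_and_cov(ys)
print("estimated mean:", est_mean)
print("estimated covariance:", est_cov)
```

The estimated mean and covariance should approach the targets as the number of samples grows. Any other matrix A satisfying AA^t = C would serve equally well; the Cholesky factor is simply a convenient choice.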

Central limit theorem

The central role of MVN random variables in a great many important processes in nature is borne out by the central

limit theorem, which governs random variables that hinge on a large number of individual, independent events. Underlying noise, for example, is a random variable that is the sum of a vast number of other random variables, each associated with some minuscule, random event. Signals can reflect MVN random variables as well, for example signals from radar pulses scattered from innumerable, random fluctuations in the index of refraction of a disturbed air volume.


Let $x_i$, $i = 1, \cdots, n$ be independent, identically distributed random variables with common, finite $\mu$ and $\sigma$. Let

$$z_n = \frac{\sum_{i=1}^{n} x_i - n\mu}{\sqrt{n}\,\sigma}$$

The central limit theorem states that, in the limit of n going to infinity, $z_n$ approaches the MVN distribution. Notice that this result does not depend on the particular PDF of $x_i$. Regardless of the physical mechanism at work and the

statistical distribution of the underlying random variables, the overall random variable will be normally distributed.

This accounts for the widespread appearance of MVN statistics in different applications in science and engineering.
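The statement can be checked numerically with a short simulation. The sketch below forms the standardized sum of n exponentially distributed variables, a strongly skewed distribution with mean 1 and standard deviation 1, and compares a few statistics of the result with those of a unit normal. The choice of the exponential distribution, the values of n and the number of trials, and the function name `standardized_sum` are all assumptions made for illustration.

```python
import math
import random

def standardized_sum(n, mu, sigma, draw):
    """Return (sum of n draws - n*mu) / (sqrt(n)*sigma)."""
    total = sum(draw() for _ in range(n))
    return (total - n * mu) / (math.sqrt(n) * sigma)

rng = random.Random(1)
n, trials = 50, 5000

# Exponential variables with unit rate have mean 1 and standard deviation 1.
z = [standardized_sum(n, 1.0, 1.0, lambda: rng.expovariate(1.0))
     for _ in range(trials)]

z_mean = sum(z) / trials
z_var = sum((v - z_mean) ** 2 for v in z) / trials
# For a unit normal, about 68.3% of the probability lies within |z| < 1.
frac_within_one = sum(abs(v) < 1.0 for v in z) / trials

print(f"mean = {z_mean:.3f}, variance = {z_var:.3f}, "
      f"fraction with |z| < 1 = {frac_within_one:.3f}")
```

Replacing the exponential draw with any other distribution of finite mean and variance (and the matching values of mu and sigma) should give similar statistics for sufficiently large n.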

Rather than proving the central limit theorem, we will just argue its plausibility. Suppose a random variable is the

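sum of two other random variables, z = x + y. We can define a CDF for z in terms of a JDF for x and y as

$$\begin{aligned} F_z(a) = P(z \le a) &= P(x \le \infty,\ y \le a - x) \\ &= \int_{-\infty}^{\infty} \int_{-\infty}^{a-x} f_{xy}(x, y)\,dx\,dy \\ &= \int_{-\infty}^{\infty} dx \int_{-\infty}^{a-x} f_{xy}(x, y)\,dy \end{aligned}$$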

The corresponding PDF for z is found through differentiation of the CDF:

$$f_z(z) = \frac{dF_z(z)}{dz} = \int_{-\infty}^{\infty} f_{xy}(x, z - x)\,dx$$

Now, if x and y are independent, $f_{xy}(x, z - x) = f_x(x) f_y(z - x)$, and

$$f_z(z) = \int_{-\infty}^{\infty} f_x(x)\, f_y(z - x)\,dx$$

which is the convolution of the individual PDFs. This result can be extended to z being the sum of any number of independent random variables, for which the total PDF is the convolution of all the individual PDFs.

Regardless of the shapes of the individual PDFs, repeated convolution tends to produce a net PDF with a Gaussian shape. This can easily be verified by successive convolution of uniform PDFs, for example, which have the form of gate functions. By the third or fourth convolution, it is already difficult to distinguish the result from a bell curve.
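The successive convolution of gate functions can be sketched numerically as follows. The code samples a uniform PDF of unit width on a grid, convolves it with itself repeatedly, and after each step records the largest pointwise difference from a Gaussian with the same mean and variance. The grid spacing, the mismatch measure, and the function names are assumptions made for this illustration.

```python
import math

def convolve(f, g, dx):
    """Discrete approximation to the convolution integral of two sampled PDFs."""
    out = [0.0] * (len(f) + len(g) - 1)
    for i, fi in enumerate(f):
        for j, gj in enumerate(g):
            out[i + j] += fi * gj * dx
    return out

def gaussian_mismatch(pdf, dx):
    """Largest absolute difference between pdf and a Gaussian of equal mean and variance."""
    xs = [m * dx for m in range(len(pdf))]
    mean = sum(x * p for x, p in zip(xs, pdf)) * dx
    var = sum((x - mean) ** 2 * p for x, p in zip(xs, pdf)) * dx
    norm = 1.0 / math.sqrt(2.0 * math.pi * var)
    return max(abs(p - norm * math.exp(-(x - mean) ** 2 / (2.0 * var)))
               for x, p in zip(xs, pdf))

dx = 0.01
gate = [1.0] * 100          # uniform PDF of unit width and unit area

pdf = gate
mismatch = [gaussian_mismatch(pdf, dx)]   # entry 0: no convolution yet
for n_conv in range(1, 5):
    pdf = convolve(pdf, gate, dx)
    mismatch.append(gaussian_mismatch(pdf, dx))

for n_conv, err in enumerate(mismatch):
    print(f"after {n_conv} convolution(s): max mismatch = {err:.4f}")
```

The mismatch for the bare gate function is large, while after four convolutions it amounts to only a few percent of the peak value of the PDF.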

Random processes

A random or stochastic process is an extension of a random variable, the former being the latter but also being a

function of an independent variable, usually time or space. The outcome of a random process is therefore not just a

single value but rather a curve (a waveform). The randomness in a random process refers to the uncertainty regarding

which curve will be realized. The collection of all possible waveforms is called the ensemble, and a member of the

ensemble is a sample waveform.

Consider a random process that is a function of time. For each time, a PDF for the random variable can be

determined from the frequency distribution. The PDF at time t is denoted $f_x(x; t)$, emphasizing that the function can be different at different times. However, the PDF $f_x(x; t)$ does not completely specify the random process, lacking information about how the random variables representing different times are correlated. That information is contained in the autocorrelation function, itself derivable from the JDF. In the case of a random process with zero mean and unity variance, we have

$$\begin{aligned} \rho(t_1, t_2) = E[x(t_1)x(t_2)] &= E(x_1 x_2) \\ &= \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} x_1 x_2\, f_{x_1,x_2}(x_1, x_2)\,dx_1\,dx_2 \end{aligned}$$


(For a complex random process, the autocorrelation function is defined as $E(x_1^* x_2)$.) Consequently, not only the PDF but also the second-order PDF, or JDF, must be known to specify a random process. In fact, the n-th order PDF $f_{x_1,x_2,\cdots,x_n}(x_1, x_2, \cdots, x_n; t_1, t_2, \cdots, t_n)$ must be known to completely specify the random process with n sample times. Fortunately, this near impossibility is avoided when dealing with Gaussian random processes and linear systems, for which it is sufficient to deal with first and second order statistics only. Furthermore, lower-order PDFs are always derivable from a higher-order PDF, e.g.

$$f_{x_1}(x_1) = \int_{-\infty}^{\infty} f_{x_1,x_2}(x_1, x_2)\,dx_2$$

so one need only specify the second-order statistics to completely specify a Gaussian random process.

Among random processes is a class for which the statistics are invariant under a time shift. In other words, there

is no preferred time for these processes, and the statistics remain the same regardless of where the origin of the time

axis is located. Such processes are said to be stationary. True stationarity requires invariance of the n-th order PDF.

In practice, this can be very difficult to ascertain. Stationarity is often asserted not on the basis of the sampled data but instead in terms of the known properties of the generating mechanism.

A less restrictive requirement is satisfied by processes which are wide-sense or weakly stationary. Wide-sense stationarity requires only that the PDF be invariant under temporal translation through second order. For such processes, expectations are constant, and the autocorrelation function is a function of lag only, i.e. $\rho(t_1, t_2) = \rho(t_2 - t_1)$. Even this less restrictive characterization of a random process is an idealization and cannot be met by a process that occurs over a finite span of times. In practice, the term is meant to apply to a process approximately over some finite time span of interest.

Demonstrating stationarity or even wide-sense stationarity may be difficult in practice if the PDF is not known a priori, since one generally does not have access to the ensemble and so cannot perform the ensemble average necessary to compute, for example, the correlation function. However, a special class of stationary random processes called ergodic processes lends itself to experimental interrogation. For these processes, all of the sample waveforms in the ensemble are realized over the course of time, and an ensemble average can therefore be replicated by a time average (sample average):

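$$E(x(t)) \equiv \lim_{T \to \infty} \frac{1}{T} \int_{-T/2}^{T/2} x(t)\,dt$$

$$\rho(\tau) \equiv \lim_{T \to \infty} \frac{1}{T} \int_{-T/2}^{T/2} x(t)\,x(t + \tau)\,dt$$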

An ergodic process obviously cannot depend on time and therefore must be stationary.
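The equivalence of time and ensemble averages can be illustrated with a discrete-time sketch. The example below assumes a first-order autoregressive (AR(1)) Gaussian process, which is not discussed in the text but is stationary with zero mean, unit variance, and autocorrelation a^|lag| when started in its stationary distribution. The time average is taken over one long sample waveform and the ensemble average over many short, independent ones. The parameter values and function names are illustrative.

```python
import math
import random

def ar1_realization(a, length, rng):
    """One sample waveform of a stationary, zero-mean, unit-variance AR(1) process."""
    x = [rng.gauss(0.0, 1.0)]          # start in the stationary distribution
    drive = math.sqrt(1.0 - a * a)     # scales the noise so the variance stays at unity
    for _ in range(length - 1):
        x.append(a * x[-1] + drive * rng.gauss(0.0, 1.0))
    return x

def time_average_autocorr(x, lag):
    """Discrete analog of (1/T) * integral of x(t) x(t + tau) dt."""
    n = len(x) - lag
    return sum(x[k] * x[k + lag] for k in range(n)) / n

rng = random.Random(2)
a, lag = 0.8, 3

# Time averages over a single long sample waveform.
long_run = ar1_realization(a, 100_000, rng)
time_mean = sum(long_run) / len(long_run)
time_rho = time_average_autocorr(long_run, lag)

# Ensemble average over many independent short sample waveforms.
ensemble = [ar1_realization(a, lag + 1, rng) for _ in range(4000)]
ensemble_rho = sum(w[0] * w[lag] for w in ensemble) / len(ensemble)

print(f"time-average mean            = {time_mean:.3f}")
print(f"time-average autocorrelation = {time_rho:.3f}")
print(f"ensemble autocorrelation     = {ensemble_rho:.3f}")
print(f"theoretical a**lag           = {a ** lag:.3f}")
```

Both estimates should converge on the same value, a**lag, as the record length and the number of ensemble members increase.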

Gaussian random processes

The properties of random variables were stated in section 1.5.5. A Gaussian random process is one for which the

random variables $x(t_1), x(t_2), \cdots, x(t_n)$ are jointly Gaussian according to (1.14) for every n and for every set $(t_1, t_2, \cdots, t_n)$. All of the simplifying properties of jointly normal random variables therefore apply to Gaussian