Digital Signal Processing, III Year ECE B: Introduction to DSP

Uploaded by Kavitha Subramaniam

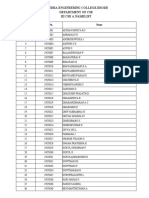

DIGITAL SIGNAL PROCESSING III YEAR ECE B

Introduction to DSP

A signal is any variable that carries information. Examples of the types of signals of interest are speech (telephony, radio, everyday communication), biomedical signals (EEG brain signals), sound and music, video and image, and radar signals (range and bearing).

Digital signal processing (DSP) is concerned with the digital representation of signals and the use of digital processors to analyse, modify, or extract information from signals. Many signals in DSP are derived from analogue signals which have been sampled at regular intervals and converted into digital form. The key advantages of DSP over analogue processing are:

- Guaranteed accuracy (determined by the number of bits used)
- Perfect reproducibility
- No drift in performance due to temperature or age
- Takes advantage of advances in semiconductor technology
- Greater flexibility (can be reprogrammed without modifying hardware)
- Superior performance (linear phase response possible, and filtering algorithms can be made adaptive)
- Sometimes information may already be in digital form

There are however (still) some disadvantages:

- Speed and cost (DSP design and hardware may be expensive, especially with high bandwidth signals)
- Finite word length problems (limited number of bits may cause degradation)

Application areas of DSP are considerable:

- Image processing (pattern recognition, robotic vision, image enhancement, facsimile, satellite weather map, animation)
- Instrumentation and control (spectrum analysis, position and rate control, noise reduction, data compression)
- Speech and audio (speech recognition, speech synthesis, text to speech, digital audio, equalisation)
- Military (secure communication, radar processing, sonar processing, missile guidance)
- Telecommunications (echo cancellation, adaptive equalisation, spread spectrum, video conferencing, data communication)
- Biomedical (patient monitoring, scanners, EEG brain mappers, ECG analysis, X-ray storage and enhancement)

UNIT I

Discrete-time signals

A discrete-time signal is represented as a sequence of numbers:

$$x = \{x[n]\}, \quad -\infty < n < \infty.$$

Here n is an integer, and x[n] is the nth sample in the sequence. Discrete-time signals are often obtained by sampling continuous-time signals. In this case the nth sample of the sequence is equal to the value of the analogue signal x_a(t) at time t = nT:

$$x[n] = x_a(nT), \quad -\infty < n < \infty.$$

The sampling period is then equal to T, and the sampling frequency is f_s = 1/T.

For this reason, although x[n] is strictly the nth number in the sequence, we often refer to it as the nth sample. We also often refer to "the sequence x[n]" when we mean the entire sequence. Discrete-time signals are often depicted graphically as stem plots, with one vertical line per sample. (This can be plotted using the MATLAB function stem.) The value x[n] is undefined for noninteger values of n.

Sequences can be manipulated in several ways. The sum and product of two sequences x[n] and y[n] are defined as the sample-by-sample sum and product respectively. Multiplication of x[n] by a is defined as the multiplication of each sample value by a. A sequence y[n] is a delayed or shifted version of x[n] if

$$y[n] = x[n - n_0]$$

with n_0 an integer.

The unit sample sequence is defined as

$$\delta[n] = \begin{cases} 0, & n \neq 0 \\ 1, & n = 0. \end{cases}$$

This sequence is often referred to as a discrete-time impulse, or just impulse. It plays the same role for discrete-time signals as the Dirac delta function does for continuous-time signals. However, there are no mathematical complications in its definition.

An important aspect of the impulse sequence is that an arbitrary sequence can be represented as a sum of scaled, delayed impulses: each nonzero sample x[k] contributes one impulse of height x[k] located at n = k. In general, any sequence can be expressed as

$$x[n] = \sum_{k=-\infty}^{\infty} x[k]\,\delta[n-k].$$
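As a quick numerical check of this representation, the following Python sketch rebuilds a short sequence from scaled, delayed impulses. The example values and the helper names `delta` and `rebuild` are illustrative assumptions, not taken from the notes.

```python
def delta(n):
    """Unit sample sequence: 1 at n == 0 and 0 for every other integer n."""
    return 1 if n == 0 else 0


def rebuild(x, n):
    """Evaluate the sum over k of x[k] * delta[n - k] for a sequence stored as {index: value}."""
    return sum(value * delta(n - k) for k, value in x.items())


# Hypothetical sequence that is nonzero only at n = -3, 1, 2 and 7.
x = {-3: 2.0, 1: -1.5, 2: 0.5, 7: 4.0}

# Rebuild the sequence sample by sample over a window covering every nonzero index.
rebuilt = {n: rebuild(x, n) for n in range(-5, 10)}
```

Only the term with k = n survives in each sum, which is why the rebuilt values match the stored ones.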

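The unit step sequence is defined as

$$u[n] = \begin{cases} 1, & n \ge 0 \\ 0, & n < 0. \end{cases}$$

The unit step is related to the impulse by

$$u[n] = \sum_{k=-\infty}^{n} \delta[k].$$

Alternatively, this can be expressed as

$$u[n] = \delta[n] + \delta[n-1] + \delta[n-2] + \cdots = \sum_{k=0}^{\infty} \delta[n-k].$$

Conversely, the unit sample sequence can be expressed as the first backward difference of the unit step sequence:

$$\delta[n] = u[n] - u[n-1].$$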
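The two relations between the step and the impulse (a running sum one way, a first backward difference the other) can be checked with a small Python sketch. The function names and the finite lower limit standing in for minus infinity are assumptions made for illustration.

```python
def delta(n):
    """Unit sample sequence."""
    return 1 if n == 0 else 0


def u(n):
    """Unit step sequence."""
    return 1 if n >= 0 else 0


def step_from_impulses(n, lowest=-50):
    """Running sum of delta[k] for k = lowest..n; 'lowest' stands in for minus infinity."""
    return sum(delta(k) for k in range(lowest, n + 1))


def impulse_from_steps(n):
    """First backward difference of the unit step: u[n] - u[n - 1]."""
    return u(n) - u(n - 1)
```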

Exponential sequences are important for analyzing and representing discrete-time systems. The general form is

$$x[n] = A\alpha^{n}.$$

If A and α are real numbers then the sequence is real. If 0 < α < 1 and A is positive, then the sequence values are positive and decrease with increasing n. For −1 < α < 0 the sequence alternates in sign, but decreases in magnitude. For |α| > 1 the sequence grows in magnitude as n increases.

A sinusoidal sequence has the form

$$x[n] = A\cos(\omega_0 n + \phi) \quad \text{for all } n.$$

The frequency of this sinusoid, and of the corresponding complex exponential $A e^{j(\omega_0 n + \phi)}$, is ω0, and is measured in radians per sample. The phase of the signal is φ. The index n is always an integer. This leads to some important differences between the properties of discrete-time and continuous-time complex exponentials. Consider the complex exponential with frequency (ω0 + 2π):

$$x[n] = A e^{j(\omega_0 + 2\pi)n} = A e^{j\omega_0 n} e^{j2\pi n} = A e^{j\omega_0 n}.$$

Thus the sequence for the complex exponential with frequency (ω0 + 2π) is exactly the same as that for the complex exponential with frequency ω0. More generally, complex exponential sequences with frequencies (ω0 + 2πr), where r is an integer, are indistinguishable from one another. Similarly, for sinusoidal sequences

$$x[n] = A\cos[(\omega_0 + 2\pi r)n + \phi] = A\cos(\omega_0 n + \phi).$$
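A short Python sketch makes the point concrete: sampling a complex exponential at frequency ω0 and at ω0 + 2πr gives the same numbers up to floating-point error. The chosen frequency, the value r = 3, and the function name are assumptions for illustration only.

```python
import cmath
import math


def complex_exponential(omega, n_values, amplitude=1.0):
    """Samples of amplitude * exp(j * omega * n) at the given integer indices."""
    return [amplitude * cmath.exp(1j * omega * n) for n in n_values]


omega0 = 0.3 * math.pi          # hypothetical frequency in radians per sample
n_values = range(16)

base = complex_exponential(omega0, n_values)
shifted = complex_exponential(omega0 + 2 * math.pi * 3, n_values)   # r = 3

# Largest sample-by-sample difference between the two sequences.
max_difference = max(abs(a - b) for a, b in zip(base, shifted))
```

The difference is nonzero only because of rounding in the floating-point evaluation; the sequences are mathematically identical because n is an integer.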

In the continuous-time case, sinusoidal and complex exponential signals are always periodic. Discrete-time sequences are periodic (with period N) if x[n] = x[n + N] for all n. Thus the discrete-time sinusoid is only periodic if

$$A\cos(\omega_0 n + \phi) = A\cos(\omega_0 n + \omega_0 N + \phi),$$

which requires that

$$\omega_0 N = 2\pi k \quad \text{for } k \text{ an integer}.$$

The same condition is required for the complex exponential sequence $C e^{j\omega_0 n}$ to be periodic. The two factors just described can be combined to reach the conclusion that there are only N distinguishable frequencies for which the corresponding sequences are periodic with period N. One such set is

$$\omega_k = \frac{2\pi k}{N}, \quad k = 0, 1, \ldots, N-1.$$
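The periodicity condition (ω0 N equal to an integer multiple of 2π) can be explored with the sketch below. It assumes the frequency is supplied as an exact rational number of cycles per sample, ω0/2π = k/N; the function names are hypothetical.

```python
import math
from fractions import Fraction


def fundamental_period(cycles_per_sample):
    """Smallest N > 0 with omega0 * N = 2*pi*k, where omega0 = 2*pi*cycles_per_sample.

    cycles_per_sample must be an exact rational (omega0 / 2*pi). Fraction reduces
    k/N to lowest terms, so the reduced denominator is the fundamental period.
    """
    return Fraction(cycles_per_sample).denominator


def distinguishable_frequencies(N):
    """The set omega_k = 2*pi*k/N for k = 0..N-1."""
    return [2 * math.pi * k / N for k in range(N)]
```

For instance, ω0 = 2π·(2/8) reduces to 2π·(1/4), so the sequence repeats every 4 samples even though it also satisfies the condition with N = 8.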

Discrete-time systems

A discrete-time system is defined as a transformation or mapping operator that maps an input signal x[n] to an output signal y[n]. This can be denoted as

$$y[n] = T\{x[n]\}.$$

Example: Ideal delay

$$y[n] = x[n - n_d].$$

Memoryless systems

A system is memoryless if the output y[n] depends only on x[n] at the same n. For example, y[n] = (x[n])^2 is memoryless, but the ideal delay y[n] = x[n − n_d] is not unless n_d = 0.

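Linear systems

A system is linear if the principle of superposition applies. Thus if y1[n] is the response of the system to the input x1[n], and y2[n] the response to x2[n], then linearity implies

Additivity:

$$T\{x_1[n] + x_2[n]\} = T\{x_1[n]\} + T\{x_2[n]\} = y_1[n] + y_2[n]$$

Scaling:

$$T\{a\,x_1[n]\} = a\,T\{x_1[n]\} = a\,y_1[n]$$

These properties combine to form the general principle of superposition

$$T\{a\,x_1[n] + b\,x_2[n]\} = a\,y_1[n] + b\,y_2[n].$$

In all cases a and b are arbitrary constants. This property generalises to many inputs, so the response of a linear system to $x[n] = \sum_k a_k x_k[n]$ will be $y[n] = \sum_k a_k y_k[n]$.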

Time-invariant systems

A system is time invariant if a time shift or delay of the input sequence causes a corresponding shift in the output sequence. That is, if y[n] is the response to x[n], then y[n − n0] is the response to x[n − n0].

For example, the accumulator system

$$y[n] = \sum_{k=-\infty}^{n} x[k]$$

is time invariant, but the compressor system

$$y[n] = x[Mn]$$

for M a positive integer (which selects every Mth sample from a sequence) is not.
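The difference between the accumulator and the compressor can be seen by comparing "delay then process" with "process then delay". This is a minimal sketch that assumes finite sequences starting at n = 0 and zero elsewhere; the helper names and test input are illustrative.

```python
from itertools import accumulate


def delay(x, n0):
    """Shift a sequence that starts at n = 0 to the right by n0 samples (zeros shifted in)."""
    return [0] * n0 + list(x)


def accumulator(x):
    """y[n] = sum of x[k] for k <= n, for an input that is zero before n = 0."""
    return list(accumulate(x))


def compressor(x, M):
    """y[n] = x[M * n]: keep every Mth sample."""
    return list(x[::M])


x = [1, 2, 3, 4, 5, 6]   # hypothetical input, zero outside n = 0..5
n0 = 1

# Time invariant: delaying the input gives the delayed output.
accumulator_ok = accumulator(delay(x, n0)) == delay(accumulator(x), n0)

# Not time invariant: the two orders of delay and compression disagree.
compressor_ok = compressor(delay(x, n0), 2) == delay(compressor(x, 2), n0)
```

A single counterexample is enough to show that the compressor is not time invariant; the agreement for the accumulator on one input is only a sanity check, not a proof.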

Causality

A system is causal if the output at n depends only on the input at n and earlier inputs. For example, the backward difference system

$$y[n] = x[n] - x[n-1]$$

is causal, but the forward difference system

$$y[n] = x[n+1] - x[n]$$

is not.

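Stability

A system is stable if every bounded input sequence produces a bounded output sequence:

$$|x[n]| \le B_x < \infty \;\Rightarrow\; |y[n]| \le B_y < \infty.$$

The accumulator $y[n] = \sum_{k=-\infty}^{n} x[k]$ is an example of an unstable system, since its response to the unit step u[n] is

$$y[n] = \begin{cases} 0, & n < 0 \\ n + 1, & n \ge 0. \end{cases}$$

This has no finite upper bound.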

Linear time-invariant systems

If the linearity property is combined with the representation of a general sequence as a linear combination of delayed impulses, then it follows that a linear time-invariant (LTI) system can be completely characterized by its impulse response. Suppose h_k[n] is the response of a linear system to the impulse δ[n − k] at n = k. Since

$$y[n] = T\left\{\sum_{k=-\infty}^{\infty} x[k]\,\delta[n-k]\right\},$$

the principle of superposition gives

$$y[n] = \sum_{k=-\infty}^{\infty} x[k]\,T\{\delta[n-k]\} = \sum_{k=-\infty}^{\infty} x[k]\,h_k[n].$$

If the system is additionally time invariant, then the response to δ[n − k] is h[n − k]. The previous equation then becomes

$$y[n] = \sum_{k=-\infty}^{\infty} x[k]\,h[n-k].$$

This expression is called the convolution sum. Therefore, an LTI system has the property that given h[n], we can find y[n] for any input x[n]. Alternatively, y[n] is the convolution of x[n] with h[n], denoted as follows:

$$y[n] = x[n] * h[n].$$
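For finite-length sequences the convolution sum reduces to a double loop. The sketch below assumes both sequences start at n = 0 and are zero elsewhere; the function name `convolve` is an illustrative choice.

```python
def convolve(x, h):
    """Linear convolution y[n] = sum over k of x[k] * h[n - k] for finite sequences starting at n = 0."""
    y = [0] * (len(x) + len(h) - 1)
    for k, xk in enumerate(x):          # each input sample x[k] ...
        for m, hm in enumerate(h):      # ... launches a scaled copy of h delayed by k
            y[k + m] += xk * hm
    return y
```

The outer loop follows the "superimposed scaled, delayed impulse responses" reading of the sum: the sample x[k] adds x[k]·h[n − k] to the output.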

The previous derivation suggests the interpretation that the input sample at n = k, represented by x[k]δ[n − k], is transformed by the system into an output sequence x[k]h[n − k]. For each k, these sequences are superimposed to yield the overall output sequence.

A slightly different interpretation, however, leads to a convenient computational form: the nth value of the output, namely y[n], is obtained by multiplying the input sequence (expressed as a function of k) by the sequence with values h[n − k], and then summing all the values of the products x[k]h[n − k]. The key to this method is in understanding how to form the sequence h[n − k] for all values of n of interest. To this end, note that h[n − k] = h[−(k − n)]. The sequence h[−k] is seen to be equivalent to the sequence h[k] reflected around the origin, and h[n − k] is that reflected sequence shifted by n.

When x[k] and h[n − k] do not overlap for any negative n, as is the case when both x[n] and h[n] are zero for n < 0, the output must be zero there: y[n] = 0, n < 0.

The Discrete Fourier Transform

The discrete-time Fourier transform (DTFT) of a sequence is a continuous function of ω, and repeats with period 2π. In practice we usually want to obtain the Fourier components using digital computation, and can only evaluate them for a discrete set of frequencies. The discrete Fourier transform (DFT) provides a means for achieving this. The DFT is itself a sequence, and it corresponds roughly to samples, equally spaced in frequency, of the Fourier transform of the signal. The discrete Fourier transform of a length N signal x[n], n = 0, 1, ..., N − 1 is given by

$$X[k] = \sum_{n=0}^{N-1} x[n]\,e^{-j(2\pi/N)kn}, \quad k = 0, 1, \ldots, N-1.$$

This is the analysis equation. The corresponding synthesis equation is

$$x[n] = \frac{1}{N}\sum_{k=0}^{N-1} X[k]\,e^{j(2\pi/N)kn}.$$
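A direct evaluation of the DFT and its inverse takes only a few lines. This sketch assumes the usual convention of a negative exponent in the forward transform and a 1/N factor in the inverse; the function names are illustrative.

```python
import cmath


def dft(x):
    """Direct N-point DFT: X[k] = sum over n of x[n] * exp(-j*2*pi*k*n/N)."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / N) for n in range(N))
            for k in range(N)]


def idft(X):
    """Inverse DFT: x[n] = (1/N) * sum over k of X[k] * exp(+j*2*pi*k*n/N)."""
    N = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * n / N) for k in range(N)) / N
            for n in range(N)]
```

Each output value costs N multiplications, so the whole transform costs on the order of N squared operations, which is the cost that fast algorithms reduce.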

An important property of the DFT is that it is cyclic, with period N, both in the discrete-time and discrete-frequency domains. For example, for any integer r,

$$X[k + rN] = \sum_{n=0}^{N-1} x[n]\,e^{-j(2\pi/N)(k+rN)n} = \sum_{n=0}^{N-1} x[n]\,e^{-j(2\pi/N)kn}\,e^{-j2\pi rn} = X[k],$$

since $e^{-j2\pi rn} = 1$. Similarly, it is easy to show that x[n + rN] = x[n], implying periodicity of the synthesis equation. This is important: even though the DFT only depends on samples in the interval 0 to N − 1, it is implicitly assumed that the signals repeat with period N in both the time and frequency domains. To this end, it is sometimes useful to define the periodic extension of the signal x[n] to be

$$\tilde{x}[n] = x[n \bmod N] = x[((n))_N].$$

Here n mod N and ((n))_N are taken to mean n modulo N, which has the value of the remainder after n is divided by N. Alternatively, if n is written in the form n = kN + l for 0 ≤ l < N, then n mod N = ((n))_N = l.

It is sometimes better to reason in terms of these periodic extensions when dealing with the DFT. Specifically, if X[k] is the DFT of x[n], then the inverse DFT of X[k] is $\tilde{x}[n]$. The signals x[n] and $\tilde{x}[n]$ are identical over the interval 0 to N − 1, but may differ outside of this range. Similar statements can be made regarding the transform $\tilde{X}[k]$.

Properties of the DFT

Many of the properties of the DFT are analogous to those of the discrete-time Fourier transform, with the notable exception that all shifts involved must be considered to be circular, or modulo N. The properties are stated for DFT pairs of the form x[n] ↔ X[k].

Linear convolution of two finite-length sequences

Consider a sequence x1[n] with length L points, and x2[n] with length P points. The linear convolution of the sequences,

$$x_3[n] = \sum_{m=-\infty}^{\infty} x_1[m]\,x_2[n-m],$$

is nonzero over at most L + P − 1 points. Therefore L + P − 1 is the maximum length of x3[n] resulting from the linear convolution. The N-point circular convolution of x1[n] and x2[n] is

$$x_1[n] \circledast x_2[n] = \sum_{m=0}^{N-1} x_1[m]\,x_2[((n-m))_N].$$

It is easy to see that the circular convolution product will be equal to the linear convolution product on the interval 0 to N − 1 as long as we choose N ≥ L + P − 1. The process of augmenting a sequence with zeros to make it of a required length is called zero padding.
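The effect of the length condition N ≥ L + P − 1 is easy to see numerically. The sketch below zero-pads both sequences to N points and evaluates the circular sum with modulo-N indexing; function names and the example sequences are assumptions for illustration, and N is assumed to be at least as long as each input.

```python
def linear_convolution(x1, x2):
    """Linear convolution of two finite sequences starting at n = 0."""
    y = [0] * (len(x1) + len(x2) - 1)
    for m, a in enumerate(x1):
        for k, b in enumerate(x2):
            y[m + k] += a * b
    return y


def circular_convolution(x1, x2, N):
    """N-point circular convolution; inputs are zero padded to length N (N >= len of each)."""
    a = list(x1) + [0] * (N - len(x1))
    b = list(x2) + [0] * (N - len(x2))
    return [sum(a[m] * b[(n - m) % N] for m in range(N)) for n in range(N)]


x1 = [1, 2, 3]        # L = 3 (hypothetical values)
x2 = [1, 1, 1, 1]     # P = 4 (hypothetical values)

matches = circular_convolution(x1, x2, 6) == linear_convolution(x1, x2)   # N = L + P - 1
aliased = circular_convolution(x1, x2, 4)                                 # N too small
```

With N = 4 the tail of the linear result wraps around and adds onto the first samples, which is the time-domain aliasing that zero padding avoids.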

Fast Fourier transforms

The widespread application of the DFT to convolution and spectrum analysis is due to the existence of fast algorithms for its implementation. The class of methods is referred to as fast Fourier transforms (FFTs). Consider a direct implementation of an 8-point DFT:

$$X[k] = \sum_{n=0}^{7} x[n]\,W_8^{kn}, \quad k = 0, \ldots, 7, \qquad W_N = e^{-j2\pi/N}.$$

If the factors $W_8^{kn}$ have been calculated in advance (and perhaps stored in a lookup table), then the calculation of X[k] for each value of k requires 8 complex multiplications and 7 complex additions. The 8-point DFT therefore requires 8 × 8 multiplications and 8 × 7 additions. For an N-point DFT these become N^2 and N(N − 1) respectively. If N = 1024, then approximately one million complex multiplications and one million complex additions are required.

The key to reducing the computational complexity lies in the observation that the same values of $x[n]\,W_N^{kn}$ are effectively calculated many times as the computation proceeds, particularly if the transform is long. The conventional decomposition involves decimation-in-time, where at each stage an N-point transform is decomposed into two N/2-point transforms. That is, X[k] can be written as

$$X[k] = \sum_{r=0}^{N/2-1} x[2r]\,W_N^{2rk} + \sum_{r=0}^{N/2-1} x[2r+1]\,W_N^{(2r+1)k} = \sum_{r=0}^{N/2-1} x[2r]\,W_{N/2}^{rk} + W_N^{k}\sum_{r=0}^{N/2-1} x[2r+1]\,W_{N/2}^{rk}.$$

The original N-point DFT can therefore be expressed in terms of two N/2-point DFTs. The N/2-point transforms can again be decomposed, and the process repeated until only 2-point transforms remain. In general this requires log2 N stages of decomposition. Since each stage requires approximately N complex multiplications, the complexity of the resulting algorithm is of the order of N log2 N. The difference between N^2 and N log2 N complex multiplications can become considerable for large values of N. For example, if N = 2048 then N^2/(N log2 N) ≈ 200. There are numerous variations of FFT algorithms, and all exploit the basic redundancy in the computation of the DFT. In almost all cases an off-the-shelf implementation of the FFT will be sufficient; there is seldom any reason to implement an FFT yourself.
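Purely to illustrate the decimation-in-time idea (not as a replacement for a library routine), here is a minimal recursive radix-2 sketch in Python. It assumes the length is a power of two and, for brevity, recurses down to 1-point transforms rather than stopping at 2-point ones; the function names are illustrative.

```python
import cmath


def fft(x):
    """Recursive radix-2 decimation-in-time FFT; len(x) must be a power of two."""
    N = len(x)
    if N == 1:
        return list(x)
    if N % 2:
        raise ValueError("length must be a power of two")
    even = fft(x[0::2])     # N/2-point DFT of the even-indexed samples
    odd = fft(x[1::2])      # N/2-point DFT of the odd-indexed samples
    out = [0j] * N
    for k in range(N // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / N) * odd[k]
        out[k] = even[k] + twiddle
        out[k + N // 2] = even[k] - twiddle   # uses W_N^(k + N/2) = -W_N^k
    return out


def direct_dft(x):
    """Direct O(N^2) DFT used only as a reference for comparison."""
    N = len(x)
    return [sum(x[n] * cmath.exp(-2j * cmath.pi * k * n / N) for n in range(N))
            for k in range(N)]
```

Each level of recursion does about N multiplications and there are log2 N levels, which is where the N log2 N operation count comes from.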

Some forms of digital filters are more appropriate than others when real-world effects are considered. This article looks at the effects of finite word length and suggests that some implementation forms are less susceptible to the errors that finite word length effects introduce.

In articles about digital signal processing (DSP) and digital filter design, one thing I've noticed is that after an in-depth development of the filter design, the implementation is often just given a passing nod. References abound concerning digital filter design, but surprisingly few deal with implementation. The implementation of a digital filter can take many forms. Some forms are more appropriate than others when various real-world effects are considered. This article examines the effects of finite word length. It suggests that certain implementation forms are less susceptible than others to the errors introduced by finite word length effects.

UNIT III

Finite Word Length

Most digital filter design techniques are really discrete time filter design techniques. What's the difference? Discrete time signal processing theory assumes discretization of the time axis only. Digital signal processing is discretization on the time and amplitude axis. The theory for discrete time signal processing is well developed and can be handled with deterministic linear models. Digital signal processing, on the other hand, requires the use of stochastic and nonlinear models. In discrete time signal processing, the amplitude of the signal is assumed to be a continuous value; that is, the amplitude can be any number accurate to infinite precision.

When a digital filter design is moved from theory to implementation, it is typically implemented on a digital computer. Implementation on a computer means quantization in time and amplitude, which is true digital signal processing. Computers implement real values in a finite number of bits. Even floating-point numbers in a computer are implemented with finite precision: a finite number of bits and a finite word length. Floating-point numbers have finite precision, but dynamic scaling afforded by the floating point reduces the effects of finite precision.

Digital filters often need to have real-time performance, which usually requires fixed-point integer arithmetic. With fixed-point implementations there is one word size, typically dictated by the machine architecture. Most modern computers store numbers in two's complement form. Any real number can be represented in two's complement form to infinite precision, as in Equation 1:

$$x = X_m\left(-b_0 + \sum_{i=1}^{\infty} b_i\,2^{-i}\right)$$

where b_i is zero or one and X_m is a scale factor. If the series is truncated to B+1 bits, where b_0 is a sign bit, there is an error between the desired number and the truncated number. The series is truncated by replacing the infinity sign in the summation with B, the number of bits in the fixed-point word. The truncated series is no longer able to represent an arbitrary number; the series will have an error equal to the part of the series discarded. The statistics of the error depend on how the last bit value is determined, either by truncation or rounding.

Coefficient Quantization

The design of a digital filter by whatever method will eventually lead to an equation that can be expressed in the form of Equation 2:

$$H(z) = \frac{\sum_{i=0}^{M} b_i\,z^{-i}}{\sum_{i=0}^{N} a_i\,z^{-i}}$$

with a set of numerator polynomial coefficients b_i, and denominator polynomial coefficients a_i. When the coefficients are stored in the computer, they must be truncated to some finite precision. The coefficients must be quantized to the bit length of the word size used in the digital implementation. This truncation or quantization can lead to problems in the filter implementation. The roots of the numerator polynomial are the zeros of the system and the roots of the denominator polynomial are the poles of the system. When the coefficients are quantized, the effect is to constrain the allowable pole and zero locations in the complex plane. If the coefficients are quantized, the poles and zeros will be forced to lie on a grid of points similar to those in Figure 1. If the grid points do not lie exactly on the desired infinite precision pole and zero locations, then there is an error in the implementation. The greater the number of bits used in the implementation, the finer the grid and the smaller the error.

So what are the implications of forcing the pole and zero locations to quantized positions? If the quantization is coarse enough, the poles can be moved such that the performance of the filter is seriously degraded, possibly even to the point of causing the filter to become unstable. This condition will be demonstrated later.

Rounding Noise

When a signal is sampled or a calculation in the computer is performed, the results must be placed in a register or memory location of fixed bit length. Rounding the value to the required size introduces an error in the sampling or calculation equal to the value of the lost bits, creating a nonlinear effect. Typically, rounding error is modeled as uniformly distributed white noise injected at the point of rounding. This model is linear and allows the noise effects to be analyzed with linear theory, something we can handle. The noise due to rounding is assumed to have a mean value equal to zero and a variance given in Equation 3:

$$\sigma^2 = \frac{\Delta^2}{12}, \qquad \Delta = X_m\,2^{-B}$$

where Δ is the quantization step. For a derivation of this result, see Discrete Time Signal Processing.1 Truncating the value (rounding down) produces slightly different statistics. Multiplying two B-bit variables results in a 2B-bit result. This 2B-bit result must be rounded and stored into a B-bit length storage location. This rounding occurs at every multiplication point.
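The zero-mean noise model for rounding can be checked by simulation. The sketch below assumes a quantization step of Δ = 2^−B (a unit scale factor), draws random values, rounds them to the nearest step, and compares the measured error variance with the commonly used prediction Δ²/12; the function name, seed, and sample count are illustrative assumptions.

```python
import random


def rounding_error_statistics(bits, samples=100_000, seed=0):
    """Return (mean, variance, predicted_variance) of the error from rounding to 2**-bits."""
    rng = random.Random(seed)
    step = 2.0 ** -bits
    errors = []
    for _ in range(samples):
        value = rng.uniform(-1.0, 1.0)
        quantized = round(value / step) * step   # round to the nearest quantization level
        errors.append(quantized - value)
    mean = sum(errors) / samples
    variance = sum((e - mean) ** 2 for e in errors) / samples
    return mean, variance, step ** 2 / 12
```

For inputs that are spread over many quantization levels, the measured variance should land close to the prediction; signals that are constant or very small relative to Δ do not fit the model well.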

Scaling

We don't often think about scaling when using floating-point calculations because the computer scales the values dynamically. Scaling becomes an issue when using fixed-point arithmetic where calculations would cause overflow or underflow. In a filter with multiple stages, or more than a few coefficients, calculations can easily overflow the word length. Scaling is required to prevent overflow and underflow and, if placed strategically, can also help offset some of the effects of quantization.

Signal Flow Graphs

Signal flow graphs, a variation on block diagrams, give a slightly more compact notation. A signal flow graph has nodes and branches. The examples shown here will use a node as a summing junction and a branch as a gain. All inputs into a node are summed, while any signal through a branch is scaled by the gain along the branch. If a branch contains a delay element, it's noted by a z^−1 branch gain. Figure 2 is an example of the basic elements of a signal flow graph. Equation 4 results from the signal flow graph in Figure 2.

Finite Precision Effects in Digital Filters

Causal, linear, shift-invariant discrete time system difference equation:

$$\sum_{i=0}^{N} a_i\,y(k-i) = \sum_{i=0}^{M} b_i\,x(k-i)$$

Z-Transform:

$$Y(z) = H(z)\,X(z), \qquad H(z) = \frac{\sum_{i=0}^{M} b_i\,z^{-i}}{\sum_{i=0}^{N} a_i\,z^{-i}}$$

where H(z) is the Z-Transform transfer function, and h(k) is the unit sample response.

Where:

- $|H(e^{j\omega})|$ is the sinusoidal steady state magnitude frequency response
- $\angle H(e^{j\omega})$ is the sinusoidal steady state phase frequency response
- ω is the normalized frequency in radians

If the input is a sinusoidal signal of frequency ω, then the output is a sinusoidal signal of frequency ω (LINEAR SYSTEM).

If the input sinusoid has an amplitude of one and a phase of zero, then the output is a sinusoid (of the same frequency) with magnitude $|H(e^{j\omega})|$ and phase $\angle H(e^{j\omega})$.

So, by selecting $|H(e^{j\omega})|$ and $\angle H(e^{j\omega})$, H(z) can be determined in terms of the filter order and coefficients (Filter Synthesis).

If the linear, constant coefficient difference equation is implemented directly:

[Figure: magnitude frequency response]

[Figure: magnitude frequency response, pass band only]

However, to implement this discrete time filter, finite precision arithmetic (even if it is floating point) is used.

This implementation is a DIGITAL FILTER.

There are two main effects which occur when finite precision arithmetic is used to implement a DIGITAL FILTER: multiplier coefficient quantization and signal quantization.

1. Multiplier coefficient quantization

The multiplier coefficient must be represented using a finite number of bits. To do this the coefficient value is quantized. For example, a multiplier coefficient might be quantized to a six bit (finite precision) value, so that the value of the filter coefficient which is actually implemented is 52/64 or 0.8125.
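Quantizing a coefficient to a grid of 1/64 can be sketched as follows. The starting value 0.82 is a hypothetical coefficient chosen only because it lands on 52/64; the ideal coefficient used in the original example is not given in the text. The function name is also an assumption.

```python
from fractions import Fraction


def quantize_coefficient(value, fractional_bits):
    """Round a coefficient to the nearest multiple of 2**-fractional_bits.

    Python's round() resolves exact ties to the even integer; real hardware may
    round differently or simply truncate.
    """
    scale = 2 ** fractional_bits
    return Fraction(round(value * scale), scale)


ideal = 0.82                                   # hypothetical infinite-precision coefficient
implemented = quantize_coefficient(ideal, 6)   # six fractional bits, grid spacing 1/64
error = float(implemented) - ideal             # coefficient error introduced by quantization
```

Here `implemented` equals 52/64 (stored in lowest terms as 13/16), so the filter that is actually realized uses 0.8125 in place of the ideal value.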

AS A RESULT, THE TRANSFER FUNCTION CHANGES!

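The magnitude frequency response of the third order direct form filter (with the gain or scaling coefficient removed) is:

[Figure: magnitude frequency response of the third order direct form filter with quantized coefficients]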

2. Signal quantization

The signals in a DIGITAL FILTER must also be represented by finite, quantized binary values. There are two main consequences of this:

- A finite RANGE for signals (i.e. a maximum value)
- Limited RESOLUTION (the smallest value is the least significant bit)

For n-bit two's complement fixed point numbers read as integers, the most negative value is −2^(n−1) and the most positive value is 2^(n−1) − 1.

If two numbers are added (or multiplied by an integer value) then the result can be larger than the most positive number or smaller than the most negative number. When this happens, an overflow has occurred. If two's complement arithmetic is used, then the effect of overflow is to CHANGE the sign of the result, and a severe, large amplitude nonlinearity is introduced.
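The sign change on overflow can be reproduced by wrapping an integer result into the n-bit two's complement range. This sketch treats words as plain integers; the function name `wrap_twos_complement` is an illustrative assumption.

```python
def wrap_twos_complement(value, bits):
    """Reduce an integer to the range of a 'bits'-wide two's complement word, as hardware wraparound does."""
    modulus = 1 << bits
    half = 1 << (bits - 1)
    return (value + half) % modulus - half


# Adding two positive 8-bit values whose true sum (200) exceeds the maximum of 127.
wrapped_sum = wrap_twos_complement(100 + 100, 8)
```

The true sum is positive, but the wrapped result is negative, which is the large-amplitude nonlinearity described above.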

For useful filters, OVERFLOW cannot be allowed. To prevent overflow, the digital hardware must be capable of representing the largest number which can occur. It may be necessary to make the filter internal word length larger than the input/output signal word length or reduce the input signal amplitude in order to accommodate signals inside the DIGITAL FILTER.

Due to the limited resolution of the digital signals used to implement the DIGITAL FILTER, it is not possible to represent the result of all DIVISION operations exactly and thus the signals in the filter must be quantized.

The nonlinear effects due to signal quantization can result in limit cycles: the filter output may oscillate when the input is zero or a constant. In addition, the filter may exhibit dead bands, where it does not respond to small changes in the input signal amplitude. The effects of this signal quantization can be modeled by adding an error signal e(k) at each quantization point, where the error due to quantization (truncation of a two's complement number) satisfies

$$-\Delta < e(k) \le 0,$$

with Δ the value of the least significant bit.

By superposition, one can determine the effect on the filter output due to each quantization source. To determine the internal word length required to prevent overflow and the error at the output of the DIGITAL FILTER due to quantization, find the GAIN from the input to every internal node. Either increase the internal word length so that overflow does not occur or reduce the amplitude of the input signal. Find the GAIN from each quantization point to the output. Since the maximum value of e(k) is known, a bound on the largest error at the output due to signal quantization can be determined using the convolution summation.

Convolution summation (similar to Bounded-Input Bounded-Output stability requirements):

$$y(k) = \sum_{i=0}^{\infty} h(i)\,x(k-i)$$

If

$$|x(k)| \le x_{\max} \quad \text{for all } k,$$

then

$$|y(k)| \le x_{\max} \sum_{i=0}^{\infty} |h(i)|.$$

The sum $\sum_{i} |h(i)|$ is known as the L1 norm of the unit sample response. It is a necessary and sufficient condition that this value be bounded (less than infinity) for the linear system to be Bounded-Input Bounded-Output Stable.

The L1 norm is one measure of the GAIN.
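The bound can be illustrated with a short unit sample response. The sketch computes the sum of absolute values and the output produced by the worst-case bounded input, whose samples all have magnitude x_max and signs chosen to match the response; the response values and function names are hypothetical.

```python
def l1_norm(h):
    """Sum of absolute values of a finite unit sample response."""
    return sum(abs(v) for v in h)


def worst_case_output(h, x_max):
    """Output sample when each contributing input sample is +/- x_max with the sign of the matching h value."""
    return sum(v * (x_max if v >= 0 else -x_max) for v in h)


h = [0.5, -0.25, 0.125]          # hypothetical unit sample response
bound = 1.0 * l1_norm(h)         # largest possible |y(k)| for |x(k)| <= 1
peak = worst_case_output(h, 1.0)
```

The worst-case input reaches the bound exactly, which shows that the bound is tight and not merely conservative.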

Computing the L1 norm for the third order direct form filter:

input node 3, output node 8

L1 norm between (3, 8) (17 points): 1.267824

L1 norm between (3, 4) (15 points): 3.687712

L1 norm between (3, 5) (15 points): 3.685358

L1 norm between (3, 6) (15 points): 3.682329

L1 norm between (3, 7) (13 points): 3.663403

MAXIMUM = 3.687712

L1 norm between (4, 8) (13 points): 1.265776

L1 norm between (4, 8) (13 points): 1.265776

L1 norm between (4, 8) (13 points): 1.265776

L1 norm between (8, 8) (2 points): 1.000000

SUM = 4.797328

An alternate filter structure can be used to implement the same ideal transfer function.

[Figure: third order LDI magnitude response]

[Figure: third order LDI magnitude response, pass band detail]

Note that the same coefficient quantization as for the direct form filter (six bits) does not have the same effect on the transfer function. This is because of the reduced sensitivity of this structure to the coefficients. (A general property of integrator based ladder structures or wave digital filters which have a maximum power transfer characteristic.)

% LDI3 Multipliers:

% s1 = 0.394040030717361

% s2 = 0.659572897901019

% s3 = 0.650345952330870

Note that all coefficient values are less than unity and that only three multiplications are required. There is no gain or scaling coefficient. More adders are required than for the direct form structure.

The L1 norm values for the LDI filter are:

input node 1, output node 9

L1 norm between (1, 9) (13 points): 1.258256

L1 norm between (1, 3) (14 points): 2.323518

L1 norm between (1, 7) (14 points): 0.766841

L1 norm between (1, 6) (14 points): 0.994289

MAXIMUM = 2.323518

L1 norm between (10021, 9) (16 points): 3.286393

L1 norm between (10031, 9) (17 points): 3.822733

L1 norm between (10011, 9) (17 points): 3.233201

SUM = 10.342327

Note that even though the ideal transfer functions are the same, the effects of finite precision arithmetic are different!

To implement the direct form filter, three additions and four multiplications are required. Note that the placement of the gain or scaling coefficient will have a significant effect on the word length or the error at the output due to quantization.

Of course, a finite-duration impulse response (FIR) filter could be used. It will still have an error at the output due to signal quantization, but this error is bounded by the number of multiplications. An FIR filter cannot be unstable for bounded inputs and coefficients, and piecewise linear phase is possible by using symmetric or anti-symmetric coefficients. But, as a rough rule, an FIR filter order of 100 would be required to build a filter with the same selectivity as a fifth order recursive (Infinite Duration Impulse Response, IIR) filter.

Effects of finite word length

Quantization and multiplication errors

Multiplication of two M-bit words will yield a 2M-bit product, which is truncated or rounded to an M-bit word.

Suppose that the 2M-bit number represents an exact value. Then:

Exact value: x' (2M bits); digitized value: x (M bits); error: e = x − x'

Truncation

x is represented by (M − 1) bits, the remaining least significant bits of x' being discarded.

Quantization errors

Quantization is a nonlinearity which, when introduced into a control loop, can lead to:

- Steady state error
- Limit cycles

Stable limit cycles generally occur in control systems with lightly damped poles. Detailed nonlinear analysis or simulation may be required to quantify their effect. Methods of reducing the effects are:

- Larger word sizes
- Cascade or parallel implementations
- Slower sample rates

Integrator Offset

Consider the approximate integral term:

Practical features for digital controllers

Scaling

All microprocessors work with finite length words: 8, 16, 32 or 64 bits. The values of all input, output and intermediate variables must lie within the range of the chosen word length. This is done by appropriate scaling of the variables. The goal of scaling is to ensure that neither underflows nor overflows occur during arithmetic processing.

Range-checking

Check that the output to the actuator is within its capability and saturate the output value if it is not. It is often the case that the physical causes of saturation are variable with temperature, aging and operating conditions.

Roll-over

Overflow into the sign bit in output data may cause a DAC to switch from a high positive value to a high negative value: this can have very serious consequences for the actuator and plant.
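Range-checking before writing to the converter is one way to avoid roll-over: the value is clamped to the representable range instead of being allowed to wrap. The sketch below assumes a hypothetical 12-bit two's complement DAC input; the function name and word size are illustrative.

```python
def saturate(value, bits):
    """Clamp an integer to the range of a 'bits'-wide two's complement word."""
    lowest = -(1 << (bits - 1))
    highest = (1 << (bits - 1)) - 1
    return max(lowest, min(highest, value))


# A controller output slightly above the hypothetical 12-bit maximum of 2047.
dac_code = saturate(2100, 12)
```

The clamped value stays at the positive limit, whereas an unchecked 12-bit write of the same number would wrap to a large negative code.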

Scaling for fixed point arithmetic

Scaling can be implemented by shifting binary values left or right to preserve satisfactory dynamic range and signal to quantization noise ratio. Scale so that m is the smallest positive integer that satisfies the condition

UNIT II

Filter design

1 Design considerations: a framework

The design of a digital filter involves five steps:

- Specification: The characteristics of the filter often have to be specified in the frequency domain. For example, for frequency selective filters (low pass, high pass, band pass, etc.) the specification usually involves tolerance limits as shown above.
- Coefficient calculation: Approximation methods have to be used to calculate the values h[k] for an FIR implementation, or a_k, b_k for an IIR implementation. Equivalently, this involves finding a filter which has H(z) satisfying the requirements.
- Realization: This involves converting H(z) into a suitable filter structure. Block or flow diagrams are often used to depict filter structures, and show the computational procedure for implementing the digital filter.
- Analysis of finite word length effects: In practice one should check that the quantization used in the implementation does not degrade the performance of the filter to a point where it is unusable.
- Implementation: The filter is implemented in software or hardware. The criteria for selecting the implementation method involve issues such as real-time performance, complexity, processing requirements, and availability of equipment.

Finite impulse response (FIR) filter design

An FIR filter is characterized by the equations

$$y[n] = \sum_{k=0}^{N-1} h[k]\,x[n-k], \qquad H(z) = \sum_{k=0}^{N-1} h[k]\,z^{-k}.$$

The following are useful properties of FIR filters:

- They are always stable: the system function contains no poles (apart from those at z = 0). This is particularly useful for adaptive filters.
- They can have an exactly linear phase response. The result is no frequency dispersion, which is good for pulse and data transmission.
- Finite length register effects are simpler to analyse and of less consequence than for IIR filters.
- They are very simple to implement, and all DSP processors have architectures that are suited to FIR filtering.

The center of symmetry is indicated by the dotted line. The process of linear-phase filter design involves choosing the a[n] values to obtain a filter with a desired frequency response. This is not always possible, however: the frequency response for a type II filter, for example, has the property that it is always zero for ω = π, and is therefore not appropriate for a high pass filter. Similarly, filters of type 3 and 4 introduce a 90° phase shift, and have a frequency response that is always zero at ω = 0, which makes them unsuitable as low pass filters. Additionally, the type 3 response is always zero at ω = π, making it unsuitable as a high pass filter. The type I filter is the most versatile of the four.
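The zeros that each symmetry class forces at ω = 0 and ω = π can be checked numerically. The sketch below is only an illustration: the coefficient values are hypothetical, and `freq_response` is a helper name introduced here, not something defined in these notes. It evaluates H(e^{jω}) directly from the impulse response.

```python
import cmath
import math

def freq_response(h, omega):
    """Evaluate H(e^{j*omega}) = sum_n h[n] * e^{-j*omega*n} for an FIR filter."""
    return sum(c * cmath.exp(-1j * omega * n) for n, c in enumerate(h))

# Hypothetical coefficients, chosen only to show the four symmetry classes.
type1 = [1.0, 2.0, 3.0, 2.0, 1.0]     # even symmetry, odd length
type2 = [1.0, 2.0, 2.0, 1.0]          # even symmetry, even length
type3 = [1.0, 2.0, 0.0, -2.0, -1.0]   # odd symmetry, odd length
type4 = [1.0, 2.0, -2.0, -1.0]        # odd symmetry, even length

for name, h in [("type 1", type1), ("type 2", type2),
                ("type 3", type3), ("type 4", type4)]:
    at_zero = abs(freq_response(h, 0.0))
    at_pi = abs(freq_response(h, math.pi))
    print(f"{name}: |H| at 0 = {at_zero:.3f}, |H| at pi = {at_pi:.3f}")
```

Only the type 1 example is nonzero at both band edges, which is why that class suits low pass and high pass designs alike.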

Linear phase filters can be thought of in a different way. Recall that a linear phase characteristic simply corresponds to a time shift or delay. Consider now a real FIR filter with an impulse response that satisfies the even symmetry condition h[n] = h[-n]. Its frequency response H(e^{jω}) is then purely real.

Increasing the length N of h[n] reduces the main lobe width and hence the transition width of the overall response. The side lobes of W(e^{jω}) affect the pass band and stop band tolerance of H(e^{jω}). This can be controlled by changing the shape of the window. Changing N does not affect the side lobe behavior. Some commonly used windows for filter design are

All windows trade off a reduction in side lobe level against an increase in main lobe width. This is demonstrated below in a plot of the frequency response of each of the windows.

Some important window characteristics are compared in the following

The Kaiser window has a number of parameters that can be used to explicitly tune the characteristics. In practice, the window shape is chosen first based on pass band and stop band tolerance requirements. The window size is then determined based on transition width requirements. To determine h_d[n] from H_d(e^{jω}) one can sample H_d(e^{jω}) closely and use a large inverse DFT.
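The window method described above can be sketched as follows. This is a minimal illustration under stated assumptions: the ideal low pass response is written in closed form rather than obtained from a large inverse DFT, the Hamming window is just one possible choice, and the function names, tap count and cutoff are hypothetical.

```python
import cmath
import math

def ideal_lowpass(n, center, cutoff):
    """Sample of the ideal low pass impulse response, delayed by `center`."""
    m = n - center
    if m == 0:
        return cutoff / math.pi
    return math.sin(cutoff * m) / (math.pi * m)

def windowed_lowpass(length, cutoff):
    """Truncate the ideal response to `length` taps and taper it with a Hamming window."""
    center = (length - 1) / 2
    taps = []
    for n in range(length):
        w = 0.54 - 0.46 * math.cos(2 * math.pi * n / (length - 1))
        taps.append(w * ideal_lowpass(n, center, cutoff))
    return taps

def magnitude(h, omega):
    """Magnitude of the frequency response of the FIR filter h at frequency omega."""
    return abs(sum(c * cmath.exp(-1j * omega * n) for n, c in enumerate(h)))

h = windowed_lowpass(length=31, cutoff=0.4 * math.pi)
print("gain at DC:", magnitude(h, 0.0))
print("gain at 0.8*pi:", magnitude(h, 0.8 * math.pi))
```

Increasing `length` narrows the transition band, while swapping the window formula changes the side lobe level, in line with the trade-off described in the text.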

Frequency sampling method for FIR filter design

In this design method, the desired frequency response H_d(e^{jω}) is sampled at equally-spaced points, and the result is inverse discrete Fourier transformed. Specifically, letting

The resulting filter will have a frequency response that is exactly the same as the original response at the sampled frequencies. Note that it is also necessary to specify the phase of the desired response H_d(e^{jω}), and it is usually chosen to be a linear function of frequency to ensure a linear phase filter. Additionally, if a filter with real-valued coefficients is required, then additional constraints have to be enforced. The actual frequency response H(e^{jω}) of the filter h[n] still has to be determined. The z-transform of the impulse response is

This expression can be used to find the actual frequency response of the filter obtained, which can be compared with the desired response. The method described only guarantees correct frequency response values at the points that were sampled. This sometimes leads to excessive ripple at intermediate points:
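A small numerical sketch of the frequency sampling procedure is given below. It assumes a linear phase with delay (N-1)/2 and conjugate-symmetric samples (one way of meeting the real-coefficient constraint mentioned above); the function names, the 15-tap length and the brick-wall target are hypothetical choices made for illustration.

```python
import cmath
import math

def frequency_sampling_design(desired_magnitude, length):
    """Sample a desired magnitude at `length` equally spaced frequencies,
    attach a linear phase, and inverse-DFT the samples to obtain h[n]."""
    delay = (length - 1) / 2
    samples = []
    for k in range(length):
        # Map bin k to a signed frequency so the samples are conjugate-symmetric,
        # which keeps the resulting coefficients real.
        omega = 2 * math.pi * k / length
        if omega > math.pi:
            omega -= 2 * math.pi
        samples.append(desired_magnitude(abs(omega)) * cmath.exp(-1j * omega * delay))
    h = []
    for n in range(length):
        acc = sum(s * cmath.exp(2j * math.pi * k * n / length)
                  for k, s in enumerate(samples))
        h.append((acc / length).real)
    return h, samples

def actual_response(h, omega):
    """Frequency response of the designed filter at an arbitrary frequency."""
    return sum(c * cmath.exp(-1j * omega * n) for n, c in enumerate(h))

def lowpass(omega):
    """Hypothetical target: unity gain up to pi/2, zero above."""
    return 1.0 if omega <= 0.5 * math.pi else 0.0

N = 15
h, samples = frequency_sampling_design(lowpass, N)
on_grid = abs(actual_response(h, 2 * math.pi * 5 / N))      # a sampled stop band point
off_grid = abs(actual_response(h, 2 * math.pi * 5.5 / N))   # halfway to the next sample
print("on a sample point:", on_grid)
print("between sample points:", off_grid)
```

The response matches the target at every sampled frequency, but nothing constrains it in between, which is where the ripple appears.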

Infinite impulse response (IIR) filter design

An IIR filter has nonzero values of the impulse response for all values of n, even as n → ∞. To implement such a filter using an FIR structure therefore requires an infinite number of calculations. However, in many cases IIR filters can be realized using linear constant-coefficient difference equations (LCCDEs) and computed recursively.

Example:

A filter with the infinite impulse response h[n] = (1/2)^n u[n] has z-transform

$$H(z) = \frac{Y(z)}{X(z)} = \frac{1}{1 - \tfrac{1}{2} z^{-1}}$$

Therefore, y[n] = (1/2) y[n - 1] + x[n], and y[n] is easy to calculate. IIR filter structures can therefore be far more computationally efficient than FIR filters, particularly for long impulse responses. FIR filters are stable for h[n] bounded, and can be made to have a linear phase response. IIR filters, on the other hand, are stable if the poles are inside the unit circle, and have a phase response that is difficult to specify. The general approach taken is to specify the magnitude response, and regard the phase as acceptable. This is a disadvantage of IIR filters. IIR filter design is discussed in most DSP texts.
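The recursion in the example can be sketched in a few lines. The function names are introduced here for illustration only; `fir_truncated` is a hypothetical comparison that approximates the same filter by direct convolution with a truncated copy of h[n].

```python
def iir_first_order(x):
    """Compute y[n] = 0.5 * y[n-1] + x[n] recursively: one multiply-add per sample."""
    y = []
    previous = 0.0
    for sample in x:
        previous = 0.5 * previous + sample
        y.append(previous)
    return y

def fir_truncated(x, taps):
    """Approximate the same filter by convolving with h[n] = (1/2)^n for n < taps."""
    h = [0.5 ** n for n in range(taps)]
    return [sum(h[k] * x[n - k] for k in range(min(taps, n + 1)))
            for n in range(len(x))]

impulse = [1.0] + [0.0] * 7
print(iir_first_order(impulse))    # successive powers of 1/2
print(fir_truncated(impulse, 8))   # same values, but `taps` multiplies per output
```

The recursive form needs one multiplication per output sample regardless of how long the impulse response is, whereas the direct form needs one per retained tap.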

UNIT V

DSP Processor- Introduction

DSP processors are microprocessors designed to perform digital signal processing: the mathematical manipulation of digitally represented signals. Digital signal processing is one of the core technologies in rapidly growing application areas such as wireless communications, audio and video processing, and industrial control. Along with the rising popularity of DSP applications, the variety of DSP-capable processors has expanded greatly since the introduction of the first commercially successful DSP chips in the early 1980s. Market research firm Forward Concepts projects that sales of DSP processors will total U.S. $6.2 billion in 2000, a growth of 40 percent over 1999. With semiconductor manufacturers vying for bigger shares of this booming market, designers' choices will broaden even further in the next few years. Today's DSP processors (or "DSPs") are sophisticated devices with impressive capabilities. In this paper, we introduce the features common to modern commercial DSP processors, explain some of the important differences among these devices, and focus on features that a system designer should examine to find the processor that best fits his or her application.

What is a DSP Processor?

Most DSP processors share some common basic features designed to support high-performance, repetitive, numerically intensive tasks. The most often cited of these features is the ability to perform one or more multiply-accumulate operations (often called "MACs") in a single instruction cycle. The multiply-accumulate operation is useful in DSP algorithms that involve computing a vector dot product, such as digital filters, correlation, and Fourier transforms. To achieve a single-cycle MAC, DSP processors integrate multiply-accumulate hardware into the main data path of the processor, as shown in Figure 1. Some recent DSP processors provide two or more multiply-accumulate units, allowing multiply-accumulate operations to be performed in parallel. In addition, to allow a series of multiply-accumulate operations to proceed without the possibility of arithmetic overflow (the generation of numbers greater than the maximum value the processor's accumulator can hold), DSP processors generally provide extra "guard" bits in the accumulator. For example, the Motorola DSP processor family examined in Figure 1 offers eight guard bits.

A second feature shared by DSP processors is the ability to complete several accesses to memory in a single instruction cycle. This allows the processor to fetch an instruction while simultaneously fetching operands and/or storing the result of a previous instruction to memory. For example, in calculating the vector dot product for an FIR filter, most DSP processors are able to perform a MAC while simultaneously loading the data sample and coefficient for the next MAC. Such single-cycle multiple memory accesses are often subject to many restrictions. Typically, all but one of the memory locations accessed must reside on-chip, and multiple memory accesses can only take place with certain instructions.
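The role of guard bits in a multiply-accumulate loop can be illustrated with a small simulation. This is a sketch under assumptions: the 16-bit operands and the 32-bit and 40-bit accumulator widths are hypothetical and are not the word sizes of the Motorola family mentioned above, and `mac_dot_product` is a name introduced here.

```python
def mac_dot_product(samples, coeffs, acc_bits):
    """Accumulate 16-bit x 16-bit products in a two's-complement accumulator
    that is `acc_bits` wide, wrapping on overflow as fixed-width hardware would."""
    mask = (1 << acc_bits) - 1
    sign = 1 << (acc_bits - 1)
    acc = 0
    for x, c in zip(samples, coeffs):
        acc = (acc + x * c) & mask      # one multiply-accumulate per pair
    return acc - (1 << acc_bits) if acc & sign else acc

# Hypothetical worst case: eight full-scale products.
samples = [32767] * 8
coeffs = [32767] * 8
exact = sum(x * c for x, c in zip(samples, coeffs))

print(mac_dot_product(samples, coeffs, 32) == exact)   # False: 32 bits overflow
print(mac_dot_product(samples, coeffs, 40) == exact)   # True: 8 guard bits absorb the growth
```

Each extra guard bit doubles the number of full-scale products that can be summed before the accumulator wraps.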

To support simultaneous access of multiple memory locations, DSP processors provide multiple on-chip buses, multi-ported on-chip memories, and in some cases multiple independent memory banks.

A third feature often used to speed arithmetic processing on DSP processors is one or more dedicated address generation units. Once the appropriate addressing registers have been configured, the address generation unit operates in the background (i.e., without using the main data path of the processor), forming the addresses required for operand accesses in parallel with the execution of arithmetic instructions. In contrast, general-purpose processors often require extra cycles to generate the addresses needed to load operands. DSP processor address generation units typically support a selection of addressing modes tailored to DSP applications. The most common of these is register-indirect addressing with post-increment, which is used in situations where a repetitive computation is performed on data stored sequentially in memory. Modulo addressing is often supported, to simplify the use of circular buffers. Some processors also support bit-reversed addressing, which increases the speed of certain fast Fourier transform (FFT) algorithms.

Because many DSP algorithms involve performing repetitive computations, most DSP processors provide special support for efficient looping. Often, a special loop or repeat instruction is provided, which allows the programmer to implement a for-next loop without expending any instruction cycles for updating and testing the loop counter or branching back to the top of the loop.

Finally, to allow low-cost, high-performance input and output, most DSP processors incorporate one or more serial or parallel I/O interfaces, and specialized I/O handling mechanisms such as low-overhead interrupts and direct memory access (DMA) to allow data transfers to proceed with little or no intervention from the rest of the processor.

The rising popularity of DSP functions such as speech coding and audio processing has led designers to consider implementing DSP on general-purpose processors such as desktop CPUs and microcontrollers. Nearly all general-purpose processor manufacturers have responded by adding signal processing capabilities to their chips. Examples include the MMX and SSE instruction set extensions to the Intel Pentium line, and the extensive DSP-oriented retrofit of Hitachi's SH-2 microcontroller to form the SH-DSP.

In some cases, system designers may prefer to use a general-purpose processor rather than a DSP processor. Although general-purpose processor architectures often require several instructions to perform operations that can be performed with just one DSP processor instruction, some general-purpose processors run at extremely fast clock speeds. If the designer needs to perform non-DSP processing, then using a general-purpose processor for both DSP and non-DSP processing could reduce the system parts count and lower costs versus using a separate DSP processor and general-purpose microprocessor. Furthermore, some popular general-purpose processors feature a tremendous selection of application development tools.

On the other hand, because general-purpose processor architectures generally lack features that simplify DSP programming, software development is sometimes more tedious than on DSP processors and can result in awkward code that's difficult to maintain. Moreover, if general-purpose processors are used only for signal processing, they are rarely cost-effective compared to DSP chips designed specifically for the task. Thus, at least in the short run, we believe that system designers will continue to use traditional DSP processors for the majority of DSP-intensive applications. We focus on DSP processors in this paper.
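Two of the addressing modes named earlier in this section, modulo addressing and bit-reversed addressing, are easy to mimic in software. The sketch below only shows the index arithmetic that an address generation unit performs in hardware; the class and function names are hypothetical.

```python
class CircularBuffer:
    """Delay line whose write index wraps with modulo arithmetic."""

    def __init__(self, size):
        self.data = [0.0] * size
        self.index = 0

    def push(self, value):
        self.data[self.index] = value
        self.index = (self.index + 1) % len(self.data)   # post-increment, modulo size

    def newest_first(self):
        size = len(self.data)
        return [self.data[(self.index - 1 - k) % size] for k in range(size)]

def bit_reverse(value, bits):
    """Reverse the lowest `bits` bits of `value`, as used to reorder FFT data."""
    result = 0
    for _ in range(bits):
        result = (result << 1) | (value & 1)
        value >>= 1
    return result

buf = CircularBuffer(4)
for sample in [1.0, 2.0, 3.0, 4.0, 5.0]:
    buf.push(sample)
print(buf.newest_first())                       # [5.0, 4.0, 3.0, 2.0]
print([bit_reverse(i, 3) for i in range(8)])    # [0, 4, 2, 6, 1, 5, 3, 7]
```

On a general-purpose processor the modulo and bit-reversal steps cost extra instructions per access; a DSP address generation unit performs them alongside the arithmetic.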

Applications

DSP processors find use in an extremely diverse array of applications, from radar systems to consumer electronics. Naturally, no one processor can meet the needs of all or even most applications. Therefore, the first task for the designer selecting a DSP processor is to weigh the relative importance of performance, cost, integration, ease of development, power consumption, and other factors for the application at hand. Here we'll briefly touch on the needs of just a few classes of DSP applications.

In terms of dollar volume, the biggest applications for digital signal processors are inexpensive, high-volume embedded systems, such as cellular telephones, disk drives (where DSPs are used for servo control), and portable digital audio players. In these applications, cost and integration are paramount. For portable, battery-powered products, power consumption is also critical. Ease of development is usually less important; even though these applications typically involve the development of custom software to run on the DSP and custom hardware surrounding the DSP, the huge manufacturing volumes justify expending extra development effort.

A second important class of applications involves processing large volumes of data with complex algorithms for specialized needs. Examples include sonar and seismic exploration, where production volumes are lower, algorithms more demanding, and product designs larger and more complex. As a result, designers favor processors with maximum performance, good ease of use, and support for multiprocessor configurations. In some cases, rather than designing their own hardware and software from scratch, designers assemble such systems using off-the-shelf development boards, and ease their software development tasks by using existing function libraries as the basis of their application software.

Choosing the Right DSP Processor

As illustrated in the preceding section, the right DSP processor for a job depends heavily on the application. One processor may perform well for some applications, but be a poor choice for others. With this in mind, one can consider a number of features that vary from one DSP to another in selecting a processor. These features are discussed below.

Arithmetic Format

One of the most fundamental characteristics of a programmable digital signal processor is the type of native arithmetic used in the processor. Most DSPs use fixed-point arithmetic, where numbers are represented as integers or as fractions in a fixed range (usually -1.0 to +1.0). Other processors use floating-point arithmetic, where values are represented by a mantissa and an exponent as mantissa x 2^exponent. The mantissa is generally a fraction in the range -1.0 to +1.0, while the exponent is an integer that represents the number of places that the binary point (analogous to the decimal point in a base 10 number) must be shifted left or right in order to obtain the value represented.

Floating-point arithmetic is a more flexible and general mechanism than fixed-point. With floating-point, system designers have access to wider dynamic range (the ratio between the largest and smallest numbers that can be represented). As a result, floating-point DSP processors are generally easier to program than their fixed-point cousins, but usually are also more expensive and have higher power consumption. The increased cost and power consumption result from the more complex circuitry required within the floating-point processor, which implies a larger silicon die. The ease-of-use advantage of floating-point processors is due to the fact that in many cases the programmer doesn't have to be concerned about dynamic range and precision.
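The difference between the two formats can be seen by quantizing a few values. The sketch below assumes a 16-bit fractional format (commonly called Q15) as the fixed-point example, and uses Python's `math.frexp` to expose the mantissa and exponent of a floating-point value; `to_q15` and `from_q15` are hypothetical helper names.

```python
import math

def to_q15(value):
    """Quantize a fraction in [-1.0, 1.0) to a 16-bit fixed-point integer (Q15)."""
    scaled = int(round(value * 32768))
    return max(-32768, min(32767, scaled))   # saturate at the ends of the range

def from_q15(word):
    """Convert a Q15 integer back to a fraction."""
    return word / 32768

# Fixed point: the step size is constant, so small values lose relative precision.
for value in [0.5, 0.001, 0.00001]:
    print(value, "->", from_q15(to_q15(value)))

# Floating point: value = mantissa * 2**exponent, as math.frexp reports it.
mantissa, exponent = math.frexp(0.00001)
print(mantissa, exponent, mantissa * 2 ** exponent)
```

The smallest value vanishes entirely in the fixed-point format, which is the kind of loss that signal scaling on a fixed-point processor is meant to prevent.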

In contrast, on a fixed-point processor, programmers often must carefully scale signals at various stages of their programs to ensure adequate numeric precision with the limited dynamic range of the fixed-point processor. Most high-volume, embedded applications use fixed-point processors because the priority is on low cost and, often, low power. Programmers and algorithm designers determine the dynamic range and precision needs of their application, either analytically or through simulation, and then add scaling operations into the code if necessary. For applications that have extremely demanding dynamic range and precision requirements, or where ease of development is more important than unit cost, floating-point processors have the advantage.

It's possible to perform general-purpose floating-point arithmetic on a fixed-point processor by using software routines that emulate the behavior of a floating-point device. However, such software routines are usually very expensive in terms of processor cycles. Consequently, general-purpose floating-point emulation is seldom used. A more efficient technique to boost the numeric range of fixed-point processors is block floating-point, wherein a group of numbers with different mantissas but a single, common exponent are processed as a block of data. Block floating-point is usually handled in software, although some processors have hardware features to assist in its implementation.
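Block floating-point can be sketched as a pair of encode and decode routines. This is one possible software formulation, assuming 16-bit integer mantissas and a power-of-two shared exponent; the function names and the example block are hypothetical.

```python
def block_float_encode(values, mantissa_bits=16):
    """Pick one shared exponent so the largest magnitude just fits,
    then store every value as an integer mantissa relative to that exponent."""
    limit = 1 << (mantissa_bits - 1)            # mantissas lie in [-limit, limit)
    peak = max(abs(v) for v in values)
    exponent = 0
    while peak >= limit * 2.0 ** exponent:      # block too large: raise the exponent
        exponent += 1
    while peak > 0 and peak < (limit / 2) * 2.0 ** exponent:   # block small: lower it
        exponent -= 1
    scale = 2.0 ** exponent
    mantissas = [max(-limit, min(limit - 1, round(v / scale))) for v in values]
    return mantissas, exponent

def block_float_decode(mantissas, exponent):
    """Rebuild approximate values from the mantissas and the shared exponent."""
    return [m * 2.0 ** exponent for m in mantissas]

block = [0.75, -0.01, 0.002]
mantissas, exponent = block_float_encode(block)
print(mantissas, exponent)
print(block_float_decode(mantissas, exponent))
```

Because the exponent is chosen from the largest value in the block, small values sharing a block with a large one are stored with fewer significant bits than true floating point would give them.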

Data Width

All common floating-point DSPs use a 32-bit data word. For fixed-point DSPs, the most common data word size is 16 bits. Motorola's DSP563xx family uses a 24-bit data word, however, while Zoran's ZR3800x family uses a 20-bit data word. The size of the data word has a major impact on cost, because it strongly influences the size of the chip and the number of package pins required, as well as the size of external memory devices connected to the DSP. Therefore, designers try to use the chip with the smallest word size that their application can tolerate.

As with the choice between fixed- and floating-point chips, there is often a trade-off between word size and development complexity. For example, with a 16-bit fixed-point processor, a programmer can perform double-precision 32-bit arithmetic operations by stringing together an appropriate combination of instructions. (Of course, double-precision arithmetic is much slower than single-precision arithmetic.) If the bulk of an application can be handled with single-precision arithmetic, but the application needs more precision for a small section of the code, the selective use of double-precision arithmetic may make sense. If most of the application requires more precision, a processor with a larger data word size is likely to be a better choice.

Note that while most DSP processors use an instruction word size equal to their data word sizes, not all do. The Analog Devices ADSP-21xx family, for example, uses a 16-bit data word and a 24-bit instruction word.
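"Stringing together" instructions for double precision amounts to propagating a carry between word-sized operations. The sketch below shows a 32-bit unsigned addition built from 16-bit additions; it is a generic illustration, not the instruction sequence of any particular processor, and the function names are hypothetical.

```python
MASK16 = 0xFFFF

def add32_with_16bit_words(a_hi, a_lo, b_hi, b_lo):
    """Add two 32-bit unsigned values held as (high, low) 16-bit words,
    using only 16-bit additions and an explicit carry."""
    low_sum = a_lo + b_lo
    carry = low_sum >> 16                     # 1 if the low words overflowed
    high_sum = a_hi + b_hi + carry
    return high_sum & MASK16, low_sum & MASK16

def split(value):
    """Split a 32-bit value into its (high, low) 16-bit words."""
    return (value >> 16) & MASK16, value & MASK16

a, b = 0x0001FFFF, 0x00000001
hi, lo = add32_with_16bit_words(*split(a), *split(b))
print(hex((hi << 16) | lo))                   # 0x20000
```

Even this simplest case takes two additions plus carry handling, which is why double precision is noticeably slower than single precision.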

Speed

A key measure of the suitability of a processor for a particular application is its execution speed. There are a number of ways to measure a processor's speed. Perhaps the most fundamental is the processor's instruction cycle time: the amount of time required to execute the fastest instruction on the processor. The reciprocal of the instruction cycle time (in seconds), divided by one million and multiplied by the number of instructions executed per cycle, is the processor's peak instruction execution rate in millions of instructions per second, or MIPS.

A problem with comparing instruction execution times is that the amount of work accomplished by a single instruction varies widely from one processor to another. Some of the newest DSP processors use VLIW (very long instruction word) architectures, in which multiple instructions are issued and executed per cycle. These processors typically use very simple instructions that perform much less work than the instructions typical of conventional DSP processors. Hence, comparisons of MIPS ratings between VLIW processors and conventional DSP processors can be particularly misleading, because of fundamental differences in their instruction set styles. For an example contrasting work per instruction between Texas Instruments' VLIW TMS320C62xx and Motorola's conventional DSP563xx, see BDTI's white paper entitled The BDTImark: a Measure of DSP Execution Speed, available at www.BDTI.com.

Even when comparing conventional DSP processors, however, MIPS ratings can be deceptive. Although the differences in instruction sets are less dramatic than those seen between conventional DSP processors and VLIW processors, they are still sufficient to make MIPS comparisons inaccurate measures of processor performance. For example, some DSPs feature barrel shifters that allow multi-bit data shifting (used to scale data) in just one instruction, while other DSPs require the data to be shifted with repeated one-bit shift instructions. Similarly, some DSPs allow parallel data moves (the simultaneous loading of operands while executing an instruction) that are unrelated to the ALU instruction being executed, but other DSPs only support parallel moves that are related to the operands of an ALU instruction. Some newer DSPs allow two MACs to be specified in a single instruction, which makes MIPS-based comparisons even more misleading.

One solution to these problems is to decide on a basic operation (instead of an instruction) and use it as a yardstick when comparing processors. A common operation is the MAC operation. Unfortunately, MAC execution times provide little information to differentiate between processors: on many DSPs a MAC operation executes in a single instruction cycle, and on these DSPs the MAC time is equal to the processor's instruction cycle time. And, as mentioned above, some DSPs may be able to do considerably more in a single MAC instruction than others. Additionally, MAC times don't reflect performance on other important types of operations, such as looping, that are present in virtually all applications.

A more general approach is to define a set of standard benchmarks and compare their execution speeds on different DSPs. These benchmarks may be simple algorithm "kernel" functions (such as FIR or IIR filters), or they might be entire applications or portions of applications (such as speech coders). Implementing these benchmarks in a consistent fashion across various DSPs and analyzing the results can be difficult. Our company, Berkeley Design Technology, Inc., pioneered the use of algorithm kernels to measure DSP processor performance with the BDTI Benchmarks™ included in our industry report, Buyer's Guide to DSP Processors. Several processors' execution time results on BDTI's FFT benchmark are shown in Figure 2.

Two final notes of caution on processor speed: First, be careful when comparing processor speeds quoted in terms of "millions of operations per second" (MOPS) or "millions of floating-point operations per second" (MFLOPS) figures, because different processor vendors have different ideas of what constitutes an "operation." For example, many floating-point processors are claimed to have a MFLOPS rating of twice their MIPS rating, because they are able to execute a floating-point multiply operation in parallel with a floating-point addition operation. Second, use caution when comparing processor clock rates. A DSP's input clock may be the same frequency as the processor's instruction rate, or it may be two to four times higher than the instruction rate, depending on the processor. Additionally, many DSP chips now feature clock doublers or phase-locked loops (PLLs) that allow the use of a lower-frequency external clock to generate the needed high-frequency clock on chip.
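The peak-MIPS definition given at the start of this section reduces to a one-line calculation. The sketch below applies it to two made-up processors; the cycle times and issue widths are hypothetical and do not describe any device named in the text.

```python
def peak_mips(cycle_time_seconds, instructions_per_cycle=1):
    """Peak instruction rate in millions of instructions per second."""
    return (1.0 / cycle_time_seconds) / 1e6 * instructions_per_cycle

# Hypothetical processors, for illustration only.
conventional = peak_mips(10e-9)                      # 10 ns cycle, 1 instruction per cycle
vliw = peak_mips(5e-9, instructions_per_cycle=8)     # 5 ns cycle, 8 instructions per cycle
print(conventional, vliw)
```

The second figure is sixteen times the first, yet it says nothing about how much work each instruction does, which is the reason MIPS comparisons across instruction set styles mislead.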

Memory Organization

The organization of a processor's memory subsystem can have a large impact on its performance. As mentioned earlier, the MAC and other DSP operations are fundamental to many signal processing algorithms. Fast MAC execution requires fetching an instruction word and two data words from memory at an effective rate of once every instruction cycle. There are a variety of ways to achieve this, including multiported memories (to permit multiple memory accesses per instruction cycle), separate instruction and data memories (the "Harvard" architecture and its derivatives), and instruction caches (to allow instructions to be fetched from cache instead of from memory, thus freeing a memory access to be used to fetch data). Figures 3 and 4 show how the Harvard memory architecture differs from the "Von Neumann" architecture used by many microcontrollers.

Another concern is the size of the supported memory, both on- and off-chip. Most fixed-point DSPs are aimed at the embedded systems market, where memory needs tend to be small. As a result, these processors typically have small-to-medium on-chip memories (between 4K and 64K words), and small external data buses. In addition, most fixed-point DSPs feature address buses of 16 bits or less, limiting the amount of easily-accessible external memory.

Some floating-point chips provide relatively little (or no) on-chip memory, but feature large external data buses. For example, the Texas Instruments TMS320C30 provides 6K words of on-chip memory, one 24-bit external address bus, and one 13-bit external address bus. In contrast, the Analog Devices ADSP-21060 provides 4 Mbits of memory on-chip that can be divided between program and data memory in a variety of ways. As with most DSP features, the best combination of memory organization, size, and number of external buses is heavily application-dependent.
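The link between address bus width and directly addressable memory is simply a power of two. The short sketch below tabulates it for the bus widths quoted in this section; `addressable_words` is a name introduced here for illustration.

```python
def addressable_words(address_bits):
    """Number of distinct word addresses an address bus of this width can select."""
    return 1 << address_bits

for bits in (13, 16, 24):
    words = addressable_words(bits)
    print(f"{bits}-bit address bus: {words} words ({words // 1024}K)")
```

A 16-bit bus therefore reaches 64K words, which is the limit on easily accessible external memory referred to above.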

Ease of Development

The degree to which ease of system development is a concern depends on the application. Engineers performing research or prototyping will probably require tools that make system development as simple as possible. On the other hand, a company developing a next-generation digital cellular telephone may be willing to suffer with poor development tools and an arduous development environment if the DSP chip selected shaves $5 off the cost of the end product. (Of course, this same company might reach a different conclusion if the poor development environment results in a three-month delay in getting their product to market!) That said, items to consider when choosing a DSP are software tools (assemblers, linkers, simulators, debuggers, compilers, code libraries, and real-time operating systems), hardware tools (development boards and emulators), and higher-level tools (such as block-diagram based code-generation environments). A design flow using some of these tools is illustrated in Figure 5.

A fundamental question to ask when choosing a DSP is how the chip will be programmed. Typically, developers choose either assembly language, a high-level language such as C or Ada, or a combination of both. Surprisingly, a large portion of DSP programming is still done in assembly language. Because DSP applications have voracious number-crunching requirements, programmers are often unable to use compilers, which often generate assembly code that executes slowly. Rather, programmers can be forced to hand-optimize assembly code to lower execution time and code size to acceptable levels. This is especially true in consumer applications, where cost constraints may prohibit upgrading to a higher-performance DSP processor or adding a second processor.

Users of high-level language compilers often find that the compilers work better for floating-point DSPs than for fixed-point DSPs, for several reasons. First, most high-level languages do not have native support for fractional arithmetic. Second, floating-point processors tend to feature more regular, less restrictive instruction sets than smaller, fixed-point processors, and are thus better compiler targets. Third, as mentioned, floating-point processors typically support larger memory spaces than fixed-point processors, and are thus better able to accommodate compiler-generated code, which tends to be larger than hand-crafted assembly code. VLIW-based DSP processors, which typically use simple, orthogonal RISC-based instruction sets and have large register files, are somewhat better compiler targets than traditional DSP processors. However, even compilers for VLIW processors tend to generate code that is inefficient in comparison to hand-optimized assembly code. Hence, these processors, too, are often programmed in assembly language, at least to some degree.

Whether the processor is programmed in a high-level language or in assembly language, debugging and hardware emulation tools deserve close attention since, sadly, a great deal of time may be spent with them. Almost all manufacturers provide instruction set simulators, which can be a tremendous help in debugging programs before hardware is ready. If a high-level language is used, it is important to evaluate the capabilities of the high-level language debugger: will it run with the simulator and/or the hardware emulator? Is it a separate program from the assembly-level debugger that requires the user to learn another user interface?

Most DSP vendors provide hardware emulation tools for use with their processors. Modern processors usually feature on-chip debugging/emulation capabilities, often accessed through a serial interface that conforms to the IEEE 1149.1 JTAG standard for test access ports. This serial interface allows scan-based emulation: programmers can load breakpoints through the interface, and then scan the processor's internal registers to view and change the contents after the processor reaches a breakpoint.

Scan-based emulation is especially useful because debugging may be accomplished without removing the processor from the target system. Other debugging methods, such as pod-based emulation, require replacing the processor with a special processor emulator pod. Off-the-shelf DSP system development boards are available from a variety of manufacturers, and can be an important resource. Development boards can allow software to run in real-time before the final hardware is ready, and can thus provide an important productivity boost. Additionally, some low-production-volume systems may use development boards in the final product.

Multiprocessor Support

Certain computationally intensive applications with high data rates (e.g., radar and sonar) often demand multiple DSP processors. In such cases, ease of processor interconnection (in terms of time to design interprocessor communications circuitry and the cost of linking processors) and interconnection performance (in terms of communications throughput, overhead, and latency) may be important factors. Some DSP families, notably the Analog Devices ADSP-2106x, provide special-purpose hardware to ease multiprocessor system design. ADSP-2106x processors feature bidirectional data and address buses coupled with six bidirectional bus request lines. These allow up to six processors to be connected together via a common external bus with elegant bus arbitration. Moreover, a unique feature of ADSP-2106x processors connected in this way is that each processor can access the internal memory of any other ADSP-2106x on the shared bus. Six four-bit parallel communication ports round out the ADSP-2106x's parallel processing features. Interestingly, Texas Instruments' newest floating-point processor, the VLIW-based TMS320C67xx, does not currently provide similar hardware support for multiprocessor designs, though it is possible that future family members will address this issue.


- The FFT Fundamentals and Concepts PDFDocument199 pagesThe FFT Fundamentals and Concepts PDFbubo28Pas encore d'évaluation

- SignalsDocument7 pagesSignalsRavi K NPas encore d'évaluation

- D Sesqunces RandomnessDocument8 pagesD Sesqunces RandomnessSeshareddy KatamPas encore d'évaluation

- DSP AssignmentDocument31 pagesDSP AssignmentArslan FirdausPas encore d'évaluation

- Digital Signal Processing Approach To Interpolation: AtereDocument11 pagesDigital Signal Processing Approach To Interpolation: AtereArvind BaroniaPas encore d'évaluation

- Systems LabDocument46 pagesSystems LabVIPIN VPas encore d'évaluation

- Lab 13Document9 pagesLab 13abhishek1028Pas encore d'évaluation

- ECE Lab 2 102Document28 pagesECE Lab 2 102azimylabsPas encore d'évaluation

- Unit-I Communication Com (Cum (Latin) "With" or "Together With") + Union (Latin) Union With or Union Together With. Introduction To CommunicationDocument25 pagesUnit-I Communication Com (Cum (Latin) "With" or "Together With") + Union (Latin) Union With or Union Together With. Introduction To Communicationpriy@nciPas encore d'évaluation

- ELE 374 Lab ReportDocument34 pagesELE 374 Lab Reportec09364100% (1)

- An Improved Recursive Formula For Calculating Shock Response Spectra-SRS-SmallwoodDocument7 pagesAn Improved Recursive Formula For Calculating Shock Response Spectra-SRS-SmallwoodjackPas encore d'évaluation

- Signal Processing in MATLABDocument3 pagesSignal Processing in MATLABRadu VancaPas encore d'évaluation

- Descriptive StatisticsDocument5 pagesDescriptive Statisticsidk bruhPas encore d'évaluation

- Super-Exponential Methods For Blind Deconvolution: Shalvi and Ehud Weinstein, IeeeDocument16 pagesSuper-Exponential Methods For Blind Deconvolution: Shalvi and Ehud Weinstein, IeeeengineeringhighPas encore d'évaluation

- Speech AssignmentDocument4 pagesSpeech AssignmentAnithPalatPas encore d'évaluation

- Shafer A Digital Signal Processing Approach To Interpolation IEEE Proc 1973Document11 pagesShafer A Digital Signal Processing Approach To Interpolation IEEE Proc 1973vitPas encore d'évaluation

- LECT - 2 Discrete-Time Systems ConvolutionDocument44 pagesLECT - 2 Discrete-Time Systems ConvolutionTazeb AyelePas encore d'évaluation

- Radar Signal Processing, V.K.anandanDocument68 pagesRadar Signal Processing, V.K.anandanptejaswini904439100% (1)

- Idomu SIR 10.1.1.39.1704Document5 pagesIdomu SIR 10.1.1.39.1704svitkauskasaccountPas encore d'évaluation

- Psuedo Sequence SignalDocument14 pagesPsuedo Sequence Signalzfa91Pas encore d'évaluation

- Aes Audio DigitalDocument40 pagesAes Audio DigitalAnonymous 2Ft4jV2Pas encore d'évaluation

- Lock in Amplification LabDocument5 pagesLock in Amplification LabMike ManciniPas encore d'évaluation

- Exp1 PDFDocument12 pagesExp1 PDFanon_435139760Pas encore d'évaluation

- Analogue Communication 1Document69 pagesAnalogue Communication 1Malu SakthiPas encore d'évaluation

- X, Given Its Mean Value. Given A Particular Value For The Mean, by Calculating TheDocument2 pagesX, Given Its Mean Value. Given A Particular Value For The Mean, by Calculating Theaftab20Pas encore d'évaluation

- Noise Pollution 601Document6 pagesNoise Pollution 601Chandra SPas encore d'évaluation

- Spline and Spline Wavelet Methods with Applications to Signal and Image Processing: Volume III: Selected TopicsD'EverandSpline and Spline Wavelet Methods with Applications to Signal and Image Processing: Volume III: Selected TopicsPas encore d'évaluation

- Interactions between Electromagnetic Fields and Matter: Vieweg Tracts in Pure and Applied PhysicsD'EverandInteractions between Electromagnetic Fields and Matter: Vieweg Tracts in Pure and Applied PhysicsPas encore d'évaluation

- Understanding Vector Calculus: Practical Development and Solved ProblemsD'EverandUnderstanding Vector Calculus: Practical Development and Solved ProblemsPas encore d'évaluation

- Analysis and Design of Multicell DC/DC Converters Using Vectorized ModelsD'EverandAnalysis and Design of Multicell DC/DC Converters Using Vectorized ModelsPas encore d'évaluation

- Mobile App ANSWER KEYDocument5 pagesMobile App ANSWER KEYKavitha SubramaniamPas encore d'évaluation

- Google Classroom DetailsDocument1 pageGoogle Classroom DetailsKavitha SubramaniamPas encore d'évaluation

- Problem Statements FOR HACKATHONDocument4 pagesProblem Statements FOR HACKATHONKavitha SubramaniamPas encore d'évaluation

- Expected Marks To Attainment 0.7 0.7 0.7 0.7 0.7 1.4 1.4 1.4 1.4 1.4 4.9 7 2.8 4.9 4.9 9.8 9.8Document6 pagesExpected Marks To Attainment 0.7 0.7 0.7 0.7 0.7 1.4 1.4 1.4 1.4 1.4 4.9 7 2.8 4.9 4.9 9.8 9.8Kavitha SubramaniamPas encore d'évaluation

- Nandha Engineering College, Erode Department of Cse Iii Cse A NamelistDocument5 pagesNandha Engineering College, Erode Department of Cse Iii Cse A NamelistKavitha SubramaniamPas encore d'évaluation

- One Credit Course: Chef Automation & Saas Ii CseDocument4 pagesOne Credit Course: Chef Automation & Saas Ii CseKavitha SubramaniamPas encore d'évaluation

- 8279Document32 pages8279Kavitha SubramaniamPas encore d'évaluation

- Po and Co MappingDocument1 pagePo and Co MappingKavitha SubramaniamPas encore d'évaluation

- Dma 8257Document22 pagesDma 8257Kavitha SubramaniamPas encore d'évaluation

- 80286Document28 pages80286Kavitha SubramaniamPas encore d'évaluation

- Nandha Engineering College, Erode-52: Continuous Assessment Test - III 15Cs404 Mobile ComputingDocument2 pagesNandha Engineering College, Erode-52: Continuous Assessment Test - III 15Cs404 Mobile ComputingKavitha SubramaniamPas encore d'évaluation

- No.: E Mail ID:: (Can Be Claimed Once But Cannot Be Every Year)Document3 pagesNo.: E Mail ID:: (Can Be Claimed Once But Cannot Be Every Year)Kavitha SubramaniamPas encore d'évaluation

- Min & MaxDocument13 pagesMin & MaxKavitha SubramaniamPas encore d'évaluation

- Embedded Systems: Co/Po PO1 PO2 PO3 PO4 PO5 PO6 PO7 PO8 PO9 PO1 0 PO1 1 CO.1 CO.2 CO.3 CO.4 CO.5 CODocument1 pageEmbedded Systems: Co/Po PO1 PO2 PO3 PO4 PO5 PO6 PO7 PO8 PO9 PO1 0 PO1 1 CO.1 CO.2 CO.3 CO.4 CO.5 COKavitha SubramaniamPas encore d'évaluation

- Embedded Systems Other College SyllabusDocument13 pagesEmbedded Systems Other College SyllabusKavitha SubramaniamPas encore d'évaluation

- Coverformat For Cat Even SemDocument3 pagesCoverformat For Cat Even SemKavitha SubramaniamPas encore d'évaluation

- Embedded SystemsDocument2 pagesEmbedded SystemsKavitha SubramaniamPas encore d'évaluation

- Instruction Set 8086Document63 pagesInstruction Set 8086Kavitha SubramaniamPas encore d'évaluation

- 8086 PPTDocument30 pages8086 PPTKavitha Subramaniam100% (1)

- UbiquitousDocument3 pagesUbiquitousKavitha SubramaniamPas encore d'évaluation

- Electromagnetism WorksheetDocument3 pagesElectromagnetism WorksheetGuan Jie KhooPas encore d'évaluation

- 7 ElevenDocument80 pages7 ElevenakashPas encore d'évaluation

- User ManualDocument96 pagesUser ManualSherifPas encore d'évaluation

- Process Industry Practices Insulation: PIP INEG2000 Guidelines For Use of Insulation PracticesDocument15 pagesProcess Industry Practices Insulation: PIP INEG2000 Guidelines For Use of Insulation PracticesZubair RaoofPas encore d'évaluation

- Reference by John BatchelorDocument1 pageReference by John Batchelorapi-276994844Pas encore d'évaluation

- Dance Terms Common To Philippine Folk DancesDocument7 pagesDance Terms Common To Philippine Folk DancesSaeym SegoviaPas encore d'évaluation

- Rocker ScientificDocument10 pagesRocker ScientificRody JHPas encore d'évaluation

- Instruction Manual 115cx ENGLISHDocument72 pagesInstruction Manual 115cx ENGLISHRomanPiscraftMosqueteerPas encore d'évaluation

- Bustax Midtem Quiz 1 Answer Key Problem SolvingDocument2 pagesBustax Midtem Quiz 1 Answer Key Problem Solvingralph anthony macahiligPas encore d'évaluation

- Nyambe African Adventures An Introduction To African AdventuresDocument5 pagesNyambe African Adventures An Introduction To African AdventuresKaren LeongPas encore d'évaluation

- Afzal ResumeDocument4 pagesAfzal ResumeASHIQ HUSSAINPas encore d'évaluation

- Comparitive Study of Fifty Cases of Open Pyelolithotomy and Ureterolithotomy With or Without Double J Stent InsertionDocument4 pagesComparitive Study of Fifty Cases of Open Pyelolithotomy and Ureterolithotomy With or Without Double J Stent InsertionSuril VithalaniPas encore d'évaluation

- A2 UNIT 5 Culture Teacher's NotesDocument1 pageA2 UNIT 5 Culture Teacher's NotesCarolinaPas encore d'évaluation

- Assembly InstructionsDocument4 pagesAssembly InstructionsAghzuiPas encore d'évaluation

- Corporate Tax Planning AY 2020-21 Sem V B.ComH - Naveen MittalDocument76 pagesCorporate Tax Planning AY 2020-21 Sem V B.ComH - Naveen MittalNidhi LathPas encore d'évaluation

- Example of Flight PMDG MD 11 PDFDocument2 pagesExample of Flight PMDG MD 11 PDFVivekPas encore d'évaluation

- Generator ControllerDocument21 pagesGenerator ControllerBrianHazePas encore d'évaluation