Paralinguistic Information Processing: - Prosody

6.345 Automatic Speech Recognition

Paralinguistic Information Processing

Prosody

Pitch tracking

Intonation, stress, and phrase boundaries

Emotion

Speaker Identification

Multi-modal Processing

Combined face and speaker ID

Lip reading & audio-visual speech recognition

Gesture & multi-modal understanding

Lecture # 23

Session 2003

Prosody

Prosody is the term typically used to describe the extra-linguistic aspects of speech, such as:

Intonation

Phrase boundaries

Stress patterns

Emotion

Statement/question distinction

Prosody is controlled by manipulation of

Fundamental frequency (F0)

Phonetic durations & speaking rate

Energy

Robust Pitch Tracking

Fundamental frequency (F0) estimation

Often referred to as pitch tracking

Crucial to the analysis and modeling of speech prosody

A widely studied problem with many proposed algorithms

One recent two-step algorithm (Wang, 2001)

Step 1: Estimate F0 and ΔF0 for each speech frame based on harmonic matching

Step 2: Perform dynamic search with continuity constraints to find optimal F0 stream

Discrete Logarithmic Fourier Transform

Logarithmically sampled narrow-band spectrum

Harmonic peaks have fixed spacing (logF0 + logN)

Derive F0 and ΔF0 estimates through correlation

[Figure: harmonic template and signal DLFT spectrum (μ-law converted)]

Two Correlation Functions

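Template-frame correlation:

$$R_{TX}(n) = \frac{\sum_i T(i)\, X_t(i-n)}{\sqrt{\sum_i X_t(i)^2}}$$

Cross-frame correlation:

$$R_{X_t X_{t-1}}(n) = \frac{\sum_i X_t(i)\, X_{t-1}(i-n)}{\sqrt{\sum_i X_t(i)^2 \, \sum_i X_{t-1}(i)^2}}$$

[Figure: template-frame and cross-frame correlation functions for voiced and unvoiced frames]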
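
The two correlation functions can be illustrated on toy data. The sketch below is only an assumption-laden illustration, not Wang's implementation: the function names, the zero-padding outside the sampled range, the shift convention X(i - n), and the toy log-frequency spectra are all hypothetical.

```python
import math

def at(x, i):
    """x[i], with zeros outside the sampled range (a simplifying assumption)."""
    return x[i] if 0 <= i < len(x) else 0.0

def energy(x):
    return sum(v * v for v in x)

def template_frame_corr(template, frame, n):
    """Sum_i T(i) X_t(i - n), normalized by the frame energy only."""
    den = math.sqrt(energy(frame))
    if den == 0.0:
        return 0.0
    return sum(template[i] * at(frame, i - n) for i in range(len(template))) / den

def cross_frame_corr(frame, prev_frame, n):
    """Sum_i X_t(i) X_{t-1}(i - n), normalized by the energies of both frames."""
    den = math.sqrt(energy(frame) * energy(prev_frame))
    if den == 0.0:
        return 0.0
    return sum(frame[i] * at(prev_frame, i - n) for i in range(len(frame))) / den

# Toy log-frequency spectra: three harmonic peaks, then the same pattern 2 bins higher.
prev_frame = [0, 1, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0]
frame = [0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 1, 0]

lags = range(-4, 5)
delta = max(lags, key=lambda n: cross_frame_corr(frame, prev_frame, n))
print("estimated shift between frames (bins):", delta)
```

On a logarithmic frequency axis a change in F0 moves all harmonic peaks by the same number of bins, which is why a single lag can capture the frame-to-frame pitch change.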

Dynamic Programming Search

Optimal solution taking into account F0 and ΔF0 constraints

Search space quantized such that ΔF/F is constant

$$\mathrm{score}_t(i) = \begin{cases} R_{TX_t}(i)\, c(t) & (t = 0) \\ \max_j \{ \mathrm{score}_{t-1}(j)\, R_{X_t X_{t-1}}(i-j) \} + R_{TX_t}(i)\, c(t) & (t > 0) \end{cases}$$

R_TX: template-frame correlation, R_XX: cross-frame correlation

[Figure: search space; vertical axis: logarithmic frequency]
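
A minimal sketch of a dynamic-programming search of this kind follows. It assumes the score of a bin is the best previous score weighted by the cross-frame correlation, plus the current template-frame correlation; any per-frame weighting term is left out. The function name, the `max_jump` limit, and the toy correlation tables are hypothetical.

```python
def dp_pitch_track(r_tx, r_xx, max_jump):
    """r_tx[t][i]: template-frame correlation of frame t at log-frequency bin i.
    r_xx[t][d + max_jump]: cross-frame correlation between frames t and t-1
    at lag d, for d in [-max_jump, max_jump]; r_xx[0] is unused.
    Returns the best bin index for every frame."""
    n_frames, n_bins = len(r_tx), len(r_tx[0])
    score = list(r_tx[0])
    back = []
    for t in range(1, n_frames):
        new_score, ptr = [], []
        for i in range(n_bins):
            lo, hi = max(0, i - max_jump), min(n_bins - 1, i + max_jump)
            # Best predecessor bin j within the allowed pitch change.
            best_j = max(range(lo, hi + 1),
                         key=lambda j: score[j] * r_xx[t][i - j + max_jump])
            new_score.append(score[best_j] * r_xx[t][i - best_j + max_jump]
                             + r_tx[t][i])
            ptr.append(best_j)
        score = new_score
        back.append(ptr)
    # Backtrace from the best final bin.
    i = max(range(n_bins), key=lambda k: score[k])
    path = [i]
    for ptr in reversed(back):
        i = ptr[i]
        path.append(i)
    path.reverse()
    return path

# Toy example: 3 frames, 4 bins, lags -1, 0, +1.
r_tx = [[0.1, 0.9, 0.2, 0.1],
        [0.1, 0.5, 0.6, 0.1],
        [0.1, 0.2, 0.9, 0.1]]
r_xx = [None, [0.2, 0.9, 0.3], [0.2, 0.9, 0.3]]
path = dp_pitch_track(r_tx, r_xx, max_jump=1)
print(path)
```

Because the bins are spaced logarithmically, limiting the jump to a fixed number of bins corresponds to limiting the relative pitch change between frames.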

The Rhythmic Nature of Speech

Example using two read digit string types in Chinese

Random digit strings (5-10 digits per string)

Phone numbers (9 digits, e.g., 02 - 435 - 8264)

Both types show a declination in pitch (i.e. sentence downdrift)

Phone numbers show a predictable pattern or rhythm

Local Tones vs. Global Intonation

Position-dependent tone contours in phone numbers

[Figure: contour panels for Tone 1, Tone 2, Tone 3, and Tone 4]

Characterization of Phrase Contours

Phrases often carry distinctive F0 contours

Canonical patterns for specific phrases can be observed

Some research conducted into characterizing prosodic contours

Phrase boundary markers

TOBI (Tone and Break Indices) labeling

Many unanswered questions

Do phrases have some set of predictable canonical patterns?

How do prosodic phrase structures generalize to new utterances?

Are there interdependencies among phrases in the utterance?

How can prosodic modeling help speech recognition and/or understanding?

Pilot Study of Phrasal Prosody in JUPITER

Five phrase types were studied:

<what_is>: what is, how is,

<tell_me>: tell me, give me,

<weather>: weather, forecast, dew point,

<SU>: Boston, Monday, ...

<US>: Detroit, tonight,

Phrases studied with a fixed sentence template:

<what_is> | <tell_me> the <weather> in | for | on <SU> | <US>

Pitch contours for each example phrase were automatically clustered into several subclasses

Mutual information of subclasses can predict which subclasses are likely or unlikely to occur together in an utterance

Subclasses Obtained by Clustering

K-means clustering on training data followed by selection

[Figure: F0 contour subclasses (labeled C0 through C7) obtained for <what_is>, <tell_me>, <weather>, <SU>, and <US>]
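
To make the clustering step concrete, here is a small K-means sketch over pitch contours. It assumes each contour has already been resampled to a fixed number of F0 values; the initialization from the first k contours, the toy data, and the function name are hypothetical, and the later selection step is not modeled.

```python
def kmeans(points, k, iters=20):
    """Plain K-means with squared Euclidean distance.
    Centroids start at the first k points (an assumption made for determinism)."""
    centroids = [list(p) for p in points[:k]]
    assign = [0] * len(points)
    for _ in range(iters):
        # Assignment step: nearest centroid for every contour.
        assign = [min(range(k),
                      key=lambda c: sum((a - b) ** 2
                                        for a, b in zip(p, centroids[c])))
                  for p in points]
        # Update step: mean contour of each cluster.
        for c in range(k):
            members = [p for p, a in zip(points, assign) if a == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return assign, centroids

# Toy contours (Hz), resampled to four points each: two rising, two falling.
contours = [
    [100, 110, 120, 130],
    [130, 120, 110, 100],
    [105, 115, 125, 135],
    [135, 125, 115, 105],
]
assign, centroids = kmeans(contours, k=2)
print(assign)
```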

Example Utterances

[Figure: three example utterances]

Mutual Information of Subclasses

MI = 0.67: subclasses are commonly used together

MI = -0.58: subclasses are unlikely to occur together
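
Signed values like these are what a pointwise mutual information between two subclasses would give: positive when they co-occur more often than chance, negative when less. The sketch below assumes that definition and a base-2 logarithm; neither is stated on the slide, and the subclass labels and counts are made up.

```python
import math

def subclass_mutual_information(utterances, a, b):
    """log2 [ P(a, b) / (P(a) P(b)) ], estimated from utterance counts.
    Each utterance is a set of subclass labels. Returns None if any count is zero."""
    n = len(utterances)
    count_a = sum(1 for u in utterances if a in u)
    count_b = sum(1 for u in utterances if b in u)
    count_ab = sum(1 for u in utterances if a in u and b in u)
    if 0 in (count_a, count_b, count_ab):
        return None
    return math.log2((count_ab / n) / ((count_a / n) * (count_b / n)))

# Hypothetical subclass labels observed in eight utterances.
utterances = [
    {"what_is-C4", "SU-C3"},
    {"what_is-C4", "SU-C3"},
    {"what_is-C4", "SU-C3"},
    {"what_is-C4", "US-C2"},
    {"SU-C3", "US-C2"},
    {"US-C2"},
    {"US-C2"},
    {"tell_me-C0"},
]
mi_common = subclass_mutual_information(utterances, "what_is-C4", "SU-C3")
mi_rare = subclass_mutual_information(utterances, "what_is-C4", "US-C2")
print(round(mi_common, 2), round(mi_rare, 2))
```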

Emotional Speech

Emotional speech is difficult to recognize:

Neutral speech word error rate in Mercury: 15%

WER of happy speech in Mercury: 25%

WER of frustrated speech: 33%

Acoustic correlates of emotional/frustrated speech:

Fundamental frequency variation

Increased energy

Speaking rate & vowel duration

Hyper-articulation

Breathy sighs

Linguistic content can also indicate frustration:

Questions

Negative constructions

Derogatory terms

Spectrograms of an Emotional Pair

[Figure: spectrograms of "february twenty sixth", neutral and frustrated]

Emotion Recognition

Few studies of automatic emotion recognition exist

Common features used for utterance-based emotion recognition:

F0 features: mean, median, min, max, standard deviation

ΔF0 features: mean positive slope, mean negative slope, std. deviation, ratio of rising and falling slopes

Rhythm features: speaking rate, duration between voiced regions

Some results:

75% accuracy over six classes (happy, sad, angry, disgusted, surprised, fear) using only mean and standard deviation of F0 (Huang et al., 1998)

80% accuracy over four classes (happy, sad, anger, fear) using 16 features (Dellaert et al., 1998)
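
The utterance-level pitch features listed above can be computed from a frame-level F0 track roughly as follows. This is a sketch under stated assumptions: unvoiced frames are marked with 0, slopes are taken as differences between adjacent voiced frames (Hz per frame), and the rising/falling ratio counts frames. The cited studies may define these differently, and the rhythm features are not covered.

```python
import statistics

def f0_features(f0):
    """f0: per-frame F0 in Hz, with 0 marking unvoiced frames (an assumption)."""
    voiced = [v for v in f0 if v > 0]
    # Slopes only between adjacent voiced frames.
    slopes = [b - a for a, b in zip(f0, f0[1:]) if a > 0 and b > 0]
    rising = [s for s in slopes if s > 0]
    falling = [s for s in slopes if s < 0]
    return {
        "mean": statistics.mean(voiced),
        "median": statistics.median(voiced),
        "min": min(voiced),
        "max": max(voiced),
        "std": statistics.pstdev(voiced),
        "mean_pos_slope": statistics.mean(rising) if rising else 0.0,
        "mean_neg_slope": statistics.mean(falling) if falling else 0.0,
        "slope_std": statistics.pstdev(slopes) if slopes else 0.0,
        "rise_fall_ratio": len(rising) / len(falling) if falling else float("inf"),
    }

# Toy F0 track with two voiced regions.
track = [0, 100, 110, 120, 0, 0, 130, 120, 0]
feats = f0_features(track)
print(feats)
```

A feature vector like this would then be passed to whatever classifier is used for the emotion classes.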

Speaker Identification

Speaker verification: Accept or reject claimed identity

Typically used in applications requiring secure transactions

Not 100% reliable

* Speech is highly variable and easily distorted

Can be combined with other techniques

* Possession of a physical key

* Knowledge of a password

* Face ID or other biometric techniques

Speaker recognition: Identify speaker from set of known speakers

Typically used when speakers do not volunteer their identity

Example applications:

* Meeting transcription and indexing

* Voice mail summarization

* Power users of dialogue system

Speaker Identification Approaches

Potential features used for speaker ID

Formant frequencies (correlated with vocal tract length)

Fundamental frequency averages and contours

Phonetic durations and speaking rate

Word usage patterns

Spectral features (typically MFCCs) are most commonly used

Some modeling approaches:

Text Independent

* Global Gaussian Mixture Models (GMMs) (Reynolds, 1995)

* Phonetically-Structured GMMs

Text/Recognition Dependent

* Phonetically Classed GMMs

* Speaker Adaptive ASR Scoring (Park and Hazen, 2002)

Global GMMs

Training

Input waveforms for speaker i split into fixed-length frames

Feature vectors computed from each frame of speech

GMMs trained from set of feature vectors

One global GMM per speaker

Testing

Input feature vectors scored against each speaker GMM

Frame scores for each speaker summed over entire utterance: p(x_1|S_i) + p(x_2|S_i) = score for speaker i

Highest total score is hypothesized speaker

[Figure: feature space with GMM for speaker i, p(x_n|S_i); training utterance; test utterance]
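
The testing procedure can be sketched as below. The sketch assumes diagonal-covariance GMMs whose parameters are already trained, and it sums frame log-likelihoods (treating the frame scores as log probabilities is an assumption). Speaker names, parameters, and feature vectors are invented for illustration.

```python
import math

def log_gaussian_diag(x, mean, var):
    """Log density of a diagonal-covariance Gaussian."""
    return sum(-0.5 * (math.log(2 * math.pi * v) + (xi - m) ** 2 / v)
               for xi, m, v in zip(x, mean, var))

def log_gmm(x, gmm):
    """gmm: list of (weight, mean, var) components. Uses log-sum-exp for stability."""
    logs = [math.log(w) + log_gaussian_diag(x, m, v) for w, m, v in gmm]
    top = max(logs)
    return top + math.log(sum(math.exp(l - top) for l in logs))

def identify_speaker(frames, speaker_gmms):
    """Sum frame scores per speaker and return the highest-scoring speaker."""
    totals = {spk: sum(log_gmm(x, gmm) for x in frames)
              for spk, gmm in speaker_gmms.items()}
    return max(totals, key=totals.get), totals

# Hypothetical two-component GMMs over 2-dimensional features.
speaker_gmms = {
    "alice": [(0.5, [0.0, 0.0], [1.0, 1.0]), (0.5, [2.0, 2.0], [1.0, 1.0])],
    "bob": [(0.5, [5.0, 5.0], [1.0, 1.0]), (0.5, [7.0, 7.0], [1.0, 1.0])],
}
test_frames = [[0.1, -0.2], [1.9, 2.2], [0.5, 0.3]]
best, totals = identify_speaker(test_frames, speaker_gmms)
print(best)
```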

Phonetically-Structured GMM

During training, use phonetic transcriptions to train phonetic class GMMs for each speaker

Combine class GMMs into a single structured model which is then used for scoring as in the baseline system

[Figure: feature space with class GMMs for stops, strong fricatives, and vowels, combined into the phonetically structured GMM for speaker i]

Phonetic Classing

Train independent phone class GMMs w/o combination

Generate word/phone hypothesis from recognizer

Score frames with class models of hypothesized phones

Σ_n p(x_n | S_i, class(x_n)) = score for speaker i

[Figure: test utterance passed to SUMMIT; word hypothesis with phones f ih f t tcl iy; frames grouped by the phonetic class GMMs for speaker i: fricatives (f, f: x_1, x_3), vowels (ih, iy: x_2, x_6), stops/closures (t, tcl: x_4, x_5)]

Speaker Adapted Scoring

Train speaker-dependent (SD) models for each speaker

Get best hypothesis from recognizer using speaker-independent (SI) models

Rescore hypothesis with SD models

Compute total speaker adapted score by interpolating SD score with SI score

$$p_{SA}(x_n \mid u_n, S_i) = \frac{\lambda_n\, p_{SD}(x_n \mid u_n, S_i) + (1 - \lambda_n)\, p_{SI}(x_n \mid u_n, S_i)}{p_{SI}(x_n \mid u_n, S_i)}$$

$$\lambda_n = \frac{c(u_n)}{c(u_n) + K}$$

[Figure: test utterance "fifty-five" passed to SUMMIT (SI models); phones f ih f t tcl iy f ay v aligned with features x_1 through x_9; each frame scored by the SI model, p_SI(x_n | u_n), and by the SD models for speaker i, p_SD(x_n | u_n, S_i)]
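
The interpolation step can be sketched as follows. The weight c/(c + K) follows the slide; reading c as the amount of speaker-specific training data for the hypothesized phone, the value of K, and the normalization of the utterance score by the SI likelihood are assumptions for illustration, as are all function names and numbers.

```python
import math

def interpolation_weight(count, k):
    """Weight on the SD model: grows toward 1 as the count grows."""
    return count / (count + k)

def adapted_likelihood(p_sd, p_si, count, k):
    """Interpolate the SD and SI likelihoods of one frame."""
    lam = interpolation_weight(count, k)
    return lam * p_sd + (1.0 - lam) * p_si

def speaker_score(frames, k):
    """frames: list of (p_sd, p_si, count) per frame.
    Sums the log ratio of adapted to SI likelihood (an assumed normalization)."""
    return sum(math.log(adapted_likelihood(p_sd, p_si, c, k) / p_si)
               for p_sd, p_si, c in frames)

K = 5.0  # hypothetical smoothing constant

# With no speaker data the adapted score falls back to the SI score.
unseen = adapted_likelihood(0.8, 0.2, count=0, k=K)
# With count == K the two models are weighted equally.
balanced = adapted_likelihood(0.8, 0.2, count=5, k=K)

score = speaker_score([(0.8, 0.2, 5), (0.3, 0.3, 0)], K)
print(unseen, balanced, round(score, 3))
```

The fallback to the SI model for rarely seen phones is the point of the count-dependent weight: sparse SD models are not trusted until enough data backs them.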

Two Experimental Corpora

| Corpus | YOHO | Mercury |
|---|---|---|
| Description | LDC corpus for speaker verification evaluation | SLS corpus from air-travel system |
| Type of Speech | Prompted text: combination lock phrases (e.g. 34-25-86) | Spontaneous conversational speech in air-travel domain |
| # Speakers | 138 (106M, 32F) | 38 (18M, 20F) |
| Recording Conditions | Fixed telephone handset; quiet office environment; 8kHz band-limited | Variable telephone; variable environment; telephone channel |
| Training Data | 96 utterances from 4 sessions (~3 seconds each) | 50-100 utterances from 2-10 sessions (variable length) |
| Test Set Size | 5520 | 3219 |

Single Utterance Results

Experiment: closed set speaker recognition on single utterances

Results:

All approaches about equal on YOHO corpus

Speaker adaptive approach has poorest performance on Mercury

ASR recognition errors can degrade speaker ID performance

Classifier combination yields improvements over best system

Speaker ID Error Rate (%)

| System | YOHO | Mercury |
|---|---|---|
| Structured GMM (SGMM) | 0.31 | 21.3 |
| Phone Classing | 0.40 | 21.6 |
| Speaker Adaptive (SA) | 0.31 | 27.8 |
| SA+SGMM | 0.25 | 18.3 |

Results on Multiple Mercury Utterances

On multiple utterances, speaker adaptive scoring achieves lower error rates than next best individual method

Relative error rate reductions of 28%, 39%, and 53% on 3, 5, and 10 utterances compared to baseline

[Figure: ID error rate (%) vs. # of test utterances for GMM, Speaker Adaptive (SA), and GMM+SA; labeled points: 11.6%, 5.5%, 14.3%, 10.3%, 13.1%, 7.4%]

Multi-modal Interfaces

Multimodal interfaces will enable more natural, flexible, efficient,

and robust human-computer interaction

Natural: Requires no special training

Flexible: Users select preferred modalities

Efficient: Language and gestures can be simpler than in uni-modal

interfaces (e.g., Oviatt and Cohen, 2000)

Robust: Inputs are complementary and consistent

Audio and visual signals both contain information about:

Identity of the person: Who is talking?

Linguistic message: What are they saying?

Emotion, mood, stress, etc.: How do they feel?

Integration of these cues can lead to enhanced capabilities for

future human computer interfaces

Face/Speaker ID on a Handheld Device

An iPaq handheld with Audio/Video Input/Output has been

developed as part of MIT Project Oxygen

Presence of multiple-input channels enables multi-modal

verification schemes

Prototype system uses a login scenario

Snap frontal face image

State name

Recite prompted lock combination phrase

System accepts or rejects user

Face Identification Approach

Face Detection by Compaq/HP

(Viola/Jones, CVPR 2001)

Efficient cascade of classifiers

Face Recognition by MIT AI

Lab/CBCL (Heisele et al, ICCV 2001)

Based on Support Vector Machines

(SVM)

Runtime face recognition: score

image against each SVM classifier

Implemented on iPaq handheld as

part of MIT Project Oxygen (E.

Weinstein, K. Steele, P. Ho, D.

Dopson)

Combined Face/Speaker ID

Multi-modal user login verification experiment using iPaq

Enrollment data:

Training data collected from 35 enrolled users

100 facial images and 64 lock combination phrases per user

Test data:

16 face/image pairs from 25 enrolled users

10 face/image pairs from 20 non-enrolled imposters

Evaluation metric: verification equal error rate (EER)

Equal likelihood of false acceptances and false rejections

Fused system reduces equal error rate by 50%

| System | Equal Error Rate |
|---|---|
| Face ID Only | 7.30% |
| Speech ID Only | 1.77% |
| Fused System | 0.89% |
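
For readers unfamiliar with the metric, the sketch below shows one simple way to estimate an equal error rate from verification scores, and checks the relative reduction implied by the reported figures. The threshold sweep over observed scores, the function names, and the toy scores are assumptions; the lecture's evaluation procedure is not described in that detail.

```python
def error_rates(genuine, impostor, threshold):
    """Accept when score >= threshold. Returns (false accept rate, false reject rate)."""
    frr = sum(1 for s in genuine if s < threshold) / len(genuine)
    far = sum(1 for s in impostor if s >= threshold) / len(impostor)
    return far, frr

def equal_error_rate(genuine, impostor):
    """Sweep thresholds over the observed scores and return (EER estimate, threshold)
    at the point where the two error rates are closest."""
    best = None
    for th in sorted(set(genuine) | set(impostor)):
        far, frr = error_rates(genuine, impostor, th)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2, th)
    return best[1], best[2]

# Toy verification scores (higher means more likely the claimed user).
genuine_scores = [0.9, 0.8, 0.7, 0.4]
impostor_scores = [0.1, 0.2, 0.3, 0.5]
eer, threshold = equal_error_rate(genuine_scores, impostor_scores)
print(eer, threshold)

# Relative EER reduction of the fused system over speech ID alone.
relative_reduction = (1.77 - 0.89) / 1.77
print(round(relative_reduction, 3))
```

The last computation gives roughly 0.497, matching the stated 50% reduction.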

How can we improve ASR performance?

Humans utilize facial expressions and gestures to augment

the speech signal

Facial cues can improve speech recognition in noise by up to

30 dB, depending on the task

Speech recognition performance can be improved by

incorporating facial cues (e.g., lip movements and mouth

opening)

Figure shows human recognition performance

At low signal-to-noise ratios

Presented with audio plus video and with audio only

Reference: Benoit, 1992

Audio Visual Speech Recognition (AVSR)

Integrate information about visual mouth/lip/jaw features with

features extracted from audio signal

Visual feature extraction:

Region of Interest (ROI): mostly lips and mouth; some tracking

Features: pixel-, geometric-, or shape-based

Almost all systems need to locate and track landmark points

Correlation and motion information not used explicitly

Example of pixel-based features (Covell & Darrell, 1999)

AVSR: Preliminary Investigations

Goal: integration with SUMMIT ASR system

Visually-derived measurements based on optical flow

Low-dimensional features represent opening & elongation

Issues with Audio/Visual Integration

Early vs. Late Integration

Early: concatenate feature vectors from different modes

Late: combine outputs of uni-modal classifiers

* Can be at many levels (phone, syllable, word, utt)

Channel Weighting Schemes

Audio channel usually provides more information

Based on SNR estimate for each channel

Preset weights by optimizing the error rate of a dev. set

Estimate separate weights for each phoneme or viseme

Modeling the audio/visual asynchrony

Many visual cues occur before the phoneme is actually pronounced

Example: rounding lips before producing rounded phoneme
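
Late integration with a preset channel weight can be sketched in a few lines. The log-linear combination, the weight value, and the toy scores for two acoustically similar but visually distinct phones are assumptions for illustration only.

```python
def late_fusion(audio_loglik, visual_loglik, audio_weight):
    """Weighted sum of per-class log-likelihoods from two uni-modal classifiers.
    audio_weight is in [0, 1]; the visual channel gets the remainder."""
    fused = {c: audio_weight * audio_loglik[c]
                + (1.0 - audio_weight) * visual_loglik[c]
             for c in audio_loglik}
    return max(fused, key=fused.get), fused

# Toy scores: the audio channel slightly prefers "d", the visual channel
# strongly prefers "b" (visible lip closure).
audio = {"b": -2.0, "d": -1.8}
visual = {"b": -0.5, "d": -3.0}

fused_choice, fused_scores = late_fusion(audio, visual, audio_weight=0.7)
audio_only_choice, _ = late_fusion(audio, visual, audio_weight=1.0)
print(fused_choice, audio_only_choice)
```

In a weighting scheme based on SNR, `audio_weight` would be lowered as the audio channel gets noisier; tuning it on a development set corresponds to the preset-weight option above.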

AVSR: State of the Art

Example: Neti et al, 2000 (JHU Summer Workshop)

>10K word vocabulary

Training and development data: 264 subjects, 40 hours

Test data: 26 subjects, 2.5 hours

Quiet (19.5 dB SNR) and noisy (8.5 dB SNR) conditions

| Conditions | Clean WER (%) | Noisy WER (%) |
|---|---|---|
| Audio Only | 14.4 | 48.1 |
| AVSR | 13.5 | 35.3 |

Multi-modal Interaction Research

Understanding the science

How do humans do it (e.g. expressing cross modality context)?

What are the important cues?

Developing an architecture that can adequately describe the

interplays of modalities

[Figure: system components: Speech Recognition, Gesture Recognition, Handwriting Recognition, Mouth & Eyes Tracking, Language Understanding, Dialogue Manager, Multi-modal Generation; example display of a flight table]

| Airline | Flight | Departs | Arrives |
|---|---|---|---|
| US | 279 | 6:00 A.M. | 7:37 A.M. |
| NW | 317 | 6:50 A.M. | 8:27 A.M. |
| US | 1507 | 7:00 A.M. | 8:40 A.M. |
| NW | 1825 | 7:40 A.M. | 9:19 A.M. |
| DL | 4431 | 7:40 A.M. | 9:45 A.M. |

Multi-modal Interfaces

Timing information is a useful way to relate inputs

Does this mean "yes," "one," or something else?

Inputs need to be understood in the proper context

"Move this one over there"

Where is she looking or pointing at while saying "this" and "there"?

"Are there any over here?"

What does he mean by "any," and what is he pointing at?

Multi-modal Fusion: Initial Progress

All multi-modal inputs are synchronized

Speech recognizer generates absolute times for words

Mouse and gesture movements generate {x,y,t} triples

Speech understanding constrains gesture interpretation

Initial work identifies an object or a location from gesture inputs

Speech constrains what, when, and how items are resolved

Object resolution also depends on information from application

Speech: "Move this one over here"

Pointing: (object) (location)

[Figure: speech and pointing events aligned along a time axis]
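
One simple way to relate the two synchronized streams is to pair each deictic word with the pointing sample closest in time. The sketch below assumes exactly that nearest-in-time rule; the deictic word list, the function name, and the toy timings are hypothetical, and the actual system also uses speech understanding and application context.

```python
def resolve_deictics(words, gestures, deictic=("this", "that", "here", "there")):
    """words: list of (word, start, end) with absolute times in seconds.
    gestures: list of (x, y, t) triples from mouse or pointing input.
    Pairs each deictic word with the gesture sample nearest its midpoint."""
    resolved = []
    for word, start, end in words:
        if word in deictic and gestures:
            mid = (start + end) / 2
            x, y, _t = min(gestures, key=lambda g: abs(g[2] - mid))
            resolved.append((word, (x, y)))
    return resolved

# Toy recognizer output and pointing samples.
words = [("move", 0.0, 0.3), ("this", 0.3, 0.5), ("one", 0.5, 0.7),
         ("over", 0.7, 0.9), ("here", 0.9, 1.2)]
gestures = [(120, 80, 0.42), (125, 82, 0.60), (400, 300, 1.05)]

resolved = resolve_deictics(words, gestures)
print(resolved)
```

Here "this" would be resolved to an object near (120, 80) and "here" to the location (400, 300).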

Multi-modal Demonstration

Manipulating planets in a

solar-system application

Continuous tracking of

mouse or pointing gesture

Created w. SpeechBuilder

utility with small changes

(Cyphers, Glass, Toledano &

Wang)

Standalone version runs with

mouse/pen input

Can be combined with gestures determined from vision (Darrell & Demirdjian)

Recent Activities: Multi-modal Server

General issues:

Common meaning representation

Semantic and temporal compatibility

Meaning fusion mechanism

Handling uncertainty

[Figure: system components: Speech Recognition, Language Understanding, Speech Understanding, Gesture Understanding, Pen-based Understanding, Multi-modal Server, Context Resolution, Dialogue Management, Output Generation]

Summary

Speech carries paralinguistic content:

Prosody, intonation, stress, emphasis, etc.

Emotion, mood, attitude, etc.

Speaker specific characteristics

Multi-modal interfaces can improve upon speech-only systems

Improved person identification using facial features

Improved speech recognition using lip-reading

Natural, flexible, efficient, and robust human-computer interaction

References

C. Benoit, "The intrinsic bimodality of speech communication and the synthesis of talking faces," Journal on Communications, September 1992.

M. Covell and T. Darrell, "Dynamic occluding contours: A new external-energy term for snakes," CVPR, 1999.

F. Dellaert, T. Polzin, and A. Waibel, "Recognizing emotion in speech," ICSLP, 1998.

B. Heisele, P. Ho, and T. Poggio, "Face recognition with support vector machines: Global versus component-based approach," ICCV, 2001.

T. Huang, L. Chen, and H. Tao, "Bimodal emotion recognition by man and machine," ATR Workshop on Virtual Communication Environments, April 1998.

C. Neti, et al., "Audio-visual speech recognition," Tech Report CLSP/Johns Hopkins University, 2000.

S. Oviatt and P. Cohen, "Multimodal interfaces that process what comes naturally," Comm. of the ACM, March 2000.

A. Park and T. Hazen, "ASR dependent techniques for speaker identification," ICSLP, 2002.

D. Reynolds, "Speaker identification and verification using Gaussian mixture speaker models," Speech Communication, August 1995.

P. Viola and M. Jones, "Rapid object detection using a boosted cascade of simple features," CVPR, 2001.

C. Wang, "Prosodic modeling for improved speech recognition and understanding," PhD thesis, MIT, 2001.

E. Weinstein, et al., "Handheld face identification technology in a pervasive computing environment," Pervasive 2002.

Vous aimerez peut-être aussi

- Spoken Language Understanding: Systems for Extracting Semantic Information from SpeechD'EverandSpoken Language Understanding: Systems for Extracting Semantic Information from SpeechPas encore d'évaluation

- Automatic Speech RecognitionDocument34 pagesAutomatic Speech RecognitionRishabh PurwarPas encore d'évaluation

- Presentation On Speech RecognitionDocument11 pagesPresentation On Speech Recognitionaditya_4_sharmaPas encore d'évaluation

- Advanced Topics in Speech Processing (IT60116) : K Sreenivasa Rao School of Information Technology IIT KharagpurDocument17 pagesAdvanced Topics in Speech Processing (IT60116) : K Sreenivasa Rao School of Information Technology IIT KharagpurArjya SarkarPas encore d'évaluation

- A Brief Introduction To Automatic Speech RecognitionDocument22 pagesA Brief Introduction To Automatic Speech RecognitionPham Thanh PhuPas encore d'évaluation

- Language Modeling Lecture1112Document47 pagesLanguage Modeling Lecture1112jeysamPas encore d'évaluation

- Natural Language ProcessingDocument57 pagesNatural Language Processingআশফাকুর রহমান আরজুPas encore d'évaluation

- Digital Speech ProcessingDocument46 pagesDigital Speech ProcessingprabhaPas encore d'évaluation

- Voice RecognitionDocument31 pagesVoice RecognitionAmrita052760% (5)

- NLP ADocument6 pagesNLP ARAMYA KANAGARAJ AutoPas encore d'évaluation

- Natural Language ProcessingDocument27 pagesNatural Language ProcessingAbhishek SainiPas encore d'évaluation

- Speech Recognition ArchitectureDocument13 pagesSpeech Recognition ArchitectureDhrumil DasPas encore d'évaluation

- SpeechLab - Speech Verification System OverviewDocument17 pagesSpeechLab - Speech Verification System OverviewAmrPas encore d'évaluation

- Speech Recognition SeminarDocument19 pagesSpeech Recognition Seminargoing12345Pas encore d'évaluation

- Design and ImplementationDocument74 pagesDesign and ImplementationEmPas encore d'évaluation

- Term Paper ECE-300 Topic: - Speech RecognitionDocument14 pagesTerm Paper ECE-300 Topic: - Speech Recognitionhemprakash_dev53Pas encore d'évaluation

- Speech RecognitionDocument4 pagesSpeech RecognitionDinesh ChoudharyPas encore d'évaluation

- NLP TopperDocument71 pagesNLP Toppertejal palwankarPas encore d'évaluation

- Natural Language Processing: by Dr. Parminder KaurDocument26 pagesNatural Language Processing: by Dr. Parminder KaurRiya jainPas encore d'évaluation

- Speech Recognition (Dr. M. Sabarimalai ManikandanDocument2 pagesSpeech Recognition (Dr. M. Sabarimalai ManikandanasmmjanPas encore d'évaluation

- IT Report-1Document14 pagesIT Report-1khushalPas encore d'évaluation

- Ctopp Presentation - Second Version - ModifiedDocument22 pagesCtopp Presentation - Second Version - Modifiedapi-163017967Pas encore d'évaluation

- Natural Language Processing: Dr. Abdulfetah A.ADocument25 pagesNatural Language Processing: Dr. Abdulfetah A.AJemal YayaPas encore d'évaluation

- AI6122 Topic 1.2 - WordLevelDocument63 pagesAI6122 Topic 1.2 - WordLevelYujia TianPas encore d'évaluation

- Feature Extraction Using PCADocument36 pagesFeature Extraction Using PCAKamaraj NaiduPas encore d'évaluation

- English - Afaan Oromoo Machine Translation: An Experiment Using A Statistical ApproachDocument17 pagesEnglish - Afaan Oromoo Machine Translation: An Experiment Using A Statistical ApproachChristine GhaliPas encore d'évaluation

- 11-682: Introduction To Human Language Technologies: Speech Synthesis IDocument25 pages11-682: Introduction To Human Language Technologies: Speech Synthesis IRavi Kumar SinhaPas encore d'évaluation

- Introduction To Natural Language ProcessingDocument45 pagesIntroduction To Natural Language ProcessingMahesh YadavPas encore d'évaluation

- Language ThesisDocument14 pagesLanguage Thesisneskic86Pas encore d'évaluation

- GGDSD College: Speech RecognitionDocument33 pagesGGDSD College: Speech RecognitionDeep SainiPas encore d'évaluation

- NLP Part1Document67 pagesNLP Part1QADEER AHMADPas encore d'évaluation

- IntroductionDocument49 pagesIntroductionsaisuraj1510Pas encore d'évaluation

- Lecture 1 Text Preprocessing PDFDocument29 pagesLecture 1 Text Preprocessing PDFAmmad AsifPas encore d'évaluation

- Automatic Speech RecognitionDocument30 pagesAutomatic Speech Recognitionanand_seshamPas encore d'évaluation

- Large Language Models: Dr. Asgari, Dr. Rohban, Soleymani Fall 2023Document53 pagesLarge Language Models: Dr. Asgari, Dr. Rohban, Soleymani Fall 2023caxoxi6154Pas encore d'évaluation

- 5th Sem SyllabusDocument13 pages5th Sem SyllabusBbushan YadavPas encore d'évaluation

- NLP For Social Media Lecture 5: Direct Processing of Social Media DataDocument35 pagesNLP For Social Media Lecture 5: Direct Processing of Social Media DataRaziAhmedPas encore d'évaluation

- Speech Recognition PresentationDocument36 pagesSpeech Recognition PresentationPrafull Agrawal100% (1)

- Speech Recognition Using Neural Networks: A. Types of Speech UtteranceDocument24 pagesSpeech Recognition Using Neural Networks: A. Types of Speech UtterancejwalithPas encore d'évaluation

- Enhancing Non-Native Accent Recognition Through A Combination of Speaker Embeddings, Prosodic and Vocal Speech FeaturesDocument13 pagesEnhancing Non-Native Accent Recognition Through A Combination of Speaker Embeddings, Prosodic and Vocal Speech FeaturessipijPas encore d'évaluation

- Speech Recognition1Document39 pagesSpeech Recognition1chayan_m_shah100% (1)

- Why We Study Automata TheoryDocument27 pagesWhy We Study Automata TheoryAnamika Maurya0% (1)

- Comparison:Four Approaches To Automatic Language Identification of Telephone SpeechDocument14 pagesComparison:Four Approaches To Automatic Language Identification of Telephone SpeechHuong LePas encore d'évaluation

- Mr. Sibananda Panda Mca 4 SemisterDocument18 pagesMr. Sibananda Panda Mca 4 SemisterAmber AgrawalPas encore d'évaluation

- Etman Paper1Document13 pagesEtman Paper1Sana IsamPas encore d'évaluation

- Speech Processing: Lecture 9 - Large Vocabulary Continuous Speech Recognition (LVCSR)Document32 pagesSpeech Processing: Lecture 9 - Large Vocabulary Continuous Speech Recognition (LVCSR)trlp1712Pas encore d'évaluation

- Unit - 5 Natural Language ProcessingDocument66 pagesUnit - 5 Natural Language ProcessingMeghaPas encore d'évaluation

- Introduction To Computational Linguistics: CS 5890 University of Colorado at Colorado SpringsDocument29 pagesIntroduction To Computational Linguistics: CS 5890 University of Colorado at Colorado SpringsWilia SiskaPas encore d'évaluation

- Report 123Document47 pagesReport 123Siva KrishnaPas encore d'évaluation

- Chapter 2 Part 1 & 2Document58 pagesChapter 2 Part 1 & 2S JPas encore d'évaluation

- LOT WS 2011 IntroductionDocument78 pagesLOT WS 2011 IntroductionJaven BPas encore d'évaluation

- Interactive Telecommunication Systems and Services: Ing. Stanislav Ondáš, PHDDocument35 pagesInteractive Telecommunication Systems and Services: Ing. Stanislav Ondáš, PHDDarkeye18Pas encore d'évaluation

- Natural Language Processing Notes by Prof. Suresh R. Mestry: L I L L L IDocument41 pagesNatural Language Processing Notes by Prof. Suresh R. Mestry: L I L L L Ini9khilduck.comPas encore d'évaluation

- Whitepaper How AI Speech Models WorkDocument18 pagesWhitepaper How AI Speech Models WorkVmpPas encore d'évaluation

- Natural Language ProcessingDocument49 pagesNatural Language ProcessingBharti Gupta100% (3)

- Electrical Engineering (2017-2021) Punjab Engineering College, Chandigarh - 160012Document23 pagesElectrical Engineering (2017-2021) Punjab Engineering College, Chandigarh - 160012202002025.jayeshsvmPas encore d'évaluation

- Developing Speech To Text Messaging System Using Android PlatformDocument31 pagesDeveloping Speech To Text Messaging System Using Android PlatformKyaw Myint NaingPas encore d'évaluation

- Lecture 6-1Document12 pagesLecture 6-1TibyanPas encore d'évaluation

- A Speech RecognitionDocument19 pagesA Speech RecognitionAsan D'VitaPas encore d'évaluation

- Implementation of Speech Synthesis System Using Neural NetworksDocument4 pagesImplementation of Speech Synthesis System Using Neural NetworksInternational Journal of Application or Innovation in Engineering & ManagementPas encore d'évaluation

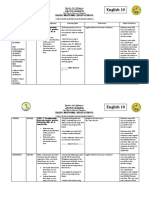
