
Benchmarks

Uploaded by PauloArmando


1

Performance of Application and System Benchmarks

2

Lecture Outline

The following topics will be discussed:

- Peak and sustained performance
- Benchmarks
- Classification of benchmarks
- Macro benchmarks
- Micro benchmarks
- Results of benchmarks on PARAM 10000

Application and System Benchmarks

3

Approaches to measuring performance

- Several approaches exist for measuring the performance of a computer system:
  - Summarize the key architectural issues of a system and relate them to engineering or design considerations rather than theoretical calculations
  - Observe run times for a defined set of programs

Performance Characteristics

4

Peak Performance

- Measured in MFLOPS
- The maximum number of operations that the hardware can execute in parallel or concurrently
- A rough hardware measure that reflects the cost of the system
- Rare instances of implication

Performance Characteristics: Peak Performance

5

Sustained Performance

- The highest MFLOPS rate that an actual program can achieve while doing something recognizably useful for a certain length of time
- It essentially provides an upper bound on what a programmer may be able to achieve
- Efficiency rate = achieved (sustained) performance divided by peak performance

Note: The advantage of this number is that it is independent of any absolute speed.
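As a small illustration of the efficiency rate defined above, the following Python sketch divides a sustained rate by a peak rate. The function name `efficiency_rate` and the sample figures are assumptions chosen for illustration; they are not measurements from this lecture.

```python
def efficiency_rate(sustained_mflops: float, peak_mflops: float) -> float:
    """Return sustained performance as a fraction of peak performance."""
    if peak_mflops <= 0:
        raise ValueError("peak performance must be positive")
    return sustained_mflops / peak_mflops


# Illustrative (made-up) figures: 1600 MFLOPS sustained on a 6400 MFLOPS peak machine.
print(efficiency_rate(1600.0, 6400.0))  # 0.25
```

Because the result is a ratio, two machines with very different absolute speeds can be compared on how well each one uses its own hardware.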

Performance Characteristics: Sustained Performance

6

Sustained Performance

How to measure the sustained performance of a parallel system?

- Use benchmarks
- A wide spectrum of benchmarks exists for parallel computers
- The system buyer chooses according to application requirements

Performance Characteristics: Sustained Performance

(Contd)

7

Benchmark

A benchmark is a test program that

- supposedly captures the processing and data movement characteristics of a class of applications
- is used to reveal the architectural weak and strong points of a system
- is significantly more reliable than peak performance numbers

Note: A benchmark measurement may sometimes be slanted because of an idiosyncrasy of the compiler.

Performance: Benchmarks

8

Benchmark

A benchmark suite = set of programs + set of rules

- The rules cover the platform, the input data, the output results, and the performance metrics
- A suite gives an idea of the performance and scalability of a parallel system
- Benchmarks can be full-fledged applications or just kernels

Performance: Benchmarks

(Contd)

9

Benchmark Classification

- Benchmarks can be classified according to application area:
  - Scientific computing
  - Commercial applications
  - Network services
  - Multimedia applications
  - Signal processing
- Benchmarks can also be classified as micro benchmarks and macro benchmarks

Performance: Benchmarks Classification

10

Micro Benchmarks

Micro benchmarks tend to be synthetic kernels. A micro benchmark measures a specific aspect of a computer system:

- CPU speed
- Memory speed
- I/O speed
- Operating system performance
- Networking

Performance: Micro Benchmarks

(Contd)

11

Micro Benchmarks

Representative micro benchmark suites

Name | Area
LINPACK, LAPACK, ScaLAPACK | Numerical computing (linear algebra)
LMBENCH | System calls and data movement operations in UNIX
STREAM | Memory bandwidth

Performance: Micro Benchmarks

(Contd)

12

Macro Benchmarks

- A macro benchmark measures the performance of a computer system as a whole.
- It compares different systems with respect to an application class, and is useful for the system buyer.
- However, macro benchmarks do not reveal why a system performs well or badly.

Performance: Macro Benchmarks

13

Macro Benchmarks

Name | Area
NAS | Parallel computing (CFD)
PARKBENCH | Parallel computing
SPEC | A mixed benchmark family
Splash | Parallel computing
STAP | Signal processing
TPC | Commercial applications

Performance: Macro Benchmarks

(Contd)

14

Micro Benchmarks

The LINPACK

- The LINPACK benchmark was created and is maintained by Jack Dongarra at the University of Tennessee.
- It is a collection of Fortran subroutines that solve linear systems of equations and linear least-squares problems.
- Matrices can be general, banded, symmetric indefinite, symmetric positive definite, triangular, and tridiagonal square.
- LINPACK has been modified to ScaLAPACK for distributed-memory parallel computers.

Micro Benchmarks: LINPACK

15

Objective: Measure the performance of solving a system of linear equations (LU factorization) using MPI or PVM on a MIMD machine.

- One can use LINPACK (ScaLAPACK version) maintained by Jack Dongarra, University of Tennessee
- Estimated performance equations exist, but one should know the following characteristics of the parallel computer:
  - Algorithm time
  - Communication time
  - Computation time
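To make the role of computation time and communication time concrete, here is a minimal sketch of how an estimated Mflop/s figure could be derived for an LU-based solve. It assumes the commonly quoted LINPACK operation count of 2n^3/3 + 2n^2 and assumes that computation and communication do not overlap; the function name and the timings are hypothetical.

```python
def estimated_mflops(n: int, compute_seconds: float, comm_seconds: float) -> float:
    """Estimate Mflop/s for solving an n x n dense system by LU factorization.

    Assumption: nominal operation count 2n^3/3 + 2n^2, and total time equal to
    computation time plus communication time (no overlap).
    """
    flops = (2.0 * n**3) / 3.0 + 2.0 * n**2
    total_seconds = compute_seconds + comm_seconds
    return flops / total_seconds / 1.0e6


# Hypothetical timings: 0.8 s computing and 0.2 s communicating for n = 1000.
print(round(estimated_mflops(1000, 0.8, 0.2), 2))
```

The sketch shows why communication time matters: with the same computation time, any extra communication time lowers the reported rate directly.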

Micro Benchmarks: LINPACK

(Contd)

16

LINPACK : Optimization

- Arrangement of data in the local memory of each process
- Use of hierarchical memory features for uniprocessor code performance
- Arrangement of matrix elements within each block
- Matrix blocks in the local memory
- Data in each block should be contiguous in physical memory
- When the message startup cost is sufficiently large, it is preferable to send data as one large message rather than as several smaller messages
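The preference for one large message can be illustrated with a simple cost model in which each message pays a fixed startup cost plus a per-byte transfer cost. The model, the function name, and the startup and bandwidth values below are assumptions for illustration, not measurements from PARAM 10000.

```python
def transfer_time(message_bytes: int, startup_s: float, bandwidth_bytes_per_s: float) -> float:
    """Time for one message under the model: startup cost + bytes / bandwidth."""
    return startup_s + message_bytes / bandwidth_bytes_per_s


STARTUP_S = 50e-6      # assumed per-message startup cost (50 microseconds)
BANDWIDTH = 100e6      # assumed asymptotic bandwidth (100 MB/s)

one_large = transfer_time(8 * 1024, STARTUP_S, BANDWIDTH)
many_small = 8 * transfer_time(1024, STARTUP_S, BANDWIDTH)

print(f"one 8 KB message   : {one_large * 1e6:.1f} us")
print(f"eight 1 KB messages: {many_small * 1e6:.1f} us")
```

Under this model the same bytes are moved either way; the eight small messages simply pay the startup cost seven extra times.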

Micro Benchmarks: LINPACK

(Contd)

17

LINPACK : Optimization

- Overlapping communication and computation
- Tradeoff between load imbalance and communication
- Portability and ease of code maintenance
- Assignment of processes to processors (topology)

Micro Benchmarks: LINPACK

(Contd)

18

Micro Benchmarks

LAPACK (used for SMP node performance)

- Used to obtain sustained performance on one SMP node for numerical linear algebra computations
- Highly tuned libraries of matrix computations may yield good performance for LAPACK
- Exploit BLAS Levels 1, 2, and 3 to get performance
- Underlying concept: use block-partitioned algorithms to minimize data movement between different levels of the memory hierarchy
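A block-partitioned algorithm can be sketched with a plain matrix multiply that works on small tiles, so that each tile is reused while it is still in fast memory. This is an illustrative pure-Python sketch, not LAPACK or BLAS code; the function name and the default block size are assumptions.

```python
def blocked_matmul(a, b, block=2):
    """Multiply matrices a (n x m) and b (m x p) tile by tile."""
    n, m, p = len(a), len(b), len(b[0])
    c = [[0.0] * p for _ in range(n)]
    # Loop over tiles first, then over the elements inside each tile.
    for ii in range(0, n, block):
        for kk in range(0, m, block):
            for jj in range(0, p, block):
                for i in range(ii, min(ii + block, n)):
                    for k in range(kk, min(kk + block, m)):
                        a_ik = a[i][k]
                        for j in range(jj, min(jj + block, p)):
                            c[i][j] += a_ik * b[k][j]
    return c


a = [[1, 2, 3], [4, 5, 6]]
b = [[7, 8], [9, 10], [11, 12]]
print(blocked_matmul(a, b))  # [[58.0, 64.0], [139.0, 154.0]]
```

The arithmetic is identical to the unblocked triple loop; only the order of the memory accesses changes, which is the point of the technique.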

Micro Benchmarks: LAPACK

19

Micro Benchmarks

LAPACK (used for SMP node configuration)

- The goal is to make the EISPACK and LINPACK libraries run efficiently on shared-memory architectures
- LAPACK can be regarded as a successor to LINPACK and EISPACK
- LAPACK gives good performance on current hierarchical-memory computing systems by:
  - Reorganizing the algorithms to use block operations for matrix computations
  - Optimizing these operations for each architecture to account for the memory hierarchy

Micro Benchmarks: LAPACK

(Contd)

20

ScaLAPACK (Parallel LAPACK)

- A library of functions for solving numerical linear algebra problems on distributed-memory systems
- It is based on the LAPACK library; ScaLAPACK stands for Scalable LAPACK
- LAPACK obtains both portability and high performance by relying on another library, the BLAS (Basic Linear Algebra Subprograms)
- The BLAS performs common operations such as dot product, matrix-vector product, and matrix-matrix product

Micro Benchmarks: ScaLAPACK

21

ScaLAPACK (Parallel LAPACK)

The separate libraries are:

- PBLAS (Parallel BLAS)
- BLACS (Basic Linear Algebra Communication Subprograms)

- To map matrices and vectors to processes, the libraries (BLACS, PBLAS, and ScaLAPACK) rely on the complementary concepts of process grid and block-cyclic mapping
- The libraries create a virtual rectangular grid of processes, much like a topology in MPI, to map the matrices and vectors to physical processors
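The block-cyclic mapping onto a process grid can be sketched as an index calculation. The sketch below assumes zero-based indices and that the first block lives on process 0 of each grid dimension; the function names are hypothetical and are not part of the BLACS, PBLAS, or ScaLAPACK interfaces.

```python
def block_cyclic_owner(global_index: int, block_size: int, num_procs: int):
    """Map a global index to (owning process, local index) in one dimension."""
    block = global_index // block_size          # which block the index falls in
    owner = block % num_procs                   # blocks are dealt out round-robin
    local_index = (block // num_procs) * block_size + global_index % block_size
    return owner, local_index


def owner_2d(i: int, j: int, mb: int, nb: int, prow: int, pcol: int):
    """Grid coordinates of the process owning matrix element (i, j)."""
    return block_cyclic_owner(i, mb, prow)[0], block_cyclic_owner(j, nb, pcol)[0]


# Eight rows, block size 2, two process rows: ownership alternates in pairs.
print([block_cyclic_owner(g, 2, 2)[0] for g in range(8)])  # [0, 0, 1, 1, 0, 0, 1, 1]
print(owner_2d(5, 3, 2, 2, 2, 3))                          # (0, 1)
```

Dealing blocks out cyclically, rather than giving each process one contiguous slab, keeps the work balanced as a factorization shrinks the active part of the matrix.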

Micro Benchmarks: ScaLAPACK

(Contd)

22

Micro Benchmarks: ScaLAPACK

[Figure: ScaLAPACK software layers on PARAM 10000. Components shown: ScaLAPACK; LAPACK; PB-BLAS (Parallel BLAS); optimised BLACS libraries for PARAM 10000; highly tuned Sun Performance libraries for SMPs (BLAS 1, 2, 3); communication primitives (MPI, CDAC-MPI).]

(Contd)

23

- The LMbench benchmark suite is maintained by Larry McVoy of SGI.
  - It focuses attention on the basic building blocks behind many common performance issues in computing systems
  - It is a portable benchmark that attempts to measure the most commonly found performance bottlenecks in a wide range of system applications: latency and bandwidth of data movement among processors, memory, network, file system, and disk
  - It is a simple yet very useful tool for identifying performance bottlenecks and for the design of a system. It takes no advantage of SMP systems; it is meant to be a uniprocessor benchmark

Micro Benchmarks: LMbench

24

- Bandwidth: memory bandwidth; cached I/O bandwidth
- Latency: memory read latency; memory write latency
- Signal handling cost
- Process creation costs; null system call; context switching (to measure system overheads)
- IPC latency: pipe, TCP/RPC/UDP latency; file system latency; disk latency
- Memory latencies in nanoseconds (smaller is better):

Clock speed (MHz) | L1 cache | L2 cache | Main memory
292 | 6 | 33 | 249
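The memory latencies listed above (6, 33, and 249 ns at a 292 MHz clock) are easier to compare when converted to processor clock cycles. The conversion below is a simple sketch; the function name is an assumption, and the inputs are the values from the listing.

```python
def latency_in_cycles(latency_ns: float, clock_mhz: float) -> float:
    """Convert a latency in nanoseconds to clock cycles at the given clock rate."""
    # cycles = seconds * Hz = (ns * 1e-9) * (MHz * 1e6) = ns * MHz / 1000
    return latency_ns * clock_mhz / 1000.0


CLOCK_MHZ = 292
for level, ns in [("L1 cache", 6), ("L2 cache", 33), ("Main memory", 249)]:
    print(f"{level:12s} {ns:4d} ns = {latency_in_cycles(ns, CLOCK_MHZ):6.1f} cycles")
```

Expressed this way, a main memory access costs roughly 73 cycles on this system, against under 2 cycles for the L1 cache.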

Micro Benchmarks: LMbench

(Contd)

25

- STREAM is a simple synthetic benchmark maintained by John McCalpin of SGI.
  - It measures sustainable memory bandwidth (in MB/s) and the corresponding computation rate
  - The motivation for developing the STREAM benchmark is that processors are getting faster more quickly than memory, so more programs will be limited in performance by memory bandwidth rather than by processor speed
  - The benchmark is designed to work with data sets much larger than the available cache
  - The STREAM benchmark performs four operations for a number of iterations with unit-stride access

Micro Benchmarks: STREAM

26

Micro Benchmarks: STREAM

Name | Code | Bytes/iteration | Flops/iteration
COPY | a(i) = b(i) | 16 | 0
SCALE | a(i) = q x b(i) | 16 | 1
SUM | a(i) = b(i) + c(i) | 24 | 1
TRIAD | a(i) = b(i) + q x c(i) | 24 | 2

- Machine balance metric = peak floating-point rate (flop/s) / sustained TRIAD memory bandwidth (word/s)
- The machine balance metric can be interpreted as the number of flops that can be executed in the time it takes to read or write one word.
- The machine balance values of many systems have been increasing over the years, implying that memory bandwidth lags more and more behind processor speed.
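The machine balance metric can be computed as in the following sketch. It assumes 8-byte words, and the peak and bandwidth figures are illustrative round numbers rather than values measured on any particular system; the function name is also an assumption.

```python
def machine_balance(peak_flops_per_s: float, triad_bytes_per_s: float, word_bytes: int = 8) -> float:
    """Peak flop/s divided by sustained TRIAD bandwidth expressed in words/s."""
    triad_words_per_s = triad_bytes_per_s / word_bytes
    return peak_flops_per_s / triad_words_per_s


# Illustrative figures: 800 Mflop/s peak, 200 MB/s sustained TRIAD bandwidth.
print(machine_balance(800e6, 200e6))  # 32.0
```

A balance of 32 would mean the processor could execute 32 floating-point operations in the time it takes memory to deliver a single word.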

(Contd)

27

Micro Benchmarks: STREAM

Stream Results on PARAM 10000

Array size = 10000000
Your clock granularity/precision appears to be 1 microseconds.

Function | Rate (MB/s) | RMS time | Min time | Max time
Copy | 206.6193 | 0.7816 | 0.7744 | 0.7958
Scale | 206.9323 | 0.7858 | 0.7732 | 0.8030
Add | 211.0202 | 1.1485 | 1.1373 | 1.1730
Triad | 220.6552 | 1.0994 | 1.0877 | 1.1222
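The reported rates can be cross-checked against the bytes-per-iteration figures for the four kernels. The sketch below assumes that each rate is derived from the minimum time, that 1 MB is 10^6 bytes, and that the Add row corresponds to the SUM kernel; the variable and function names are illustrative.

```python
# Bytes moved per loop iteration for each kernel (8-byte words).
BYTES_PER_ITERATION = {"Copy": 16, "Scale": 16, "Add": 24, "Triad": 24}

ARRAY_SIZE = 10_000_000  # array size from the results listing

# Minimum times (seconds) and reported rates (MB/s) from the results listing.
MIN_TIME_S = {"Copy": 0.7744, "Scale": 0.7732, "Add": 1.1373, "Triad": 1.0877}
REPORTED_MB_S = {"Copy": 206.6193, "Scale": 206.9323, "Add": 211.0202, "Triad": 220.6552}


def stream_rate_mb_s(kernel: str) -> float:
    """Bandwidth in MB/s: total bytes moved divided by the minimum time."""
    total_bytes = BYTES_PER_ITERATION[kernel] * ARRAY_SIZE
    return total_bytes / MIN_TIME_S[kernel] / 1.0e6


for kernel in BYTES_PER_ITERATION:
    print(f"{kernel:6s} computed {stream_rate_mb_s(kernel):9.4f}  reported {REPORTED_MB_S[kernel]:9.4f}")
```

The computed values agree with the reported rates to within the rounding of the printed times, which supports the assumptions stated above.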

(Contd)

28

- LLCbench is used to determine the efficiency of the various sub-systems that affect the performance of an application.
- The sub-systems are:
  - Memory
  - Parallel processing environment
  - System libraries

LLCBench

29

- Evaluate compiler efficiency by comparing the performance of the reference BLAS with the vendor's hand-tuned BLAS
- Evaluate the performance of vendor-provided BLAS routines in MFLOPS
- Provide information for performance modeling of applications that make heavy use of BLAS
- Validate vendors' claims about the numerical performance of their processors
- Compare against peak cache performance to establish whether the bottleneck is memory or CPU

LLC : BLAS Bench

30

- Evaluates the performance of the memory hierarchy of a computer system
- Focuses on the multiple levels of cache
- Measures raw bandwidth in MB/s
- Combines 8 different benchmarks on cache and memory

LLC : Cache Bench

31

- Evaluate the performance of MPI
- Use a flexible and portable framework so that the benchmark can be run over any message-passing layer
- Interpretation of results is left to the user
- Tests eight different MPI calls: Bandwidth; Roundtrip; Application Latency; Broadcast; Reduce; All Reduce; Bidirectional Bandwidth; All to All

LLC : MPI Bench - Goals

32

The NPB suite

- The NAS Parallel Benchmarks (NPB) are developed and maintained by the Numerical Aerodynamic Simulation (NAS) program at NASA Ames Research Center.
- They capture the computation and data movement characteristics of large-scale Computational Fluid Dynamics (CFD) applications.
- The benchmarks are EP, MG, CG, FT, IS, LU, BT, and SP.

Macro Benchmarks : NAS

33

PARKBENCH

- PARKBENCH stands for PARallel Kernels and BENCHmarks
- General features:
  - For distributed-memory multicomputers and shared-memory architectures, coded in Fortran 77
  - Supports PVM or MPI for message passing
  - Fortran 90 and HPF versions
- Types:
  - Low-level benchmarks
  - Kernel benchmarks
  - Compact application and HPF compiler benchmarks

Macro Benchmarks: PARKBENCH

34

P-COMS: Communication Overhead Measurement Suites developed by C-DAC

- Point-to-point communication calls in MPI
- Collective communication: Broadcast, Gather, Scatter, Total Exchange, Circular Shift
- Collective communication and computation: Barrier, Reduction, Scan, All-to-All, Non-Uniform Data

Communication Overheads : P-COMS

35

P-COMS

- Measuring point-to-point communication (ping-pong test) between two nodes
- Measuring point-to-point communication involving n nodes (hot-potato test or fire-brigade test)
- Measuring collective communication performance
- Measuring collective communication and computation performance

Note: MPI provides a rich set of library calls for point-to-point communication and for collective communication and computation.

Communication Overheads : P-COMS

(Contd)

36

P-COMS

Interpretation of overhead measurement data

- Method 1: Present the results in tabular form
- Method 2: Present the data as a curve
- Method 3: Present the data using a simple, closed-form expression
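One way to obtain a simple closed-form expression is to fit measured message times to a startup-plus-bandwidth model, t(m) = t0 + m / r. The following sketch uses an ordinary least-squares line fit; the model choice, the function name, and the synthetic data are assumptions for illustration and are not P-COMS output.

```python
def fit_latency_bandwidth(sizes_bytes, times_s):
    """Least-squares fit of t(m) = t0 + m / r; returns (t0 in s, r in bytes/s)."""
    n = len(sizes_bytes)
    mean_x = sum(sizes_bytes) / n
    mean_y = sum(times_s) / n
    sxx = sum((x - mean_x) ** 2 for x in sizes_bytes)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(sizes_bytes, times_s))
    slope = sxy / sxx                    # seconds per byte
    intercept = mean_y - slope * mean_x  # startup time t0
    return intercept, 1.0 / slope


# Synthetic data generated from t0 = 50 us and r = 100 MB/s.
sizes = [1024, 4096, 16384, 65536]
times = [50e-6 + m / 100e6 for m in sizes]
t0, r = fit_latency_bandwidth(sizes, times)
print(f"t0 = {t0 * 1e6:.1f} us, r = {r / 1e6:.1f} MB/s")
```

Two fitted numbers then summarize a whole table or curve of measurements, which makes it easier to compare networks or message-passing libraries.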

Communication Overheads : P-COMS

(Contd)

37

Shared memory: At node level; nodes run replicated UNIX OS
Architecture type: Cluster of SMPs
Nodes: E250 Enterprise Server, 400 MHz dual CPU
Peak computing power: 6.4 Gigaflops
Aggregate main memory: 2.0 Gbytes
Aggregate storage: 8.0 Gbytes
System software: HPCC
Networks: High-bandwidth, low-latency SANs (PARAMNet and FastEthernet)
Message passing library: MPI
Compilers and tools: Sun Workshop

PARAM 6.4 GF - Consolidated Specifications

38

- Shared memory at node level
- Nodes run replicated UNIX OS
- Nodes connected by low-latency, high-throughput System Area Networks: PARAMNet, FastEtherNet, MyriNet
- Standard Message Passing Interface (MPI) and CDAC-MPI
- C-DAC High Performance Computing and Communication software for parallel program development and run-time support

PARAM 10000 Configuration of 8 Processors

39

LAPACK performance (used for SMP node configuration)

- The performance of several other LAPACK routines varies from 250 Mflop/s to 550 Mflop/s
- 700 Mflop/s on one node with dual CPUs for some matrix computations (BLAS libraries)

Note: Sun Performance Computing Libraries have been used. The performance in Mflop/s can be improved by the choice of matrix size and band size, and by using several options of the Sun Performance libraries.

(Contd)

Micro Benchmarks: LAPACK

40

Performance of Round Trip Communication Primitive

(Contd)

Communication Overheads: P-COMS

41

Performance of Round Trip Communication Primitive

(Contd)

Communication Overheads: P-COMS

42

Performance of Global Scatter Communication Primitive

(Contd)

Communication Overheads: P-COMS

43

Performance of Global Scatter Communication Primitive

(Contd)

Communication Overheads: P-COMS

44

Performance of Allreduce Communication Primitive

(Contd)

Communication Overheads: P-COMS

45

Performance of Allreduce Communication Primitive

(Contd)

Communication Overheads: P-COMS

46

Results of Selective ScaLAPACK routines

XSGBLU: Single Precision Banded LU factorization and Solve

Processors used | Matrix size/Block size | Mflop/s achieved (*), AM over PARAMNet using CMPI | Mflop/s achieved (*), FastEtherNet (TCP/IP) using mpich
08 (D) | 7400/100 | 2275.14 | 2059.23
08 (D) | 7400/80 | 2383.51 | 2166.93
04 (S) | 5800/120 | 1334.97 | 1141.35

(*) Indicates that optimization is in progress and further improvement in performance can be achieved. S indicates that a single processor of one node is used, and D indicates that both processors have been used for computation.

(Contd)

Micro Benchmarks: ScaLAPACK

47

Results of Selective ScaLAPACK routines

XSLLT: Single Precision Cholesky factorization and Solve

Processors used | Matrix size/Block size | Mflop/s achieved (*), AM over PARAMNet using CMPI | Mflop/s achieved (*), FastEtherNet (TCP/IP) using mpich
08 (D) | 1240/20 | 1033.55 | 626.27
08 (D) | 1240/30 | 1052.15 | 665.77

(*) Indicates that optimization is in progress and further improvement in performance can be achieved. D indicates that both processors have been used for computation.

(Contd)

Micro Benchmarks: ScaLAPACK

48

Results of LINPACK

Processors used | Matrix size/Block size | Mflop/s achieved (*), AM over PARAMNet using CMPI | Mflop/s achieved (*), FastEtherNet (TCP/IP) using mpich
08 (D) | 10000/50 | 3180.79 | 1749.83
08 (D) | 8000/40 | 3100.68 | 1899.74

(*) Indicates that optimization is in progress and further improvement in performance can be achieved. D indicates that both processors have been used for computation.

(Contd)

Micro Benchmarks: LINPACK

49

- One node, 1 CPU: 190 Mflop/s
- One node, 2 CPUs: 363 Mflop/s
- One node, 4 CPUs: 645 Mflop/s

4484 3500 12000/60 32(8,4) (SS)

3256 2677 12000/80 24(8,3) (SS)

16(8,2) (SS)

16 (4,4) (SS)

8 (1,8) (SS)

Processors

Used (SS =

single Switch)

7808/64

6000/40

4000/60

Matrixsize/

BlockSize

1945

FastEtherNet

using mpich

Mflop/s Achieved ()

2347

2100

1219

HPCC

(KSHIPRA)

System configuration : 300 MHz (quad CPU) Solaris 2.6

LINPACK : hplbench on PARAM 10000
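The single-node figures on this slide (190, 363, and 645 Mflop/s on 1, 2, and 4 CPUs) can be turned into speedup and parallel efficiency relative to the 1-CPU run. This is a small sketch of that arithmetic; the variable names are illustrative, and the rates are taken from the slide.

```python
# Mflop/s achieved on one node, keyed by the number of CPUs used.
NODE_MFLOPS = {1: 190.0, 2: 363.0, 4: 645.0}

baseline = NODE_MFLOPS[1]
speedup = {cpus: rate / baseline for cpus, rate in NODE_MFLOPS.items()}
efficiency = {cpus: speedup[cpus] / cpus for cpus in NODE_MFLOPS}

for cpus in sorted(NODE_MFLOPS):
    print(f"{cpus} CPU(s): speedup {speedup[cpus]:.2f}, efficiency {efficiency[cpus]:.2f}")
```

On these figures, parallel efficiency within the node falls from about 0.96 on 2 CPUs to about 0.85 on 4 CPUs.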

50

146.48 127.71 FT A

178.54 108.40 CG B

801.42 668.92

8 Processors 8 Processors

775.11 648.81

LU A

B

HPCC software

PARAMNet - CMPI

FastEtherNet

(TCP/IP) using

mpich

629.40

584.32

374.07

350.50

MG A

B

Mflop/s Achieved ( )

Description

of Routine

and Problem

Size

( ) Indicates that optimization is in progress and further improvement in

performance can be achieved

(Contd)

Micro Benchmarks: NAS

System Configuration : 400 MHz (Dual CPU) Solaris 2.6

PARAM 10000 - A cluster of SMPs

51

- Description and objective: parallelize GUES, a Computational Fluid Dynamics (CFD) application, on PARAM 10000 and extract performance in terms of Mflop/s.
- Rules: compiler options, code restructuring, and optimization of the code are allowed for extracting performance from the CFD code
- Performance, in terms of Mflop/s:
  - Performance of the GUES serial program
  - Performance of the parallel application with HPCC software (AM over PARAMNet using CDAC-MPI) and with FastEtherNet (TCP/IP) using mpich (version 1.1)

Third Party CFD Application

52

[Figure: Communication in CFD application. Panel 1: the master partitions the domain among Worker 1 to Worker 4. Panel 2: Worker 1 to Worker 4.]
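The master's partitioning step can be sketched as a one-dimensional split of grid cells into contiguous ranges, one per worker. This is a generic illustration under assumed names; it is not the decomposition used in the GUES code, whose details are not given here.

```python
def partition_domain(num_cells: int, num_workers: int):
    """Split cells 0..num_cells-1 into contiguous half-open ranges, one per worker."""
    base, extra = divmod(num_cells, num_workers)
    ranges = []
    start = 0
    for worker in range(num_workers):
        # The first `extra` workers take one additional cell to absorb the remainder.
        size = base + (1 if worker < extra else 0)
        ranges.append((start, start + size))
        start += size
    return ranges


print(partition_domain(384, 4))  # [(0, 96), (96, 192), (192, 288), (288, 384)]
```

Each worker then computes on its own range and exchanges only the boundary values with its neighbours, which keeps communication small relative to computation.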

53

Processors | Grid size | Mflop/s achieved (*), HPCC software (PARAMNet CMPI) | Mflop/s achieved (*), FastEtherNet (TCP/IP) using mpich
8 (D) | 384 x 16 x 16 | 1020 | 600
8 (D) | 192 x 16 x 16 | 1000 | 560

(*) Indicates that optimization is in progress and further improvement in performance can be achieved. D indicates two processors.

(Contd)

Third Party CFD Application

Single-processor optimization: compiler optimizations; code restructuring techniques; managing memory overheads.

MPI: packaging MPI library calls; using the proper MPI library calls.

System Configuration : 400 MHz (Dual CPU) Solaris 2.6

PARAM 10000 - A cluster of SMPs

54

[Figure: Efficiency Rates for Different Benchmarks. Bar chart of efficiency rate (y-axis, 0.0 to 0.40) for NAS, LAPACK, ScaLAPACK, LINPACK, and CFD (x-axis: description of benchmarks). Peak performance: 6.4 GF.]

55

Vous aimerez peut-être aussi

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeD'EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeÉvaluation : 4 sur 5 étoiles4/5 (5794)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreD'EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreÉvaluation : 4 sur 5 étoiles4/5 (1090)

- Never Split the Difference: Negotiating As If Your Life Depended On ItD'EverandNever Split the Difference: Negotiating As If Your Life Depended On ItÉvaluation : 4.5 sur 5 étoiles4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceD'EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceÉvaluation : 4 sur 5 étoiles4/5 (895)

- Grit: The Power of Passion and PerseveranceD'EverandGrit: The Power of Passion and PerseveranceÉvaluation : 4 sur 5 étoiles4/5 (588)

- Shoe Dog: A Memoir by the Creator of NikeD'EverandShoe Dog: A Memoir by the Creator of NikeÉvaluation : 4.5 sur 5 étoiles4.5/5 (537)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersD'EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersÉvaluation : 4.5 sur 5 étoiles4.5/5 (345)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureD'EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureÉvaluation : 4.5 sur 5 étoiles4.5/5 (474)

- Her Body and Other Parties: StoriesD'EverandHer Body and Other Parties: StoriesÉvaluation : 4 sur 5 étoiles4/5 (821)

- The Emperor of All Maladies: A Biography of CancerD'EverandThe Emperor of All Maladies: A Biography of CancerÉvaluation : 4.5 sur 5 étoiles4.5/5 (271)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)D'EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Évaluation : 4.5 sur 5 étoiles4.5/5 (121)

- The Little Book of Hygge: Danish Secrets to Happy LivingD'EverandThe Little Book of Hygge: Danish Secrets to Happy LivingÉvaluation : 3.5 sur 5 étoiles3.5/5 (400)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyD'EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyÉvaluation : 3.5 sur 5 étoiles3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)D'EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Évaluation : 4 sur 5 étoiles4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaD'EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaÉvaluation : 4.5 sur 5 étoiles4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryD'EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryÉvaluation : 3.5 sur 5 étoiles3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnD'EverandTeam of Rivals: The Political Genius of Abraham LincolnÉvaluation : 4.5 sur 5 étoiles4.5/5 (234)

- Haldimann - Structural Use of Glass BookDocument221 pagesHaldimann - Structural Use of Glass BookKenny Tournoy100% (6)

- On Fire: The (Burning) Case for a Green New DealD'EverandOn Fire: The (Burning) Case for a Green New DealÉvaluation : 4 sur 5 étoiles4/5 (74)

- The Unwinding: An Inner History of the New AmericaD'EverandThe Unwinding: An Inner History of the New AmericaÉvaluation : 4 sur 5 étoiles4/5 (45)

- 17 Free Data Science Projects To Boost Your Knowledge & SkillsDocument18 pages17 Free Data Science Projects To Boost Your Knowledge & SkillshamedfazelmPas encore d'évaluation

- Supercontryx®: Innovative Glass For X - Ray ProtectionDocument2 pagesSupercontryx®: Innovative Glass For X - Ray ProtectionUsman AhmedPas encore d'évaluation

- Civil Engineering PDFDocument3 pagesCivil Engineering PDFchetan c patilPas encore d'évaluation

- Calculation Rail Beam (Hoist Capacity 3 Ton)Document4 pagesCalculation Rail Beam (Hoist Capacity 3 Ton)Edo Faizal2Pas encore d'évaluation

- AÇO - DIN17100 St52-3Document1 pageAÇO - DIN17100 St52-3Paulo Henrique NascimentoPas encore d'évaluation

- Allen BradleyDocument36 pagesAllen BradleyotrepaloPas encore d'évaluation

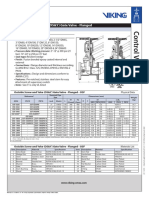

- Outside Screw and Yoke (OS&Y) Gate Valve - Flanged: Technical FeaturesDocument2 pagesOutside Screw and Yoke (OS&Y) Gate Valve - Flanged: Technical FeaturesMark Louie GuintoPas encore d'évaluation

- Refining Mechanical PulpingDocument12 pagesRefining Mechanical PulpingMahdia MahmudPas encore d'évaluation

- Textile AssignmentDocument8 pagesTextile AssignmentMahmudul Hasan Khan40% (5)

- All Over India Company DataDocument32 pagesAll Over India Company DataViren PatelPas encore d'évaluation

- Powered by QFD OnlineDocument1 pagePowered by QFD OnlineNiswa RochimPas encore d'évaluation

- Ceramic Materials: Introduction!: MCEN90014: Materials ! ! !dr. K. Xia! ! ! !1!Document5 pagesCeramic Materials: Introduction!: MCEN90014: Materials ! ! !dr. K. Xia! ! ! !1!hamalPas encore d'évaluation

- Various Allowances Referred To 7th CPCDocument73 pagesVarious Allowances Referred To 7th CPCGiri KumarPas encore d'évaluation

- Recommendation Handling of Norit GL 50Document9 pagesRecommendation Handling of Norit GL 50Mátyás DalnokiPas encore d'évaluation

- 7695v2.1 (G52 76951X8) (A55 G45 - A55 G55)Document70 pages7695v2.1 (G52 76951X8) (A55 G45 - A55 G55)Dávid SzabóPas encore d'évaluation

- Manufacturing Layout Analysis - Comparing Flexsim With Excel SpreadsheetsDocument2 pagesManufacturing Layout Analysis - Comparing Flexsim With Excel Spreadsheetsmano7428Pas encore d'évaluation

- ManualDocument9 pagesManualRonit DattaPas encore d'évaluation

- PPAPDocument2 pagesPPAPVlad NitaPas encore d'évaluation

- Códigos de Falhas Hyundai R3607ADocument13 pagesCódigos de Falhas Hyundai R3607AGuemep GuemepPas encore d'évaluation

- Thermophysical Properties of Containerless Liquid Iron Up To 2500 KDocument10 pagesThermophysical Properties of Containerless Liquid Iron Up To 2500 KJose Velasquez TeranPas encore d'évaluation

- 29L0054805FDocument49 pages29L0054805FszPas encore d'évaluation

- Project Management Quick Reference GuideDocument5 pagesProject Management Quick Reference GuidejcpolicarpiPas encore d'évaluation

- Sample Electrical LayoutDocument1 pageSample Electrical LayoutBentesais Bente UnoPas encore d'évaluation

- Technical InformationDocument8 pagesTechnical Informationmyusuf_engineerPas encore d'évaluation

- EN 61000 3-2 GuideDocument19 pagesEN 61000 3-2 Guideyunus emre KılınçPas encore d'évaluation

- Catalogo Towel RailsDocument1 pageCatalogo Towel RailsrodijammoulPas encore d'évaluation

- MTS Mto Ato Cto Eto PDFDocument5 pagesMTS Mto Ato Cto Eto PDFJuan Villanueva ZamoraPas encore d'évaluation

- Banda Hoja de DatosDocument1 pageBanda Hoja de DatosSergio Guevara MenaPas encore d'évaluation

- Physical Pharmacy Answer Key BLUE PACOPDocument34 pagesPhysical Pharmacy Answer Key BLUE PACOPprincessrhenettePas encore d'évaluation