
RECURSIVE MMSE ESTIMATION OF WIRELESS CHANNELS BASED ON TRAINING DATA AND STRUCTURED CORRELATION LEARNING

Gerald Matz

Institute of Communications and Radio-Frequency Engineering, Vienna University of Technology

Gusshausstrasse 25/389, A-1040 Vienna, Austria

phone: +43 1 58801 38916, fax: +43 1 58801 38999, email: g.matz@ieee.org

ABSTRACT

We present a novel block-recursive technique for training-based channel estimation that augments MMSE channel estimation with structured correlation learning to obtain the required statistics. We provide formulations for both stationary and nonstationary wireless channels along with a robust initialization procedure. The method is efficient, applicable in a variety of communications schemes (MIMO, multiuser, OFDM), and has performance superior to that of RLS, from which it differs in an important way. Simulation examples show nearly optimum performance with particular gains for strongly correlated channels.

1. INTRODUCTION

We consider the problem of estimating the coefficients of a wireless fading channel on a block-by-block basis using training data. The simplest approach in such a context, not requiring any statistical prior knowledge, is the least squares (LS) channel estimator [1]. Exploiting channel statistics (i.e., long-term channel properties) via a (linear) minimum mean square error (MMSE) approach [1] achieves smaller estimation errors. However, MMSE estimation is highly dependent on accurate knowledge of channel statistics. Several approaches have been proposed for the acquisition or specification of the required second-order statistics, e.g., specification of representative default channel statistics, which obviously suffers from potential mismatch of the true and assumed statistics. Alternatively, channel statistics estimated from channel pre-estimates (e.g., the LS estimate) can be used [2]. In [3], we proposed structured correlation learning (see [1]) to obtain accurate channel statistics, which also leads to superior channel estimation performance.

The main contributions of this paper build upon [3] and can be summarized as follows:

• In contrast to the batch procedure in [3], we provide an efficient recursive implementation of MMSE estimation including structured correlation learning; we also show how additional structure of the channel correlation matrix (i.e., uncorrelated channel coefficients) can be taken into account in a systematic way.

• We introduce a simple modification rendering the method applicable in nonstationary environments and we propose a robust initialization for the correlation learning process.

• We relate our method to RLS channel estimation and point out an important difference which explains the superior performance of our method.

• Finally, we illustrate the application of our approach to different contexts including flat-fading multi-input multi-output (MIMO) systems, multiuser uplinks over frequency-selective channels, and orthogonal frequency division multiplexing (OFDM) systems transmitting over doubly selective channels.

Funding by FWF grant J2302.

2. BACKGROUND

System Model. Our generic discrete system model for the $k$th block is given by (see Section 6 for specific applications)

$$\mathbf{y}[k] = \mathbf{S}\,\mathbf{h}[k] + \mathbf{n}[k] . \tag{1}$$

Here, $\mathbf{h}[k]$ is the $M \times 1$ channel coefficient vector to be estimated, $\mathbf{S}$ is an $N \times M$ matrix containing the known training data, $\mathbf{y}[k]$ is the $N \times 1$ received vector induced by the training data, and $\mathbf{n}[k]$ is the $N \times 1$ noise vector. We assume that $N \ge M$ and that $\mathbf{S}$ has full rank, i.e., $\mathcal{S} = \mathrm{span}\{\mathbf{S}\}$ is an $M$-dimensional subspace of $\mathbb{C}^N$. The zero-mean vectors $\mathbf{n}[k]$ and $\mathbf{h}[k]$ are mutually independent and statistically characterized by¹ $E\{\mathbf{n}[k]\,\mathbf{n}^H[k']\} = \sigma^2[k]\,\delta[k-k']\,\mathbf{I}$ and $E\{\mathbf{h}[k]\,\mathbf{h}^H[k']\} = \mathbf{R}_h[k]\,\delta[k-k']$, respectively. The latter implies that subsequent training blocks are separated by more than the channel's coherence time; thus, the channel coefficients can be estimated in a block-by-block fashion without performance loss.

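LS and MMSE Channel Estimators. The simplest channel estimator for the model (1) is the LS estimator [1]

$$\hat{\mathbf{h}}_{\mathrm{LS}}[k] = \mathbf{S}^{\#}\mathbf{y}[k] = \mathbf{h}[k] + \mathbf{S}^{\#}\mathbf{n}[k],$$

where $\mathbf{S}^{\#} = (\mathbf{S}^H\mathbf{S})^{-1}\mathbf{S}^H$ denotes the pseudo-inverse of $\mathbf{S}$. Apart from the training data contained in $\mathbf{S}$, the LS estimator does not require any (statistical) prior knowledge. To exploit long-term channel properties (i.e., channel statistics), usually a (linear) MMSE channel estimator is used [1]:

$$\hat{\mathbf{h}}[k] = \mathbf{R}_h[k]\,\mathbf{S}^H\big(\mathbf{S}\,\mathbf{R}_h[k]\,\mathbf{S}^H + \sigma^2[k]\,\mathbf{I}\big)^{-1}\mathbf{y}[k] \tag{2a}$$

$$\phantom{\hat{\mathbf{h}}[k]} = \mathbf{S}^{\#}\big(\mathbf{I} - \sigma^2[k]\,\mathbf{R}_y^{-1}[k]\big)\,\mathbf{y}[k] . \tag{2b}$$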

Here, $\mathbf{R}_y[k] = E\{\mathbf{y}[k]\,\mathbf{y}^H[k]\}$. In practice, the correlation matrices $\mathbf{R}_h[k]$, $\mathbf{R}_y[k]$ and the noise variance $\sigma^2$ have to be specified. In a stationary context ($\mathbf{R}_y[k] = \mathbf{R}_y$, $\mathbf{R}_h[k] = \mathbf{R}_h$), either default specifications (i.e., $\mathbf{R}_h = \mathbf{I}$) or robust designs based on least favorable correlations [4] are used; alternatively, the correlation matrices $\mathbf{R}_y$ and $\mathbf{R}_h$ are replaced with the respective sample correlation matrices [2]:

$$\hat{\mathbf{R}}_y[k] = \frac{1}{k}\sum_{i=1}^{k}\mathbf{y}[i]\,\mathbf{y}^H[i], \qquad \hat{\mathbf{R}}_h^{\mathrm{LS}}[k] = \frac{1}{k}\sum_{i=1}^{k}\hat{\mathbf{h}}_{\mathrm{LS}}[i]\,\hat{\mathbf{h}}_{\mathrm{LS}}^H[i] . \tag{3}$$

Although these sample correlations can be shown to be related as $\hat{\mathbf{R}}_h^{\mathrm{LS}}[k] = \mathbf{S}^{\#}\hat{\mathbf{R}}_y[k]\,\mathbf{S}^{\#H}$, it turns out that using them in (2a) and (2b), respectively, gives different MMSE channel estimators.

¹ Here, $E\{\cdot\}$ denotes expectation (ensemble averaging) and superscript $H$ denotes Hermitian transposition.
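As a small numerical illustration of the estimators above, the following sketch evaluates the LS estimator and both MMSE forms (2a) and (2b) with the true statistics, in which case the two MMSE forms coincide. The function names, the helper `crandn`, the dimensions, and the random test data are assumptions made for illustration only; they do not come from the paper.

```python
import numpy as np

def ls_estimate(S, y):
    """LS estimate S^# y, with S^# = (S^H S)^{-1} S^H."""
    return np.linalg.solve(S.conj().T @ S, S.conj().T @ y)

def mmse_2a(S, y, R_h, sigma2):
    """MMSE estimate in the form (2a)."""
    R_y = S @ R_h @ S.conj().T + sigma2 * np.eye(S.shape[0])
    return R_h @ S.conj().T @ np.linalg.solve(R_y, y)

def mmse_2b(S, y, R_y, sigma2):
    """MMSE estimate in the form (2b)."""
    return ls_estimate(S, y - sigma2 * np.linalg.solve(R_y, y))

def crandn(rng, *shape):
    """Unit-variance complex Gaussian samples (illustrative helper)."""
    return (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)

rng = np.random.default_rng(0)
N, M, sigma2 = 8, 4, 0.2                     # hypothetical dimensions and noise level
S = crandn(rng, N, M)                        # full rank with probability one
A = crandn(rng, M, M)
R_h = A @ A.conj().T                         # a valid (Hermitian PSD) channel correlation
R_y = S @ R_h @ S.conj().T + sigma2 * np.eye(N)
h = A @ crandn(rng, M)                       # channel realization with correlation R_h
y = S @ h + np.sqrt(sigma2) * crandn(rng, N)

h_ls = ls_estimate(S, y)
h_2a = mmse_2a(S, y, R_h, sigma2)
h_2b = mmse_2b(S, y, R_y, sigma2)
```

With exact statistics, `h_2a` and `h_2b` agree to numerical precision; the difference discussed in the text only appears once the sample correlations in (3) are substituted.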

3. STRUCTURED CORRELATION LEARNING

The sample correlations $\hat{\mathbf{R}}_h^{\mathrm{LS}}$ and $\hat{\mathbf{R}}_y$ do not take into account the correlation structure

$$\mathbf{R}_y[k] = \mathbf{S}\,\mathbf{R}_h[k]\,\mathbf{S}^H + \sigma^2[k]\,\mathbf{I} . \tag{4}$$

This motivates us to propose a novel approach for structured correlation learning [1] in order to estimate $\mathbf{R}_y[k]$ and $\mathbf{R}_h[k]$ in a way consistent with (4).

Nonstationary Case. We derive an algorithm for structured correlation learning in nonstationary scenarios where $\mathbf{R}_h[k]$, $\mathbf{R}_y[k]$, and $\sigma^2[k]$ indeed depend on the block (time) index $k$. This extends the stationary case considered in [3]. With structured correlation estimation (cf. [1]), the channel correlation $\mathbf{R}_h[k]$ and the noise variance $\sigma^2[k]$ are considered as parameters that are estimated via an LS approach from an observed receive correlation matrix $\hat{\mathbf{R}}_y[k]$ by taking into account (4):

$$\big\{\hat{\mathbf{R}}_h[k],\, \hat\sigma^2[k]\big\} = \arg\min_{\mathbf{R}_h[k],\,\sigma^2[k]} \big\|\hat{\mathbf{R}}_y[k] - \big(\mathbf{S}\,\mathbf{R}_h[k]\,\mathbf{S}^H + \sigma^2[k]\,\mathbf{I}\big)\big\|^2 \tag{5}$$

($\|\cdot\|$ denotes the Frobenius norm). Since we are interested in nonstationary scenarios, we propose to include exponential forgetting in the sample correlation of $\mathbf{y}[k]$, i.e.,

$$\hat{\mathbf{R}}_y[k] = (1-\lambda)\sum_{i=1}^{k}\lambda^{k-i}\,\mathbf{y}[i]\,\mathbf{y}^H[i] . \tag{6}$$

Here, $0 < \lambda < 1$ denotes the forgetting factor. According to [1], (5) amounts to solving the linear equations ($\mathrm{tr}\{\cdot\}$ denotes trace)

$$\mathbf{S}^H\hat{\mathbf{R}}_y[k]\,\mathbf{S} = \mathbf{S}^H\mathbf{S}\,\hat{\mathbf{R}}_h[k]\,\mathbf{S}^H\mathbf{S} + \hat\sigma^2[k]\,\mathbf{S}^H\mathbf{S} \tag{7}$$

$$\mathrm{tr}\{\hat{\mathbf{R}}_y[k]\} = \mathrm{tr}\{\mathbf{S}\,\hat{\mathbf{R}}_h[k]\,\mathbf{S}^H\} + N\,\hat\sigma^2[k] \tag{8}$$

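for $\hat{\mathbf{R}}_h[k]$ and $\hat\sigma^2[k]$. Pre- and post-multiplying (7) by $(\mathbf{S}^H\mathbf{S})^{-1}$ yields the channel correlation estimate

$$\hat{\mathbf{R}}_h[k] = \mathbf{S}^{\#}\hat{\mathbf{R}}_y[k]\,\mathbf{S}^{\#H} - \hat\sigma^2[k]\,(\mathbf{S}^H\mathbf{S})^{-1} \tag{9a}$$

$$\phantom{\hat{\mathbf{R}}_h[k]} = \mathbf{S}^{\#}\big(\hat{\mathbf{R}}_y[k] - \hat\sigma^2[k]\,\mathbf{I}\big)\,\mathbf{S}^{\#H}, \tag{9b}$$

where the second expression follows from $(\mathbf{S}^H\mathbf{S})^{-1} = \mathbf{S}^{\#}\mathbf{S}^{\#H}$.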

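Plugging this expression into (8) leads to

$$N\,\hat\sigma^2[k] = \mathrm{tr}\big\{\hat{\mathbf{R}}_y[k] - \mathbf{P}\,\hat{\mathbf{R}}_y[k]\,\mathbf{P} + \hat\sigma^2[k]\,\mathbf{P}\big\} = \mathrm{tr}\big\{\mathbf{P}^{\perp}\hat{\mathbf{R}}_y[k]\,\mathbf{P}^{\perp}\big\} + M\,\hat\sigma^2[k] . \tag{10}$$

Here, $\mathbf{P} = \mathbf{S}\mathbf{S}^{\#}$ and $\mathbf{P}^{\perp} = \mathbf{I} - \mathbf{P}$ denote the orthogonal projection matrices on $\mathcal{S}$ and $\mathcal{S}^{\perp}$, respectively. From (10) we obtain the noise variance estimate (cf. (6))

$$\hat\sigma^2[k] = \frac{1}{N-M}\,\mathrm{tr}\big\{\mathbf{P}^{\perp}\hat{\mathbf{R}}_y[k]\,\mathbf{P}^{\perp}\big\} \tag{11a}$$

$$\phantom{\hat\sigma^2[k]} = \frac{1-\lambda}{N-M}\sum_{i=1}^{k}\lambda^{k-i}\,\big\|\mathbf{P}^{\perp}\mathbf{y}[i]\big\|^2 . \tag{11b}$$

Note that $\mathbf{P}^{\perp}\mathbf{y}[k]$ can be interpreted as an estimate of the noise within the $(N-M)$-dimensional noise subspace $\mathcal{S}^{\perp}$.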

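Using (9a) and (11a), the structured correlation estimate of $\mathbf{R}_y[k]$ is given by

$$\hat{\mathbf{R}}_y^{(s)}[k] = \mathbf{S}\,\hat{\mathbf{R}}_h[k]\,\mathbf{S}^H + \hat\sigma^2[k]\,\mathbf{I} = \mathbf{P}\,\hat{\mathbf{R}}_y[k]\,\mathbf{P} + \hat\sigma^2[k]\,\mathbf{P}^{\perp} . \tag{12}$$

It is seen that with this estimate, $\hat{\mathbf{R}}_y[k]$ is retained within $\mathcal{S}$, while within the noise-only subspace $\mathcal{S}^{\perp}$ it is replaced by the more accurate estimate $\hat\sigma^2[k]\,\mathbf{P}^{\perp}$.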
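The estimates (9b), (11a), and (12) can be sketched in a few lines. The code below is a minimal illustration under assumed dimensions and random stand-in data (the function name `structured_correlation` and all numerical values are hypothetical); it builds the exponentially weighted sample correlation (6) and returns the structured estimates.

```python
import numpy as np

def structured_correlation(S, R_y_hat):
    """Return (R_h_hat, sigma2_hat, R_y_struct) following (9b), (11a), (12).

    Assumes S has full column rank and N > M.
    """
    N, M = S.shape
    S_pinv = np.linalg.pinv(S)                 # S^# for full column rank
    P = S @ S_pinv                             # projector onto span{S}
    P_perp = np.eye(N) - P                     # projector onto the noise subspace
    sigma2_hat = np.trace(P_perp @ R_y_hat @ P_perp).real / (N - M)            # (11a)
    R_h_hat = S_pinv @ (R_y_hat - sigma2_hat * np.eye(N)) @ S_pinv.conj().T    # (9b)
    R_y_struct = S @ R_h_hat @ S.conj().T + sigma2_hat * np.eye(N)             # (12)
    return R_h_hat, sigma2_hat, R_y_struct

rng = np.random.default_rng(1)
N, M, K, lam = 8, 3, 200, 0.98                 # hypothetical sizes and forgetting factor
S = rng.standard_normal((N, M)) + 1j * rng.standard_normal((N, M))
Y = rng.standard_normal((N, K)) + 1j * rng.standard_normal((N, K))   # stand-in for y[1..K]

# Exponentially weighted sample correlation (6): weights (1 - lam) * lam^(K - i).
weights = (1 - lam) * lam ** np.arange(K - 1, -1, -1)
R_y_hat = (Y * weights) @ Y.conj().T

R_h_hat, sigma2_hat, R_y_struct = structured_correlation(S, R_y_hat)
```

When the function is fed an exact correlation of the form (4), it returns the underlying channel correlation and noise variance, which is a convenient sanity check for an implementation.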

Stationary Case. The results obtained in [3] for stationary scenarios (i.e., $\mathbf{R}_h[k]$, $\mathbf{R}_y[k]$, and $\sigma^2[k]$ are independent of $k$) are consistent with our foregoing development. In particular, (9), (11), and (12) are still valid, however, with the exponential forgetting sample correlation $\hat{\mathbf{R}}_y[k]$ in (6) replaced by the conventional sample correlation in (3). The noise variance estimate in this case reads (cf. (11b))

$$\hat\sigma^2[k] = \frac{1}{N-M}\,\frac{1}{k}\sum_{i=1}^{k}\big\|\mathbf{P}^{\perp}\mathbf{y}[i]\big\|^2 . \tag{13}$$

Additional Structure. The structured correlation learning approach discussed above can be further refined if additional structural constraints on the channel correlation $\mathbf{R}_h[k]$ are known. As an example, we consider the special case where the channel coefficients $\mathbf{h}[k]$ are uncorrelated, i.e., $\mathbf{R}_h[k] = \mathrm{diag}\{\sigma_1^2[k], \ldots, \sigma_M^2[k]\}$ is a diagonal matrix consisting of the channel powers $\sigma_i^2[k] = E\{|h_i[k]|^2\}$ ($h_i[k]$ is the $i$th element of $\mathbf{h}[k]$). To simplify the discussion, we assume $\mathbf{S}$ to be orthogonal. Denoting the $i$th column of $\mathbf{S}$ by $\mathbf{s}_i$, the receive correlation matrix can here be written as

$$\mathbf{R}_y[k] = \sum_{i=1}^{M}\sigma_i^2[k]\,\mathbf{s}_i\mathbf{s}_i^H + \sigma^2[k]\,\mathbf{I} .$$

Defining $\boldsymbol{\sigma}_h^2[k] = \big[\sigma_1^2[k]\ \ldots\ \sigma_M^2[k]\big]^T$, the structured correlation learning problem reads

$$\big\{\hat{\boldsymbol{\sigma}}_h^2[k],\, \hat\sigma^2[k]\big\} = \arg\min_{\boldsymbol{\sigma}_h^2[k],\,\sigma^2[k]} \Big\|\hat{\mathbf{R}}_y[k] - \Big(\sum_{i=1}^{M}\sigma_i^2[k]\,\mathbf{s}_i\mathbf{s}_i^H + \sigma^2[k]\,\mathbf{I}\Big)\Big\|^2, \tag{14}$$

which amounts to solving the system of linear equations

$$\mathbf{s}_i^H\hat{\mathbf{R}}_y[k]\,\mathbf{s}_i = \hat\sigma_i^2[k] + \hat\sigma^2[k], \qquad \mathrm{tr}\{\hat{\mathbf{R}}_y[k]\} = \sum_{i=1}^{M}\hat\sigma_i^2[k] + \hat\sigma^2[k]\,N .$$

This can be shown to lead to the channel power estimates

$$\hat\sigma_i^2[k] = \mathbf{s}_i^H\hat{\mathbf{R}}_y[k]\,\mathbf{s}_i - \hat\sigma^2[k],$$

and to the same noise variance estimate as in (11). It is furthermore easily seen that

$$\hat{\mathbf{R}}_h[k] = \mathrm{diag}\{\hat{\boldsymbol{\sigma}}_h^2[k]\} = \mathrm{diag}\{\mathbf{S}^H\hat{\mathbf{R}}_y[k]\,\mathbf{S}\} - \hat\sigma^2[k]\,\mathbf{I} .$$

Comparing with (9) and recalling our assumption $\mathbf{S}^H\mathbf{S} = \mathbf{I}$, we see that the additional structure of having uncorrelated channel coefficients is simply enforced by setting all non-diagonal elements of $\mathbf{S}^H\hat{\mathbf{R}}_y[k]\,\mathbf{S}$ to zero.
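For the uncorrelated-coefficient case, the estimates reduce to reading off a diagonal. The sketch below assumes $\mathbf{S}^H\mathbf{S} = \mathbf{I}$ as in the text; the function name, the dimensions, and the test powers are hypothetical choices for illustration.

```python
import numpy as np

def diagonal_structured_estimate(S, R_y_hat):
    """Channel powers and noise variance for uncorrelated coefficients.

    Assumes S has orthonormal columns (S^H S = I) and N > M.
    """
    N, M = S.shape
    P_perp = np.eye(N) - S @ S.conj().T            # projector onto the noise subspace
    sigma2_hat = np.trace(P_perp @ R_y_hat @ P_perp).real / (N - M)
    # Keep only the diagonal of S^H R_y S and remove the noise contribution.
    powers_hat = np.diag(S.conj().T @ R_y_hat @ S).real - sigma2_hat
    return powers_hat, sigma2_hat

rng = np.random.default_rng(2)
N, M = 8, 3
# Orthonormal training matrix obtained from a QR factorization (illustrative choice).
S, _ = np.linalg.qr(rng.standard_normal((N, M)) + 1j * rng.standard_normal((N, M)))
true_powers, true_sigma2 = np.array([1.0, 0.5, 0.1]), 0.05
# Exact receive correlation: sum_i p_i s_i s_i^H + sigma^2 I.
R_y = (S * true_powers) @ S.conj().T + true_sigma2 * np.eye(N)

powers_hat, sigma2_hat = diagonal_structured_estimate(S, R_y)
```

With an exact correlation matrix the true powers are recovered; with a finite-sample correlation the same code applies unchanged.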

4. RECURSIVE MMSE ESTIMATION

In this section, we show that by using structured correlation estimates in the MMSE channel estimator (2), we can arrive at an efficient recursive implementation that performs block-wise MMSE estimation while adaptively learning the underlying channel statistics.

4.1. Subspace Formulation

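Plugging $\hat{\mathbf{R}}_h[k]$, $\hat{\mathbf{R}}_y^{(s)}[k]$, and $\hat\sigma^2[k]$ into (2a) and (2b), respectively, leads to two different but equivalent expressions for an improved MMSE channel estimator:

$$\hat{\mathbf{h}}[k] = \hat{\mathbf{R}}_h[k]\,\mathbf{S}^H\big(\mathbf{S}\,\hat{\mathbf{R}}_h[k]\,\mathbf{S}^H + \hat\sigma^2[k]\,\mathbf{I}\big)^{-1}\mathbf{y}[k] = \mathbf{S}^{\#}\Big(\mathbf{I} - \hat\sigma^2[k]\,\big(\hat{\mathbf{R}}_y^{(s)}[k]\big)^{-1}\Big)\,\mathbf{y}[k] . \tag{15}$$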

The rest of the paper will be based on the second formulation of the MMSE estimator, which allows us to show that in fact all calculations required for channel estimation can be performed within the $M$-dimensional subspace $\mathcal{S}$ (obviously, $\mathcal{S}^{\perp}$ contains only uncorrelated noise and thus needs to be considered only for noise variance estimation). Consider an $N \times M$ matrix $\mathbf{U}$ whose columns form an orthonormal basis for $\mathcal{S}$. Defining the transformed length-$M$ observation vector $\mathbf{x}[k] = \mathbf{U}^H\mathbf{y}[k] = \mathbf{C}\,\mathbf{h}[k] + \mathbf{U}^H\mathbf{n}[k]$ with correlation matrix $\mathbf{R}_x[k] = E\{\mathbf{x}[k]\,\mathbf{x}^H[k]\}$ and the transformed $M \times M$ training data matrix $\mathbf{C} = \mathbf{U}^H\mathbf{S}$, it can indeed be shown that (15) is equivalent to

$$\hat{\mathbf{h}}[k] = \mathbf{C}^{-1}\big(\mathbf{I} - \hat\sigma^2[k]\,\hat{\mathbf{R}}_x^{-1}[k]\big)\,\mathbf{x}[k], \tag{16}$$

where in the stationary case

$$\hat{\mathbf{R}}_x[k] = \frac{1}{k}\sum_{i=1}^{k}\mathbf{x}[i]\,\mathbf{x}^H[i] \tag{17}$$

and in the nonstationary case

$$\hat{\mathbf{R}}_x[k] = (1-\lambda)\sum_{i=1}^{k}\lambda^{k-i}\,\mathbf{x}[i]\,\mathbf{x}^H[i] . \tag{18}$$

We will show below that the estimator (16) has a complexity of $O(M^2)$ operations per block. This follows from the fact that $\hat{\mathbf{R}}_x^{-1}[k]$ can be computed from $\hat{\mathbf{R}}_x^{-1}[k-1]$ with complexity $O(M^2)$ using Woodbury's identity [1]. Furthermore,

$$\big\|\mathbf{P}^{\perp}\mathbf{y}[k]\big\|^2 = \big\|\mathbf{y}[k]\big\|^2 - \big\|\mathbf{P}\,\mathbf{y}[k]\big\|^2 = \big\|\mathbf{y}[k]\big\|^2 - \big\|\mathbf{x}[k]\big\|^2$$

implies that the noise variance update (cf. (11b) and (13)) amounts to $O(N + M)$ operations.
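The equivalence of the full-space form in (15) and the subspace form (16), as well as the norm identity used for the noise variance update, can be checked numerically. The sketch below is an illustration under assumptions: $\mathbf{U}$ is taken from a QR factorization of $\mathbf{S}$ (so that $\mathbf{C} = \mathbf{U}^H\mathbf{S}$ is the triangular factor), the noise variance is a fixed illustrative value rather than an estimate, and the data are random stand-ins.

```python
import numpy as np

rng = np.random.default_rng(3)
N, M, K, sigma2 = 8, 3, 50, 0.1            # hypothetical sizes and noise variance

def crandn(*shape):
    """Complex Gaussian samples (illustrative helper)."""
    return rng.standard_normal(shape) + 1j * rng.standard_normal(shape)

S = crandn(N, M)                           # full-rank training matrix
Y = crandn(N, K)                           # stand-in for y[1], ..., y[K]
y = Y[:, -1]                               # current block

# Full-space estimator: second form of (15) with the structured estimate (12).
U, C = np.linalg.qr(S)                     # S = U C, U has orthonormal columns
P = U @ U.conj().T
R_y_hat = Y @ Y.conj().T / K
R_y_struct = P @ R_y_hat @ P + sigma2 * (np.eye(N) - P)
h_15 = np.linalg.pinv(S) @ (y - sigma2 * np.linalg.solve(R_y_struct, y))

# Subspace estimator (16): all quantities are M-dimensional.
X = U.conj().T @ Y
x = X[:, -1]
R_x_hat = X @ X.conj().T / K
h_16 = np.linalg.solve(C, x - sigma2 * np.linalg.solve(R_x_hat, x))

# Noise-subspace energy without forming the complementary projector.
noise_energy = np.linalg.norm(y) ** 2 - np.linalg.norm(x) ** 2
```

Both estimates agree to numerical precision, and `noise_energy` matches the squared norm of the projection of `y` onto the noise subspace.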

4.2. Recursive Implementation

We next discuss an efficient block-recursive implementation of the subspace version (16) of our MMSE channel estimator. For clarity of presentation, we make the simplifying assumption that the noise variance is independent of $k$ and exactly known, i.e., $\sigma^2[k] = \sigma^2$. We will show below that highly accurate noise variance estimates justifying this assumption can be obtained according to (13) during the initialization phase of the recursive estimator. For the subsequent development, we reformulate the channel estimate (16) via an MMSE (Wiener) filter as

$$\hat{\mathbf{h}}[k] = \mathbf{W}[k]\,\mathbf{x}[k] \quad\text{where}\quad \mathbf{W}[k] = \mathbf{C}^{-1}\big(\mathbf{I} - \sigma^2\,\hat{\mathbf{R}}_x^{-1}[k]\big) . \tag{19}$$

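Stationary Case. We first consider a stationary channel ($\mathbf{R}_h[k] = \mathbf{R}_h$, $\mathbf{R}_x[k] = \mathbf{C}\,\mathbf{R}_h\mathbf{C}^H + \sigma^2\mathbf{I}$). From (17) we have

$$\hat{\mathbf{R}}_x[k] = \Big(1 - \frac{1}{k}\Big)\hat{\mathbf{R}}_x[k-1] + \frac{1}{k}\,\mathbf{x}[k]\,\mathbf{x}^H[k] .$$

This is a rank-one update of $\hat{\mathbf{R}}_x[k-1]$, which allows us to use Woodbury's identity [1] to obtain a recursion for the (scaled) inverse correlation $\mathbf{Q}[k] = \big(k\,\hat{\mathbf{R}}_x[k]\big)^{-1}$ required in (19):

$$\mathbf{Q}[k] = \mathbf{Q}[k-1] - \mathbf{Q}[k-1]\,\mathbf{x}[k]\,\mathbf{g}^H[k] . \tag{20}$$

Here, we used the Kalman-type gain vector

$$\mathbf{g}[k] = \frac{\mathbf{Q}[k-1]\,\mathbf{x}[k]}{1 + \mathbf{x}^H[k]\,\mathbf{Q}^H[k-1]\,\mathbf{x}[k]} . \tag{21}$$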

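Plugging (20) into (16), it can be shown that

$$\mathbf{W}[k] = \frac{k}{k-1}\big(\mathbf{W}[k-1] + \mathbf{e}[k]\,\mathbf{g}^H[k]\big) - \frac{1}{k-1}\,\mathbf{C}^{-1}, \tag{22}$$

with the noisy channel estimation error

$$\mathbf{e}[k] = \hat{\mathbf{h}}_{\mathrm{LS}}[k] - \hat{\mathbf{h}}[k|k-1] \tag{23}$$

and the predicted channel vector

$$\hat{\mathbf{h}}[k|k-1] = \mathbf{W}[k-1]\,\mathbf{x}[k], \tag{24}$$

obtained by applying the MMSE filter from block $k-1$ to the observation in the $k$th block. For large $k$, it is seen that the recursion (22) approximately simplifies to the rank-one RLS-type update $\mathbf{W}[k] \approx \tilde{\mathbf{W}}[k] = \mathbf{W}[k-1] + \mathbf{e}[k]\,\mathbf{g}^H[k]$. However, for small $k$, the MMSE filter $\mathbf{W}[k]$ is a linear combination of $\tilde{\mathbf{W}}[k]$ and the LS estimation filter² $\mathbf{C}^{-1}$.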

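By applying (22) to $\mathbf{x}[k]$, we obtain

$$\hat{\mathbf{h}}[k] = \frac{k}{k-1}\,\frac{1}{1+\gamma^2[k]}\,\hat{\mathbf{h}}[k|k-1] + \frac{\gamma^2[k] - \frac{1}{k-1}}{1+\gamma^2[k]}\,\hat{\mathbf{h}}_{\mathrm{LS}}[k] .$$

Here, we used the shorthand notation $\gamma^2[k] = \mathbf{x}^H[k]\,\mathbf{Q}[k-1]\,\mathbf{x}[k]$. For large $k$, this equation shows that the MMSE estimate (approximately) is a convex combination of $\hat{\mathbf{h}}[k|k-1]$ (which involves all statistical information accumulated up to block $k-1$) and the current LS estimate $\hat{\mathbf{h}}_{\mathrm{LS}}[k]$ (which involves no statistical knowledge at all). In particular, if $\mathbf{x}[k]$ is poorly represented in $\hat{\mathbf{R}}_x[k]$ (as will typically be the case in the initial learning phase), $\gamma^2[k]$ will be very large and $\hat{\mathbf{h}}[k]$ will be closer to $\hat{\mathbf{h}}_{\mathrm{LS}}[k]$. In contrast, if $\mathbf{x}[k]$ is strongly represented in $\hat{\mathbf{R}}_x[k]$ (typically after convergence), $\gamma^2[k]$ is small and $\hat{\mathbf{h}}[k] \approx \hat{\mathbf{h}}[k|k-1]$ (i.e., basically $\mathbf{W}[k] \approx \mathbf{W}[k-1]$).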
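The stationary recursion (20) to (24) can be sketched and checked against the direct formula (19). The code below is a minimal illustration, not the author's implementation: the function name `stationary_step`, the start index `k0`, the random matrix standing in for $\mathbf{C}$, and the random stand-in observations are all assumptions, and the noise variance is treated as known.

```python
import numpy as np

def stationary_step(W_prev, Q_prev, x, C_inv, k):
    """One block of the stationary recursion; k >= 2 is the current block index."""
    Qx = Q_prev @ x                                   # O(M^2) matrix-vector product
    gamma2 = (x.conj() @ Qx).real                     # x^H Q[k-1] x
    g = Qx / (1.0 + gamma2)                           # gain vector (21)
    Q = Q_prev - np.outer(Qx, g.conj())               # inverse-correlation update (20)
    e = C_inv @ x - W_prev @ x                        # LS estimate minus prediction, (23)-(24)
    W = k / (k - 1) * (W_prev + np.outer(e, g.conj())) - C_inv / (k - 1)   # (22)
    return W, Q

rng = np.random.default_rng(4)
M, K, k0, sigma2 = 3, 40, 6, 0.1                      # hypothetical sizes, start block, noise variance
C = rng.standard_normal((M, M)) + 1j * rng.standard_normal((M, M))
C_inv = np.linalg.inv(C)
X = rng.standard_normal((M, K)) + 1j * rng.standard_normal((M, K))   # stand-in for x[1..K]

# Batch start at block k0 (k0 >= M so that the sample correlation is invertible).
Q = np.linalg.inv(X[:, :k0] @ X[:, :k0].conj().T)     # Q[k0] = (k0 * R_x_hat[k0])^{-1}
W = C_inv @ (np.eye(M) - sigma2 * k0 * Q)             # W[k0] from (19)

for k in range(k0 + 1, K + 1):
    W, Q = stationary_step(W, Q, X[:, k - 1], C_inv, k)

# Direct evaluation of (19) at block K for comparison.
R_x_hat = X @ X.conj().T / K
W_direct = C_inv @ (np.eye(M) - sigma2 * np.linalg.inv(R_x_hat))
```

After the loop, `W` coincides with `W_direct` up to rounding, which confirms that each step only needs matrix-vector and outer products.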

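Nonstationary Case. We next extend the foregoing block-recursive MMSE filter implementation to channels whose correlation matrix $\mathbf{R}_h[k]$ changes gradually from block to block. Obviously, $\mathbf{R}_x[k] = \mathbf{C}\,\mathbf{R}_h[k]\,\mathbf{C}^H + \sigma^2\mathbf{I}$ also depends explicitly on the block index $k$. In that case, the correlation estimate allows for the recursive computation (cf. (18))

$$\hat{\mathbf{R}}_x[k] = \lambda\,\hat{\mathbf{R}}_x[k-1] + (1-\lambda)\,\mathbf{x}[k]\,\mathbf{x}^H[k] .$$

Defining the (scaled) inverse correlation $\mathbf{Q}[k] = (1-\lambda)\,\hat{\mathbf{R}}_x^{-1}[k]$ and again using Woodbury's identity results in³

$$\mathbf{Q}[k] = \frac{1}{\lambda}\big(\mathbf{Q}[k-1] - \mathbf{Q}[k-1]\,\mathbf{x}[k]\,\mathbf{g}^H[k]\big), \tag{25}$$

with the Kalman-type gain vector

$$\mathbf{g}[k] = \frac{\mathbf{Q}[k-1]\,\mathbf{x}[k]}{\lambda + \mathbf{x}^H[k]\,\mathbf{Q}^H[k-1]\,\mathbf{x}[k]} . \tag{26}$$

² Note that $\hat{\mathbf{h}}_{\mathrm{LS}}[k] = \mathbf{S}^{\#}\mathbf{y}[k] = \mathbf{C}^{-1}\mathbf{U}^H\mathbf{y}[k] = \mathbf{C}^{-1}\mathbf{x}[k]$.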

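Plugging (25) into (16), we obtain in a similar way as for the stationary case the filter recursion

$$\mathbf{W}[k] = \frac{1}{\lambda}\big(\mathbf{W}[k-1] + \mathbf{e}[k]\,\mathbf{g}^H[k]\big) - \frac{1-\lambda}{\lambda}\,\mathbf{C}^{-1}$$

and a corresponding expression for the channel estimate:

$$\hat{\mathbf{h}}[k] = \frac{1}{\lambda}\,\frac{1}{1+\gamma^2[k]}\,\hat{\mathbf{h}}[k|k-1] + \frac{\gamma^2[k] - \frac{1-\lambda}{\lambda}}{1+\gamma^2[k]}\,\hat{\mathbf{h}}_{\mathrm{LS}}[k] .$$

Here, the noisy channel estimation error $\mathbf{e}[k]$ and the predicted channel vector $\hat{\mathbf{h}}[k|k-1]$ are defined as before (cf. (23) and (24), respectively), and the shorthand is $\gamma^2[k] = \mathbf{x}^H[k]\,\mathbf{Q}[k-1]\,\mathbf{x}[k]/\lambda$. It is seen that the results for the nonstationary case can easily be obtained from those for the stationary case by formally replacing $1/k$ by $1-\lambda$ and vice versa. Hence, all interpretations provided in the stationary case for large $k$ apply to the nonstationary case with $\lambda$ close to 1 (the usual case) as well.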
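The nonstationary recursion differs from the stationary one only in the scalings by the forgetting factor. The following sketch, again under assumed names and random stand-in data (`forgetting_step`, `weighted_correlation`, `k0`, and all numbers are hypothetical), implements (25), (26), and the filter recursion above and compares the result with the direct formula (19) evaluated with the weighted correlation (18).

```python
import numpy as np

def forgetting_step(W_prev, Q_prev, x, C_inv, lam):
    """One block of the recursion with forgetting factor lam (0 < lam < 1)."""
    Qx = Q_prev @ x
    g = Qx / (lam + (x.conj() @ Qx).real)                     # gain vector (26)
    Q = (Q_prev - np.outer(Qx, g.conj())) / lam               # update (25)
    e = C_inv @ x - W_prev @ x                                # (23) with (24)
    W = (W_prev + np.outer(e, g.conj())) / lam - (1 - lam) / lam * C_inv
    return W, Q

def weighted_correlation(X, lam):
    """(1 - lam) * sum_i lam^(k-i) x[i] x[i]^H over the columns of X, cf. (18)."""
    k = X.shape[1]
    w = (1 - lam) * lam ** np.arange(k - 1, -1, -1)
    return (X * w) @ X.conj().T

rng = np.random.default_rng(5)
M, K, k0, sigma2, lam = 3, 60, 6, 0.1, 0.95                   # hypothetical parameters
C = rng.standard_normal((M, M)) + 1j * rng.standard_normal((M, M))
C_inv = np.linalg.inv(C)
X = rng.standard_normal((M, K)) + 1j * rng.standard_normal((M, K))   # stand-in for x[1..K]

# Batch start at block k0.
R0_inv = np.linalg.inv(weighted_correlation(X[:, :k0], lam))
Q = (1 - lam) * R0_inv                                        # Q[k0]
W = C_inv @ (np.eye(M) - sigma2 * R0_inv)                     # W[k0] from (19)

for k in range(k0, K):                                        # column k holds x[k+1]
    W, Q = forgetting_step(W, Q, X[:, k], C_inv, lam)

W_direct = C_inv @ (np.eye(M) - sigma2 * np.linalg.inv(weighted_correlation(X, lam)))
```

Setting `lam` closer to 1 lengthens the effective memory; the recursion itself is unchanged.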

4.3. Complexity

We next provide an analysis of the computational complexity of our block-recursive MMSE filter. The first stage consists of the transformation $\mathbf{x}[k] = \mathbf{U}^H\mathbf{y}[k]$, which requires $O(NM)$ operations. Then the LS estimate $\hat{\mathbf{h}}_{\mathrm{LS}}[k] = \mathbf{C}^{-1}\mathbf{x}[k]$ and the predicted channel vector $\hat{\mathbf{h}}[k|k-1] = \mathbf{W}[k-1]\,\mathbf{x}[k]$ are calculated using $O(M^2)$ operations each. The calculation of the Kalman gain vector $\mathbf{g}[k]$ is likewise dominated by the $O(M^2)$ complexity of the matrix-vector product $\tilde{\mathbf{x}}[k] = \mathbf{Q}[k-1]\,\mathbf{x}[k]$. The $O(M^2)$ outer product of the intermediate vector $\tilde{\mathbf{x}}[k]$ and the Kalman gain vector $\mathbf{g}[k]$ determines the complexity of the update equation for $\mathbf{Q}[k]$. The update of the MMSE filter $\mathbf{W}[k]$ amounts to the outer product of $\mathbf{e}[k]$ and $\mathbf{g}[k]$ and requires $O(M^2)$ operations as well. Finally, the MMSE filtering step also requires $O(M^2)$ operations.

4.4. Initialization

Regarding the initialization of the filter recursion, we note that $\hat{\mathbf{R}}_x[k]$ is invertible only for $k \ge M$. Hence, the recursion for $\mathbf{Q}[k]$ is actually meaningful only for $k > M$. In our simulations we observed $\hat{\mathbf{R}}_x[k]$ to be statistically unstable even for block indices up to $2M \ldots 3M$. This is even more true for $\hat{\mathbf{R}}_x^{-1}[k]$ and results in estimates $\hat{\mathbf{h}}[k]$ that perform worse than the LS estimate. Intuitively, using poor (i.e., mismatched) correlation estimates in the nominally optimum MMSE estimator (that tries to exploit the full second-order statistics) leads to larger errors than using the rather robust LS estimate that does not attempt to exploit any statistics at all.

As a remedy for this problem, we suggest using the LS estimator while learning $\hat{\mathbf{R}}_x[k]$ in the first $M$ blocks and afterwards switching to the MMSE recursions. The first $M$ blocks can also be used to learn the noise variance $\sigma^2$. Our simulations indicated that typically another $M \ldots 2M$ blocks are required in order for $\hat{\mathbf{R}}_x[k]$ and $\mathbf{Q}[k]$ to be stable enough to allow the MMSE estimator to outperform the LS estimator.

To improve initial convergence, we propose a robust initialization that uses only the diagonal $\mathbf{D}_x[k] = \mathrm{diag}\{\hat{\mathbf{R}}_x[k]\}$ (i.e., the mean power of the channel coefficients) for $k = 1, \ldots, M$. Based on $\mathbf{D}_x[M]$, the full correlation and its inverse are acquired only for $k > M$. We note that the diagonal matrix $\mathbf{D}_x[k]$ can be stably inverted even for $k < M$ and it typically allows the estimator to outperform the LS estimator already within the $(M+1)$th block. In essence, using only $\mathbf{D}_x[k]$ in the MMSE filter design amounts to a robust MMSE estimator [5] which exploits knowledge of the channel coefficient powers only but ignores potential correlations of the channel coefficients. Such an approach is known to be much less susceptible to errors (mismatch) in the estimated statistics. Indeed, we observed in our simulations that this robust initialization features improved performance (cf. Section 7).

³ To simplify the discussion, we slightly abuse notation and use the same symbols for the stationary and the nonstationary case.
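One plausible reading of the diagonal-only design is to substitute $\mathbf{D}_x[k]$ for $\hat{\mathbf{R}}_x[k]$ in (19). The sketch below follows that reading; it is an assumption about the details, and the function name `robust_filter`, the dimensions, and the stand-in data are hypothetical.

```python
import numpy as np

def robust_filter(X, C_inv, sigma2):
    """Diagonal-only filter C^{-1}(I - sigma2 * D_x^{-1}).

    D_x is the diagonal of the sample correlation of the columns of X,
    i.e., the mean power of each component of x.
    """
    d = np.mean(np.abs(X) ** 2, axis=1)           # diagonal of (1/k) sum_i x[i] x[i]^H
    return C_inv @ np.diag(1.0 - sigma2 / d)

rng = np.random.default_rng(8)
M, k, sigma2 = 4, 2, 0.1                          # only k = 2 < M blocks observed so far
C = rng.standard_normal((M, M)) + 1j * rng.standard_normal((M, M))
C_inv = np.linalg.inv(C)
X = rng.standard_normal((M, k)) + 1j * rng.standard_normal((M, k))

R_x_hat = X @ X.conj().T / k                      # rank-deficient for k < M
W_robust = robust_filter(X, C_inv, sigma2)        # still well defined
```

With fewer than $M$ blocks the full sample correlation is singular, whereas the diagonal design remains usable, which is the property the robust initialization relies on.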

5. RELATION TO RLS

The structure of the block-recursive MMSE estimator and its $O(M^2)$ complexity are strongly reminiscent of the RLS algorithm [6]. We thus next provide a comparison with an RLS approach to acquire and track the optimal estimation filter. We note that direct RLS or Kalman tracking of the channel vector $\mathbf{h}[k]$ is not feasible since channel realizations in different blocks are assumed uncorrelated.

To derive the RLS recursions [6], we define $\hat{\mathbf{h}}_{\mathrm{RLS}}[k] = \mathbf{W}_{\mathrm{RLS}}[k]\,\mathbf{x}[k]$ and minimize the respective errors

$$\epsilon^2 = \frac{1}{k}\sum_{i=1}^{k}\big\|\mathbf{h}[i] - \hat{\mathbf{h}}_{\mathrm{RLS}}[i]\big\|^2, \qquad \epsilon^2 = \sum_{i=1}^{k}\lambda^{k-i}\,\big\|\mathbf{h}[i] - \hat{\mathbf{h}}_{\mathrm{RLS}}[i]\big\|^2,$$

for the stationary and nonstationary case. It can be shown that these minimization problems amount to solving a system of linear equations for the optimal RLS filter $\mathbf{W}_{\mathrm{RLS}}[k]$ by inverting the sample correlation of the observation (cf. (17) and (18)). Doing this inversion in a recursive fashion leads to the following update equation for the RLS filter:

$$\mathbf{W}_{\mathrm{RLS}}[k] = \mathbf{W}_{\mathrm{RLS}}[k-1] + \big(\mathbf{h}[k] - \hat{\mathbf{h}}_{\mathrm{RLS}}[k|k-1]\big)\,\mathbf{g}^H[k] . \tag{27}$$

Here, the Kalman gain $\mathbf{g}^H[k]$ is defined as before (cf. (21) and (26)) and $\hat{\mathbf{h}}_{\mathrm{RLS}}[k|k-1] = \mathbf{W}_{\mathrm{RLS}}[k-1]\,\mathbf{x}[k]$ denotes the RLS channel prediction. Unfortunately, the true channel vector $\mathbf{h}[k]$ in the RLS update (27) is not available; hence, it needs to be replaced with the LS estimate $\hat{\mathbf{h}}_{\mathrm{LS}}[k]$ in practice.

Comparing the RLS and MMSE updates at time $k$ assuming $\mathbf{W}_{\mathrm{RLS}}[k-1] = \mathbf{W}[k-1]$, it is seen that the MMSE filter $\mathbf{W}[k]$ is related to the RLS filter $\mathbf{W}_{\mathrm{RLS}}[k]$ via a scaling and an offset proportional to $\mathbf{C}^{-1}$. Consider the affine matrix space $\mathbf{C}^{-1} + \alpha\,(\mathbf{W}_{\mathrm{RLS}}[k] - \mathbf{C}^{-1})$. Obviously, $\mathbf{W}_{\mathrm{RLS}}[k]$ is obtained with $\alpha = 1$. In contrast, the MMSE filter is obtained with $\alpha = k/(k-1) > 1$ (stationary case) or $\alpha = 1/\lambda > 1$ (nonstationary case). Thus, when moving on the affine space connecting $\mathbf{C}^{-1}$ and $\mathbf{W}_{\mathrm{RLS}}[k]$, the MMSE filter lies beyond $\mathbf{W}_{\mathrm{RLS}}[k]$. These slightly larger "hops" are responsible for the improved convergence and performance of our MMSE approach as compared to the RLS approach (see the simulations section). In part, the inferior performance of the RLS approach can be attributed to the fact that one is forced to replace $\mathbf{h}[k]$ in (27) with $\hat{\mathbf{h}}_{\mathrm{LS}}[k]$. We again emphasize that the block-recursive algorithm (27) attempts to track the optimum channel estimation filter and is different from symbol-recursive Kalman and RLS algorithms that directly track the channel (e.g., [7, 8]).
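The affine relation between the two updates can be verified for a single block. In the sketch below, which uses random stand-ins for the filter state and a hypothetical block index `k`, the RLS update (27) is formed with the LS estimate in place of the true channel, and the MMSE update (22) is shown to be the point with parameter `alpha = k/(k-1)` on the line through $\mathbf{C}^{-1}$ and the RLS filter.

```python
import numpy as np

rng = np.random.default_rng(6)
M, k = 3, 10                                       # hypothetical size and block index

def crandn(*shape):
    """Complex Gaussian samples (illustrative helper)."""
    return rng.standard_normal(shape) + 1j * rng.standard_normal(shape)

C_inv = np.linalg.inv(crandn(M, M))
W_prev = crandn(M, M)                              # stands in for W[k-1] = W_RLS[k-1]
B = crandn(M, M)
Q_prev = np.linalg.inv(B @ B.conj().T + np.eye(M)) # Hermitian positive definite stand-in
x = crandn(M)

Qx = Q_prev @ x
g = Qx / (1.0 + (x.conj() @ Qx).real)              # gain vector (21)
e = C_inv @ x - W_prev @ x                         # LS estimate replaces the true channel

W_rls = W_prev + np.outer(e, g.conj())             # RLS update (27)
W_mmse = k / (k - 1) * W_rls - C_inv / (k - 1)     # MMSE update (22)

alpha = k / (k - 1)
W_affine = C_inv + alpha * (W_rls - C_inv)         # point on the affine space
```

Here `W_affine` equals `W_mmse`, and `alpha` exceeds 1, so the MMSE filter lies beyond the RLS filter when moving away from the LS filter.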

6. APPLICATION EXAMPLES

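We next illustrate how the above general developments can be applied to specific communication schemes.

Flat-Fading MIMO Systems. Consider a block-fading narrowband MIMO system with $M_t$ transmit and $M_r$ receive antennas. We assume that within each block $L$ transmit vectors $\mathbf{s}_1, \ldots, \mathbf{s}_L$ are dedicated to training. The training data transmission within the $k$th block can be modeled as

$$\mathbf{Y}[k] = \mathbf{S}_0\,\mathbf{H}[k] + \mathbf{N}[k],$$

with the $L \times M_t$ training matrix $\mathbf{S}_0 = [\mathbf{s}_1 \ldots \mathbf{s}_L]^T$ and the $M_t \times M_r$ channel matrix $\mathbf{H}[k]$. $\mathbf{Y}[k]$ and $\mathbf{N}[k]$ denote the $L \times M_r$ receive and noise matrix, respectively. Our generic model (1) is obtained with⁴ $\mathbf{y}[k] = \mathrm{vec}\{\mathbf{Y}[k]\}$, $\mathbf{S} = \mathbf{I}_{M_r} \otimes \mathbf{S}_0$, $\mathbf{h}[k] = \mathrm{vec}\{\mathbf{H}[k]\}$, and $\mathbf{n}[k] = \mathrm{vec}\{\mathbf{N}[k]\}$, where the identity matrix has size $M_r$ so that $\mathbf{S}$ has the required dimension $LM_r \times M_tM_r$. We thus have $N = LM_r$ and $M = M_tM_r$, i.e., $N > M$ amounts to $L > M_t$.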
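The mapping of the matrix model onto the generic vector model can be checked with the standard identity $\mathrm{vec}\{\mathbf{A}\mathbf{B}\} = (\mathbf{I} \otimes \mathbf{A})\,\mathrm{vec}\{\mathbf{B}\}$, where the identity has as many rows as $\mathbf{B}$ has columns (here $M_r$). The sketch below uses hypothetical dimensions with $M_t \neq M_r$ so that the sizes are unambiguous; the random BPSK training matrix is an illustrative choice.

```python
import numpy as np

rng = np.random.default_rng(7)
L, Mt, Mr = 8, 2, 3                                   # hypothetical block and antenna sizes
S0 = rng.choice([-1.0, 1.0], size=(L, Mt))            # BPSK training matrix, L x Mt
H = rng.standard_normal((Mt, Mr)) + 1j * rng.standard_normal((Mt, Mr))
Nz = 0.1 * (rng.standard_normal((L, Mr)) + 1j * rng.standard_normal((L, Mr)))
Y = S0 @ H + Nz                                       # matrix model for one block

def vec(A):
    """Stack the columns of A into one vector."""
    return A.reshape(-1, order="F")

S = np.kron(np.eye(Mr), S0)                           # (L*Mr) x (Mt*Mr) training matrix
y, h, n = vec(Y), vec(H), vec(Nz)                     # generic model: y = S h + n
```

The resulting `S` is block diagonal with `Mr` copies of `S0`, so each receive antenna sees the same training matrix.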

Multiuser Uplink. Let $L$ users synchronously communicate training sequences $s_l$ ($l = 1, \ldots, L$) of length $N + M_c - 1$ over frequency-selective channels $\mathbf{h}_l[k]$ to a base station. The channel impulse response vectors $\mathbf{h}_l[k]$ have length $M_c$. It is straightforward to show that the length-$N$ receive signal $\mathbf{y}[k]$ induced by the training data is given by

$$\mathbf{y}[k] = \sum_{l=1}^{L}\mathbf{S}_l\,\mathbf{h}_l[k] + \mathbf{n}[k],$$

where $\mathbf{S}_l$ are $N \times M_c$ Toeplitz matrices induced by the training sequences $s_l$. This expression can be rewritten as in (1) by setting $\mathbf{h}[k] = \big[\mathbf{h}_1^T[k]\ \ldots\ \mathbf{h}_L^T[k]\big]^T$ and $\mathbf{S} = [\mathbf{S}_1\ \ldots\ \mathbf{S}_L]$. In this case, $M = LM_c$ and the condition $N \ge M$ requires that the training sequence length is larger than the total number of channel coefficients. Note that single-user systems are a simple special case obtained with $L = 1$.
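A minimal sketch of the multiuser model follows. It assumes that the training-induced receive samples are those for which the channel memory is completely filled with training symbols (the "valid" part of the convolution), which is one common convention and is not spelled out in the text; the function name `training_toeplitz` and all dimensions are hypothetical.

```python
import numpy as np

def training_toeplitz(s, Mc):
    """N x Mc Toeplitz matrix with entries s[n + Mc - 1 - m]; len(s) = N + Mc - 1."""
    N = len(s) - Mc + 1
    rows = np.arange(N)[:, None]
    cols = np.arange(Mc)[None, :]
    return s[rows + Mc - 1 - cols]

rng = np.random.default_rng(9)
N, Mc, L = 12, 3, 2                                   # hypothetical sizes: N >= L * Mc
seqs = [rng.choice([-1.0, 1.0], size=N + Mc - 1) for _ in range(L)]
chans = [rng.standard_normal(Mc) + 1j * rng.standard_normal(Mc) for _ in range(L)]

S = np.hstack([training_toeplitz(s, Mc) for s in seqs])   # S = [S_1 ... S_L], N x (L*Mc)
h = np.concatenate(chans)                                 # stacked impulse responses
y_noiseless = S @ h

# Reference: superposition of the users' convolutions, restricted to the valid part.
y_conv = sum(np.convolve(s, c, mode="valid") for s, c in zip(seqs, chans))
```

The stacked matrix product reproduces the sum of the individual convolutions, so the generic estimators apply directly to `S` and the stacked channel vector.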

Pilot-Symbol-Assisted OFDM. Let us consider OFDM transmission over a doubly selective fading channel. Each block consists of $L_t$ OFDM symbols and $L_f$ subcarriers in order to transmit symbols $s_{m,l}[k]$ ($m = 1, \ldots, L_t$, $l = 1, \ldots, L_f$). The received OFDM demodulator sequence is $y_{m,l}[k] = H_{m,l}[k]\,s_{m,l}[k] + n_{m,l}[k]$, where $n_{m,l}[k]$ is additive Gaussian noise and $H_{m,l}[k]$ denotes the channel coefficients. Assuming a maximum delay of $M_\tau$ samples and a maximum (normalized) Doppler of $M_\nu/(L_tL_f)$, there is

$$H_{m,l}[k] = \sum_{\tau=-M_\tau}^{M_\tau-1}\ \sum_{\nu=-M_\nu}^{M_\nu-1} H_{\tau,\nu}[k]\; e^{-j2\pi\left(\frac{l\tau}{L_f} - \frac{m\nu}{L_t}\right)} .$$

We note that $M = 4M_\tau M_\nu$ is proportional to the channel's spreading factor [10] and that any other sparse basis expansion model for $H_{m,l}[k]$ could be used instead of the Fourier expansion.

Within each block, $N = N_tN_f$ pilot symbols are transmitted on the regular rectangular lattice $(mP_t, lP_f)$, with $N_t = L_t/P_t$ and $N_f = L_f/P_f$ pilot positions in time and frequency, respectively. The pilot-induced receive sequence

$$\mathbf{y}[k] = \mathrm{vec}\left\{\begin{bmatrix} y_{P_t,P_f}[k] & \cdots & y_{N_tP_t,P_f}[k] \\ \vdots & & \vdots \\ y_{P_t,N_fP_f}[k] & \cdots & y_{N_tP_t,N_fP_f}[k] \end{bmatrix}\right\}$$

can then be shown to be given by (1), with $\mathbf{h}[k]$ obtained analogously by applying $\mathrm{vec}\{\cdot\}$ to the matrix that collects the coefficients $H_{\tau,\nu}[k]$, from $H_{-M_\tau,-M_\nu}[k]$ to $H_{M_\tau-1,M_\nu-1}[k]$, and with

$$\mathbf{S} = \mathrm{diag}\{\mathbf{s}\}\,\Big(\mathbf{F}^{(P_t)}_{L_t \times M_\nu} \otimes \mathbf{F}^{(P_f)H}_{L_f \times M_\tau}\Big) .$$

Here, the pilot symbol vector $\mathbf{s}$ is defined analogously to $\mathbf{y}[k]$ and $\mathbf{F}^{(c)}_{a \times b}$ is obtained by picking the first and last $b$ columns and every $c$th row from the $a \times a$ DFT matrix.

⁴ The $\mathrm{vec}\{\cdot\}$ operation amounts to stacking the columns of a matrix into a vector and $\otimes$ denotes the Kronecker product [9].

7. SIMULATION RESULTS

In this section, we illustrate the performance of the proposed

method via numerical simulation examples.

Stationary MIMO System. First consider estimation of

a 4 4 block-fading stationary MIMO channel with spatial

correlation characterized by the Kronecker correlation ma-

trix R

h

= R

t

R

r

with transmit and receive correlation

(R

t

)

ij

= (R

r

)

ij

=

|ij|/2

. We simulated the stationary

version (i.e., no forgetting) of our block-recursive estima-

tor assuming perfect knowledge of the noise variance. An

orthogonal BPSK training matrix with L = 8 was used

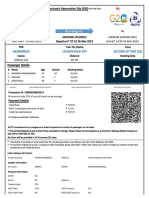

and the SNR was set to 7 dB. Fig. 1(a) shows the perfor-

mance (normalized MSE) of our block recursive estimator

and the LS estimator versus block index k for various

(the rst 16 blocks required to obtain a non-singular

R

x

are not shown). After a short convergence phase (about

2M

t

M

r

= 32 blocks), our estimator has learned the channel

correlation reliably enough to outperform the LS estimator.

The performance gains are at least 0.8 dB (for = 0) and

can be as large as 5.5 dB (for = 0.9). It is furthermore

seen that the performance of our recursive estimator quickly

approaches the theoretical MMSE (achieved with the true

channel statistics).

Nonstationary OFDM System. Our second example pertains to an OFDM system with $L_f = 64$ subcarriers and a block length of $L_t = 16$ OFDM symbols. The $N = 16$ QPSK pilots per block are $P_f = 16$ subcarriers and $P_t = 4$ OFDM symbols apart and suffice to estimate a doubly selective channel with $M_\tau = M_\nu = 4$. In contrast to the prevailing WSSUS assumption [11], we assume that the individual multipath components $H_{\tau,\nu}[k]$ are correlated [12]. Furthermore, the path loss changes such that the receive SNR gradually decreases by 8 dB. Fig. 1(b) shows the normalized MSE versus block index $k$ achieved with the proposed method using forgetting factor $\lambda = 0.99$ and conventional initialization (labeled MMSE$_0$) and robust initialization (labeled MMSE$_1$). The first $M = 16$ blocks (not shown) are used for initialization and for estimation of the noise variance. The remaining part of the initial 100 blocks is shown on a finer time-scale for better visibility of the convergence behavior. Note that the theoretical MMSE (dashed line) increases by about 4 dB due to the decrease in receive SNR. Again, the performance of our method is close to optimal performance (i.e., we succeed in tracking the nonstationary statistics) and in general much better (up to 5 dB) than that of LS estimation. During the initial convergence phase, robust correlation learning is seen to outperform conventional learning, which is even inferior to LS estimation (due to inversion of unreliable correlation estimates).

[Figure 1: three panels (a), (b), (c); vertical axis: MSE [dB]; horizontal axis: block index k; curve labels: LS, RLS$_0$, RLS$_1$, MMSE$_0$, MMSE$_1$.]

Figure 1: Normalized MSE versus block index for (a) a $4 \times 4$ flat-fading MIMO system with different correlation parameters $\rho$, (b) an OFDM system transmitting over a nonstationary doubly selective channel, (c) a $4 \times 4$ MIMO system with $\rho = 0.8$. The MSE achieved by the LS estimator (labeled LS) and the theoretical MSE (dashed line) are always shown for comparison.

Comparison with RLS. Finally, Fig. 1(c) shows a comparison of our block-recursive MMSE estimator with RLS for a stationary $4 \times 4$ correlated MIMO channel with $\rho = 0.8$ (see above), again using conventional and robust initialization (indicated by subscripts $0$ and $1$, respectively). The SNR in this case was 5 dB. For conventional initialization it is seen that RLS initially converges faster than our MMSE estimator but degrades rapidly to LS performance for $k > 20$. In contrast, the MMSE estimator gradually converges to the theoretical MMSE. For robust initialization, both RLS and MMSE start out with significantly smaller MSE than with conventional initialization. Again, the MMSE estimator approaches optimal performance while RLS performance converges towards LS performance, which is inferior to our method by almost 5 dB.

8. CONCLUSIONS

We introduced a block-recursive training-based scheme for wireless channel estimation that uses structured learning of the long-term channel properties (statistics). Using a robust initialization, our scheme allows for quick acquisition and tracking of channel statistics, leading to a performance which is close to the theoretical MMSE. We provided an insightful interpretation of our method by comparing it with an (inferior) RLS approach. The complexity of our algorithm scales at most with the square of the number of channel coefficients. Our algorithm attempts to learn the full channel correlation matrix and is thus particularly suited to MIMO channels with spatial correlation and to non-WSSUS channels [12] with correlated delay-Doppler components.

REFERENCES

[1] L. L. Scharf, Statistical Signal Processing. Reading

(MA): Addison Wesley, 1991.

[2] F. Dietrich and W. Utschick, Pilot-assisted chan-

nel estimation based on second-order statistics, IEEE

Trans. Signal Processing, vol. 53, pp. 11781193, March

2005.

[3] N. Czink, G. Matz, D. Seethaler, and F. Hlawatsch,

Improved MMSE estimation of correlated MIMO

channels using a structured correlation estimator, in

Proc. IEEE SPAWC-05, (New York), June 2005.

[4] Y. Li, L. Cimini, and N. Sollenberger, Robust chan-

nel estimation for OFDM systems with rapid disper-

sive fading channels, IEEE Trans. Comm., vol. 46,

pp. 902915, July 1998.

[5] S. A. Kassam and H. V. Poor, Robust techniques

for signal processing: A survey, Proc. IEEE, vol. 73,

pp. 433481, March 1985.

[6] S. Haykin, Adaptive Filter Theory. Englewood Clis

(NJ): Prentice Hall, 1991.

[7] C. Komninakis, C. Fragouli, A. H. Sayed, and R. D. Wesel, "Multi-input multi-output fading channel tracking and equalization using Kalman estimation," IEEE Trans. Signal Processing, vol. 50, pp. 1065-1076, May 2002.

[8] D. Schafhuber, G. Matz, and F. Hlawatsch, "Kalman tracking of time-varying channels in wireless MIMO-OFDM systems," in Proc. 37th Asilomar Conf. Signals, Systems, Computers, (Pacific Grove, CA), pp. 1261-1265, Nov. 2003.

[9] J. W. Brewer, "Kronecker products and matrix calculus in system theory," IEEE Trans. Circuits and Systems, vol. CAS-25, pp. 772-781, Sept. 1978.

[10] J. G. Proakis, Digital Communications. New York: McGraw-Hill, 3rd ed., 1995.

[11] P. A. Bello, "Characterization of randomly time-variant linear channels," IEEE Trans. Comm. Syst., vol. 11, pp. 360-393, 1963.

[12] G. Matz, "Characterization of non-WSSUS fading dispersive channels," in Proc. IEEE ICC-2003, (Anchorage, AK), pp. 2480-2484, May 2003.
