Software Reliability

Uploaded by partha_dutta_27


What is Software Reliability?

As per IEEE standard "Software reliability is defined as the ability of a system or component to perform its

required functions under stated conditions for a specified period of time".

The most widely accepted definition of software reliability is: "It is the probability of failure-free operation of a program for a specified time in a specified environment."

For example, a program X is estimated to have a reliability of 0.96 over eight elapsed processing hours. In other words, if program X were to be executed 100 times and require a total of eight hours of elapsed processing time (execution time), it is likely to operate correctly (without failure) 96 times. Since failure means departure of the program's output from the expected output, the concept of software reliability incorporates the notion of performance being satisfactory.

What are a Run and a Run Type?

It is possible to view the execution of a program as a single entity. The execution can last for months or even

years for a real time system. However, it is more convenient to divide the execution into runs. The definition of

run is somewhat arbitrary, but it is generally associated with some function that the program performs. Runs

that are identical repetitions of each other are said to form a run type. The proportion of runs of various types may

vary, depending on the functional environment. Examples of a run type might be:

A particular transaction in an airline reservation system or a business data processing system

Uses of Reliability Studies

There are many reasons for which software reliability measures have great value to the software engineer, manager

or user.

1. Software reliability measures can be used to evaluate software engineering technology

quantitatively. New techniques are continually being proposed for improving the process of

developing software, but unfortunately they have been exposed to little quantitative evaluation.

Software reliability measures offer the promise of establishing at least one criterion for

evaluating the new technology.

2. Software reliability measures offer the possibility of evaluating development status during the

test phases of a project. Reliability generally increases with the amount of testing. An objective

reliability measure (such as failure intensity) established from test data provides a sound means of

determining testing progress. Thus, reliability can be closely linked with project schedules.

Furthermore, the cost of testing is highly correlated with failure intensity improvement. Since two

of the key process attributes that a manager must control are schedule and cost, reliability can be

intimately tied in with project management.

3. One can use software reliability measures to monitor the operational performance of

software and to control new features added and design changes made to the software. The

reliability of software usually decreases as a result of such changes. A reliability objective can be

used to determine when, and perhaps how large, a change will be allowed.

4. A quantitative understanding of software quality, and of the various factors influencing it and affected by it, enriches insight into the software product and the software development process. One is then much more capable of making informed decisions.

What are Software Failures and Faults?


A software failure is the departure of the external results of program operation from requirements. A failure is something dynamic: the program has to be executing for a failure to occur. It can include such things as a deficiency in performance attributes or excessive response time.

A fault is the defect in the program that, when executed under particular conditions, causes a

failure. A fault is created when a programmer makes an error. There can be different sets of conditions

that cause a failure, or the conditions can be repeated. Hence a fault can be the source of more than one

failure. A fault is a property of the program rather than a property of its execution or behavior.

Hardware vs. Software Reliability

Reliability behavior for hardware and software is very different. For example, hardware failures are

inherently different from software failures. The source of failures in software is design faults, while

the principal source in hardware has generally been physical deterioration, such as component

wear and tear. Although manufacturing can affect the quality of physical components, the probability

of failure due to wear and other physical causes has usually been much greater than that due to an

unrecognized design problem. It was possible to keep hardware design failures low because

hardware was generally less complex logically than software. Hardware design failures had to be

kept low because retrofitting of manufactured items in the field was very expensive.

To fix hardware faults, one has to either replace or repair the failed part. On the other hand, a

software product would continue to fail until the error is tracked down and either the design or

the code is changed. Once a software (design) defect is properly fixed, it is in general fixed for all

time.

When hardware is repaired its reliability is maintained at the level that existed before the failure

occurred; whereas when a software failure is repaired, the reliability may either increase or

decrease (reliability may decrease if a bug fix introduces new errors). Since introduction and

removal of design faults occurs during software development, software reliability may be expected to

vary during this period.

To put this fact in a different perspective, hardware reliability study is concerned with stability (for

example, the inter-failure durations remain constant). On the other hand, software reliability

study aims at reliability growth (i.e. inter-failure durations increase).

The changes in failure rate over the product lifetime for a typical hardware product and a

software product are sketched in Figure 1.

In the case of a hardware product, the failure rate is high initially but decreases as the faulty components are identified and removed. The system then enters its useful life. After some time (called the product lifetime) the components wear out, and the failure rate increases. The best period is the useful-life period. The shape of this curve resembles a bathtub, which is why it is known as the bathtub curve (Figure 1a).

On the other hand, for software the failure rate is highest during the integration and testing phases. As the system is tested, more and more errors are identified and removed, resulting in a reduced failure rate. This error removal continues at a slower pace during the useful life of the product. As the software becomes obsolete, no more error correction occurs and the failure rate remains unchanged (Figure 1b).

In theory, therefore, the failure rate curve for software should take the form of the "idealized curve". The idealized curve is a gross oversimplification of actual failure models for software. However, the implication is clear: software doesn't wear out. But it does deteriorate!

During its life, software will undergo change. As changes are made, it is likely that errors will be introduced, causing the failure rate curve to spike as shown in Figure 1c. Before the curve can return to the original steady-state failure rate, another change is requested, causing the curve to spike again. Slowly, the minimum failure rate level begins to rise: the software is deteriorating due to change.

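Figure 1: Failure rate versus time. (a) Hardware: burn-in, useful life, and wear-out phases. (b) Software: testing, useful life, and obsolete phases. (c) Software failure rate with spikes caused by changes.

Relation of Reliability and Number of Defects in the System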

Intuitively, it is obvious that a software product having a large number of defects is

unreliable. It is also clear to us that the reliability of a system improves, if the number of defects in it is

reduced.

However, there is no simple relationship between the observed system reliability and

the number of latent defects in the system. For example, removing errors from parts of software,

which are rarely executed, makes little difference to the perceived reliability of the product. Thus, the

reliability of a product depends not only on the number of latent errors but also on the exact

location of the errors.

Apart from this, reliability also depends upon how the product is used, i.e. on its execution

profile. If we select input data to the system such that only the correctly implemented functions are

executed, none of the errors will be exposed and the perceived reliability of the product will be high.

On the other hand, if we select the input data such that only those functions, which contain errors, are

invoked, the perceived reliability of the system will be very low.

Explanation

It has been experimentally observed, by analyzing the behavior of a large number of programs, that 90% of the execution time of a typical program is spent in executing only 10% of the instructions in the program. These most used 10% of instructions are often called the core of the program. The remaining 90% of the program statements are called non-core and are executed for only 10% of the total execution time. It therefore may not be very surprising to note that removing 60% of product defects from the least used parts of a system would typically lead to only a 3% improvement in product reliability. It is clear that the amount by which the overall reliability of a program improves due to the correction of a single error depends on how frequently the corresponding instruction is executed.

The reliability figure of a software product is observer-dependent and cannot be determined

absolutely. Explain

Different users use a software product in different ways. So defects, which show up for one user, may

not show up for another. For example, suppose that the functions of Library Automation Software,

which the library members use (issue books, search books, etc.) are error free. Also, assume that the

functions that the librarian uses (such as create member, create book record, delete member record,

etc.) have many bugs. Then, these two categories of users would have very different opinions about the

reliability of the software.
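The library example can be made concrete with a small sketch. It assumes a simplified model that is not part of the notes: each function has a fixed probability of failing when invoked, and a user's perceived reliability is one minus the usage-weighted failure probability. The function names, usage shares, and failure probabilities below are hypothetical.

```python
def perceived_reliability(usage_profile, failure_prob):
    """Return 1 minus the usage-weighted failure probability (assumed model)."""
    expected_failure = sum(
        share * failure_prob[name] for name, share in usage_profile.items()
    )
    return 1.0 - expected_failure


# Hypothetical per-invocation failure probabilities for each function.
failure_prob = {
    "issue_book": 0.0,
    "search_book": 0.0,
    "create_member": 0.2,
    "delete_member": 0.1,
}

# Hypothetical usage shares; each profile sums to 1.
member_profile = {"issue_book": 0.5, "search_book": 0.5}
librarian_profile = {"create_member": 0.6, "delete_member": 0.4}

print(round(perceived_reliability(member_profile, failure_prob), 2))     # 1.0
print(round(perceived_reliability(librarian_profile, failure_prob), 2))  # 0.84
```

The same program yields two different reliability figures, depending only on which functions each class of user exercises.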

Why is software reliability difficult to measure?

a. The reliability improvement due to fixing a single bug depends on where the bug is

located in the code.

b. The perceived reliability of a software product is highly observer-dependent.

c. The reliability of a product keeps changing as errors are detected and fixed.

Reliability Metrics

The reliability requirements for different categories of software products may be different. For

this reason, it is necessary that the level of reliability required for a software product should be

specified in the SRS (software requirements specification) document. In order to be able to do

this, we need some metrics to quantitatively express the reliability of a software product. A good

reliability measure should be observer-independent, so that different people can agree on the degree of

reliability that a system has.

Reliability metrics that can be used to quantify the reliability of software products are discussed below.

Rate of Occurrence of Failure (ROCOF): ROCOF measures the frequency of occurrence of unexpected behavior (i.e. failures). The ROCOF measure of a software product can be obtained by observing its behavior in operation over a specified time interval and then dividing the total number of failures observed during this interval by the length of the interval.


Mean Time to Failure (MTTF): MTTF is the average time between two successive failures, observed over a large number of failures. To measure MTTF, we can record the failure data for n failures. Let the failures occur at the time instants $t_1, t_2, \ldots, t_n$. Then, MTTF can be calculated as

$$\text{MTTF} = \sum_{i=1}^{n-1} \frac{t_{i+1} - t_i}{n - 1}$$
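A minimal sketch of this calculation follows. The function name and the sample failure instants are hypothetical, and the times are assumed to be execution time with downtime already excluded.

```python
def mean_time_to_failure(failure_times):
    """Average gap between successive failure instants t_1..t_n."""
    if len(failure_times) < 2:
        raise ValueError("need at least two failure times")
    # Pair each instant with its successor: (t_1, t_2), (t_2, t_3), ...
    gaps = [later - earlier
            for earlier, later in zip(failure_times, failure_times[1:])]
    return sum(gaps) / len(gaps)


# Hypothetical failure instants in execution hours (not data from the notes).
times = [10.0, 25.0, 45.0, 70.0]
print(mean_time_to_failure(times))  # (15 + 20 + 25) / 3 = 20.0
```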

It is important to note that only run time is considered in the time measurements. The time for which the system is down to fix the error, the boot time, and so on are not taken into account, and the clock is stopped at these times.

Mean Time to Repair (MTTR): Once a failure occurs, some time is required to fix the error. MTTR measures the average time it takes to track down the errors causing the failure and then to fix them. MTTR is obtained by summing all corrective maintenance time and dividing by the total number of failures during the observation interval, which gives the average time required to repair the equipment after a failure. For example, if there were five random failures with various repair times, and the repair times summed to 50 hours, the average time to repair, or MTTR, is 10 hours.

The repair rate ($\mu$) is calculated by:

Repair rate ($\mu$) = 1/MTTR

= 1/10 repair per hour

= 0.1 repair per hour
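The MTTR arithmetic above can be checked with a short sketch. The individual repair durations are hypothetical; the notes only state that five repairs summed to 50 hours.

```python
def mean_time_to_repair(repair_hours):
    """Total corrective maintenance time divided by the number of failures."""
    if not repair_hours:
        raise ValueError("need at least one repair duration")
    return sum(repair_hours) / len(repair_hours)


def repair_rate(mttr_hours):
    """Repairs per hour, the reciprocal of MTTR."""
    return 1.0 / mttr_hours


# Hypothetical split of the 50 repair hours across the five failures.
repair_hours = [8.0, 12.0, 10.0, 5.0, 15.0]
mttr_hours = mean_time_to_repair(repair_hours)
print(mttr_hours, repair_rate(mttr_hours))  # 10.0 0.1
```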

Mean Time Between Failures (MTBF): MTBF is a basic reliability measure for repairable assets. We can combine the MTTF and MTTR metrics to get the MTBF metric: MTBF = MTTF + MTTR. Thus, an MTBF of 300 hours indicates that once a failure occurs, the next failure is expected to occur only after 300 hours. In this case, the time measurements are real time and not execution time as in MTTF.

MTBF can be computed by dividing the number of hours in the observation interval by the number of random failures. This simple calculation, as with most reliability-based data calculations, requires an observation period long enough to capture an accurate representation of typical productive intervals.

A typical mechanical system had five random failures occur in an observation interval of 1,000 productive hours.

Simply stated, the MTBF is the average time between failures. In this case, the MTBF is 200 hours.

The failure rate can now be calculated to provide the number of failures that occur on this equipment per hour. The failure rate, which is the reciprocal of the MTBF, is calculated by:

Failure rate ($\lambda$) = 1/MTBF

= 1/200 failure per hour

= 0.005 failure per hour
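The same numbers can be reproduced with a short sketch; the function names are illustrative only.

```python
def mean_time_between_failures(observation_hours, failure_count):
    """Observation interval divided by the number of random failures."""
    if failure_count <= 0:
        raise ValueError("failure_count must be positive")
    return observation_hours / failure_count


def failure_rate(mtbf_hours):
    """Failures per hour, the reciprocal of MTBF."""
    return 1.0 / mtbf_hours


# Five random failures in 1,000 productive hours, as in the example above.
mtbf_hours = mean_time_between_failures(1000.0, 5)
print(mtbf_hours, failure_rate(mtbf_hours))  # 200.0 0.005
```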

Probability of Failure on Demand (POFOD): Unlike the other metrics discussed, this metric does not explicitly involve time measurements. POFOD measures the likelihood of the system failing when a service request is made. For example, a POFOD of 0.001 would mean that 1 out of every 1000 service requests would result in a failure.

MTBF, or Mean Time Between Failures, is a basic measure of a system's reliability. The higher the MTBF, the higher the reliability of the product. The following equation illustrates this relationship.

$$\text{Reliability} = e^{-\text{Time}/\text{MTBF}}$$
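As a rough illustration, the relationship can be evaluated directly. This form is usually associated with a constant failure rate, which is an assumption here since the notes do not state it; the 200-hour MTBF and 8-hour mission time are hypothetical inputs.

```python
import math


def reliability(mission_hours, mtbf_hours):
    """Probability of failure-free operation for mission_hours: e^(-t/MTBF)."""
    return math.exp(-mission_hours / mtbf_hours)


# Hypothetical inputs: an MTBF of 200 hours and an 8-hour mission.
print(round(reliability(8.0, 200.0), 4))  # 0.9608
```

Doubling the MTBF to 400 hours raises the result, which matches the statement that a higher MTBF means higher reliability.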

Availability: It is the degree to which a system or component is operational and accessible when required for use over a given period of time.

Availability = (MTBF / (MTBF + MTTR)) * 100


Classification of Failures

The relative severity of different failures is different. Failures which are transient and whose consequences are not

serious are of little practical importance in the operational use of a software product. On the other hand, more

severe types of failures may render the system totally unusable. In order to estimate the reliability of a software

product more accurately, it is necessary to classify various types of failures. Note that a failure can at the same time

belong to more than one class. A possible classification of failures is as follows:

Transient: Transient failures occur only for certain input values when a function of the system is invoked.

Permanent: Permanent failures occur for all input values when a function of the system is invoked.

Recoverable: When recoverable failures occur, the system recovers with or without operator intervention.

Unrecoverable: In unrecoverable failures, the system may need to be restarted.

Cosmetic: This class of failures causes only minor irritations and does not lead to incorrect results. An example of a cosmetic failure is the case where the mouse button has to be clicked twice instead of once to invoke a given function through the graphical user interface.

Reliability Growth Modeling

A reliability growth model is a mathematical model of how software reliability improves as errors are

detected and repaired. A reliability growth model can be used to predict when (or if at all) a particular level

of reliability is likely to be attained. Thus, reliability growth modeling can be used to determine when to stop

testing to attain a given reliability level. Although several different reliability growth models have been proposed,

in this text we discuss only two very simple reliability growth models.

Jelinski and Moranda model

It is the simplest reliability growth model where it is assumed that the reliability increases by a constant

increment each time an error is detected and repaired. Here, failure intensity is directly proportional to the

number of remaining errors in a program. Such a model is shown in Figure 2.

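Figure 2: ROCOF or failure rate ($\lambda$) versus time (t) under the Jelinski and Moranda model. The rate is a step function that drops by one equal step at each failure time t1, t2, ..., t7, through levels proportional to N, (N-1), (N-2), (N-3), (N-4), and (N-5).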

It proposes a failure intensity function of the form

$$\lambda(t) = \phi\,(N - i + 1)$$

where

$\phi$ = constant of proportionality

$N$ = total number of errors present

$i$ = number of errors found by time interval $t_i$

The time intervals $t_1, t_2, \ldots, t_k$ may vary in duration depending upon the occurrence of a failure. Between the $(i-1)$th and $i$th failure, the failure intensity function is $\phi\,(N - i + 1)$.

There are 100 errors estimated to be present in a program. We have experienced 60 errors. Use the Jelinski-Moranda model to calculate the failure intensity with a given value of $\phi = 0.03$. What will the failure intensity be after the experience of 80 errors?

Solution

$N = 100$ errors

$i = 60$ failures

$\phi = 0.03$

We know that

$\lambda(t) = \phi\,(N - i + 1)$

$= 0.03 \times (100 - 60 + 1)$

$= 1.23$ failures/CPU hour

After 80 failures,

$\lambda(t) = \phi\,(N - i + 1)$

$= 0.03 \times (100 - 80 + 1)$

$= 0.63$ failures/CPU hour
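The worked solution can be reproduced with a short sketch of the intensity function as it is written in these notes. The function and parameter names are illustrative.

```python
def jm_failure_intensity(phi, total_errors, failures_seen):
    """Jelinski-Moranda failure intensity: phi * (N - i + 1)."""
    if not 0 <= failures_seen <= total_errors:
        raise ValueError("failures_seen must lie between 0 and total_errors")
    return phi * (total_errors - failures_seen + 1)


# Values from the worked example: N = 100, phi = 0.03.
print(round(jm_failure_intensity(0.03, 100, 60), 2))  # 1.23
print(round(jm_failure_intensity(0.03, 100, 80), 2))  # 0.63
```

Results are rounded for display because floating-point multiplication can leave tiny representation errors in the last digits.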

However, this simple model of reliability, which implicitly assumes that all errors contribute equally to reliability

growth, is highly unrealistic since we already know that corrections of different errors contribute differently to

reliability growth.

Littlewood and Verrall's model

This model allows for negative reliability growth to reflect the fact that when a repair is carried out, it may

introduce additional errors.

It also models the fact that as errors are repaired, the average improvement in reliability per repair

decreases.

It treats an error's contribution to reliability improvement as an independent random variable having a Gamma distribution. This distribution models the fact that errors with large contributions to reliability growth are removed first. This represents diminishing returns as testing continues.

The IOE maintenance group accounts system has been installed and is normally available to users from 8:00 a.m. until 6:00 p.m. from Monday to Friday. Over a four-week period the system was unavailable for one whole day because of problems with a disk drive, and was not available on 2 other days until 10:00 a.m. because of problems with overnight batch processing runs. What were the availability and the mean time between failures (MTBF)? (2007)

Answer:

MTBF = number of hours in the observation interval / number of random failures

= (10 * 5 * 4)/3 hours = 200/3 hours = 66.67 hours

For the first failure, the system was unavailable for one whole day, so corrective maintenance took 10 hours (from 8 a.m. to 6 p.m.).

For the second and third failures, the system was unavailable until 10:00 a.m., so corrective maintenance took 2 hours on each day (from 8 a.m. to 10 a.m.).

MTTR = sum of all corrective maintenance time / total number of failures during the observation interval

= (10 + 2 + 2)/3 hours = 14/3 hours = 4.67 hours

Availability = (MTBF / (MTBF + MTTR)) * 100 = ((200/3) / (200/3 + 14/3)) * 100 = (200/214) * 100 = 93.46%
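The arithmetic in this answer can be checked with a short sketch that applies the same formulas. The function names are illustrative; the input figures come from the question.

```python
def mtbf(observation_hours, failure_count):
    """Observation interval divided by the number of failures."""
    return observation_hours / failure_count


def mttr(repair_hours):
    """Total corrective maintenance time divided by the number of failures."""
    return sum(repair_hours) / len(repair_hours)


def availability_percent(mtbf_hours, mttr_hours):
    """Availability as defined in these notes: MTBF / (MTBF + MTTR) * 100."""
    return mtbf_hours / (mtbf_hours + mttr_hours) * 100


observation_hours = 10 * 5 * 4   # 10 hours/day, 5 days/week, 4 weeks
repairs = [10, 2, 2]             # one whole day, then two 2-hour outages

m = mtbf(observation_hours, len(repairs))
r = mttr(repairs)
print(round(m, 2), round(r, 2), round(availability_percent(m, r), 2))
# 66.67 4.67 93.46
```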

What is Statistical Testing?

The main objective of conventional testing is to discover errors. Statistical testing is a testing process whose objective is to determine the reliability of the product rather than to discover errors. To carry out statistical testing, we have to perform the following steps.

1. Determine the operation profile of the software. Different categories of users may use a piece of software for very different purposes. Formally, we can define the operation profile of a piece of software as the probability distribution of the input of an average user. If we divide the input into a number of classes $\{C_i\}$, the probability value of a class represents the probability of an average user selecting the next input from this class. Thus, the operation profile assigns a probability value $P_i$ to each input class $C_i$.

2. Generate a set of test data corresponding to the determined profile. This requires a statistically significant number of test cases. It further requires that a small percentage of test inputs that are likely to cause system failure be included.

3. Apply the test cases to the software and record the time between each failure.
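The second step, drawing test inputs according to the operation profile, can be sketched as weighted random sampling. The input class names and probabilities below are hypothetical, and a real test generator would also need to produce concrete inputs within each class.

```python
import random
from collections import Counter


def sample_input_classes(profile, count, seed=0):
    """Draw `count` input classes with probabilities given by `profile`."""
    rng = random.Random(seed)  # fixed seed so the test set is repeatable
    classes = list(profile)
    weights = [profile[c] for c in classes]
    return rng.choices(classes, weights=weights, k=count)


# Hypothetical operation profile: P_i for each input class C_i.
profile = {"search": 0.6, "issue": 0.3, "admin": 0.1}

test_classes = sample_input_classes(profile, 1000)
print(Counter(test_classes))  # counts roughly proportional to the profile
```

With a large enough sample, the share of test cases in each class approaches its probability in the profile, which is what lets the observed failure data stand in for what an average user would experience.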

What Data Will You Collect to Find the Correlation between Size & Reliability? (2004)

As software grows in size, software faults become a more serious problem, so failures tend to increase and reliability tends to decrease. To find the correlation between software size and reliability, we have to measure both software size and software reliability using some metrics.

Lines of Code (LOC), or LOC in thousands (KLOC), is an intuitive initial approach to measuring software size, but LOC suffers from several drawbacks. Function Point metrics give a more accurate estimate. The function point metric is a method of measuring the size of a proposed software development based upon a count of inputs, outputs, master files, inquiries, and interfaces. The method can be used to estimate the size of a software system as soon as these functions can be identified.

We can use MTBF (Mean Time Between Failures), which is the average time span between two successive failures, as a reliability metric. Total Defects Delivered, the total number of faults in the software reported in the first month of its use, can also be used as a reliability metric.

We can then correlate FP with MTBF by analyzing the data points, and it may be possible to define a suitable model that fits them.
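One simple way to analyze such data points is the Pearson correlation coefficient. This is offered only as a sketch of one possible analysis; the notes do not prescribe a method. The function point and MTBF values below are invented for illustration and are not measurements.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two samples of equal length, at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / (spread_x * spread_y)


# Hypothetical projects: size in function points and observed MTBF in hours.
function_points = [120, 250, 400, 610, 900]
mtbf_hours = [310.0, 240.0, 205.0, 150.0, 90.0]

print(pearson(function_points, mtbf_hours))  # negative: larger size, lower MTBF
```

A coefficient near -1 would support the claim that reliability falls as size grows; a value near 0 would suggest no linear relationship in the collected data.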

Vous aimerez peut-être aussi

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeD'EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeÉvaluation : 4 sur 5 étoiles4/5 (5794)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreD'EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreÉvaluation : 4 sur 5 étoiles4/5 (1090)

- Never Split the Difference: Negotiating As If Your Life Depended On ItD'EverandNever Split the Difference: Negotiating As If Your Life Depended On ItÉvaluation : 4.5 sur 5 étoiles4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceD'EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceÉvaluation : 4 sur 5 étoiles4/5 (895)

- Grit: The Power of Passion and PerseveranceD'EverandGrit: The Power of Passion and PerseveranceÉvaluation : 4 sur 5 étoiles4/5 (588)

- Shoe Dog: A Memoir by the Creator of NikeD'EverandShoe Dog: A Memoir by the Creator of NikeÉvaluation : 4.5 sur 5 étoiles4.5/5 (537)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersD'EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersÉvaluation : 4.5 sur 5 étoiles4.5/5 (345)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureD'EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureÉvaluation : 4.5 sur 5 étoiles4.5/5 (474)

- Her Body and Other Parties: StoriesD'EverandHer Body and Other Parties: StoriesÉvaluation : 4 sur 5 étoiles4/5 (821)

- The Emperor of All Maladies: A Biography of CancerD'EverandThe Emperor of All Maladies: A Biography of CancerÉvaluation : 4.5 sur 5 étoiles4.5/5 (271)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)D'EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Évaluation : 4.5 sur 5 étoiles4.5/5 (121)

- The Little Book of Hygge: Danish Secrets to Happy LivingD'EverandThe Little Book of Hygge: Danish Secrets to Happy LivingÉvaluation : 3.5 sur 5 étoiles3.5/5 (400)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyD'EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyÉvaluation : 3.5 sur 5 étoiles3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)D'EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Évaluation : 4 sur 5 étoiles4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaD'EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaÉvaluation : 4.5 sur 5 étoiles4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryD'EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryÉvaluation : 3.5 sur 5 étoiles3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnD'EverandTeam of Rivals: The Political Genius of Abraham LincolnÉvaluation : 4.5 sur 5 étoiles4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealD'EverandOn Fire: The (Burning) Case for a Green New DealÉvaluation : 4 sur 5 étoiles4/5 (74)

- The Unwinding: An Inner History of the New AmericaD'EverandThe Unwinding: An Inner History of the New AmericaÉvaluation : 4 sur 5 étoiles4/5 (45)

- TÜV Functional Safety Program: WWW - Prosalus.co - UkDocument267 pagesTÜV Functional Safety Program: WWW - Prosalus.co - Ukmurali krishna100% (1)

- SRS Master Login ModuleDocument17 pagesSRS Master Login ModuleMuneeba KaleemPas encore d'évaluation

- C: Finding Code That Counts With Causal Profiling: Charlie Curtsinger Emery D. BergerDocument14 pagesC: Finding Code That Counts With Causal Profiling: Charlie Curtsinger Emery D. BergerkhaledmosharrafmukutPas encore d'évaluation

- What Is Automation - IBMDocument20 pagesWhat Is Automation - IBMkacsa quetzalPas encore d'évaluation

- SAP Master Data Governance For Material Data - OverviewDocument113 pagesSAP Master Data Governance For Material Data - Overviewsudhakar lankapothu100% (1)

- Component DFDDocument9 pagesComponent DFDyolandoPas encore d'évaluation

- Utest GlossaryDocument6 pagesUtest GlossaryHaribabu PalneediPas encore d'évaluation

- JSA Concrete WorkDocument3 pagesJSA Concrete WorkthayounkPas encore d'évaluation

- Operating System: Lecturer Ameer Sameer Hamood University of BabylonDocument26 pagesOperating System: Lecturer Ameer Sameer Hamood University of BabylonMohsen AljazaeryPas encore d'évaluation

- Computer Integrated Manufacturing (CIM) .Document5 pagesComputer Integrated Manufacturing (CIM) .BHAGYESH JHANWARPas encore d'évaluation

- User Manual: INV 06 - Stock CountingDocument8 pagesUser Manual: INV 06 - Stock Countingdaftar aplikasiPas encore d'évaluation

- LXGBLEGRDocument15 pagesLXGBLEGRJose Manuel VidalPas encore d'évaluation

- Live Debugging of Stateflow Charts While Running On EcuDocument27 pagesLive Debugging of Stateflow Charts While Running On EcuAli Emre YılmazPas encore d'évaluation

- System Planning: Bases For Planning in System AnalysisDocument15 pagesSystem Planning: Bases For Planning in System Analysisdivjot batraPas encore d'évaluation

- High-Level Programming Languages: FocusDocument3 pagesHigh-Level Programming Languages: FocusHassanRanaPas encore d'évaluation

- Catálogo de Peças - Escavadeira PC200-8M0 (KEPB162200) BXDocument539 pagesCatálogo de Peças - Escavadeira PC200-8M0 (KEPB162200) BXROGERIO DOS SANTOS100% (1)

- Multi-Threading in CDocument10 pagesMulti-Threading in Chamzand02Pas encore d'évaluation

- Technical Writter JDDocument2 pagesTechnical Writter JDnight writerPas encore d'évaluation

- BPMN by Example - An Introduction To BPMN PDFDocument59 pagesBPMN by Example - An Introduction To BPMN PDFracringandhi100% (11)

- SDLC AssignmentDocument21 pagesSDLC AssignmentNhật HuyPas encore d'évaluation

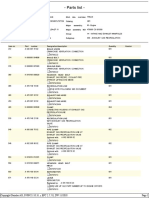

- Parts ListDocument3 pagesParts Listhamdi galipPas encore d'évaluation

- Project ReportDocument13 pagesProject ReportSreedeviPas encore d'évaluation

- Horizontal Flow Wrapper Machine Case Study enDocument4 pagesHorizontal Flow Wrapper Machine Case Study enastojadin1873Pas encore d'évaluation

- 2-Developing An Information SystemDocument7 pages2-Developing An Information SystemWorld of LovePas encore d'évaluation

- UML NotesDocument14 pagesUML NotesBuddhika PrabathPas encore d'évaluation

- Ssos-Qp Cia-IDocument2 pagesSsos-Qp Cia-IVignesh GopalPas encore d'évaluation

- CHAPTER 5 Internal Combustion EngineDocument49 pagesCHAPTER 5 Internal Combustion EngineYann YeuPas encore d'évaluation

- Star UmlDocument9 pagesStar UmlAchintya JhaPas encore d'évaluation

- WbsDocument22 pagesWbshaddan100% (1)