
Ericsson MSC Server Blade Cluster

The MSC-S Blade Cluster, the future-proof server part of Ericsson's Mobile Softswitch solution, provides very high capacity, effortless scalability, and outstanding system availability. It also means lower OPEX per subscriber, and sets the stage for business-efficient network solutions.

PETRI MAEKINIEMI

JAN SCHEURICH

The Mobile Switching Center Server (MSC-S) is a key part of Ericsson's Mobile Softswitch solution, controlling all circuit-switched call services, the user plane and media gateways. And now, with the MSC-S Blade Cluster, Ericsson has taken its Mobile Softswitch solution one step further, substantially increasing node availability and server capacity. The MSC-S Blade Cluster also dramatically simplifies the network, creating an infrastructure which is always available and easy to manage and which can be adjusted to handle increases in traffic and changing needs.

Continued strong growth in circuit-switched voice traffic necessitates fast and smooth increases in network capacity. The typical industry approach to increasing network capacity is to introduce high-capacity servers, preferably scalable ones. And the historical solution to increasing the capacity of MSC servers has been to introduce a more powerful central processor. Ericsson's MSC-S Blade Cluster concept, by contrast, is an evolution of the MSC server architecture, where server functionality is implemented on several generic processor blades that work together in a group or cluster.

The very-high-capacity nodes that these clusters form require exceptional resilience both at the node and network level, a consideration that Ericsson has addressed in its new blade cluster concept.

Key benefits

The unique scalability of the MSC-S Blade Cluster gives network operators great flexibility when building their mobile softswitch networks and expanding network capacity as traffic grows, all without complicating network topology by adding more and more MSC servers.

The MSC-S Blade Cluster fulfills the extreme in-service performance requirements put on large nodes. First, it is designed to ensure zero downtime, planned or unplanned. Second, it can be integrated into established and proven network-resilience concepts such as MSC in Pool.

Because MSC-S Blade Cluster operation and maintenance (O&M) does not depend on the number of blades, the scalability feature significantly reduces operating expenses per subscriber as node capacity expands.

Compared with traditional, non-scalable MSC servers, the MSC-S Blade Cluster has the potential to reduce power consumption by up to 60 percent and the physical footprint by up to 90 percent, thanks especially to its optimized redundancy concepts and advanced components. Many of its generic components are used in other applications, such as IMS.

The MSC-S Blade Cluster also supports advanced, business-efficient network solutions, such as MSC-S nodes that cover multiple countries or are part of a shared mobile-softswitch core network (Table 1).

Key components

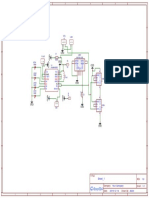

The key components of the MSC-S Blade Cluster (Figure 1) are the MSC-S blades, a signaling proxy (SPX), an IP load balancer, an I/O system and a site infrastructure support system (SIS).

The MSC-S blades are advanced generic processor boards, grouped into clusters and jointly running the MSC server application that controls circuit-switched calls and the mobile media gateways.

The signaling proxy (SPX) serves as the network interface for SS7 signaling traffic over TDM, ATM and IP. It distributes external SS7 signaling traffic to the MSC-S blades. Two SPXs give 1+1 redundancy. Each SPX resides on a double-sided APZ processor.

BOX A Terms and abbreviations

| Term | Meaning |
|---|---|
| APG43 | Adjunct Processor Group version 43 |
| ATM | Asynchronous transfer mode |
| E-GEM | Enhanced GEM |
| GEM | Generic equipment magazine |
| GEP | Generic processor board |
| IMS | IP Multimedia Subsystem |
| IO | Input/output |
| IP | Internet protocol |
| M-MGw | Media gateway for mobile networks |
| MSC | Mobile switching center |
| MSC-S | MSC server |
| O&M | Operation and maintenance |
| OPEX | Operating expense |
| OSS | Operations support system |
| SIS | Site infrastructure support system |
| SPX | Signaling proxy |
| SS7 | Signaling system no. 7 |
| TDM | Time-division multiplexing |

Ericsson Review 3 2008

Evolving the MSC server architecture

The IP load balancer serves as the network interface for non-SS7-based IP-signaling traffic, such as the session initiation protocol (SIP). It distributes external IP signaling to the MSC-S blades. Two IP load-balancer boards give 1+1 redundancy.

The I/O system handles the transfer of data (for example, charging data, hot billing data, input via the man-machine interface, and statistics) to and from the MSC-S blades and SPXs. The MSC-S Blade Cluster I/O system uses the APG43 (Adjunct Processor Group version 43) and is 1+1 redundant. It is connected to the operations support system (OSS). Each I/O system resides on a double-sided processor.

The site infrastructure support system, which is also connected to the operations support system, provides the I/O system to Ericsson's Infrastructure components.

Key characteristics

Compared with traditional MSC-S nodes, the MSC-S Blade Cluster offers breakthrough advances in terms of redundancy and scalability.

Redundancy

The MSC-S Blade Cluster features tailored redundancy schemes in different domains. The network signaling interfaces (C7 and non-C7) and the I/O systems work in a 1+1 redundancy configuration. If one of the components is unavailable, the other takes over, ensuring that service availability is not affected, regardless of whether component downtime is planned (for instance, for an upgrade or maintenance actions) or unplanned (hardware failure).
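The 1+1 takeover behavior described above can be sketched in a few lines. This is only an illustration under stated assumptions: the class `RedundantPair`, its methods and the unit names `SPX-A` and `SPX-B` are hypothetical and do not come from any Ericsson interface.

```python
class RedundantPair:
    """Two identical units; one carries traffic, the other stands by."""

    def __init__(self, first: str, second: str) -> None:
        self.available = {first: True, second: True}
        self.active = first

    def _peer(self, unit: str) -> str:
        # The other member of the pair.
        return next(u for u in self.available if u != unit)

    def mark_down(self, unit: str) -> None:
        """Take a unit out of service, whether planned or unplanned."""
        self.available[unit] = False
        peer = self._peer(unit)
        if unit == self.active and self.available[peer]:
            self.active = peer  # the standby unit takes over

    def mark_up(self, unit: str) -> None:
        """Return a unit to service; it becomes active only if needed."""
        self.available[unit] = True
        if not self.available[self.active]:
            self.active = unit

    @property
    def in_service(self) -> bool:
        return self.available[self.active]


pair = RedundantPair("SPX-A", "SPX-B")
pair.mark_down("SPX-A")            # e.g. a planned upgrade of the active unit
after_single_outage = pair.in_service
pair.mark_down("SPX-B")            # both units down: the pair is out of service
after_double_outage = pair.in_service
pair.mark_up("SPX-A")
recovered = (pair.active, pair.in_service)
```

In this sketch the pair stays in service as long as either unit is available, which is the property the 1+1 scheme relies on for both upgrades and hardware failures.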

The redundancy scheme in the domain of the MSC-S blades is n+1. Although the individual blades do not feature hardware redundancy, the cluster of blades is fully redundant. All blades are equal, meaning that every blade can assume every role in the system. Furthermore, no subscriber record, mobile media gateway, or neighboring node is bound to any given blade. Therefore, the MSC-S Blade Cluster continues to offer full service availability when any of its blades is unavailable.

Subscriber records are always maintained on two blades to ensure that subscriber data cannot be lost.

In the unlikely event of simultaneous failure of multiple blades, the cluster will remain fully operational, experiencing only a loss of capacity (roughly proportional to the number of failed blades) and minor loss of subscriber records.
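A minimal sketch can make the two-copy rule and the multiple-failure case concrete. It assumes a simple ring placement (primary blade chosen by hash, backup on the next blade), which is an illustrative choice and not the algorithm the product actually uses; the function names, blade names and subscriber identifiers are all hypothetical.

```python
import hashlib


def placement(subscriber_id: str, blades: list[str]) -> tuple[str, str]:
    """Return the (primary, backup) blades holding one subscriber record."""
    if len(blades) < 2:
        raise ValueError("need at least two blades to keep two copies")
    digest = hashlib.sha256(subscriber_id.encode()).digest()
    primary = int.from_bytes(digest[:8], "big") % len(blades)
    backup = (primary + 1) % len(blades)  # assumed rule: next blade in the ring
    return blades[primary], blades[backup]


def lost_records(subscribers: list[str], blades: list[str], failed: set[str]) -> list[str]:
    """Records whose two copies both sat on failed blades."""
    return [s for s in subscribers if set(placement(s, blades)) <= failed]


blades = [f"blade-{i}" for i in range(6)]
subscribers = [f"imsi-{n:05d}" for n in range(3000)]

# Any single blade failure: every record still has a copy elsewhere.
single = {b: len(lost_records(subscribers, blades, {b})) for b in blades}

# Two simultaneous failures: only records stored on exactly that pair are lost.
double = len(lost_records(subscribers, blades, {"blade-2", "blade-3"}))

# Capacity falls roughly in proportion to the number of failed blades.
remaining_capacity = (len(blades) - 2) / len(blades)
```

With two copies on distinct blades, one failure can never remove both copies of a record, while a double failure affects only the subset of records that happened to live on the two failed blades.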

With the exception of very short interruptions that have no effect on in-service performance, blade

FIGURE 1 Main functional components of the MSC-S Blade Cluster. (Labels in the figure: cluster of MSC-S blades; signaling proxy; IP load balancer; SIS; I/O; 1+1 redundancy markers; interfaces to RAN, CN and OSS.)

TABLE 1 Key benefits of the MSC-S Blade Cluster.

| Feature | Benefit |
|---|---|
| Very high capacity | Ten-fold increase over traditional MSC servers |
| Easy scalability | Expansion in steps of 500,000 subscribers through the addition of new blades; no network impact when adding blades |
| Outstanding system availability | Zero downtime at the node level; upgrade without impact on traffic; network-level resilience through MSC in Pool |
| Reduced OPEX | Fewer nodes or sites needed in the network; up to 90 percent smaller footprint; as much as 60 percent lower power consumption |
| Business-efficient network solutions | Multi-country operators; shared networks |
| Future-proof hardware | Blades can be used in other applications, such as IMS |


a wide range of cluster capacities from very small to very large.

The individual MSC-S blades are not visible to neighboring network nodes, such as the BSC, RNC, M-MGw, HLR, SCP, P-CSCF and so on. This first enabler is essential for smooth scalability: blades can be added immediately without affecting the configuration of cooperating nodes.

Other parts of the network might also have to be expanded to make full use of increased blade cluster capacity. When this is the case, these steps can be decoupled and taken independently.

The second enabler is the ability of the MSC-S Blade Cluster to dynamically adapt its internal distribution to a new blade configuration without manual intervention. As a consequence, the capacity-expansion procedure is almost fully automatic: only a few manual steps are needed to add a blade to the running system.

When a generic processor board is inserted and registered with the cluster middleware, the new blade is loaded with a 1-to-1 copy of the application software and configuration of the active blades. The blade then joins the cluster and is prepared for manual test traffic. For the time being it remains isolated from regular traffic.

The blades automatically update their internal distribution tables to the new cluster configuration and replicate all necessary dynamic data, such as subscriber records, on the added blade. These activities run in the background and have no effect on cluster capacity or availability.

After a few minutes, when the internal preparations are complete and test results are satisfactory, the blade can be activated for traffic. From this point on, it handles its share of the cluster load and becomes an integral part of the cluster redundancy scheme.
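The expansion procedure described above reads naturally as a small state machine. The following sketch models it under stated assumptions: the state names, the `Blade` class and its two readiness flags are hypothetical labels for the steps in the text, not part of any real O&M interface.

```python
from enum import Enum, auto


class BladeState(Enum):
    REGISTERED = auto()  # board inserted and registered with the cluster middleware
    LOADED = auto()      # software and configuration copied from the active blades
    ISOLATED = auto()    # cluster member, open to manual test traffic only
    ACTIVE = auto()      # carries its share of regular traffic


_NEXT = {
    BladeState.REGISTERED: BladeState.LOADED,
    BladeState.LOADED: BladeState.ISOLATED,
    BladeState.ISOLATED: BladeState.ACTIVE,
}


class Blade:
    def __init__(self, name: str) -> None:
        self.name = name
        self.state = BladeState.REGISTERED
        self.replication_done = False  # background copy of dynamic data finished
        self.tests_passed = False      # manual test traffic judged satisfactory

    def advance(self) -> BladeState:
        """Move to the next step; activation is gated on replication and tests."""
        if self.state is BladeState.ACTIVE:
            raise RuntimeError("blade is already active")
        ready = self.replication_done and self.tests_passed
        if self.state is BladeState.ISOLATED and not ready:
            raise RuntimeError("blade stays isolated until replication and tests finish")
        self.state = _NEXT[self.state]
        return self.state

    @property
    def takes_regular_traffic(self) -> bool:
        return self.state is BladeState.ACTIVE


blade = Blade("blade-7")
blade.advance()  # REGISTERED -> LOADED
blade.advance()  # LOADED -> ISOLATED
try:
    blade.advance()  # too early: preparations are not complete
    blocked = False
except RuntimeError:
    blocked = True

blade.replication_done = True
blade.tests_passed = True
blade.advance()  # ISOLATED -> ACTIVE
```

The gate on the last transition mirrors the text: the blade joins the cluster early but only receives regular traffic once background replication and test results allow it.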

MSC-S Blade Cluster hardware

Building practice

The MSC-S Blade Cluster is housed in an Enhanced Generic Equipment Magazine (E-GEM). Compared with the GEM, the E-GEM provides even more power per subrack and better cooling capabilities, which translates into a smaller footprint.

failures have no effect on connectivity to other nodes. Nor do blade failures affect the availability for traffic of the user-plane resources controlled by the MSC-S Blade Cluster.

To achieve n+1 redundancy, the cluster of MSC-S blades employs a set of advanced distribution algorithms. Fault-tolerant middleware ensures that the blades share a consistent view of the cluster configuration at all times. The MSC-S application uses stateless distribution algorithms that rely on this cluster view. The middleware also provides a safe group-communication mechanism for the blades.

Static configuration data is replicated on every blade. This way, each blade that is to execute a requested service has access to the requisite data. Dynamic data, such as subscriber records or the state of external traffic devices, is replicated on two or more blades.

Interworking between the two redundancy domains in the MSC-S Blade Cluster is handled in an innovative manner. Components of the 1+1 redundancy domain do not require detailed information about the distribution of tasks and roles among the MSC-S blades. The SPX and IP load balancer, for example, can base their forwarding decisions on stateless algorithms. The blades, on the other hand, use enhanced, industry-standard redundancy mechanisms when they interact with the 1+1 redundancy domain for, say, selecting the outgoing path.

The combination of cluster middleware, data replication and stateless distribution algorithms provides a distributed system architecture that is highly redundant and robust.
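The article does not say which stateless distribution algorithms are used. As a stand-in, the sketch below uses rendezvous (highest-random-weight) hashing to show how a forwarding decision can depend only on a key and the shared cluster view, with no per-key state. The function `pick_blade`, the blade names and the call keys are hypothetical.

```python
import hashlib


def pick_blade(key: str, cluster_view: frozenset[str]) -> str:
    """Choose a blade from the key and the shared view alone (no lookup table)."""

    def score(blade: str) -> int:
        digest = hashlib.sha256(f"{blade}|{key}".encode()).digest()
        return int.from_bytes(digest[:8], "big")

    # Sorting makes the result independent of set iteration order.
    return max(sorted(cluster_view), key=score)


view = frozenset({"blade-a", "blade-b", "blade-c", "blade-d"})
keys = [f"call-{n}" for n in range(2000)]

# Two independent front ends holding the same view reach the same decisions
# without exchanging any state.
spx_choice = [pick_blade(k, view) for k in keys]
lb_choice = [pick_blade(k, view) for k in keys]

# When one blade leaves the view, only the keys it served are reassigned.
smaller_view = view - {"blade-c"}
moved = [k for k, old in zip(keys, spx_choice) if pick_blade(k, smaller_view) != old]
```

The design point this illustrates is that consistency comes from the shared cluster view rather than from coordination between the components that make forwarding decisions.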

One particular benefit of n+1 redundancy is the potential to isolate an MSC-S blade from traffic to allow maintenance activities (for example, to update or upgrade software) without disturbing cluster operation. This means zero planned cluster downtime.

Scalability

The MSC-S Blade Cluster was designed with scalability in mind: to increase system capacity one needs only add MSC-S blades to the cluster. The shared cluster components, such as the I/O system, the SPX and IP load balancer, have been designed and dimensioned to support

FIGURE 2 MSC-S Blade Cluster cabinets. (Labels in the figure: Cabinet 1; Cabinet 2; Optional; SPX; IO; TDM devices; ATM devices; IS Infrastructure; MSC-S blades; fans.)


Generic processor board

The Generic Processor Board (GEP) used for the MSC-S blades is equipped with an x86 64-bit architecture processor. There are several variants of the equipped GEP board, all manufactured from the same printed circuit board. In addition, the GEP is used in a variety of configurations for several other components in the MSC-S Blade Cluster, namely the APG43, the SPX and SIS, and other application systems.

Infrastructure components

The infrastructure components provide layer-2 and layer-3 infrastructure for the blades, incorporating routers, SIS and switching components. They also provide the main on-site layer-2 protocol infrastructure for the MSC-S Blade Cluster. Ethernet is used on the backplane for signaling traffic.

Hardware layout

The MSC-S Blade Cluster consists of one or two cabinets (Figure 2). One cabinet houses mandatory subracks for the MSC-S blades with Infrastructure components, the APG43 and SPX. The other cabinet can be used to house a subrack for expanding the MSC-S blades and two subracks for TDM or ATM signaling interfaces.

Power consumption

Low power consumption is achieved by using advanced low-power processors in E-GEMs and GEP boards. The high subscriber capacity of the blade-cluster node means very low power consumption per subscriber.

Conclusion

The MSC-S Blade Cluster makes Ericsson's Mobile Softswitch solution even better: one that is easy to scale both in terms of capacity and functionality. It offers downtime-free MSC-S upgrades and updates, and outstanding node and network availability. What is more, the hardware can be reused in future node and network migrations.

The architecture, which is based on a cluster of blades, is aligned with Ericsson's Integrated Site concept and other components, such as the APG43 and SPX. O&M is supported by the OSS.

The MSC-S Blade Cluster distributes subscriber traffic between available blades. One can add, isolate or remove blades without disturbing traffic. The system redistributes the subscribers and replicates subscriber data when the number of MSC-S blades changes. Cluster reconfiguration is an automatic procedure; moreover, the procedure is invisible to entities outside the node.

Petri Maekiniemi is master strategic product manager for the MSC-S Blade Cluster. He joined Ericsson in Finland in 1985 but has worked at Ericsson Eurolab Aachen in Germany since 1991. Over the years he has served as system manager and, later, as product manager in several areas connected to the MSC, including subscriber services, charging and MSC pool. Petri holds a degree in telecommunications engineering from the Helsinki Institute of Technology, Finland.

Jan Scheurich is a principal systems designer. He joined Ericsson Eurolab Aachen in 1993 and has been employed since then as software designer, system manager, system tester and troubleshooter for various Ericsson products, ranging from B-ISDN through classical MSC, SGSN and MMS solutions to MSS. He currently serves as system architect for the MSC-S Blade Cluster. Jan holds a degree in physics from Kiel University, Germany.

Acknowledgements

The authors thank Joe Wilke, whose comments and contributions have helped shape this article. Joe is a manager in R&D and has driven several technology-shift endeavors, including the MSC-S Blade Cluster.
