
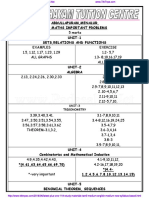

MAP Estimation Algorithms in Computer Vision - Part I

M. Pawan Kumar, University of Oxford

Pushmeet Kohli, Microsoft Research

Aim of the Tutorial

Description of some successful algorithms

Computational issues

Enough details to implement

Some proofs will be skipped :-(

But references to them will be given :-)

A Vision Application

Binary Image Segmentation

How?

The cost function models our knowledge about natural images

Optimize the cost function to obtain the segmentation

(Figure legend: Object - white, Background - green/grey)

Graph G = (V, E)

Each vertex corresponds to a pixel

Edges define a 4-neighbourhood grid graph

Assign a label to each vertex from L = {obj, bkg}

Cost of a labelling f : V → L

Per Vertex Cost (UNARY COST)

At some pixels: cost of label obj low, cost of label bkg high

At other pixels: cost of label obj high, cost of label bkg low

Per Edge Cost (PAIRWISE COST)

On some edges: cost of same label low, cost of different labels high

On other edges: cost of same label high, cost of different labels low

Problem: Find the labelling with minimum cost, f*

Yet Another Vision Application

Stereo Correspondence

Disparity Map

How?

Minimizing a cost function

Graph G = (V, E)

Each vertex corresponds to a pixel

Edges define a grid graph

L = {disparities}

Cost of a labelling f: Unary Cost + Pairwise Cost

Find the minimum-cost labelling f*

The General Problem

(Figure: a graph with vertices a, b, c, d, e, f and an example label on each vertex.)

Graph G = (V, E)

Discrete label set L = {1, 2, ..., h}

Assign a label to each vertex

f : V → L

Cost of a labelling Q(f) = Unary Cost + Pairwise Cost

Find f* = arg min Q(f)

Outline

Problem Formulation

Energy Function

MAP Estimation

Computing min-marginals

Reparameterization

Belief Propagation

Tree-reweighted Message Passing

Energy Function

(Figure: a chain of random variables $V_a$, $V_b$, $V_c$, $V_d$ with data $D_a$, $D_b$, $D_c$, $D_d$; each variable takes label $l_0$ or $l_1$.)

Random Variables $V = \{V_a, V_b, \ldots\}$

Labels $L = \{l_0, l_1, \ldots\}$

Data $D$

Labelling $f : \{a, b, \ldots\} \rightarrow \{0, 1, \ldots\}$

Unary Potential

$Q(f) = \sum_a \theta_{a;f(a)}$

| Label | $V_a$ | $V_b$ | $V_c$ | $V_d$ |
| --- | --- | --- | --- | --- |
| $l_1$ | 2 | 4 | 6 | 3 |
| $l_0$ | 5 | 2 | 3 | 7 |

Easy to minimize

Neighbourhood

$E$ : $(a,b) \in E$ iff $V_a$ and $V_b$ are neighbours

$E = \{(a,b), (b,c), (c,d)\}$

Pairwise Potential

$Q(f) = \sum_a \theta_{a;f(a)} + \sum_{(a,b)} \theta_{ab;f(a)f(b)}$

| $(i, k)$ | $\theta_{ab;ik}$ | $\theta_{bc;ik}$ | $\theta_{cd;ik}$ |
| --- | --- | --- | --- |
| (0, 0) | 0 | 1 | 0 |
| (0, 1) | 1 | 3 | 1 |
| (1, 0) | 1 | 2 | 4 |
| (1, 1) | 0 | 0 | 1 |

Parameter $\theta$

$Q(f; \theta) = \sum_a \theta_{a;f(a)} + \sum_{(a,b)} \theta_{ab;f(a)f(b)}$

Outline

Problem Formulation

Energy Function

MAP Estimation

Computing min-marginals

Reparameterization

Belief Propagation

Tree-reweighted Message Passing

MAP Estimation

(Figure: the four-variable chain with its unary and pairwise potentials.)

$Q(f; \theta) = \sum_a \theta_{a;f(a)} + \sum_{(a,b)} \theta_{ab;f(a)f(b)}$

Example: f = {1, 0, 0, 1}

2 + 1 + 2 + 1 + 3 + 1 + 3 = 13

Example: f = {0, 1, 1, 0}

5 + 1 + 4 + 0 + 6 + 4 + 7 = 27

$f^* = \arg\min_f Q(f; \theta)$

$q^* = \min_f Q(f; \theta) = Q(f^*; \theta)$
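The two sums above can be checked with a few lines of Python. This is a minimal sketch: the dictionary layout and the names `unary`, `pairwise` and `energy` are illustrative choices, and the potential values are the ones implied by the worked sums and the labelling table of this example.

```python
# Potentials of the four-variable chain, indexed by label (0 or 1).
# Assumption: values are read off the worked sums and the labelling table.
unary = {"a": [5, 2], "b": [2, 4], "c": [3, 6], "d": [7, 3]}
pairwise = {
    ("a", "b"): [[0, 1], [1, 0]],
    ("b", "c"): [[1, 3], [2, 0]],
    ("c", "d"): [[0, 1], [4, 1]],
}

def energy(f, unary, pairwise):
    """Q(f; theta): sum of unary terms plus sum of pairwise terms."""
    total = sum(unary[a][f[a]] for a in unary)
    total += sum(theta[f[a]][f[b]] for (a, b), theta in pairwise.items())
    return total

f1 = {"a": 1, "b": 0, "c": 0, "d": 1}
f2 = {"a": 0, "b": 1, "c": 1, "d": 0}
print(energy(f1, unary, pairwise))  # 13
print(energy(f2, unary, pairwise))  # 27
```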

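MAP Estimation

16 possible labellings

| f(a) | f(b) | f(c) | f(d) | Q(f; θ) |
| --- | --- | --- | --- | --- |
| 0 | 0 | 0 | 0 | 18 |
| 0 | 0 | 0 | 1 | 15 |
| 0 | 0 | 1 | 0 | 27 |
| 0 | 0 | 1 | 1 | 20 |
| 0 | 1 | 0 | 0 | 22 |
| 0 | 1 | 0 | 1 | 19 |
| 0 | 1 | 1 | 0 | 27 |
| 0 | 1 | 1 | 1 | 20 |
| 1 | 0 | 0 | 0 | 16 |
| 1 | 0 | 0 | 1 | 13 |
| 1 | 0 | 1 | 0 | 25 |
| 1 | 0 | 1 | 1 | 18 |
| 1 | 1 | 0 | 0 | 18 |
| 1 | 1 | 0 | 1 | 15 |
| 1 | 1 | 1 | 0 | 23 |
| 1 | 1 | 1 | 1 | 16 |

f* = {1, 0, 0, 1}

q* = 13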
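The table can be reproduced by brute force. The sketch below enumerates all 16 labellings of the chain and picks the cheapest one; the list layout and the names `unary`, `pairwise`, `energy` and `table` are illustrative, and the potential values are those implied by the table itself.

```python
from itertools import product

# Assumption: potentials indexed as unary[variable][label], pairwise[edge][i][k].
unary = [[5, 2], [2, 4], [3, 6], [7, 3]]          # V_a, V_b, V_c, V_d
pairwise = [[[0, 1], [1, 0]],                     # edge (a, b)
            [[1, 3], [2, 0]],                     # edge (b, c)
            [[0, 1], [4, 1]]]                     # edge (c, d)

def energy(f):
    """Q(f; theta) for a labelling f given as a tuple of labels."""
    q = sum(unary[v][f[v]] for v in range(len(f)))
    q += sum(pairwise[e][f[e]][f[e + 1]] for e in range(len(f) - 1))
    return q

# Enumerate every labelling: 2^4 = 16 entries.
table = {f: energy(f) for f in product((0, 1), repeat=4)}
f_star = min(table, key=table.get)
q_star = table[f_star]
print(f_star, q_star)  # (1, 0, 0, 1) 13
```

Enumeration is only feasible because the example is tiny; the number of labellings grows exponentially with the number of variables.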

Computational Complexity

Segmentation: $2^{|V|}$ labellings, |V| = number of pixels ≈ 320 * 480 = 153600

Detection: $|L|^{|V|}$ labellings, |L| = number of pixels ≈ 153600

Stereo: $|L|^{|V|}$ labellings, |V| = number of pixels ≈ 153600

Can we do better than brute-force?

MAP Estimation is NP-hard !!

Exact algorithms do exist for special cases

Good approximate algorithms for general case
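To get a feel for the size of the brute-force search space, the following sketch counts the decimal digits of $2^{|V|}$ for the segmentation example. The helper name `num_labellings_digits` is hypothetical; the count uses logarithms rather than building the number itself.

```python
import math

def num_labellings_digits(num_labels, num_vertices):
    """Number of decimal digits of num_labels ** num_vertices."""
    return math.floor(num_vertices * math.log10(num_labels)) + 1

pixels = 320 * 480                      # |V| for the segmentation example
digits = num_labellings_digits(2, pixels)
print(pixels, digits)
```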

But first two important definitions

Outline

Problem Formulation

Energy Function

MAP Estimation

Computing min-marginals

Reparameterization

Belief Propagation

Tree-reweighted Message Passing

Min-Marginals

Min-marginal $q_{a;i} = \min_f Q(f; \theta)$ such that $f(a) = i$

Not a marginal (no summation)

Min-Marginals

16 possible labellings

$q_{a;0} = 15$: the smallest Q(f; θ) among the 8 labellings with f(a) = 0, attained at f = {0, 0, 0, 1}

$q_{a;1} = 13$: the smallest Q(f; θ) among the 8 labellings with f(a) = 1, attained at f = {1, 0, 0, 1}

Min-Marginals and MAP

Minimum min-marginal of any variable = energy of MAP labelling

$\min_i q_{a;i} = \min_i \left( \min_f Q(f; \theta) \text{ such that } f(a) = i \right) = \min_f Q(f; \theta)$

because $V_a$ has to take one label
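The identity above can be checked numerically on the four-variable chain. This is a brute-force sketch for illustration only; the names `min_marginal`, `labellings` and the list layout of the potentials are assumptions, not part of the original slides.

```python
from itertools import product

# Assumption: potentials of the four-variable chain, indexed by label.
unary = [[5, 2], [2, 4], [3, 6], [7, 3]]
pairwise = [[[0, 1], [1, 0]], [[1, 3], [2, 0]], [[0, 1], [4, 1]]]

def energy(f):
    q = sum(unary[v][f[v]] for v in range(len(f)))
    return q + sum(pairwise[e][f[e]][f[e + 1]] for e in range(len(f) - 1))

labellings = list(product((0, 1), repeat=4))

def min_marginal(v, i):
    """q_{v;i}: minimum energy over labellings that assign label i to variable v."""
    return min(energy(f) for f in labellings if f[v] == i)

q_a = [min_marginal(0, i) for i in (0, 1)]
q_star = min(energy(f) for f in labellings)
print(q_a, q_star)  # [15, 13] 13
```

For every variable, the smaller of its two min-marginals equals `q_star`, which is the statement of the slide.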

Summary

Energy Function

$Q(f; \theta) = \sum_a \theta_{a;f(a)} + \sum_{(a,b)} \theta_{ab;f(a)f(b)}$

MAP Estimation

$f^* = \arg\min_f Q(f; \theta)$

Min-marginals

$q_{a;i} = \min_f Q(f; \theta)$ s.t. $f(a) = i$

Outline

Problem Formulation

Reparameterization

Belief Propagation

Tree-reweighted Message Passing

Reparameterization

(Figure: two variables $V_a$ and $V_b$ with $\theta_{a;0} = 5$, $\theta_{a;1} = 2$, $\theta_{b;0} = 2$, $\theta_{b;1} = 4$, $\theta_{ab;00} = \theta_{ab;11} = 0$, $\theta_{ab;01} = \theta_{ab;10} = 1$.)

Add a constant to all $\theta_{a;i}$

Subtract that constant from all $\theta_{b;k}$

Example with the constant 2:

| f(a) | f(b) | Q(f; θ') |
| --- | --- | --- |
| 0 | 0 | 7 + 2 - 2 |
| 0 | 1 | 10 + 2 - 2 |
| 1 | 0 | 5 + 2 - 2 |
| 1 | 1 | 6 + 2 - 2 |

$Q(f; \theta') = Q(f; \theta)$

Reparameterization

Add a constant to one $\theta_{b;k}$

Subtract that constant from $\theta_{ab;ik}$ for all i

Example with the constant 3 and k = 1:

| f(a) | f(b) | Q(f; θ') |
| --- | --- | --- |
| 0 | 0 | 7 |
| 0 | 1 | 10 - 3 + 3 |
| 1 | 0 | 5 |
| 1 | 1 | 6 - 3 + 3 |

$Q(f; \theta') = Q(f; \theta)$

Reparameterization

(Figures: three examples of reparameterizing a two-variable energy, each moving constants between unary and pairwise potentials.)

$\theta'_{a;i} = \theta_{a;i} + M_{ba;i}$

$\theta'_{b;k} = \theta_{b;k} + M_{ab;k}$

$\theta'_{ab;ik} = \theta_{ab;ik} - M_{ab;k} - M_{ba;i}$

$Q(f; \theta') = Q(f; \theta)$

Reparameterization

$\theta'$ is a reparameterization of $\theta$, iff

$Q(f; \theta') = Q(f; \theta)$, for all f

Equivalently (Kolmogorov, PAMI, 2006)

$\theta'_{a;i} = \theta_{a;i} + M_{ba;i}$

$\theta'_{b;k} = \theta_{b;k} + M_{ab;k}$

$\theta'_{ab;ik} = \theta_{ab;ik} - M_{ab;k} - M_{ba;i}$
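The invariance is easy to verify on the two-variable example. In the sketch below the constants `m_ab` and `m_ba` are arbitrary numbers chosen for illustration, and the function names are hypothetical; any other constants would leave the energies unchanged as well.

```python
from itertools import product

theta_a, theta_b = [5, 2], [2, 4]
theta_ab = [[0, 1], [1, 0]]

def energy(f, ua, ub, pab):
    i, k = f
    return ua[i] + ub[k] + pab[i][k]

def reparameterize(ua, ub, pab, m_ab, m_ba):
    """Move the constants M_ab;k and M_ba;i from the edge into the unary terms."""
    new_a = [ua[i] + m_ba[i] for i in range(len(ua))]
    new_b = [ub[k] + m_ab[k] for k in range(len(ub))]
    new_ab = [[pab[i][k] - m_ab[k] - m_ba[i] for k in range(len(ub))]
              for i in range(len(ua))]
    return new_a, new_b, new_ab

# Arbitrary illustrative constants (assumption, not from the slides).
m_ab, m_ba = [3, -1], [2, 7]
new_a, new_b, new_ab = reparameterize(theta_a, theta_b, theta_ab, m_ab, m_ba)

for f in product((0, 1), repeat=2):
    print(f, energy(f, theta_a, theta_b, theta_ab), energy(f, new_a, new_b, new_ab))
```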

Recap

MAP Estimation

$f^* = \arg\min_f Q(f; \theta)$

$Q(f; \theta) = \sum_a \theta_{a;f(a)} + \sum_{(a,b)} \theta_{ab;f(a)f(b)}$

Min-marginals

$q_{a;i} = \min_f Q(f; \theta)$ s.t. $f(a) = i$

Reparameterization

$Q(f; \theta') = Q(f; \theta)$, for all f

Outline

Problem Formulation

Reparameterization

Belief Propagation

Exact MAP for Chains and Trees

Approximate MAP for general graphs

Computational Issues and Theoretical Properties

Tree-reweighted Message Passing

Belief Propagation

Belief Propagation gives exact MAP for chains

Remember, some MAP problems are easy

Exact MAP for trees

Clever Reparameterization

Two Variables

(Figure: $V_a$ and $V_b$ with $\theta_{a;0} = 5$, $\theta_{a;1} = 2$, $\theta_{b;0} = 2$, $\theta_{b;1} = 4$, $\theta_{ab;00} = \theta_{ab;11} = 0$, $\theta_{ab;01} = \theta_{ab;10} = 1$.)

Add a constant to one $\theta_{b;k}$

Subtract that constant from $\theta_{ab;ik}$ for all i

Choose the right constant: $\theta'_{b;k} = q_{b;k}$

$M_{ab;0} = \min \{ \theta_{a;0} + \theta_{ab;00},\ \theta_{a;1} + \theta_{ab;10} \} = \min \{ 5 + 0,\ 2 + 1 \} = 3$, attained at f(a) = 1

$\theta'_{b;0} = 2 + 3 = 5 = q_{b;0}$, $\theta'_{ab;00} = -3$, $\theta'_{ab;10} = -2$

Potentials along the red path add up to 0

$M_{ab;1} = \min \{ \theta_{a;0} + \theta_{ab;01},\ \theta_{a;1} + \theta_{ab;11} \} = \min \{ 5 + 1,\ 2 + 0 \} = 2$, attained at f(a) = 1

$\theta'_{b;1} = 4 + 2 = 6 = q_{b;1}$, $\theta'_{ab;01} = -1$, $\theta'_{ab;11} = -2$

Minimum of min-marginals = MAP estimate

f*(b) = 0, f*(a) = 1

We get all the min-marginals of $V_b$

Recap

We only need to know two sets of equations

General form of Reparameterization

$\theta'_{a;i} = \theta_{a;i} + M_{ba;i}$

$\theta'_{b;k} = \theta_{b;k} + M_{ab;k}$

$\theta'_{ab;ik} = \theta_{ab;ik} - M_{ab;k} - M_{ba;i}$

Reparameterization of (a,b) in Belief Propagation

$M_{ab;k} = \min_i \{ \theta_{a;i} + \theta_{ab;ik} \}$

$M_{ba;i} = 0$
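A minimal sketch of this edge reparameterization on the two-variable example follows. The function name `reparameterize_edge` and its return layout are illustrative; it computes $M_{ab;k}$, remembers which label of $V_a$ attains each minimum, and applies the update with $M_{ba;i} = 0$.

```python
theta_a = [5, 2]
theta_b = [2, 4]
theta_ab = [[0, 1], [1, 0]]

def reparameterize_edge(theta_a, theta_b, theta_ab):
    """Belief propagation update of edge (a, b) with M_ba;i = 0."""
    num_a, num_b = len(theta_a), len(theta_b)
    messages, argmins = [], []
    for k in range(num_b):
        candidates = [theta_a[i] + theta_ab[i][k] for i in range(num_a)]
        m = min(candidates)
        messages.append(m)                    # M_ab;k
        argmins.append(candidates.index(m))   # best f(a) given f(b) = k
    new_b = [theta_b[k] + messages[k] for k in range(num_b)]
    new_ab = [[theta_ab[i][k] - messages[k] for k in range(num_b)]
              for i in range(num_a)]
    return messages, argmins, new_b, new_ab

messages, argmins, new_b, new_ab = reparameterize_edge(theta_a, theta_b, theta_ab)
print(messages)  # [3, 2]
print(argmins)   # [1, 1]
print(new_b)     # [5, 6], the min-marginals of V_b
print(new_ab)    # [[-3, -1], [-2, -2]]
```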

Three Variables

(Figure: chain $V_a$ - $V_b$ - $V_c$ with labels $l_0$, $l_1$; $\theta_{a;0} = 5$, $\theta_{a;1} = 2$, $\theta_{b;0} = 2$, $\theta_{b;1} = 4$, $\theta_{c;0} = 3$, $\theta_{c;1} = 6$; $\theta_{ab;00} = \theta_{ab;11} = 0$, $\theta_{ab;01} = \theta_{ab;10} = 1$; $\theta_{bc;00} = 1$, $\theta_{bc;01} = 3$, $\theta_{bc;10} = 2$, $\theta_{bc;11} = 0$.)

Reparameterize the edge (a,b) as before

$\theta'_{b;0} = 5$, $\theta'_{b;1} = 6$, with f(a) = 1 the minimizer in both cases

Potentials along the red path add up to 0

Reparameterize the edge (b,c) as before

$M_{bc;0} = \min \{ 5 + 1,\ 6 + 2 \} = 6$ with f(b) = 0

$M_{bc;1} = \min \{ 5 + 3,\ 6 + 0 \} = 6$ with f(b) = 1

$\theta'_{c;0} = 3 + 6 = 9 = q_{c;0}$, $\theta'_{c;1} = 6 + 6 = 12 = q_{c;1}$

Potentials along the red path add up to 0

f*(c) = 0, f*(b) = 0, f*(a) = 1

Generalizes to any length chain

Only Dynamic Programming

Why Dynamic Programming?

3 variables ≡ 2 variables + book-keeping

n variables ≡ (n-1) variables + book-keeping

Start from left, go to right

Reparameterize current edge (a,b)

$M_{ab;k} = \min_i \{ \theta_{a;i} + \theta_{ab;ik} \}$

$\theta'_{b;k} = \theta_{b;k} + M_{ab;k}$

$\theta'_{ab;ik} = \theta_{ab;ik} - M_{ab;k}$

Repeat

The constants $M_{ab;k}$ are the messages; the procedure is message passing
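The left-to-right procedure with its book-keeping can be sketched as a dynamic program over the four-variable chain used earlier. The function name `chain_map` is hypothetical, and instead of overwriting the pairwise potentials the sketch only keeps the running unary costs and the back-pointers, which is all the MAP labelling needs.

```python
def chain_map(unary, pairwise):
    """Forward pass with back-pointers on a chain; returns (labels, q*, last min-marginals)."""
    num_labels = len(unary[0])
    cost = list(unary[0])        # reparameterized unary potentials of the current variable
    back = []                    # book-keeping: best previous label for each current label
    for v in range(1, len(unary)):
        theta = pairwise[v - 1]
        new_cost, arg = [], []
        for k in range(num_labels):
            candidates = [cost[i] + theta[i][k] for i in range(num_labels)]
            m = min(candidates)                  # the message M_ab;k
            arg.append(candidates.index(m))
            new_cost.append(unary[v][k] + m)
        back.append(arg)
        cost = new_cost
    # cost now holds the min-marginals of the last variable.
    labels = [cost.index(min(cost))]
    for arg in reversed(back):
        labels.append(arg[labels[-1]])
    labels.reverse()
    return labels, min(cost), cost

unary = [[5, 2], [2, 4], [3, 6], [7, 3]]
pairwise = [[[0, 1], [1, 0]], [[1, 3], [2, 0]], [[0, 1], [4, 1]]]
labels, q_star, q_last = chain_map(unary, pairwise)
print(labels, q_star, q_last)  # [1, 0, 0, 1] 13 [16, 13]
```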

Why stop at dynamic programming?

Three Variables

Reparameterize the edge (c,b) as before

$\theta'_{b;i} = q_{b;i}$: $q_{b;0} = 9$, $q_{b;1} = 11$

Reparameterize the edge (b,a) as before

$\theta'_{a;i} = q_{a;i}$: $q_{a;0} = 11$, $q_{a;1} = 9$

Forward Pass, then Backward Pass

All min-marginals are computed

Belief Propagation on Chains

Start from left, go to right

Reparameterize current edge (a,b)

$M_{ab;k} = \min_i \{ \theta_{a;i} + \theta_{ab;ik} \}$

$\theta'_{b;k} = \theta_{b;k} + M_{ab;k}$

$\theta'_{ab;ik} = \theta_{ab;ik} - M_{ab;k}$

Repeat till the end of the chain

Start from right, go to left

Repeat till the end of the chain

Belief Propagation on Chains

A way of computing reparam constants

Generalizes to chains of any length

Forward Pass - Start to End: MAP estimate, min-marginals of final variable

Backward Pass - End to Start: all other min-marginals

Won't need this .. But good to know
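Both passes can be sketched as follows. Note the swapped-in formulation: this version keeps separate forward and backward messages instead of overwriting the potentials as on the slides, which yields the same min-marginals. The function name `chain_min_marginals` is an assumption.

```python
def chain_min_marginals(unary, pairwise):
    """Min-marginals q_{v;i} of every variable of a chain via two message passes."""
    n, num_labels = len(unary), len(unary[0])
    labels = range(num_labels)
    fwd = [[0] * num_labels for _ in range(n)]   # messages arriving from the left
    bwd = [[0] * num_labels for _ in range(n)]   # messages arriving from the right
    for v in range(1, n):                        # forward pass
        for k in labels:
            fwd[v][k] = min(unary[v - 1][i] + fwd[v - 1][i] + pairwise[v - 1][i][k]
                            for i in labels)
    for v in range(n - 2, -1, -1):               # backward pass
        for i in labels:
            bwd[v][i] = min(unary[v + 1][k] + bwd[v + 1][k] + pairwise[v][i][k]
                            for k in labels)
    return [[unary[v][i] + fwd[v][i] + bwd[v][i] for i in labels] for v in range(n)]

# Three-variable chain from the slides.
unary = [[5, 2], [2, 4], [3, 6]]
pairwise = [[[0, 1], [1, 0]], [[1, 3], [2, 0]]]
q = chain_min_marginals(unary, pairwise)
print(q)  # [[11, 9], [9, 11], [9, 12]]
```

Each message entry takes O(|L|) work and there are O(|E||L|) entries, so the two passes cost O(|E||L|^2) in total.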

Computational Complexity

Each constant takes O(|L|)

Number of constants - O(|E||L|)

Total: $O(|E||L|^2)$

Memory required ?

O(|E||L|)

Belief Propagation on Trees

(Figure: a tree over the variables $V_a$, $V_b$, $V_c$, $V_d$, $V_e$, $V_g$, $V_h$.)

Forward Pass: Leaf → Root

Backward Pass: Root → Leaf

All min-marginals are computed
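The leaf-to-root pass can be sketched recursively. Everything concrete below is an assumption made for illustration: the tree shape, the potential values and the names `children`, `upward` and `brute` are hypothetical and do not correspond to the tree drawn on the slide. The brute-force check at the end confirms that the pass is exact on this tree.

```python
from itertools import product

# Hypothetical tree: a is the root, b and c are children of a, d and e of b.
children = {"a": ["b", "c"], "b": ["d", "e"]}
# Hypothetical potentials, two labels per variable.
unary = {"a": [5, 2], "b": [2, 4], "c": [3, 6], "d": [7, 3], "e": [1, 4]}
pairwise = {  # pairwise[(parent, child)][parent_label][child_label]
    ("a", "b"): [[0, 1], [1, 0]],
    ("a", "c"): [[1, 3], [2, 0]],
    ("b", "d"): [[0, 1], [4, 1]],
    ("b", "e"): [[0, 2], [2, 0]],
}

def upward(v):
    """Forward pass (leaf to root): min energy of the subtree rooted at v, per label of v."""
    cost = list(unary[v])
    for c in children.get(v, []):
        child = upward(c)
        theta = pairwise[(v, c)]
        for i in range(len(cost)):
            cost[i] += min(child[k] + theta[i][k] for k in range(len(child)))
    return cost

root_min_marginals = upward("a")

def energy(f):
    q = sum(unary[v][f[v]] for v in unary)
    return q + sum(theta[f[p]][f[c]] for (p, c), theta in pairwise.items())

names = sorted(unary)
brute = min(energy(dict(zip(names, ls))) for ls in product((0, 1), repeat=len(names)))
print(root_min_marginals, brute)
```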

Outline

Problem Formulation

Reparameterization

Belief Propagation

Exact MAP for Chains and Trees

Approximate MAP for general graphs

Computational Issues and Theoretical Properties

Tree-reweighted Message Passing

Belief Propagation on Cycles

(Figure: a cycle over $V_a$, $V_b$, $V_c$, $V_d$ with unary potentials $\theta_{a;0}, \theta_{a;1}, \theta_{b;0}, \theta_{b;1}, \theta_{c;0}, \theta_{c;1}, \theta_{d;0}, \theta_{d;1}$.)

Where do we start? Arbitrarily

Reparameterize (a,b), then the remaining edges in turn

Potentials along the red path add up to 0

First case, after going around the cycle:

$\theta'_{a;0} - \theta_{a;0} = q_{a;0}$

$\theta'_{a;1} - \theta_{a;1} = q_{a;1}$

Pick minimum min-marginal. Follow red path.

Second case, with a different red path:

$\theta'_{a;1} - \theta_{a;1} = q_{a;1}$

$\theta'_{a;0} - \theta_{a;0}$ [relation symbol missing] $q_{a;0}$

Problem Solved

Otherwise: reparameterize (a,b) again

But doesn't this overcount some potentials?

Yes. But we will do it anyway

Keep reparameterizing edges in some order

Hope for convergence and a good solution
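A rough sketch of this "keep going and hope" strategy on a four-variable cycle is given below. It uses the usual message form of min-sum belief propagation rather than explicit reparameterization, runs a fixed number of sweeps in a fixed edge order, and decodes each variable from its belief. The potentials (in particular the edge (d,a)), the sweep count and all function names are hypothetical. As the slides stress, nothing guarantees convergence or optimality, so the result is only compared against brute force.

```python
from itertools import product

# Cycle a-b-c-d-a with hypothetical potentials.
unary = {"a": [5, 2], "b": [2, 4], "c": [3, 6], "d": [7, 3]}
pairwise = {  # pairwise[(u, v)][label_u][label_v]
    ("a", "b"): [[0, 1], [1, 0]],
    ("b", "c"): [[1, 3], [2, 0]],
    ("c", "d"): [[0, 1], [4, 1]],
    ("d", "a"): [[0, 2], [2, 0]],
}
LABELS = (0, 1)

def edge_cost(u, v, i, k):
    """Pairwise cost when u takes label i and v takes label k."""
    if (u, v) in pairwise:
        return pairwise[(u, v)][i][k]
    return pairwise[(v, u)][k][i]

def loopy_min_sum(num_sweeps=20):
    # msg[(u, v)][k]: message from u to v about label k of v, initialised to 0.
    msg = {}
    for (u, v) in pairwise:
        msg[(u, v)] = [0] * len(LABELS)
        msg[(v, u)] = [0] * len(LABELS)
    for _ in range(num_sweeps):
        for (u, v) in list(msg):  # fixed edge order
            # Sum of messages into u from every neighbour except v.
            incoming = [sum(m[i] for (w, x), m in msg.items() if x == u and w != v)
                        for i in LABELS]
            new = [min(unary[u][i] + incoming[i] + edge_cost(u, v, i, k) for i in LABELS)
                   for k in LABELS]
            shift = min(new)  # normalise so the messages stay bounded
            msg[(u, v)] = [m - shift for m in new]
    labelling = {}
    for v in unary:
        belief = [unary[v][i] + sum(m[i] for (w, x), m in msg.items() if x == v)
                  for i in LABELS]
        labelling[v] = belief.index(min(belief))
    return labelling

def energy(f):
    q = sum(unary[v][f[v]] for v in unary)
    return q + sum(theta[f[u]][f[v]] for (u, v), theta in pairwise.items())

result = loopy_min_sum()
best = min(energy(dict(zip("abcd", ls))) for ls in product(LABELS, repeat=4))
print(result, energy(result), best)
```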

Belief Propagation

Generalizes to any arbitrary random field

Complexity per iteration ? $O(|E||L|^2)$

Memory required ? O(|E||L|)

Outline

Problem Formulation

Reparameterization

Belief Propagation

Exact MAP for Chains and Trees

Approximate MAP for general graphs

Computational Issues and Theoretical Properties

Tree-reweighted Message Passing

Computational Issues of BP

Complexity per iteration: $O(|E||L|^2)$

Special Pairwise Potentials

$\theta_{ab;ik} = w_{ab} \, d(|i-k|)$

(Plots of d against i - k: Potts, Truncated Linear, Truncated Quadratic)

O(|E||L|) Felzenszwalb & Huttenlocher, 2004
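To illustrate why such potentials help, the sketch below writes the three distance functions and compares a naive $O(|L|^2)$ message with a linear-time message for the Potts case. The truncation parameter `cap`, the function names and the test vector `h` are assumptions for illustration; only the Potts shortcut is shown, and it relies on $w \geq 0$. The linear-time computations for truncated linear and quadratic costs use distance transforms and are not reproduced here.

```python
def potts(x):
    return 0 if x == 0 else 1

def truncated_linear(x, cap):
    return min(x, cap)

def truncated_quadratic(x, cap):
    return min(x * x, cap)

def message_naive(h, w, d):
    """M_k = min_i (h_i + w * d(|i - k|)), computed in O(|L|^2)."""
    n = len(h)
    return [min(h[i] + w * d(abs(i - k)) for i in range(n)) for k in range(n)]

def message_potts_fast(h, w):
    """Same message for the Potts model in O(|L|); assumes w >= 0."""
    floor = min(h) + w            # cheapest way to arrive from a different label
    return [min(h_k, floor) for h_k in h]

h = [5, 2, 7, 3]                  # hypothetical unary costs theta_{a;i}
print(message_naive(h, 1, potts))   # [3, 2, 3, 3]
print(message_potts_fast(h, 1))     # [3, 2, 3, 3]
```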

Computational Issues of BP

Memory requirements: O(|E||L|)

Half of original BP (Kolmogorov, 2006)

Some approximations exist (Yu, Lin, Super and Tan, 2007; Lasserre, Kannan and Winn, 2007)

But memory still remains an issue

Computational Issues of BP

Order of reparameterization

Randomly

In some fixed order

The one that results in maximum change: Residual Belief Propagation (Elidan et al., 2006)

Results

Binary Segmentation (Szeliski et al., 2008)

Labels - {foreground, background}

Unary Potentials: -log(likelihood) using learnt fg/bg models

Pairwise Potentials: 0, if same labels; $1 - \exp(|D_a - D_b|)$, if different labels

(Figures: Belief Propagation result and Global optimum)

Results

Stereo Correspondence (Szeliski et al., 2008)

Labels - {disparities}

Unary Potentials: Similarity of pixel colours

Pairwise Potentials: 0, if same labels; $1 - \exp(|D_a - D_b|)$, if different labels

(Figures: Belief Propagation result and Global optimum)

Summary of BP

Exact for chains

Exact for trees

Approximate MAP for general cases

Not even convergence guaranteed

So can we do something better?
