cps 104 pipeline.1 ARL 2001

CPS 104

Pipelining, Superscalar, Multiprocessors

Admin

Homework 6 due Wed

Projects due Wed

Final Tuesday April 28, 7pm-10pm

Review (Jie will have one to work problems)

Wr

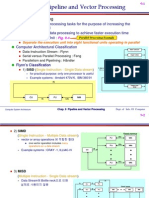

Single Cycle, Multiple Cycle, vs. Pipeline

Clk

Cycle 1

Multiple Cycle Implementation:

Cycle 2 Cycle 3 Cycle 4 Cycle 5 Cycle 6 Cycle 7 Cycle 8 Cycle 9 Cycle 10

Load Ifetch Reg Exec Mem Wr

Ifetch Reg Exec Mem

Load Store

Pipeline Implementation:

Ifetch Reg Exec Mem Wr Store

Clk

Single Cycle Implementation:

Load Store Waste

Ifetch

R-type

Ifetch Reg Exec Mem Wr R-type

Cycle 1 Cycle 2

Ifetch Reg Exec Mem
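The cycle counts in the figure can be reproduced with a short calculation. The sketch below is illustrative only: the function names are hypothetical, the four-step R-type in the multiple-cycle design is an assumption (the figure shows only its first step), and the pipeline is assumed ideal, with no stalls.

```python
# Stage names taken from the figure.
STAGES = ["Ifetch", "Reg", "Exec", "Mem", "Wr"]

# Steps per instruction in the multiple-cycle design.
# load and store follow the figure; rtype = 4 is an assumption.
MULTICYCLE_STEPS = {"load": 5, "store": 4, "rtype": 4}


def multicycle_cycles(program):
    """Each instruction finishes all of its steps before the next one starts."""
    return sum(MULTICYCLE_STEPS[kind] for kind in program)


def pipelined_cycles(program, depth=len(STAGES)):
    """Ideal pipeline: one instruction enters per cycle, no stalls (assumption)."""
    if not program:
        return 0
    return depth + len(program) - 1


program = ["load", "store", "rtype"]
print("multiple cycle:", multicycle_cycles(program))
print("pipeline:", pipelined_cycles(program))
```

Under these assumptions the pipeline's advantage grows with program length, because each additional instruction adds one cycle rather than four or five.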

Pipelining Summary

Most modern processors use pipelining

Pentium 4 has 24 (35) stage pipeline!

Intel Core 2 Duo has 14 stages

Alpha 21164 has 7 stages

Pipelining creates more headaches for exceptions, etc.

Pipelining augmented with superscalar capabilities

Superscalar Processors

Key idea: execute more than one instruction per cycle

Pipelining exploits parallelism in the stages of instruction execution

Superscalar exploits parallelism of independent instructions

Example Code:

sub $2, $1, $3

and $12, $3, $5

or $13, $6, $2

add $3, $3, $2

sw $15, 100($2)

Superscalar Execution (instructions on the same line issue together)

sub $2, $1, $3        and $12, $3, $5

or $13, $6, $2        add $3, $3, $2

sw $15, 100($2)
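The pairing above can be reproduced with a small greedy packer. This is a minimal sketch, not a model of any real issue logic: it assumes in-order dual issue, starts a new cycle whenever an instruction reads or writes a register written earlier in the same cycle, and ignores latencies and structural hazards. The helper names `parse` and `issue_packets` are hypothetical.

```python
import re


def parse(instr):
    """Return (dest, sources) for a tiny MIPS subset; sw writes no register."""
    op, operands = instr.split(None, 1)
    regs = re.findall(r"\$\d+", operands)
    if op == "sw":
        return None, set(regs)
    return regs[0], set(regs[1:])


def issue_packets(program, width=2):
    """Greedy in-order issue: close the current packet when it is full or when
    the next instruction depends on a register written inside the packet."""
    packets, current, written = [], [], set()
    for instr in program:
        dest, sources = parse(instr)
        depends = bool(sources & written) or dest in written
        if current and (len(current) == width or depends):
            packets.append(current)
            current, written = [], set()
        current.append(instr)
        if dest is not None:
            written.add(dest)
    if current:
        packets.append(current)
    return packets


program = [
    "sub $2, $1, $3",
    "and $12, $3, $5",
    "or $13, $6, $2",
    "add $3, $3, $2",
    "sw $15, 100($2)",
]
for cycle, packet in enumerate(issue_packets(program), start=1):
    print(cycle, packet)
```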

Superscalar Processors

Key Challenge: Finding the independent instructions

Instruction level parallelism (ILP)

Option 1: Compiler

Static scheduling (Alpha 21064, 21164; UltraSPARC I, II; Pentium)

Option 2: Hardware

Dynamic Scheduling (Alpha 21264; PowerPC; Pentium Pro, 3, 4)

Out-of-order instruction processing

Instruction Level Parallelism

Problems:

Program structure: branch every 4-8 instructions

Limited number of registers

Static scheduling: compiler must find and move instructions from other basic blocks

Dynamic scheduling: Hardware creates a big window to look for independent instructions

Must know branch directions before branch is executed!

Determines true dependencies.

Example Code:

sub $2, $1, $3

and $12, $3, $2

or $2, $6, $4

add $3, $3, $2

sw $15, 100($2)
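To see which dependencies in this example are true ones, the sketch below classifies each register dependence as RAW (read after write, a true dependence), WAR (write after read), or WAW (write after write). It is an illustration under simple assumptions: only register operands are tracked, and the function names are hypothetical.

```python
import re


def parse(instr):
    """Return (dest, sources) for a tiny MIPS subset; sw writes no register."""
    op, operands = instr.split(None, 1)
    regs = re.findall(r"\$\d+", operands)
    if op == "sw":
        return None, list(dict.fromkeys(regs))
    return regs[0], list(dict.fromkeys(regs[1:]))


def dependences(program):
    """Return (earlier, later, kind, register) tuples in program order."""
    last_writer = {}  # register -> index of the most recent write
    readers = {}      # register -> indices that read it since that write
    found = []
    for j, instr in enumerate(program):
        dest, sources = parse(instr)
        for reg in sources:
            if reg in last_writer:
                found.append((last_writer[reg], j, "RAW", reg))
        if dest is not None:
            for i in readers.get(dest, []):
                found.append((i, j, "WAR", dest))
            if dest in last_writer:
                found.append((last_writer[dest], j, "WAW", dest))
        for reg in sources:
            readers.setdefault(reg, []).append(j)
        if dest is not None:
            last_writer[dest] = j
            readers[dest] = []  # the new value has no readers yet
    return found


program = [
    "sub $2, $1, $3",
    "and $12, $3, $2",
    "or $2, $6, $4",
    "add $3, $3, $2",
    "sw $15, 100($2)",
]
for dep in dependences(program):
    print(dep)
```

Only the RAW entries force an ordering on the values themselves; the WAR and WAW entries arise from reusing the names $2 and $3.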

Exposing Instruction Level Parallelism

Branch prediction

Hardware can remember if branch was taken

Next time it sees the branch it uses this to predict outcome
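The "remember the last outcome" idea corresponds to a one-bit predictor. The sketch below is a simplified software model, not a description of any particular processor: the class name, the dictionary keyed by branch address, and the default prediction of "not taken" are all assumptions made for illustration.

```python
class OneBitPredictor:
    """Predict that a branch does whatever it did the last time it ran."""

    def __init__(self, default_taken=False):
        self.last_outcome = {}  # branch address -> last observed outcome
        self.default_taken = default_taken

    def predict(self, pc):
        return self.last_outcome.get(pc, self.default_taken)

    def update(self, pc, taken):
        self.last_outcome[pc] = taken


def accuracy(predictor, pc, outcomes):
    """Fraction of outcomes predicted correctly for one branch."""
    correct = 0
    for taken in outcomes:
        if predictor.predict(pc) == taken:
            correct += 1
        predictor.update(pc, taken)
    return correct / len(outcomes)


# A loop branch: taken 9 times, then falls through; the loop runs twice.
loop_branch = ([True] * 9 + [False]) * 2
print(accuracy(OneBitPredictor(), pc=0x400, outcomes=loop_branch))
```

In this model a loop branch is mispredicted twice per loop execution: once on exit and once on re-entry.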

Register renaming

Indirection! The CS solution to almost everything

During decode, map register name to real register location

New location allocated when new value is written to reg.

Example Code:

sub $2, $1, $3 # writes $2 = $p1

and $12, $3, $2 # reads $p1

or $2, $6, $4 # writes $2 = $p3

add $3, $3, $2 # reads $p3

sw $15, 100($2) # reads $p3
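The mapping in the comments above can be generated mechanically. The following sketch assumes a simple scheme in which every register write is given the next free physical register ($p1, $p2, ...) and registers not yet written in this fragment keep their architectural names; the tuple encoding of instructions and the function name `rename` are hypothetical.

```python
def rename(program):
    """program: list of (op, dest, sources); dest is None for stores."""
    mapping = {}   # architectural register -> current physical register
    next_phys = 1
    renamed = []
    for op, dest, sources in program:
        # Sources are looked up before the destination is remapped.
        new_sources = [mapping.get(reg, reg) for reg in sources]
        new_dest = None
        if dest is not None:
            new_dest = f"$p{next_phys}"  # fresh location for each new value
            next_phys += 1
            mapping[dest] = new_dest
        renamed.append((op, new_dest, new_sources))
    return renamed


program = [
    ("sub", "$2", ["$1", "$3"]),
    ("and", "$12", ["$3", "$2"]),
    ("or", "$2", ["$6", "$4"]),
    ("add", "$3", ["$3", "$2"]),
    ("sw", None, ["$15", "$2"]),
]
for instr in rename(program):
    print(instr)
```

After renaming, the two writes to $2 target different locations ($p1 and $p3), so the or no longer has to wait for the and to read the old value.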

CPU design Summary

Disadvantages of the Single Cycle Processor

Long cycle time

Cycle time is too long for all instructions except the Load

Multiple Clock Cycle Processor:

Divide the instructions into smaller steps

Execute each step (instead of the entire instruction) in one cycle

Pipeline Processor:

Natural enhancement of the multiple clock cycle processor

Each functional unit can only be used once per instruction

If an instruction is going to use a functional unit:

it must use it at the same stage as all other instructions

Pipeline Control:

Each stage's control signal depends ONLY on the instruction that is currently in that stage

Additional Notes

All modern CPUs use pipelines.

Many CPUs have 8-12 pipeline stages.

The latest generation processors (Pentium 4, PowerPC G4, Sun's UltraSPARC) use multiple pipelines to get higher speed (superscalar design).

The course CPS220: Advanced Computer Architecture I covers superscalar processors.

Now, Parallel Architectures

What is Parallel Computer Architecture?

A Parallel Computer is a collection of processing elements that cooperate to solve large problems fast

how large a collection?

how powerful are the elements?

how does it scale up?

how do they cooperate and communicate?

how is data transmitted between processors?

what are the primitive abstractions?

how does it all translate to performance?

Parallel Computation: Why and Why Not?

Pros

Performance

Cost-effectiveness (commodity parts)

Smooth upgrade path

Fault Tolerance

Cons

Difficult to parallelize applications

Requires automatic parallelization or parallel program development

Software! AAHHHH!

Simple Problem

for i = 1 to N
    A[i] = (A[i] + B[i]) * C[i]
    sum = sum + A[i]

Split the loops

Independent iterations

for i = 1 to N
    A[i] = (A[i] + B[i]) * C[i]

for i = 1 to N
    sum = sum + A[i]
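A runnable version of the split makes the point concrete: once the update of A is separated from the running sum, its iterations no longer depend on each other and can be handed to workers in any order. This is a minimal sketch with hypothetical function names; it uses Python's `concurrent.futures.ThreadPoolExecutor` only to show the structure, and in CPython threads would not be expected to speed up arithmetic like this.

```python
from concurrent.futures import ThreadPoolExecutor


def fused_loop(a, b, c):
    """Original form: the running sum ties every iteration to the previous one."""
    a = list(a)
    total = 0
    for i in range(len(a)):
        a[i] = (a[i] + b[i]) * c[i]
        total = total + a[i]
    return a, total


def split_loops(a, b, c, workers=4):
    """Split form: an independent element-wise loop, then a separate sum."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each element is computed without reference to any other element.
        new_a = list(pool.map(lambda t: (t[0] + t[1]) * t[2], zip(a, b, c)))
    total = sum(new_a)  # second loop: the reduction
    return new_a, total


a, b, c = [1, 2, 3, 4], [10, 20, 30, 40], [2, 2, 2, 2]
print(fused_loop(a, b, c))
print(split_loops(a, b, c))
```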

Chapter 7 Multicores, Multiprocessors, and Clusters 14

Parallel Programming

Parallel software is the problem

Need to get significant performance improvement

Otherwise, just use a faster uniprocessor, since it's easier!

Difficulties

Partitioning

Coordination

Communications overhead

7.2 The Difficulty of Creating Parallel Processing Programs

Amdahl's Law

Sequential part can limit speedup

Example: 100 processors, 90× speedup?

$$T_{\text{new}} = \frac{T_{\text{parallelizable}}}{100} + T_{\text{sequential}}$$

$$\text{Speedup} = \frac{1}{(1 - F_{\text{parallelizable}}) + F_{\text{parallelizable}}/100} = 90$$

Solving: $F_{\text{parallelizable}} = 0.999$

Need sequential part to be 0.1% of original time
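The arithmetic in this example can be checked numerically. The sketch below restates the speedup formula and rearranges it to solve for the parallelizable fraction; the function names are hypothetical.

```python
def speedup(fraction_parallel, processors):
    """Amdahl's Law: the sequential part is unaffected by extra processors."""
    return 1.0 / ((1.0 - fraction_parallel) + fraction_parallel / processors)


def required_fraction(target_speedup, processors):
    """Rearranged from speedup(): F = (1 - 1/S) / (1 - 1/N)."""
    return (1.0 - 1.0 / target_speedup) / (1.0 - 1.0 / processors)


f = required_fraction(90, 100)
print(round(f, 3))                 # parallelizable fraction needed
print(round((1 - f) * 100, 2))     # sequential part, as a percentage
print(round(speedup(0.9, 100), 2)) # a 10% sequential part for comparison
```

For comparison, the last line shows how strongly a 10% sequential part limits the speedup on the same 100 processors.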

Small Scale Shared Memory Multiprocessors

Small number of processors connected to one shared memory

Memory is equidistant from all processors (UMA)

Kernel can run on any processor (symmetric MP)

Intel dual/quad Pentium

Multicore

[Figure: processors P 0 through N-1, each with its own cache(s) ($) and TLB, all connected to one shared Main Memory.]

Four Example Systems

7.11 Real Stuff: Benchmarking Four Multicores

2 × quad-core Intel Xeon e5345 (Clovertown)

2 × quad-core AMD Opteron X4 2356 (Barcelona)

Four Example Systems

2 × oct-core IBM Cell QS20

2 × oct-core Sun UltraSPARC T2 5140 (Niagara 2)

Cache Coherence Problem (Initial State)

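[Figure: processors P1 and P2 share a BUS to Main Memory, which holds x; a Time axis runs alongside. No instructions are shown yet.]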

Cache Coherence Problem (Step 1)

[Figure: processors P1 and P2 share a BUS to Main Memory, which holds x; a Time axis runs alongside. One load has been issued: ld r2, x]

Cache Coherence Problem (Step 2)

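[Figure: processors P1 and P2 share a BUS to Main Memory, which holds x; a Time axis runs alongside. Two loads of x have now been issued: ld r2, x and ld r2, x]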

Cache Coherence Problem (Step 3)

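[Figure: processors P1 and P2 share a BUS to Main Memory, which holds x; a Time axis runs alongside. One instruction sequence reads ld r2, x; add r1, r2, r4; st x, r1. A separate ld r2, x appears alongside it.]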
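The sequence in Steps 1 to 3 can be replayed in a few lines to show where the stale value comes from. This is a sketch under stated assumptions, none of which come from the slides: each processor has a private write-back cache with no coherence protocol, P1 is the processor that performs the store, x starts at 5, and r4 holds 1. The `Cache` class is hypothetical.

```python
class Cache:
    """Private write-back cache with no coherence protocol (assumption)."""

    def __init__(self, memory):
        self.memory = memory
        self.lines = {}  # address -> cached value

    def load(self, address):
        if address not in self.lines:      # miss: fetch from main memory
            self.lines[address] = self.memory[address]
        return self.lines[address]

    def store(self, address, value):
        # Updates only this cache: no write-through, no invalidation.
        self.lines[address] = value


memory = {"x": 5}                      # initial value is an assumption
p1, p2 = Cache(memory), Cache(memory)

p2.load("x")                           # Step 1: ld r2, x on one processor
r2 = p1.load("x")                      # Step 2: ld r2, x on the other
r4 = 1                                 # assumed register contents
r1 = r2 + r4                           # Step 3: add r1, r2, r4
p1.store("x", r1)                      #         st x, r1

stale = p2.load("x")                   # P2 still hits its old copy
print(p1.load("x"), stale, memory["x"])
```

In this model both the other cache and main memory still hold the old value after the store, which is the inconsistency a coherence protocol has to prevent.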

Message Passing

Each processor has private physical address space

Hardware sends/receives messages between processors
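A small software model illustrates the programming style this implies: data lives in one node's private memory, and other nodes learn about it only through explicit send and receive. The sketch below is a sequential simulation with hypothetical names (`Node`, `distributed_sum`); it stands in for hardware message passing and does not model any real interconnect or library.

```python
from collections import deque


class Node:
    """A processor with private memory and an inbox for incoming messages."""

    def __init__(self, node_id, data):
        self.node_id = node_id
        self.memory = list(data)  # private: other nodes never touch this
        self.inbox = deque()

    def send(self, other, message):
        other.inbox.append((self.node_id, message))

    def receive(self):
        return self.inbox.popleft()


def distributed_sum(values, node_count=4):
    """Each node sums its own slice and sends the partial sum to node 0."""
    chunks = [values[i::node_count] for i in range(node_count)]
    nodes = [Node(i, chunk) for i, chunk in enumerate(chunks)]
    root = nodes[0]
    for node in nodes[1:]:
        node.send(root, sum(node.memory))
    total = sum(root.memory)
    while root.inbox:
        _sender, partial = root.receive()
        total += partial
    return total


print(distributed_sum(list(range(1, 101))))
```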

7.4 Clusters and Other Message-Passing Multiprocessors

Loosely Coupled Clusters

Network of independent computers

Each has private memory and OS

Connected using I/O system

E.g., Ethernet/switch, Internet

Suitable for applications with independent tasks

Web servers, databases, simulations,

High availability, scalable, affordable

Problems

Administration cost (prefer virtual machines)

Low interconnect bandwidth

cf. processor/memory bandwidth on an SMP

Grid Computing

Separate computers interconnected by long-haul networks

E.g., Internet connections

Work units farmed out, results sent back

Can make use of idle time on PCs

E.g., SETI@home, World Community Grid

Multithreading

Performing multiple threads of execution in parallel

Replicate registers, PC, etc.

Fast switching between threads

Fine-grain multithreading

Switch threads after each cycle

Interleave instruction execution

If one thread stalls, others are executed

Coarse-grain multithreading

Only switch on long stall (e.g., L2-cache miss)

Simplifies hardware, but doesn't hide short stalls (e.g., data hazards)
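The difference between the two policies shows up in a toy issue trace. The sketch below is a simplified model built on assumptions, not a description of real hardware: each thread is a list of stall lengths (cycles the thread is blocked after that instruction), one instruction issues per cycle, thread switches are free, and a "long" stall is anything of at least `long_stall` cycles. All names are hypothetical.

```python
def fine_grained(threads):
    """Each cycle, issue from the next ready thread in round-robin order."""
    n = len(threads)
    pc, ready_at = [0] * n, [0] * n
    last, cycle, trace = n - 1, 0, []
    while any(pc[t] < len(threads[t]) for t in range(n)):
        issued = None
        for k in range(1, n + 1):  # start with the thread after `last`
            t = (last + k) % n
            if pc[t] < len(threads[t]) and ready_at[t] <= cycle:
                issued = t
                break
        if issued is not None:
            ready_at[issued] = cycle + 1 + threads[issued][pc[issued]]
            pc[issued] += 1
            last = issued
        trace.append(issued)  # None marks an idle cycle
        cycle += 1
    return trace


def coarse_grained(threads, long_stall=4):
    """Stay on one thread; switch only when it finishes or stalls for long."""
    n = len(threads)
    pc, ready_at = [0] * n, [0] * n
    current, cycle, trace = 0, 0, []
    while any(pc[t] < len(threads[t]) for t in range(n)):
        finished = pc[current] >= len(threads[current])
        long_wait = ready_at[current] - cycle >= long_stall
        if finished or long_wait:
            for k in range(1, n + 1):  # next thread that still has work
                t = (current + k) % n
                if pc[t] < len(threads[t]):
                    current = t
                    break
        if ready_at[current] <= cycle:
            ready_at[current] = cycle + 1 + threads[current][pc[current]]
            pc[current] += 1
            trace.append(current)
        else:
            trace.append(None)  # short stall: the pipeline idles
        cycle += 1
    return trace


# Thread 0 has a 2-cycle (short) stall after its second instruction.
threads = [[0, 2, 0], [0, 0, 0]]
print(fine_grained(threads))
print(coarse_grained(threads))
```

In this model the fine-grained trace has no idle cycles because the other thread fills the short stall, while the coarse-grained trace idles through it.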

7.5 Hardware Multithreading
