Solution 4

Uploaded by Ahly Zamalek Masry


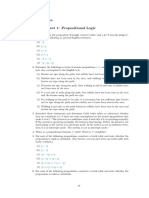

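1.1. Give three computer applications for which machine learning approaches seem appropriate and three for which they seem inappropriate. Pick applications that are not already mentioned in this chapter, and include a one-sentence justification for each.

Ans.

Machine learning: face recognition, handwriting recognition, credit card approval. In each case the decision rule is hard to write down by hand, but labeled examples are plentiful.

Not machine learning: calculating payroll, executing a database query, using Word. In each case the required behavior is fully specified in advance, so there is nothing to learn from examples.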

2.1. Explain why the size of the hypothesis space in the EnjoySport learning task is 973. How would the number of possible instances and possible hypotheses increase with the addition of the attribute WaterCurrent, which can take on the values Light, Moderate, or Strong? More generally, how does the number of possible instances and hypotheses grow with the addition of a new attribute A that takes on k possible values?

Ans.

Any hypothesis that contains ∅ for at least one attribute accepts no instance, so all such hypotheses are equivalent to the single hypothesis in which every attribute is ∅. Each attribute otherwise contributes its number of values plus one (for "?"), so the number of hypotheses is 4*3*3*3*3*3 + 1 = 973.

With the additional attribute WaterCurrent, the number of instances is 3*2*2*2*2*2*3 = 288 and the number of hypotheses is 4*3*3*3*3*3*4 + 1 = 3889.

Generally, adding an attribute A with k values multiplies the number of instances by k, giving 3*2*2*2*2*2*k = 96k, and the number of hypotheses becomes 4*3*3*3*3*3*(k+1) + 1 = 972(k+1) + 1.
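The counting argument above can be checked with a short script. This is only a sketch: the function names and the list-of-sizes representation are illustrative assumptions, not part of the exercise.

```python
from math import prod

def count_instances(sizes):
    # One instance per combination of attribute values.
    return prod(sizes)

def count_hypotheses(sizes):
    # Each attribute allows its values plus "?"; all hypotheses
    # containing the empty constraint collapse into one extra hypothesis.
    return prod(k + 1 for k in sizes) + 1

# EnjoySport: Sky has 3 values, the other five attributes have 2 each.
enjoy_sport = [3, 2, 2, 2, 2, 2]
with_water_current = enjoy_sport + [3]  # WaterCurrent: Light, Moderate, Strong

print(count_instances(enjoy_sport), count_hypotheses(enjoy_sport))
print(count_instances(with_water_current), count_hypotheses(with_water_current))
```

Appending any other k to the list gives the general case, 96k instances and 972(k+1) + 1 hypotheses.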

2.3. Consider again the EnjoySport learning task and the hypothesis space H described in Section 2.2. Let us define a new hypothesis space H' that consists of all pairwise disjunctions of the hypotheses in H. For example, a typical hypothesis in H' is (?, Cold, High, ?, ?, ?) ∨ (Sunny, ?, High, ?, ?, Same). Trace the CANDIDATE-ELIMINATION algorithm for the hypothesis space H' given the sequence of training examples from Table 2.1 (i.e., show the sequence of S and G boundary sets).

Ans.

S0 = (∅, ∅, ∅, ∅, ∅, ∅) ∨ (∅, ∅, ∅, ∅, ∅, ∅)

G0 = (?, ?, ?, ?, ?, ?) ∨ (?, ?, ?, ?, ?, ?)

Example 1: <Sunny, Warm, Normal, Strong, Warm, Same, Yes>

S1 = (Sunny, Warm, Normal, Strong, Warm, Same) ∨ (∅, ∅, ∅, ∅, ∅, ∅)

G1 = (?, ?, ?, ?, ?, ?) ∨ (?, ?, ?, ?, ?, ?)

Example 2: <Sunny, Warm, High, Strong, Warm, Same, Yes>

S2 = {(Sunny, Warm, Normal, Strong, Warm, Same) ∨ (Sunny, Warm, High, Strong, Warm, Same),

(Sunny, Warm, ?, Strong, Warm, Same) ∨ (∅, ∅, ∅, ∅, ∅, ∅)}

G2 = (?, ?, ?, ?, ?, ?) ∨ (?, ?, ?, ?, ?, ?)

Example 3: <Rainy, Cold, High, Strong, Warm, Change, No>

S3 = {(Sunny, Warm, Normal, Strong, Warm, Same) ∨ (Sunny, Warm, High, Strong, Warm, Same),

(Sunny, Warm, ?, Strong, Warm, Same) ∨ (∅, ∅, ∅, ∅, ∅, ∅)}

G3 = {(Sunny, ?, ?, ?, ?, ?) ∨ (?, Warm, ?, ?, ?, ?),

(Sunny, ?, ?, ?, ?, ?) ∨ (?, ?, ?, ?, ?, Same),

(?, Warm, ?, ?, ?, ?) ∨ (?, ?, ?, ?, ?, Same)}

Example 4: <Sunny, Warm, High, Strong, Cool, Change, Yes>

S4 = {(Sunny, Warm, ?, Strong, ?, ?) ∨ (Sunny, Warm, High, Strong, Warm, Same),

(Sunny, Warm, Normal, Strong, Warm, Same) ∨ (Sunny, Warm, High, Strong, ?, ?),

(Sunny, Warm, ?, Strong, ?, ?) ∨ (∅, ∅, ∅, ∅, ∅, ∅),

(Sunny, Warm, ?, Strong, Warm, Same) ∨ (Sunny, Warm, High, Strong, Cool, Change)}

G4 = {(Sunny, ?, ?, ?, ?, ?) ∨ (?, Warm, ?, ?, ?, ?),

(Sunny, ?, ?, ?, ?, ?) ∨ (?, ?, ?, ?, ?, Same),

(?, Warm, ?, ?, ?, ?) ∨ (?, ?, ?, ?, ?, Same)}
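A small consistency check for the final boundary sets is sketched below. It assumes a representation chosen here for illustration only: a conjunction is a 6-tuple whose entries are a value, "?" (any value), or None (the empty constraint ∅), and a hypothesis in H' is a pair of such tuples. The script only verifies that each listed member of S4 and G4 agrees with the four training examples; it does not derive the boundary sets.

```python
EMPTY = (None,) * 6  # conjunction that rejects every instance

def covers(conj, x):
    # A constraint matches if it is "?" or equals the instance value;
    # None never equals a value, so EMPTY covers nothing.
    return all(c == "?" or c == v for c, v in zip(conj, x))

def covers_disj(h, x):
    # A disjunctive hypothesis accepts x if either conjunct does.
    return any(covers(conj, x) for conj in h)

def consistent(h, data):
    return all(covers_disj(h, x) == label for x, label in data)

examples = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), True),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), True),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), False),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), True),
]

S4 = [
    (("Sunny", "Warm", "?", "Strong", "?", "?"),
     ("Sunny", "Warm", "High", "Strong", "Warm", "Same")),
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"),
     ("Sunny", "Warm", "High", "Strong", "?", "?")),
    (("Sunny", "Warm", "?", "Strong", "?", "?"), EMPTY),
    (("Sunny", "Warm", "?", "Strong", "Warm", "Same"),
     ("Sunny", "Warm", "High", "Strong", "Cool", "Change")),
]

ANY = ("?",) * 6
G4 = [
    (("Sunny",) + ANY[1:], ("?", "Warm") + ANY[2:]),
    (("Sunny",) + ANY[1:], ANY[:5] + ("Same",)),
    (("?", "Warm") + ANY[2:], ANY[:5] + ("Same",)),
]

for h in S4 + G4:
    print(consistent(h, examples), h)
```

The initial G0 = (ANY, ANY) fails the same check from Example 3 onward, which is why G has to be specialized at that step.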

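2.4. Consider the instance space consisting of integer points in the x, y plane and the set of hypotheses H consisting of rectangles. More precisely, hypotheses are of the form a ≤ x ≤ b, c ≤ y ≤ d, where a, b, c, and d can be any integers.

(a) Consider the version space with respect to the set of positive (+) and negative (-) training examples shown below. What is the S boundary of the version space in this case? Write out the hypotheses and draw them in on the diagram.

(b) What is the G boundary of this version space? Write out the hypotheses and draw them in.

(c) Suppose the learner may now suggest a new x, y instance and ask the trainer for its classification. Suggest a query guaranteed to reduce the size of the version space, regardless of how the trainer classifies it. Suggest one that will not.

(d) Now assume you are a teacher, attempting to teach a particular target concept (e.g., 3 ≤ x ≤ 5, 2 ≤ y ≤ 9). What is the smallest number of training examples you can provide so that the CANDIDATE-ELIMINATION algorithm will perfectly learn the target concept?

Ans.

(a) S = {4 ≤ x ≤ 6, 3 ≤ y ≤ 5}, written (a, b, c, d) = (4, 6, 3, 5).

(b) G = {3 ≤ x ≤ 8, 2 ≤ y ≤ 7}, written (a, b, c, d) = (3, 8, 2, 7).

(c) For example, (7, 6) lies inside G but outside S, so the hypotheses in the version space disagree on it and either classification eliminates some of them. A query such as (5, 4) lies inside S, so every hypothesis in the version space already classifies it as positive and a positive answer removes nothing.

(d) Six examples: two positive examples at opposite corners of the target rectangle, (3,2,+) and (5,9,+), which fix S, and four negative examples just outside each side, e.g. (2,5,-), (6,5,-), (4,1,-), (4,10,-), which force G to coincide with S. Two diagonal negatives such as (2,1,-) and (6,10,-) are not enough, because a hypothesis such as 3 ≤ x ≤ 5, 1 ≤ y ≤ 10 remains consistent with them.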
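Part (d) can be explored by brute force. The sketch below assumes a bounded grid, 0 to 12, for a, b, c, and d (the exercise allows any integers; the bound is only there to make enumeration finite) and counts the rectangles consistent with a given training set. The function name and the example sets are illustrative assumptions.

```python
from itertools import product

def version_space(examples, lo=0, hi=12):
    # Enumerate every rectangle a <= x <= b, c <= y <= d on the bounded
    # grid and keep those that classify all training examples correctly.
    consistent = []
    for a, b, c, d in product(range(lo, hi + 1), repeat=4):
        if a > b or c > d:
            continue
        if all((a <= x <= b and c <= y <= d) == label
               for x, y, label in examples):
            consistent.append((a, b, c, d))
    return consistent

# Target concept: 3 <= x <= 5, 2 <= y <= 9.
corners = [(3, 2, True), (5, 9, True)]
diagonal_negatives = corners + [(2, 1, False), (6, 10, False)]
side_negatives = corners + [(2, 5, False), (6, 5, False),
                            (4, 1, False), (4, 10, False)]

print(len(version_space(diagonal_negatives)))
print(version_space(side_negatives))
```

With one negative example just outside each of the four sides, only the target rectangle (3, 5, 2, 9) survives; with two diagonal negatives, several rectangles remain.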

2.6. Complete the proof of the version space representation theorem (Theorem 2.1).

Proof: We show that every member of $VS_{H,D}$ satisfies the right-hand side of the expression.

Let $h$ be an arbitrary member of $VS_{H,D}$; then $h$ is consistent with all training examples in $D$.

Assume $h$ does not satisfy the right-hand side of the expression, that is, $\neg(\exists s \in S)(\exists g \in G)(g \geq_g h \geq_g s) = \neg(\exists s \in S)(\exists g \in G)\,[(g \geq_g h) \wedge (h \geq_g s)]$. Hence, there does not exist $g \in G$ such that $g$ is more general than or equal to $h$, or there does not exist $s \in S$ such that $h$ is more general than or equal to $s$.

If the former holds, it contradicts the definition of $G$: $h$ is consistent with $D$, so either $h$ is itself maximally general and belongs to $G$, or some consistent hypothesis more general than $h$ is maximal and belongs to $G$. If the latter holds, it contradicts the definition of $S$ in the same way. Therefore, $h$ satisfies the right-hand side of the expression.

(Note: the assumption that the expression is not satisfied can hold only if $S$ or $G$ is empty, which happens only when the training examples are inconsistent, for example because of noise or because the target concept is not a member of $H$.)
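As an illustration rather than a proof, the theorem can be checked exhaustively on the EnjoySport hypothesis space with the four training examples of Table 2.1. The sketch below assumes the textbook attribute values for EnjoySport and uses None for the single hypothesis that rejects every instance; all names are illustrative.

```python
from itertools import product

DOMAINS = [("Sunny", "Cloudy", "Rainy"), ("Warm", "Cold"), ("Normal", "High"),
           ("Strong", "Weak"), ("Warm", "Cool"), ("Same", "Change")]
EMPTY = None  # the one hypothesis that accepts nothing

# 4*3*3*3*3*3 conjunctions without the empty constraint, plus EMPTY.
H = [EMPTY] + list(product(*[d + ("?",) for d in DOMAINS]))

def covers(h, x):
    return h is not EMPTY and all(c in ("?", v) for c, v in zip(h, x))

def geq(h1, h2):
    # True if h1 is more general than or equal to h2.
    if h2 is EMPTY:
        return True
    if h1 is EMPTY:
        return False
    return all(c1 in ("?", c2) for c1, c2 in zip(h1, h2))

D = [
    (("Sunny", "Warm", "Normal", "Strong", "Warm", "Same"), True),
    (("Sunny", "Warm", "High", "Strong", "Warm", "Same"), True),
    (("Rainy", "Cold", "High", "Strong", "Warm", "Change"), False),
    (("Sunny", "Warm", "High", "Strong", "Cool", "Change"), True),
]

# Version space by definition: every hypothesis consistent with D.
VS = [h for h in H if all(covers(h, x) == y for x, y in D)]
# S and G: minimal and maximal members of VS under the generality order.
S = [s for s in VS if not any(h != s and geq(s, h) for h in VS)]
G = [g for g in VS if not any(h != g and geq(h, g) for h in VS)]
# Right-hand side of the theorem: hypotheses sandwiched between S and G.
rhs = [h for h in H
       if any(geq(h, s) for s in S) and any(geq(g, h) for g in G)]

print(len(H), len(VS), set(rhs) == set(VS))
```

The comparison set(rhs) == set(VS) exercises both directions of the theorem on this one data set; the proof above covers the general case.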
