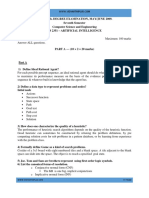

Pincer-Search Algorithm for Discovering Maximum Frequent Set

Notes by Akash Saxena

(NITJ)

Introduction

The Pincer-Search algorithm was proposed by Dao-I Lin and Zvi M. Kedem of New York University in 1997. It combines the top-down and bottom-up approaches to association rule mining and is a modification of the original Apriori algorithm of R. Agrawal and R. Srikant. The main search direction is bottom-up, as in Apriori, but the algorithm simultaneously conducts a restricted top-down search, whose main purpose is to maintain an additional data structure called the Maximum Frequent Candidate Set (MFCS). As output it produces the Maximum Frequent Set, i.e. the set of all maximal frequent itemsets, which immediately determines all frequent itemsets, since every frequent itemset is a subset of some maximal frequent itemset. The algorithm is particularly suited to databases whose maximal frequent itemsets are long. The authors took their inspiration from the notion of version space in Mitchell's work on machine learning.

Concepts used:

Let I = {i1, i2, …, im} be a set of m distinct items.

Transaction: A transaction T is defined as any subset of items in I.

Database: A database D is a set of transactions.

Itemset: A set of items is called an Itemset.

Length of Itemset: is the number of items in the itemset. Itemsets of length

‘k’ are referred to as k-itemsets.

Support Count: the number of transactions in the database that contain a particular itemset.

Support: if T is a transaction in database D and X is an itemset, then T is said to support X if X ⊆ T. The support of X is the fraction of transactions in D that support X.

Frequent Itemset: An itemset whose support count is greater than or equal

to the minimum support threshold specified by the user.

Infrequent Itemset: An itemset which is not frequent is infrequent.
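As a minimal sketch of these definitions, the following Python snippet computes the support count and support of an itemset over the customer basket database used in the worked example later in these notes. The function names `support_count`, `support` and `is_frequent` are illustrative choices, not part of the original notes.

```python
# Customer basket database from the worked example in these notes.
DATABASE = [
    {"Burger", "Coke", "Juice"},
    {"Juice", "Potato Chips"},
    {"Coke", "Burger"},
    {"Juice", "Ground Nuts"},
    {"Coke", "Ground Nuts"},
]

def support_count(itemset, database):
    """Number of transactions T in the database with itemset ⊆ T."""
    return sum(1 for transaction in database if set(itemset) <= transaction)

def support(itemset, database):
    """Fraction of transactions that support the itemset."""
    return support_count(itemset, database) / len(database)

def is_frequent(itemset, database, min_count):
    """Frequent means: support count >= the user-specified threshold."""
    return support_count(itemset, database) >= min_count

print(support_count({"Burger", "Coke"}, DATABASE))  # 2
print(support({"Coke"}, DATABASE))                  # 0.6
```

With a threshold of 2, `{"Potato Chips"}` (support count 1) would be infrequent, while with a threshold of 1 it would be frequent.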

Downward Closure Property (Basis for Top-down Search): states that “If

an itemset is frequent then all its subsets must be frequent.”

Upward Closure Property (Basis for Bottom-up Search): states that “If an

itemset is infrequent then all its supersets must be infrequent.”

Maximal Frequent Set: a frequent itemset all of whose proper supersets are infrequent. By the downward closure property, all of its subsets are frequent.

Maximum Frequent Set: is the set of all Maximal Frequent sets.
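To make the distinction concrete, here is a brute-force sketch that enumerates every itemset, keeps the frequent ones, and then keeps only those with no frequent proper superset. It is exponential in the number of items and is meant only to illustrate the definition on tiny data; the function name and the threshold of 2 are assumptions made for this illustration.

```python
from itertools import combinations

DATABASE = [
    {"Burger", "Coke", "Juice"},
    {"Juice", "Potato Chips"},
    {"Coke", "Burger"},
    {"Juice", "Ground Nuts"},
    {"Coke", "Ground Nuts"},
]

def maximal_frequent_itemsets(database, min_count):
    """Brute force: return the set of all maximal frequent itemsets."""
    db = [frozenset(t) for t in database]
    items = sorted(set().union(*db))
    # Every non-empty itemset whose support count reaches the threshold.
    frequent = [
        frozenset(combo)
        for size in range(1, len(items) + 1)
        for combo in combinations(items, size)
        if sum(1 for t in db if frozenset(combo) <= t) >= min_count
    ]
    # Maximal = no frequent proper superset exists.
    return {x for x in frequent if not any(x < y for y in frequent)}

for itemset in maximal_frequent_itemsets(DATABASE, min_count=2):
    print(sorted(itemset))
```

With a minimum support count of 2, the frequent itemsets are {Burger}, {Coke}, {Juice}, {Ground Nuts} and {Burger, Coke}, so the Maximum Frequent Set is {{Burger, Coke}, {Juice}, {Ground Nuts}}.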

Association Rule: a rule of the form R : X ⇒ Y, where X and Y are two non-empty and non-intersecting itemsets. The support of rule R is defined as the support of X ∪ Y. Its confidence is defined as support(X ∪ Y) / support(X).

Interesting Association Rule: An association rule is said to be interesting if

its support and confidence measures are equal to or greater than minimum

support and confidence thresholds (specified by user) respectively.
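The two rule measures can be sketched as follows, again on the customer basket database from the worked example. The function name `rule_metrics` is a hypothetical helper introduced for this illustration.

```python
DATABASE = [
    {"Burger", "Coke", "Juice"},
    {"Juice", "Potato Chips"},
    {"Coke", "Burger"},
    {"Juice", "Ground Nuts"},
    {"Coke", "Ground Nuts"},
]

def rule_metrics(x, y, database):
    """Return (support, confidence) of the rule X => Y."""
    x, y = set(x), set(y)
    if not x or not y or x & y:
        raise ValueError("X and Y must be non-empty and non-intersecting")
    count_xy = sum(1 for t in database if (x | y) <= t)
    count_x = sum(1 for t in database if x <= t)
    rule_support = count_xy / len(database)
    # support(X ∪ Y) / support(X) reduces to a ratio of counts.
    confidence = count_xy / count_x if count_x else 0.0
    return rule_support, confidence

print(rule_metrics({"Burger"}, {"Coke"}, DATABASE))  # (0.4, 1.0)
```

Here every transaction containing Burger also contains Coke, so the rule Burger ⇒ Coke has confidence 1.0, while the reverse rule Coke ⇒ Burger has the same support but a lower confidence (2 of the 3 Coke transactions).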

Candidate Itemsets: It is a set of itemsets, which are to be tested to

determine whether they are frequent or infrequent.

Maximum Frequent Candidate Set (MFCS): a minimum-cardinality set of itemsets such that the union of the power sets of its elements contains all itemsets known to be frequent and none of the itemsets known to be infrequent, i.e. it is a minimum-cardinality set satisfying the conditions:

FREQUENT ⊆ ⋃ { 2^X | X ∈ MFCS }

INFREQUENT ∩ ⋃ { 2^X | X ∈ MFCS } = ∅

where 2^X denotes the set of all subsets of X, and FREQUENT and INFREQUENT stand respectively for the sets of all itemsets classified as frequent and infrequent so far.
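The two covering conditions can be checked mechanically, since an itemset belongs to the union of the power sets exactly when it is a subset of some element of MFCS. The sketch below checks only these two conditions, not the minimum-cardinality requirement; the function name is an assumption, and the sample data are the L2, S2 and MFCS values from pass 2 of the worked example later in these notes.

```python
def satisfies_mfcs_conditions(mfcs, frequent, infrequent):
    """Check the two covering conditions (minimality is not checked)."""
    def covered(itemset):
        # itemset ∈ ⋃ {2^X | X ∈ MFCS}  <=>  itemset ⊆ X for some X in MFCS
        return any(itemset <= x for x in mfcs)

    all_frequent_covered = all(covered(f) for f in frequent)
    no_infrequent_covered = not any(covered(s) for s in infrequent)
    return all_frequent_covered and no_infrequent_covered

fs = frozenset
L2 = [fs({"Burger", "Coke"}), fs({"Burger", "Juice"}), fs({"Coke", "Juice"}),
      fs({"Coke", "Ground Nuts"}), fs({"Juice", "Ground Nuts"}),
      fs({"Juice", "Potato Chips"})]
S2 = [fs({"Burger", "Potato Chips"}), fs({"Burger", "Ground Nuts"}),
      fs({"Coke", "Potato Chips"}), fs({"Potato Chips", "Ground Nuts"})]
MFCS = [fs({"Burger", "Coke", "Juice"}), fs({"Coke", "Juice", "Ground Nuts"}),
        fs({"Potato Chips", "Juice"})]

print(satisfies_mfcs_conditions(MFCS, L2, S2))  # True
```

The initial MFCS, which contains the single itemset of all items, fails the second condition as soon as any infrequent itemset is known, which is why it has to be refined.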

Pincer-Search Method

Pincer Search combines the following two approaches:

Bottom-up: start from small itemsets and use the frequent ones to generate larger (superset) candidate itemsets.

Top-down: start from the largest itemsets and move down to their subsets.

It also uses two special properties:

Downward Closure Property: If an itemset is frequent, then all its subsets must be frequent.

Upward Closure Property: If an itemset is infrequent, all its supersets must

be infrequent.

It uses these two properties to prune candidate sets, which decreases the computation time considerably.

The two approaches are used for pruning in the following way:

If some maximal frequent itemset is found in the top down direction, then

this itemset can be used to eliminate (possibly many) candidates in the

bottom-up direction. The subsets of this frequent itemset will be frequent

and hence can be pruned (acc. to Downward closure property).

If an infrequent itemset is found in the bottom-up direction, then it can be used to eliminate the candidates found so far in the top-down search (by the Upward Closure Property).

This two-way approach makes use of both the properties and speeds up the

search for maximum frequent set.

The algorithm begins by generating 1-itemsets, as the Apriori algorithm does, but uses a top-down search to prune the candidates produced in each pass. This is done with the help of the MFCS.

Let MFS denote the set of maximal frequent itemsets found so far during the execution. At any time during the execution, MFCS is a superset of MFS. The algorithm terminates when MFCS equals MFS.

In each pass over database, in addition to counting support counts of

candidates in bottom-up direction, the algorithm also counts supports of

itemsets in MFCS: this set is adapted for top-down search.

Consider now some pass k, during which itemsets of size k are to be classified. If some itemset X in MFCS with cardinality greater than k is found to be frequent in this pass, then all its subsets are frequent. All its subsets of cardinality k are therefore pruned from the set of candidates considered in the bottom-up direction in this pass. They and their supersets will never be candidates again. They are not forgotten, however, and are used at the end.

Similarly, when a new itemset is found to be infrequent in the bottom-up direction, the algorithm uses it to update MFCS, so that no itemset in MFCS has this infrequent itemset as a subset.

Using MFCS, maximal frequent itemsets can be found early in the execution, which can improve performance considerably.

Note: In general, unlike the search in bottom-up direction, which goes up

one level in one pass, the top down search can go down many levels in one

pass.

Now let’s see algorithmic representation of Pincer search so that we can see

how above concepts are applied:

Pincer Search Method

L0 = ∅; k = 1; C1 = {{i} | i ∈ I}; S0 = ∅

MFCS = {{i1, i2, …, im}}; MFS = ∅

do until Ck = ∅ and Sk-1 = ∅

    read the database and count supports for Ck and MFCS

    MFS = MFS ∪ {frequent itemsets in MFCS}

    Lk = {frequent itemsets in Ck}

    Sk = {infrequent itemsets in Ck}

    call the MFCS_gen procedure if Sk ≠ ∅

    call the MFS_prune procedure

    generate candidates Ck+1 from Lk

    if any frequent itemset in Ck was removed by the MFS_prune procedure

        call the Recovery procedure to recover candidates to Ck+1

    call the MFCS_prune procedure to prune candidates in Ck+1

    k = k + 1

return MFS

MFCS_gen

for all itemsets s ∈ Sk

    for all itemsets m ∈ MFCS

        if s is a subset of m

            MFCS = MFCS \ {m}

            for all items e ∈ itemset s

                if m \ {e} is not a subset of any itemset in MFCS

                    MFCS = MFCS ∪ {m \ {e}}

return MFCS
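A direct Python transcription of MFCS_gen might look like the sketch below. Itemsets are represented as `frozenset` objects, which is an implementation choice rather than anything the notes prescribe, and the sample call uses the S2 and initial MFCS of the worked example later in these notes.

```python
def mfcs_gen(mfcs, infrequent):
    """Update MFCS so that no element contains a newly found infrequent itemset."""
    mfcs = set(mfcs)
    for s in infrequent:
        # Iterate over a snapshot, because mfcs is modified inside the loop.
        for m in [m for m in mfcs if s <= m]:
            mfcs.remove(m)
            for e in s:
                reduced = m - {e}
                # Keep only itemsets not already covered by another element.
                if not any(reduced <= other for other in mfcs):
                    mfcs.add(reduced)
    return mfcs

fs = frozenset
S2 = [fs({"Burger", "Potato Chips"}), fs({"Burger", "Ground Nuts"}),
      fs({"Coke", "Potato Chips"}), fs({"Potato Chips", "Ground Nuts"})]
start = {fs({"Burger", "Coke", "Juice", "Potato Chips", "Ground Nuts"})}

for element in mfcs_gen(start, S2):
    print(sorted(element))
```

For this input the result is {{Burger, Coke, Juice}, {Coke, Juice, Ground Nuts}, {Juice, Potato Chips}}; the printing order may vary because sets are unordered.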

Recovery

for all itemsets l ∈ Lk

    for all itemsets m ∈ MFS

        if the first k-1 items in l are also in m

            (suppose the j-th item of m is the (k-1)-th item of l)

            for i from j+1 to |m|

                Ck+1 = Ck+1 ∪ {{l.item1, …, l.itemk, m.itemi}}
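The Recovery procedure can be sketched in Python as follows. Itemsets are stored as sorted tuples so that "the first k-1 items" is well defined, and j is taken to be the position in m of the (k-1)-th item of l. The integer items in the sample call are hypothetical data chosen for illustration, and the handling of k = 1 (start from the first item of m) is an assumption of this sketch.

```python
def recover(l_k, mfs, k):
    """Recover (k+1)-candidates lost because MFS_prune removed itemsets from L_k.

    l_k and mfs are collections of sorted tuples; every l in l_k has length k.
    """
    recovered = set()
    for l in l_k:
        for m in mfs:
            prefix = l[:k - 1]
            if all(item in m for item in prefix):
                # Index just after the (k-1)-th item of l inside m.
                start = m.index(l[k - 2]) + 1 if k >= 2 else 0
                for i in range(start, len(m)):
                    if m[i] not in l:
                        recovered.add(tuple(sorted(l + (m[i],))))
    return recovered

# Hypothetical state: (1, 2, 3, 4, 5) is already in MFS, so its 3-subsets
# (for example (2, 4, 5)) were pruned from L3 and cannot take part in the join.
L3 = {(2, 4, 6), (2, 5, 6), (4, 5, 6)}
MFS = {(1, 2, 3, 4, 5)}
print(recover(L3, MFS, k=3))  # {(2, 4, 5, 6)}
```

In this sample, the usual join of L3 cannot produce (2, 4, 5, 6) because (2, 4, 5) is missing from L3; the recovery step restores it by extending (2, 4, 6) with the item 5 taken from the MFS element.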

MFS_prune

for all itemsets c in Lk

    if c is a subset of any itemset in the current MFS

        delete c from Lk

MFCS_prune

for all itemsets c in Ck+1

    if c is not a subset of any itemset in the current MFCS

        delete c from Ck+1
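Both pruning procedures are simple subset filters, as the following sketch shows. It returns new sets instead of deleting in place, which is a design choice of this illustration; the sample itemsets are hypothetical inputs built from the product names of the worked example.

```python
def mfs_prune(l_k, mfs):
    """Drop itemsets already known to be frequent via an element of MFS."""
    return {c for c in l_k if not any(c <= m for m in mfs)}

def mfcs_prune(c_next, mfcs):
    """Drop candidates that cannot be frequent: not under any MFCS element."""
    return {c for c in c_next if any(c <= m for m in mfcs)}

fs = frozenset
# Hypothetical inputs for illustration.
l_k = {fs({"Burger", "Coke"}), fs({"Coke", "Ground Nuts"})}
mfs = {fs({"Burger", "Coke", "Juice"})}
print(mfs_prune(l_k, mfs))  # only {Coke, Ground Nuts} remains

c_next = {fs({"Burger", "Coke", "Juice"}),
          fs({"Juice", "Potato Chips", "Ground Nuts"})}
mfcs = {fs({"Burger", "Coke", "Juice"}), fs({"Juice", "Potato Chips"})}
print(mfcs_prune(c_next, mfcs))  # only {Burger, Coke, Juice} remains
```

MFS_prune removes work that is already done (the itemset is known to be frequent), whereas MFCS_prune removes work that is pointless (the itemset contains a known infrequent itemset).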

MFCS is initialized to contain one itemset, which contains all of the

database items. MFCS is updated whenever new infrequent itemsets are

found. If an itemset is found to be frequent then its subsets will not

participate in subsequent support counting and candidate generation steps. If

some itemsets in Lk are removed, the algorithm will call the recovery

procedure to recover missing candidates.
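Putting the pieces together, the sketch below is a simplified, runnable variant of the two-way search, not a faithful transcription of the pseudocode. Two simplifications are assumptions of this illustration: candidates for the next pass are produced by brute-force enumeration of the subsets of unclassified MFCS elements (instead of the join, Recovery and prune procedures), and the loop runs until no candidates remain and MFCS equals MFS. The enumeration is exponential, so this is suitable only for tiny databases.

```python
from itertools import combinations

def pincer_search(transactions, min_count):
    """Simplified Pincer-Search sketch: returns the set of maximal frequent itemsets."""
    db = [frozenset(t) for t in transactions]
    items = sorted(set().union(*db))

    def count(itemset):
        return sum(1 for t in db if itemset <= t)

    def covered(itemset, family):
        return any(itemset <= member for member in family)

    mfcs = {frozenset(items)}
    mfs = set()
    candidates = {frozenset([i]) for i in items}  # C_1
    k = 1
    while candidates or mfcs != mfs:
        # One pass over the data: classify MFCS elements (top-down) and C_k (bottom-up).
        mfs |= {m for m in mfcs if m not in mfs and count(m) >= min_count}
        frequent = {c for c in candidates if count(c) >= min_count}  # L_k
        infrequent = candidates - frequent                           # S_k
        # MFCS_gen: split every MFCS element that contains an infrequent itemset.
        for s in infrequent:
            for m in [m for m in mfcs if s <= m]:
                mfcs.remove(m)
                for e in s:
                    reduced = m - {e}
                    if not covered(reduced, mfcs):
                        mfcs.add(reduced)
        # Brute-force candidate generation (stands in for join + Recovery + prunes):
        # (k+1)-itemsets under an unclassified MFCS element, not already covered by
        # MFS, whose k-subsets are all known to be frequent.
        next_candidates = set()
        for m in mfcs - mfs:
            for combo in combinations(sorted(m), k + 1):
                c = frozenset(combo)
                if covered(c, mfs):
                    continue
                if all(frozenset(sub) in frequent or covered(frozenset(sub), mfs)
                       for sub in combinations(combo, k)):
                    next_candidates.add(c)
        candidates = next_candidates
        k += 1
    return mfs

DATABASE = [
    {"Burger", "Coke", "Juice"},
    {"Juice", "Potato Chips"},
    {"Coke", "Burger"},
    {"Juice", "Ground Nuts"},
    {"Coke", "Ground Nuts"},
]
for itemset in pincer_search(DATABASE, min_count=1):
    print(sorted(itemset))
```

On the customer basket database of the example that follows, with a minimum support count of 1, this returns {Burger, Coke, Juice}, {Juice, Potato Chips}, {Juice, Ground Nuts} and {Coke, Ground Nuts}.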

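Let's apply this algorithm to the following example. The walkthrough uses a minimum support count of 1, i.e. every itemset that occurs in at least one transaction is treated as frequent, as the pass results below show.

Example 1: Customer Basket Database

Transaction | Products

1 | Burger, Coke, Juice

2 | Juice, Potato Chips

3 | Coke, Burger

4 | Juice, Ground Nuts

5 | Coke, Ground Nuts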

Step 1: L0 = ∅, k = 1

C1 = {{Burger}, {Coke}, {Juice}, {Potato Chips}, {Ground Nuts}}

MFCS = {{Burger, Coke, Juice, Potato Chips, Ground Nuts}}

MFS = ∅

Pass 1: The database is read to count supports as follows:

{Burger} 2, {Coke} 3, {Juice} 3, {Potato Chips} 1, {Ground Nuts} 2

{Burger, Coke, Juice, Potato Chips, Ground Nuts} 0

So MFCS = {{Burger, Coke, Juice, Potato Chips, Ground Nuts}} and MFS = ∅

L1 = {{Burger}, {Coke}, {Juice}, {Potato Chips}, {Ground Nuts}}

S1 = ∅

Since S1 = ∅, MFCS does not need to be updated at this point.

C2 = {{Burger, Coke}, {Burger, Juice}, {Burger, Potato Chips}, {Burger, Ground Nuts},

{Coke, Juice}, {Coke, Potato Chips}, {Coke, Ground Nuts}, {Juice, Potato Chips},

{Juice, Ground Nuts}, {Potato Chips, Ground Nuts}}

Pass 2: Read the database to count the supports of the elements of C2 and MFCS:

{Burger, Coke} 2, {Burger, Juice} 1,

{Burger, Potato Chips} 0, {Burger, Ground Nuts} 0,

{Coke, Juice} 1, {Coke, Potato Chips} 0,

{Coke, Ground Nuts} 1, {Juice, Potato Chips} 1,

{Juice, Ground Nuts} 1, {Potato Chips, Ground Nuts} 0

{Burger, Coke, Juice, Potato Chips, Ground Nuts} 0

MFS = ∅

L2 = {{Burger, Coke}, {Burger, Juice}, {Coke, Juice}, {Coke, Ground Nuts}, {Juice, Ground Nuts}, {Juice, Potato Chips}}

S2 = {{Burger, Potato Chips}, {Burger, Ground Nuts}, {Coke, Potato Chips}, {Potato Chips, Ground Nuts}}

Since S2 ≠ ∅, MFCS_gen is applied:

For {Burger, Potato Chips} in S2 and {Burger, Coke, Juice, Potato Chips, Ground Nuts} in MFCS, the MFCS element is replaced by two new elements: {Burger, Coke, Juice, Ground Nuts} and {Coke, Juice, Potato Chips, Ground Nuts}.

For {Burger, Ground Nuts} in S2 and {Coke, Juice, Potato Chips, Ground Nuts} in MFCS, no action is needed, since {Burger, Ground Nuts} is not contained in this element of MFCS.

For {Burger, Ground Nuts} in S2 and {Burger, Coke, Juice, Ground Nuts} in MFCS, we get two new elements: {Burger, Coke, Juice} and {Coke, Juice, Ground Nuts}. Since {Coke, Juice, Ground Nuts} is a subset of {Coke, Juice, Potato Chips, Ground Nuts}, which is already in MFCS, it is not added.

Now, MFCS = {{Burger, Coke, Juice}, {Coke, Juice, Potato Chips, Ground Nuts}}

For {Coke, Potato Chips} in S2, the element {Coke, Juice, Potato Chips, Ground Nuts} is replaced by {Potato Chips, Juice, Ground Nuts} and {Coke, Juice, Ground Nuts}, so we get

MFCS = {{Burger, Coke, Juice}, {Coke, Juice, Ground Nuts}, {Potato Chips, Juice, Ground Nuts}}

For {Potato Chips, Ground Nuts} in S2, the element {Potato Chips, Juice, Ground Nuts} is replaced by {Potato Chips, Juice}; {Juice, Ground Nuts} is not added because it is a subset of {Coke, Juice, Ground Nuts}. So we get

MFCS = {{Burger, Coke, Juice}, {Coke, Juice, Ground Nuts}, {Potato Chips, Juice}}

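Now, we generate candidate sets by joining the itemsets of L2 and pruning with MFCS:

C3 = {{Burger, Coke, Juice}, {Coke, Juice, Ground Nuts}}

Pass 3: Read the database to count the supports of the elements of C3 and MFCS:

{Burger, Coke, Juice} 1, {Coke, Juice, Ground Nuts} 0, {Potato Chips, Juice} 1

MFS = {{Burger, Coke, Juice}, {Potato Chips, Juice}}

S3 = {{Coke, Juice, Ground Nuts}}

For {Coke, Juice, Ground Nuts} in S3, MFCS_gen replaces this element of MFCS by {Coke, Ground Nuts} and {Juice, Ground Nuts}; {Coke, Juice} is not added because it is a subset of {Burger, Coke, Juice}. So we get

MFCS = {{Burger, Coke, Juice}, {Potato Chips, Juice}, {Coke, Ground Nuts}, {Juice, Ground Nuts}}

No 4-itemset candidates can be generated, so C4 = ∅. The next pass finds the two new elements of MFCS frequent (each has support 1, as counted in pass 2), which gives

MFS = MFCS = {{Burger, Coke, Juice}, {Potato Chips, Juice}, {Coke, Ground Nuts}, {Juice, Ground Nuts}}

Here the algorithm stops, since no more candidates need to be tested.

Then we do one scan to calculate the actual support counts of all maximal frequent itemsets and their subsets, and generate rules using the minimum confidence threshold to get all interesting rules.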

x-------------x
