Think locally, act locally: The Way of Life of Cheap-PSO, an Adaptive Particle Swarm Optimizer

Maurice Clerc

maurice.clerc@writeme.com

Abstract.

In Particle Swarm Optimization, each particle moves in the search space and updates its velocity according to the best previous positions already found by its neighbours (and itself), trying to find an even better position. This approach has proved to be powerful, but it needs predefined parameters, such as swarm size, neighbourhood size, and some coefficients, and tuning these parameters for a given problem can be quite difficult. This paper presents a more adaptive method in which the user has almost nothing to do but describe the problem.

1 Introduction

A non-adaptive Particle Swarm Optimizer (PSO), as defined in [1, 2], cannot work well for every objective function. More precisely, you may sometimes need to run it several times with different parameter sets before finding a good one, and in such a case the method is, to be fair, globally bad. Some attempts have already been made to define a better PSO ([3-9]), for example by using selection, by modifying a coefficient during the process itself, or by clustering techniques, but each particle is still far from properly using all the information it has.

Here I present a more complete variation of PSO, systematically based on the particle's point of view. The key question is then "What would I do if I were a particle?" (see footnote 1). Note that the purpose of such an adaptive process is to be "good for some problems, never too bad", unlike a non-adaptive one, which is carefully tuned for some kinds of problems and is "excellent for some problems, but very bad for some others". It means, in particular, that the adaptive method has to be designed so that no "No Free Lunch" theorem ([11]) can be applied (here the objective function is explicitly taken into account during the adaptation process).

To illustrate this approach, I used Cheap-PSO, a simplified PSO designed to solve a lot of small problems taken from classical optimization, from equations and systems of equations, and from integer and combinatorial problems (see the Particle Swarm Central, Programs section [10]). So let us see more precisely what I mean by "simplified", "adaptive", and "small problems".

1.1 Simplified

The most general system of

equations of the PSO algorithm

needs six coefficients [12], defining,

for a given particle, its position x

and its velocity v at time t+1,

depending on the ones at time t.

Here I use a simpler one with just

two coefficients:

$$
\begin{cases}
v(t+1) = \alpha\, v(t) + b\,\bigl(p_g(t) - x(t)\bigr) \\
x(t+1) = x(t) + v(t+1)
\end{cases}
$$

Equ. 1. Basic equations for Cheap-PSO.

where $p_g$ is the best previous position found so far in the neighbourhood (including the particle itself). The convergence criterion defined in [12] is then satisfied if we have $\alpha \in [0, 1[$ and $b < b_{sup} = \alpha + 1 + 2\sqrt{\alpha}$. Note that the neighbourhood used in this paper, as in most works about PSO, is a "social" one and has nothing to do with Euclidean distances. Particles are "virtually" put on a circle, and the distance between two particles is simply the smallest number of arcs to follow to go from one to the other.

Footnote 1: If you think you are better than a stupid particle, you should try Jim Kennedy's game at the Particle Swarm Central site [10]...
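As an illustration of Equ. 1 and of the circular "social" neighbourhood, here is a minimal Python sketch. The function names (`ring_distance`, `ring_neighbourhood`, `move`) are hypothetical and are not taken from the Cheap-PSO source; the neighbourhood is assumed to be centred on the particle and to have an odd size.

```python
from typing import List, Sequence, Tuple


def ring_distance(i: int, j: int, swarm_size: int) -> int:
    """Smallest number of arcs between particles i and j on the circle."""
    d = abs(i - j) % swarm_size
    return min(d, swarm_size - d)


def ring_neighbourhood(i: int, swarm_size: int, hood_size: int) -> List[int]:
    """Indices of the particles nearest to i on the circle, i included.

    Assumption: hood_size is odd and the neighbourhood is centred on i.
    """
    if swarm_size <= hood_size:
        # The swarm is not larger than the neighbourhood: everybody is a neighbour.
        return list(range(swarm_size))
    radius = hood_size // 2
    return [(i + k) % swarm_size for k in range(-radius, radius + 1)]


def move(
    x: Sequence[float],
    v: Sequence[float],
    p_g: Sequence[float],
    alpha: float,
    b: float,
) -> Tuple[List[float], List[float]]:
    """One application of Equ. 1; returns (new position, new velocity)."""
    new_v = [alpha * vd + b * (pd - xd) for xd, vd, pd in zip(x, v, p_g)]
    new_x = [xd + nvd for xd, nvd in zip(x, new_v)]
    return new_x, new_v
```

With a swarm of 8 particles and a neighbourhood size of 5, particle 0 sees particles 6, 7, 0, 1 and 2, whatever their positions in the search space.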

1.2 Adaptive

At each time step, any particle $P_i$ has the following detailed local information about each particle $P_j$ of its neighbourhood, including itself:

- position $x_j$
- best position ever found $p_j$
- velocity $v_j$
- improvement $\Delta f_j$ (possibly negative) since the last check (difference between the previous objective function value and the current one)

and, just for itself, a given number h of previous objective function values.

It also has some global information:

- the swarm size
- the clock, telling it when it is time to check the neighbourhood. In this version, a check is done every "neighbourhood_size" time steps.

Using this information, the particle has to "think locally, act locally". It may:

- kill itself,
- generate a new particle,
- modify the coefficients $\alpha$ and b before moving.

Below, for each adaptive behaviour, I give the qualitative "reasoning" of the particle $P_i$, and the mathematical formula used in the current implementation.

1.2.1 Adaptive swarm size

Usually, the swarm size is

constant. Some authors use 20, some

others 30 [1, 8], but, anyway,

nobody has proved a given size is

really better than another one. So it

seems better to simply let the

algorithm modify the swarm size,

from time to time.

Note that I still use a constant

neighbourhood size (5), except, of

course, when the swarm size

becomes smaller than this

neighbourhood size.

Qualitative reasoning

"There has not been enough improvement in my neighbourhood, although I am the best. It's time to try to generate a new particle."

or

"There has been enough

improvement. But not because of

me, for I am the worst particle in the

neighbourhood. It's time to try to kill

myself."

Formulas

The objective function f is supposed to be defined so that it is always positive or null, and we are looking for a minimum (if necessary, we replace it by $|f(x) - S|$, where S is the given target value, accessible or not). "ENOUGH IMPROVEMENT" is more precisely defined by "improvement for at least half of the particles in the neighbourhood" (although there are some hints showing this is not an arbitrary choice, I have no complete proof, so just consider it as a rule of thumb). So, writing $\Delta f_j$ for the improvement of particle $P_j$ since the last check, we simply have

"There has been enough improvement"

$$\left|\{P_j,\ P_j \in \text{neighbourhood}(P_i),\ \Delta f_j > 0\}\right| \geq \frac{|\text{neighbourhood}(P_i)|}{2}$$

"I am the best"

$$\forall P_j,\ P_j \in \text{neighbourhood}(P_i),\ \Delta f_j < \Delta f_i$$

"I am the worst"

$$\forall P_j,\ P_j \in \text{neighbourhood}(P_i),\ \Delta f_j > \Delta f_i$$

Below is a pseudo-code of the

strategy used in Cheap-PSO.

    alpha=0.9; hood_size=5;
    cycle_size=hood_size;
    swarm_size=5;
    random initialization of the swarm; // (null velocities)
    loop until stop criterion:
        <MOVE THE SWARM>
        after cycle_size time steps:
            for each particle
                if (not_enough_improvement AND the particle is the best in its neighbourhood)
                    try to add a particle
                if (enough_improvement AND the particle is the worst in its neighbourhood)
                    try to remove this particle

The result of the procedure "try to add a particle" is partly random and depends on the swarm size. The bigger the swarm, the less probable the addition of a particle. Similarly, the smaller the swarm, the less probable the deletion of a particle. The stop criterion itself is computed by the algorithm. The user gives an objective value (target S) and an admissible error $\varepsilon$. The maximum number of evaluations to do, Max_Eval (depending on the dimensionality D of the problem and on $\varepsilon$), is re-evaluated every h time steps (in practice h=cycle_size=neighbourhood_size). In order to do that, each particle memorizes the objective function values for its h last positions. Also, of course, the process stops if there is no particle left.
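The neighbourhood check of the strategy above can be sketched in Python as follows. This is only an illustration under stated assumptions: `deltas` is a hypothetical list holding the improvements of all particles of one neighbourhood, `i` is the index of the current particle inside that list, and "best" and "worst" are tested against the other neighbours only. The random part of "try to add" and "try to remove" is not described precisely enough in the text to be reproduced, so the sketch stops at the decision.

```python
from typing import Optional, Sequence


def enough_improvement(deltas: Sequence[float]) -> bool:
    """True if at least half of the neighbourhood has improved."""
    improved = sum(1 for d in deltas if d > 0)
    return improved >= len(deltas) / 2


def is_best(i: int, deltas: Sequence[float]) -> bool:
    """True if particle i improved strictly more than every other neighbour."""
    return all(d < deltas[i] for j, d in enumerate(deltas) if j != i)


def is_worst(i: int, deltas: Sequence[float]) -> bool:
    """True if particle i improved strictly less than every other neighbour."""
    return all(d > deltas[i] for j, d in enumerate(deltas) if j != i)


def adaptation_decision(i: int, deltas: Sequence[float]) -> Optional[str]:
    """Return "try_add", "try_remove" or None for particle i."""
    enough = enough_improvement(deltas)
    if not enough and is_best(i, deltas):
        return "try_add"
    if enough and is_worst(i, deltas):
        return "try_remove"
    return None
```

For example, with improvements (-1.0, 0.5, -2.0, -0.5, -3.0) only one particle out of five has improved, so the particle with improvement 0.5 would try to generate a new particle.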

It is interesting to see the difference between the two approaches (constant swarm size and adaptive swarm size) by looking at the evolution of a global descriptor: the swarm energy. Let us see what happens on a classical small example (Rosenbrock's function on $[-50,50]^2$). The potential energy of a particle $P_i$ at time t is defined by

$$e_{p,i}(t) = \frac{|f(x_i(t)) - S|}{\varepsilon}$$

where f is the objective function, and where p is for potential. It represents the number of energy steps the particle has to go down to reach a solution point. The potential swarm energy is then

$$E_p(t) = G \sum_i e_{p,i}(t)$$

We can also define a (relative) kinetic energy by

$$e_{k,i}(t) = \frac{1}{2} \left( \frac{\lVert v_i(t) \rVert}{x_{max} - x_{min}} \right)^2$$

for a given particle $P_i$, and by

$$E_k(t) = \sum_i e_{k,i}(t)$$

for the whole swarm. In this paper, G is simply an arbitrary constant, chosen so that both $E_p$ and $E_k$ can be easily shown on the same figure. In this example, $G=10^{-14}$.
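The two energy descriptors can be computed with a few lines of Python. This is a sketch, assuming that the potential energy is the distance to the target divided by the admissible error $\varepsilon$ and that the kinetic energy uses the Euclidean norm of the velocity; the function names are hypothetical.

```python
from typing import Sequence


def potential_energy(f_value: float, target: float, eps: float) -> float:
    """Number of steps of size eps between the current value and the target."""
    return abs(f_value - target) / eps


def swarm_potential_energy(
    f_values: Sequence[float], target: float, eps: float, g: float
) -> float:
    """Scaled sum of the potential energies of all particles."""
    return g * sum(potential_energy(fv, target, eps) for fv in f_values)


def kinetic_energy(v: Sequence[float], x_min: float, x_max: float) -> float:
    """Relative kinetic energy of one particle with velocity v."""
    norm_squared = sum(c * c for c in v)
    return 0.5 * norm_squared / (x_max - x_min) ** 2


def swarm_kinetic_energy(
    velocities: Sequence[Sequence[float]], x_min: float, x_max: float
) -> float:
    """Sum of the kinetic energies of all particles."""
    return sum(kinetic_energy(v, x_min, x_max) for v in velocities)
```

Since a newly added particle brings its own potential energy, the swarm energy can increase again when the swarm grows.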

As the swarm moves, energies tend to go to zero for the constant swarm size method, but not always for the adaptive one, for, from time to time, a new particle is added. The swarm then still has the possibility to search in a larger domain, and to find a solution more quickly. Figure 1 and Figure 2 show energies versus the number of evaluations, instead of the number of time steps. For a constant swarm size, the two are equivalent, but not so for a variable swarm size; so I use this representation for easier comparisons. Of course, as there is some randomness, the exact numbers of evaluations may be different for another run, but the global "patterns" are the same. It is not true that the adaptive algorithm is always better than the constant swarm size algorithm (in terms of number of objective function evaluations). For instance, in this particular case, a constant swarm size equal to 10 is better. However, the point is that you may have to try different swarm sizes to find such a nice one, and unless you are lucky, the sum of all your trials is far worse than any single run of the adaptive method.

[Figure: Rosenbrock 2D. Swarm size=20, constant coefficients. Axes: number of evaluations; potential energy, kinetic energy and swarm size. $\varepsilon$=0.00001 after 2240 evaluations.]

Figure 1. As kinetic energy cannot appreciably re-increase, improvement near the solution is difficult.

[Figure: Rosenbrock 2D. Adaptive swarm size, constant coefficients. Axes: number of evaluations; potential energy, kinetic energy and swarm size. $\varepsilon$=0.00001 after 1598 evaluations.]

Figure 2. When the swarm does not find a solution, it globally re-increases its energy by adding new particles, that is to say its ability to explore the search space. And when the process seems to converge nicely, it decreases it again.

1.2.2 Adaptive coefficients

In the above examples, both coefficients $\alpha$ and b were constant. How can they be modified at each time step?

Qualitative reasoning

"The better I am than all my neighbours, the more I follow my own way. And vice versa."

"The better the best neighbour is than me, the more I tend to go towards it. And vice versa."

Formulas

The first reasoning is for the coefficient $\alpha$, and the second one for the coefficient b. Experimentally, it appears that what is important is not the exact shapes of the curves, but the mean values and the asymptotes. So we can use, for example, the relative improvement estimation

$$m = \frac{f(p_{g,i}) - f(p_i)}{f(p_{g,i}) + f(p_i)}$$

where $p_{g,i}$ is the best position ever found in the neighbourhood of the particle $P_i$, and

$$\alpha = \alpha_{min} + (\alpha_{max} - \alpha_{min}) \frac{e^m - 1}{e^m + 1}$$

$$b = \frac{b_{min} + b_{max}}{2} + \frac{b_{max} - b_{min}}{2} \cdot \frac{e^m - 1}{e^m + 1}$$

with, for instance, the following values: $(\alpha_{min}, \alpha_{max}) = (0.5, 0.9)$, and $(b_{min}, b_{max}) = (0.7, 0.9)$. These values are not completely arbitrary. There are some informal reasonings suggesting a constraint on $\alpha_{min}$ relative to 0.5, that $b_{min} > \alpha_{min}$, a constraint on $\alpha_{max}$ relative to $b_{max}$, and that $\alpha_{max}$ should be "smaller than 1, but not too small". Relative

improvement m is defined, after each

move, by

Adaptive coefficients, by themselves,

improve the algorithm, and, combining

this technique with adaptive swarm

size, we have an even better result

(see Figure 3).

Of course, adaptations are not at all

inexpensive (in terms of processor

time) but, as we can see on Table 1,

they are nevertheless useful. Note,

also, that the program (ANSI C source

code) is not optimized, and can

certainly be improved.

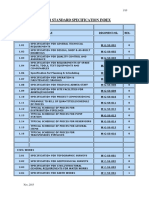

Table 1. Although adaptations need more processor time for each time step, they globally improve the algorithm. The first line can be seen as the "basic" case, when just Equ. 1 is used. In the second one, there are fewer evaluations, but some computer time is consumed for adaptation, and the total time is a bit longer. In the last case, adaptation still needs some time (even a bit more than before, in fact), but as there are now far fewer evaluations, the global result is better.

| Kind of adaptation | Number of evaluations | Processor time (clock ticks) | Ratio (clock ticks per evaluation) |
|---|---|---|---|
| nothing | 2240 | 358 | 0.16 |
| swarm size | 1598 | 368 | 0.23 |
| swarm size and coefficients | 1414 | 255 | 0.18 |

1.3 Small problems

The stop criterion uses a dynamic estimation of the maximum number of evaluations needed. Strictly speaking, this estimation is valid only for small dimensions (typically less than 35) and, anyway, only for differentiable objective functions. That is why the algorithm should theoretically be used only for small problems (although it sometimes works in practice for big problems too).

The user has to give the following parameters:

- objective function f.
- dimensionality D.
- $x_{min}$ and $x_{max}$, defining a hypercubical search space H.
- target S. If looking for a minimum, the user just has to give a value surely smaller than any objective function value on H.
- acceptable error $\varepsilon$. If S is a "real" target, a solution is any D-point $x = (x_1, ..., x_D)$ so that $f(x) \in [S - \varepsilon, S + \varepsilon]$.
- granularity. If you know in advance there exists an integer k so that all values $10^k x_i$ are integer numbers for the solution point, you may try to improve the convergence by giving this information. However, the most interesting use is that you can solve some problems using integer numbers (k=0).
- "all different" information, saying if all components of the solution must be different or not (useful for some combinatorial problems).

That is all. No parameters to tune carefully. Nevertheless, one must be aware it is like a Swiss Army knife: a lot of possible uses, but for any use there may exist (and usually there does exist) a better specific tool.
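Three of these parameters translate directly into small checks, sketched below in Python. The function names are hypothetical, and the way Cheap-PSO actually applies the granularity internally is not described here; rounding each component to k decimal places is only one plausible reading.

```python
from typing import List, Sequence


def is_solution(f_value: float, target: float, eps: float) -> bool:
    """True if the objective value lies in [target - eps, target + eps]."""
    return target - eps <= f_value <= target + eps


def snap_to_granularity(x: Sequence[float], k: int) -> List[float]:
    """Round each component so that 10**k * x_i is an integer (assumption)."""
    scale = 10 ** k
    return [round(c * scale) / scale for c in x]


def all_different(x: Sequence[float]) -> bool:
    """True if no two components of the point are equal."""
    return len(set(x)) == len(x)
```

With k=0 every component is rounded to the nearest integer, which is the setting used for integer problems.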

2 Classical examples

The practical point of view is "How many evaluations do I need to find a solution?". For some classical functions, Table 2 shows how much better the adaptive method is, even in the worst case (Rastrigin function). Another point of view is "What is the best result after x evaluations?". I am referring here to the study [12], which uses a complete PSO version, with constriction coefficients. As we can see in Table 3, Cheap-PSO is never very bad, and often quite good, just as expected.

[Figure: Rosenbrock 2D. Adaptive swarm size, adaptive coefficients. Axes: number of evaluations; potential energy, kinetic energy and swarm size. $\varepsilon$=0.00001 after 1414 evaluations.]

Figure 3. Now, the result is appreciably better, thanks to the conjunction of the two adaptations.

Table 2. NONADAPTIVE VS ADAPTIVE. Shown below is the number of evaluations needed to find an acceptable solution. For small dimensions, the adaptive method is clearly better than the non-adaptive one. In the failure columns, "n (m)" means n failures after m evaluations.

| Function | Search space | Max. error | Adaptive: number of evaluations (mean for 20 runs) | Adaptive: failures n (m) | Non-adaptive (swarm size=20, $\alpha$=b=0.8): number of evaluations | Non-adaptive: failures n (m) |
|---|---|---|---|---|---|---|
| Alpine | $[0,10]^4$ | 0.001 | 7001 | 0 | 28977 | 12 (40000) |
| Alpine | $[0,10]^5$ | 0.001 | 13670 | 0 | 37191 | 15 (50000) |
| Griewank | $[-300,300]^4$ | 0.1 | 8430 | 2 (24000) | 13946 | 9 (24000) |
| Griewank | $[-300,300]^5$ | 0.1 | 15234 | 6 (30000) | 22560 | 14 (30000) |
| Rastrigin | $[-10,10]^4$ | 0.01 | 63363 | 15 (100000) | 88614 | 15 (100000) |
| Rosenbrock | $[-50,50]^4$ | 0.001 | 10808 | 0 | 64143 | 3 (400000) |
| Rosenbrock | $[-50,50]^5$ | 0.001 | 50410 | 0 | 84850 | 3 (500000) |
| Sphere | $[0,40]^5$ | 0.001 | 236 | 0 | 1038 | 0 |
| Sphere | $[0,40]^{10}$ | 0.001 | 790 | 0 | 3404 | 0 |

Table 3. NONADAPTIVE VS ADAPTIVE. This table shows the results after 40000 evaluations, and a comparison with the nonadaptive version used in [12]. They are sometimes better, and never very bad.

Best results after 20 runs of 40000 evaluations:

| Function | Search space | Cheap_PSO | PSO Type 1 (swarm size=20) |
|---|---|---|---|
| Ackley | $[-32,32]^{30}$ | 4.922528 | 0.150886 |
| Foxholes | $[-50,50]^2$ | 0.998004* | 0.998005 |
| Griewank | $[-300,300]^{30}$ | 0.929815 | 0.008614 |
| Rastrigin | $[-5.12,5.12]^{30}$ | 51.716000 | 81.689550 |
| Rosenbrock | $[-10,10]^{30}$ | 37.218894 | 39.118488 |

* This global optimum is in fact found after about 7000 evaluations
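For readers who want to reproduce this kind of comparison, here are the usual textbook definitions of four of the benchmark functions, as a Python sketch. The paper does not spell out its own definitions, so the exact variants (shifts, scalings) used for the tables may differ from these.

```python
import math
from typing import Sequence


def sphere(x: Sequence[float]) -> float:
    """Sum of squares; minimum 0 at the origin."""
    return sum(c * c for c in x)


def rosenbrock(x: Sequence[float]) -> float:
    """Classical "banana" valley; minimum 0 at (1, ..., 1)."""
    return sum(
        100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (1.0 - x[i]) ** 2
        for i in range(len(x) - 1)
    )


def rastrigin(x: Sequence[float]) -> float:
    """Highly multimodal; minimum 0 at the origin."""
    return 10.0 * len(x) + sum(
        c * c - 10.0 * math.cos(2.0 * math.pi * c) for c in x
    )


def griewank(x: Sequence[float]) -> float:
    """Many regularly spaced local minima; minimum 0 at the origin."""
    quadratic = sum(c * c for c in x) / 4000.0
    product = math.prod(
        math.cos(c / math.sqrt(i)) for i, c in enumerate(x, start=1)
    )
    return 1.0 + quadratic - product
```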

3 Less classical examples

Here are some more unusual uses of

an optimizer.

3.1 Fifty-fifty

Find D different integers $x_i$ in [1, 2, ..., N] so that

$$\sum_{i=1}^{Int(D/2)} x_i = \sum_{i=Int(D/2)+1}^{D} x_i$$

The objective function is

$$f(x) = \left| \sum_{i=1}^{Int(D/2)} x_i - \sum_{i=Int(D/2)+1}^{D} x_i \right|$$

and the target is 0. For N=100 and D=20, a solution is found after about 105 evaluations: (63, 90, 16, 54, 71, 20, 23, 60, 38, 15, 12, 48, 13, 51, 36, 42, 86, 26, 57, 79), half-sum=450. Thanks to the randomness, several runs give several different solutions.
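The objective function is short enough to be checked directly against the solution quoted above. A minimal Python sketch (the function name `fifty_fifty` is hypothetical):

```python
from typing import Sequence


def fifty_fifty(x: Sequence[int]) -> int:
    """Absolute difference between the sums of the two halves of x."""
    half = len(x) // 2  # Int(D/2)
    return abs(sum(x[:half]) - sum(x[half:]))


# Solution reported in the text for N=100 and D=20.
solution = [63, 90, 16, 54, 71, 20, 23, 60, 38, 15,
            12, 48, 13, 51, 36, 42, 86, 26, 57, 79]
print(fifty_fifty(solution), sum(solution[:10]))
```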

3.2 Knapsack

Find D different integer numbers $x_i$ in [1, ..., N] whose sum is equal to a given integer S. The objective function is

$$f(x) = \left| \sum_{i=1}^{D} x_i - S \right|$$

and the target is 0. For N=100, D=10, and S=100, a solution is found after about 870 evaluations: (9, 14, 18, 1, 16, 5, 6, 2, 12, 17). Thanks to the randomness, several runs give several solutions, for example (29, 3, 16, 4, 1, 2, 6, 8, 26, 5). Again, on the one hand it is not very good, for this is a simplified knapsack problem, which can easily be solved by a faster deterministic algorithm; but, on the other hand, you precisely do not have to write such a specific combinatorial algorithm.
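As a quick check, the same objective can be written in Python and evaluated on the two solutions quoted above (the function name `knapsack_objective` is hypothetical):

```python
from typing import Sequence


def knapsack_objective(x: Sequence[int], target_sum: int) -> int:
    """Absolute difference between the sum of the components and the target."""
    return abs(sum(x) - target_sum)


# The two solutions reported in the text for N=100, D=10 and S=100.
first = [9, 14, 18, 1, 16, 5, 6, 2, 12, 17]
second = [29, 3, 16, 4, 1, 2, 6, 8, 26, 5]
print(knapsack_objective(first, 100), knapsack_objective(second, 100))
```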

3.3 System of equations

Any system

$$
\begin{cases}
f_1(x_1, ..., x_D) = 0 \\
... \\
f_M(x_1, ..., x_D) = 0
\end{cases}
$$

where the $f_i$ are real functions, can be rewritten equivalently

$$f(x_1, ..., x_D) = \sum_{i=1}^{M} f_i(x_1, ..., x_D)^2 = 0$$

so we can use f as an objective function and zero as a target. For example, for the system

3 2 0

3 3

2 3 6

1 2 3

1 2 3

1 2 3

x x x

x x x

x x x

+

+ +

'

the solution (1,1,1) is found after about 780 evaluations on the search space $[-3,3]^3$. If we know in advance that the solution is an integer point, we can use granularity=1, and then the solution is found after about 150 evaluations. Note that the algorithm is more interesting for indeterminate (possibly integer) systems, for several runs usually give different solutions. Note also that the system does not need to be linear. For the system

x x

x x

1

2

2

2

1 2

1 0

10 0

+

( )

'

sin

on $[0,1]^2$, a solution (0.73, 0.66) is found after about 320 evaluations. For this example five runs give three different solutions.
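Turning a system into a single objective function is mechanical, as the following Python sketch shows. The helper name `system_objective` and the two-equation system used to exercise it are hypothetical; they are not the systems solved in the text.

```python
from typing import Callable, List, Sequence

Equation = Callable[[Sequence[float]], float]


def system_objective(equations: List[Equation]) -> Equation:
    """Build f(x) = sum of the squared left-hand sides f_i(x)."""
    def f(x: Sequence[float]) -> float:
        return sum(eq(x) ** 2 for eq in equations)
    return f


# Hypothetical example system (not taken from the paper):
#   x1 + x2 - 3 = 0
#   x1 - x2 - 1 = 0
equations: List[Equation] = [
    lambda x: x[0] + x[1] - 3.0,
    lambda x: x[0] - x[1] - 1.0,
]
f = system_objective(equations)
print(f([2.0, 1.0]), f([0.0, 0.0]))
```

The objective is zero exactly at the solutions of the system, so it can be handed to the optimizer with target 0.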

3.4 Apple trees

Let ABC be a triangular field, with T apple trees. Let P be a point inside ABC, $n_1$ the number of apple trees in ABP, $n_2$ in BCP, and $n_3$ in CAP. We are looking for P so that, if possible, $n_1=n_2=n_3$. A possible objective function is

$$f(P) = (n_1 - n_2)^2 + (n_2 - n_3)^2$$

where the $n_i$ are functions of the position of P (I used here homogeneous coordinates relative to A, B, and C, so that the dimension of the problem is 3). For T=20, I chose an initial swarm size equal to 1, because the problem is quite simple. Finding a solution like ($n_1$=7, $n_2$=6, $n_3$=7) needs about 40 evaluations.

Figure 4. For twenty trees, a solution is found after about 40 evaluations.
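A possible way to evaluate this objective is sketched below in Python. It is an illustration only: P is given in ordinary Cartesian coordinates rather than the homogeneous coordinates used for the experiment, the field and tree positions are made up, and a tree lying exactly on a shared edge would be counted in both sub-triangles.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]


def _cross(o: Point, a: Point, b: Point) -> float:
    """Z component of the cross product (a - o) x (b - o)."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def in_triangle(q: Point, a: Point, b: Point, c: Point) -> bool:
    """True if q is inside or on the border of triangle abc."""
    d1, d2, d3 = _cross(a, b, q), _cross(b, c, q), _cross(c, a, q)
    has_negative = d1 < 0 or d2 < 0 or d3 < 0
    has_positive = d1 > 0 or d2 > 0 or d3 > 0
    return not (has_negative and has_positive)


def apple_objective(
    p: Point, a: Point, b: Point, c: Point, trees: Sequence[Point]
) -> int:
    """(n1 - n2)^2 + (n2 - n3)^2 for the sub-triangles ABP, BCP and CAP."""
    n1 = sum(in_triangle(t, a, b, p) for t in trees)
    n2 = sum(in_triangle(t, b, c, p) for t in trees)
    n3 = sum(in_triangle(t, c, a, p) for t in trees)
    return (n1 - n2) ** 2 + (n2 - n3) ** 2


# Made-up field and trees, one tree per sub-triangle.
A, B, C, P = (0.0, 0.0), (6.0, 0.0), (0.0, 6.0), (2.0, 2.0)
trees = [(2.0, 1.0), (3.0, 2.0), (1.0, 3.0)]
print(apple_objective(P, A, B, C, trees))
```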

4 Improvements

Sometimes, even for problems which seem to be "small" and not that difficult, the program gives no solution. When one analyzes the reasons for these failures, one finds two main causes:

- the process stops too early,
- the global velocity (which is "summarized" by the kinetic energy) becomes too small too quickly.

So, some possible improvements are:

- modify the stop criterion, maybe by taking into account not only the past of each particle separately, but a landscape estimation in a whole neighbourhood,
- modify the "reasoning" and/or the formulas for adaptive swarm size and for the coefficients $\alpha$ and b, and, possibly,
- try an adaptive neighbourhood size.

An interesting functional improvement could be an automatically varying and focussed search space, in order to find several solutions in a global search space. For some problems, it would also be interesting to use a non-hypercubical search space, or even a non-connected one. Also, of course, as already said, the source code can be rewritten and optimized. Anyway the aim is still the same: the user must not do anything other than describing the problem.

References

[1] R. C. Eberhart and J. Kennedy, A New Optimizer Using Particle Swarm Theory, presented at Proc. Sixth International Symposium on Micro Machine and Human Science, Nagoya, Japan, 1995.

[2] J. Kennedy and R. C. Eberhart, Particle Swarm Optimization, presented at IEEE International Conference on Neural Networks, Perth, Australia, 1995.

[3] P. J. Angeline, Using Selection to Improve Particle Swarm Optimization, presented at IEEE International Conference on Evolutionary Computation, Anchorage, Alaska, May 4-9, 1998.

[4] A. Carlisle and G. Dozier, Adapting Particle Swarm Optimization to Dynamic Environments, presented at International Conference on Artificial Intelligence, Monte Carlo Resort, Las Vegas, Nevada, USA, 1998.

[5] M. Clerc, The Swarm and the Queen: Towards a Deterministic and Adaptive Particle Swarm Optimization, presented at Congress on Evolutionary Computation, Washington D.C., 1999.

[6] J. Kennedy, Stereotyping: Improving Particle Swarm Performance With Cluster Analysis, presented at (submitted), 2000.

[7] R. C. Eberhart and Y. Shi, Comparing inertia weights and constriction factors in particle swarm optimization, presented at International Congress on Evolutionary Computation, San Diego, California, 2000.

[8] Y. H. Shi and R. C. Eberhart, A Modified Particle Swarm Optimizer, presented at IEEE International Conference on Evolutionary Computation, Anchorage, Alaska, May 4-9, 1998.

[9] S. Naka and Y. Fukuyama, Practical Distribution State Estimation Using Hybrid Particle Swarm Optimization, presented at IEEE Power Engineering Society Winter Meeting, Columbus, Ohio, USA, 2001.

[10] PSC, http://www.particleswarm.net

[11] D. H. Wolpert and W. G. Macready, No Free Lunch for Search, The Santa Fe Institute, 1995.

[12] M. Clerc and J. Kennedy, The Particle Swarm: Explosion, Stability, and Convergence in a Multi-Dimensional Complex Space, IEEE Journal of Evolutionary Computation, vol. in press, 2001.
