CL688: Artificial Intelligence in Process Engineering Tutorial Set 2

Linear and nonlinear classifiers

1. Generate four 2-dimensional data sets $x_i$, $i = 1, \dots, 4$, each containing data vectors from two classes. In every $x_i$ the first class (denoted -1) contains 100 vectors uniformly distributed in the square $[0, 2] \times [0, 2]$. The second class (denoted +1) contains another 100 vectors uniformly distributed in the squares $[3, 5] \times [3, 5]$, $[2, 4] \times [2, 4]$, $[0, 2] \times [2, 4]$, and $[1, 3] \times [1, 3]$ for $x_1$, $x_2$, $x_3$, and $x_4$, respectively. Each data vector is augmented with a third coordinate that equals 1. Perform the following steps:

(a) Plot the four data sets and notice that as we move from $x_1$ to $x_3$ the classes approach each other but remain linearly separable. In $x_4$ the two classes overlap.

(b) Run the perceptron algorithm for each $x_i$, $i = 1, \dots, 4$, with learning rate parameters 0.01 and 0.05 and initial estimate for the parameter vector $[1, 1, 0.5]^T$.

(c) Run the perceptron algorithm for $x_3$ with learning rate 0.05 using as initial estimates for $w$ $[1, 1, 0.5]^T$ and $[1, 1, 0.5]^T$.

(d) Comment on the results.
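As a starting point for Problem 1, the data generation and the perceptron run might be sketched as follows. This is a minimal sketch under stated assumptions, not the course's reference solution: it uses Python's standard `random` module rather than MATLAB's `rand`, it uses the batch form of the perceptron update (all misclassified vectors are summed before each step), and the names `make_dataset`, `perceptron`, and `max_iter` are hypothetical.

```python
import random


def make_dataset(box_pos, n=100, seed=0):
    """Return a list of (augmented vector, label) pairs.

    Class -1 is uniform in [0, 2] x [0, 2]; class +1 is uniform in box_pos,
    given as ((x_lo, x_hi), (y_lo, y_hi)). A third coordinate equal to 1
    is appended to every vector.
    """
    rng = random.Random(seed)  # assumption: Python RNG, not MATLAB's rand
    data = []
    for _ in range(n):
        data.append(([rng.uniform(0, 2), rng.uniform(0, 2), 1.0], -1))
    (x_lo, x_hi), (y_lo, y_hi) = box_pos
    for _ in range(n):
        data.append(([rng.uniform(x_lo, x_hi), rng.uniform(y_lo, y_hi), 1.0], +1))
    return data


def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def perceptron(data, rho, w0, max_iter=10000):
    """Batch perceptron (assumed variant): w <- w + rho * sum of y*x over errors.

    Returns (w, iterations used, number of misclassified vectors at exit).
    """
    w = list(w0)
    for it in range(max_iter):
        step = [0.0] * len(w)
        n_wrong = 0
        for x, y in data:
            if y * dot(w, x) <= 0:  # misclassified or on the boundary
                n_wrong += 1
                for k in range(len(w)):
                    step[k] += y * x[k]
        if n_wrong == 0:
            return w, it, 0
        w = [wi + rho * si for wi, si in zip(w, step)]
    n_wrong = sum(1 for x, y in data if y * dot(w, x) <= 0)
    return w, max_iter, n_wrong


if __name__ == "__main__":
    x1 = make_dataset(((3, 5), (3, 5)))
    for rho in (0.01, 0.05):
        w, iters, n_wrong = perceptron(x1, rho, [1.0, 1.0, 0.5])
        print(rho, iters, n_wrong, w)
```

For the overlapping set the loop cannot reach zero errors, so the `max_iter` cap is what stops it; the returned error count then describes the last iterate only.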

2. (a) Generate a set $X_1$ of $N_1 = 200$ data vectors, such that the first 100 vectors stem from class $\omega_1$, which is modeled by the Gaussian distribution with mean $m_1 = [0, 0, 0, 0, 0]^T$. The rest stem from class $\omega_2$, which is modeled by the Gaussian distribution with mean $m_2 = [1, 1, 1, 1, 1]^T$. Both distributions share the following covariance matrix:

$$S = \begin{bmatrix} 0.9 & 0.3 & 0.2 & 0.05 & 0.02 \\ 0.3 & 0.8 & 0.1 & 0.2 & 0.05 \\ 0.2 & 0.1 & 0.7 & 0.015 & 0.07 \\ 0.05 & 0.2 & 0.015 & 0.8 & 0.01 \\ 0.02 & 0.05 & 0.07 & 0.01 & 0.75 \end{bmatrix}$$

Generate an additional data set $X_2$ of $N_2 = 200$ data vectors, following the prescription used for $X_1$. Apply the optimal Bayes classifier on $X_2$ and compute the classification error.

(b) Augment each feature vector in $X_1$ and $X_2$ by adding a 1 as the last coordinate. Define the class labels as -1 and +1 for the two classes, respectively. Using $X_1$ as the training set, obtain the minimum squared-error (LS) estimate $w$. Use this estimate to classify the vectors of $X_2$ according to the inequality

$$w^T x > (<)\; 0$$

Compute the probability of error. Compare the results with those obtained in step (a).

(c) Repeat the previous steps, first with $X_2$ replaced by a set $X_3$ containing $N_3 = 10{,}000$ data vectors and then with a set $X_4$ containing $N_4 = 100{,}000$ data vectors. Both $X_3$ and $X_4$ are generated using the prescription adopted for $X_1$. Comment on the results.
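The LS step of Problem 2 can be illustrated on a toy case. The sketch below assumes the estimate is obtained from the normal equations $(X^T X) w = X^T y$, where the rows of $X$ are the augmented vectors and $y$ holds the -1/+1 labels. It is written in plain Python so it runs without extra libraries; the helper names `solve`, `ls_estimate`, and `classify` are hypothetical, and the four-point data set is made up for illustration only.

```python
def solve(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]  # augmented matrix [A | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    z = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(M[i][j] * z[j] for j in range(i + 1, n))
        z[i] = (M[i][n] - s) / M[i][i]
    return z


def ls_estimate(X, y):
    """Least-squares weights from the normal equations (X^T X) w = X^T y."""
    d = len(X[0])
    XtX = [[sum(row[i] * row[j] for row in X) for j in range(d)] for i in range(d)]
    Xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(d)]
    return solve(XtX, Xty)


def classify(w, x):
    """Assign +1 if w^T x > 0, otherwise -1."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) > 0 else -1


if __name__ == "__main__":
    # Toy 1-D data, augmented with a trailing 1 (illustrative values only).
    X = [[0.0, 1.0], [1.0, 1.0], [2.0, 1.0], [3.0, 1.0]]
    y = [-1, -1, 1, 1]
    w = ls_estimate(X, y)
    print(w, [classify(w, x) for x in X])
```

The same two functions apply unchanged to the 6-dimensional augmented vectors of the problem; the error probability is then the fraction of vectors in the test set for which `classify` disagrees with the true label.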

3. In the 2-dimensional space, we are given two equiprobable classes, which follow Gaussian distributions with means $m_1 = [0, 0]^T$ and $m_2 = [1.2, 1.2]^T$ and covariance matrices $S_1 = S_2 = 0.2I$, where $I$ is the $2 \times 2$ identity matrix.

(a) Generate and plot a data set $X_1$ containing 200 points from each class (400 points total), to be used for training (use the value of 50 as seed for the built-in MATLAB randn function). Generate another data set $X_2$ containing 200 points from each class, to be used for testing (use the value of 100 as seed for the built-in MATLAB randn function).

(b) Based on $X_1$, generate SVM classifiers that separate the two classes, using $C = 0.1, 0.2, 0.5, 1, 2, 20$. Set tol = 0.001.

i. Compute the classification error on the training and test sets.

ii. Count the support vectors.

iii. Compute the margin ($2/\|w\|$).

iv. Plot the classifier as well as the margin lines.

v. Are your results reproducible? (What would happen if you changed your randn seed? Try the classification a few times.)
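Once some SVM trainer has returned a weight vector $w$ and an offset $b$, items ii to iv of Problem 3 reduce to a few lines of arithmetic. The sketch below assumes the decision function has the form $w^T x + b$ and that the margin lines are $w^T x + b = \pm 1$. Counting the points with $y\,(w^T x + b) \le 1 + \text{tol}$ is used here only as a proxy for the support-vector count; a solver that exposes its Lagrange multipliers gives the count directly. All function names and the numbers in the demo are hypothetical.

```python
import math


def decision(w, b, x):
    """Value of the linear decision function w^T x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def margin(w):
    """Margin width 2 / ||w||."""
    return 2.0 / math.sqrt(sum(wi * wi for wi in w))


def count_support_vectors(w, b, points, labels, tol=1e-3):
    """Proxy count: points on or inside the margin, y * (w^T x + b) <= 1 + tol."""
    return sum(1 for x, y in zip(points, labels)
               if y * decision(w, b, x) <= 1 + tol)


def margin_line_y(w, b, x1, level):
    """Second coordinate on the line w^T x + b = level at abscissa x1.

    level = 0 gives the classifier, level = +1 / -1 the margin lines.
    Requires w[1] != 0.
    """
    return (level - b - w[0] * x1) / w[1]


if __name__ == "__main__":
    w, b = [1.0, 1.0], -1.0  # illustrative values, not a trained model
    pts = [(0.0, 0.0), (2.0, 2.0), (1.0, 1.0), (-1.0, -1.0)]
    lab = [-1, 1, 1, -1]
    print(margin(w), count_support_vectors(w, b, pts, lab))
    print([margin_line_y(w, b, 0.0, lvl) for lvl in (-1, 0, 1)])
```

Evaluating `margin_line_y` at the two ends of the plotting range gives the endpoints needed to draw each of the three lines.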

© SBN, 2014, IIT-Bombay


4. (a) Generate a 2-dimensional data set $X_1$ (training set) as follows. Select $N = 150$ data points in the 2-dimensional $[-5, 5] \times [-5, 5]$ region according to the uniform distribution (set the seed for the rand function equal to 0). Assign a point $x = [x(1), x(2)]^T$ to the class +1 (-1) according to the rule $0.05\,(x^3(1) + x^2(1) + x(1) + 1) > (<)\; x(2)$. (Clearly, the two classes are nonlinearly separable; in fact, they are separated by the curve associated with the cubic.) Plot the points in $X_1$. Generate an additional data set $X_2$ (test set) using the same prescription as for $X_1$ (set the seed for the rand function equal to 100).

(b) Design a linear SVM classifier. Compute the training and test errors and count the number of support vectors. Use a tolerance of 0.001.

(c) Generate a nonlinear SVM classifier using the radial basis kernel functions (Eq. 6.100 of handout) for $\sigma = 0.1$ and 2. Use a tolerance of 0.001. Compute the training and test error rates and count the number of support vectors. Plot the decision regions designed by the classifier.

(d) Repeat step (c) using the polynomial kernel functions $(x^T y + \beta)^n$ for $(n, \beta) = (5, 0)$ and $(3, 1)$. Draw conclusions.

(e) Design the SVM classifiers using the radial basis kernel function with $\sigma = 1.5$ and using the polynomial kernel function with $n = 3$ and $\beta = 1$.

(f) Comment on the reproducibility of your answers.
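The labeling rule and the two kernel families of Problem 4 might be written as below. Several things here are assumptions: the sampling region is taken as $[-5, 5] \times [-5, 5]$, Python's `random` module stands in for MATLAB's `rand` (so the seeds 0 and 100 will not reproduce MATLAB's numbers), the radial basis kernel is taken in the common form $\exp(-\|x - y\|^2 / (2\sigma^2))$, which may differ from Eq. 6.100 of the handout, and the symbols `sigma` and `beta` name the kernel parameters. The function names are hypothetical.

```python
import math
import random


def cubic_label(x):
    """+1 if 0.05 * (x1^3 + x1^2 + x1 + 1) > x2, otherwise -1."""
    f = 0.05 * (x[0] ** 3 + x[0] ** 2 + x[0] + 1)
    return 1 if f > x[1] else -1


def make_cubic_dataset(n=150, seed=0, half_width=5.0):
    """Uniform points in [-half_width, half_width]^2 with cubic-rule labels."""
    rng = random.Random(seed)  # assumption: not MATLAB's generator
    pts = [(rng.uniform(-half_width, half_width),
            rng.uniform(-half_width, half_width)) for _ in range(n)]
    return pts, [cubic_label(p) for p in pts]


def rbf_kernel(x, y, sigma):
    """Radial basis kernel, assumed form exp(-||x - y||^2 / (2 sigma^2))."""
    d2 = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-d2 / (2.0 * sigma ** 2))


def poly_kernel(x, y, n, beta):
    """Polynomial kernel (x^T y + beta)^n."""
    return (sum(a * b for a, b in zip(x, y)) + beta) ** n


if __name__ == "__main__":
    train_pts, train_lab = make_cubic_dataset(seed=0)
    test_pts, test_lab = make_cubic_dataset(seed=100)
    print(train_lab.count(1), train_lab.count(-1))
    print(rbf_kernel((0.0, 0.0), (1.0, 0.0), 2.0), poly_kernel((1, 2), (3, 4), 3, 1))
```

Either kernel function can be passed to an SVM solver that accepts a user-defined kernel, or used to build the Gram matrix by evaluating it on every pair of training points.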

5. (a) Generate a 2-dimensional data set $X_1$ (training set) as follows. Consider the nine squares $[i, i + 1] \times [j, j + 1]$, $i = 0, 1, 2$, $j = 0, 1, 2$, and draw randomly from each one 30 uniformly distributed points. The points that stem from squares for which $i + j$ is even (odd) are assigned to class +1 (-1) (like the white and black squares on a chessboard). Plot the data set and generate an additional data set $X_2$ (test set) following the prescription used for $X_1$ (as in the previous problem, set the seed for rand at 0 for $X_1$ and 100 for $X_2$).

(b) i. Design a linear SVM classifier. Compute the training and test errors and count the number of support vectors.

ii. Employ the previous algorithm to design nonlinear SVM classifiers, with radial basis kernel functions. Use $\sigma = 1, 1.5, 2, 5$. Compute the training and test errors and count the number of support vectors.

iii. Repeat for polynomial kernel functions, using $n = 3, 5$ and $\beta = 1$.

(c) Draw your conclusions regarding the efficacy of the SVM, and the reproducibility of your results.
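The chessboard data of Problem 5 can be generated with a double loop over the squares. A minimal sketch, assuming Python's `random` module in place of MATLAB's `rand` (the seeds therefore give different numbers than in MATLAB); the name `make_checkerboard` is hypothetical.

```python
import random


def make_checkerboard(points_per_square=30, seed=0):
    """Draw points uniformly from the nine unit squares [i, i+1] x [j, j+1].

    Squares with i + j even get label +1, squares with i + j odd get -1.
    Returns (points, labels).
    """
    rng = random.Random(seed)
    pts, labels = [], []
    for i in range(3):
        for j in range(3):
            label = 1 if (i + j) % 2 == 0 else -1
            for _ in range(points_per_square):
                # rng.random() lies in [0, 1), so the point stays in its square
                pts.append((i + rng.random(), j + rng.random()))
                labels.append(label)
    return pts, labels


if __name__ == "__main__":
    train_pts, train_lab = make_checkerboard(seed=0)
    test_pts, test_lab = make_checkerboard(seed=100)
    print(len(train_pts), train_lab.count(1), train_lab.count(-1))
```

Five of the nine squares have an even index sum, so each set holds 150 points of class +1 and 120 of class -1.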

6. (a) i. Generate a data set $X_1$ consisting of 400 2-dimensional vectors that stem from two classes. The first 200 stem from the first class, which is modeled by the Gaussian distribution with mean $m_1 = [8, 8]^T$; the rest stem from the second class, modeled by the Gaussian distribution with mean $m_2 = [8, 8]^T$. Both distributions share the covariance matrix

$$S = \begin{bmatrix} 0.3 & 1.5 \\ 1.5 & 9.0 \end{bmatrix}$$

ii. Perform PCA on $X_1$ and compute the percentage of the total amount of variance explained by each component.

iii. Project the vectors of $X_1$ along the direction of the first principal component and plot the data set $X_1$ and its projection to the first principal component. Comment on the results.

(b) Repeat on data set $X_2$, which is generated as $X_1$ but with $m_1 = [1, 0]^T$ and $m_2 = [1, 0]^T$.

(c) Compare the results obtained and draw conclusions.

(d) Apply Fisher's linear discriminant analysis on the data set $X_2$ and compare the results obtained with those from the analysis above.
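For 2-dimensional data the PCA steps of Problem 6 have a closed form, because the eigenvalues of a symmetric $2 \times 2$ matrix follow from its trace and determinant. The sketch below assumes PCA is carried out on the sample covariance matrix of the mean-centered data (normalized by $n - 1$); the function name `pca_2d` and the three-point demo set are hypothetical.

```python
import math


def pca_2d(points):
    """PCA of 2-D points via the closed-form eigen-decomposition.

    Returns (explained, direction, projections): the fraction of total
    variance per component, the unit first principal direction, and the
    coordinates of the centered points along that direction.
    """
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points) / (n - 1)
    syy = sum((p[1] - my) ** 2 for p in points) / (n - 1)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / (n - 1)

    # Eigenvalues of [[sxx, sxy], [sxy, syy]]
    half_trace = (sxx + syy) / 2.0
    root = math.sqrt(((sxx - syy) / 2.0) ** 2 + sxy ** 2)
    lam1, lam2 = half_trace + root, half_trace - root

    # Eigenvector belonging to the larger eigenvalue lam1
    if sxy != 0:
        v = (lam1 - syy, sxy)
    elif sxx >= syy:
        v = (1.0, 0.0)
    else:
        v = (0.0, 1.0)
    norm = math.hypot(v[0], v[1])
    v = (v[0] / norm, v[1] / norm)

    total = lam1 + lam2
    explained = (lam1 / total, lam2 / total)
    projections = [(p[0] - mx) * v[0] + (p[1] - my) * v[1] for p in points]
    return explained, v, projections


if __name__ == "__main__":
    # Points on the line x2 = 2 * x1: all variance lies on one component.
    explained, v, proj = pca_2d([(-1.0, -2.0), (0.0, 0.0), (1.0, 2.0)])
    print(explained, v, proj)
```

Multiplying each projection by the direction vector and adding back the mean gives the projected points in the original plane, which is what part iii asks to plot next to the data.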

c SBN, 2014, IIT-Bombay 2

Vous aimerez peut-être aussi

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryD'EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryÉvaluation : 3.5 sur 5 étoiles3.5/5 (231)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)D'EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Évaluation : 4.5 sur 5 étoiles4.5/5 (120)

- Grit: The Power of Passion and PerseveranceD'EverandGrit: The Power of Passion and PerseveranceÉvaluation : 4 sur 5 étoiles4/5 (588)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaD'EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaÉvaluation : 4.5 sur 5 étoiles4.5/5 (266)

- The Little Book of Hygge: Danish Secrets to Happy LivingD'EverandThe Little Book of Hygge: Danish Secrets to Happy LivingÉvaluation : 3.5 sur 5 étoiles3.5/5 (399)

- Never Split the Difference: Negotiating As If Your Life Depended On ItD'EverandNever Split the Difference: Negotiating As If Your Life Depended On ItÉvaluation : 4.5 sur 5 étoiles4.5/5 (838)

- Shoe Dog: A Memoir by the Creator of NikeD'EverandShoe Dog: A Memoir by the Creator of NikeÉvaluation : 4.5 sur 5 étoiles4.5/5 (537)

- The Emperor of All Maladies: A Biography of CancerD'EverandThe Emperor of All Maladies: A Biography of CancerÉvaluation : 4.5 sur 5 étoiles4.5/5 (271)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeD'EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeÉvaluation : 4 sur 5 étoiles4/5 (5794)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyD'EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyÉvaluation : 3.5 sur 5 étoiles3.5/5 (2259)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersD'EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersÉvaluation : 4.5 sur 5 étoiles4.5/5 (344)

- Team of Rivals: The Political Genius of Abraham LincolnD'EverandTeam of Rivals: The Political Genius of Abraham LincolnÉvaluation : 4.5 sur 5 étoiles4.5/5 (234)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreD'EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreÉvaluation : 4 sur 5 étoiles4/5 (1090)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceD'EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceÉvaluation : 4 sur 5 étoiles4/5 (895)

- Her Body and Other Parties: StoriesD'EverandHer Body and Other Parties: StoriesÉvaluation : 4 sur 5 étoiles4/5 (821)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureD'EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureÉvaluation : 4.5 sur 5 étoiles4.5/5 (474)

- The Unwinding: An Inner History of the New AmericaD'EverandThe Unwinding: An Inner History of the New AmericaÉvaluation : 4 sur 5 étoiles4/5 (45)

- The Yellow House: A Memoir (2019 National Book Award Winner)D'EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Évaluation : 4 sur 5 étoiles4/5 (98)

- On Fire: The (Burning) Case for a Green New DealD'EverandOn Fire: The (Burning) Case for a Green New DealÉvaluation : 4 sur 5 étoiles4/5 (73)

- Class 8 Important Questions For Maths - Rational NumbersDocument108 pagesClass 8 Important Questions For Maths - Rational Numbersarkemishra100% (3)

- Sat Math Easy Practice QuizDocument11 pagesSat Math Easy Practice QuizMohamed Abdalla Mohamed AlyPas encore d'évaluation

- AppendixDocument42 pagesAppendixLucy Brown100% (1)

- Math PDFDocument6 pagesMath PDFsneha papnoiPas encore d'évaluation

- Cement Vertical Mill Vs Ball MillDocument17 pagesCement Vertical Mill Vs Ball Millanrulo50% (2)

- Cement Grinding OPtimizationDocument13 pagesCement Grinding OPtimizationTgemunuPas encore d'évaluation

- Amar Ujala, Kanpur-Page-10, 02.04.2019Document1 pageAmar Ujala, Kanpur-Page-10, 02.04.2019Shashwat OmarPas encore d'évaluation

- Revised Vacancy Circular Singed PDFDocument8 pagesRevised Vacancy Circular Singed PDFSS AkashPas encore d'évaluation

- Harendra Singh Resume ExperienceDocument3 pagesHarendra Singh Resume ExperienceShashwat OmarPas encore d'évaluation

- Amar Ujala - 02.05.2019Document1 pageAmar Ujala - 02.05.2019Shashwat OmarPas encore d'évaluation

- Changed Mid-Sem Schedule PDFDocument92 pagesChanged Mid-Sem Schedule PDFShashwat OmarPas encore d'évaluation

- Direct Recruitment of Scientist 'B' in DRDO: Public NoticeDocument1 pageDirect Recruitment of Scientist 'B' in DRDO: Public NoticeSai Charan.VPas encore d'évaluation

- COVID 19 RT PCR Screening (Nucleic Acid Amplification Qualitative)Document2 pagesCOVID 19 RT PCR Screening (Nucleic Acid Amplification Qualitative)Shashwat OmarPas encore d'évaluation

- GK ALL PaperDocument104 pagesGK ALL PaperShashwat OmarPas encore d'évaluation

- Ankita Pratyush: Wedding InvitationDocument4 pagesAnkita Pratyush: Wedding InvitationShashwat OmarPas encore d'évaluation

- Vi Jayyadav: Car Eerobj Ect I Ve-Educat I OnDocument1 pageVi Jayyadav: Car Eerobj Ect I Ve-Educat I OnShashwat OmarPas encore d'évaluation

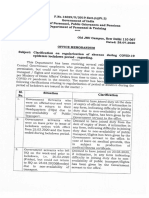

- Clarification Covid19Document2 pagesClarification Covid19Shashwat OmarPas encore d'évaluation

- Ankita Weds Pratyush 507648Document4 pagesAnkita Weds Pratyush 507648Shashwat OmarPas encore d'évaluation

- Wedding InvitationDocument4 pagesWedding InvitationShashwat OmarPas encore d'évaluation

- CHSL All Gs Questions 64 SetsDocument119 pagesCHSL All Gs Questions 64 SetsShashwat Omar100% (1)

- Environment Engineering and Basic ConceptsDocument1 pageEnvironment Engineering and Basic ConceptsShashwat OmarPas encore d'évaluation

- Electronics Engineering and Its FundamentalsDocument1 pageElectronics Engineering and Its FundamentalsShashwat OmarPas encore d'évaluation

- Oil Technology and Its FundamentalsDocument1 pageOil Technology and Its FundamentalsShashwat OmarPas encore d'évaluation

- Information Technology FundamentalsDocument1 pageInformation Technology FundamentalsShashwat OmarPas encore d'évaluation

- Bioscience Engineering and Its ApplicationDocument1 pageBioscience Engineering and Its ApplicationShashwat OmarPas encore d'évaluation

- Plastic Technology and ElasticityDocument1 pagePlastic Technology and ElasticityShashwat OmarPas encore d'évaluation

- Paint Technology and FundamentalsDocument1 pagePaint Technology and FundamentalsShashwat OmarPas encore d'évaluation

- Computer Science and Its Fundamental ConceptsDocument1 pageComputer Science and Its Fundamental ConceptsShashwat OmarPas encore d'évaluation

- Civil Engineering Works and MethodsDocument1 pageCivil Engineering Works and MethodsShashwat OmarPas encore d'évaluation

- Electrical Engineering Fundamentals and Their Application in Real LifeDocument1 pageElectrical Engineering Fundamentals and Their Application in Real LifeShashwat OmarPas encore d'évaluation

- Electrical Engineering Fundamentals and Their Application in Real LifeDocument1 pageElectrical Engineering Fundamentals and Their Application in Real LifeShashwat OmarPas encore d'évaluation

- Civil Engineering Works and MethodsDocument1 pageCivil Engineering Works and MethodsShashwat OmarPas encore d'évaluation

- Cement Manufacturing Process BibliographyDocument5 pagesCement Manufacturing Process BibliographyShashwat OmarPas encore d'évaluation

- MANetwork HospitalsDocument208 pagesMANetwork HospitalsShashwat OmarPas encore d'évaluation

- Function and Euqations - Quantitative Aptitude Questions MCQDocument4 pagesFunction and Euqations - Quantitative Aptitude Questions MCQAnonymous v5QjDW2eHxPas encore d'évaluation

- Invnerse Trignometric FunctionsDocument9 pagesInvnerse Trignometric FunctionsMuhammad HamidPas encore d'évaluation

- Advanced Level DPP Vectors and Vector 3d Question MathongoDocument12 pagesAdvanced Level DPP Vectors and Vector 3d Question MathongoReanna GeraPas encore d'évaluation

- (7-4) Overheads and Problems PDFDocument3 pages(7-4) Overheads and Problems PDFDevita OctaviaPas encore d'évaluation

- Folland 5Document15 pagesFolland 5NimaHadianPas encore d'évaluation

- Selected Solutions To The ExercisesDocument77 pagesSelected Solutions To The ExercisesnicoPas encore d'évaluation

- Evaluate Homework and Practice Module 19 Lesson 1 Page 956Document6 pagesEvaluate Homework and Practice Module 19 Lesson 1 Page 956dytmkttif100% (1)

- Chapter 05Document54 pagesChapter 0510026722Pas encore d'évaluation

- RD Sharma Class 8 Maths Chapter 2 PowersDocument17 pagesRD Sharma Class 8 Maths Chapter 2 PowersKavita DhootPas encore d'évaluation

- Winter School Maths RevisionDocument30 pagesWinter School Maths RevisionAthiPas encore d'évaluation

- 44 Multiplicity of EigenvaluesDocument2 pages44 Multiplicity of EigenvaluesHong Chul NamPas encore d'évaluation

- Systems of Linear Equations Matrices: Section 3 Gauss-Jordan EliminationDocument36 pagesSystems of Linear Equations Matrices: Section 3 Gauss-Jordan Elimination3bbad.aaaPas encore d'évaluation

- Chapter 16 Matrices: Try These 16.1Document48 pagesChapter 16 Matrices: Try These 16.1Yagna LallPas encore d'évaluation

- MA 102 (Ordinary Differential Equations)Document1 pageMA 102 (Ordinary Differential Equations)SHUBHAMPas encore d'évaluation

- Math1049 - Linear Algebra IiDocument36 pagesMath1049 - Linear Algebra IiRaya ManchevaPas encore d'évaluation

- Ma6351 Transforms and Partial Differential Equations Nov/Dec 2014Document2 pagesMa6351 Transforms and Partial Differential Equations Nov/Dec 2014Abisheik KumarPas encore d'évaluation

- Mathematical Analysis For Engineers: B. Dacorogna and C. TanteriDocument8 pagesMathematical Analysis For Engineers: B. Dacorogna and C. TanteriTapanPas encore d'évaluation

- 09 Integration - Extra Exercises PDFDocument11 pages09 Integration - Extra Exercises PDFOoi Chia EnPas encore d'évaluation

- Eigenvalues and Eigenvectors MCQDocument5 pagesEigenvalues and Eigenvectors MCQmohan59msc_76365118Pas encore d'évaluation

- Boolean Algebra Report PDFDocument11 pagesBoolean Algebra Report PDFحيدر عبد الحكيم مزهرPas encore d'évaluation

- JEE Main Maths Sequence and Series Previous Year Questions With SolutionsDocument6 pagesJEE Main Maths Sequence and Series Previous Year Questions With SolutionsGautam GunjanPas encore d'évaluation

- Thomas Algorithm For Tridiagonal Matrix Using PythonDocument2 pagesThomas Algorithm For Tridiagonal Matrix Using PythonMr. Anuse Pruthviraj DadaPas encore d'évaluation

- STML 37 PrevDocument51 pagesSTML 37 PrevArvey Sebastian Velandia Rodriguez -2143414Pas encore d'évaluation

- mth107 - Elementary Mathematics For EngineersDocument7 pagesmth107 - Elementary Mathematics For EngineersShobhit MishraPas encore d'évaluation

- Makalah Integral Tak Tentu English LanguageDocument15 pagesMakalah Integral Tak Tentu English LanguageMujahid Ghoni AbdullahPas encore d'évaluation

- Math ProjectDocument3 pagesMath Projectapi-208339650Pas encore d'évaluation