Chapter 3

The I-Measure

Raymond W. Yeung 2010

Department of Information Engineering

The Chinese University of Hong Kong

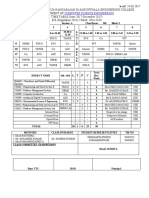

An Example

[Figure: information diagram for two random variables $X_1$ and $X_2$, with regions labelled $H(X_1, X_2)$, $H(X_1|X_2)$, $H(X_2|X_1)$, $I(X_1; X_2)$, $H(X_1)$, and $H(X_2)$.]

Substitution of Symbols

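$H / I \leftrightarrow \mu^*$

$, \leftrightarrow \cup$

$; \leftrightarrow \cap$

$| \leftrightarrow -$

$\mu^*$ is some signed measure on $\mathcal{F}_n$.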

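Examples:

1. $\mu^*(\tilde{X}_1 - \tilde{X}_2) = H(X_1|X_2)$, $\mu^*(\tilde{X}_2 - \tilde{X}_1) = H(X_2|X_1)$, $\mu^*(\tilde{X}_1 \cap \tilde{X}_2) = I(X_1; X_2)$

2. Inclusion-exclusion formulation in set theory:

$$\mu^*(\tilde{X}_1 \cup \tilde{X}_2) = \mu^*(\tilde{X}_1) + \mu^*(\tilde{X}_2) - \mu^*(\tilde{X}_1 \cap \tilde{X}_2)$$

corresponds to

$$H(X_1, X_2) = H(X_1) + H(X_2) - I(X_1; X_2)$$

in information theory.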
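
The identity $H(X_1, X_2) = H(X_1) + H(X_2) - I(X_1; X_2)$ can be checked numerically. The following is a minimal sketch, assuming a hypothetical joint pmf chosen only for illustration; the mutual information is computed directly from its definition so that the check is not circular.

```python
from math import log2

# Hypothetical joint pmf of (X1, X2); any valid pmf would do.
joint = {(0, 0): 0.5, (0, 1): 0.25, (1, 0): 0.125, (1, 1): 0.125}

def marginal(joint, axis):
    """Marginal pmf of one coordinate of the joint pmf."""
    out = {}
    for outcome, p in joint.items():
        out[outcome[axis]] = out.get(outcome[axis], 0.0) + p
    return out

def entropy(pmf):
    """Entropy in bits of a pmf given as {outcome: probability}."""
    return -sum(p * log2(p) for p in pmf.values() if p > 0)

p1, p2 = marginal(joint, 0), marginal(joint, 1)
h1, h2, h12 = entropy(p1), entropy(p2), entropy(joint)

# Mutual information from its definition, not from the identity under test.
mi = sum(p * log2(p / (p1[a] * p2[b])) for (a, b), p in joint.items() if p > 0)

# mu*(X1 u X2) versus mu*(X1) + mu*(X2) - mu*(X1 n X2)
lhs, rhs = h12, h1 + h2 - mi
print(f"H(X1,X2)                 = {lhs:.6f}")
print(f"H(X1) + H(X2) - I(X1;X2) = {rhs:.6f}")
```

The two printed values should agree up to floating-point rounding.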

3.1 Preliminaries

Definition 3.1 The field $\mathcal{F}_n$ generated by sets $\tilde{X}_1, \tilde{X}_2, \ldots, \tilde{X}_n$ is the collection of sets which can be obtained by any sequence of usual set operations (union, intersection, complement, and difference) on $\tilde{X}_1, \tilde{X}_2, \ldots, \tilde{X}_n$.

Definition 3.2 The atoms of $\mathcal{F}_n$ are sets of the form $\bigcap_{i=1}^{n} Y_i$, where $Y_i$ is either $\tilde{X}_i$ or $\tilde{X}_i^c$, the complement of $\tilde{X}_i$.

Example 3.3

The sets $\tilde{X}_1$ and $\tilde{X}_2$ generate the field $\mathcal{F}_2$.

There are 4 atoms in $\mathcal{F}_2$.

There are a total of 16 sets in $\mathcal{F}_2$.
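
The counts in Example 3.3 can be reproduced by brute force. The sketch below assumes a toy model in which every atom is represented by a single point, so that two generic sets $\tilde{X}_1$ and $\tilde{X}_2$ become subsets of a 4-point universe; the representation is an illustrative choice, not part of the original slides.

```python
from itertools import product

n = 2
# An atom is a choice, for every i, of "inside X_i" (True) or
# "inside the complement of X_i" (False). Each atom is modelled as one point.
atoms = list(product([True, False], repeat=n))
universe = frozenset(atoms)

# X_i is the union of the atoms that lie inside it.
generators = [frozenset(a for a in atoms if a[i]) for i in range(n)]

# Close the generators under complement and union; intersection and
# difference then follow from De Morgan's laws.
field = set(generators)
changed = True
while changed:
    changed = False
    for s in list(field):
        for t in list(field):
            for new in (universe - s, s | t):
                if new not in field:
                    field.add(new)
                    changed = True

print(len(atoms), len(field))  # number of atoms, number of sets in the field
```

Since every set in the field is a union of atoms, a field with 4 atoms has $2^4 = 16$ sets, which is what the closure computation finds.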

Definition 3.4 A real function $\mu$ defined on $\mathcal{F}_n$ is called a signed measure if it is set-additive, i.e., for disjoint $A$ and $B$ in $\mathcal{F}_n$,

$$\mu(A \cup B) = \mu(A) + \mu(B).$$

Remark: $\mu(\emptyset) = 0$.

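Example 3.5

[Figure: Venn diagram of $\tilde{X}_1$ and $\tilde{X}_2$.]

A signed measure $\mu$ on $\mathcal{F}_2$ is completely specified by its values on the atoms

$$\mu(\tilde{X}_1 \cap \tilde{X}_2), \quad \mu(\tilde{X}_1^c \cap \tilde{X}_2), \quad \mu(\tilde{X}_1 \cap \tilde{X}_2^c), \quad \mu(\tilde{X}_1^c \cap \tilde{X}_2^c).$$

The values of $\mu$ on the other sets in $\mathcal{F}_2$ are obtained by set-additivity.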

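3.3 Construction of the I-Measure $\mu^*$

Let $\tilde{X}$ be a set corresponding to a r.v. $X$.

$\mathcal{N}_n = \{1, 2, \ldots, n\}$.

Universal set: $\Omega = \bigcup_{i \in \mathcal{N}_n} \tilde{X}_i$.

Empty atom of $\mathcal{F}_n$: $A_0 = \bigcap_{i \in \mathcal{N}_n} \tilde{X}_i^c$.

$\mathcal{A}$ is the set of other atoms of $\mathcal{F}_n$, called nonempty atoms. $|\mathcal{A}| = 2^n - 1$.

A signed measure $\mu$ on $\mathcal{F}_n$ is completely specified by the values of $\mu$ on the nonempty atoms of $\mathcal{F}_n$.

Notations: For a nonempty subset $G$ of $\mathcal{N}_n$:

$X_G = (X_i, i \in G)$

$\tilde{X}_G = \bigcup_{i \in G} \tilde{X}_i$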

Theorem 3.6 Let

$$\mathcal{B} = \{\tilde{X}_G : G \text{ is a nonempty subset of } \mathcal{N}_n\}.$$

Then a signed measure $\mu$ on $\mathcal{F}_n$ is completely specified by $\{\mu(B), B \in \mathcal{B}\}$, which can be any set of real numbers.

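Proof of Theorem 3.6

$|\mathcal{A}| = |\mathcal{B}| = 2^n - 1$; denote this number by $k$.

$\mathbf{u}$: column $k$-vector of $\mu(A)$, $A \in \mathcal{A}$

$\mathbf{h}$: column $k$-vector of $\mu(B)$, $B \in \mathcal{B}$

Obviously we can write $\mathbf{h} = C_n \mathbf{u}$, where $C_n$ is a unique $k \times k$ matrix.

On the other hand, for each $A \in \mathcal{A}$, $\mu(A)$ can be expressed as a linear combination of $\mu(B)$, $B \in \mathcal{B}$, by applying

$$\mu(A \cap B - C) = \mu(A - C) + \mu(B - C) - \mu(A \cup B - C)$$

$$\mu(A - B) = \mu(A \cup B) - \mu(B)$$

(see Appendix 3.A). That is, $\mathbf{u} = D_n \mathbf{h}$.

Then $\mathbf{u} = (D_n C_n) \mathbf{u}$, showing that $D_n = (C_n)^{-1}$ is unique.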
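
The matrix argument in this proof can be made concrete for a small $n$. The sketch below assumes one particular labelling: a nonempty atom is identified with the set $P$ of indices $i$ for which it lies inside $\tilde{X}_i$, so the atom is contained in $\tilde{X}_G$ exactly when $P \cap G \neq \emptyset$. The function names `build_C` and `invert` are hypothetical helpers, and exact rational arithmetic is used so that invertibility is checked without rounding.

```python
from fractions import Fraction
from itertools import combinations

def nonempty_subsets(n):
    """All nonempty subsets of {1, ..., n}, used to index both atoms and unions."""
    items = range(1, n + 1)
    return [frozenset(c) for r in range(1, n + 1) for c in combinations(items, r)]

def build_C(n):
    """Row G, column P: 1 if the atom labelled P lies inside the union X_G, else 0."""
    subsets = nonempty_subsets(n)
    return [[Fraction(1 if G & P else 0) for P in subsets] for G in subsets]

def invert(matrix):
    """Gauss-Jordan inversion over the rationals (StopIteration if singular)."""
    k = len(matrix)
    aug = [row[:] + [Fraction(int(i == j)) for j in range(k)]
           for i, row in enumerate(matrix)]
    for col in range(k):
        pivot = next(r for r in range(col, k) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [x / scale for x in aug[col]]
        for r in range(k):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[k:] for row in aug]

n = 3
C = build_C(n)          # h = C u
D = invert(C)           # u = D h
k = len(C)
product_DC = [[sum(D[i][m] * C[m][j] for m in range(k)) for j in range(k)]
              for i in range(k)]
identity = [[Fraction(int(i == j)) for j in range(k)] for i in range(k)]
print(k, product_DC == identity)
```

For $n = 3$ the matrix is $7 \times 7$, and the computed $D_3 C_3$ equals the identity, in line with the claim that the values on $\mathcal{B}$ determine the values on the atoms.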

Two Lemmas

Lemma 3.7

$$\mu(A \cap B - C) = \mu(A \cup C) + \mu(B \cup C) - \mu(A \cup B \cup C) - \mu(C).$$

Lemma 3.8

$$I(X; Y|Z) = H(X, Z) + H(Y, Z) - H(X, Y, Z) - H(Z).$$
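
The identity $I(X; Y|Z) = H(X, Z) + H(Y, Z) - H(X, Y, Z) - H(Z)$ of Lemma 3.8 follows in two steps from the standard definitions of conditional mutual information and conditional entropy. The short derivation below is one way to see it and is not taken from the slides:

```latex
\begin{aligned}
I(X; Y | Z) &= H(X | Z) - H(X | Y, Z) \\
            &= \bigl[ H(X, Z) - H(Z) \bigr] - \bigl[ H(X, Y, Z) - H(Y, Z) \bigr] \\
            &= H(X, Z) + H(Y, Z) - H(X, Y, Z) - H(Z).
\end{aligned}
```

Under the substitution of symbols, the right-hand side has the same form as the set identity $\mu(A \cap B - C) = \mu(A \cup C) + \mu(B \cup C) - \mu(A \cup B \cup C) - \mu(C)$ of Lemma 3.7.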

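Construct the I-Measure $\mu^*$ on $\mathcal{F}_n$ by defining

$$\mu^*(\tilde{X}_G) = H(X_G)$$

for all nonempty subsets $G$ of $\mathcal{N}_n$.

$\mu^*$ is meaningful if it is consistent with all Shannon's information measures via the substitution of symbols, i.e., the following must hold for all (not necessarily disjoint) subsets $G, G', G''$ of $\mathcal{N}_n$ where $G$ and $G'$ are nonempty:

$$\mu^*(\tilde{X}_G \cap \tilde{X}_{G'} - \tilde{X}_{G''}) = I(X_G; X_{G'} | X_{G''})$$

$G'' = \emptyset$: $\mu^*(\tilde{X}_G \cap \tilde{X}_{G'}) = I(X_G; X_{G'})$

$G = G'$: $\mu^*(\tilde{X}_G - \tilde{X}_{G''}) = H(X_G | X_{G''})$

$G = G'$ and $G'' = \emptyset$: $\mu^*(\tilde{X}_G) = H(X_G)$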

Theorem 3.9

$\mu^*$ is the unique signed measure on $\mathcal{F}_n$ which is consistent with all Shannon's information measures.

Implications

Can formally regard Shannon's information measures for $n$ r.v.'s as the unique signed measure $\mu^*$ defined on $\mathcal{F}_n$.

Can employ set-theoretic tools to manipulate expressions of Shannon's information measures.

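Proof of Theorem 3.9

$$\begin{aligned}
\mu^*(\tilde{X}_G \cap \tilde{X}_{G'} - \tilde{X}_{G''})
&= \mu^*(\tilde{X}_{G \cup G''}) + \mu^*(\tilde{X}_{G' \cup G''}) - \mu^*(\tilde{X}_{G \cup G' \cup G''}) - \mu^*(\tilde{X}_{G''}) \\
&= H(X_{G \cup G''}) + H(X_{G' \cup G''}) - H(X_{G \cup G' \cup G''}) - H(X_{G''}) \\
&= I(X_G; X_{G'} | X_{G''}).
\end{aligned}$$

In order that $\mu^*$ is consistent with all Shannon's information measures,

$$\mu^*(\tilde{X}_G) = H(X_G)$$

for all nonempty subsets $G$ of $\mathcal{N}_n$.

Thus $\mu^*$ is the unique signed measure on $\mathcal{F}_n$ which is consistent with all Shannon's information measures.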

3.4 $\mu^*$ can be Negative

$\mu^*$ is nonnegative for $n = 2$.

For $n = 3$, $\mu^*(\tilde{X}_1 \cap \tilde{X}_2 \cap \tilde{X}_3) = I(X_1; X_2; X_3)$ can be negative.

Example 3.10

$X_1, X_2$: i.i.d. binary r.v.'s uniform on $\{0, 1\}$

$X_3 = X_1 + X_2 \bmod 2$

Easy to check:

$H(X_i) = 1$ for all $i$.

$X_1, X_2, X_3$ are pairwise independent, so that $H(X_i, X_j) = 2$ and $I(X_i; X_j) = 0$ for all $i \neq j$.

Under these constraints, $I(X_1; X_2; X_3) = I(X_1; X_2) - I(X_1; X_2 | X_3) = 0 - 1 = -1$.
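
The values of $\mu^*$ on all seven nonempty atoms for Example 3.10 can be computed from joint entropies. The sketch below assumes the inclusion-exclusion formula $\mu^*(A) = \sum_{S \subseteq P} (-1)^{|S|+1} H(X_{S \cup N})$ for the atom $A$ lying inside $\tilde{X}_i$ for $i \in P$ and outside $\tilde{X}_j$ for $j \in N$, with the entropy of no variables taken as 0. The helper names `entropy` and `atom_measure` are hypothetical, and indices in the code are 0-based.

```python
from itertools import combinations, product
from math import log2

def entropy(joint, idx):
    """Entropy (bits) of the coordinates `idx` of a joint pmf {outcome: prob}."""
    marginal = {}
    for outcome, p in joint.items():
        key = tuple(outcome[i] for i in idx)
        marginal[key] = marginal.get(key, 0.0) + p
    return -sum(p * log2(p) for p in marginal.values() if p > 0)

def atom_measure(joint, pos, n):
    """mu* of the atom inside X_i for i in `pos` and outside every other X_j."""
    neg = [j for j in range(n) if j not in pos]
    total = 0.0
    for r in range(len(pos) + 1):
        for s in combinations(pos, r):
            total += (-1) ** (r + 1) * entropy(joint, sorted(set(s) | set(neg)))
    return total

# Example 3.10: X1, X2 i.i.d. uniform bits, X3 = X1 + X2 mod 2.
joint = {(x1, x2, (x1 + x2) % 2): 0.25 for x1, x2 in product((0, 1), repeat=2)}
n = 3

atoms = {pos: atom_measure(joint, pos, n)
         for r in range(1, n + 1) for pos in combinations(range(n), r)}

for pos, value in atoms.items():
    inside = ", ".join(f"X{i + 1}" for i in pos)
    print(f"atom inside exactly {{{inside}}}: {value:.3f}")
```

The atom inside all three sets carries the value $-1$, the three atoms inside exactly two sets carry $1$, and the remaining three carry $0$; the seven values add up to $H(X_1, X_2, X_3) = 2$.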

3.5 Information Diagrams

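[Figure: the generic information diagram for $X_1$, $X_2$, and $X_3$, with regions labelled $H(X_1)$, $H(X_2|X_1)$, $I(X_1; X_3)$, $I(X_1; X_2; X_3)$, $I(X_1; X_3|X_2)$, and $H(X_1|X_2, X_3)$.]

[Figure: the information diagram for Example 3.10. $\mu^*$ takes the value 0 on the three atoms that lie in exactly one of $\tilde{X}_1, \tilde{X}_2, \tilde{X}_3$, the value 1 on the three atoms that lie in exactly two of them, and the value $-1$ on $\tilde{X}_1 \cap \tilde{X}_2 \cap \tilde{X}_3$.]

[Figure: the generic information diagram for $X_1$, $X_2$, $X_3$, and $X_4$.]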

Theorem 3.11 If there is no constraint on $X_1, X_2, \ldots, X_n$, then $\mu^*$ can take any set of nonnegative values on the nonempty atoms of $\mathcal{F}_n$.

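Proof

Let $Y_A$, $A \in \mathcal{A}$, be mutually independent r.v.'s.

Define $X_i$, $i = 1, 2, \ldots, n$, by

$$X_i = (Y_A : A \in \mathcal{A} \text{ and } A \subset \tilde{X}_i).$$

Claim: $X_1, X_2, \ldots, X_n$ so constructed induce the I-Measure $\mu^*$ such that

$$\mu^*(A) = H(Y_A) \quad \text{for all } A \in \mathcal{A},$$

which are arbitrary nonnegative numbers.

Consider

$$\begin{aligned}
H(X_G) &= H(X_i, i \in G) \\
&= H((Y_A : A \in \mathcal{A} \text{ and } A \subset \tilde{X}_i), i \in G) \\
&= H(Y_A : A \in \mathcal{A} \text{ and } A \subset \tilde{X}_G) \\
&= \sum_{A \in \mathcal{A} : A \subset \tilde{X}_G} H(Y_A).
\end{aligned}$$

On the other hand,

$$H(X_G) = \mu^*(\tilde{X}_G) = \sum_{A \in \mathcal{A} : A \subset \tilde{X}_G} \mu^*(A).$$

Thus

$$\sum_{A \in \mathcal{A} : A \subset \tilde{X}_G} H(Y_A) = \sum_{A \in \mathcal{A} : A \subset \tilde{X}_G} \mu^*(A).$$

One solution is

$$\mu^*(A) = H(Y_A) \quad \text{for all } A \in \mathcal{A}.$$

By the uniqueness of $\mu^*$, this is the only solution.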
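
The construction in this proof can be tried out for $n = 2$. The sketch below assumes three hypothetical independent Bernoulli variables $Y_A$, one per nonempty atom, builds $X_1$ and $X_2$ as tuples of the $Y_A$ whose atoms lie inside $\tilde{X}_1$ and $\tilde{X}_2$, and then recovers $\mu^*$ on each atom from joint entropies. Atoms are labelled by 0-based index tuples, and the helper names are illustrative only.

```python
from itertools import combinations, product
from math import log2

def entropy(joint, idx):
    """Entropy (bits) of the coordinates `idx` of a joint pmf {outcome: prob}."""
    marginal = {}
    for outcome, p in joint.items():
        key = tuple(outcome[i] for i in idx)
        marginal[key] = marginal.get(key, 0.0) + p
    return -sum(p * log2(p) for p in marginal.values() if p > 0)

def atom_measure(joint, pos, n):
    """mu* of the atom inside X_i for i in `pos` and outside every other X_j."""
    neg = [j for j in range(n) if j not in pos]
    total = 0.0
    for r in range(len(pos) + 1):
        for s in combinations(pos, r):
            total += (-1) ** (r + 1) * entropy(joint, sorted(set(s) | set(neg)))
    return total

def bernoulli(q):
    return {0: 1 - q, 1: q}

# Hypothetical independent Y_A, keyed by the indices i with A inside X_i.
y = {(0,): bernoulli(0.1), (1,): bernoulli(0.3), (0, 1): bernoulli(0.5)}
labels = list(y)
n = 2

# X_i collects every Y_A whose atom A lies inside X_i.
joint = {}
for values in product((0, 1), repeat=len(labels)):
    prob = 1.0
    for label, v in zip(labels, values):
        prob *= y[label][v]
    outcome = tuple(
        tuple(v for label, v in zip(labels, values) if i in label)
        for i in range(n)
    )
    joint[outcome] = joint.get(outcome, 0.0) + prob

mu = {label: atom_measure(joint, label, n) for label in labels}
h = {label: -sum(p * log2(p) for p in y[label].values() if p > 0)
     for label in labels}
for label in labels:
    print(label, round(mu[label], 6), round(h[label], 6))
```

Each printed pair should match: the value of $\mu^*$ on an atom equals the entropy of the $Y_A$ attached to it, so choosing the $H(Y_A)$ freely gives arbitrary nonnegative atom values.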

Information Diagrams for Markov Chains

If $X_1 \to X_2 \to \cdots \to X_n$ form a Markov chain, then the structure of $\mu^*$ is much simpler and hence the information diagram can be simplified.

For $n = 3$, $X_1 \to X_2 \to X_3$ iff $I(X_1; X_3 | X_2) = 0$. So the atom $\tilde{X}_1 \cap \tilde{X}_3 - \tilde{X}_2$ can be suppressed.

The values of $\mu^*$ on the remaining atoms correspond to Shannon's information measures and hence are nonnegative. In particular,

$$\mu^*(\tilde{X}_1 \cap \tilde{X}_2 \cap \tilde{X}_3) = \mu^*(\tilde{X}_1 \cap \tilde{X}_3) = I(X_1; X_3) \ge 0.$$

Thus, $\mu^*$ is a measure.

[Figure: the information diagram for the Markov chain $X_1 \to X_2 \to X_3$.]

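For $n = 4$, $\mu^*$ vanishes on the following atoms:

$\tilde{X}_1 \cap \tilde{X}_2^c \cap \tilde{X}_3 \cap \tilde{X}_4^c$

$\tilde{X}_1 \cap \tilde{X}_2^c \cap \tilde{X}_3 \cap \tilde{X}_4$

$\tilde{X}_1 \cap \tilde{X}_2^c \cap \tilde{X}_3^c \cap \tilde{X}_4$

$\tilde{X}_1 \cap \tilde{X}_2 \cap \tilde{X}_3^c \cap \tilde{X}_4$

$\tilde{X}_1^c \cap \tilde{X}_2 \cap \tilde{X}_3^c \cap \tilde{X}_4$

The information diagram can be displayed in two dimensions.

The values of $\mu^*$ on the remaining atoms correspond to Shannon's information measures and hence are nonnegative. Thus, $\mu^*$ is a measure.

[Figure: the information diagram for the Markov chain $X_1 \to X_2 \to X_3 \to X_4$.]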

[Figure: the information diagram for the Markov chain $X_1 \to X_2 \to \cdots \to X_{n-1} \to X_n$.]

For a general $n$, the information diagram can be displayed in two dimensions because certain atoms can be suppressed.

The values of $\mu^*$ on the remaining atoms correspond to Shannon's information measures and hence are nonnegative. Thus, $\mu^*$ is a measure.

See Ch. 12 for a detailed discussion in the context of Markov random fields.
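
The vanishing atoms for a four-variable Markov chain can be checked numerically. The sketch below assumes a hypothetical binary chain $X_1 \to X_2 \to X_3 \to X_4$ with arbitrarily chosen transition probabilities, and uses the observation that, for $n = 4$, the five suppressed atoms are exactly those whose set of "inside" indices is not a run of consecutive integers. The helper names are illustrative, and indices in the code are 0-based.

```python
from itertools import combinations, product
from math import log2

def entropy(joint, idx):
    """Entropy (bits) of the coordinates `idx` of a joint pmf {outcome: prob}."""
    marginal = {}
    for outcome, p in joint.items():
        key = tuple(outcome[i] for i in idx)
        marginal[key] = marginal.get(key, 0.0) + p
    return -sum(p * log2(p) for p in marginal.values() if p > 0)

def atom_measure(joint, pos, n):
    """mu* of the atom inside X_i for i in `pos` and outside every other X_j."""
    neg = [j for j in range(n) if j not in pos]
    total = 0.0
    for r in range(len(pos) + 1):
        for s in combinations(pos, r):
            total += (-1) ** (r + 1) * entropy(joint, sorted(set(s) | set(neg)))
    return total

# Hypothetical binary Markov chain: initial pmf and three transition matrices.
initial = {0: 0.3, 1: 0.7}
transitions = [
    {0: {0: 0.9, 1: 0.1}, 1: {0: 0.2, 1: 0.8}},
    {0: {0: 0.6, 1: 0.4}, 1: {0: 0.3, 1: 0.7}},
    {0: {0: 0.5, 1: 0.5}, 1: {0: 0.1, 1: 0.9}},
]
n = 4
joint = {}
for xs in product((0, 1), repeat=n):
    p = initial[xs[0]]
    for step, t in enumerate(transitions):
        p *= t[xs[step]][xs[step + 1]]
    joint[xs] = p

def is_consecutive(pos):
    """True if the sorted index tuple `pos` is a run of consecutive integers."""
    return pos[-1] - pos[0] + 1 == len(pos)

atoms = {pos: atom_measure(joint, pos, n)
         for r in range(1, n + 1) for pos in combinations(range(n), r)}
suppressed = {pos: v for pos, v in atoms.items() if not is_consecutive(pos)}

for pos, value in atoms.items():
    tag = "suppressed" if pos in suppressed else "kept"
    print(pos, f"{value:.6f}", tag)
```

The five atoms tagged as suppressed should evaluate to 0 up to rounding, and the ten remaining atoms should be nonnegative.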

3.6 Examples of Applications

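Obtaining information identities is WYSIWYG: an identity can be read directly off the information diagram.

To obtain information inequalities:

If $\mu^*$ is nonnegative and $A \subset B$, then $\mu^*(A) \le \mu^*(B)$, because

$$\mu^*(A) \le \mu^*(A) + \mu^*(B - A) = \mu^*(B).$$

If $\mu^*$ is a signed measure, we need to invoke the basic inequalities.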

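Example 3.12 (Concavity of Entropy) Let $X_1 \sim p_1(x)$ and $X_2 \sim p_2(x)$. Let

$$X \sim p(x) = \lambda p_1(x) + \bar{\lambda} p_2(x),$$

where $0 \le \lambda \le 1$ and $\bar{\lambda} = 1 - \lambda$. Show that

$$H(X) \ge \lambda H(X_1) + \bar{\lambda} H(X_2).$$

[Figure: $X$ is obtained by selecting $X_1$ when $Z = 1$ and $X_2$ when $Z = 2$.]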
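
One way to carry out the proof of Example 3.12 with the auxiliary variable $Z$ is sketched here. It assumes that $Z$ takes the value 1 with probability $\lambda$ and 2 with probability $\bar{\lambda}$, and that $X = X_Z$, so that $X$ has the mixture distribution $p(x)$; these details are a reading of the figure rather than text from the slides.

```latex
\begin{aligned}
H(X) &\ge H(X | Z)
        && \text{since } I(X; Z) \ge 0, \text{ i.e. } \mu^*(\tilde{X}) \ge \mu^*(\tilde{X} - \tilde{Z}) \\
     &= \Pr\{Z = 1\}\, H(X | Z = 1) + \Pr\{Z = 2\}\, H(X | Z = 2) \\
     &= \lambda H(X_1) + \bar{\lambda} H(X_2).
\end{aligned}
```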

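Example 3.13 (Convexity of Mutual Information) Let

$$(X, Y) \sim p(x, y) = p(x) p(y|x).$$

Show that for fixed $p(x)$, $I(X; Y)$ is a convex functional of $p(y|x)$.

[Figure: $X$ is passed through one of two channels selected by $Z$, namely $p_1(y|x)$ when $Z = 1$ and $p_2(y|x)$ when $Z = 2$, to produce $Y$.]

Setup: $I(X; Z) = 0$.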

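Example 3.14 (Concavity of Mutual Information) Let

$$(X, Y) \sim p(x, y) = p(x) p(y|x).$$

Show that for fixed $p(y|x)$, $I(X; Y)$ is a concave functional of $p(x)$.

[Figure: $Z$ selects the input distribution, $p_1(x)$ when $Z = 1$ and $p_2(x)$ when $Z = 2$; $X$ is then passed through the channel $p(y|x)$ to produce $Y$.]

Setup: $Z \to X \to Y$.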

Shannon's Perfect Secrecy Theorem

$X$: plaintext

$Y$: ciphertext

$Z$: key

Perfect Secrecy: $I(X; Y) = 0$

Decipherability: $H(X|Y, Z) = 0$

These requirements imply $H(Z) \ge H(X)$, i.e., the length of the key is at least the same as the length of the plaintext. The lower bound is achievable by a one-time pad.

Shannon (1949) gave a combinatorial proof.

Can readily be proved by an information diagram.
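
The two requirements and the bound $H(Z) \ge H(X)$ can be observed on a small one-time pad. The sketch below assumes a hypothetical 2-bit plaintext with a non-uniform distribution, an independent uniform 2-bit key, and ciphertext $Y = X \oplus Z$; none of these specifics come from the slides.

```python
from math import log2

def entropy(joint, idx):
    """Entropy (bits) of the coordinates `idx` of a joint pmf {outcome: prob}."""
    marginal = {}
    for outcome, p in joint.items():
        key = tuple(outcome[i] for i in idx)
        marginal[key] = marginal.get(key, 0.0) + p
    return -sum(p * log2(p) for p in marginal.values() if p > 0)

# Hypothetical plaintext distribution on 2-bit messages.
plaintext = {0: 0.5, 1: 0.25, 2: 0.125, 3: 0.125}
keys = range(4)  # uniform 2-bit key, independent of the plaintext

# Joint pmf of (X, Y, Z) with ciphertext Y = X xor Z.
joint = {}
for x, px in plaintext.items():
    for z in keys:
        joint[(x, x ^ z, z)] = px / len(keys)

X, Y, Z = 0, 1, 2
h_x = entropy(joint, [X])
h_z = entropy(joint, [Z])
mi_xy = h_x + entropy(joint, [Y]) - entropy(joint, [X, Y])          # I(X;Y)
h_x_given_yz = entropy(joint, [X, Y, Z]) - entropy(joint, [Y, Z])   # H(X|Y,Z)

print(f"I(X;Y)   = {mi_xy:.6f}")
print(f"H(X|Y,Z) = {h_x_given_yz:.6f}")
print(f"H(X) = {h_x:.6f}, H(Z) = {h_z:.6f}")
```

Both $I(X; Y)$ and $H(X|Y, Z)$ come out as 0, and the key entropy is at least the plaintext entropy; with a uniform plaintext the two entropies would coincide.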

Example 3.15 (Imperfect Secrecy Theorem) Let $X$ be the plaintext, $Y$ be the ciphertext, and $Z$ be the key in a secret key cryptosystem. Since $X$ can be recovered from $Y$ and $Z$, we have

$$H(X|Y, Z) = 0.$$

Show that this constraint implies

$$I(X; Y) \ge H(X) - H(Z).$$

Remark: Do not need to make these assumptions about the scheme:

$H(Y|X, Z) = 0$

$I(X; Z) = 0$
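
The bound $I(X; Y) \ge H(X) - H(Z)$ can also be verified algebraically. The chain below is one possible derivation using only $H(X|Y, Z) = 0$ and the nonnegativity of Shannon's information measures; it is offered as a sketch alongside the information-diagram argument and is not taken from the slides.

```latex
\begin{aligned}
H(X | Y) &= H(X | Y, Z) + I(X; Z | Y) \\
         &= I(X; Z | Y)            && \text{since } H(X | Y, Z) = 0 \\
         &\le H(Z | Y) \le H(Z), \\[4pt]
I(X; Y)  &= H(X) - H(X | Y) \ge H(X) - H(Z).
\end{aligned}
```

Neither $H(Y|X, Z) = 0$ nor $I(X; Z) = 0$ is used anywhere in the chain.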

Example 3.17 (Data Processing Theorem) If $X \to Y \to Z \to T$, then

$$I(X; T) \le I(Y; Z);$$

in fact,

$$I(Y; Z) = I(X; T) + I(X; Z|T) + I(Y; T|X) + I(Y; Z|X, T).$$
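
The decomposition $I(Y; Z) = I(X; T) + I(X; Z|T) + I(Y; T|X) + I(Y; Z|X, T)$ can be checked on a concrete chain. The sketch below assumes a hypothetical binary Markov chain $X \to Y \to Z \to T$ with arbitrarily chosen transition probabilities; `cond_mi` is an illustrative helper that evaluates conditional mutual information through the entropy expression of Lemma 3.8.

```python
from itertools import product
from math import log2

def entropy(joint, idx):
    """Entropy (bits) of the coordinates `idx` of a joint pmf {outcome: prob}."""
    marginal = {}
    for outcome, p in joint.items():
        key = tuple(outcome[i] for i in idx)
        marginal[key] = marginal.get(key, 0.0) + p
    return -sum(p * log2(p) for p in marginal.values() if p > 0)

def cond_mi(joint, a, b, c=()):
    """I(X_a; X_b | X_c) = H(a,c) + H(b,c) - H(a,b,c) - H(c)."""
    a, b, c = set(a), set(b), set(c)
    return (entropy(joint, sorted(a | c)) + entropy(joint, sorted(b | c))
            - entropy(joint, sorted(a | b | c)) - entropy(joint, sorted(c)))

# Hypothetical binary Markov chain X -> Y -> Z -> T.
initial = {0: 0.4, 1: 0.6}
transitions = [
    {0: {0: 0.8, 1: 0.2}, 1: {0: 0.3, 1: 0.7}},
    {0: {0: 0.7, 1: 0.3}, 1: {0: 0.1, 1: 0.9}},
    {0: {0: 0.6, 1: 0.4}, 1: {0: 0.2, 1: 0.8}},
]
joint = {}
for xs in product((0, 1), repeat=4):
    p = initial[xs[0]]
    for step, t in enumerate(transitions):
        p *= t[xs[step]][xs[step + 1]]
    joint[xs] = p

X, Y, Z, T = 0, 1, 2, 3
i_xt = cond_mi(joint, [X], [T])
lhs = cond_mi(joint, [Y], [Z])
rhs = (i_xt
       + cond_mi(joint, [X], [Z], [T])
       + cond_mi(joint, [Y], [T], [X])
       + cond_mi(joint, [Y], [Z], [X, T]))

print(f"I(Y;Z)            = {lhs:.6f}")
print(f"sum of four terms = {rhs:.6f}")
print(f"I(X;T)            = {i_xt:.6f}")
```

The first two printed values should agree up to rounding, and since the three extra terms are nonnegative, $I(X; T)$ cannot exceed $I(Y; Z)$.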

Example 3.18 If $X \to Y \to Z \to T \to U$, then

$$H(Y) + H(T) = I(Z; X, Y, T, U) + I(X, Y; T, U) + H(Y|Z) + H(T|Z).$$

Very difficult to discover without an information diagram.

Instrumental in proving an outer bound for the multiple description problem.

Highlight of Ch. 12

The I-Measure completely characterizes a class of Markov structures called

full conditional independence.

Markov random field is a special case.

Markov chain is a special case of Markov random field.

Analysis of these Markov structures becomes completely set-theoretic.

Example 12.22

$\Pi_1$:

$(X_1, X_2) \perp (X_3, X_4)$

$(X_1, X_3) \perp (X_2, X_4)$

$\Pi_2$:

$(X_1, X_2, X_3) \perp X_4$

$(X_1, X_2, X_4) \perp X_3$

Each (conditional) independency forces $\mu^*$ to vanish on the atoms in the corresponding set.

E.g., $(X_1, X_2) \perp (X_3, X_4) \Leftrightarrow \mu^*$ vanishes on the atoms in $(\tilde{X}_1 \cup \tilde{X}_2) \cap (\tilde{X}_3 \cup \tilde{X}_4)$.

Analysis of Example 12.12

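$\mu^*$ vanishes on atoms with a dot.

[Figures: two information diagrams for $X_1, X_2, X_3, X_4$, one for each of the two collections of independencies in the example, with dots marking the atoms on which $\mu^*$ vanishes.]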

Proving Information Inequalities

Information inequalities that are implied by the basic inequalities are

called Shannon-type inequalities.

They can be proved by means of a linear program called ITIP (Information

Theoretic Inequality Prover), developed on Matlab at CUHK (1996):

http://user-www.ie.cuhk.edu.hk/ITIP/

A version running on C called Xitip was developed at EPFL (2007):

http://xitip.epfl.ch/

See Ch. 13 and 14 for discussion.

ITIP Examples

1. >> ITIP(H(XYZ) <= H(X) + H(Y) + H(Z))

True

2. >> ITIP(I(X;Z) = 0,I(X;Z|Y) = 0,I(X;Y) = 0)

True

3. >> ITIP(X/Y/Z/T, X/Y/Z, Y/Z/T)

Not provable by ITIP

4. >> ITIP(I(Z;U) - I(Z;U|X) - I(Z;U|Y) <=

0.5 I(X;Y) + 0.25 I(X;ZU) + 0.25 I(Y;ZU))

Not provable by ITIP

#4 is a so-called non-Shannon-type inequality, which is valid but not implied by the basic inequalities. See Ch. 15 for discussion.
