Machine Translation

Uploaded by MaRielaParra


History of Machine Translation

The idea of machine translation may be traced back to the 17th century. In

1629, René Descartes proposed a universal language, with equivalent ideas in different

tongues sharing one symbol. The field of "machine translation" appeared in Warren

Weaver's Memorandum on Translation (1949). The first researcher in the field, Yehoshua

Bar-Hillel, began his research at MIT (1951). A Georgetown MT research team followed

(1951) with a public demonstration of its system in 1954. MT research programs popped

up in Japan and Russia (1955), and the first MT conference was held in London (1956).

Researchers continued to join the field as the Association for Machine Translation and

Computational Linguistics was formed in the U.S. (1962) and the National Academy of

Sciences formed the Automatic Language Processing Advisory Committee (ALPAC) to

study MT (1964). Real progress was much slower, however, and after the ALPAC

report (1966), which found that the ten-year-long research had failed to fulfill expectations,

funding was greatly reduced.

The French Textile Institute used MT to translate abstracts from and into

French, English, German and Spanish (1970); Brigham Young University started a project

to translate Mormon texts by automated translation (1971); and Xerox used SYSTRAN to

translate technical manuals (1978). Beginning in the late 1980s, as computational power

increased and became less expensive, more interest was shown in statistical models for

machine translation. Various MT companies were launched, including Trados (1984),

which was the first to develop and market translation memory technology (1989). The first

commercial MT system for Russian / English / German-Ukrainian was developed at

Kharkov State University (1991).

MT on the web started with SYSTRAN offering free translation of small texts

(1996), followed by AltaVista Babelfish, which racked up 500,000 requests a day (1997).

Franz-Josef Och (the future head of Translation Development at Google) won DARPA's

speed MT competition (2003). More innovations during this time included MOSES, the

open-source statistical MT engine (2007), a text/SMS translation service for mobiles in

Japan (2008), and a mobile phone with built-in speech-to-speech translation functionality

for English, Japanese and Chinese (2009). Recently, Google announced that Google

Translate translates roughly enough text to fill 1 million books in one day (2012).

The idea of using digital computers for translation of natural languages was

proposed as early as 1946 by A. D. Booth and possibly others. Warren Weaver wrote an

important memorandum "Translation" in 1949. The Georgetown experiment was by no

means the first such application, and a demonstration was made in 1954 on

the APEXC machine at Birkbeck College (University of London) of a rudimentary

translation of English into French. Several papers on the topic were published at the time,

and even articles in popular journals (see for example Wireless World, Sept. 1955, Cleave

and Zacharov). A similar application, also pioneered at Birkbeck College at the time, was

reading and composing Braille texts by computer.

Machine Translation

Machine translation is the translation of text by a computer, with no human

involvement. Pioneered in the 1950s, machine translation can also be referred to as

automated, automatic, or instant translation.

Machine translation, sometimes referred to by the abbreviation MT, is a sub-field of

computational linguistics that investigates the use of software to translate text or speech

from one natural language to another. In other words, MT is the application of

computers to the task of translating texts from one natural language to another. One of the

very earliest pursuits in computer science, MT has proved to be an elusive goal, but today

a reasonable number of systems are available that produce output which, if not perfect,

is of sufficient quality to be useful in a number of specific domains.

There are two types of machine translation system:

Rule-Based Machine Translation Technology

Rule-based machine translation relies on countless built-in linguistic rules and

bilingual dictionaries containing millions of entries for each language pair.

The software parses text and creates a transitional representation from which the

text in the target language is generated. This process requires extensive lexicons with

morphological, syntactic, and semantic information, and large sets of rules. The software

uses these complex rule sets and then transfers the grammatical structure of the source

language into the target language.

Translations are built on gigantic dictionaries and sophisticated linguistic rules.

Users can improve the out-of-the-box translation quality by adding their terminology into

the translation process. They create user-defined dictionaries which override the system's

default settings.
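
The parse, transfer, and generate steps described above, together with a user-defined dictionary that overrides the defaults, can be sketched roughly as follows. This is only a toy illustration: the four-word English-to-French lexicon, the single reordering rule, and all function names are assumptions made for the example, not part of any real MT product.

```python
# Toy rule-based transfer, English -> French.
# The lexicon, the rule, and every name here are hypothetical.
DEFAULT_LEXICON = {
    "the": ("le", "DET"),
    "black": ("noir", "ADJ"),
    "cat": ("chat", "NOUN"),
    "sleeps": ("dort", "VERB"),
}

def analyze(sentence, lexicon):
    """Parse step: look up each source word and its part of speech."""
    tokens = []
    for word in sentence.lower().split():
        target, pos = lexicon.get(word, (word, "UNK"))
        tokens.append({"source": word, "target": target, "pos": pos})
    return tokens

def transfer(tokens):
    """Transfer step: English ADJ NOUN becomes French NOUN ADJ."""
    out = list(tokens)
    i = 0
    while i < len(out) - 1:
        if out[i]["pos"] == "ADJ" and out[i + 1]["pos"] == "NOUN":
            out[i], out[i + 1] = out[i + 1], out[i]
            i += 2
        else:
            i += 1
    return out

def generate(tokens):
    """Generation step: emit the target-language words in order."""
    return " ".join(token["target"] for token in tokens)

def translate(sentence, user_dictionary=None):
    # Entries in the user dictionary override the built-in lexicon.
    lexicon = {**DEFAULT_LEXICON, **(user_dictionary or {})}
    return generate(transfer(analyze(sentence, lexicon)))

print(translate("the black cat sleeps"))
# prints "le chat noir dort"
print(translate("the black cat sleeps", {"cat": ("matou", "NOUN")}))
# prints "le matou noir dort"
```

A real system would need morphological agreement, ambiguity handling, and far larger rule sets; the sketch only shows where a user dictionary plugs into the pipeline.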

Statistical Machine Translation Technology

Statistical machine translation utilizes statistical translation models whose

parameters stem from the analysis of monolingual and bilingual corpora. Building

statistical translation models is a quick process, but the technology relies heavily on

existing multilingual corpora. A minimum of 2 million words for a specific domain and even

more for general language are required. Theoretically it is possible to reach the quality

threshold but most companies do not have such large amounts of existing multilingual

corpora to build the necessary translation models. Additionally, statistical machine

translation is CPU intensive and requires an extensive hardware configuration to run

translation models for average performance levels.
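
To make "parameters stem from the analysis of bilingual corpora" more concrete, here is a minimal sketch of how word-translation probabilities might be estimated from a tiny parallel corpus. The text does not name a specific model; the sketch assumes IBM Model 1 (without a NULL word), a classic word-based model trained with expectation-maximization. The three-sentence corpus and all function names are invented for illustration.

```python
from collections import defaultdict

# Hypothetical toy parallel corpus: (English source, French target).
CORPUS = [
    ("the house", "la maison"),
    ("the flower", "la fleur"),
    ("a flower", "une fleur"),
]

def train_ibm_model1(corpus, iterations=10):
    """Estimate t(target_word | source_word) with EM (IBM Model 1, no NULL word)."""
    pairs = [(src.split(), tgt.split()) for src, tgt in corpus]
    src_vocab = {sw for src, _ in pairs for sw in src}
    tgt_vocab = {tw for _, tgt in pairs for tw in tgt}
    # Start from a uniform distribution over target words.
    t = {(tw, sw): 1.0 / len(tgt_vocab) for tw in tgt_vocab for sw in src_vocab}
    for _ in range(iterations):
        count = defaultdict(float)
        total = defaultdict(float)
        # E-step: share each target word among the source words of its sentence.
        for src, tgt in pairs:
            for tw in tgt:
                norm = sum(t[(tw, sw)] for sw in src)
                for sw in src:
                    share = t[(tw, sw)] / norm
                    count[(tw, sw)] += share
                    total[sw] += share
        # M-step: re-normalize the expected counts into probabilities.
        for tw, sw in t:
            t[(tw, sw)] = count[(tw, sw)] / total[sw]
    return t

def best_translation(t, source_word):
    """Return the target word with the highest estimated probability."""
    candidates = {tw: p for (tw, sw), p in t.items() if sw == source_word}
    return max(candidates, key=candidates.get)

model = train_ibm_model1(CORPUS)
print(best_translation(model, "flower"))
```

With only three sentence pairs the estimates are crude, which illustrates why the approach depends so heavily on large corpora.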

Using machine translation as a teaching tool

Although there have been concerns about machine translation's accuracy, Dr. Ana

Nino of the University of Manchester has researched some of the advantages in utilizing

machine translation in the classroom. One such pedagogical method is called "MT

as a Bad Model." MT as a Bad Model forces the language learner to identify

inconsistencies or incorrect aspects of a translation; in turn, the individual will (hopefully)

possess a better grasp of the language. Dr. Nino notes that this teaching tool was

implemented in the late 1980s. At the end of various semesters, Dr. Nino was able to

obtain survey results from students who had used MT as a Bad Model (as well as other

models). Overwhelmingly, students felt that they had observed improved comprehension,

lexical retrieval, and increased confidence in their target language.

Enterprise machine translation (EMT) is machine translation technology that has been

enhanced to deliver significant added value to enterprise globalization initiatives.

Enterprise MT technology provides high-quality automated translation, tuned to each

company's and industry's language. Using Enterprise MT, enterprises can remove

inefficiencies and create new multilingual processes and assets. With high-quality

Enterprise MT, globalization teams can drive down their translation costs, accelerate

product launches, and help increase the visibility of their brand in global markets.

Advantages and disadvantages of using machine translation

Increased productivity: deliver translations faster

Use freely available machine translation to pre-translate new segments that are not

leveraged from translation memory.

Connect to and use a customer's or supplier's trained engine through SDL BeGlobal or

use the industry specific machine translation solution SDL Language Cloud Machine

Translation to achieve better quality results every time.
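
The pre-translation workflow described above, where segments are served from translation memory when possible and sent to machine translation otherwise, might look roughly like this. The function names, the exact-match lookup, and the stand-in engine are all assumptions for illustration; they do not reflect the API of SDL or any other product.

```python
def pretranslate(segments, translation_memory, machine_translate):
    """Fill each segment from translation memory, falling back to MT.

    translation_memory: dict mapping source segments to stored translations
    machine_translate: any callable taking a source string and returning a
                       translation (a stand-in for a real MT engine)
    """
    results = []
    for segment in segments:
        if segment in translation_memory:
            # Exact match: reuse the stored human translation.
            results.append((segment, translation_memory[segment], "TM"))
        else:
            # New segment: pre-translate it by machine for later review.
            results.append((segment, machine_translate(segment), "MT"))
    return results

# Usage with a hypothetical memory and a placeholder engine.
memory = {"Press the power button.": "Appuyez sur le bouton d'alimentation."}

def fake_engine(text):
    return "[MT] " + text

for source, target, origin in pretranslate(
    ["Press the power button.", "Close the cover."], memory, fake_engine
):
    print(origin, "|", source, "->", target)
```

Real translation memory tools also use fuzzy matching rather than exact lookup; the sketch keeps only the fallback logic.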

Flexibility and choice: to suit all types of project

Select from a number of different machine translation engines.

Choose from over 50 languages and more than 2,500 language pairs to suit your

project.

Compare rule-based and statistical machine translation engines.

Machine translation and signed languages

In the early 2000s, options for machine translation between spoken and signed

languages were severely limited. It was a common belief that deaf individuals could use

traditional translators. However, stress, intonation, pitch, and timing are conveyed much

differently in spoken languages compared to signed languages. Therefore, a deaf

individual may misinterpret or become confused about the meaning of written text that is

based on a spoken language.

Zhao et al. (2000) developed a prototype called TEAM (translation

from English to ASL by machine) that completed English to American Sign

Language (ASL) translations. The program would first analyze the syntactic, grammatical,

and morphological aspects of the English text. Following this step, the program accessed

a sign synthesizer, which acted as a dictionary for ASL. This synthesizer housed the

process one must follow to complete ASL signs, as well as the meanings of these signs.

Once the entire text was analyzed and the signs necessary to complete the translation were

located in the synthesizer, a computer-generated human appeared and used ASL to

sign the English text to the user.

Conclusion

This Machine Translation series will continue with more in-depth blogs on the

technology, implementation, use, benefits, and limitations of Machine Translation. We will

also discuss business situations where Machine Translation would and would not be a

practical solution (with supporting case studies) and will explain how Machine Translation

can be built into a translation/localization workflow in a way that helps organizations

achieve their translation goals.


Vous aimerez peut-être aussi

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeD'EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeÉvaluation : 4 sur 5 étoiles4/5 (5795)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreD'EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreÉvaluation : 4 sur 5 étoiles4/5 (1090)

- Never Split the Difference: Negotiating As If Your Life Depended On ItD'EverandNever Split the Difference: Negotiating As If Your Life Depended On ItÉvaluation : 4.5 sur 5 étoiles4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceD'EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceÉvaluation : 4 sur 5 étoiles4/5 (895)

- Grit: The Power of Passion and PerseveranceD'EverandGrit: The Power of Passion and PerseveranceÉvaluation : 4 sur 5 étoiles4/5 (588)

- Shoe Dog: A Memoir by the Creator of NikeD'EverandShoe Dog: A Memoir by the Creator of NikeÉvaluation : 4.5 sur 5 étoiles4.5/5 (537)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersD'EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersÉvaluation : 4.5 sur 5 étoiles4.5/5 (345)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureD'EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureÉvaluation : 4.5 sur 5 étoiles4.5/5 (474)

- Her Body and Other Parties: StoriesD'EverandHer Body and Other Parties: StoriesÉvaluation : 4 sur 5 étoiles4/5 (821)

- The Emperor of All Maladies: A Biography of CancerD'EverandThe Emperor of All Maladies: A Biography of CancerÉvaluation : 4.5 sur 5 étoiles4.5/5 (271)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)D'EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Évaluation : 4.5 sur 5 étoiles4.5/5 (121)

- The Little Book of Hygge: Danish Secrets to Happy LivingD'EverandThe Little Book of Hygge: Danish Secrets to Happy LivingÉvaluation : 3.5 sur 5 étoiles3.5/5 (400)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyD'EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyÉvaluation : 3.5 sur 5 étoiles3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)D'EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Évaluation : 4 sur 5 étoiles4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaD'EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaÉvaluation : 4.5 sur 5 étoiles4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryD'EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryÉvaluation : 3.5 sur 5 étoiles3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnD'EverandTeam of Rivals: The Political Genius of Abraham LincolnÉvaluation : 4.5 sur 5 étoiles4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealD'EverandOn Fire: The (Burning) Case for a Green New DealÉvaluation : 4 sur 5 étoiles4/5 (74)

- Horror in Architecture PDFDocument231 pagesHorror in Architecture PDFOrófenoPas encore d'évaluation

- The Unwinding: An Inner History of the New AmericaD'EverandThe Unwinding: An Inner History of the New AmericaÉvaluation : 4 sur 5 étoiles4/5 (45)

- Job InterviewDocument23 pagesJob InterviewAnonymous hdvqHQDPas encore d'évaluation

- Self-Transcendence As A Human Phenomenon Viktor E. FranklDocument10 pagesSelf-Transcendence As A Human Phenomenon Viktor E. FranklremipedsPas encore d'évaluation

- Banham This Is TomorrowDocument3 pagesBanham This Is Tomorrowadrip01Pas encore d'évaluation

- Gajendra StutiDocument2 pagesGajendra StutiRADHAKRISHNANPas encore d'évaluation

- The Life and Works of Rizal - Why Study RizalDocument4 pagesThe Life and Works of Rizal - Why Study RizalTomas FloresPas encore d'évaluation

- LFCH10Document28 pagesLFCH10sadsad100% (2)

- IntroductionPhilosophy12 Q2 Mod5 v4 Freedom of Human Person Version 4Document26 pagesIntroductionPhilosophy12 Q2 Mod5 v4 Freedom of Human Person Version 4Sarah Jane Lugnasin100% (1)

- DMIT Sample ReportDocument33 pagesDMIT Sample ReportgkreddiPas encore d'évaluation

- Simon Choat - Marx's Grundrisse PDFDocument236 pagesSimon Choat - Marx's Grundrisse PDFFelipe Sanhueza Cespedes100% (1)

- Romeo and Juliet Activities Cheat SheetDocument2 pagesRomeo and Juliet Activities Cheat Sheetapi-427826649Pas encore d'évaluation

- McKittrick - Demonic Grounds-Sylvia WynterDocument47 pagesMcKittrick - Demonic Grounds-Sylvia Wynteryo_isoPas encore d'évaluation

- Combining Habermas and Latour To Create A Comprehensive Public Relations Model With Practical ApplicationDocument11 pagesCombining Habermas and Latour To Create A Comprehensive Public Relations Model With Practical ApplicationKristen Bostedo-ConwayPas encore d'évaluation

- NEBOSH Unit IGC1 - Helicopter View of Course: © RRC InternationalDocument1 pageNEBOSH Unit IGC1 - Helicopter View of Course: © RRC InternationalKarenPas encore d'évaluation

- Research Report: Submitted To: Mr. Salman Abbasi Academic Director, Iqra University, North Nazimabad CampusDocument14 pagesResearch Report: Submitted To: Mr. Salman Abbasi Academic Director, Iqra University, North Nazimabad CampusMuqaddas IsrarPas encore d'évaluation

- Poems pt3Document2 pagesPoems pt3dinonsaurPas encore d'évaluation

- 2014 FoundationDocument84 pages2014 FoundationTerry ChoiPas encore d'évaluation

- Forgiveness Forgiveness Forgiveness Forgiveness: Memory VerseDocument5 pagesForgiveness Forgiveness Forgiveness Forgiveness: Memory VerseKebutuhan Rumah MurahPas encore d'évaluation

- Yoga Sutra PatanjaliDocument9 pagesYoga Sutra PatanjaliMiswantoPas encore d'évaluation

- ForcesDocument116 pagesForcesbloodymerlinPas encore d'évaluation

- Thomas Edison College - College Plan - TESC in CISDocument8 pagesThomas Edison College - College Plan - TESC in CISBruce Thompson Jr.Pas encore d'évaluation

- Come Boldly SampleDocument21 pagesCome Boldly SampleNavPressPas encore d'évaluation

- Lab 6: Electrical Motors: Warattaya (Mook) Nichaporn (Pleng) Pitchaya (Muaylek) Tonrak (Mudaeng) Boonnapa (Crystal) 11-5Document5 pagesLab 6: Electrical Motors: Warattaya (Mook) Nichaporn (Pleng) Pitchaya (Muaylek) Tonrak (Mudaeng) Boonnapa (Crystal) 11-5api-257610307Pas encore d'évaluation

- Organizational Ethics in Health Care: An OverviewDocument24 pagesOrganizational Ethics in Health Care: An Overviewvivek1119Pas encore d'évaluation

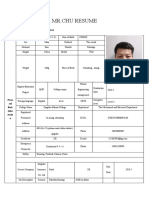

- Mr. Chu ResumeDocument3 pagesMr. Chu Resume楚亚东Pas encore d'évaluation

- Case StudyDocument7 pagesCase StudyAyman Dwedar100% (1)

- Environmental Philo. SterbaDocument4 pagesEnvironmental Philo. SterbaJanbert Rebosura100% (1)

- Marriage As Social InstitutionDocument9 pagesMarriage As Social InstitutionTons MedinaPas encore d'évaluation

- The Tathagatagarbha Sutra: Siddhartha's Teaching On Buddha-NatureDocument6 pagesThe Tathagatagarbha Sutra: Siddhartha's Teaching On Buddha-NatureBalingkangPas encore d'évaluation

- Rewriting The History of Pakistan by Pervez Amirali Hoodbhoy and Abdul Hameed NayyarDocument8 pagesRewriting The History of Pakistan by Pervez Amirali Hoodbhoy and Abdul Hameed NayyarAamir MughalPas encore d'évaluation