Computer Processing of

Remotely-Sensed Images

An Introduction

Second Edition

Paul M. Mather

School of Geography, The University of Nottingham, UK


Non-parametric feature selection methods do not rely on assumptions concerning the frequency distribution of the features. One such method, which has not been widely used, is proposed by Lee and Landgrebe (1993). Benediktsson and Sveinsson (1997) demonstrate its application.

8.10 CLASSIFICATION ACCURACY

The methods discussed in section 8.9 have as their aim the establishment of the degree of separability of the k classes to which the image pixels are to be allocated (though the Bhattacharyya distance is more like a measure of the probability of misclassification). Once a classification exercise has been carried out there is a need to determine the degree of error in the end-product. These errors could be thought of as being due to incorrect labelling of the pixels. Conversely, the degree of accuracy could be sought. First of all, if a method allowing a 'reject' class has been used then the number of pixels assigned to this class (which is conventionally labelled '0') will be an indication of the overall representativeness of the training classes. If large numbers of pixels are labelled '0' then the representativeness of the training data sets is called into question: do they adequately sample the feature space? The most commonly used method of representing the degree of accuracy of a classification is to build a confusion matrix (or error matrix). The elements of row i of this matrix give the number of pixels that the operator has identified as being members of class i that have been allocated to classes 1 to k by the classification procedure (see Table 8.4). Element i of row i (the ith diagonal element) contains the number of pixels identified by the operator as belonging to class i that have been correctly labelled by the classifier. The other elements of row i give the number and distribution of pixels that have been incorrectly labelled. The classification accuracy for class i is therefore the number of pixels in the diagonal cell of row i divided by the total number of pixels identified by the operator from ground data as being class i pixels. The overall classification accuracy is the total number of correctly labelled pixels (the sum of the diagonal elements) divided by the total number of test pixels, which is equivalent to the average of the individual class accuracies weighted by the number of reference pixels in each class. Accuracies are usually expressed in percentage terms.
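The per-class and overall accuracy calculations can be sketched in a few lines of Python. This is only an illustration: the function names and the 3-class matrix are hypothetical and are not taken from Table 8.4. Rows are assumed to hold the reference labels and columns the classifier labels, and overall accuracy follows the convention of the Table 8.4 caption (sum of the diagonal divided by the total number of test pixels).

```python
def class_accuracies(matrix):
    """Per-class accuracy (%): diagonal cell of each row divided by the row total."""
    return [100.0 * row[i] / sum(row) for i, row in enumerate(matrix)]


def overall_accuracy(matrix):
    """Overall accuracy (%): sum of the diagonal divided by all test pixels."""
    correct = sum(row[i] for i, row in enumerate(matrix))
    total = sum(sum(row) for row in matrix)
    return 100.0 * correct / total


# Hypothetical confusion matrix (rows: reference classes, columns: classifier output).
example = [
    [45, 3, 2],
    [4, 30, 6],
    [1, 2, 7],
]

print(class_accuracies(example))  # per-class accuracies in per cent
print(overall_accuracy(example))  # overall accuracy in per cent
```

For this made-up matrix the class accuracies are 90%, 75% and 70% and the overall accuracy is 82%, whereas the unweighted mean of the three class accuracies is about 78%. The two figures agree only when every class has the same number of test pixels.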

Some analysts use a statistical measure, the kappa coefficient, to summarise the information provided by the contingency matrix (Bishop et al., 1975). Kappa is computed from:

$$\kappa = \frac{N \sum_{i=1}^{r} x_{ii} - \sum_{i=1}^{r} x_{i+} x_{+i}}{N^{2} - \sum_{i=1}^{r} x_{i+} x_{+i}}$$

The $x_{ii}$ are the diagonal entries of the confusion matrix. The notation $x_{i+}$ and $x_{+i}$ indicates, respectively, the sum of row i and the sum of column i of the confusion matrix. N is the total number of pixels counted in the confusion matrix (410 in Table 8.4), and r is the number of classes. Row totals ($x_{i+}$) for the confusion matrix shown in Table 8.4 are listed in the column headed (i) and column totals are given in the last row. The sum of the diagonal elements ($x_{ii}$) is 350 ($\sum_{i=1}^{r} x_{ii}$ for r = 6), and the sum of the products of the row and column marginal totals ($\sum_{i=1}^{r} x_{i+} x_{+i}$) is 28 820. Thus the value of kappa is:

$$\kappa = \frac{410 \times 350 - 28\,820}{168\,100 - 28\,820} = \frac{114\,680}{139\,280} = 0.82$$
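As a check on the arithmetic, the kappa calculation can be sketched in Python. The function names and the small 3-class matrix are hypothetical. Because the body of Table 8.4 is not reproduced here, the worked example is recomputed from the three totals quoted in the text (N = 410, diagonal sum 350, sum of marginal products 28 820).

```python
def kappa_from_totals(n, diagonal_sum, marginal_products):
    """Kappa from N, the diagonal sum and the sum of row-total x column-total products."""
    return (n * diagonal_sum - marginal_products) / (n * n - marginal_products)


def kappa(matrix):
    """Kappa from a square confusion matrix (rows: reference, columns: classifier)."""
    n = sum(sum(row) for row in matrix)
    diagonal_sum = sum(matrix[i][i] for i in range(len(matrix)))
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(col) for col in zip(*matrix)]
    marginal_products = sum(r * c for r, c in zip(row_totals, col_totals))
    return kappa_from_totals(n, diagonal_sum, marginal_products)


# Totals quoted in the text for Table 8.4.
print(round(kappa_from_totals(410, 350, 28820), 2))

# Hypothetical 3-class matrix, used only to exercise the matrix version.
example = [
    [45, 3, 2],
    [4, 30, 6],
    [1, 2, 7],
]
print(kappa(example))
```

The first call reproduces the value of 0.82 obtained above. The second shows that a matrix with 82% overall accuracy can have a noticeably lower kappa (about 0.70 here), because kappa discounts the agreement expected from the marginal totals alone.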

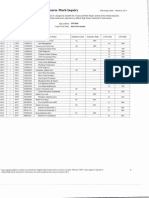

Table 8.4 Confusion or error matrix for six classes. The row labels (Ref.) are those given by an operator using ground reference data. The column labels (Class.) are those generated by the classification procedure. See text for explanation. The four right-hand columns are as follows: (i) number of pixels in class from ground reference data; (ii) estimated classification accuracy (per cent); (iii) class i pixels in reference data but not given label i by classifier; and (iv) pixels given label i by classifier but not class i in reference data. The sum of the diagonal elements of the confusion matrix is 350, and the overall accuracy is therefore (350/410) × 100 = 85.4%.

[Table 8.4 body not recoverable from the source. Column sums for the six classes: 71, 72, 76, 67, 81, 43; total 410.]


A value of zero indicates agreement no better than would be expected by chance, while a value of 1.0 shows perfect agreement between the classifier output and the reference data. Monserud and Leemans (1992) suggest that a value of kappa of 0.75 or greater shows a 'very good to excellent' classifier performance, while a value of less than 0.4 is 'poor'. However, these guidelines are only valid when the assumption that the data are randomly sampled from a multinomial distribution, with a large sample size, is met.

Values of kappa are often cited when classifications are compared. If these classifications refer to different procedures (such as maximum likelihood and artificial neural networks) applied to the same data set, then comparisons of kappa values are acceptable, though the percentage accuracy (overall and for each class) provides as much, if not more, information. If the two classifications have different numbers of categories then it is not clear whether a straightforward logical comparison is valid. It is hard to see what additional information is provided by kappa over and above that given by a straightforward calculation of percentage accuracy. See Congalton (1991), Kalkhan et al. (1997), Stehman (1997) and Zhuang et al. (1995).

The confusion matrix procedure stands or falls by the availability of a test sample of pixels for each of the k classes. The use of training-class pixels for this purpose is dubious and is not recommended: one cannot logically train and test a procedure using the same data set. A separate set of test pixels should be used for the calculation of classification accuracy. Users of the method should be cautious in interpreting the results if the ground data from which the test pixels were identified were not collected on the same date as the remotely-sensed image, for crops can be harvested or forests cleared. Other problems may arise as a result of differences in scale between test and training data and the image pixels being classified. So far as is possible, the test pixel labels should adequately represent reality.

The literal interpretation of accuracy measures derived from a confusion matrix can lead to error. Would the same level of accuracy have been achieved if a different test sample of pixels had been used? Figure 8.20 shows an extract from a hypothetical classified image and the corresponding ground reference data. If the section outlined by the solid line in Figure 8.20(a) had been selected as test data the user would infer that the classification accuracy was 100%, whereas if the area outlined by the dashed line had been selected then the accuracy would appear to be 75%. For a given spectral class there are a very large number of possible configurations of test data and each might give a different accuracy statistic. It is likely that the distribution of accuracy values could be summarised by a conventional probability distribution, for example the hypergeometric distribution, which describes a situation in which there are two outcomes to an experiment, labelled P (successful) and Q (failure), and where samples are drawn from a population of finite size. If the population being sampled is large the binomial distribution (which is easier to calculate) can be used in place of the hypergeometric distribution. These statistical distributions allow the evaluation of confidence limits, which can be interpreted as follows: if a very large number of samples of size N are taken and if the true proportion of successful outcomes is $P_0$, then 95% of all the sample values will lie between $P_L$ and $P_U$ (the lower and upper 95% confidence limits around $P_0$). The values of the upper and lower confidence limits depend on (i) the level of probability employed and (ii) the sample size N. The confidence limits get wider as the probability level increases towards 100% so that we can always say that the 100% confidence limits range from minus infinity to plus infinity. Confidence limits also get wider as the sample size N becomes smaller, which is self-evident.

Figure 8.20 Cover type categories derived from (a) ground reference data and (b) automatic image classifier. The choice of sample locations (solid or dashed lines in (a)) will influence the outcome of accuracy assessment measures.
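The dependence of the confidence limits on sample size can be illustrated with a short Python sketch based on the binomial distribution. The function names and the assumed true accuracy of 80% are hypothetical, and the sketch assumes a population large enough for the binomial to stand in for the hypergeometric distribution. It finds the range of correctly labelled pixel counts that leaves at most 2.5% of the probability in each tail.

```python
from math import comb


def binomial_pmf(k, n, p):
    """Probability of exactly k correctly labelled pixels out of n when the true accuracy is p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def central_interval(n, p, level=0.95):
    """Counts (k_lo, k_hi) leaving at most (1 - level) / 2 probability in each tail."""
    tail = (1.0 - level) / 2.0
    cumulative, k_lo = 0.0, 0
    for k in range(n + 1):  # accumulate probability from the lower end
        cumulative += binomial_pmf(k, n, p)
        if cumulative > tail:
            k_lo = k
            break
    cumulative, k_hi = 0.0, n
    for k in range(n, -1, -1):  # accumulate probability from the upper end
        cumulative += binomial_pmf(k, n, p)
        if cumulative > tail:
            k_hi = k
            break
    return k_lo, k_hi


for n in (480, 80):
    lo, hi = central_interval(n, 0.8)
    print(n, 100.0 * lo / n, 100.0 * hi / n)  # limits as percentage accuracy
```

Expressed as percentages, the limits for the sample of 80 pixels are clearly wider than those for the sample of 480 pixels, which is the behaviour described in the text.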

Jensen (1986, p. 228) provides a formula for the calculation of the lower confidence limit associated with a classification accuracy value obtained from a training sample of N pixels. The formula used to determine the required r% lower confidence limit s, given the values of P, Q and N, is:

$$s = P - \left[ z \sqrt{\frac{PQ}{N}} + \frac{50}{N} \right]$$

where z is the (100 − r)/100th point of the standard normal distribution. Thus, if r equals 95% then the z value required will be that having a probability of (100 − 95)/100 or 0.05 under the standard normal curve. The tabled z value for this point is z = 1.645. If r were 99% then z would be 2.326. To illustrate the procedure assume that, of 480 test pixels, 381 were correctly classified, giving an apparent classification accuracy (P) of 79.375%. Q is therefore (100 − 79.375) = 20.625%. If the lower 95% confidence limit was required then z would equal 1.645 and

$$s = 79.375 - \left[ 1.645 \sqrt{\frac{79.375 \times 20.625}{480}} + \frac{50}{480} \right] = 79.375 - [1.645 \times 1.847 + 0.104] = 76.233\%$$

This result indicates that, in the long run, 95% of training samples with observed accuracies of 79.375% will have true accuracies of 76.233% or greater. As mentioned earlier, the size of the training sample influences the confidence limit. If the training sample in the above example had been composed of 80 rather than 480 pixels then the lower 95% confidence limit would be

$$s = 79.375 - \left[ 1.645 \sqrt{\frac{79.375 \times 20.625}{80}} + \frac{50}{80} \right] = 71.308\%$$
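The lower confidence limit calculation is easy to reproduce in Python. The sketch below assumes the formula has the form s = P − [z√(PQ/N) + 50/N], with P and Q in per cent, which is the form implied by the intermediate figures in the worked example. The function name is hypothetical.

```python
from math import sqrt


def lower_confidence_limit(p, n, z=1.645):
    """Lower confidence limit (%) for an observed accuracy p (%) from n pixels.

    z is the one-tailed standard normal value (1.645 for the 95% level).
    """
    q = 100.0 - p
    return p - (z * sqrt(p * q / n) + 50.0 / n)


p = 100.0 * 381 / 480  # 79.375% apparent accuracy, as in the text
print(lower_confidence_limit(p, 480))  # about 76.2
print(lower_confidence_limit(p, 80))   # about 71.3
```

Reducing the sample from 480 to 80 pixels lowers the limit by roughly five percentage points even though the observed accuracy is unchanged.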

This procedure can also be applied to individual classes in the same way as described above with the exception that P is the percentage of pixels correctly assigned to class j from a test sample of $N_j$ pixels.

The confusion matrix can be used to assess the nature of erroneous labels besides allowing the calculation of classification accuracy. Errors of omission are committed when patterns that are really class i become labelled as members of some other class, whereas errors of commission occur when pixels that are really members of some other class become labelled as members of class i. Table 8.4 shows how these error rates are calculated. From these error rates the user may be able to identify the main sources of classification error and alter his or her strategy appropriately. Congalton et al. (1983), Congalton (1991) and Story and Congalton (1986) give more advanced reviews of this topic.
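Errors of omission and commission correspond to the off-diagonal parts of the row and column totals, as in columns (iii) and (iv) of Table 8.4. A minimal Python sketch follows; the function name and the 3-class matrix are hypothetical, and rows are assumed to hold reference labels and columns classifier labels.

```python
def omission_commission(matrix):
    """Return (omitted, committed) pixel counts for each class.

    omitted[i]:   class i reference pixels given some other label (row total minus diagonal).
    committed[i]: pixels labelled i that belong to another class (column total minus diagonal).
    """
    size = len(matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(col) for col in zip(*matrix)]
    omitted = [row_totals[i] - matrix[i][i] for i in range(size)]
    committed = [col_totals[i] - matrix[i][i] for i in range(size)]
    return omitted, committed


# Hypothetical confusion matrix (rows: reference classes, columns: classifier output).
example = [
    [45, 3, 2],
    [4, 30, 6],
    [1, 2, 7],
]
omitted, committed = omission_commission(example)
print(omitted)    # pixels lost from each reference class
print(committed)  # pixels wrongly gained by each classifier label
```

Every misclassified pixel is an omission from one class and a commission to another, so the two lists always sum to the same total.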

How to calculate the accuracy of a fuzzy classification might appear to be a difficult topic; refer to Gopal and Woodcock (1994) and Foody and Arora (1996). Burrough and Frank (1996) consider the more general problem of fuzzy geographical boundaries. The question of estimating area from classified remotely-sensed images is discussed by Canters (1997) with reference to fuzzy methods. Dymond (1992) provides a formula to calculate the root-mean-square error of this area estimate for 'hard' classifications (see also Lawrence and Ripple, 1996). Czaplewski (1992) discusses the effect of misclassification on areal estimates derived from remotely-sensed data, and Fitzgerald and Lees (1994) examine classification accuracy of multisource remote sensing data.

The use of single summary statistics to describe the degree of association between the spatial distribution of class labels generated by a classification algorithm and the corresponding distribution of the true (but unknown) ground cover types is rather simplistic. First, these statistics tell us nothing about the spatial pattern of agreement or disagreement. An accuracy level of 50% for a particular class would be achieved if all the test pixels in the upper half of the image were correctly classified and all those in the lower half of the image were incorrectly classified, assuming an equal number of test pixels in both halves of the image. The same degree of accuracy would be computed if the pixels in agreement (and disagreement) were randomly distributed over the image area. Secondly, statements of 'overall accuracy' levels can hide a multitude of sins. For example, a small number of generalised classes will usually be identified more accurately than would a larger number of more specific classes, especially if one of the general classes is 'water'. Thirdly, a number of researchers appear to use the same pixels to train and to test a supervised classification. This practice is illogical and cannot provide much information other than a measure of the 'purity' of the training classes. More thought should perhaps be given to the use of measures of confidence in pixel labelling. It is more useful and interesting to state that the analyst assigns label x to a pixel, with the probability of correct labelling being y, especially if this information can be presented in quasi-map form. A possible measure might be the relationship between the first and second highest membership grades output by a fuzzy classifier. The use of ground data to test the output from a classifier is, of course, necessary. It is not always sufficient, however, as a description or summary of the value or validity of the classification output.
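One way to express the relationship between the first and second highest membership grades is their difference. The Python sketch below is only one possible reading of that suggestion. The function name, the choice of a simple difference and the grade values are all assumptions made for illustration.

```python
def label_confidence(grades):
    """Return (label, margin) for one pixel's membership grades.

    label is the index of the highest grade; margin is the highest grade minus
    the second highest, so a small margin flags an uncertain label.
    """
    ranked = sorted(range(len(grades)), key=lambda c: grades[c], reverse=True)
    best, runner_up = ranked[0], ranked[1]
    return best, grades[best] - grades[runner_up]


# Hypothetical membership grades for two pixels and three classes.
print(label_confidence([0.1, 0.7, 0.2]))     # clear-cut label, large margin
print(label_confidence([0.45, 0.15, 0.40]))  # ambiguous label, small margin
```

Computing the margin for every pixel would give an image of labelling confidence that could be displayed alongside the classified image.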


8.11 SUMMARY

Compared to other chapters of this book, this chapter shows the greatest increase in size relative to the 1987 edition. To some extent this is a reflection of the author's own interests. However, the developments in classification methodology over the past 10 years have been considerable, and the problem has been what to omit. The introduction of artificial neural net classifiers, fuzzy methods, new techniques for computing texture features, and new models of spatial context have all occurred during the past decade. This chapter has hardly scratched the surface, and readers are encouraged to follow up the references provided at various points. I have deliberately avoided providing potted summaries of each paper or book to which reference is made in order to encourage readers to spend some of their time in the library. However, 'learning by doing' is always to be encouraged. The CD supplied with this book contains some programs for image classification. These programs are intended to provide the reader with an easy way into image classification. More elaborate software is required if methods such as artificial neural networks, evidential reasoning and fuzzy classification procedures are to be used. It is important, however, to acquire familiarity with the established methods of image classification before becoming involved in advanced methods and applications.

Despite the efforts of geographers following in the footsteps of Alexander von Humboldt over the past 150 years, we are still a long way from being able to state with any acceptable degree of accuracy the proportion of the Earth's land surface that is occupied by different cover types. At a regional scale, there is a continuing need to observe deforestation and other types of land cover change, and to monitor the extent and productivity of agricultural crops. More reliable, automatic methods of image classification are needed if answers to these problems are to be provided in an efficient manner. New [...] becoming available. The early years of the new millennium will see a very considerable increase in the volumes of Earth observation data being collected from space platforms, and much greater computer power (with intelligent software) will be needed if the maximum value is to be obtained from these data. An integrated approach to geographical data analysis is now being adopted, and this is having a significant effect on the way image classification is performed. The use of non-remotely-sensed data in the image classification process is providing the possibility of greater accuracy, while, in turn, the greater reliability of image-based products is improving the capabilities of environmental GIS, particularly with respect to studies of temporal change.

All of these factors will present challenges to the remote sensing and GIS communities, and the focus of research will move away from specialised algorithm development to the search for methods that satisfy user needs and are broader in scope than the statistically based methods of the 1980s, which are still widely used in commercial GIS and image processing packages. If progress is to be made then high-quality interdisciplinary work is needed, involving mathematicians, statisticians, computer scientists and engineers as well as Earth scientists and geographers. The future has never looked brighter for researchers in this fascinating and challenging area.

8.12 QUESTIONS

1. Explain the following terms: labelling, classification, clustering, unsupervised, supervised, pattern, feature, pattern recognition, Euclidean space, per-pixel, per-field, texture, context, divergence, decision rule, spatial autocorrelation, prior probability, neuron, feed-forward, multi-layer perceptron, steepest descent, geostatistics, variogram, image segmentation, GLCM, fractal dimension, kappa.

2. What is meant by the term 'feature space'? How can you measure similarities between points (representing objects to be classified) in a feature space of n dimensions, where n > 3?

3. Compare the operation of the k-means and ISODATA unsupervised classifiers. Use the programs k-means and isodata (described in Appendix B) to carry out two unsupervised classifications of one of the test images on the CD. Summarise your experiences in note form.

4. The parallelepiped, supervised k-means and maximum likelihood classifiers are described as parametric. Explain. These three classifiers use, respectively, the extreme pixel values in each band, the mean pixel
