Configure NFS NaviManager

Transféré par

mrstranger1981Copyright

Formats disponibles

Partager ce document

Partager ou intégrer le document

Avez-vous trouvé ce document utile ?

Ce contenu est-il inapproprié ?

Signaler ce documentDroits d'auteur :

Formats disponibles

Configure NFS NaviManager

Transféré par

mrstranger1981Droits d'auteur :

Formats disponibles

Create an NFS export

This procedure explains how to create a Network File System (NFS) export on your Celerra system. The Celerra system is a multiprotocol machine that can provide access to data through the NFS protocol for file sharing in network environments.

The NFS protocol enables the Celerra Network Server to assume the functions of an NFS server. NFS environments typically include:

- Native UNIX clients
- Linux clients
- Windows systems configured with third-party applications that provide NFS client services

This document covers the following topics:

- Overview
- Pre-implementation tasks
- Implementation worksheets
- Connect external network cables
- Configure storage for a Fibre Channel enabled system
- Configure the network
- Create a file system
- Delete the NFS export created during startup
- Create NFS exports
- Configure hosts
- Configure and test standby relationships
- Appendix


Overview

This section contains an overview of the NFS implementation procedure and the host requirements for NFS implementation.

Procedure overview

To create an NFS export, you must perform the following tasks:

1. Verify that you have performed the pre-implementation tasks:
   - Create a Powerlink account.
   - Register your Celerra with EMC or your service provider.
   - Install Navisphere Service Taskbar (NST).
   - Add additional disk array enclosures (DAEs) using the NST (not available for NX4).

2. Complete the implementation worksheets.

3. Cable additional Celerra ports to your network system.

4. Configure unused or new disks with Navisphere Manager.

5. Configure your network by creating a new interface to access the

Celerra storage from a host or workstation.

6. Create a file system using a system-defined storage pool.

7. Delete the NFS export created during startup.

8. Create an NFS export from the file system.

9. Configure host access to the NFS export.

10. Configure and test standby relationships.

Host requirements for NFS

Software

Celerra Network Server version 5.6.

For secure NFS using UNIX or Linux-based Kerberos:

- Sun Enterprise Authentication Mechanism (SEAM) software or Linux KDC running Kerberos version 5

Note: KDCs from other UNIX systems have not been tested.

For secure NFS using Windows-based Kerberos:

- Windows 2000 or Windows Server 2003 domain

To use secure NFS, the client computer must be running:

- SunOS version 5.8 or later (Solaris 10 for NFSv4)
- Linux kernel 2.4 or later (2.6.12 + NFSv4 patches for NFSv4)
- Hummingbird Maestro version 7 or later (EMC recommends version 8); version 9 for NFSv4
- AIX 5.3 ML3

Note: Other clients have not been tested.

- DNS (Domain Name System)
- NTP (Network Time Protocol) server

Note: Windows environments require that you configure Celerra in the Active Directory.

Hardware

No specific hardware requirements

Network

No specific network requirements

Storage

No specific storage requirements


Pre-implementation tasks

Before you begin this NFS implementation procedure, ensure that you have completed the following tasks.

Create a Powerlink account

You can create a Powerlink account at http://Powerlink.EMC.com. Use this website to access additional EMC resources, including documentation, release notes, software updates, information about EMC products, licensing, and service.

Register your system with EMC

If you did not register your Celerra at the completion of the Celerra Startup Assistant, you can do so now by downloading the Registration wizard from Powerlink. The Registration wizard can also be found on the Applications and Tools CD that was shipped with your system.

Registering your Celerra ensures that EMC Customer Support has all pertinent system and site information so they can properly assist you.

Download and install the Navisphere Service Taskbar (NST)

The NST is available for download from the CLARiiON Tools page on Powerlink and on the Applications and Tools CD that was shipped with your system.

Add additional disk array enclosures

Use the NST to add new disk array enclosures (DAEs) to fully implement your Celerra (not available for NX4).

Implementation worksheets

Before you begin this implementation procedure, take a moment to fill out the following implementation worksheets with the values of the various devices you will need to create.

Create interface worksheet

The New Network Interface wizard configures individual network interfaces for the Data Movers. It can also create virtual network devices: Link Aggregation, Fail-Safe Network, or Ethernet Channel. Use Table 1 to complete the New Network Interface wizard. You will need the following information:

Does the network use variable-length subnets? Yes / No

Note: If the network uses variable-length subnets, be sure to use the correct subnet mask. Do not assume 255.255.255.0 or other common values.

Table 1. Create interface worksheet

Data Mover number ____________________________________________
Device name or virtual device name ___________________________
IP address ___________________________________________________
Netmask ______________________________________________________
Maximum Transmission Unit (MTU) (optional) ___________________
Virtual LAN (VLAN) identifier (optional) _____________________
Devices (optional) ___________________________________________
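The subnet mask caution above can be checked before the values are written into the worksheet. The following is a minimal sketch that uses only Python's standard `ipaddress` module; the function name `describe_interface` and the sample address are hypothetical and are not part of any Celerra tool.

```python
import ipaddress


def describe_interface(ip: str, netmask: str) -> dict:
    """Validate an IP address/netmask pair and report the subnet it implies.

    Raises ValueError if the address or the mask is malformed
    (for example, a non-contiguous mask such as 255.0.255.0).
    """
    iface = ipaddress.IPv4Interface(f"{ip}/{netmask}")
    network = iface.network
    return {
        "network": str(network.network_address),
        "prefix_length": network.prefixlen,
        "broadcast": str(network.broadcast_address),
    }


# Hypothetical worksheet values: a variable-length subnet, not 255.255.255.0.
info = describe_interface("192.168.10.70", "255.255.255.192")
print(info)
```

Running the check on every worksheet row before starting the wizard makes it easier to spot a mask that was copied from a different subnet.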

Create file system worksheet

The Create File System step creates a file system on a Data Mover.

This step can be repeated as needed to create additional file systems.

Read/Write Data Mover: server_2 server_3

Volume Management: Automatic (recommended)

Storage Pool for Automatic Volume Management:

CLARiiON RAID 1 (Not available for NX4)

CLARiiON RAID 5 Performance

CLARiiON RAID 5 Economy

CLARiiON RAID 1/0

CLARiiON RAID 6

File System Name ____________________________________________

File System Size (megabytes) __________________________________

Use Default User and Group Quotas: Yes / No

Hard Limit for User Storage (megabytes) ____________________

Soft Limit for User Storage (megabytes) _____________________

Hard Limit for User Files (files)_____________________________

Soft Limit for User Files (files) _____________________________

Hard Limit for Group Storage (megabytes) __________________

Soft Limit for Group Storage (megabytes) ___________________

Hard Limit for Group Files (files) ___________________________

Soft Limit for Group Files (files) ____________________________

Enforce Hard Limits: Yes / No

Grace Period for Storage (days)_____________________________

Grace Period for Files (days) _______________________________

NFS export worksheet

NFS export pathname (for example: /test_fs/): __________________________________________________

IP address of client computer: ______________________________

When you have completed the implementation worksheets, go to Connect external network cables.

Connect external network cables

If you have not already done so, you will need to connect the desired blade network ports to your network system.

This section covers the following topics:

- Connecting the 1U X-blade network ports
- Connecting the 3U X-blade I/O module network ports

Connecting the 1U X-blade network ports

The Celerra NS20 and NS40 integrated systems and the NX4, NS-120,

and NS-480 unified storage systems have 1U blade enclosures. There

are three possible X-blades available to fill the 1U blade enclosure,

depending on the Celerra:

4-port copper Ethernet X-blade

2-port copper Ethernet and 2-port optical 1 GbE X-blade

2-port copper Ethernet and 2-port optical 10 GbE X-blade

To connect the desired blade network ports to your network system,

follow these guidelines depending on your specific blade

configuration.

Figure 1 shows the 4-port copper Ethernet X-blade. This X-blade is available to the NX4, NS20, NS40, NS-120, or NS-480. It has four copper Ethernet ports, labeled cge0-cge3, available for connections to the network system. Cable these ports as desired.

Figure 1. 4-port copper Ethernet X-blade (labels shown: internal management module, cge0, cge1, cge2, cge3, Com 1, Com 2, BE 0, BE 1, AUX 0, AUX 1)

Figure 2 shows the 2-port copper Ethernet and 2-port optical 1 Gb Ethernet X-blade. This X-blade is available to the NS40 or NS-480. It has four ports available for connections to the network system: cge0-cge1 (copper Ethernet ports) and fge0-fge1 (optical 1 GbE ports). Cable these ports as desired.

Figure 2. 2-port copper Ethernet and 2-port optical 1 GbE X-blade (labels shown: internal management module, fge0, fge1, cge0, cge1, Com 1, Com 2, BE 0, BE 1, AUX 0, AUX 1)

Figure 3 shows the 2-port copper Ethernet and 2-port optical 10 Gb Ethernet X-blade. This X-blade is available to the NX4, NS-120, or NS-480. It has four ports available for connections to the network system: cge0-cge1 (copper Ethernet ports) and fxg0-fxg1 (optical 10 GbE ports). Cable these ports as desired.

Figure 3. 2-port copper Ethernet and 2-port optical 10 GbE X-blade (labels shown: internal management module, fxg0, fxg1, cge0, cge1, Com 1, Com 2, BE 0, BE 1, AUX 0, AUX 1)

Connecting the 3U X-blade I/O module network ports

Currently, the Celerra NS-960 is the only unified storage system with a 3U blade enclosure. The 3U blade enclosure uses different I/O modules for personalized blade configurations. To connect the desired blade I/O module network ports to your network system, follow these guidelines based on your specific I/O module configuration.

In the four-port Fibre Channel I/O module located in slot 0, the first two ports, port 0 (BE 0) and port 1 (BE 1), connect to the array. The next two ports, port 2 (AUX 0) and port 3 (AUX 1), optionally connect to tape backup. Do not use these ports for connections to the network system.

The other I/O modules appear in various I/O slot positions (represented by x, the I/O slot ID) to create the supported blade options. The ports in these I/O modules are available to connect to the network system.

- The four-port copper I/O module has four copper-wire Gigabit Ethernet (GbE) ports, 0 to 3 (logically, cge-x-0 to cge-x-3). Cable these ports as desired.
- The two-port GbE copper and two-port GbE optical I/O module has four ports, 0 to 3 (logically, cge-x-0, cge-x-1, fge-x-0, and fge-x-1). Cable these ports as desired.
- The one-port 10-GbE I/O module has one port, 0 (logically, fxg-x-0). Cable this port as desired.

Note: The x in the port names above is a variable representing the slot position of the I/O module. Thus, for a four-port copper I/O module in slot 2, the ports would be cge-2-0 to cge-2-3.
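The naming rule in the note above can be expressed as a short helper for building a cabling list. This is only an illustrative sketch: the module labels used as dictionary keys and the function name `logical_port_names` are hypothetical, and only the port-name patterns come from the list above.

```python
from typing import Dict, List

# Port-name prefixes for each I/O module type, in physical port order (0 upward).
# The dictionary keys are hypothetical labels chosen for this sketch.
MODULE_PORTS: Dict[str, List[str]] = {
    "4-port copper": ["cge", "cge", "cge", "cge"],
    "2-port copper + 2-port optical GbE": ["cge", "cge", "fge", "fge"],
    "1-port 10 GbE": ["fxg"],
}


def logical_port_names(module_type: str, slot: int) -> List[str]:
    """Return the logical port names for an I/O module in the given slot."""
    names = []
    counters: Dict[str, int] = {}
    for prefix in MODULE_PORTS[module_type]:
        # Each prefix (cge, fge, fxg) is numbered independently, starting at 0.
        index = counters.get(prefix, 0)
        names.append(f"{prefix}-{slot}-{index}")
        counters[prefix] = index + 1
    return names


print(logical_port_names("4-port copper", 2))
```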

Any advanced configuration of the external network ports is beyond the scope of this implementation procedure. For more information about the many network configuration options the Celerra system supports, such as Ethernet channels, link aggregation, and FSNs, refer to the Configuring and Managing Celerra Networking and Configuring and Managing Celerra Network High Availability technical modules.

When you have finished Connect external network cables, go to Configure storage for a Fibre Channel enabled system.

Configure storage for a Fibre Channel enabled system

There are two ways to configure additional storage in a Fibre

Channel enabled system. This section details how to create additional

storage for a Fibre Channel enabled system using Navisphere

Manager.

Configure storage with Navisphere Manager

Configure storage with Navisphere Manager by doing the following:

1. Open an Internet browser: Microsoft Internet Explorer or Mozilla

Firefox.

2. In the browser window, enter the IP address of a storage

processor (SP) that has the most recent version of the FLARE

Operating Environment (OE) installed.

Note: If you do not have a supported version of the JRE installed, you

will be directed to the Sun website where you can select a supported

version to download.

3. Enter the Navisphere Username and Password in the login

prompt, as shown in Figure 4 on page 11.

Note: The default values are username nasadmin and password

nasadmin.

If you changed the Control Station password during the Celerra Startup

Assistant, the Celerra credentials were changed to use those same

passwords. You must use the new password for your Navisphere

credentials.

Figure 4. Navisphere Manager login

4. In Storage Domain, expand the backend storage array, and then

right-click RAID Groups and select Create RAID Group to

create a RAID group for the user LUNs you would like to create,

as shown in Figure 5 on page 12.

Figure 5. Navisphere Manager tree

5. Enter the RAID Group properties and click Apply to create the

new RAID group, as shown in an example in Figure 6 on page 13.

The RAID Group Parameter values should be applicable to

your system.

For more information, see NAS Support Matrix on

http://Powerlink.EMC.com.

6. To complete the previous step, click Yes to confirm the operation,

click OK for the Success dialog box, and close (or click Cancel)

the Create RAID Group window.

Figure 6. Create RAID Group screen

7. Right-click the newly created RAID Group and select Bind LUN

to create a new LUN in the new RAID Group, as shown in the

example in Figure 7 on page 14.

8. Enter the new LUN(s) properties and click Apply.

Note: The LUN ID must be greater than or equal to 16 if the LUN is to be

managed with Celerra Manager.

Figure 7. Bind LUN screen

Listed below are recommended best practices:

- Always create user LUNs in balanced pairs: one owned by SP A and one owned by SP B. The paired LUNs must be equal in size. Selecting the Auto radio button under Default Owner automatically assigns and balances the pairs.
- For Fibre Channel disks, the paired LUNs do not have to be in the same RAID group. For RAID 5 on Fibre Channel disks, the RAID group must use five or nine disks. A RAID 1 group always uses two disks.
- For ATA disks, all LUNs in a RAID group must belong to the same SP. The Celerra system will stripe across pairs of disks from different RAID groups. ATA disks can be configured as RAID 5 with seven disks or RAID 3 with five or nine disks.
- Use the same LUN ID number for the Host ID number.

We recommend the following settings when creating the user LUNs:

- RAID Type: RAID 5 or RAID 1 for FC disks, and RAID 5 or RAID 3 for ATA disks
- LUN ID: Select the first available value greater than or equal to 16
- Element Size: 128
- Rebuild Priority: ASAP
- Verify Priority: ASAP
- Enable Read Cache: Selected
- Enable Write Cache: Selected
- Enable Auto Assign: Unmarked (off)
- Number of LUNs to Bind: 2
- Alignment Offset: 0
- LUN Size: Must not exceed 2 TB

Note: If you are creating 4+1 RAID 3 LUNs, the Number of LUNs to Bind value should be 1.

9. To complete the previous step, click Yes to confirm the operation, click OK for the Success dialog box, and close (or click Cancel) the Bind LUN window.

10. To add LUNs to the Celerra storage group, expand Storage

Groups, right-click the Celerra storage group, and select Select

LUNs.

Figure 8. Select LUNs screen

11. Maximize the Storage Group Properties window. In the Available LUNs section, select the new LUN(s) by expanding the navigation trees beneath SP A and SP B and clicking the check box(es) for the new LUNs, as shown in the example in Figure 8.

Clicking the check box(es) causes the new LUN(s) to appear in the Selected LUNs section.

Note that in the Selected LUNs section, the Host ID for the new LUN(s) is blank.


WARNING

Do not uncheck LUNs that are already checked in the Available

LUNs section when you open the dialog box. Unchecking these LUNs

will render your system inoperable.

12. In the Selected LUNs section, click in the white space in the Host

ID column and select a Host ID greater than 15 for each new

LUN to be added to the Celerra storage group. Click OK to add

the new LUN(s) to the Celerra storage group, as shown in

Figure 9 on page 18.

WARNING

The Host ID must be greater than or equal to 16 for user LUNs. An

incorrect Host ID value can cause serious problems.

13. To complete the previous step, click Yes to confirm the operation

to add LUNs to the storage group, and click OK for the Success

dialog box.

Figure 9. Selecting LUNs host ID screen

14. To make the new LUN(s) available to the Celerra system, use Celerra Manager. Open Celerra Manager using the following URL:

https://<control_station>

where <control_station> is the hostname or IP address of the

Control Station.

15. If a security alert appears about the system's security certificate, click Yes to proceed.

16. At the login prompt, log in as user root. The default password is nasadmin.

17. If a security warning appears about the system's security certificate being issued by an untrusted source, click Yes to accept the certificate.

18. If a warning about a hostname mismatch appears, click Yes.

19. On the Celerra > Storage Systems page, click Rescan, as shown

in Figure 10.

Figure 10. Rescan Storage System in Celerra Manager

CAUTION

Do not change the host LUN identifier of the Celerra LUNs after

rescanning. This may cause data loss or unavailability.

The user LUNs are now available for the Celerra system. When you have finished Configure storage for a Fibre Channel enabled system, go to Configure the network.
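The LUN rules given in this section (LUN IDs and Host IDs of 16 or greater, a Host ID that matches the LUN ID, a 2 TB size limit, and balanced SP A/SP B pairs of equal size) can be checked on paper before any LUN is bound. The sketch below is one hypothetical way to do that in Python. The `PlannedLun` class and `check_lun_plan` function are illustrative names, sizes are assumed to be recorded in gigabytes, and 2 TB is assumed to mean 2048 GB; nothing here calls Navisphere or Celerra.

```python
from dataclasses import dataclass
from typing import List

MIN_USER_LUN_ID = 16      # LUN ID and Host ID must be >= 16 for user LUNs
MAX_LUN_SIZE_GB = 2048    # assumption: the 2 TB limit expressed as 2048 GB


@dataclass
class PlannedLun:
    lun_id: int
    host_id: int
    owner: str     # owning storage processor: "A" or "B"
    size_gb: int


def check_lun_plan(luns: List[PlannedLun]) -> List[str]:
    """Return a list of rule violations; an empty list means the plan passes."""
    problems = []
    for lun in luns:
        if lun.lun_id < MIN_USER_LUN_ID:
            problems.append(f"LUN {lun.lun_id}: LUN ID is below {MIN_USER_LUN_ID}")
        if lun.host_id < MIN_USER_LUN_ID:
            problems.append(f"LUN {lun.lun_id}: Host ID is below {MIN_USER_LUN_ID}")
        if lun.host_id != lun.lun_id:
            problems.append(f"LUN {lun.lun_id}: Host ID differs from LUN ID")
        if lun.size_gb > MAX_LUN_SIZE_GB:
            problems.append(f"LUN {lun.lun_id}: size exceeds 2 TB")
    # Balanced pairs: every SP A LUN needs an SP B LUN of the same size.
    sizes_a = sorted(lun.size_gb for lun in luns if lun.owner == "A")
    sizes_b = sorted(lun.size_gb for lun in luns if lun.owner == "B")
    if sizes_a != sizes_b:
        problems.append("LUNs are not in balanced, equal-size SP A/SP B pairs")
    return problems


# Hypothetical plan: one balanced pair of 500 GB LUNs.
plan = [PlannedLun(16, 16, "A", 500), PlannedLun(17, 17, "B", 500)]
print(check_lun_plan(plan))
```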


Configure the network

Using Celerra Manager, you can create interfaces on devices that are

not part of a virtual device. Host or workstation access to the Celerra

storage is configured by creating a network interface.

Note: You cannot create a new interface for a Data Mover while the Data

Mover is failed over to its standby.

In Celerra Manager, configure a new network interface and device by

doing the following:

1. Log in to Celerra Manager as root.

2. Click Celerras > <Celerra_name> > Wizards.

3. Click New Network Interface wizard to set up a new network

interface. This wizard can also be used to create a new virtual

device, if desired.

Note: On the Select/Create a network device page, click Create Device to

create a new virtual network device. The new virtual device can be

configured with one of the following high-availability features: Ethernet

Channel, Link Aggregation, or Fail-Safe Network.

When you have completed Configure the network, go to Create a file system.

Create a file system

To create a new file system, do the following steps:

1. Go to Celerras > <Celerra_name> > File Systems tab in the left

navigation menu.

2. Click New at the bottom of the File Systems screen, as shown in

Figure 11.

Figure 11. File Systems screen

3. Select the Storage Pool radio button to select where the file system will be created from, as shown in Figure 12.

Figure 12. Create new file system screen

4. Name the file system.

5. Select the system-defined storage pool from the Storage Pool

drop-down menu.

Note: Based on the disks and the RAID types created in the storage system, different system-defined storage pools will appear in the storage pool list. For more information about system-defined storage pools, refer to Disk group and disk volume configurations in the Appendix.

6. Designate the Storage Capacity of the file system and select any

other desired options.


Other file system options are listed below:

Auto Extend Enabled: If enabled, the file system

automatically extends when the high water mark is reached.

Virtual Provisioning Enabled: This option can only be used

with automatic file system extension and together they let you

grow the file system as needed.

File-level Retention (FLR) Capability: If enabled, the file system is persistently marked as an FLR file system until it is deleted. File systems can be enabled with FLR capability only at creation time.

7. Click Create. The new file system will now appear on the File System screen, as shown in Figure 13.

Figure 13. File System screen with new file system

Delete the NFS export created during startup

You may have optionally created an NFS export using the Celerra Startup Assistant (CSA). If you have a minimum configuration of five or fewer disks, you can begin to use this export as a production export. If you have more than five disks, delete the NFS export created during startup:

1. To delete the NFS export created during startup and make the file system unavailable to NFS users on the network:

a. Go to Celerras > <Celerra_name> and click the NFS Exports tab.

b. Select one or more exports to delete, and click Delete. The Confirm Delete page appears.

c. Click OK.

When you have completed Delete the NFS export created during startup, go to Create NFS exports.

Create NFS exports

To create a new NFS export, do the following:

1. Go to Celerras > <Celerra_name> and click the NFS Exports tab.

2. Click New, as shown in Figure 14.

Figure 14. NFS Exports screen

3. Select a Data Mover that manages the file system from the Choose Data Mover drop-down list on the New NFS Export page, as shown in Figure 15.

Figure 15. New NFS Export screen

4. Select the file system or checkpoint that contains the directory to

export from the File System drop-down list.

The list displays the mount point for all file systems and

checkpoints mounted on the selected Data Mover.

Note: The Path field displays the mount point of the selected file system.

This entry exports the root of the file system. To export a subdirectory,

add the rest of the path to the string in the field.

You may also delete the contents of this box and enter a new, complete

path. This path must already exist.


5. Fill out the Host Access section by defining export permissions

for host access to the NFS export.

Note: The IP address with the subnet mask can be entered in dot (.)

notation, slash (/) notation, or hexadecimal format. Use colons to

separate multiple entries.

- The Host Access Read-only Export option grants read-only access to all hosts with access to this export, except for hosts given explicit read/write access on this page.
- The Read-only Hosts field grants read-only access to the export to the hostnames, IP addresses, netgroup names, or subnets listed in this field.
- The Read/write Hosts field grants read/write access to the export to the hostnames, IP addresses, netgroup names, or subnets listed in this field.

6. Click OK to create the export.

Note: If a file system is created using the command line interface (CLI), it

will not be displayed for an NFS export until it is mounted on a Data

Mover.

The new NFS export will now appear on the NFS export screen, as shown in Figure 16.

Figure 16. NFS export screen with new NFS export
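The Host Access fields described in step 5 take multiple entries separated by colons. As a rough illustration, the helper below assembles such a string from a list of hosts and subnets before it is pasted into the Read-only Hosts or Read/write Hosts field. The function name `build_host_field` is hypothetical, and the sketch assumes that any entry containing a slash is an IPv4 subnet; hostnames and netgroup names are passed through unchecked.

```python
import ipaddress
from typing import Iterable


def build_host_field(entries: Iterable[str]) -> str:
    """Join host entries with colons, the separator the export page expects."""
    cleaned = []
    for entry in entries:
        entry = entry.strip()
        if not entry:
            continue  # ignore blank worksheet rows
        if ":" in entry:
            raise ValueError(f"entry contains the separator character: {entry!r}")
        if "/" in entry:
            # Assumption: slash notation means an IPv4 subnet; this raises
            # ValueError for an impossible prefix or mask.
            ipaddress.IPv4Network(entry, strict=False)
        cleaned.append(entry)
    return ":".join(cleaned)


# Hypothetical entries: a hostname, a single client, and a subnet.
print(build_host_field(["host1", " 10.1.1.5 ", "10.1.2.0/24", ""]))
```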

When you have finished Create NFS exports, go to Configure hosts.

Configure hosts

To mount an NFS export you need the source, including the address

or the hostname of the server. You can collect these values from the

NFS export implementation worksheet. To use this new NFS export

on the network do the following:

1. Open a UNIX prompt on a client computer connected to the same subnet as the Celerra system. Use the values from the NFS export worksheet to complete this section.

2. Log in as root.

3. Enter the following command at the UNIX prompt to mount the

NFS export:

mount <data_mover_IP>:/<fs_export_name> /<mount_point>

4. Change directories to the new export by typing:

cd /<mount_point>

5. Confirm the amount of storage in the export by typing:

df /<mount_point>

For more information about NFS exports please refer to the

Configuring NFS on Celerra technical module found at

http://Powerlink.EMC.com.
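The mount and verification commands above can be assembled from the worksheet values so that stray slashes in the export path or mount point do not produce a malformed command. The sketch below only builds and prints the command lines; it does not run them. The function name `mount_commands` and the sample values are hypothetical.

```python
import shlex
from typing import List


def mount_commands(data_mover_ip: str, export_path: str, mount_point: str) -> List[List[str]]:
    """Build the mount and df commands from the NFS export worksheet values."""
    source = f"{data_mover_ip}:/{export_path.strip('/')}"
    target = "/" + mount_point.strip("/")
    return [
        ["mount", source, target],  # step 3: mount the export (run as root)
        ["df", target],             # step 5: confirm the amount of storage
    ]


# Hypothetical worksheet values.
for argv in mount_commands("192.168.10.70", "/test_fs/", "/mnt/celerra"):
    print(shlex.join(argv))
```

The mount point directory must already exist on the client before the printed mount command is run.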


Configure and test standby relationships

EMC recommends that multi-blade Celerra systems be configured

with a Primary/Standby blade failover configuration to ensure data

availability in the case of a blade (server/Data Mover) fault.

Creating a standby blade ensures continuous access to file systems on

the Celerra storage system. When a primary blade fails over to a

standby, the standby blade assumes the identity and functionality of

the failed blade and functions as the primary blade until the faulted

blade is healthy and manually failed back to functioning as the

primary blade.

Configure a standby relationship

A blade must first be configured as a standby for one or more

primary blades for that blade to function as a standby blade, when

required.

To configure a standby blade:

1. Determine the ideal blade failover configuration for the Celerra

system based on site requirements and EMC recommendations.

EMC recommends a minimum of one standby blade for up to

three Primary blades.

CAUTION

The standby blade(s) must have the same network capabilities (NICs and cables) as the primary blades with which they will be associated. This is because the standby blade will assume the faulted primary blade's network identity (NIC IP and MAC addresses), storage identity (controlled file systems), and service identity (controlled shares and exports).

2. Define the standby configuration using Celerra Manager

following the blade standby configuration recommendation:

a. Select <Celerra_name> > Data Movers >

<desired_primary_blade> from the left-hand navigation panel.

b. On the Data Mover Properties screen, configure the standby

blade for the selected primary blade by checking the box of the

desired Standby Mover and define the Failover Policy.

Figure 17. Configure a standby in Celerra Manager

Note: A failover policy is a predetermined action that the Control

Station invokes when it detects a blade failure based on the failover

policy type specified. It is recommended that the Failover Policy be

set to auto.

c. Click Apply.

Note: The blade configured as standby will now reboot.


d. Repeat for each primary blade in the Primary/Standby

configuration.
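The recommendation in step 1, a minimum of one standby blade for up to three primary blades, works out to rounding the number of primary blades divided by three up to a whole number. A small sketch of that arithmetic follows; the function name `minimum_standby_blades` is hypothetical, and site requirements may call for more standbys than this minimum.

```python
import math


def minimum_standby_blades(primary_blades: int, primaries_per_standby: int = 3) -> int:
    """Return the smallest standby count that covers the given primary blades."""
    if primary_blades < 0:
        raise ValueError("primary_blades must not be negative")
    return math.ceil(primary_blades / primaries_per_standby)


# One standby covers up to three primaries; a fourth primary needs a second standby.
for primaries in (1, 3, 4, 7):
    print(primaries, "primary blade(s) ->", minimum_standby_blades(primaries), "standby blade(s)")
```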

Test the standby configuration

It is recommended that the functionality of the blade failover

configuration be tested prior to the system going into production.

When a failover condition occurs, the Celerra is able to transfer functionality from the primary blade to the standby blade without disrupting file system availability.

For a standby blade to successfully stand in as a primary blade, the blades must have the same network connections (Ethernet and Fibre cables), network configurations (EtherChannel, Fail-Safe Network, High Availability, and so forth), and switch configuration (VLAN configuration, and so forth).

CAUTION

You must cable the failover blade identically to its primary blade. If configured network ports are left uncabled when a failover occurs, access to file systems will be disrupted.

To test the failover configuration, do the following:

1. Open an SSH session to the Control Station (CS) with an SSH client such as PuTTY.

2. Log in to the CS as nasadmin. Change to the root user by

entering the following command:

su root

Note: The default password for root is nasadmin.

3. Collect the current names and types of the system blades:

# nas_server -l

Sample output:

id   type  acl   slot  groupID  state  name
1    1     1000  2              0      server_2
2    4     1000  3              0      server_3

Note: In the command output above, the name column lists the names of the blades. Also, the type column designates the blade type as 1 (primary) or 4 (standby).
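When this check is repeated before and after a failover, it can help to reduce the listing to a name-to-role mapping. The sketch below parses text shaped like the sample output above; it assumes the blade name is always the last column and the type is the second column, and the function name `parse_nas_server_list` is hypothetical. It works on saved text only and does not run any Celerra command.

```python
from typing import Dict

# Type codes described in the note above.
BLADE_TYPES = {"1": "primary", "4": "standby"}


def parse_nas_server_list(output: str) -> Dict[str, str]:
    """Map each blade name to its role, based on the type column."""
    roles = {}
    for line in output.splitlines():
        fields = line.split()
        # Skip blank lines and the header row; data rows start with a numeric id.
        if len(fields) < 3 or not fields[0].isdigit():
            continue
        blade_type, name = fields[1], fields[-1]
        roles[name] = BLADE_TYPES.get(blade_type, f"type {blade_type}")
    return roles


SAMPLE = """\
id   type  acl   slot  groupID  state  name
1    1     1000  2              0      server_2
2    4     1000  3              0      server_3
"""

print(parse_nas_server_list(SAMPLE))
```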


4. After I/O traffic is running on the primary blade's network port(s), monitor this traffic by entering:

# server_netstat <server_name> -i

Example:

[nasadmin@rtpplat11cs0 ~]$ server_netstat server_2 -i

Name  Mtu   Ibytes    Ierror  Obytes   Oerror  PhysAddr
****************************************************************************
fxg0  9000  0         0       0        0       0:60:16:32:4a:30
fxg1  9000  0         0       0        0       0:60:16:32:4a:31
mge0  9000  851321    0       812531   0       0:60:16:2c:43:2
mge1  9000  28714095  0       1267209  0       0:60:16:2c:43:1
cge0  9000  614247    0       2022     0       0:60:16:2b:49:12
cge1  9000  0         0       0        0       0:60:16:2b:49:13

5. Manually force a graceful failover of the primary blade to the

standby blade by using the following command:

# server_standby <primary_blade> -activate mover

Example:

[nasadmin@rtpplat11cs0 ~]$ server_standby server_2 -activate mover

server_2 :

server_2 : going offline

server_3 : going active

replace in progress ...done

failover activity complete

commit in progress (not interruptible)...done

server_2 : renamed as server_2.faulted.server_3

server_3 : renamed as server_2

Note: This command renames the primary and standby blades. In the example above, server_2, the primary blade, was rebooted and renamed server_2.faulted.server_3, and server_3 was renamed server_2.

6. Verify that the failover has completed successfully by:

a. Checking that the blades have changed names and types:

# nas_server -l

Sample output:

id   type  acl   slot  groupID  state  name
1    1     1000  2              0      server_2.faulted.server_3
2    1     1000  3              0      server_2

Note: In the command output above, each blade's name has changed, and the type column designates both blades as type 1 (primary).

b. Checking that I/O traffic is flowing to the primary blade by entering:

# server_netstat <server_name> -i

Note: The primary blade, though physically a different blade, retains

the initial name.

Sample output:

[nasadmin@rtpplat11cs0 ~]$ server_netstat server_2 -i

Name  Mtu   Ibytes    Ierror  Obytes   Oerror  PhysAddr
****************************************************************************
fxg0  9000  0         0       0        0       0:60:16:32:4b:18
fxg1  9000  0         0       0        0       0:60:16:32:4b:19
mge0  9000  14390362  0       786537   0       0:60:16:2c:43:30
mge1  9000  16946     0       3256     0       0:60:16:2c:43:31
cge0  9000  415447    0       3251     0       0:60:16:2b:49:12
cge1  9000  0         0       0        0       0:60:16:2b:48:ad

Note: The hardware addresses in the PhysAddr column have changed, reflecting that the failover completed successfully.
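
The PhysAddr comparison in step 6b can also be done mechanically. The following Python sketch assumes that `server_netstat <server_name> -i` prints one row per interface with seven whitespace-separated columns (Name, Mtu, Ibytes, Ierror, Obytes, Oerror, PhysAddr), as in the sample output. The helper names are hypothetical, and the two text blocks are the sample values from this procedure, not live output.

```python
BEFORE = """\
fxg0 9000 0 0 0 0 0:60:16:32:4a:30
fxg1 9000 0 0 0 0 0:60:16:32:4a:31
mge0 9000 851321 0 812531 0 0:60:16:2c:43:2
mge1 9000 28714095 0 1267209 0 0:60:16:2c:43:1
cge0 9000 614247 0 2022 0 0:60:16:2b:49:12
cge1 9000 0 0 0 0 0:60:16:2b:49:13
"""

AFTER = """\
Name Mtu Ibytes Ierror Obytes Oerror PhysAddr
fxg0 9000 0 0 0 0 0:60:16:32:4b:18
fxg1 9000 0 0 0 0 0:60:16:32:4b:19
mge0 9000 14390362 0 786537 0 0:60:16:2c:43:30
mge1 9000 16946 0 3256 0 0:60:16:2c:43:31
cge0 9000 415447 0 3251 0 0:60:16:2b:49:12
cge1 9000 0 0 0 0 0:60:16:2b:48:ad
"""


def parse_phys_addrs(output):
    """Map interface name -> PhysAddr for every seven-column data row."""
    addrs = {}
    for line in output.splitlines():
        fields = line.split()
        # Skip headers and separator lines: data rows have a numeric Mtu.
        if len(fields) == 7 and fields[1].isdigit():
            addrs[fields[0]] = fields[6]
    return addrs


def changed_interfaces(before, after):
    """Return the sorted names of interfaces whose PhysAddr differs."""
    old, new = parse_phys_addrs(before), parse_phys_addrs(after)
    return sorted(n for n in old if n in new and old[n] != new[n])


if __name__ == "__main__":
    print(changed_interfaces(BEFORE, AFTER))
```

On the sample values, five of the six interfaces report a different address after the failover; cge0 shows the same address in both listings, so a check of this kind should probably look for a change on most interfaces rather than on every one.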

7. Verify that the blades appear with reason code 5 by typing:

# /nas/sbin/getreason


8. After the blades appear with reason code 5, manually restore the failed-over blade to its primary status by typing the following command:

# server_standby <primary_blade> -restore mover

Example:

server_standby server_2 -restore mover

server_2 :

server_2 : going standby

server_2.faulted.server_3 : going active

replace in progress ...done

failover activity complete

commit in progress (not interruptible)...done

server_2 : renamed as server_3

server_2.faulted.server_3 : renamed as server_2

Note: This command renames the primary and standby blades. In the example above, server_2, the acting primary blade, was rebooted and renamed server_3, and server_2.faulted.server_3 was renamed server_2.

9. Verify that the failback has completed successfully by:

a. Checking that the blades have changed back to the original

name and type:

# nas_server -l

Sample output:

id   type  acl   slot  groupID  state  name
1    1     1000  2              0      server_2
2    4     1000  3              0      server_3

b. Checking that I/O traffic is flowing to the primary blade by entering:

# server_netstat <server_name> -i

Sample output:

[nasadmin@rtpplat11cs0 ~]$ server_netstat server_2 -i

Name  Mtu   Ibytes    Ierror  Obytes   Oerror  PhysAddr
****************************************************************************
fxg0  9000  0         0       0        0       0:60:16:32:4a:30
fxg1  9000  0         0       0        0       0:60:16:32:4a:31
mge0  9000  851321    0       812531   0       0:60:16:2c:43:2
mge1  9000  28714095  0       1267209  0       0:60:16:2c:43:1
cge0  9000  314427    0       1324     0       0:60:16:2b:49:12
cge1  9000  0         0       0        0       0:60:16:2b:49:13

Note: The hardware addresses in the PhysAddr column have reverted to their original values, reflecting that the failback completed successfully.

Refer to the Configuring Standbys on EMC Celerra technical module on http://Powerlink.EMC.com for more information about determining and defining blade standby configurations.
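
The name and type checks in steps 6a and 9a can be sketched as a small Python parser over `nas_server -l` output. This is a minimal sketch under stated assumptions: each data row starts with a numeric id and type and ends with the blade name; type 1 means primary, as the note in step 6a states; and type 4 is taken to mean standby, which is inferred from the failback sample output rather than stated in this procedure. The function names are hypothetical.

```python
PRIMARY = 1   # stated in the step 6a note
STANDBY = 4   # assumption inferred from the step 9a sample output

AFTER_FAILOVER = """\
id type acl slot groupID state name
1 1 1000 2 0 server_2.faulted.server_3
2 1 1000 3 0 server_2
"""

AFTER_FAILBACK = """\
id type acl slot groupID state name
1 1 1000 2 0 server_2
2 4 1000 3 0 server_3
"""


def parse_nas_server(output):
    """Map blade name -> type for every data row of 'nas_server -l'."""
    blades = {}
    for line in output.splitlines():
        fields = line.split()
        # Data rows begin with numeric id and type; the name is last.
        if len(fields) >= 3 and fields[0].isdigit() and fields[1].isdigit():
            blades[fields[-1]] = int(fields[1])
    return blades


def failback_complete(output, primary, standby):
    """True when both blades carry their original names and types."""
    blades = parse_nas_server(output)
    return blades.get(primary) == PRIMARY and blades.get(standby) == STANDBY


if __name__ == "__main__":
    print(parse_nas_server(AFTER_FAILOVER))
    print(failback_complete(AFTER_FAILBACK, "server_2", "server_3"))
```

Reading the name from the last field keeps the parser indifferent to whether the groupID column is populated.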


Appendix

Disk group and disk volume configurations

Table 2 maps a disk group type to a storage profile, associating the RAID type and the storage space that results in the automatic volume management (AVM) pool. The storage profile name is a set of rules used by AVM to determine what type of disk volumes to use to provide storage for the pool.

Table 2  Disk group and disk volume configurations

Disk group type               Attach type     Storage profile
RAID 5 8+1                    Fibre Channel   clar_r5_economy (8+1), clar_r5_performance (4+1)
RAID 5 4+1, RAID 1            Fibre Channel   clar_r5_performance, clar_r1
RAID 5 4+1                    Fibre Channel   clar_r5_performance
RAID 1                        Fibre Channel   clar_r1
RAID 6 4+2, RAID 6 12+2       Fibre Channel   clar_r6
RAID 5 6+1                    ATA             clarata_archive
RAID 5 4+1 (CX3 only)         ATA             clarata_archive
RAID 3 4+1, RAID 3 8+1        ATA             clarata_r3
RAID 6 4+2, RAID 6 12+2       ATA             clarata_r6
RAID 5 6+1 (CX3 only)         LCFC            clarata_archive
RAID 5 4+1 (CX3 only)         LCFC            clarata_archive
RAID 3 4+1, RAID 3 8+1        LCFC            clarata_r3
RAID 6 4+2, RAID 6 12+2       LCFC            clarata_r6
RAID 5 2+1                    SATA            clarata_archive
RAID 5 3+1                    SATA            clarata_archive
RAID 5 4+1                    SATA            clarata_archive
RAID 5 5+1                    SATA            clarata_archive
RAID 1/0 (2 disks)            SATA            clarata_r10
RAID 6 4+2                    SATA            clarata_r6
RAID 5 2+1                    SAS             clarsas_archive
RAID 5 3+1                    SAS             clarsas_archive
RAID 5 4+1                    SAS             clarsas_archive
RAID 5 5+1                    SAS             clarsas_archive
RAID 1/0 (2 disks)            SAS             clarsas_r10
RAID 6 4+2                    SAS             clarsas_r6
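
For scripted planning, Table 2 can be expressed as a lookup keyed by attach type and disk group type. The Python sketch below is an illustration only: the data structure and the `storage_profiles` function are hypothetical and are not part of AVM. It leaves out the combined "RAID 5 4+1, RAID 1" Fibre Channel row and drops the "(CX3 only)" qualifiers, so it is a simplified view of the table.

```python
# (disk group types, attach type, storage profiles), transcribed from Table 2.
# Rows that share an attach type and profile are merged; "(CX3 only)"
# qualifiers and the combined "RAID 5 4+1, RAID 1" row are omitted.
ROWS = [
    (("RAID 5 8+1",), "Fibre Channel",
     ("clar_r5_economy", "clar_r5_performance")),
    (("RAID 5 4+1",), "Fibre Channel", ("clar_r5_performance",)),
    (("RAID 1",), "Fibre Channel", ("clar_r1",)),
    (("RAID 6 4+2", "RAID 6 12+2"), "Fibre Channel", ("clar_r6",)),
    (("RAID 5 6+1", "RAID 5 4+1"), "ATA", ("clarata_archive",)),
    (("RAID 3 4+1", "RAID 3 8+1"), "ATA", ("clarata_r3",)),
    (("RAID 6 4+2", "RAID 6 12+2"), "ATA", ("clarata_r6",)),
    (("RAID 5 6+1", "RAID 5 4+1"), "LCFC", ("clarata_archive",)),
    (("RAID 3 4+1", "RAID 3 8+1"), "LCFC", ("clarata_r3",)),
    (("RAID 6 4+2", "RAID 6 12+2"), "LCFC", ("clarata_r6",)),
    (("RAID 5 2+1", "RAID 5 3+1", "RAID 5 4+1", "RAID 5 5+1"), "SATA",
     ("clarata_archive",)),
    (("RAID 1/0 (2 disks)",), "SATA", ("clarata_r10",)),
    (("RAID 6 4+2",), "SATA", ("clarata_r6",)),
    (("RAID 5 2+1", "RAID 5 3+1", "RAID 5 4+1", "RAID 5 5+1"), "SAS",
     ("clarsas_archive",)),
    (("RAID 1/0 (2 disks)",), "SAS", ("clarsas_r10",)),
    (("RAID 6 4+2",), "SAS", ("clarsas_r6",)),
]

# Flatten the rows into a dictionary keyed by (attach type, disk group type).
PROFILES = {
    (attach, raid): list(profiles)
    for raids, attach, profiles in ROWS
    for raid in raids
}


def storage_profiles(raid, attach):
    """Return the storage profiles listed for a disk group, or []."""
    return PROFILES.get((attach, raid), [])


if __name__ == "__main__":
    print(storage_profiles("RAID 6 12+2", "LCFC"))
    print(storage_profiles("RAID 1/0 (2 disks)", "SAS"))
```

A combination that does not appear in Table 2 returns an empty list rather than raising an error, which makes it easy to flag unsupported layouts in a planning script.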

Configure and test standby relationships

39

Create an NFS export

40

Create an NFS export

Vous aimerez peut-être aussi

- Automatic Data Optimization With Oracle Database 18c: Oracle White Paper - February 2018Document11 pagesAutomatic Data Optimization With Oracle Database 18c: Oracle White Paper - February 2018mrstranger1981Pas encore d'évaluation

- Security Audit VaultDocument9 pagesSecurity Audit Vaultmrstranger1981Pas encore d'évaluation

- E29010 Managing UsersDocument70 pagesE29010 Managing Usersmrstranger1981Pas encore d'évaluation

- What Is A GC Buffer Busy WaitDocument2 pagesWhat Is A GC Buffer Busy Waitmrstranger1981Pas encore d'évaluation

- The Yellow House: A Memoir (2019 National Book Award Winner)D'EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Évaluation : 4 sur 5 étoiles4/5 (98)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeD'EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeÉvaluation : 4 sur 5 étoiles4/5 (5795)

- Shoe Dog: A Memoir by the Creator of NikeD'EverandShoe Dog: A Memoir by the Creator of NikeÉvaluation : 4.5 sur 5 étoiles4.5/5 (537)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureD'EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureÉvaluation : 4.5 sur 5 étoiles4.5/5 (474)

- Grit: The Power of Passion and PerseveranceD'EverandGrit: The Power of Passion and PerseveranceÉvaluation : 4 sur 5 étoiles4/5 (588)

- On Fire: The (Burning) Case for a Green New DealD'EverandOn Fire: The (Burning) Case for a Green New DealÉvaluation : 4 sur 5 étoiles4/5 (74)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryD'EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryÉvaluation : 3.5 sur 5 étoiles3.5/5 (231)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceD'EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceÉvaluation : 4 sur 5 étoiles4/5 (895)

- Never Split the Difference: Negotiating As If Your Life Depended On ItD'EverandNever Split the Difference: Negotiating As If Your Life Depended On ItÉvaluation : 4.5 sur 5 étoiles4.5/5 (838)

- The Little Book of Hygge: Danish Secrets to Happy LivingD'EverandThe Little Book of Hygge: Danish Secrets to Happy LivingÉvaluation : 3.5 sur 5 étoiles3.5/5 (400)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersD'EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersÉvaluation : 4.5 sur 5 étoiles4.5/5 (345)

- The Unwinding: An Inner History of the New AmericaD'EverandThe Unwinding: An Inner History of the New AmericaÉvaluation : 4 sur 5 étoiles4/5 (45)

- Team of Rivals: The Political Genius of Abraham LincolnD'EverandTeam of Rivals: The Political Genius of Abraham LincolnÉvaluation : 4.5 sur 5 étoiles4.5/5 (234)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyD'EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyÉvaluation : 3.5 sur 5 étoiles3.5/5 (2259)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaD'EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaÉvaluation : 4.5 sur 5 étoiles4.5/5 (266)

- The Emperor of All Maladies: A Biography of CancerD'EverandThe Emperor of All Maladies: A Biography of CancerÉvaluation : 4.5 sur 5 étoiles4.5/5 (271)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreD'EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreÉvaluation : 4 sur 5 étoiles4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)D'EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Évaluation : 4.5 sur 5 étoiles4.5/5 (121)

- Her Body and Other Parties: StoriesD'EverandHer Body and Other Parties: StoriesÉvaluation : 4 sur 5 étoiles4/5 (821)

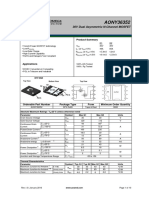

- AONY36352: 30V Dual Asymmetric N-Channel MOSFETDocument10 pagesAONY36352: 30V Dual Asymmetric N-Channel MOSFETrobertjavi1983Pas encore d'évaluation

- F5 101 Test NotesDocument103 pagesF5 101 Test Notesrupinder_gujral5102Pas encore d'évaluation

- Configuring SNMP On ProteusDocument12 pagesConfiguring SNMP On ProteusAijaz MirzaPas encore d'évaluation

- Precision Bias: Every Astm Test Method Requires A AND Section. What Is It? How Do I Create One? Read OnDocument4 pagesPrecision Bias: Every Astm Test Method Requires A AND Section. What Is It? How Do I Create One? Read Onjrlr65Pas encore d'évaluation

- ST Micro1Document308 pagesST Micro1drg09100% (1)

- WebVIewer BrochureDocument2 pagesWebVIewer BrochureCarlos GómezPas encore d'évaluation

- ME302-Syllabus - ME-302 Mechanical Systems IIDocument2 pagesME302-Syllabus - ME-302 Mechanical Systems IIد.محمد كسابPas encore d'évaluation

- Entry Behaviour Brief Introduction: ObjectivesDocument7 pagesEntry Behaviour Brief Introduction: ObjectivesSittie Fahieda Aloyodan100% (1)

- Contents of Project ProposalDocument2 pagesContents of Project ProposalFatima Razzaq100% (1)

- Suzuki GSXR 1000 2007 - 08 Anular EscapeDocument6 pagesSuzuki GSXR 1000 2007 - 08 Anular EscapeBj BenitezPas encore d'évaluation

- Application Form: Full Name ID Card Number Date of Birth Sex Address Phone Number EmailDocument3 pagesApplication Form: Full Name ID Card Number Date of Birth Sex Address Phone Number EmailesadisaPas encore d'évaluation

- MegaRAID SAS 9361-4i SAS 9361-8i Controllers QIGDocument7 pagesMegaRAID SAS 9361-4i SAS 9361-8i Controllers QIGZdravko TodorovicPas encore d'évaluation

- Schneider Electric Price List Feb 2020 V2Document478 pagesSchneider Electric Price List Feb 2020 V2Amr SohilPas encore d'évaluation

- QuestionDocument6 pagesQuestionVj Sudhan100% (3)

- R320 Service ManualDocument113 pagesR320 Service ManualRichard SungaPas encore d'évaluation

- 5 Pengenalan OSDocument44 pages5 Pengenalan OSFahrul WahidPas encore d'évaluation

- CH 9Document63 pagesCH 9alapabainviPas encore d'évaluation

- Ford OBD2 MonitorDocument282 pagesFord OBD2 MonitorAnonymous 4IEjocPas encore d'évaluation

- Ebook Kubernetes PDFDocument26 pagesEbook Kubernetes PDFmostafahassanPas encore d'évaluation

- Assignment 2dbmsDocument13 pagesAssignment 2dbmsTXACORPPas encore d'évaluation

- Miner Statistics - Best Ethereum ETH Mining Pool - 2minersDocument1 pageMiner Statistics - Best Ethereum ETH Mining Pool - 2minersSaeful AnwarPas encore d'évaluation

- Mechatronics Systems PDFDocument23 pagesMechatronics Systems PDFRamanathan DuraiPas encore d'évaluation

- 161-Gyro IXblue Quadrans User Manual 1-10-2014Document31 pages161-Gyro IXblue Quadrans User Manual 1-10-2014Jean-Guy PaulPas encore d'évaluation

- Obsolete Product: Descriptio FeaturesDocument8 pagesObsolete Product: Descriptio FeaturesMaria Das Dores RodriguesPas encore d'évaluation

- Elipar 3m in Healthcare, Lab, and Life Science Equipment Search Result EbayDocument1 pageElipar 3m in Healthcare, Lab, and Life Science Equipment Search Result EbayABRAHAM LEONARDO PAZ GUZMANPas encore d'évaluation

- CSNB244 - Lab 6 - Dynamic Memory Allocation in C++Document5 pagesCSNB244 - Lab 6 - Dynamic Memory Allocation in C++elijahjrs70Pas encore d'évaluation

- Opentext Capture Recognition Engine Release NotesDocument15 pagesOpentext Capture Recognition Engine Release NotesHimanshi GuptaPas encore d'évaluation

- ACTIVITY 7 Discussing Online Privacy - AnswersDocument9 pagesACTIVITY 7 Discussing Online Privacy - AnswersAdrian VSPas encore d'évaluation

- DualityDocument28 pagesDualitygaascrPas encore d'évaluation

- Tutorial-3 With SolutionsDocument3 pagesTutorial-3 With SolutionsSandesh RSPas encore d'évaluation