
Performance Measurement

Public Service Performance Evaluation: A Strategic Perspective

Peter M. Jackson

Performance evaluation of government activities is essential. Government, no matter the level (central/federal, state, or local), should be accountable and responsible to the electorate and a host of other stakeholders. Accountability involves, among other things, an assessment of policy outcomes, along with the means and processes used to deliver the policies. Were the policies suitable for the problems that the electorate wanted solved? Were the policies implemented efficiently and effectively? Did the electorate, taxpayers, and users of public services (often these are distinct groups) obtain value-for-money?

Images of Performance

A large number of public service organizations in the UK have introduced performance-monitoring systems*. In doing so, attention has tended to focus upon the appropriateness and suitability of measures and indicators of performance. A great battery of indicators has been published and much ink has been used in evaluating their heuristic value. What images of performance do these indicators reveal?

Performance-monitoring systems have important hidden dimensions below the water-line of indicators and measures. These include the logical foundations and values upon which the whole system rests. Weakness in these areas, along with a lack of appreciation of the different values that lurk in the depths of the performance-monitoring system, results in severe implementation problems. The design of a performance-monitoring system reflects specific images of public administration (Kass and Catron, 1990). These range from the new public sector management, with its emphasis upon technical developments in public policy analysis, market-oriented metaphors and operational efficiency and effectiveness, to those whose preferred image focuses upon the importance of community and public service values.

Implementation problems arise when these images conflict within the public service organization or when the organization's stakeholders hold images that are significantly different from those of the ruling organizational elite.

Introducing performance-monitoring systems can raise important issues in the management of change (Jackson and Palmer, 1993). Performance criteria are by definition value-laden. They are, therefore, the currency of political debate. When considering the evaluation of the performance of public service organizations it is necessary to go beyond a discussion of the data used for the construction of performance indicators and to confront such questions as:

What are the origins of the criteria used to evaluate public services?

Who sets the criteria?

Whose interests are being served by the evaluation?

* Past issues of Public Money & Management have recorded the introduction of such systems. See also: Financial Accountability and Management, and Jackson and Palmer (1993).

CIPFA, 1993. Published by Blackwell Publishers, 108 Cowley Road, Oxford OX4 1JF and 238 Main Street, Cambridge, MA 02142, USA.

Peter M. Jackson is Professor of Economics and Director of the Public Sector Economics Research Centre at the University of Leicester.

Performance evaluation in public service organizations is fraught with theoretical, methodological and practical problems which run deep in any discussion of democracy. Some of these issues are reviewed in this article.

Performance Evaluation and Strategic Management

The introduction of performance-evaluation systems to public service organizations is one of the elements of the new public sector management. Some regard the monitoring and measurement of performance as an expression of classical and scientific management techniques. Others are hostile to what they see to be the importation of naive production management techniques from the private sector. Yet others view the production of performance measures as a means of exercising greater control over public service bureaucracies. While there is some strength in these views, they are too negative and too narrow and can result in the ridiculous conclusions that either the performance of public service organizations should not be subjected to measurement or that such performance is always impossible to measure.

Public service organizations are extremely complex: they serve multiple objectives; have a diversity of clients; deliver a wide range of policies and services; and exist within complex and uncertain socio-political environments. The values which drive such organizations, and against which their performance must be judged, are also more complex than the simple commercial market-led values of profitability. Given the environment within which public service managers must make decisions, it is not a trivial remark to suggest that the challenges which face them are often more daunting than those facing their private sector counterparts and require a wider range and greater intensity of skills.

PUBLIC MONEY & MANAGEMENT OCTOBER-DECEMBER 1993

It is the sheer complexity of the tasks which public service organizations face that makes them candidates for the public domain rather than the market-place. This simple fact is so often forgotten by the advocates of privatization. The inclusion of public service clauses in the contracts awarded to newly privatized companies highlights some of the difficulties and the conflicts between competing objectives that exist for public service managers.

When evaluating the performance of public service organizations, their complexity needs to be recognized. Too often, it is the trivial and more easily measured dimensions of performance which are recorded. The deeper, more highly valued, but difficult to measure, aspects are ignored.

The complexity of organizations and the difficulties of measuring their performance are not ignored if a strategic-management perspective, such as that advocated by Jackson and Palmer (1992), is adopted. Indeed, the better managed private sector organizations have tended to use a strategic-management approach when designing their performance measures. Simple input/output measures or profitability and liquidity measures are of very limited value. They provide managers in private sector organizations with little relevant information. This is now well recognized in the literature on private sector performance (see Kaplan, 1990).

Successful private sector organizations now use performance-monitoring systems which are closely related to their strategic decision-making cycle. This approach captures a much richer set of performance dimensions than the simple and naive approach. It focuses attention on consumer satisfaction, product or service quality, and the performance of processes, not just the simple financial measures of profitability and rate of return on investment. Writers such as Kaplan (op. cit.) have also recognized that financial statements provide information which is only as good as the conventions and definitions which underlie the accounts. The same applies to the management accounts which are used for decision-making. Their relevance for modern capital-intensive production processes has been called into question (Johnson and Kaplan, 1987). Much greater care and attention are given to the fundamentals of performance measures in private sector organizations. This now includes going outside the simple metric of profitability. Attempts to incorporate non-commercial values, which reflect the corporation's environmental (green) and social (ethical) responsibilities, are to be found in many private companies.


Control versus Learning

Performance monitoring need not be used only as a means of organizational control, as it undoubtedly is in the classical and scientific management paradigm. In the strategic-management perspective the information generated by the performance-monitoring system is a means of organizational learning. It enables any plan, or set of targets, to be compared against outcomes and forces the question: 'Why is there a deviation?' Answers to that question facilitate learning: a discovery that while some critical success factors might be under the direct control of managers, many others are not. Learning can also mean a realization that the performance targets set by management may be too ambitious given the organization's current capabilities.

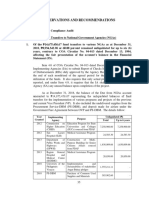

Organizational learning is illustrated in figure 1. This diagram sets out the standard strategic-management model which is found in most textbooks. Where, however, figure 1 differs from standard models is in the inclusion of a feedback loop between the information produced by comparing performance measures and indicators against targets and the locus of decision-making.

In order to learn, it is necessary to have more than just a set of performance indicators. These indicators must be compared against some benchmark or target and there must be a capacity and capability to analyse any gaps. Furthermore, the output of gap analysis needs to be fed back into the decision-making process. Few public service organizations are in a position to carry out performance-gap analysis.
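The gap analysis described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the author's method. The `Indicator` structure, the `performance_gaps` function, the 5% tolerance and the sample figures are all hypothetical. Each indicator is compared with its target, and shortfalls are flagged so they can be fed back to decision-makers. Indicators that have no target are listed separately, because no gap can be identified for them.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class Indicator:
    """A performance indicator and, if one has been set, its target (hypothetical structure)."""
    name: str
    actual: float
    target: Optional[float] = None
    higher_is_better: bool = True

def performance_gaps(
    indicators: List[Indicator], tolerance: float = 0.05
) -> Tuple[List[Tuple[str, float]], List[str]]:
    """Compare each indicator with its target.

    Returns (gaps, untargeted):
      gaps       -- (name, relative shortfall) for indicators that miss their target
                    by more than `tolerance`, largest shortfall first;
      untargeted -- names of indicators with no target, for which no gap can be computed.
    """
    gaps: List[Tuple[str, float]] = []
    untargeted: List[str] = []
    for ind in indicators:
        if ind.target is None:
            # Records what was done, but says nothing about what should be done.
            untargeted.append(ind.name)
            continue
        if ind.target == 0:
            raise ValueError(f"{ind.name}: a zero target cannot be used for a relative gap")
        # Positive shortfall means performance is worse than target.
        diff = ind.target - ind.actual if ind.higher_is_better else ind.actual - ind.target
        shortfall = diff / abs(ind.target)
        if shortfall > tolerance:
            gaps.append((ind.name, round(shortfall, 3)))
    gaps.sort(key=lambda pair: pair[1], reverse=True)
    return gaps, untargeted

# Illustrative, made-up figures for a hypothetical service.
indicators = [
    Indicator("cases cleared per week", actual=180, target=200),
    Indicator("average waiting time (days)", actual=30, target=25, higher_is_better=False),
    Indicator("complaints resolved within 10 days (%)", actual=91, target=90),
    Indicator("unit cost (GBP)", actual=42.0),  # no target set
]
gaps, untargeted = performance_gaps(indicators)
print(gaps)        # [('average waiting time (days)', 0.2), ('cases cleared per week', 0.1)]
print(untargeted)  # ['unit cost (GBP)']
```

The second return value matters as much as the first. An indicator without a target can report activity, but it cannot reveal a performance gap.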

The task of strategic management is the province of the senior management group. This group should be involved in tackling the following issues:

Setting the strategic direction (long term) of the organization.

Implementing and managing the change process in line with the chosen strategic direction.

Ensuring continuous improvement of operational performance.

The distinction between strategic and operational management is shown in figure 1.

This list of activities and responsibilities can be applied to any organization: public or private sector.

Once the strategy is chosen, appropriate performance measures and indicators are defined that will show whether the organization is on the right track. Performance indicators show progress: they are milestones along the road and are a visible manifestation of what the organization stands for and represents. That assumes, however, that all significant dimensions of the organization can be represented by some measure or indicator. This is not always the case; often the measurable drives out the immeasurable.

In what areas of an organization should indicators be revealing progress and achievement?

Our research reveals that most public sector organizations focus upon operational performance, and within that area the greatest attention is given to cost indicators (economy) and efficiency*. Few of the organizations studied employed an explicit strategic-management framework. They were, therefore, not in a position to produce measures and indicators of the organization's performance in the achievement of its long-term or strategic objectives. None of the organizations reviewed had indicators that would demonstrate performance in the area of change management. Indeed, few of the organizations had a change-management programme in place. Another important feature of the organizations in the sample was that while they professed to have performance measures and indicators, few of them had set targets for these indicators. It was, therefore, impossible to know if progress had been made or indeed if the organization had had any successes. The indicators illustrated what was done, not what should be done. Performance gaps could not be identified. This obviously impedes organizational learning and creates the suspicion either that indicators were simply being produced as an end in themselves (a successful organization being one that produces many performance indicators) or that indicators were an instrument of organizational control.

Figure 1. The strategic-management model. Strategic management: evaluation of the internal and external environment; setting direction (mission, values, objectives; structure, style; reward and information systems; performance measures). Operational management: business/service plans, activities, implementation, outputs/outcomes. Outputs/outcomes are compared against performance targets and the results are fed back to decision-making.

Implementation

The use of performance indicators by public sector organizations has been on the public sector management agenda in the UK for about ten years. Research at the University of Leicester shows that despite the encouragement and leadership of agencies such as the National Audit Office, the Audit Commission and the Accounts Commission for Scotland, implementation of performance-evaluation systems has been slow, piecemeal and often half-hearted. In-depth case studies of those organizations which have successfully introduced a performance-evaluation system demonstrate clearly that a critical success factor is the amount of thought and effort which is put into managing the change process. Most organizations underestimate the time it takes to design a performance-evaluation system, to negotiate it into place, to trial it, debug it and develop it. This whole cycle can take between three and four years.

It is the responsibility of the senior management group to ensure that a system exists that will produce the strategic, continuous-improvement and change-management performance indicators. They also need to sanction the resources required to establish a management information system that will collect and disseminate such information. Of course, the senior management team do not make direct decisions on the precise performance indicators. Successful implementation requires that those who are responsible for delivering the various elements of performance at the operational level, throughout the organization, are involved in designing the system. A sense of ownership of performance indicators is important for motivation and for the acceptance of the indicators.

* Research carried out at the Public Sector Economics Research Centre, University of Leicester, into the practice of performance measurement in public service organizations.

It is easy to use the results of Leicester's research to criticise public service organizations for their limited success in implementing sophisticated performance-evaluation systems. That would be an unfortunate and unintended outcome. Compared with their private sector counterparts, public service organizations are well in advance in the measurement of performance and the use of internal continuous-improvement indicators. A recent study by the Harris Research Centre (1990) shows that the directors of UK companies have a strong tendency to focus internally and on financial indicators. They pay little attention (in some cases none) to such external factors as the perceptions of customers; competitors' actions; and their company's position relative to its competitors. Strategic planners expressed dissatisfaction with the quality of the information they had to formulate strategy. Indeed, few companies were engaged in strategic planning. If they did have a strategy they seldom monitored the outcomes against the plans. Management information systems were dominated by financial information, but even then that information tended to be confined to those areas which were required for statutory financial accounting and reporting. Little relevant management accounting information was recorded.

The Governance of Public Service Organizations

The language and concepts of strategic management can be transported between the public and the private sectors, but not without some modification. This is nowhere more so than at the senior management level of decision-making. In a private company it is the board of directors who are charged with the strategic areas of responsibility. Operating on behalf of the owners of the company (the ordinary shareholders), they make decisions about where the company should be in five, ten, or 15 years' time. What businesses should they be in? What services and products should they produce? What new products should be introduced and when? Where should production be located? These decisions position the company to serve its customers; to compete against existing or potential rivals; and to provide a satisfactory return for shareholders and other stakeholders, including its employees.

What is the equivalent to the board of directors for public service organizations? What is the senior management team that is responsible for creating strategy? Who are its members? These questions reduce to the single question: 'What is the nature of the governance system of public service organizations?'

Attempts at answering this question tend to reveal a general weakness in the public sector performance-evaluation literature. There is a tendency to regard the performance and success of public services as a managerial responsibility. At the operational level that is a reasonable assumption to make, but at the strategic level what does it mean? Who are the members of the senior management team equivalent to the board of directors? This is an inherent problem of the new public sector management paradigm: it fails to specify adequately the organization's governance system, especially the nature of the senior management group.

Who sets the strategic direction for public service organizations? Is it the political leaders (central and local)? Is it the chief executive and chief officers? Or is it a partnership of both groups? These questions are familiar within the context of the policy-analysis literature: who sets public policy and who is accountable for the outcomes? It is the age-old separation of powers between the legislature and the executive branch of government. For some this will be a statement of the glaringly obvious. But a moment's reflection soon produces a realization that while political leaders are keen to introduce systems which will ensure that operational performance is measured and evaluated, they do little to enable the evaluation of the strategic performance of public services. To do so would be to evaluate the effectiveness of policies and, therefore, to put into the public domain politically sensitive information that would be used to judge, in the political arena, the efficiency and effectiveness of the policy-making process.

Where are the boundaries of the public sector management model? To what set of activities does it apply? The National Audit Office, the Audit Commission and the Scottish Accounts Commission draw a boundary round the policy-making process. When conducting value-for-money (VFM) studies, they investigate the efficiency and effectiveness of implementing given policies. Their interest is in evaluating managerial effectiveness and not policy effectiveness per se. Of course, in practice it is difficult to be mute about matters relating to policy, especially if public sector managers have been assigned an impossible mission of implementing the unimplementable, such as the Community Charge.

Value-for-Money (VFM): Unbalanced Emphasis

The separation of powers between the legislature and the executive has produced a separation of the dimensions of performance evaluation. VFM auditing is a misnomer, since by its very nature it cannot make any judgement about the values placed on public policy outcomes. Instead, VFM auditing focuses attention upon technical efficiency (i.e. X-efficiency). It addresses the important issues of input/output relationships, along with purchasing and contractual relationships. These are all directed at one side of the value-for-money equation, namely, costs.

Nothing is usually said about allocative efficiency. Significant efforts have been made to generate performance indicators which reflect the outputs (final and intermediate) of public services, but such indicators are meaningless and of no value. Allocative efficiency requires that managers know something about the output of their services relative to demand. Is the service being over- or under-produced? Is the service mix the correct one? Is the service being targeted on the appropriate client/user group? Demonstrating, by means of indicators, that service output is rising is not, by itself, a useful VFM indicator. If the public service is producing more and more of a service which users do not want; which is of the wrong mix or quality; which is targeted on an inappropriate user group; or which has low value placed on it, then allocative inefficiency exists.

Many public services are near the efficiency frontiers of their production functions. There is probably little waste or slack in the system on average, so that X-efficiency looks reasonable. This does not deny that there is scope for improvements in X-efficiency. The issue, however, is the amount of allocative inefficiency that exists. The suspicion is that allocative inefficiency is high and is the more significant source of improvements when it comes to delivering VFM.
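To make "near the efficiency frontier" concrete, the sketch below scores each unit against the best observed output/input ratio. It is a deliberately crude, hypothetical illustration that assumes a single input, a single output and constant returns to scale. The function name and figures are invented. Practical frontier studies use techniques such as data envelopment analysis or stochastic frontier estimation.

```python
from typing import Dict, Tuple

def x_efficiency_scores(units: Dict[str, Tuple[float, float]]) -> Dict[str, float]:
    """Score each unit's output/input ratio against the best ratio in the sample.

    `units` maps a unit name to (input, output). The best-practice unit defines
    the frontier and scores 1.0. A score of 0.9 means the unit produces 90% of
    the output per unit of input achieved by the best unit.
    Assumes one input, one output and constant returns to scale.
    """
    ratios = {name: output / inp for name, (inp, output) in units.items()}
    best = max(ratios.values())
    return {name: round(ratio / best, 3) for name, ratio in ratios.items()}

# Hypothetical units: (spending in GBP thousands, cases handled).
units = {"A": (100, 500), "B": (80, 380), "C": (120, 540)}
print(x_efficiency_scores(units))  # {'A': 1.0, 'B': 0.95, 'C': 0.9}
```

All three hypothetical units sit close to the frontier. Nothing in the calculation asks whether the cases handled are the ones users most value, or whether the service is over- or under-produced. Every score depends on inputs and outputs alone, so a unit producing the wrong output very cheaply would still score 1.0. That is the allocative question the frontier leaves open.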

The hole in the middle of the VFM doughnut is the absence of suitable institutions to judge the effectiveness of public services and policies and, therefore, to make a judgement about allocative efficiency. Some have suggested that the market would be an appropriate institution. This image is advocated by the proponents of privatization. Markets are, however, notoriously inefficient in their capacity to evaluate the externalities that are usually associated with public services.

Select Committees are another institution. These committees do make some attempt at evaluating the policy strategies adopted by central government departments, but they are often hindered by insufficient information about the internal and external environment of the services. What is the Select Committee equivalent for local government, the various health boards and the new Next Steps agencies? Indeed, who makes strategy for these organizations?

Another imbalance arises between static and dynamic concepts of efficiency. The majority of performance indicators currently in use focus upon static efficiency, that is, the short run. Dynamic efficiency emphasizes the long run and, therefore, the effectiveness of investment in new productive capacity, including organizational infrastructure and managerial innovations. The capital expenditure constraints in the public sector suggest that dynamic efficiency has been sacrificed.

Improvements in static and dynamic efficiency require that appropriate incentives are in place that will influence decisions and guide actions towards efficiency improvements. Whether or not a suitable balance of incentives exists is taken up below.

Wish-driven Strategies

What makes one organization more successful than others? This is another way of asking about its relative superior performance. Success and high performance are not the 'product of wish-driven strategy' (Kay, 1993, p. 4). Vision, mission and values are necessary but certainly not sufficient. Too many strategic management texts incorrectly assume that provided an organization has a mission statement it will out-perform those that do not.

If mission and vision remain in the minds of the senior management team and are not communicated throughout the organization, then they are valueless. The critical success factors (CSFs) are an appraisal of the internal strengths and weaknesses of the organization; an appreciation and understanding of its environment; and the capacity to learn and adapt as the uncertain future unfolds. Strength in these areas must be worked at and acquired. This is the function of management. Success is not achieved by accident. Short-run success gained as a result of chance will be ephemeral unless strong management exists to capitalize on it.

Success requires a match between internal capabilities (strengths) and external relationships. This 'strategic fit' demands an understanding of the organization's relationship with its suppliers; its clients (customers); the political system within which it is embedded; the socio-demographic environment; and so on. Many 'wish-driven strategies' simply cannot be delivered because the organization does not have the necessary capabilities to do so. Wish-driven organizations are guided by what the senior management team would like them to be, not by what they are or what, given their existing set of CSFs, they are capable of being. If public service organizations are to achieve their aspirations, then the senior management team needs to create the necessary capabilities. Dreams and visions are not substitutes for careful assessments of existing strengths and weaknesses.

The earlier discussion of public service governance structures raised the issue of who is responsible for producing the public service organization's mission and vision. This was seen to be primarily the role of the political leaders, with an input from professional advisors who may or may not be civil servants. Public service managers are responsible for producing business or service plans which will deliver the contents of the mission statement efficiently and effectively.

Most politically generated public service mission statements are wish-driven. They are made with little reference to the organization's capability to deliver them. This presents public service managers with a real problem: a mission impossible, or a remit which is bound to result in under-performance. Politicians, when making wish-driven strategies, should ensure that the organization is adequately resourced to produce the capabilities that will enable it to deliver the elements of the strategy. The question is how they can be made accountable for doing so.

Unless the responsibilities of politicians for the performance of public service organizations are adequately recognized, public service managers are likely to be held to account for decisions over which they have no (or little) input or control. Auditing bodies such as the National Audit Office or the Audit Commission can, and on occasion do, comment upon the inadequate capabilities of a public service to deliver. However, stronger statements need to be made about who is responsible for these inadequacies.

Frequent changes in wish-driven strategies provide a source of instability for management action. In the private sector the mission statement tends to be a fixed point which is changed infrequently. The strategy of public service organizations, however, is tied to the political cycle and, within that, to changes in Secretaries of State each time there is a Cabinet reshuffle. A change in mission and strategy results in changes in service plans. Such changes are destabilizing at the operational level and can result in poor performance because personnel are constantly adjusting to new expectations and routines. This suggests that if public service organizations are to improve their performance they need to be more flexible and more loosely coupled, in order that they might ride out the storms of instability. The downside of this recommendation is that flexibility can bring with it greater amounts of procedural uncertainty, which can paralyse an organization, resulting in delays in decision-making. Greater decentralization also weakens accountability.

So What?

The trend over the past ten years has been to produce mission statements, strategies and business/service plans for public service organizations. Data have been collected which are then reconstituted into performance measures and indicators. So what? Has anything materially changed? Have services improved; has greater value been created?

Many of the changes are symbols of good management practice. Little of substance or consequence changes as a result of their introduction. They are the first step on a long journey. They are necessary but not sufficient.

Performance indicators simply represent management information. Like all forms of information they are an input which comes in a variety of qualities. Some convey clearer signals than others. However, like all inputs they need to be worked on and crafted if they are to be useful. Our research shows that too often decision-makers delegate the task of collecting, collating and publishing statistics for performance measurement to a specialist group. This is in many cases a form of sidelining performance measurement. In these cases the information contained in the performance indicators does not really influence decisions about resource use and will not help decision-makers to create value. Sidelining of performance measurement has been found in local authorities, central government departments and GP practices.

If resource-use decisions are to be influenced by the information contained in performance indicators, then the indicators need to be related to incentives that will change behaviour. For example, the information signals contained in the new capital charges in the NHS have no impact on resource use because the charges are not related to funding. They do not influence behaviour in terms of asset use, with the result that their introduction has had no impact on the efficient use of assets.

At this moment in time it is impossible to know the value of performance indicators. It is not possible to know, let alone evaluate, what has happened as a result of their use. Whether or not resources are used more efficiently, whether or not public services are more effective, and whether or not more value has been created remain unanswered questions. The article of faith is that overall performance is better with the availability of the information than without it.

Important information gaps do, however, remain. Insufficient resources have been allocated to analysing the cause-and-effect relationships between different means of policy intervention and outcomes. Until crucial aspects of policy analysis have been carried out, assessment of the effectiveness of public services will remain in the policy 'black box'.

Conclusions

When located within a strategic-management perspective, the performance measurement of public services is seen to be necessary but not sufficient for improved management practice, and a means of learning rather than a means of control. If the introduction of performance measures/indicators is to give the expected pay-off, then public service organizations need the capacity to learn from the information signals that indicators provide, as well as the organizational capabilities to act upon that learning. Only then will additional value be created which justifies the costs of measuring performance.

A strategic framework also raises important issues about the appropriate governance structure for public services and who is responsible for which areas of strategy. Concentration on X-efficiency, rather than allocative efficiency, has tended to shift the spotlight of accountability away from politicians to public service managers. This has brought about an imbalance in the concept of value-for-money auditing. The creation of value in public services is only seen as a transfer of resources from those who produce services to those who consume them. Allocative efficiency requires a more careful assessment of what is currently being provided and the values which users place upon it.

Performance evaluation is part of the age-old issue of public service accountability. It is new wine in old bottles. The new public sector managerialism, with its symbols of good management practice, has, through the use of performance measurement, introduced new tints to the spectrum of accountability. The dominant ideology, with its emphasis upon market forces and efficiency, underpins these innovations. While many of the changes appear innocuous, the concepts upon which they rest are contestable.

References

Harris Research Centre (1990), Information for Strategic Management: A Study of Leading Companies. Manchester.

Jackson, P. M. and Palmer, A. (1993), Developing Performance Monitoring in Public Sector Organizations. Management Centre, University of Leicester.

Johnson, H. T. and Kaplan, R. S. (1987), Relevance Lost: The Rise and Fall of Management Accounting. Harvard Business School Press, Boston.

Kay, J. A. (1993), Foundations of Corporate Success. Oxford University Press, Oxford.

Kaplan, R. S. (Ed.) (1990), Measures of Manufacturing Success. Harvard Business School Press, Boston.

Kass, H. D. and Catron, B. L. (1990), Images and Identities in Public Administration. Sage Publications, London.

CIPFA, 1993
