
EI TECHNICAL

Safety

Quantifying human reliability in risk assessments

Jamie Henderson and David Embrey, from Human Reliability Associates, provide an overview of the new EI Technical human factors publication Guidance on quantified human reliability analysis (QHRA).

Following the Buncefield accident in 2005, operators of bulk petroleum storage facilities in the UK were requested to provide greater assurance of their overfill protection systems by risk assessing them using the layers of protection analysis (LOPA) technique. A subsequent review of LOPAs¹ identified a recurring problem: human error probabilities (HEPs) were being used without adequate consideration of the conditions that influence these probabilities in the scenario under consideration. Clearly, the error probability will be affected by a number of factors (eg time pressure, quality of procedures, equipment design and operating culture) that are likely to be specific to the situation being evaluated. Using an HEP from a database or table without considering the task context can therefore lead to inaccurate results in applications such as quantified risk assessment (QRA), LOPA and safety integrity level (SIL) determination studies.
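To illustrate how a context-free HEP propagates into a LOPA result, here is a minimal sketch. It assumes the usual LOPA simplification that the mitigated event frequency is the initiating event frequency multiplied by the probability of failure on demand (PFD) of each independent protection layer, with operator response to a high-level alarm treated as one such layer. The function name and every frequency and probability below are hypothetical, chosen only for illustration.

```python
from math import prod


def mitigated_frequency(initiating_freq_per_year: float, layer_pfds: list[float]) -> float:
    """LOPA simplification: initiating frequency x product of independent layer PFDs."""
    for pfd in layer_pfds:
        if not 0.0 <= pfd <= 1.0:
            raise ValueError(f"PFD must be between 0 and 1, got {pfd}")
    return initiating_freq_per_year * prod(layer_pfds)


# Hypothetical overfill scenario (all values illustrative, not from the guidance)
INITIATING_FREQ = 0.1   # overfill demands per year
TRIP_PFD = 0.01         # independent high-level trip

# Operator response to a high-level alarm treated as a protection layer:
generic_hep = 0.001     # value lifted from a generic table, task context not assessed
context_hep = 0.1       # same task after considering time pressure and distractions

for label, hep in [("generic HEP", generic_hep), ("context-adjusted HEP", context_hep)]:
    freq = mitigated_frequency(INITIATING_FREQ, [TRIP_PFD, hep])
    print(f"{label:>20}: {freq:.1e} overfills per year")
```

Because LOPA multiplies the layers together, the mitigated frequency moves by exactly the same factor (here 100) as the HEP, so the choice of HEP alone can determine whether a risk target appears to be met.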

Human reliability analysis (HRA) techniques are available to support the development of HEPs and, in some cases, their integration into QRAs. However, without a basic understanding of human factors issues, and the strengths, weaknesses and limitations of the techniques, their use can lead to wildly pessimistic or optimistic results.

Using funding from its Technical Partners and other sponsors, the Energy Institute's (EI) SILs/LOPAs Working Group commissioned Human Reliability Associates to develop guidance in this area. The aim is to reduce instances of poorly conceived or executed analyses. The guidance provides an overview of important practical considerations, worked examples and supporting checklists, to assist with commissioning and reviewing HRAs.²

HRA techniques

HRA techniques are designed to support the assessment and minimisation of risks associated with human failures. They have both qualitative (eg task analysis, failure identification) and quantitative (eg human error estimation) components. The guidance focuses primarily on quantification, but illustrates the importance of the associated qualitative analyses, which can have a significant impact on the numerical results. Further EI guidance on qualitative analysis is also available.³ A large number of HRA techniques address quantification: one review identified 72 different methods.⁴ The respective merits of these techniques are not addressed in the new guidance, since this information, and more detailed discussion of the concept of HRA, is available elsewhere.⁴,⁵,⁶

Attempts to quantify the probability of human failures have a long history. Early efforts treated people like any other component in a reliability assessment (eg what is the probability of an operator failing to respond to an alarm?). Because these assessments required probabilities as inputs, HEP databases were needed. However, very few industries were prepared to invest the effort required to collect the data to develop HEPs, which led to the widespread use of generic data contained in tools such as THERP (technique for human error rate prediction). In fact, the data contained in THERP and other popular quantification techniques, such as HEART (human error assessment and reduction technique), are derived primarily from laboratory-based studies on human performance.

1. Preparation and problem definition
2. Task analysis
3. Failure identification
4. Modelling
5. Quantification
6. Impact assessment
7. Failure reduction
8. Review

Table 1: Generic HRA process

Figure 1: Examples of the potential impact of human failures on an event sequence

PETROLEUM REVIEW NOVEMBER 2012

As it became recognised that people and, consequently, HEPs are significantly influenced by a wide range of environmental factors, techniques were developed to modify baseline generic HEPs to take account of these contextual factors (eg time pressure, distractions, quality of training and quality of the human-machine interface), known as performance influencing factors (PIFs) or performance shaping factors (PSFs). A parallel strand of development was the use of expert judgement techniques, such as paired comparisons and absolute probability judgement, to derive HEPs. Other techniques, such as SLIM (success likelihood index method), used a combination of inputs from human factors specialists and subject matter experts to develop a context-specific set of PIFs/PSFs. The quality of these factors in the situation being assessed was then used to derive an index, which could be converted to an HEP.
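The SLIM conversion described above can be sketched as follows, assuming the commonly cited form of the method: each PIF receives an importance weight (normalised to sum to 1) and a rating of its quality in the task being assessed, the weighted sum of ratings gives the success likelihood index (SLI), and log10(HEP) is taken to be a linear function of the SLI, calibrated through two anchor tasks whose HEPs are assumed to be known. The 1 to 9 rating scale, the PIF names, weights, ratings and anchor values are all hypothetical.

```python
import math


def success_likelihood_index(weights: dict[str, float], ratings: dict[str, float]) -> float:
    """SLI = sum over PIFs of normalised weight x quality rating."""
    total = sum(weights.values())
    return sum((w / total) * ratings[pif] for pif, w in weights.items())


def calibrate(sli_a: float, hep_a: float, sli_b: float, hep_b: float) -> tuple[float, float]:
    """Fit log10(HEP) = a * SLI + b through two anchor tasks with assumed-known HEPs."""
    a = (math.log10(hep_a) - math.log10(hep_b)) / (sli_a - sli_b)
    b = math.log10(hep_a) - a * sli_a
    return a, b


def hep_from_sli(sli: float, a: float, b: float) -> float:
    return 10 ** (a * sli + b)


# Hypothetical relative importance of each PIF, elicited from subject matter experts
weights = {"time pressure": 3, "procedures": 2, "training": 2, "interface design": 1}
# Hypothetical quality ratings for the task being assessed (1 = worst, 9 = best)
ratings = {"time pressure": 3, "procedures": 6, "training": 7, "interface design": 4}

sli = success_likelihood_index(weights, ratings)
# Hypothetical anchors: a very well supported task (SLI 9, HEP 1e-4)
# and a very poorly supported task (SLI 1, HEP 1e-1)
a, b = calibrate(9.0, 1e-4, 1.0, 1e-1)
print(f"SLI = {sli:.2f}, HEP = {hep_from_sli(sli, a, b):.1e}")
```

Note that every HEP derived this way inherits the anchor values; changing either anchor shifts all results, which is why the assumptions behind them need to be recorded.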

Despite well-known issues with their application, and more recent efforts to develop techniques that address these issues, techniques such as THERP and HEART are still the most widely used.
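Since HEART is one of the techniques named above, its core calculation is sketched below for reference. In HEART, a generic task type supplies a nominal HEP; each applicable error-producing condition (EPC) has a maximum multiplier, which the assessor scales by an assessed proportion of affect (APOA) between 0 and 1; and the assessed HEP is the nominal HEP multiplied by ((EPC multiplier - 1) x APOA + 1) for each condition. The nominal HEP, multipliers and APOA values below are hypothetical placeholders, not values from the published HEART tables, and the cap at 1.0 is a guard added for this sketch.

```python
from dataclasses import dataclass
from math import prod


@dataclass
class ErrorProducingCondition:
    name: str
    max_multiplier: float  # in a real assessment, taken from the HEART EPC table
    apoa: float            # assessed proportion of affect, 0..1 (analyst judgement)

    def effect(self) -> float:
        if not 0.0 <= self.apoa <= 1.0:
            raise ValueError(f"APOA for {self.name!r} must be between 0 and 1")
        return (self.max_multiplier - 1.0) * self.apoa + 1.0


def heart_hep(nominal_hep: float, epcs: list[ErrorProducingCondition]) -> float:
    """Assessed HEP = nominal HEP x product of scaled EPC effects, capped at 1.0."""
    return min(1.0, nominal_hep * prod(e.effect() for e in epcs))


# Hypothetical inputs for illustration only
nominal = 0.003
conditions = [
    ErrorProducingCondition("time pressure", max_multiplier=11.0, apoa=0.4),
    ErrorProducingCondition("inexperience", max_multiplier=3.0, apoa=0.5),
]
print(f"assessed HEP = {heart_hep(nominal, conditions):.3f}")
```

The APOA values are exactly the kind of expert judgement that the guidance asks analysts to document and justify.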

Whilst the quantification of HEPs may be problematic, the importance of the human contribution to overall system risk cannot be overstated. For example, the bow-tie diagram in Figure 1 shows how different human failures can affect the initiation (left-hand side), and the mitigation and escalation (right-hand side), of a hypothetical event.

Practical issues

The EI guidance² provides an overview of some of the most important factors that can undermine the validity of an HRA. These include:

Expert judgement

Every HRA technique requires some degree of expert judgement in deciding which factors influence the likelihood of error in the situation being assessed, and whether these are adequately addressed in the quantification technique. A well-developed understanding of the task and operating environment is therefore essential, and any HRA report must include a documented record of all assumptions made during the analysis. In particular, this record must justify any HEPs that have been imported from an external source such as a database. When interpreting the results, it may also be useful to demonstrate how changes to these assumptions would affect the final outcome.
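One way to combine a documented record of assumptions with a demonstration of their impact is a simple one-at-a-time sensitivity check, sketched below. It assumes the outcome of interest can be written as a product of assumed quantities (as in a LOPA-style overfill calculation); each assumption is recorded with its justification and a plausible low and high value, and each is varied alone while the others stay at their base values. The `Assumption` class, the outcome model and all numbers are hypothetical.

```python
from dataclasses import dataclass
from math import prod


@dataclass
class Assumption:
    name: str
    base: float
    low: float
    high: float
    justification: str  # documented source or reasoning for the base value


def outcome(values: dict[str, float]) -> float:
    """Hypothetical outcome model: event frequency as the product of all inputs."""
    return prod(values.values())


def one_at_a_time(register: list[Assumption]) -> list[tuple[str, float, float]]:
    """Vary each assumption alone between its low and high value, others held at base.

    Returns (name, outcome_low, outcome_high) tuples, widest swing first.
    """
    base = {a.name: a.base for a in register}
    rows = []
    for a in register:
        low_out = outcome({**base, a.name: a.low})
        high_out = outcome({**base, a.name: a.high})
        rows.append((a.name, low_out, high_out))
    return sorted(rows, key=lambda row: row[2] - row[1], reverse=True)


register = [
    Assumption("initiating frequency (/yr)", 0.1, 0.05, 0.2, "site records (hypothetical)"),
    Assumption("operator fails to respond", 0.01, 0.001, 0.1, "generic table value; context not assessed"),
    Assumption("trip fails on demand", 0.01, 0.005, 0.05, "proof-test history (hypothetical)"),
]

for name, lo, hi in one_at_a_time(register):
    print(f"{name:<28} {lo:.1e} to {hi:.1e} per year")
```

With these placeholder ranges the imported HEP dominates the spread of outcomes, which is the situation in which its justification matters most.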

Impact of task context upon HEPs

As discussed previously, human performance is highly dependent upon prevailing task conditions. For example, a simple task performed under optimal laboratory conditions might have a failure probability of 0.0001 (ie once in 10,000 times). However, this probability could easily rise to 0.1 (ie once in 10 times) if the person is subject to PIFs such as high levels of stress or distractions. Very few HEP databases specify the contextual factors that were present when the data were collected. Instead, the usual approach has been to take data from other sources, such as laboratory experiments, and modify these HEPs to suit specific situations.

Commentary: Identifying failures

Using a set of guidewords to identify potential deviations is a common approach to this stage of the analysis. However, this can be a time-consuming and potentially complex process. The following steps can reduce this complexity and simplify the subsequent modelling stage:

- Be clear about the consequences of identified failures. If the outcomes of concern are specified at the project preparation stage, some failures will lead to consequences that can be excluded (eg production and occupational safety issues).
- Document opportunities for recovery of the failure before consequences are realised (eg planned checks).
- Identify existing control measures designed to prevent or mitigate the consequences of the identified failures.
- Group failures with similar outcomes together. For example, not doing something at all may be equivalent, in terms of outcome, to doing it too late. Care is needed here, however: whilst the outcomes may be the same, the reasons for the failures may differ.

Table 2: Example of commentary from the guidance (Step 3: Failure identification)
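The scoping and grouping steps in the Table 2 commentary can be illustrated with a small data-handling sketch. It assumes each identified failure has been recorded with its consequence, its cause and any recovery opportunity; failures whose consequences lie outside the outcomes of concern are set aside, and the rest are grouped by consequence while each record, and therefore each distinct cause, is retained. The `Failure` record, the function name and the example failures are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Failure:
    description: str
    consequence: str
    cause: str
    recovery: Optional[str] = None  # eg a planned check that could catch the failure


def scope_and_group(failures, outcomes_of_concern):
    """Set aside out-of-scope failures, then group the rest by consequence.

    Every failure record is kept, so different causes behind the same outcome stay visible.
    """
    excluded = [f for f in failures if f.consequence not in outcomes_of_concern]
    groups = defaultdict(list)
    for f in failures:
        if f.consequence in outcomes_of_concern:
            groups[f.consequence].append(f)
    return dict(groups), excluded


failures = [
    Failure("Inlet valve not closed", "tank overfill", "alarm not heard in noisy area"),
    Failure("Inlet valve closed too late", "tank overfill", "distracted by concurrent task",
            recovery="planned level check by supervisor"),
    Failure("Pump speed set incorrectly", "production loss", "unclear set-point in procedure"),
]

groups, excluded = scope_and_group(failures, outcomes_of_concern={"tank overfill"})
for consequence, members in groups.items():
    print(consequence)
    for f in members:
        note = f" (recovery: {f.recovery})" if f.recovery else ""
        print(f"  - {f.description}: {f.cause}{note}")
print("excluded:", [f.description for f in excluded])
```

Grouping "not closed" with "closed too late" simplifies the model, while keeping both records preserves the different causes that any improvement measures would need to address.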

Sources of data in HRA techniques

Depending on the HRA technique used, it can be difficult to establish the exact source of the base HEP data. It might come from operating experience, experimental research, simulator studies, expert judgement, or some combination of these sources. This affects the analyst's ability to judge how relevant the data source is to the situation under consideration.

Checklist 3: Reviewing HRA outputs

| Item | Question | Guidance section | Yes/No |
|---|---|---|---|
| 3.1 | Was a task analysis developed? | Step 2: Task analysis | |
| 3.2 | Did the development of the task analysis involve task experts (ie people with experience of the task)? | Step 2: Task analysis | |
| 3.3 | Did the task analysis involve a walkthrough of the operating environment? | Step 2: Task analysis | |
| 3.4 | Is there a record of the inputs to the task analysis (eg operator experience, operating instructions, piping and instrumentation diagrams, work control documentation)? | Step 2: Task analysis | |
| 3.5 | Was a formal identification process used to identify all important failures? | Step 3: Failure identification | |
| 3.6 | Does the analysis represent all obvious errors in the scenario, or explain why the analysis has been limited to a subset of possible failures? | Step 3: Failure identification | |

Table 3: Extract from Checklist 3: Reviewing HRA outputs

Qualitative modelling

Some HRA techniques, in addition to HEP estimation, provide the opportunity to consider and model the impact of PIFs upon safety critical tasks. This means that, whilst the generated HEP may be treated with scepticism, the analysis provides useful insights into the factors affecting task performance and how these can be improved. For example, factors such as the quality of communication and equipment layout may be identified as the PIFs with the greatest influence over the HEP; these factors can then be prioritised when deciding where resources should be applied to improve reliability.

Whilst in practice it may be difficult to establish the absolute probability of failure, an analyst can use appropriate HRA techniques to establish which factors have the greatest relative impact on the probability of failure.
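The point about relative impact can be illustrated with a SLIM-style sketch. Assuming, as in SLIM, that log10(HEP) is a decreasing linear function of a weighted sum of PIF ratings, the factor whose improvement would most reduce the HEP is the one with the largest product of normalised weight and rating shortfall (best possible rating minus current rating). Because this ranking does not depend on the calibration constants, it can be obtained even when the absolute HEP is uncertain. The 1 to 9 scale, the function name, the weights and the ratings are hypothetical.

```python
def improvement_potential(weights: dict[str, float], ratings: dict[str, float],
                          best: float = 9.0) -> list[tuple[str, float]]:
    """Rank PIFs by the SLI gain if that factor alone were raised to the best rating.

    With log10(HEP) = a * SLI + b and a < 0, a larger SLI gain always means a larger
    HEP reduction, whatever the values of a and b.
    """
    total = sum(weights.values())
    gains = {pif: (w / total) * (best - ratings[pif]) for pif, w in weights.items()}
    return sorted(gains.items(), key=lambda item: item[1], reverse=True)


# Hypothetical weights and current quality ratings (1 = worst, 9 = best)
weights = {"communication": 3, "equipment layout": 3, "procedures": 2, "training": 2}
ratings = {"communication": 3, "equipment layout": 4, "procedures": 7, "training": 8}

for pif, gain in improvement_potential(weights, ratings):
    print(f"{pif:<18} SLI gain {gain:.2f}")
```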

Guidance structure

The guidance that Human Reliability Associates has developed for the EI² takes a generic HRA process as its starting point (see Table 1). Each stage is described, alongside a discussion of relevant potential pitfalls and commentaries on important practical considerations. For example, Table 2 addresses issues related to the failure identification stage of the process.


In addition, to support organisations commissioning or reviewing HRAs, three checklists are provided:

- Checklist 1: Deciding whether to undertake HRA.
- Checklist 2: Preparing to undertake HRA.
- Checklist 3: Reviewing HRA outputs.

The checklist items are related to the stages of the HRA process set out in Table 1. A short, illustrative extract from Checklist 3 is provided in Table 3.

The guidance also includes full worked examples, along with associated commentary related to the checklist items, to further illustrate common issues with HRAs.

The use of HEPs and associated HRA techniques is a difficult area. The aim of the EI guidance is to better equip non-specialists thinking of undertaking, or charged with commissioning, HRAs. In many cases, a qualitative HRA may be more appropriate than a quantitative analysis. Anyone proposing an analysis should be mindful of the pitfalls set out in the guidance. Moreover, the limitations of the outputs should be clearly communicated to the final user of the analysis.

References

1. Health & Safety Executive, Research Report RR716: A review of Layers of Protection Analysis (LOPA) analyses of overfill of fuel storage tanks, HSE Books, 2009.
2. Energy Institute, Guidance on quantified human reliability analysis (QHRA), 2012.
3. Energy Institute, Guidance on human factors safety critical task analysis, 2011. www.energyinst.org/scta
4. Health & Safety Executive, Research Report RR679: Review of human reliability assessment methods, HSE Books, 2009.
5. Embrey, D E, Human reliability assessment, in Human factors for engineers, Sandom, C and Harvey, R S (eds), ISBN 0 86341 329 3, Institution of Electrical Engineers Publishing, London, 2004.
6. Kirwan, B, A guide to practical human reliability assessment, Taylor & Francis, London, 1994.

Guidance on quantified human reliability analysis (QHRA), ISBN 978 0 85293 635 1, September 2012, is freely available from www.energyinst.org/qhra

PETROLEUM REVIEW NOVEMBER 2012

Vous aimerez peut-être aussi

- Habit 1 Be ProactiveDocument17 pagesHabit 1 Be ProactiveEqraChaudhary100% (7)

- Psychoanalytic Theory byDocument43 pagesPsychoanalytic Theory byjoy millano100% (1)

- Via Character StrengthsDocument2 pagesVia Character Strengthsapi-293644621Pas encore d'évaluation

- Human Error Identification in Human Reliability Assessment - Part 1 - Overview of Approaches PDFDocument20 pagesHuman Error Identification in Human Reliability Assessment - Part 1 - Overview of Approaches PDFalkmindPas encore d'évaluation

- Fatigue Management Guide Airline OperatorsDocument167 pagesFatigue Management Guide Airline OperatorsTirumal raoPas encore d'évaluation

- Chapter 5 - Developmental Psychology HomeworkDocument18 pagesChapter 5 - Developmental Psychology HomeworkHuey Cardeño0% (1)

- A-Systems-Approach-to-Failure-Modes-v1 Paper Good For Functions and Failure MechanismDocument19 pagesA-Systems-Approach-to-Failure-Modes-v1 Paper Good For Functions and Failure Mechanismkhmorteza100% (1)

- PsychoanalysisDocument21 pagesPsychoanalysisJosie DiolataPas encore d'évaluation

- The Philosophical Perspective of The SelfDocument28 pagesThe Philosophical Perspective of The Selfave sambrana71% (7)

- Leadership and Management QuestionnairesDocument13 pagesLeadership and Management QuestionnairesThu Hien PhamPas encore d'évaluation

- Human Factors in LOPADocument26 pagesHuman Factors in LOPASaqib NazirPas encore d'évaluation

- DLL Shs - EappDocument70 pagesDLL Shs - EappGenny Erum Zarate0% (1)

- Build a Pool of Competent InstructorsDocument2 pagesBuild a Pool of Competent InstructorstrixiegariasPas encore d'évaluation

- Character Is DestinyDocument3 pagesCharacter Is DestinyMian Fahad0% (1)

- Andy Clark and His Critics - Colombo, Irvine - Stapleton (Eds.) (2019, Oxford University Press) PDFDocument323 pagesAndy Clark and His Critics - Colombo, Irvine - Stapleton (Eds.) (2019, Oxford University Press) PDFXehukPas encore d'évaluation

- Sample of Training Directory - TemplateDocument3 pagesSample of Training Directory - TemplateFieza PeeJaPas encore d'évaluation

- Selection of Hazard Evaluation Techniques PDFDocument16 pagesSelection of Hazard Evaluation Techniques PDFdediodedPas encore d'évaluation

- Hazard Identification Techniques in IndustryDocument4 pagesHazard Identification Techniques in IndustrylennyPas encore d'évaluation

- Risk Analysis & ContengencyDocument8 pagesRisk Analysis & ContengencymanikantanPas encore d'évaluation

- Risk Assessment MethodsDocument24 pagesRisk Assessment MethodsXie ShjPas encore d'évaluation

- Addressing Human Errors in Process Hazard AnalysesDocument19 pagesAddressing Human Errors in Process Hazard AnalysesAnil Deonarine100% (1)

- JIG Bulletin 74 Fuelling Vehicle Soak Testing Procedure During Commissioning Feb 2015Document2 pagesJIG Bulletin 74 Fuelling Vehicle Soak Testing Procedure During Commissioning Feb 2015Tirumal raoPas encore d'évaluation

- 42R-08 Risk Analysis and Contingency Determination Using Parametric EstimatingDocument9 pages42R-08 Risk Analysis and Contingency Determination Using Parametric EstimatingDody BdgPas encore d'évaluation

- Gas and Oil Reliability Engineering: Modeling and AnalysisD'EverandGas and Oil Reliability Engineering: Modeling and AnalysisÉvaluation : 4.5 sur 5 étoiles4.5/5 (6)

- Preliminary Hazard Analysis (PHA)Document8 pagesPreliminary Hazard Analysis (PHA)dPas encore d'évaluation

- Improved Integration of LOPA With HAZOP Analyses: Dick Baum, Nancy Faulk, and P.E. John Pe RezDocument4 pagesImproved Integration of LOPA With HAZOP Analyses: Dick Baum, Nancy Faulk, and P.E. John Pe RezJéssica LimaPas encore d'évaluation

- Def Stan 91-91 Issue 7Document34 pagesDef Stan 91-91 Issue 7asrahmanPas encore d'évaluation

- Finelli On ArthurDocument14 pagesFinelli On ArthurthornistPas encore d'évaluation

- Mastering Opportunities and Risks in IT Projects: Identifying, anticipating and controlling opportunities and risks: A model for effective management in IT development and operationD'EverandMastering Opportunities and Risks in IT Projects: Identifying, anticipating and controlling opportunities and risks: A model for effective management in IT development and operationPas encore d'évaluation

- Imperio EsmeraldaDocument303 pagesImperio EsmeraldaDiego100% (3)

- Phoenix - A Model-Based Human Reliability AnalysisDocument15 pagesPhoenix - A Model-Based Human Reliability AnalysisstevePas encore d'évaluation

- 2.7. The Sociotechnical PerspectiveDocument17 pages2.7. The Sociotechnical PerspectiveMa NpaPas encore d'évaluation

- Usage of Human Reliability Quantification Methods: Miroljub GrozdanovicDocument7 pagesUsage of Human Reliability Quantification Methods: Miroljub GrozdanovicCSEngineerPas encore d'évaluation

- Analyzing human error and accident causationDocument17 pagesAnalyzing human error and accident causationVeera RagavanPas encore d'évaluation

- Assessing Human Error Probability with HEARTDocument6 pagesAssessing Human Error Probability with HEARTAnnisa MaulidyaPas encore d'évaluation

- Evaluating Human Error in Industrial Control RoomsDocument5 pagesEvaluating Human Error in Industrial Control RoomsJesús SarriaPas encore d'évaluation

- Rio Pipeline Conference Presentation v5Document20 pagesRio Pipeline Conference Presentation v5Sara ZaedPas encore d'évaluation

- Human Error Assessment and Reduction TechniqueDocument4 pagesHuman Error Assessment and Reduction TechniqueJUNIOR OLIVOPas encore d'évaluation

- Iranian Journal of Fuzzy Systems Vol. 15, No. 1, (2018) Pp. 139-161 139Document23 pagesIranian Journal of Fuzzy Systems Vol. 15, No. 1, (2018) Pp. 139-161 139Mia AmaliaPas encore d'évaluation

- Efficient PHA of Non-Continuous Operating ModesDocument25 pagesEfficient PHA of Non-Continuous Operating ModesShakirPas encore d'évaluation

- Application of Heart Technique For Human Reliability Assessment - A Serbian ExperienceDocument10 pagesApplication of Heart Technique For Human Reliability Assessment - A Serbian ExperienceAnonymous imiMwtPas encore d'évaluation

- FMEADocument14 pagesFMEAAndreea FeltAccessoriesPas encore d'évaluation

- Risk Cost Assess.00Document19 pagesRisk Cost Assess.00johnny_cashedPas encore d'évaluation

- Confiabilidade Humana em Sistemas Homem-MáquinaDocument8 pagesConfiabilidade Humana em Sistemas Homem-MáquinaJonas SouzaPas encore d'évaluation

- Issues in Benchmarking Human Reliability Analysis Methods: A Literature ReviewDocument4 pagesIssues in Benchmarking Human Reliability Analysis Methods: A Literature ReviewSiva RamPas encore d'évaluation

- Industrial Process Hazard Analysis: What Is It and How Do I Do It?Document5 pagesIndustrial Process Hazard Analysis: What Is It and How Do I Do It?Heri IsmantoPas encore d'évaluation

- Sms Tools& Analysis MethodDocument27 pagesSms Tools& Analysis Methodsaif ur rehman shahid hussain (aviator)Pas encore d'évaluation

- Human Reliability Assessment On RTG Operational Activities in Container Service Companies Based On Sherpa and Heart MethodsDocument7 pagesHuman Reliability Assessment On RTG Operational Activities in Container Service Companies Based On Sherpa and Heart MethodsInternational Journal of Innovative Science and Research TechnologyPas encore d'évaluation

- SherpaDocument17 pagesSherpaShivani HubliPas encore d'évaluation

- Evaluation Human Errorin Control RoomDocument6 pagesEvaluation Human Errorin Control RoomJesús SarriaPas encore d'évaluation

- Failure Mode and Effects Analysis Considering Consensus and Preferences InterdependenceDocument24 pagesFailure Mode and Effects Analysis Considering Consensus and Preferences InterdependenceMia AmaliaPas encore d'évaluation

- Utilizing Criteria For Assessing Multiple-Task Manual Materials Handling JobsDocument12 pagesUtilizing Criteria For Assessing Multiple-Task Manual Materials Handling JobsdessynurvitariniPas encore d'évaluation

- Zarei Et Al-2016-ProcessDocument8 pagesZarei Et Al-2016-ProcessIntrépido BufonPas encore d'évaluation

- Chen JK. 2007. Utility Priority Number Evaluation For FMEADocument8 pagesChen JK. 2007. Utility Priority Number Evaluation For FMEAAlwi SalamPas encore d'évaluation

- Tree Level of Causes Fmea Dan RcaDocument8 pagesTree Level of Causes Fmea Dan RcaWahyu Radityo UtomoPas encore d'évaluation

- 1 s2.0 S0169814118302737 MainDocument19 pages1 s2.0 S0169814118302737 Mainashish kumarPas encore d'évaluation

- TRIZ Method by Genrich AltshullerDocument5 pagesTRIZ Method by Genrich AltshullerAnonymous JyrLl3RPas encore d'évaluation

- Failure Mode Effect Analysis and Fault Tree Analysis As A Combined Methodology in Risk ManagementDocument12 pagesFailure Mode Effect Analysis and Fault Tree Analysis As A Combined Methodology in Risk ManagementandrianioktafPas encore d'évaluation

- Dynamic Human Reliability Analysis Benefits and Challenges of Simulating Human PerformanceDocument8 pagesDynamic Human Reliability Analysis Benefits and Challenges of Simulating Human PerformancepxmPas encore d'évaluation

- ANALYZEDocument6 pagesANALYZEpranitsadhuPas encore d'évaluation

- Process Fault Diagnosis - AAMDocument61 pagesProcess Fault Diagnosis - AAMsaynapogado18Pas encore d'évaluation

- AHP-GP model allocates auditing timeDocument18 pagesAHP-GP model allocates auditing timeMarco AraújoPas encore d'évaluation

- 5 - DATA FOR RISK ANALYSIS AND HAZARD IDENTIFY - Muh. Iqran Al MuktadirDocument13 pages5 - DATA FOR RISK ANALYSIS AND HAZARD IDENTIFY - Muh. Iqran Al MuktadirLala CheesePas encore d'évaluation

- Fuzzy logic risk assessment model for HSE in oil and gasDocument17 pagesFuzzy logic risk assessment model for HSE in oil and gasUmair SarwarPas encore d'évaluation

- Application of Fmea-Fta in Reliability-Centered Maintenance PlanningDocument12 pagesApplication of Fmea-Fta in Reliability-Centered Maintenance PlanningcuongPas encore d'évaluation

- Beyond FMEA The Structured What-If Techn PDFDocument13 pagesBeyond FMEA The Structured What-If Techn PDFDaniel88036Pas encore d'évaluation

- 5 - HazopDocument23 pages5 - HazopMuhammad Raditya Adjie PratamaPas encore d'évaluation

- Process Hazards Analysis MethodsDocument1 pageProcess Hazards Analysis MethodsRobert MontoyaPas encore d'évaluation

- An Ontology To Support Semantic Management of FMEADocument16 pagesAn Ontology To Support Semantic Management of FMEAcsvspcal143Pas encore d'évaluation

- Comprehensive fuzzy FMEA model for ERP risksDocument32 pagesComprehensive fuzzy FMEA model for ERP risksIbtasamLatifPas encore d'évaluation

- Understanding The TaskDocument6 pagesUnderstanding The TaskMohamedPas encore d'évaluation

- Fuzzy FMEA for Reach Stacker CranesDocument13 pagesFuzzy FMEA for Reach Stacker CranesEva WatiPas encore d'évaluation

- Study On The Main Factors Influencing HumanDocument8 pagesStudy On The Main Factors Influencing HumanDoru ToaderPas encore d'évaluation

- Optimization Under Stochastic Uncertainty: Methods, Control and Random Search MethodsD'EverandOptimization Under Stochastic Uncertainty: Methods, Control and Random Search MethodsPas encore d'évaluation

- Report On Indian AviationDocument72 pagesReport On Indian AviationTirumal raoPas encore d'évaluation

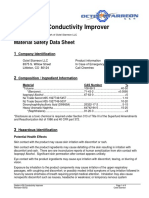

- MSDS of Stadis-450 PDFDocument8 pagesMSDS of Stadis-450 PDFTirumal raoPas encore d'évaluation

- JIG Bulletin 65Document3 pagesJIG Bulletin 65Tirumal raoPas encore d'évaluation

- Oisd STD 235Document110 pagesOisd STD 235naved ahmed100% (5)

- Synthesis Report of Four Working Groups On Road Safety - 2916469697Document87 pagesSynthesis Report of Four Working Groups On Road Safety - 2916469697Tirumal raoPas encore d'évaluation

- ATSB Take-Off Performance Calculation and Entry ErrorsDocument100 pagesATSB Take-Off Performance Calculation and Entry ErrorsTirumal raoPas encore d'évaluation

- Wine - EnG 2100-Essay 2-Hadassah McGillDocument9 pagesWine - EnG 2100-Essay 2-Hadassah McGillHadassah Lola McGillPas encore d'évaluation

- Lawrence Persons CyberpunkDocument9 pagesLawrence Persons CyberpunkFrancis AdamePas encore d'évaluation

- Introduction To PshycholinguisticsDocument3 pagesIntroduction To PshycholinguisticsamirahalimaPas encore d'évaluation

- GA2-W8-S12-R2 RevisedDocument4 pagesGA2-W8-S12-R2 RevisedBina IzzatiyaPas encore d'évaluation

- Comparing Catherine's reactions to her time at LJI and FenwayDocument2 pagesComparing Catherine's reactions to her time at LJI and FenwayKarl RebuenoPas encore d'évaluation

- Content: Presentation of Mary School of Clarin, Inc Table of Specifications Final Examination in Oral CommunicationDocument6 pagesContent: Presentation of Mary School of Clarin, Inc Table of Specifications Final Examination in Oral CommunicationJoel Opciar CuribPas encore d'évaluation

- Definition of Physical Educated PeopleDocument5 pagesDefinition of Physical Educated PeopleJzarriane JaramillaPas encore d'évaluation

- Communication Training ProgramDocument25 pagesCommunication Training Programchetan bhatiaPas encore d'évaluation

- Nietzsche's PsychologyDocument18 pagesNietzsche's PsychologyOliviu BraicauPas encore d'évaluation

- Core Behavioral CompetenciesDocument2 pagesCore Behavioral CompetenciesYeah, CoolPas encore d'évaluation

- Discuss The Ways in Which Thomas Presents Death in Lights Out'Document2 pagesDiscuss The Ways in Which Thomas Presents Death in Lights Out'sarahPas encore d'évaluation

- Sanchez, Nino Mike Angelo M. Thesis 2Document33 pagesSanchez, Nino Mike Angelo M. Thesis 2Niño Mike Angelo Sanchez100% (1)

- Chapter 6 ReflectionDocument2 pagesChapter 6 ReflectionAndrei Bustillos SevillenoPas encore d'évaluation

- Individual Adlerian Psychology CounselingDocument5 pagesIndividual Adlerian Psychology CounselinghopeIshanzaPas encore d'évaluation

- Resume: Shiv Shankar UpadhyayDocument2 pagesResume: Shiv Shankar Upadhyayvishal sharmaPas encore d'évaluation

- AntagningsstatistikDocument1 pageAntagningsstatistikMahmud SelimPas encore d'évaluation