EC2029-Digital Image Processing Two Marks Questions and Answers - New PDF

Uploaded by Nathan Bose Thiruvaimalar Nathan

EC2029- Digital Image Processing

VII Semester ECE

UNIT I

DIGITAL IMAGE FUNDAMENTALS

1.1. Define Image.

An image may be defined as a two-dimensional function f(x,y), where x and y are spatial (plane) coordinates, and the amplitude of f at any pair of coordinates (x,y) is called the intensity or gray level of the image at that point. When x, y and the amplitude values of f are all finite, discrete quantities, we call the image a digital image.

1.2. Define Image Sampling.

Digitization of spatial coordinates (x,y) is called Image Sampling. To be suitable for

computer processing, an image function f(x,y) must be digitized both spatially and in

magnitude.

1.3. Define Quantization.

Digitizing the amplitude values is called Quantization. The quality of a digital image is determined to a large degree by the number of samples and discrete gray levels used in sampling and quantization.

1.4. What is Dynamic Range?

The range of values spanned by the gray scale is called the dynamic range of an image. An image will have high contrast if the dynamic range is high, and a dull, washed-out gray look if the dynamic range is low.

1.5. Define Mach band effect.

The spatial interaction of Luminance from an object and its surround creates a

phenomenon called the mach band effect.

1.6. Define Brightness.

Brightness of an object is its perceived luminance, which depends on the luminance of the surround. Two objects with different surroundings could have identical luminance but different brightness.

1.7. What is meant by Tapered Quantization?

If gray levels in a certain range occur frequently while others occur rarely, the quantization levels are finely spaced in this range and coarsely spaced outside of it. This method is sometimes called Tapered Quantization.

1.8. What do you mean by Gray level?

Gray level refers to a scalar measure of intensity, that ranges from black to grays and

finally to white.

Prepared by A.Devasena., Associate Professor., Dept/ECE


1.9. What do you mean by Color model?

A color model is a specification of a 3D coordinate system and a subspace within that system where each color is represented by a single point.

1.10. List the hardware oriented color models.

The hardware oriented color models are as follows,

i. RGB model

ii. CMY model

iii. YIQ model

iv. HSI model

1.11. What is Hue and saturation?

Hue is a color attribute that describes a pure color, whereas saturation gives a measure of the degree to which a pure color is diluted by white light.

1.12. List the applications of color models.

The applications of color models are,

i. RGB model: used for color monitors and color video cameras

ii. CMY model: used for color printing

iii. HSI model: used for color image processing

iv. YIQ model: used for color picture transmission

1.13. What is Chromatic Adaptation?

The hue of a perceived color depends on the adaptation of the viewer. For example, the American flag will not immediately appear red, white, and blue if the viewer has been subjected to high intensity red light before viewing the flag. The color of the flag will appear to shift in hue toward the red complement, cyan.

1.14. Define Resolutions.

Resolution is defined as the smallest discernible detail in an image. Spatial resolution is the smallest discernible spatial detail in an image, and gray level resolution refers to the smallest discernible change in gray level.

1.15. Write the M X N digital image in compact matrix form?

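$$
f(x,y) = \begin{bmatrix}
f(0,0) & f(0,1) & \cdots & f(0,N-1) \\
f(1,0) & f(1,1) & \cdots & f(1,N-1) \\
\vdots & \vdots & & \vdots \\
f(M-1,0) & f(M-1,1) & \cdots & f(M-1,N-1)
\end{bmatrix}
$$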


1.16. Write the expression to find the number of bits to store a digital image?

The number of bits required to store a digital image is

b=M X N X k

When M=N, this equation becomes

b=N^2k
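As a small worked example of the formula above, the sketch below evaluates b = M x N x k. It assumes, as is standard but not stated in these notes, that k is the number of bits per pixel, so the image has 2^k gray levels. The function name is illustrative only.

```python
def image_storage_bits(M: int, N: int, k: int) -> int:
    """Return b = M * N * k for an M x N image with k bits per pixel (assumed meaning of k)."""
    return M * N * k


# A 1024 x 1024 image with 8 bits per pixel (256 gray levels).
bits = image_storage_bits(1024, 1024, 8)
print(bits, "bits =", bits // 8, "bytes")
```

For a square image (M = N) this reduces to N^2 k, matching the second expression.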

1.17. What is meant by pixel?

A digital image is composed of a finite number of elements, each of which has a particular location and value. These elements are referred to as pixels, image elements, picture elements or pels.

1.18. Define digital image.

A digital image is an image f(x,y), that has been discretized both in spatial coordinates

and brightness.

1.19. List the steps involved in digital image processing.

The steps involved in digital image processing are,

i.Image Acquisition.

ii.Preprocessing.

iii.Segmentation.

iv.Representation and description.

v.Recognition and interpretation.

1.20. What is recognition and interpretation?

Recognition is a process that assigns a label to an object based on the information provided by its descriptors. Interpretation means assigning meaning to a recognized object.

1.21. Specify the elements of DIP system.

The elements of DIP system are,

i.Image acquisition.

ii.Storage.

iii.Processing.

iv.Communication.

v.Display.

1.22. List the categories of digital storage.

The categories of digital storage are,

i.Short term storage for use during processing.

ii.Online storage for relatively fast recall.

iii. Archival storage, characterized by infrequent access.

1.23. Write the two types of light receptors.

The two types of light receptors are,

i.Cones.

ii.Rods.


1.24. Differentiate photopic and scotopic vision.

Photopic vision: The human eye can resolve fine details with the cones because each cone is connected to its own nerve end. This is also known as bright-light vision.

Scotopic vision: Several rods are connected to one nerve end, so the rods give only an overall picture of the image. This is also known as dim-light vision.

1.25. How are cones and rods distributed in the retina?

In each eye, cones are in the range 6-7 million, and rods are in the range 75-150

million.

1.26. Define subjective brightness and adaptation.

Subjective brightness means intensity as perceived by the human visual system. Brightness adaptation means the human visual system can operate over the range from the scotopic threshold to the glare limit, but it cannot operate over the whole range simultaneously. It accomplishes this large variation by changes in its overall sensitivity.

1.27. Define Weber ratio.

The ratio of the increment of illumination to the background illumination is known as the Weber ratio, i.e. (ΔIc / I).

If the ratio (ΔIc / I) is small, then a small percentage change in intensity is discriminable, i.e. good brightness discrimination.

If the ratio (ΔIc / I) is large, then a large percentage change in intensity is needed, i.e. poor brightness discrimination.

1.28. What is Mach band effect?

Although the intensity of each stripe is constant, the visual system perceives a brightness pattern that is strongly scalloped near the boundaries between stripes. These seemingly scalloped bands are called Mach bands, and the phenomenon is called the Mach band effect.

1.29. What is simultaneous contrast?

A region's perceived brightness does not depend only on its intensity but also on its background. In the standard demonstration, all the centre squares have exactly the same intensity; however, they appear to the eye to become darker as the background becomes lighter.

1.30. What do you mean by Zooming of digital images?

Zooming may be viewed as over sampling. It involves the creation of new pixel

locations and the assignment of gray levels to those new locations.

1.31. What do you mean by shrinking of digital images?

Shrinking may be viewed as under sampling. To shrink an image by one half, we delete every other row and column. To reduce possible aliasing effects, it is a good idea to blur an image slightly before shrinking it.
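Zooming and shrinking can be illustrated with a minimal NumPy sketch. It assumes zooming by pixel replication (the simplest way to assign gray levels to the new pixel locations) and shrinking by keeping every second row and column; the function names are illustrative, and the blurring step recommended before shrinking is omitted for brevity.

```python
import numpy as np


def zoom_replicate(img: np.ndarray, factor: int) -> np.ndarray:
    """Zoom by pixel replication: each pixel becomes a factor x factor block."""
    return np.repeat(np.repeat(img, factor, axis=0), factor, axis=1)


def shrink_half(img: np.ndarray) -> np.ndarray:
    """Shrink by one half by deleting every other row and column."""
    return img[::2, ::2]


img = np.array([[10, 20],
                [30, 40]], dtype=np.uint8)
zoomed = zoom_replicate(img, 2)   # 4 x 4 image
restored = shrink_half(zoomed)    # back to 2 x 2
```

Shrinking the replicated image recovers the original here only because replication adds no new information; in general shrinking discards detail.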

1.32. Define the term Radiance.

Radiance is the total amount of energy that flows from the light source, and it is usually measured in watts (W).


1.33. Define the term Luminance.

Luminance, measured in lumens (lm), gives a measure of the amount of energy an observer perceives from a light source.

1.34. What is Image Transform?

An image can be expanded in terms of a discrete set of basis arrays called basis images. These basis images can be generated by unitary matrices. Alternatively, a given N x N image can be viewed as an N^2 x 1 vector. An image transform provides a set of coordinates or basis vectors for the vector space.

1.35. List the applications of transform.

The applications of transforms are,

i.To reduce band width

ii.To reduce redundancy

iii.To extract feature.

1.36. Give the Conditions for perfect transform.

The conditions of perfect transform are,

Transpose of matrix = Inverse of a matrix.

Orthogonality.

1.37. What are the properties of unitary transform?

The properties of unitary transform are,

i.Determinant and the Eigen values of a unitary matrix have unity magnitude

ii.The entropy of a random vector is preserved under a unitary Transformation

iii.Entropy is a measure of average information.

1.38. Write the expression of one-dimensional discrete Fourier transforms

The one-dimensional discrete Fourier transform pair is as follows.

Forward transform

The sequence x(n) is given by x(n) = {x0, x1, x2, ..., xN-1}.

$$X(k) = \sum_{n=0}^{N-1} x(n)\, e^{-j 2\pi nk/N}, \qquad k = 0, 1, 2, \ldots, N-1$$

Reverse transform

$$x(n) = \frac{1}{N} \sum_{k=0}^{N-1} X(k)\, e^{\,j 2\pi nk/N}, \qquad n = 0, 1, 2, \ldots, N-1$$
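The transform pair can be checked numerically with a direct matrix implementation. This is a sketch under the common convention in which the forward transform is unnormalized and the inverse carries the 1/N factor and a positive exponent (the same convention NumPy's FFT uses); the function names are illustrative.

```python
import numpy as np


def dft(x):
    """Forward DFT: X(k) = sum_n x(n) exp(-j 2 pi n k / N)."""
    x = np.asarray(x, dtype=complex)
    N = x.shape[0]
    n = np.arange(N)
    W = np.exp(-2j * np.pi * np.outer(n, n) / N)  # W[k, n] = e^{-j 2 pi n k / N}
    return W @ x


def idft(X):
    """Inverse DFT: x(n) = (1/N) sum_k X(k) exp(+j 2 pi n k / N)."""
    X = np.asarray(X, dtype=complex)
    N = X.shape[0]
    k = np.arange(N)
    W = np.exp(2j * np.pi * np.outer(k, k) / N)
    return (W @ X) / N


x = np.array([1.0, 2.0, 3.0, 4.0])
X = dft(x)
x_back = idft(X)  # equals x up to rounding error
```

The direct form costs on the order of N^2 multiplications; a fast Fourier transform computes the same result more cheaply.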

1.39. List the Properties of twiddle factor.

The properties of twiddle factor are,

i. Periodicity

WN^(K+N)= WN^K

ii. Symmetry

WN^(K+N/2)= -WN^K

1.40. Give the Properties of one-dimensional DFT.

The properties of one-dimensional DFT are,

i. The DFT and unitary DFT matrices are symmetric.

ii. The extensions of the DFT and unitary DFT of a sequence and their inverse transforms

are periodic with period N.

iii. The DFT or unitary DFT of a real sequence is conjugate symmetric about N/2.

1.41. Give the Properties of two-dimensional DFT.

The properties of two-dimensional DFT are,

i.Symmetric

ii.Periodic extensions

iii.Sampled Fourier transform

iv.Conjugate symmetry.

1.42. Write the properties of cosine transform:

The properties of cosine transform are as follows,

i.Real & orthogonal.

ii.Fast transform.

iii.Has excellent energy compaction for highly correlated data

1.43. Write the properties of Hadamard transform

The properties of hadamard transform are as follows,

i.Hadamard transform contains only the values +1 and -1.

ii.No multiplications are required in the transform calculations.

iii.The number of additions or subtractions required can be reduced from N^2 to about N log2 N

iv.Very good energy compaction for highly correlated images.

1.44. Define Haar transform.

The Haar functions are defined on a continuous interval x ∈ [0,1] and for k = 0, 1, ..., N-1, where N = 2^n. The integer k can be uniquely decomposed as k = 2^p + q - 1.

1.45. Write the properties of Haar transform.

The properties of Haar transform are,

i. Haar transform is real and orthogonal.

ii. Haar transform is a very fast transform

iii. Haar transform has very poor energy compaction for images

iv. The basis vectors of the Haar matrix are sequency ordered.

1.46.Write the Properties of Slant transform.

The properties of Slant transform are,

i.Slant transform is real and orthogonal.

ii.Slant transform is a fast transform

iii.Slant transform has very good energy compaction for images

iv.The basis vectors of the Slant matrix are not sequency ordered.


1.47. Define KL Transform.

The KL transform is optimal in the sense that it minimizes the mean square error between the vectors X and their approximations X^. This is achieved by using the eigenvectors corresponding to the largest eigenvalues. It is also known as the principal component transform.

1.48. Justify that KLT is an optimal transform.

KLT is optimal because the mean square error between the reconstructed image and the original image is minimum, and the transformed image has zero mean with uncorrelated coefficients.

1.49.Write any four applications of DIP.

The applications of DIP are,

i.Remote sensing

ii.Image transmission and storage for business application

iii.Medical imaging

iv.Astronomy

1.50.What is the effect of Mach band pattern?

In the perceived brightness pattern, a darker stripe appears in region D and a brighter stripe in region B. This effect is called the Mach band pattern or effect.


UNIT II

IMAGE ENHANCEMENT

2.1. What is Image Enhancement?

Image enhancement is the processing of an image so that the output is more suitable for a specific application.

2.2. Name the categories of Image Enhancement and explain?

The categories of Image Enhancement are,

i.Spatial domain

ii.Frequency domain

Spatial domain: It refers to the image plane and it is based on direct

manipulation of pixels of an image.

Frequency domain techniques are based on modifying the Fourier transform of an image.

2.3. What do you mean by Point processing?

When the enhancement at any point in an image depends only on the gray level at that point, the technique is referred to as point processing.

2.4.What is gray level slicing?

Highlighting a specific range of gray levels in an image is referred to as gray level

slicing. It is used in satellite imagery and x-ray images

2.5. What do you mean by Mask or Kernels.

A Mask is a small two-dimensional array, in which the value of the mask

coefficient determines the nature of the process, such as image sharpening.

2.6. What is Image Negative?

The negative of an image with gray levels in the range [0, L-1] is obtained by using the negative transformation, which is given by the expression

s = L - 1 - r, where s is the output pixel and r is the input pixel.
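The negative transformation is a one-line point operation. The sketch below assumes an 8-bit image (L = 256) by default; the function name is illustrative.

```python
import numpy as np


def negative(img: np.ndarray, L: int = 256) -> np.ndarray:
    """Apply s = L - 1 - r to every pixel, keeping the input dtype."""
    # Compute in a wide integer type so the subtraction cannot wrap around.
    return ((L - 1) - img.astype(np.int64)).astype(img.dtype)


row = np.array([0, 100, 255], dtype=np.uint8)
neg = negative(row)
```

Applying the transformation twice returns the original image, since L - 1 - (L - 1 - r) = r.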

2.7. Define Histogram.

The histogram of a digital image with gray levels in the range [0, L-1] is a discrete

function h (rk) = nk, where rk is the kth gray level and nk is the number of pixels in the image

having gray level rk.

2.8.What is histogram equalization

It is a technique used to obtain a uniform (flat) histogram. It is also known as histogram linearization. The condition for a uniform histogram is Ps(s) = 1.
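For a digital image, equalization is usually implemented by mapping each gray level through the scaled cumulative histogram. The sketch below assumes that discrete form, s_k = (L - 1) * sum of n_j / n for j up to k, with rounding to the nearest level; the function name and the tiny test image are illustrative.

```python
import numpy as np


def equalize(img: np.ndarray, L: int = 256) -> np.ndarray:
    """Histogram-equalize an integer image with gray levels in [0, L-1]."""
    hist = np.bincount(img.ravel(), minlength=L)  # h(r_k) = n_k
    cdf = np.cumsum(hist) / img.size              # cumulative distribution of gray levels
    mapping = np.round((L - 1) * cdf).astype(np.uint8)
    return mapping[img]                           # look up the new level of every pixel


# Low-contrast image: all gray levels squeezed into 100..103.
img = np.array([[100, 100, 101, 101],
                [102, 102, 103, 103]], dtype=np.uint8)
out = equalize(img)
```

Because the levels are discrete, the result is only approximately uniform, but the occupied gray levels are spread over the full range.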

2.9. What is contrast stretching?

Contrast stretching produces an image of higher contrast than the original, by darkening the levels below a chosen level m and brightening the levels above m in the image.


2.10. What is spatial filtering?

Spatial filtering is the process of moving the filter mask from point to point in an

image. For linear spatial filter, the response is given by a sum of products of the filter

coefficients, and the corresponding image pixels in the area spanned by the filter mask.

2.11. Define averaging filters.

The output of a smoothing, linear spatial filter is the average of the pixels contained in

the neighborhood of the filter mask. These filters are called averaging filters.

2.12. What is a Median filter?

The median filter replaces the value of a pixel by the median of the gray levels in the

neighborhood of that pixel.

2.13. What is maximum filter and minimum filter?

The 100th percentile filter is the maximum filter, which is used for finding the brightest points in an image.

The 0th percentile filter is the minimum filter, which is used for finding the darkest points in an image.
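The median, maximum and minimum filters are all order-statistic filters and differ only in which percentile of the neighborhood they keep. A minimal sketch, assuming a square neighborhood and border pixels replicated at the image edge (a choice these notes do not specify); the function name is illustrative.

```python
import numpy as np


def percentile_filter(img: np.ndarray, q: float, size: int = 3) -> np.ndarray:
    """Replace each pixel by the q-th percentile of its size x size neighborhood."""
    pad = size // 2
    padded = np.pad(img, pad, mode="edge")  # assumed border handling
    out = np.empty_like(img)
    for i in range(img.shape[0]):
        for j in range(img.shape[1]):
            window = padded[i:i + size, j:j + size]
            out[i, j] = np.percentile(window, q)
    return out


# A single bright "salt" pixel on a dark background.
img = np.zeros((5, 5), dtype=np.uint8)
img[2, 2] = 255

median_img = percentile_filter(img, 50)   # median filter removes the isolated pixel
max_img = percentile_filter(img, 100)     # maximum filter spreads the bright point
min_img = percentile_filter(img, 0)       # minimum filter keeps only dark values
```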

2.14. Define high boost filter.

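High boost filtered image is defined as

HBF = A (original image) - LPF

= (A-1) (original image) + (original image) - LPF

HBF = (A-1) (original image) + HPF

where A ≥ 1, LPF is the low-pass filtered image and HPF = (original image) - LPF is the high-pass filtered image.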

2.15. State the condition of transformation function s=T(r).

i. T(r) is single-valued and monotonically increasing in the interval 0 ≤ r ≤ 1 and

ii. 0 ≤ T(r) ≤ 1 for 0 ≤ r ≤ 1.

2.16. Write the application of sharpening filters.

The applications of sharpening filters are as follows,

i. Electronic printing and medical imaging to industrial application

ii. Autonomous target detection in smart weapons.

2.17. Name the different types of derivative filters.

The different types of derivative filters are

i. Prewitt operators

ii. Roberts cross gradient operators

iii. Sobel operators.


UNIT III

IMAGE RESTORATION

3.1. Define Restoration.

Restoration is a process of reconstructing or recovering an image that has been

degraded by using a priori knowledge of the degradation phenomenon. Thus restoration

techniques are oriented towards modeling the degradation and applying the inverse process in

order to recover the original image.

3.2. How is a degradation process modeled?

The degradation process is modeled as a system operator H which, together with an additive white noise term n(x,y), operates on an input image f(x,y) to produce a degraded image g(x,y).

3.3. What is homogeneity property and what is the significance of this property?

Homogeneity property states that

H[k1 f1(x,y)] = k1 H[f1(x,y)]

where H = operator,

k1 = constant,

f1(x,y) = input image.

It says that the response to a constant multiple of any input is equal to the response to that

input multiplied by the same constant.

3.4.What is meant by image restoration?

Restoration attempts to reconstruct or recover an image that has been degraded by using a

clear knowledge of the degrading phenomenon.

3.5. Define circulant matrix.

A square matrix, in which each row is a circular shift of the preceding row and the first

row is a circular shift of the last row, is called circulant matrix.

3.6. What is the concept behind algebraic approach to restoration?

Algebraic approach is the concept of seeking an estimate of f, denoted f^, that

minimizes a predefined criterion of performance where f is the image.

3.7. Why is the image subjected to Wiener filtering?

This method of filtering considers images and noise as random processes, and the objective is to find an estimate f^ of the uncorrupted image f such that the mean square error between them is minimized. The image is therefore subjected to Wiener filtering to minimize this error.

3.8. Define spatial transformation.

Spatial transformation is defined as the rearrangement of pixels on an image plane.

3.9. Define Gray-level interpolation.


Gray-level interpolation deals with the assignment of gray levels to pixels in the spatially

transformed image.

3.10. Give one example for the principal source of noise.

The principal sources of noise in digital images arise during image acquisition (digitization) and/or transmission. The performance of imaging sensors is affected by a variety of factors, such as environmental conditions during image acquisition and the quality of the sensing elements. Examples of such factors are light levels and sensor temperature.

3.11. When does the degradation model satisfy position invariant property?

An operator having the input-output relationship g(x,y) = H[f(x,y)] is said to be position invariant if H[f(x-α, y-β)] = g(x-α, y-β) for any f(x,y) and any α and β. This definition indicates that the response at any point in the image depends only on the value of the input at that point, not on its position.

3.12. Why the restoration is called as unconstrained restoration?

In the absence of any knowledge about the noise n, a meaningful criterion function is to seek an f^ such that H f^ approximates g in a least square sense, by assuming the noise term is as small as possible.

Where H = system operator.

f^ = estimated input image.

g = degraded image.

3.13. Which is the most frequent method to overcome the difficulty to formulate the

spatial relocation of pixels?

The use of tiepoints is the most frequent method. Tiepoints are a subset of pixels whose location in the input (distorted) and output (corrected) images is known precisely.

3.14. What are the three methods of estimating the degradation function?

The three methods of estimating the degradation function are,

i.Observation

ii.Experimentation

iii.Mathematical modeling.

3.15. How is the blur caused by uniform linear motion removed?

An image f(x,y) undergoes planar motion in the x and y-direction and x0(t) and y0(t)

are the time varying components of motion. The total exposure at any point of the recording

medium (digital memory) is obtained by integrating the instantaneous exposure over the time

interval during which the imaging system shutter is open.

3.16. What is inverse filtering?

The simplest approach to restoration is direct inverse filtering, in which an estimate F^(u,v) of the transform of the original image is computed simply by dividing the transform of the degraded image G(u,v) by the degradation function H(u,v).

F^(u,v) = G(u,v)/H(u,v)
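Direct inverse filtering can be sketched in a few lines with the FFT. The example assumes a noise-free image degraded by a known, hypothetical Gaussian-shaped H(u,v) that is never zero; the small eps guard against division by near-zero values is an added safeguard, not part of the definition. With noise present, dividing by small values of H amplifies the noise, which is the main weakness of this method.

```python
import numpy as np


def inverse_filter(g: np.ndarray, H: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Estimate f from g using F_hat(u,v) = G(u,v) / H(u,v)."""
    G = np.fft.fft2(g)
    H_safe = np.where(np.abs(H) < eps, eps, H)  # avoid dividing by (near) zero
    return np.real(np.fft.ifft2(G / H_safe))


rng = np.random.default_rng(0)
f = rng.random((8, 8))                        # stand-in for the original image

# Hypothetical degradation function on the DFT frequency grid.
u = np.fft.fftfreq(8)[:, None]
v = np.fft.fftfreq(8)[None, :]
H = np.exp(-10.0 * (u ** 2 + v ** 2))

g = np.real(np.fft.ifft2(np.fft.fft2(f) * H))  # degraded image, no noise added
f_hat = inverse_filter(g, H)
```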


3.17. Give the difference between Enhancement and Restoration.

Enhancement techniques are based primarily on the pleasing aspects they might present to the viewer, for example contrast stretching, whereas removal of image blur by applying a deblurring function is considered a restoration technique.

3.18. Define the degradation phenomena.

Image restoration is a process that attempts to reconstruct or recover an image that has been degraded, by using some clear knowledge of the degradation phenomena. Degradation may be in the form of sensor noise or relative object-camera motion.

3.19. What is unconstrained restoration.

It is also known as the least square error approach.

n = g - Hf

To estimate the original image, the noise term n has to be minimized, which gives

f^ = H^(-1) g

3.20.Draw the image observation model.

3.21.What is blind image restoration?

Degradation may be difficult to measure or may be time varying in an unpredictable

manner. In such cases information about the degradation must be extracted from the observed

image either explicitly or implicitly. This task is called blind image restoration.


UNIT IV

DATA COMPRESSION

4.1. What is Data Compression?

Data compression requires the identification and extraction of source redundancy. In

other words, data compression seeks to reduce the number of bits used to store or transmit

information.

4.2. What are two main types of Data compression?

The two main types of data compression are lossless compression and lossy

compression.

4.3.What is Lossless compression?

Lossless compression can recover the exact original data after compression. It is used

mainly for compressing database records, spreadsheets or word processing files, where exact

replication of the original is essential.

4.4. What is lossy compression?

Lossy compression will result in a certain loss of accuracy in exchange for a substantial

increase in compression. Lossy compression is more effective when used to compress graphic

images and digitized voice where losses outside visual or aural perception can be tolerated.

4.5. What is the Need For Compression?

In terms of storage, the capacity of a storage device can be effectively increased with methods that compress a body of data on its way to the storage device and decompress it when it is retrieved. In terms of communications, the bandwidth of a digital communication link can be effectively increased by compressing data at the sending end and decompressing data at the receiving end.

At any given time, the ability of the Internet to transfer data is fixed. Thus, if data can

effectively be compressed wherever possible, significant improvements of data throughput can

be achieved. Many files can be combined into one compressed document making sending easier.

4.6. What are different Compression Methods?

The different compression methods are,

i.Run Length Encoding (RLE)

ii.Arithmetic coding

iii.Huffman coding and

iv.Transform coding

4.7. What is run length coding?

Run-length encoding (RLE) is a technique used to reduce the size of a repeating string of characters. This repeating string is called a run; typically RLE encodes a run of symbols into two bytes, a count and a symbol. RLE can compress any type of data regardless of its information content, but the content of the data to be compressed affects the compression ratio. Compression is normally measured with the compression ratio.

4.8. Define compression ratio.

Compression ratio is defined as the ratio of the original size of the image to the compressed size of the image. It is given as

Compression Ratio = (original size / compressed size) : 1

4.9. Give an example for Run length Encoding.

Consider a character run of 15 'A' characters, which normally would require 15 bytes to store: AAAAAAAAAAAAAAA is coded as 15A. With RLE, this would only require two bytes to store; the count (15) is stored as the first byte and the symbol (A) as the second byte.
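The example above can be reproduced with a short encoder and decoder. This is a minimal sketch that represents each run as a (count, symbol) pair rather than packing it into two bytes; the function names are illustrative.

```python
def rle_encode(data):
    """Encode a string as a list of (count, symbol) runs."""
    runs = []
    for ch in data:
        if runs and runs[-1][1] == ch:
            runs[-1] = (runs[-1][0] + 1, ch)  # extend the current run
        else:
            runs.append((1, ch))              # start a new run
    return runs


def rle_decode(runs):
    """Rebuild the original string from (count, symbol) runs."""
    return "".join(symbol * count for count, symbol in runs)


encoded = rle_encode("A" * 15)   # one run: count 15, symbol 'A'
decoded = rle_decode(encoded)
```

Data without repeated symbols gains nothing: every symbol becomes its own run, so the encoded form is larger than the input.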

4.10. What is Huffman Coding?

Huffman compression reduces the average code length used to represent the symbols of

an alphabet. Symbols of the source alphabet, which occur frequently, are assigned with short

length codes. The general strategy is to allow the code length to vary from character to

character and to ensure that the frequently occurring characters have shorter codes.
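A compact way to build such a variable-length code is to repeatedly merge the two least frequent groups of symbols. The sketch below uses Python's heapq for this; the function name is illustrative, and the exact bit patterns depend on how ties are broken, so only the code lengths should be relied on.

```python
import heapq
from collections import Counter


def huffman_codes(text):
    """Return a dict mapping each symbol of text to its Huffman code string."""
    freq = Counter(text)
    # Heap entries: (weight, tie_breaker, {symbol: code_so_far}).
    heap = [(w, i, {s: ""}) for i, (s, w) in enumerate(freq.items())]
    heapq.heapify(heap)
    if len(heap) == 1:                       # single-symbol alphabet
        return {s: "0" for s in heap[0][2]}
    tie = len(heap)
    while len(heap) > 1:
        w1, _, c1 = heapq.heappop(heap)      # two least frequent groups
        w2, _, c2 = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in c1.items()}
        merged.update({s: "1" + c for s, c in c2.items()})
        heapq.heappush(heap, (w1 + w2, tie, merged))
        tie += 1
    return heap[0][2]


codes = huffman_codes("aaaabbc")  # 'a' occurs most often and gets the shortest code
```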

4.11. What is Arithmetic Coding?

Arithmetic compression is an alternative to Huffman compression; it enables characters to be represented with fractional bit lengths. Arithmetic coding works by representing a message by an interval of real numbers greater than or equal to zero, but less than one. As a message becomes longer, the interval needed to represent it becomes smaller and smaller, and the number of bits needed to specify it increases.

4.12. What is JPEG?

The acronym is expanded as "Joint Photographic Experts Group". It became an international standard in 1992. It works with colour and greyscale images and is used in many applications, e.g., satellite and medical imaging.

4.13. What are the basic steps in JPEG?

The Major Steps in JPEG Coding involve:

i. DCT (Discrete Cosine Transformation)

ii. Quantization

iii. Zigzag Scan

iv. DPCM on DC component

v.RLE on AC Components

vi. Entropy Coding

4.14. What is MPEG?

The acronym is expanded as "Moving Picture Experts Group". It became an international standard in 1992. It works with video and is also used in teleconferencing.

4.15. What is transform coding?

Transform coding is used to convert spatial image pixel values to transform coefficient values. Since this is a linear process and no information is lost, the number of coefficients produced is equal to the number of pixels transformed. The desired effect is that most of the energy in the image will be contained in a few large transform coefficients. If it is generally the same few coefficients that contain most of the energy in most pictures, then the coefficients may be further coded by lossless entropy coding. In addition, it is likely that the smaller coefficients can be coarsely quantized or deleted (lossy coding) without doing visible damage to the reproduced image.

4.16.Define I-frame.

I-frame is the intraframe or independent frame. An I-frame is compressed independently of all other frames. It resembles a JPEG encoded image. It is the reference point for the motion estimation needed to generate subsequent P and B-frames.

4.17. Define p-frame.

P-frame is called predictive frame. A P-frame is the compressed difference between the

current frame and a prediction of it based on the previous I or P-frame.

4.18. Define B-frame.

B-frame is the bidirectional frame. A B-frame is the compressed difference between the current frame and a prediction of it based on the previous I or P-frame and the next P-frame. Accordingly the decoder must have access to both past and future reference frames.

4.19. Draw the JPEG Encoder.


4.20. Draw the JPEG Decoder.

4.21. What is zig zag sequence?

The purposes of the zig-zag scan are,

i.To group the low frequency coefficients at the top of the vector.

ii.To map the 8 x 8 block to a 1 x 64 vector.
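The zig-zag ordering can be generated by walking the anti-diagonals of the block and alternating direction on each one. The sketch below assumes the usual JPEG traversal, which starts at the top-left (DC) coefficient and moves right first; the function name is illustrative.

```python
import numpy as np


def zigzag(block: np.ndarray) -> np.ndarray:
    """Flatten a square block into a vector in zig-zag order."""
    n = block.shape[0]
    # Sort positions by anti-diagonal (i + j); odd diagonals run top-to-bottom,
    # even diagonals run bottom-to-top.
    order = sorted(
        ((i, j) for i in range(n) for j in range(n)),
        key=lambda p: (p[0] + p[1], p[0] if (p[0] + p[1]) % 2 else p[1]),
    )
    return np.array([block[i, j] for i, j in order])


block = np.arange(64).reshape(8, 8)  # entry value = 8 * row + column
z = zigzag(block)                    # 1 x 64 vector, low frequencies first
```

After quantization the high frequency coefficients at the end of this vector are mostly zero, which is what makes the subsequent run-length coding effective.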

4.22. What is block code?

Each source symbol is mapped into fixed sequence of code symbols or code words. So it is

called as block code.

4.23. Define instantaneous code.

A code in which no code word is a prefix of any other code word is called an instantaneous or prefix code.

4.24. Define B2 code.

Each code word is made up of continuation bits, denoted C, and information bits, which are binary numbers. This is called a B code. It is called the B2 code when two information bits are used per continuation bit.

4.25. Define arithmetic coding.

In arithmetic coding, a one-to-one correspondence between source symbols and code words does not exist; instead, a single arithmetic code word is assigned to an entire sequence of source symbols. A code word defines an interval of real numbers between 0 and 1.

4.26. What is bit plane decomposition?

An effective technique for reducing an image's inter-pixel redundancies is to process the image's bit planes individually. This technique is based on the concept of decomposing a multilevel image into a series of binary images and compressing each binary image via one of several well-known binary compression methods.
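The decomposition itself is a matter of shifting and masking. A minimal sketch for an 8-bit image, with illustrative function names; plane 0 is the least significant bit and plane 7 the most significant.

```python
import numpy as np


def bit_planes(img: np.ndarray, k: int = 8):
    """Split a k-bit image into k binary images, least significant plane first."""
    return [(img >> b) & 1 for b in range(k)]


def from_bit_planes(planes):
    """Recombine binary planes (least significant first) into an 8-bit image."""
    total = sum(p.astype(np.uint16) << b for b, p in enumerate(planes))
    return total.astype(np.uint8)


img = np.array([[0, 5],
                [128, 255]], dtype=np.uint8)
planes = bit_planes(img)
rebuilt = from_bit_planes(planes)
```

Each plane could then be passed to a binary compression method such as run-length coding.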


UNIT V

IMAGE SEGMENTATION

5.1. What is segmentation?

The first step in image analysis is to segment the image. Segmentation subdivides an

image into its constituent parts or objects.

5.2. Write the applications of segmentation.

The applications of segmentation are,

i. Detection of isolated points.

ii. Detection of lines and edges in an image.

5.3. What are the three types of discontinuity in digital image?

Three types of discontinuity in digital image are points, lines and edges.

5.4. How the discontinuity is detected in an image using segmentation?

The steps used to detect the discontinuity in an image using segmentation are

i.Compute the sum of the products of the coefficients with the gray levels contained in the region encompassed by the mask.

ii.The response of the mask at any point in the image is R = w1z1 + w2z2 + w3z3 + ... + w9z9,

iii.where zi is the gray level of the pixel associated with mask coefficient wi.

iv.The response of the mask is defined with respect to its center location.

5.5. Why edge detection is most common approach for detecting discontinuities?

The isolated points and thin lines are not frequent occurrences in most practical

applications, so edge detection is mostly preferred in detection of discontinuities.

5.6. How the derivatives are obtained in edge detection during formulation?

The first derivative at any point in an image is obtained by using the magnitude of the

gradient at that point. Similarly the second derivatives are obtained by using the laplacian.

5.7. Write about linking edge points.

The approach for linking edge points is to analyse the characteristics of pixels in a small neighborhood (3x3 or 5x5) about every point (x, y) in an image that has undergone edge detection. All points that are similar are linked, forming a boundary of pixels that share some common properties.

5.8. What is the advantage of using Sobel operators?

Sobel operators have the advantage of providing both a differencing and a smoothing effect. Because derivatives enhance noise, the smoothing effect is a particularly attractive feature of the Sobel operators.
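The two effects can be seen directly in the mask coefficients. The sketch below uses the standard 3x3 Sobel masks; the names `SOBEL_ROWS`, `SOBEL_COLS` and `sobel_at` are hypothetical, chosen only for this illustration.

```python
import math

# Differencing across rows, with 1-2-1 smoothing along each row.
SOBEL_ROWS = [[-1, -2, -1],
              [ 0,  0,  0],
              [ 1,  2,  1]]

# Differencing across columns, with 1-2-1 smoothing along each column.
SOBEL_COLS = [[-1, 0, 1],
              [-2, 0, 2],
              [-1, 0, 1]]


def sobel_at(f, r, c):
    """Return (g_rows, g_cols, magnitude) of the Sobel response at pixel (r, c)."""
    g_rows = sum(SOBEL_ROWS[i][j] * f[r - 1 + i][c - 1 + j]
                 for i in range(3) for j in range(3))
    g_cols = sum(SOBEL_COLS[i][j] * f[r - 1 + i][c - 1 + j]
                 for i in range(3) for j in range(3))
    return g_rows, g_cols, math.hypot(g_rows, g_cols)


# A vertical edge: gray level jumps from 0 to 100 between columns.
edge = [[0, 0, 100], [0, 0, 100], [0, 0, 100]]
```

Only the column-difference mask responds to this vertical edge, and the 1-2-1 weights average three neighboring differences, which is the smoothing effect.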

5.9. What is a pattern?

A pattern is a quantitative or structural description of an object or some other entity of interest in an image. It is formed by one or more descriptors.

5.10. What is a pattern class?

A pattern class is a family of patterns that share some common properties. Pattern classes are denoted as w1, w2, ..., wM, where M is the number of classes.

5.11. What is pattern recognition?

Pattern recognition involves techniques for assigning patterns to their respective classes automatically and with as little human intervention as possible.

5.12. What are the three principal pattern arrangements?

The three principal pattern arrangements are vectors, strings and trees. Pattern vectors are represented by bold lowercase letters such as x, y, z and take the form x = [x1, x2, ..., xn]. Each component xi represents the i-th descriptor and n is the number of such descriptors.

5.13. What is an edge?

An edge is a set of connected pixels that lie on the boundary between two regions. In practice, edges are more closely modeled as having a ramp-like profile. The slope of the ramp is inversely proportional to the degree of blurring in the edge.

5.14. What is meant by object point and background point?

To extract the objects from the background, select a threshold T that separates the object and background modes of the gray-level histogram. Then any point (x, y) for which f(x, y) > T is called an object point. Otherwise the point is called a background point.
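The rule can be sketched as a one-line labeling function. The name `classify_points` and the sample values are hypothetical; the threshold T is assumed to have been chosen already.

```python
def classify_points(f, T):
    """Label each pixel 1 (object point) if f(x, y) > T, else 0 (background point)."""
    return [[1 if pixel > T else 0 for pixel in row] for row in f]


f = [[12, 200], [90, 91]]
labels = classify_points(f, T=90)
```

Note that a pixel exactly equal to T is labeled background, because the test is a strict inequality.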

5.15. Define region growing.

Region growing is a procedure that groups pixels or subregions into larger regions based on predefined criteria. The basic approach is to start with a set of seed points and from these grow regions by appending to each seed those neighboring pixels that have properties similar to the seed.
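A minimal sketch of growing one region from one seed follows. The similarity criterion (gray level within `max_diff` of the seed value) and the use of 4-connected neighbors are assumptions made for illustration; the function name `region_grow` is hypothetical.

```python
from collections import deque


def region_grow(image, seed, max_diff):
    """Grow a region from `seed` (row, col) over 4-connected neighbors whose
    gray level differs from the seed's gray level by at most `max_diff`."""
    rows, cols = len(image), len(image[0])
    seed_value = image[seed[0]][seed[1]]
    region = {seed}
    frontier = deque([seed])
    while frontier:
        r, c = frontier.popleft()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and (nr, nc) not in region
                    and abs(image[nr][nc] - seed_value) <= max_diff):
                region.add((nr, nc))
                frontier.append((nr, nc))
    return region


image = [[10, 11, 50],
         [12, 10, 52],
         [49, 51, 50]]
grown = region_grow(image, seed=(0, 0), max_diff=3)
```

Starting from the top-left pixel, the region absorbs the four dark pixels and stops at the bright ones.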

5.16. What is meant by markers?

An approach used to control oversegmentation is based on markers. A marker is a connected component belonging to an image. Internal markers are associated with objects of interest, and external markers are associated with the background.

5.17. What are the two principal steps involved in marker selection?

The two steps are

i. Preprocessing.

ii. Definition of a set of criteria that markers must satisfy.

5.18. Define chain code.

Chain codes are used to represent a boundary by a connected sequence of straight-line segments of specified length and direction. Typically this representation is based on 4- or 8-connectivity of the segments. The direction of each segment is coded by using a numbering scheme.
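As an illustration, the sketch below encodes a closed boundary with an 8-directional code. The numbering scheme is an assumption (0 points along +x and the codes increase counterclockwise, with y increasing upward); the names `DIRECTIONS_8` and `chain_code` are hypothetical.

```python
# Assumed numbering: 0 = +x direction, increasing counterclockwise.
DIRECTIONS_8 = {(1, 0): 0, (1, 1): 1, (0, 1): 2, (-1, 1): 3,
                (-1, 0): 4, (-1, -1): 5, (0, -1): 6, (1, -1): 7}


def chain_code(points):
    """8-directional chain code of a closed boundary given as ordered (x, y)
    points, where consecutive points are 8-neighbors of each other."""
    code = []
    for (x0, y0), (x1, y1) in zip(points, points[1:] + points[:1]):
        code.append(DIRECTIONS_8[(x1 - x0, y1 - y0)])
    return code


# Unit square traversed counterclockwise.
square = [(0, 0), (1, 0), (1, 1), (0, 1)]
square_code = chain_code(square)
```

The square reduces to four direction digits, one per boundary segment.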

5.19. What are the demerits of chain code?

The demerits of chain code are,

i. The resulting chain code tends to be quite long.

ii. Any small disturbance along the boundary due to noise causes changes in the code that may not be related to the shape of the boundary.

5.20. What is the polygonal approximation method?

Polygonal approximation is an image representation approach in which a digital boundary can be approximated with arbitrary accuracy by a polygon. For a closed curve the approximation is exact when the number of segments in the polygon is equal to the number of points in the boundary, so that each pair of adjacent points defines a segment in the polygon.

5.21. Specify the various polygonal approximation methods.

The various polygonal approximation methods are

i. Minimum perimeter polygons.

ii. Merging techniques.

iii. Splitting techniques.

5.22. Name a few boundary descriptors.

i. Simple descriptors.

ii. Shape numbers.

iii. Fourier descriptors.

5.23. Define length of a boundary.

The length of a boundary is one of its simplest descriptors; the number of pixels along the boundary gives a rough approximation of it. For example, for a chain-coded curve with unit spacing in both directions, the number of vertical and horizontal components plus √2 times the number of diagonal components gives its exact length.
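The length computation can be sketched as below. It assumes an 8-directional chain code in which even digits are horizontal or vertical moves and odd digits are diagonal moves; a diagonal step on a unit grid has length √2, which is the weight applied to diagonal components. The name `chain_length` is hypothetical.

```python
import math


def chain_length(code):
    """Length of an 8-directional chain-coded curve with unit grid spacing."""
    straight = sum(1 for d in code if d % 2 == 0)   # horizontal/vertical moves
    diagonal = len(code) - straight                 # diagonal moves
    return straight + math.sqrt(2) * diagonal


square_length = chain_length([0, 2, 4, 6])    # unit square
diamond_length = chain_length([1, 3, 5, 7])   # square rotated by 45 degrees
```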

5.24. Define shape numbers.

The shape number of a chain-coded boundary is defined as the first difference of smallest magnitude. The order n of a shape number is the number of digits in its representation.
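A short sketch of the computation follows, assuming a 4-directional chain code treated as circular. The first difference is taken here as the number of counterclockwise direction changes between consecutive digits, which is the usual textbook convention and is an assumption not spelled out in the text above. The function names are hypothetical.

```python
def first_difference(code, directions=4):
    """Circular first difference: direction changes (counterclockwise)
    between each digit and the one before it."""
    return [(code[i] - code[i - 1]) % directions for i in range(len(code))]


def shape_number(code, directions=4):
    """Rotation of the first difference that forms the smallest integer."""
    diff = first_difference(code, directions)
    rotations = [diff[i:] + diff[:i] for i in range(len(diff))]
    return min(rotations)


rectangle_code = [0, 0, 3, 2, 2, 1]           # a 2x1 rectangle, order 6
rectangle_shape = shape_number(rectangle_code)
```

Because every rotation is considered, the result does not depend on which boundary point the chain code starts from.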

5.25. Name a few measures used as simple region descriptors.

i. Area.

ii. Perimeter.

iii. Mean and median gray levels.

iv. Minimum and maximum gray levels.

v. Number of pixels with values above and below the mean.

5.26. Define texture.

Texture is one of the regional descriptors. It provides measures of properties such as smoothness, coarseness and regularity.

5.27. Define compactness.

Compactness of a region is defined as (perimeter)² / area. It is a dimensionless quantity and is insensitive to uniform scale changes.
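The scale insensitivity can be checked numerically. The sketch below uses ideal continuous shapes (squares and a disk) rather than pixel counts, which is a simplifying assumption; the function name `compactness` is hypothetical.

```python
import math


def compactness(perimeter, area):
    """Compactness = perimeter squared divided by area (dimensionless)."""
    return perimeter ** 2 / area


small_square = compactness(perimeter=4 * 2, area=2 * 2)    # side 2
large_square = compactness(perimeter=4 * 3, area=3 * 3)    # side 3
disk = compactness(perimeter=2 * math.pi * 5, area=math.pi * 5 ** 2)
```

Both squares give 16 regardless of size, while the disk gives 4π, the smallest value any region can attain.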

5.28. List the approaches to describe texture of a region.

The approaches to describe the texture of a region are,

i. Statistical approach.

ii. Structural approach.

iii. Spectral approach.

5.29. What is a global, local and dynamic or adaptive threshold?

When the threshold T depends only on f(x, y), the threshold is called global. If T depends on both f(x, y) and p(x, y), it is called local. If, in addition, T depends on the spatial coordinates x and y, the threshold is called dynamic or adaptive. Here f(x, y) is the original image and p(x, y) denotes some local property of the point (x, y), for example the average gray level of a neighborhood centered on it.
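A minimal sketch of a local threshold is shown below, taking the local property to be the mean gray level of the 3x3 neighborhood (clipped at the image border). This choice, the `offset` parameter and the name `local_mean_threshold` are assumptions for illustration only.

```python
def local_mean_threshold(f, offset=0):
    """Label a pixel 1 if it exceeds the mean of its 3x3 neighborhood plus
    `offset`, else 0. The neighborhood is clipped at the image border."""
    rows, cols = len(f), len(f[0])
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            neighborhood = [f[i][j]
                            for i in range(max(0, r - 1), min(rows, r + 2))
                            for j in range(max(0, c - 1), min(cols, c + 2))]
            T = sum(neighborhood) / len(neighborhood) + offset
            out_row.append(1 if f[r][c] > T else 0)
        out.append(out_row)
    return out


f = [[10, 10, 10],
     [10, 40, 10],
     [10, 10, 10]]
local_labels = local_mean_threshold(f)
```

Here the threshold differs from pixel to pixel because it is recomputed from each neighborhood, whereas a global threshold would use one value of T for the whole image.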

5.30. What is a thinning or skeletonizing algorithm?

An important approach to representing the structural shape of a plane region is to reduce it to a graph. This reduction may be accomplished by obtaining the skeleton of the region via a thinning (also called skeletonizing) algorithm. Thinning plays a central role in a broad range of problems in image processing, ranging from automated inspection of printed circuit boards to counting of asbestos fibers in air filters.

Prepared by A.Devasena., Associate Professor., Dept/ECE

Page 20

Vous aimerez peut-être aussi

- ST - Joseph's College of Engineering, Chennai-119 Department of ECE Digital Image Processing (Ec2029)Document19 pagesST - Joseph's College of Engineering, Chennai-119 Department of ECE Digital Image Processing (Ec2029)Shenthur PandianPas encore d'évaluation

- Ec1009 Digital Image ProcessingDocument37 pagesEc1009 Digital Image Processinganon-694871100% (15)

- Question BankDocument37 pagesQuestion BankViren PatelPas encore d'évaluation

- Unit I Digital Image FundamentalsDocument20 pagesUnit I Digital Image FundamentalsRajesh WaranPas encore d'évaluation

- Digital Image Processing Question Answer BankDocument69 pagesDigital Image Processing Question Answer Bankkarpagamkj759590% (72)

- 2 Marks Digital Image ProcessingDocument37 pages2 Marks Digital Image Processingrajat287100% (1)

- Question Bank: ST - Annes College of Engineering and Technology Anguchettypalayam, Panruti - 607 110Document42 pagesQuestion Bank: ST - Annes College of Engineering and Technology Anguchettypalayam, Panruti - 607 110Sathis Kumar KathiresanPas encore d'évaluation

- Fatima Michael College of Engineering & TechnologyDocument16 pagesFatima Michael College of Engineering & Technologyragini tiwariPas encore d'évaluation

- AnswersDocument8 pagesAnswersAnonymous lt2LFZHPas encore d'évaluation

- Digital Image Processing (It 603) : 2marks Questions and Answers)Document19 pagesDigital Image Processing (It 603) : 2marks Questions and Answers)nannurahPas encore d'évaluation

- Image Processing Question Answer BankDocument54 pagesImage Processing Question Answer Bankchris rubishaPas encore d'évaluation

- Ec 1009 - Digital Image ProcessingDocument30 pagesEc 1009 - Digital Image Processingainugiri75% (4)

- Ec 1034 - Digital Image ProcessingDocument15 pagesEc 1034 - Digital Image ProcessingSudharsan PadmanabhanPas encore d'évaluation

- E21Document34 pagesE21aishuvc1822Pas encore d'évaluation

- Digital Image Processing Tutorial Questions AnswersDocument38 pagesDigital Image Processing Tutorial Questions Answersanandbabugopathoti100% (1)

- Digital Image Processing Question Answer BankDocument3 pagesDigital Image Processing Question Answer BankJerryPas encore d'évaluation

- DIP 2 MarksDocument34 pagesDIP 2 MarkspoongodiPas encore d'évaluation

- Digital Image Processing Question BankDocument29 pagesDigital Image Processing Question BankVenkata Krishna100% (3)

- ST - Anne'S: College of Engineering & TechnologyDocument23 pagesST - Anne'S: College of Engineering & TechnologyANNAMALAIPas encore d'évaluation

- Narayana Engineering College: 1.define Image?Document9 pagesNarayana Engineering College: 1.define Image?DurgadeviPas encore d'évaluation

- Digital Image Processing - 2 Marks-Questions and AnswersDocument19 pagesDigital Image Processing - 2 Marks-Questions and AnswersnikitatayaPas encore d'évaluation

- It6005 Digital Image Processing - QBDocument21 pagesIt6005 Digital Image Processing - QBJagadeesanSrinivasanPas encore d'évaluation

- Ganesh College of Engineering, Salem: Ec8093-Digital Image Processing NotesDocument239 pagesGanesh College of Engineering, Salem: Ec8093-Digital Image Processing NotesVinod Vinod BPas encore d'évaluation

- FINALDIPEC8093Document190 pagesFINALDIPEC8093BME AKASH SPas encore d'évaluation

- Digital Image ProcessingDocument24 pagesDigital Image Processingkainsu12100% (1)

- Image Processing SeminarDocument48 pagesImage Processing SeminarAnkur SinghPas encore d'évaluation

- Lecture 1Document19 pagesLecture 1RoberaPas encore d'évaluation

- Digital Image ProcessingDocument27 pagesDigital Image Processingainugiri91% (11)

- It6005 - Digital Image ProcessingDocument27 pagesIt6005 - Digital Image ProcessingRagunath TPas encore d'évaluation

- Digital Image Processing (Image Enhancement)Document45 pagesDigital Image Processing (Image Enhancement)MATHANKUMAR.S100% (1)

- LolDocument40 pagesLolRajini ReddyPas encore d'évaluation

- Image Restoration Part 1Document5 pagesImage Restoration Part 1Nishi YadavPas encore d'évaluation

- Digital Image FundamentalsDocument50 pagesDigital Image Fundamentalshussenkago3Pas encore d'évaluation

- C SectionDocument16 pagesC SectionPayalPas encore d'évaluation

- Ec8093 Dip - Question Bank With AnswersDocument189 pagesEc8093 Dip - Question Bank With AnswersSanthosh PaPas encore d'évaluation

- Digital Image ProcessingDocument18 pagesDigital Image ProcessingMahender NaikPas encore d'évaluation

- A New Method of Image Fusion Technique For Impulse Noise Removal in Digital Images Using The Quality Assessment in Spatial DomainDocument4 pagesA New Method of Image Fusion Technique For Impulse Noise Removal in Digital Images Using The Quality Assessment in Spatial DomainInternational Organization of Scientific Research (IOSR)Pas encore d'évaluation

- 02 Topic ImageDataDocument61 pages02 Topic ImageDatadevenderPas encore d'évaluation

- DIP-LECTURE - NOTES FinalDocument222 pagesDIP-LECTURE - NOTES FinalBoobeshPas encore d'évaluation

- Dip Lecture - Notes Final 1Document173 pagesDip Lecture - Notes Final 1Ammu AmmuPas encore d'évaluation

- Digital ImageProcessing - Sampling & QuantizationDocument80 pagesDigital ImageProcessing - Sampling & Quantizationcareer Point BastiPas encore d'évaluation

- Unit Ii Image Enhancement Part A QuestionsDocument6 pagesUnit Ii Image Enhancement Part A QuestionsTripathi VinaPas encore d'évaluation

- (Main Concepts) : Digital Image ProcessingDocument7 pages(Main Concepts) : Digital Image ProcessingalshabotiPas encore d'évaluation

- Lecture 2: Image Processing Review, Neighbors, Connected Components, and DistanceDocument7 pagesLecture 2: Image Processing Review, Neighbors, Connected Components, and DistanceWilliam MartinezPas encore d'évaluation

- Motivation For Ip: Rakesh Soni M.E. (CSE) Assistant Professor, PietDocument87 pagesMotivation For Ip: Rakesh Soni M.E. (CSE) Assistant Professor, PietRakeshSoniPas encore d'évaluation

- Reinhard Photographic PDFDocument10 pagesReinhard Photographic PDFtaunbv7711Pas encore d'évaluation

- Option Com - Content&view Section&id 3&itemid 41: Article IndexDocument53 pagesOption Com - Content&view Section&id 3&itemid 41: Article IndexnaninanyeshPas encore d'évaluation

- 470 GraphsDocument3 pages470 Graphsalissahavens00Pas encore d'évaluation

- Tone Mapping: Tone Mapping: Illuminating Perspectives in Computer VisionD'EverandTone Mapping: Tone Mapping: Illuminating Perspectives in Computer VisionPas encore d'évaluation

- Image Based Modeling and Rendering: Exploring Visual Realism: Techniques in Computer VisionD'EverandImage Based Modeling and Rendering: Exploring Visual Realism: Techniques in Computer VisionPas encore d'évaluation

- Global Illumination: Advancing Vision: Insights into Global IlluminationD'EverandGlobal Illumination: Advancing Vision: Insights into Global IlluminationPas encore d'évaluation

- Computer Stereo Vision: Exploring Depth Perception in Computer VisionD'EverandComputer Stereo Vision: Exploring Depth Perception in Computer VisionPas encore d'évaluation

- Color Matching Function: Understanding Spectral Sensitivity in Computer VisionD'EverandColor Matching Function: Understanding Spectral Sensitivity in Computer VisionPas encore d'évaluation

- Anti Aliasing: Enhancing Visual Clarity in Computer VisionD'EverandAnti Aliasing: Enhancing Visual Clarity in Computer VisionPas encore d'évaluation

- Color Appearance Model: Understanding Perception and Representation in Computer VisionD'EverandColor Appearance Model: Understanding Perception and Representation in Computer VisionPas encore d'évaluation

- Standard and Super-Resolution Bioimaging Data Analysis: A PrimerD'EverandStandard and Super-Resolution Bioimaging Data Analysis: A PrimerPas encore d'évaluation

- Some Case Studies on Signal, Audio and Image Processing Using MatlabD'EverandSome Case Studies on Signal, Audio and Image Processing Using MatlabPas encore d'évaluation

- Histogram Equalization: Enhancing Image Contrast for Enhanced Visual PerceptionD'EverandHistogram Equalization: Enhancing Image Contrast for Enhanced Visual PerceptionPas encore d'évaluation

- Distance Fog: Exploring the Visual Frontier: Insights into Computer Vision's Distance FogD'EverandDistance Fog: Exploring the Visual Frontier: Insights into Computer Vision's Distance FogPas encore d'évaluation

- Yellow Oxide 42Document1 pageYellow Oxide 42ahboon145Pas encore d'évaluation

- Corporate Visual Identity ManualDocument173 pagesCorporate Visual Identity ManualGau MonPas encore d'évaluation

- Healing Language and ColoursDocument86 pagesHealing Language and ColoursSatinder BhallaPas encore d'évaluation

- 4TH Quarter Practice Test Science 7Document8 pages4TH Quarter Practice Test Science 7Georgia Grace GuarinPas encore d'évaluation

- Edward Tufte Meets Christopher Alexander - John W Stamey (2005)Document7 pagesEdward Tufte Meets Christopher Alexander - John W Stamey (2005)xurxofPas encore d'évaluation

- Lesson PlanDocument5 pagesLesson PlanDELMARK MIENPas encore d'évaluation

- Pastel Painting TechniquesDocument7 pagesPastel Painting TechniquesMohamad Lezeik100% (2)

- Ingles 1 - Leccion 4Document7 pagesIngles 1 - Leccion 4mIGUELPas encore d'évaluation

- ArtConference BianchiDocument3 pagesArtConference BianchiMc SweetPas encore d'évaluation

- Calculate and Color 1 PDFDocument1 pageCalculate and Color 1 PDFastor toPas encore d'évaluation

- Quality Textiles - Qjutie Kids Collection - High Summer 2022Document104 pagesQuality Textiles - Qjutie Kids Collection - High Summer 2022thebestsecondchancePas encore d'évaluation

- Singh 2011Document11 pagesSingh 2011Stefan BasilPas encore d'évaluation

- Colour of The Year 2024 Apricot CrushDocument11 pagesColour of The Year 2024 Apricot CrushRaquel Mantovani100% (1)

- SRA Reading Laboratory Levels Chart UpatedDocument1 pageSRA Reading Laboratory Levels Chart UpatedSan Htiet Oo0% (1)

- Aa01loox PDFDocument168 pagesAa01loox PDFAbdelrahman HassanPas encore d'évaluation

- K50 Pro Indicator With IO-Link: DatasheetDocument5 pagesK50 Pro Indicator With IO-Link: DatasheetRicardo Daniel Martinez HernandezPas encore d'évaluation

- 1 Novacron S OverviewDocument3 pages1 Novacron S OverviewsaskoPas encore d'évaluation

- Learning P5.jsDocument7 pagesLearning P5.jsolive100% (1)

- What Is The Purpose ? Flagging Concept: InformationDocument10 pagesWhat Is The Purpose ? Flagging Concept: Informationjoni Mart SitioPas encore d'évaluation

- Kalinkina JuliaDocument65 pagesKalinkina Juliadiana.gorokhova.visualsPas encore d'évaluation

- Physics - INVESTIGATORY PROJECTDocument20 pagesPhysics - INVESTIGATORY PROJECTYashvardhan Singh100% (1)

- Contemporary Philippine Arts From The Regions 4th Quarter HandoutDocument2 pagesContemporary Philippine Arts From The Regions 4th Quarter HandoutGail Buenaventura88% (8)

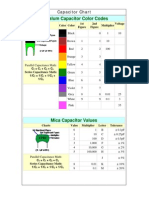

- Capacitor ChartDocument2 pagesCapacitor ChartmuhibrazaPas encore d'évaluation

- Before and After 0680Document0 pageBefore and After 0680DSNVPas encore d'évaluation

- RAL Color ChartDocument1 pageRAL Color ChartPero Plemeniti SimicPas encore d'évaluation

- Cordelia SlidesCarnivalDocument28 pagesCordelia SlidesCarnivalminjiPas encore d'évaluation

- Unit and Lesson Standards Goals and Objectives Alignment-1 2 1Document6 pagesUnit and Lesson Standards Goals and Objectives Alignment-1 2 1api-381640094Pas encore d'évaluation

- Color, True and Apparent, Low Range: How To Use Instrument-Specific InformationDocument6 pagesColor, True and Apparent, Low Range: How To Use Instrument-Specific Informationfawad_aPas encore d'évaluation

- Iit Kharagpur (Rcgsidm) : (Weekly Report-2)Document15 pagesIit Kharagpur (Rcgsidm) : (Weekly Report-2)sameer bakshiPas encore d'évaluation

- D7937-15 Standard Test Method For In-Situ Determination of Turbidity Above 1 Turbidity Unit (TU) in Surface WaterDocument19 pagesD7937-15 Standard Test Method For In-Situ Determination of Turbidity Above 1 Turbidity Unit (TU) in Surface Waterastewayb_964354182Pas encore d'évaluation