Académique Documents

Professionnel Documents

Culture Documents

EAS 520 UmassD Syllabus Sheer

Transféré par

Gurugubelli Venkata Sukumar0 évaluation0% ont trouvé ce document utile (0 vote)

101 vues2 pagesSyllabus book

Titre original

EAS 520 UmassD Syllabus sheer

Copyright

© © All Rights Reserved

Formats disponibles

PDF, TXT ou lisez en ligne sur Scribd

Partager ce document

Partager ou intégrer le document

Avez-vous trouvé ce document utile ?

Ce contenu est-il inapproprié ?

Signaler ce documentSyllabus book

Droits d'auteur :

© All Rights Reserved

Formats disponibles

Téléchargez comme PDF, TXT ou lisez en ligne sur Scribd

0 évaluation0% ont trouvé ce document utile (0 vote)

101 vues2 pagesEAS 520 UmassD Syllabus Sheer

Transféré par

Gurugubelli Venkata SukumarSyllabus book

Droits d'auteur :

© All Rights Reserved

Formats disponibles

Téléchargez comme PDF, TXT ou lisez en ligne sur Scribd

Vous êtes sur la page 1sur 2

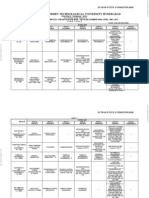

Syllabus

EAS 520 High Performance Scientific Computing

Course Description: A graduate-level course covering an assortment of topics in high performance computing (HPC). Topics will be selected from the following: parallel processing, computer arithmetic, processes and operating systems, memory hierarchies, compilers, run-time environment, memory allocation, preprocessors, multi-cores, clusters, and message passing. Introduction to the design, analysis, and implementation of high-performance computational science and engineering applications.

Prerequisites: EAS 502 or instructor approval

Purpose:

This class will introduce the student to the fundamentals of parallel scientific computing. High performance computing refers to the use of (parallel) supercomputers and computer clusters, together with the software and hardware techniques used to accelerate computations. In this course students will learn how to write faster code that is highly optimized for modern multi-core processors and clusters, using modern software development tools, performance profilers, specialized algorithms, parallelization strategies, and advanced parallel programming constructs in OpenMP and MPI.

The course is meant for graduate students in computer science, engineering, mathematics, and the sciences, especially for those who need to use high performance computing in their research. The course will emphasize practical aspects of high performance computing on both sequential and parallel machines, so that you will be able to effectively use high performance computing in your research.

Learning Objectives:

Students will develop competencies including:

1. An overview of existing HPC software and hardware

2. Basic software design patterns for high-performance parallel computing

3. Mapping of applications to HPC systems

4. Factors affecting performance of computational science and engineering applications

5. Utilization of techniques to automatically implement, optimize, and adapt programs to different platforms.

Learning Outcomes:

Familiarity with a programming language (e.g. FORTRAN, C, C++, or Python) and computer algebra packages (e.g. Maple, Mathematica, MATLAB, or Sage);

The UNIX family of operating systems;

Parallel programming with MPI and OpenMP;

OpenCL/CUDA for GPU computing; and,

Use of the above in the design and optimization of performance driven software.

Recommended Text: Parallel Programming: Techniques and Applications Using Networked Workstations and Parallel Computers (2nd Ed.), B. Wilkinson and M. Allen, Prentice-Hall. The text will be supplemented with other online and printed resources.

Course topics (outline):

A. Programming languages and programming-language extensions for HPC

B. Compiler options and optimizations for modern single-core and multi-core processors

C. Single-processor performance, memory hierarchy, and pipelines

D. Overview of parallel system organization

E. Parallelization strategies, task parallelism, data parallelism, and work sharing techniques

F. Introduction to message passing and MPI programming

G. Embarrassingly parallel problems

H. HPC numerical libraries and auto-tuning libraries

I. Using pMATLAB

J. Programming with toolkits (PETSc, Trilinos, etc.)

K. Verification and validation of results

L. Execution profiling, timing techniques, and benchmarking for modern single-core and multi-core processors

M. Problem decomposition, graph partitioning, and load balancing

N. Introduction to shared memory and OpenMP programming

O. General-purpose computing on graphics processing units (GPGPU) using OpenCL

P. Introduction to scientific visualization tools, e.g., Visualization Toolkit (VTK), gnuplot, GNU Octave, Scilab, MayaVi, Maxima, OpenDX.
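
As an illustration of topic G (embarrassingly parallel problems), the sketch below estimates pi by midpoint-rule integration of 4/(1+x^2) on [0, 1]. Each chunk of the interval is computed independently, and the only communication is the final sum. This example is not taken from the course materials: the function names `partial_pi` and `estimate_pi`, the slice count, and the worker count are all assumptions chosen for illustration.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def partial_pi(start, stop, n):
    """Midpoint-rule contribution of slices start..stop-1 (out of n)
    to the integral of 4 / (1 + x^2) over [0, 1]."""
    h = 1.0 / n
    return h * sum(4.0 / (1.0 + ((i + 0.5) * h) ** 2) for i in range(start, stop))


def estimate_pi(n=100_000, workers=4):
    """Split the n slices into one contiguous chunk per worker and sum the parts."""
    # Hypothetical decomposition: chunk w covers slices [w*n//workers, (w+1)*n//workers)
    starts = [w * n // workers for w in range(workers)]
    stops = [(w + 1) * n // workers for w in range(workers)]
    # Threads keep the sketch portable; in CPython they do not speed up
    # CPU-bound work. A process pool or MPI ranks would be used for real speedup.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        parts = pool.map(partial_pi, starts, stops, [n] * workers)
        return sum(parts)


if __name__ == "__main__":
    approx = estimate_pi()
    print(f"estimate = {approx:.10f}, error = {abs(approx - math.pi):.2e}")
```

Because the chunks share no data, the same decomposition carries over to MPI (one chunk per rank followed by a reduction) or to an OpenMP parallel loop with a sum reduction.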

Assignments:

This is a hands-on class. Students are expected to use the lectures, a series of assignments using MATLAB, MPI, OpenMP, and GPU (OpenCL) programming, and a final project to finish the course with parallel programming knowledge that can be applied immediately to their research projects.

Grading:

Four programming exercises (60%)

Final Project (40%)

Computing Laboratory:

Students should be proficient in basic numerical methods and some programming language, e.g., MATLAB, Python, Fortran, C, or C++. The various software packages are available on University servers. All use of computer equipment at UMass Dartmouth must comply with the University's acceptable computer use guidelines: http://www.umassd.edu/cits/policies/responsibleuse/
