Challenges in Online Charging System Virtualization

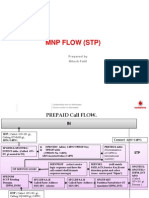

A project assignment within

Dataföreningen Kompetens - Certifierad IT-arkitekt Master, K54

CHALLENGES IN ONLINE CHARGING

SYSTEM VIRTUALIZATION

Magnus Abrahamsson

Challenges in Online Charging System virtualization


This paper examines various virtualization architectures, the challenges they pose for soft real-time systems, and their effects on an Online Charging System (OCS) deployment architecture.

CHALLENGES IN ONLINE CHARGING SYSTEM VIRTUALIZATION

Author: Magnus Abrahamsson, Solution Architect Charging & Rating

E-mail address: magnus.abrahamsson@teliasonera.com

Study counselor: Michael Thurell (Dataföreningen)

Date: 2016-02-15

Version : 4.0


1 Abstract

Virtualization technology and cloud computing have revolutionized general-purpose computing applications in the past decade. The cloud paradigm offers advantages through reduced operating costs, server consolidation, flexible system configuration and elastic resource provisioning. However, despite the success of cloud computing for general-purpose computing, existing cloud computing and virtualization technologies face tremendous challenges in supporting emerging real-time applications such as online charging, online video streaming, and other telecommunication management. These applications demand real-time performance in open, shared and virtualized computing environments. This paper studies various virtualization architectures to identify the technical challenges in supporting real-time applications therein, focusing on online charging. It also surveys recent advancements in real-time virtualization and cloud computing technology, and research directions to enable cloud-based real-time applications in the future.

Keywords: Virtualization, Cloud computing, Hypervisor, OCS, real-time, NFVI, Charging, distributed system.


TABLE OF CONTENTS

1 Abstract
2 Terminology
3 Introduction
   3.1 Background
   3.2 Concerns
   3.3 Objectives
   3.4 Scope
   3.5 Stakeholders
   3.6 Methods
   3.7 Note to the reader
   3.8 Outline
4 Current situation
5 Stakeholders and requirements
   5.1 Business requirement
   5.2 Non-functional requirement (Quality attributes)
6 Online Charging System
   6.1 Current OCS deployment
   6.2 Service availability classification level
7 Cloud computing and virtualization
   7.1 Virtual machine architectures
   7.2 Virtualization techniques and performance characteristics
   7.3 Know the performance curve
8 Real-time challenges in cloud computing
   8.1 Terminology mapping
9 Network Functions Virtualization
   9.1 NFV framework
   9.2 VNF
   9.3 NFV Management and Orchestration
10 NFV challenges
11 Solution architecture
   11.1 Generic VNF Cluster Architecture
   11.2 Deploying OCS as VNFs
   11.3 Analysis of the Solution architecture
12 Future directions
13 Conclusions
14 Discussions
15 References
16 Appendix A: VMWare
17 Appendix B: OpenStack Tracker
18 Appendix C: MANO architecture
19 Appendix D: OCS RTO calculation & Latency


2 Terminology

4G          4th generation (LTE)
ABMF        Account Balance Management Function
BE          Back-end
BRM         Billing and Revenue Management
BSS         Business Support Systems
CAPEX       Capital Expenditures
CBA         Component Based Architecture
CDR         Charging data record
CPU         Central Processing Unit
CRM         Customer Relationship Management
CSP         Communications Service Provider
CTF         Charging Trigger Function
DCCA        Diameter Credit-Control Application
EM          Element Management
EPC         Evolved Packet Core
EPG         Evolved Packet Gateway (Ericsson)
ERP         Enterprise Resource Planning
ETSI        European Telecommunications Standards Institute
FE          Front-end
GGSN        Gateway GPRS Support Node
IaaS        Infrastructure-as-a-Service
IETF        Internet Engineering Task Force
IMS         IP Multimedia Subsystem
IT          Information Technology
KVM         Kernel-based Virtual Machine
LTE         Long-Term Evolution
MMS         Multi Media Message
MTAS        Multimedia Telephony Application Server
NaaS        Network-as-a-Service
NFV         Network Functions Virtualization
NFVI        NFV Infrastructure
NFV-MANO    NFV Management and Orchestration
NIC         Network Interface Controller
OCF         Online-Charging Function
OCS         Online-Charging System
OM          Operation & Maintenance
OPEX        Operating Expenditures
OS          Operating System
OSS         Operation Support System
PaaS        Platform-as-a-Service
PCI         Peripheral Component Interconnect
PL          Payload
QoS         Quality of Service
RT          Rating Function
RTO         Retransmission TimeOut
SaaS        Software-as-a-Service
SC          System Controllers
SCUR        Session Charging with Unit Reservation
SDP         Service Data Point
SLA         Service Level Agreements
SR-IOV      Single-Root I/O Virtualization
TCO         Total Cost of Ownership
TTM         Time to market
UDR         Usage Detail Record
vCPU        Virtual CPU
vDC         Virtual Data Center
VF          Virtual Functions
VIM         Virtualized Infrastructure Manager
VM          Virtual Machine
VMM         Virtual machine monitor (hypervisor)
VNF         Virtualized Network Function
VNFC        VNF Component
VNFM        VNF Manager
vNIC        Virtual NIC
VoLTE       Voice over LTE
VOMS        VOucher Management System


3 Introduction

3.1 Background

The current online charging system (OCS) at TeliaSonera has provided real-time charging successfully since 2006 for three of the Nordic countries. It offers prepaid services for brands like Refill, Halebop, Netcom, Telia DK and Chess.

These kinds of systems place high demands on availability and performance, which usually requires software installed on proprietary hardware. The rapid pace of innovation for this type of hardware, the OCS's dependencies on it, and the drive for cost-efficient solutions have shortened the system life cycle. Today this results in a nightmare of more or less constant, tedious upgrades. The consequences are not limited to increased cost and time consumption; the upgrades also risk limiting the business's delivery capabilities.

As the industry demands faster time to market (TTM) and reduced total cost of ownership (TCO), this is not sustainable in the long run and calls for action.

Ideas about migrating the system from today's native, dedicated hardware into a private cloud have been discussed. That would most likely simplify today's life cycle management of the system infrastructure, provide elastic scaling, reduce costs and bring other virtualization benefits. However, there are some doubts about whether a soft real-time system like OCS can provide the same overall quality attributes in a virtual environment, in particular the same reliability, availability, manageability, security and performance as the current setup.

3.2 Concerns

The virtualized data centre is currently considered state-of-the-art technology in the Information Technology (IT) domain, while in the telecom domain there are no widespread deployments yet. One key differentiator between the IT and telecom domains is the level of service continuity required. In the IT domain, outages lasting seconds are tolerable and the service user typically initiates retries, whereas in the telecom domain there is an underlying service expectation that outages will be below the recognizable level (i.e. in milliseconds) and that service recovery is performed automatically.

One of the disruptive technologies emerging in the area of telecom cloud computing and data centre architectures is Network Functions Virtualization (NFV) [1]. It shifts from hardware-based provisioning of network functions to software-based provisioning, where so-called virtualized network functions (VNF) [1] are deployed in private or hybrid clouds of communication service providers (CSP) [2]. (Footnote 1: CSP is a broad category encompassing telecommunications, entertainment and media, and Internet/Web service businesses.)

- Identify the technical challenges in supporting real-time applications like OCS in the cloud.

- Survey the recent NFV architectural framework and cloud computing technology.

- How compliant is the OCS architecture with the Telco-cloud (NFV reference architecture)?


3.3 Objectives

The objectives of this paper are to:

- give an overview of the virtualization technology for cloud computing, focusing on the real-time issues that appear therein;

- identify real-time challenges in cloud computing;

- present a subset of selected examples that illustrate some of the design decisions taken by virtualization technology to integrate real-time support;

- give an overview of the OCS architecture and the NFV framework;

- propose a deployment of OCS as a VNF in the Telco-cloud (NFVI) and identify the architectural impact;

- learn more about the areas mentioned above.

3.4 Scope

Because of the limited time, I will focus on the OCS's integration points with the network that handle real-time charging of IMS services, e.g. voice calls over 4G and messaging. Its framework is regarded as future-proof for charging solutions. Online charging of 2G/3G voice calls is out of scope for this paper, as are top-up, voucher management and balance control.

The deployment scenario in this paper focuses on a Monolithic Operator, where the same organization that operates the virtualized network functions also deploys and controls the hardware and hypervisors they run on, and physically secures the premises in which they are located.

3.5 Stakeholders

IT Solution owner

IT solution manager

Solution and Infrastructure architects

CSP Vendor(s)

Study counselor for the DF IT architecture training.

3.6 Methods

Unstructured interviews with various stakeholders to explore requirements and gain more knowledge.

System observations, to provide as objective a picture of reality as possible, combined with an inductive approach. Literature studies and perusal of available material to gather information about the theories needed to meet the objectives.


3.7 Note to the reader

This paper can be read from start to end, or the reader can pick a section of interest with the help of the outline below.

3.8 Outline

Section 5 explains various important requirements affecting OCS.

Section 6 offers an overview of the OCS architecture and how it links to the current solution.

Section 7 offers an overview of the virtualization technology for cloud computing.

Section 8 identifies different real-time challenges in cloud computing.

Section 9 gives an overview of the technology and architecture for network functions virtualization.

Section 10 identifies VNF challenges in NFV, with suggested solutions and requirements.

Section 11 proposes a solution architecture for OCS as a VNF in the NFVI.

Section 12 points out open areas of research related to NFV.

Section 13 presents the conclusions.


4 Current situation

Besides what is mentioned in the background section, convergent charging has gained momentum during recent years as IMS technology becomes more widespread. This requires operators to integrate or replace their various types of charging systems, e.g. for fixed, mobile and broadband, with a new common one. The new common charging and rating system is often an OCS or similar. Furthermore, while the company's plans for a new system are slowly taking shape, the legacy prepaid OCS is facing a major upgrade of its platform software version and its hardware during the next year.

The company can today provide a private cloud with Platform-as-a-Service (PaaS) and will soon have a Telco-cloud ready as Infrastructure-as-a-Service (IaaS). This gives us different options for the technical implementation. Which type of cloud, if any, is most suitable given the Online Charging System requirements?

A virtualized context, where a multitude of virtual machines (VMs) share the same physical hardware to provide a plethora of services with widely varying performance requirements to independent customers/end-users, brings many challenges, some of which can be tackled as summarized in this paper.

5 Stakeholders and requirements

This section gives an overview of and explains various important requirements affecting OCS.

5.1 Business requirement

According to the prepaid business policy, customers should be able to use their services even when charging is out of function. A negative customer account balance is prohibited as well, since different user surveys have indicated that it has a negative impact on the customer experience.

This policy combination risks lost revenue at any major disturbance related to the online charging service. If the real-time cost control is out of order, customer usage can potentially be higher than the account balance, as the cost control can no longer determine in real time whether the account balance is exhausted and cut off customer usage. For flat-rate (all you can eat) services there is no risk of lost revenue, but for services like Pay As You Go and quota buckets, post-charging of accounts is not allowed to cause an overdraw (negative balance).
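The effect of this policy combination can be illustrated with a small Python sketch. It assumes integer currency units and a hypothetical helper named post_charge; neither comes from the current OCS. Usage that was allowed during an outage is charged afterwards, but only down to a zero balance, and the remainder is lost revenue.

```python
def post_charge(balance: int, usage_cost: int) -> tuple[int, int]:
    """Charge usage after an outage without allowing a negative balance.

    Returns (new_balance, revenue_lost). Both arguments are in the same
    integer currency unit (an assumption made for this sketch).
    """
    charged = min(balance, usage_cost)  # never debit more than the balance holds
    return balance - charged, usage_cost - charged


if __name__ == "__main__":
    # Balance covers the usage: nothing is lost.
    print(post_charge(balance=100, usage_cost=80))
    # Usage exceeded the balance during the outage: the difference is lost.
    print(post_charge(balance=50, usage_cost=80))
```

For a flat-rate service the usage cost is zero, so the lost revenue is always zero, which matches the reasoning above.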

Other important services related to prepaid are top-up (refill) and balance control. A similar disturbance will affect those services as well. Customers will not be able to check account balances or perform top-ups, e.g. top up additional allowance or activate new services (e.g. 3rd party services like Spotify). Top-up, voucher management and balance control are out of scope for this paper.

5.2 Non-functional requirement (Quality attributes)

Online charging is considered a highly available service, with an availability requirement of 99.98%, including planned and unplanned maintenance. Simplified, the service availability is calculated from the ratio between the total number of network Usage Detail Records (UDRs) and the number of OCS charging data records (CDRs).

The expectation on the OCS and its underlying functions is that response times should be predictable, outages should be below the recognizable level (i.e. in milliseconds), and service recovery should be performed automatically.
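To give a feeling for what 99.98% means in practice, the following Python sketch converts the target into a downtime budget and computes a record-based availability figure. The function names are hypothetical, and the direction of the UDR/CDR ratio (the share of network UDRs that have a matching OCS CDR) is my reading of the simplified calculation, not a confirmed formula.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, leap years ignored


def downtime_budget_minutes(availability_pct: float,
                            period_minutes: float = MINUTES_PER_YEAR) -> float:
    """Outage minutes allowed in the period for a given availability target."""
    return (1.0 - availability_pct / 100.0) * period_minutes


def record_based_availability_pct(network_udrs: int, ocs_cdrs: int) -> float:
    """Assumed reading: percentage of network UDRs that the OCS also charged."""
    if network_udrs <= 0:
        raise ValueError("network_udrs must be positive")
    return 100.0 * min(ocs_cdrs, network_udrs) / network_udrs


if __name__ == "__main__":
    print(round(downtime_budget_minutes(99.98), 2), "minutes per year")
    print(round(record_based_availability_pct(1_000_000, 999_850), 3), "%")
```

With the 99.98% target, the budget is roughly 105 minutes per year, planned maintenance included.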


One of the most important of these performance metrics is the Retransmission Timeout (RTO). The table below shows the figures for the OCS Rc/Re reference point (see Figure 3), which is one of many integration points end-to-end for a charging control transaction, e.g. setting up a VoLTE call.

Metric         Value
Maximum RTO    200 ms
Minimum RTO    80 ms

TABLE 1 : SHOWING MAX/MIN RTO FOR RC/RE REFERENCE POINT (footnote 2)

The charging and billing information is to be generated, processed, and transported to a desired conclusion in less than 1 second. The customer will expect the call to be set up in a reasonable time, normally 3-4 seconds. Figure 1 below illustrates the expected performance requirements.

FIGURE 1 : PERFORMANCE SCENARIO FOR SETTING UP A CALL.
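The figures above can be turned into a simple check. The Python sketch below is illustrative only: the constants are taken from Table 1 and from the one-second requirement, while the function names, the idea of clamping an estimated timeout between the minimum and maximum RTO, and the sample latencies are assumptions of mine.

```python
MIN_RTO_MS = 80            # Table 1, minimum RTO on Rc/Re
MAX_RTO_MS = 200           # Table 1, maximum RTO on Rc/Re
CHARGING_BUDGET_MS = 1000  # charging must conclude in less than 1 second


def clamp_rto(estimate_ms: float) -> float:
    """Keep an estimated retransmission timeout inside the Table 1 bounds."""
    return max(MIN_RTO_MS, min(MAX_RTO_MS, estimate_ms))


def exceeding_max_rto(response_times_ms: list[float]) -> list[float]:
    """Responses slower than the maximum RTO would trigger a retransmission."""
    return [t for t in response_times_ms if t > MAX_RTO_MS]


def within_charging_budget(hop_latencies_ms: list[float]) -> bool:
    """True if the summed latency of all hops stays below the 1 s budget."""
    return sum(hop_latencies_ms) < CHARGING_BUDGET_MS


if __name__ == "__main__":
    samples = [90, 150, 210, 250]           # hypothetical measurements
    print(exceeding_max_rto(samples))       # the slow responses
    print(within_charging_budget([120, 200, 150]))
```

In a virtualized deployment, the interesting question is how often added scheduling or I/O latency pushes a response past the maximum RTO, since each such case costs a retransmission inside the one-second budget.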

6 Online Charging System

This section offers an overview of the OCS architecture, its different components and how they interact, with a focus on the real-time issues that appear therein.

OCS allows a Communications Service Provider (CSP) to charge its customers in real time (RT) based on service usage. It is a soft RT application that demands a certain degree of service level agreement in terms of real-time performance but does not require hard real-time performance guarantees.

The OCS has integrations both with the business support systems (BSS), marked green in Figure 2, and with the network layer. OCS integration with BSS is out of scope for this paper.

Footnote 2: Statistics from the DCCA server showing max response time for CCR-I and number of PADS initial statistics from the current OCS solution. See Appendix D: OCS RTO calculation & Latency for more details.


[Figure 2 diagram: a stack with the Billing systems, CRM systems and ERP systems on top, Online Charging in the middle and the Network layer at the bottom.]

FIGURE 2 : SIMPLE DEPLOYMENT VIEW STACK OF ONLINE CHARGING.

The 3rd Generation Partnership Project (3GPP) charging architecture divides the OCS into three main functions: the Online Charging Function (OCF), the Account Balance Management Function (ABMF) and the Rating Function (RF, labelled RT in the figures and the terminology list). The functions are described below:

- The Online Charging Function (OCF) consists of two distinct charging modules, namely the Session Based Charging Function (SBCF) and the Event Based Charging Function (EBCF). Charging events are forwarded from the CTF, outside of the OCS, to the OCF in order to obtain authorization for the chargeable event and/or network resource usage (footnote 3) requested by the end-user. The OCF communicates with the Rating Function to determine the value of the requested bearer resources or session, and then with the Account Balance Management Function to query and update the subscriber's account and counter status [3].

- The Account Balance Management Function (ABMF) is the location of the subscriber's account balance within the OCS, e.g. recharged/top-up money or account counters such as free gigabytes, free calls/messages etc.

- The Rating Function (RF) determines the value of the network resource usage on behalf of the Online Charging Function. To this end, the OCF furnishes the necessary information, obtained from the charging event, to the RF and receives in return the rating output (monetary or non-monetary units) via the Re reference point. The RF may handle a wide variety of ratable instances [3].
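The division of responsibilities between the three functions can be sketched in a few lines of Python. This is a toy model for event-based charging only: the class names mirror the 3GPP function names, but the tariff table, the integer currency units and all method names are hypothetical and do not describe any real OCS product.

```python
from dataclasses import dataclass, field


@dataclass
class RatingFunction:
    """RF: turns a service and a unit count into a monetary value."""
    price_per_unit: dict[str, int]  # hypothetical tariff, e.g. {"sms": 50}

    def rate(self, service: str, units: int) -> int:
        return self.price_per_unit[service] * units


@dataclass
class AccountBalanceManagementFunction:
    """ABMF: owns the subscriber balances and refuses overdraws."""
    balances: dict[str, int] = field(default_factory=dict)

    def debit(self, subscriber: str, amount: int) -> bool:
        balance = self.balances.get(subscriber, 0)
        if amount > balance:
            return False  # negative balances are not allowed
        self.balances[subscriber] = balance - amount
        return True


@dataclass
class OnlineChargingFunction:
    """OCF: authorizes a chargeable event by asking the RF, then the ABMF."""
    rf: RatingFunction
    abmf: AccountBalanceManagementFunction

    def charge_event(self, subscriber: str, service: str, units: int) -> bool:
        cost = self.rf.rate(service, units)       # corresponds to Re
        return self.abmf.debit(subscriber, cost)  # corresponds to Rc


if __name__ == "__main__":
    ocf = OnlineChargingFunction(
        RatingFunction({"sms": 50}),
        AccountBalanceManagementFunction({"alice": 120}),
    )
    print(ocf.charge_event("alice", "sms", 2))  # authorized
    print(ocf.charge_event("alice", "sms", 1))  # denied, balance too low
```

The sketch also shows why the ABMF is the consistency-critical part: every authorization ends in a read-modify-write of the balance.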

The Charging Trigger Function (CTF) (footnote 4) is outside of the OCS and is able to delay the actual resource usage until permission has been granted by the OCS. It also tracks the availability of resource usage permission ("quota supervision") during the network resource usage, and can enforce termination of the end user's network resource usage when permission by the OCS is not granted or expires. From the online charging architecture perspective, the IMS gateway function (GWF), the Gateway GPRS Support Node (GGSN) and the Telephony Application Server (TAS, called MTAS in this paper) are examples of online charging capable CTFs.

The Ro reference point supports interaction between a Charging Trigger Function and an Online Charging Function. The information flowing across this reference point consists of online charging events. The protocol crossing this reference point, called Diameter Credit-Control Application (DCCA) [4], supports real-time transactions in stateless mode (event based charging) and stateful mode (session based charging) [3].

Footnote 3: Typical examples of network resource usage are a voice call of a certain duration, the transport of a certain volume of data, or the submission of an MMS of a certain size.

Footnote 4: Parts of the network that provide functions implementing offline and/or online charging mechanisms on the bearer (e.g. EPC), subsystem (e.g. IMS) and service (e.g. MMS) levels.

Figure 3 below provides an overview of the online parts in a common charging architecture.

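[Figure 3 diagram: the CTF, located in the CN domain, a service element or a subsystem, connects over Ro/CAP to the OCF inside the OCS (Online Charging System). Inside the OCS, the OCF connects to the ABMF over Rc and to the RT over Re.]

FIGURE 3 : COMPUTATIONAL VIEW OF A UBIQUITOUS ONLINE CHARGING ARCHITECTURE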

Figure 4 shows the transactions that are required on the Ro reference point in order to perform the Session Charging with Unit Reservation (SCUR), e.g. for IMS Voice Call or Data session.

[Figure 4 sequence diagram between the CTF and the OCS:]

1. Session Request

Reserve Units operation:
2. CCR[INITIAL_REQUEST, RSU]
3. Perform Charging Control
4. CCA[INITIAL_REQUEST, GSU, [VT]]

5. Session Delivery

Reserve Units and Debit Units operations:
6. CCR[UPDATE_REQUEST, RSU, USU]
7. Perform Charging Control
8. CCA[UPDATE_REQUEST, GSU, [FUI]]

9. Session Delivery
10. Session Terminate

Debit Units operation:
11. CCR[TERMINATION_REQUEST, USU]
12. Perform Charging Control
13. CCA[TERMINATION_REQUEST, CI]

FIGURE 4 : LOGICAL VIEW OF SCUR - SESSION BASED CREDIT-CONTROL.
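The reserve and debit logic behind the SCUR message flow can be sketched as a small Python state holder for the OCS side of one session. It is a simplification under stated assumptions: units are plain integers, there is one account and one session, and the class and method names are hypothetical. RSU, GSU and USU stand for the requested, granted and used service units carried in the CCR and CCA messages.

```python
class ScurSession:
    """Toy OCS-side state for one session-based credit-control dialogue."""

    def __init__(self, balance_units: int) -> None:
        self.balance = balance_units  # units still free on the account
        self.reserved = 0             # units currently reserved for the session

    def _reserve(self, rsu: int) -> int:
        gsu = min(rsu, self.balance)  # never grant more than is available
        self.balance -= gsu
        self.reserved = gsu
        return gsu

    def _debit(self, usu: int) -> None:
        used = min(usu, self.reserved)
        self.balance += self.reserved - used  # hand back what was not used
        self.reserved = 0

    def ccr_initial(self, rsu: int) -> int:
        """Steps 2-4: Reserve Units, returns the GSU of the answer."""
        return self._reserve(rsu)

    def ccr_update(self, rsu: int, usu: int) -> int:
        """Steps 6-8: debit the reported usage, then reserve again."""
        self._debit(usu)
        return self._reserve(rsu)

    def ccr_terminate(self, usu: int) -> None:
        """Steps 11-13: final Debit Units, unused reservation is released."""
        self._debit(usu)


if __name__ == "__main__":
    session = ScurSession(balance_units=100)
    print(session.ccr_initial(rsu=60))         # first grant
    print(session.ccr_update(rsu=60, usu=60))  # smaller grant, balance is low
    session.ccr_terminate(usu=10)
    print(session.balance)                     # what is left after the session
```

Every CCR in this flow needs a balance update before the CCA can be sent, which is why the latency of the Rc and Re reference points matters for the end-to-end response time.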


6.1 Current OCS deployment

The OCS has three tiers: a Front-End (FE) tier, a Back-End (BE) tier, and a database tier (also known as SDP). The Online Charging Function has been split into client/server components, where the OCF client is deployed in the FE tier and the OCF server in the BE tier. The FE is considered almost stateless (footnote 5), with no data-centric parts, while the BE/SDP (from now on referred to only as BE) is stateful and therefore has higher demands on transaction reliability and security. The BE also has to relate to the CAP theorem [5], which in short states that it is impossible for a distributed computer system to simultaneously provide all three of the following guarantees: data consistency, availability, and partition tolerance. This mainly affects the Account Balance Management Function (ABMF), where there are high demands on the data consistency of the account balances at all times. The BE has a geo-redundant active/standby high availability/disaster recovery model. The SDP is a relational database. The FE is deployed geo-redundant active/active, with support for horizontal scalability of the application layer.

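[Figure 5 diagram of the OCS (Online Charging System). Recoverable labels, grouped by tier: CTF side: EPG and MTAS, each with a DCCA client, connected over Diameter Gy and Ro. Front-End: DCCA server and OCF client (Charging Client). Back-End: OCF server (Charging Server), ABMF (Customer Module) and RT (Rating Engine), with the Rc and Re reference points. BE-SDP: Customer and Rating data. Further labels: EPG Gy Quota Server, MTAS Ro Quota Server.]

FIGURE 5 : CURRENT OCS DEPLOYMENT VIEW WITH 3GPP OCS ARCHITECTURE MAPPING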

Front-End (FE): has two components, the DCCA server and the OCF client. The DCCA server communicates externally with the CTF's DCCA client via the Ro (or Gy) integration point and internally with the OCF client, which in turn communicates externally with the OCF server.

Back-End (BE): has three components, the OCF server, the ABMF and the RT. The BE can have one or many FEs connected. The OCF server communicates externally with the OCF client(s), and internally with the ABMF and RT. The BE also contains a Voucher Manager (VOM) component, but that is outside the scope of this paper.

BE-Service Data Point (SDP): holds the Customer and Rating data in a relational database.

Footnote 5: Stateless during normal operation, stateful when the front-end cannot do online charging against the back-end. The front-end then stores the transactions and post-charges them once the connection to the back-end is established again.
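The store-and-forward behaviour of the front-end can be sketched as follows. This is a minimal Python illustration, not the product's implementation: the class name, the callable passed in as the back-end connection and the use of ConnectionError to signal an unreachable back-end are all assumptions.

```python
from collections import deque
from typing import Callable


class FrontEndBuffer:
    """Charges online when possible, otherwise stores for post-charging."""

    def __init__(self, send_to_backend: Callable[[object], None]) -> None:
        # send_to_backend is assumed to raise ConnectionError when the
        # back-end cannot be reached.
        self._send = send_to_backend
        self._pending: deque = deque()

    def replay(self) -> int:
        """Post-charge stored transactions in order, stop at first failure."""
        sent = 0
        while self._pending:
            try:
                self._send(self._pending[0])
            except ConnectionError:
                break
            self._pending.popleft()
            sent += 1
        return sent

    def charge(self, transaction: object) -> bool:
        """Return True if charged online, False if stored for later."""
        self.replay()  # older transactions must reach the back-end first
        if not self._pending:
            try:
                self._send(transaction)
                return True
            except ConnectionError:
                pass
        self._pending.append(transaction)
        return False

    @property
    def pending_count(self) -> int:
        return len(self._pending)
```

While transactions are pending the front-end is stateful, so in a virtualized deployment its buffer has to survive a restart or a migration of the VM if post-charging is to remain possible.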


6.2 Service availability classification level

As with any service, online charging needs to ensure the availability of its part of the end-to-end service. However, besides the relative availability of the service, the impact of failures is the second important factor for service continuity for the operator. I have identified OCS as having a service availability classification level of 2B [6], because of the real-time network dependencies needed to provide continuous revenue.

Although the OCS does not always demand hard real-time guarantees, it requires that the underlying virtualization layer supports low latency and provides adequate computational resources for completion within a reasonable or predictable timeframe.

In the next section, we give an overview of the different virtualization technologies for cloud computing, to assist in the choice of infrastructure.

7 Cloud computing and virtualization

This section offers an overview of the virtualization technology for cloud computing, focusing on the real-time issues that appear therein.

The cloud computing paradigm provides a wide range of benefits through the presence of enablers, i.e. the virtualization technologies. Virtualization is seen as an efficient solution for optimum use of hardware and for improved reliability and security. It has transformed the thinking from physical to logical, treating IT resources as logical resources rather than separate physical resources. In simple words, it is a technology that introduces a software abstraction layer between the underlying hardware, i.e. the physical platform/host, and the operating system(s) (OS), e.g. guest virtual machine(s) (VM), including the applications running on top. This software abstraction layer is known as the virtual machine monitor (VMM) or hypervisor.

In practice, the specific virtualization type is always a trade-off among the proximity of control and access to the actual underlying hardware platform, the performance offered to the hosted software, and the flexibility of development and deployment. But to what extent, and what can we do about it?

7.1 Virtual machine architectures

Before describing the different types of virtualization covered in this section, it is necessary to explain the architecture of virtual machines, which is in fact closely related to the architecture of a hardware platform.

7.1.1 Hypervisor types

The design and implementation of the hypervisor is of the highest importance because it has a direct influence on the throughput of the VMs, which can be very close to that of the bare machine hardware. Hypervisors are the primary technology of choice for system virtualization since they make it possible to achieve high flexibility in how virtual resources are defined and managed. The replication provided by hypervisors is achieved by partitioning and/or virtualizing platform resources. There are two main types of hypervisors (Figure 6):


[Figure 6 diagram: two stacks. In one, the VMs (App, Bin/libs, Guest OS) run on a Hypervisor that sits directly on the Hardware. In the other, the VMs (App, Bin/libs, Guest OS) run on a Hypervisor that sits on a Host OS, which in turn runs on the Hardware.]

FIGURE 6 : TYPE 1 (BARE METAL) HYPERVISORS VERSUS TYPE 2 HYPERVISORS.

- Bare metal, or type 1, hypervisors run directly on the physical hardware platform. They virtualize the critical hardware devices, offering several independent isolated partitions, and also provide basic services for inter-partition control and communication.

- Type 2, or hosted, hypervisors run on top of an operating system that acts as a host, i.e. within a conventional operating system environment. The hypervisor layer is typically a distinct software level on top of the host operating system (which runs directly above the hardware), and the guest operating system runs at a different level.

Note: the distinction between these two types is not necessarily clear. For example, Linux's Kernel-based Virtual Machine (KVM), which effectively converts the host operating system into a type 1 hypervisor, can also be categorized as a type 2 hypervisor.
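As a practical aside, whether a Linux host can act as a KVM hypervisor at all can be checked from user space. The Python sketch below assumes an x86 Linux host, where /proc/cpuinfo lists the CPU flags vmx (Intel VT-x) or svm (AMD-V) and the KVM module exposes the device node /dev/kvm. The function names are my own, and on other platforms the check simply reports nothing found.

```python
import os

VIRT_FLAGS = {"vmx": "Intel VT-x", "svm": "AMD-V"}


def cpu_virtualization_flags(cpuinfo_text: str) -> set[str]:
    """Return the hardware virtualization flags found in /proc/cpuinfo text."""
    found: set[str] = set()
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags") and ":" in line:
            flags = line.split(":", 1)[1].split()
            found.update(f for f in flags if f in VIRT_FLAGS)
    return found


def kvm_device_present(path: str = "/dev/kvm") -> bool:
    """True if the KVM device node exists, i.e. the kvm module is loaded."""
    return os.path.exists(path)


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo", encoding="utf-8") as handle:
            text = handle.read()
    except OSError:
        text = ""  # not a Linux host, or /proc is unavailable
    flags = cpu_virtualization_flags(text)
    print("CPU extensions:", [VIRT_FLAGS[f] for f in sorted(flags)] or "none found")
    print("/dev/kvm present:", kvm_device_present())
```

Both conditions are needed in practice: the CPU flag shows hardware support, while the device node shows that the host kernel has actually taken on the hypervisor role.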

Conclusion: Type 1 hypervisors are suitable for real-time systems since their VMs are close to the hardware and can use hardware resources directly rather than going through a host operating system. A type 1 hypervisor is more efficient than a hosted architecture and delivers greater scalability, robustness and performance. Therefore, type 2 hypervisors are outside the scope of this paper.

Bare-metal hypervisors are divided into two subcategories: monolithic and micro-kernelized designs. The difference between them is the way they deal with device drivers. For example, Hyper-V (Microsoft) and Xen (open source) are micro-kernelized hypervisors that leverage para-virtualization together with full virtualization, while VMware ESXi is a monolithic hypervisor that leverages hardware emulation [7].

7.1.2 Monolithic vs Microkernelized

A monolithic hypervisor (Figure 7), such as VMware ESXi, handles all hardware access for each VM. It hosts the drivers for all the hardware (storage, network, and input devices) that the VMs need to access [7].

The biggest advantage of this design is that it does not require a host operating system. The hypervisor acts as the operating platform with drivers, so it is easily possible

to run multiple operating systems, even heterogeneous ones, on the same hardware.


Then, the hypervisor takes care of the necessary network emulation, in order to let the

VMs communicate with the outside world and with each other.

The drawbacks are limited hardware support and instability - the device drivers are directly incorporated into the layers of functionality, which means that if one driver is hit

by an update, bug, or security vulnerability, the entire virtual architecture within that

physical machine will be compromised [8].

(Figure panels: Microkernelized Hypervisor and Monolithic Hypervisor. Labels in the figure: Management Console, Parent Partition, Virtual Machines, Drivers, Hypervisor, Hardware.)

FIGURE 7: MICROKERNELIZED VS MONOLITHIC HYPERVISOR

In contrast, a micro-kernelized design (Figure 7), e.g. Hyper-V Server and Xen, does not require the device drivers to be part of the hypervisor layer. Instead, drivers for the physical hardware are installed in the operating system running in the parent partition (VM) of the hypervisor [9]. This means that there is no need to install device drivers for the physical hardware in each guest operating system running as a child partition: when these guest operating systems need to access physical hardware resources on the host computer, they simply communicate through the parent partition [10]. This communication can take place over a very fast memory-based bus if the child partition is para-virtualized, or through the emulated devices provided by the parent partition in the case of full virtualization.

Micro-kernelized hypervisors are outside the scope of this paper. It is worth mentioning, however, that studies have shown Xen's predictability to be very close to that of the bare machine (non-virtualized hardware), which makes it a candidate for use as a soft real-time hypervisor [11].

7.2 Virtualization techniques and performance characteristics

The terms for the various virtualization techniques are used in slightly different ways, and with some semantic overlap. In this section, the techniques are summarized with a focus on VMware ESXi (Elastic Sky X Integrated), and references to their different performance characteristics are given.

7.2.1 Full virtualization

Full virtualization allows unmodified guest operating systems to run by fully emulating the hardware that they believe they are running on, e.g., network adapters and other peripherals. This makes it easy to run multiple operating systems, even heterogeneous ones, on the same hardware. The hypervisor then takes care of the necessary network emulation, in order to let the VMs communicate with the outside world and with each other.


We take a closer look at VMware ESXi, as VMware is the mainstream vendor and ESXi is the hypervisor chosen by the company. ESXi is a type 1 monolithic hypervisor. It is an operating-system-independent hypervisor based on the VMkernel OS, interfacing with agents and approved third-party modules that run atop it. VMkernel is a POSIX-like (Unix-style) operating system developed by VMware that provides functionality similar to that found in other operating systems, such as process creation and control, signals, a file system, and process threads.

FIGURE 8 : ARCHITECTURE OF VMWARE ESXI

VMkernel is designed specifically to support running multiple virtual machines and provides core functionality such as resource scheduling, I/O stacks, and device drivers [12].

A full virtualization environment like ESXi causes continuous traps to the hypervisor, which intercepts the privileged instructions that a guest operating system kernel believes it is executing, but whose effect is actually emulated solely by the hypervisor. Examples of such privileged instructions are accesses to peripheral registers. The resulting performance penalty may be mitigated by resorting to hardware-assisted virtualization [13].

7.2.2 Hardware assisted virtualization

Hardware-assisted virtualization is a technique where the hardware provides additional features that speed up the execution of VMs. For example, an additional hardware layer that translates virtualized memory addresses to physical ones enables unmodified guest operating systems to manipulate their page tables without trapping; this is provided by the VT-x technology from Intel (Extended Page Tables) [13] or AMD-V from AMD (Rapid Virtualization Indexing), among others. With these extensions, VMs manipulate only virtual page tables, while the physical page tables remain under the control of the hypervisor. Another noteworthy example is a physical network adapter capable of behaving as multiple logical ones, each with its own MAC address and send/receive queues, to be used by different VMs running on the same physical system. The Single-Root I/O Virtualization (SR-IOV) technology, a PCI-SIG specification supported by Intel [14], goes beyond network peripherals and extends the concept to any PCIe peripheral, see Figure 9. These functions may be correlated to the three NFVI domains and subsequently address Compute, Network and Storage Acceleration.


FIGURE 9 : NATIVELY AND SOFTWARE SHARED

The biggest advantages of this design are improved I/O throughput, reduced CPU utilization, lower latency and improved scalability.

The drawbacks of this design are reduced portability, the loss of Live Migration, and reduced features for VM configuration and patching. Probably the major problem, however, is the lack of full support for SR-IOV in management and orchestration solutions for NFV.
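The additional translation layer described in this section can be pictured as two chained lookups: the guest controls the first table, the hypervisor controls the second. The following is a minimal sketch, assuming 4 KiB pages and flat dictionaries in place of real multi-level page tables; the names `translate`, `guest_to_host`, `guest_page_table` and `nested_page_table` are illustrative and do not come from any vendor API.

```python
PAGE_SIZE = 4096  # assumption: 4 KiB pages


def translate(addr, table):
    """Translate an address through one table (page number -> frame number)."""
    page, offset = divmod(addr, PAGE_SIZE)
    if page not in table:
        raise KeyError(f"page fault at page {page}")
    return table[page] * PAGE_SIZE + offset


def guest_to_host(guest_virtual, guest_page_table, nested_page_table):
    """Two-stage walk: guest virtual -> guest physical -> host physical."""
    guest_physical = translate(guest_virtual, guest_page_table)
    return translate(guest_physical, nested_page_table)


# The guest OS maps its virtual page 0 to guest-physical frame 5.
guest_page_table = {0: 5}
# The hypervisor maps guest-physical frame 5 to host frame 42.
nested_page_table = {5: 42}

host_addr = guest_to_host(0x10, guest_page_table, nested_page_table)
```

The point of the sketch is that the guest can change `guest_page_table` freely without involving the hypervisor, while the mapping to host memory stays in `nested_page_table`.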

7.2.3 Para-virtualization

It was the open-source Xen hypervisor that introduced the concept of para-virtualization, which is also used by Hyper-V (where Microsoft uses the term Enlightened [15]). It is a technique by which the guest operating system is modified so that it is aware of running within a VM. This avoids the wasteful emulation of virtualized hardware. Instead, the modified kernel and drivers of the guest operating system perform direct calls to the hypervisor (also known as hypercalls) whenever needed. The evolution of hardware-assisted virtualization, coupled with para-virtualization techniques, allows virtualized applications nowadays to achieve an average performance only slightly below the native one.

Para-virtualization is more suitable for real-time cloud computing since it identifies the specific components of an operating system that have to be virtualized in order to optimize performance.

The guest OS must be tailored specifically to run on top of the virtual machine monitor (VMM), which eliminates the need for the virtual machine to trap privileged instructions. Trapping, a means of handling unexpected or unallowable conditions, can be time-consuming and can adversely impact performance in systems that employ full virtualization.

The performance advantages of para-virtualization over full virtualization must be weighed against weakened isolation from a security perspective, but these aspects are outside the scope of this paper.
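The difference between trapping every privileged access and issuing explicit hypercalls can be illustrated with a toy counter of guest-to-hypervisor transitions. This is only a sketch: `ToyHypervisor` is a made-up class that models no real product, and the batch size of 8 writes per hypercall is an assumption chosen for illustration.

```python
class ToyHypervisor:
    """Counts guest-to-hypervisor transitions; not a model of any real product."""

    def __init__(self):
        self.transitions = 0
        self.device_registers = {}

    def trap_and_emulate(self, register, value):
        # Full virtualization: every privileged register write traps.
        self.transitions += 1
        self.device_registers[register] = value

    def hypercall(self, writes):
        # Para-virtualization: the modified guest hands over a batch explicitly.
        self.transitions += 1
        self.device_registers.update(writes)


def run_full(hv, writes):
    for register, value in writes:
        hv.trap_and_emulate(register, value)


def run_para(hv, writes, batch_size=8):  # batch size is an assumption
    for start in range(0, len(writes), batch_size):
        hv.hypercall(dict(writes[start:start + batch_size]))


writes = [(f"reg{i}", i) for i in range(32)]
full, para = ToyHypervisor(), ToyHypervisor()
run_full(full, writes)
run_para(para, writes)
```

Both runs leave the emulated device in the same state; what differs is the number of transitions, which is the cost the paragraphs above refer to.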


7.2.4 Container-based virtualization

A lightweight alternative to hypervisors is container-based virtualization, also called operating-system-level virtualization; sometimes it is not regarded as virtualization at all. However, since container-based virtualization can have advantages in terms of performance and scalability, we will take a closer look at it.

(Figure labels. Hypervisor-based stack: Hardware, Hypervisor, VMs each with Guest OS, Bin/libs and Apps. Container-based stack: Hardware, Host OS, Containers each with Bins/libs and Apps.)

FIGURE 10 : CONTAINER-BASED VIRTUALIZATION VERSUS HYPERVISOR-BASED VIRTUALIZATION (TYPE1)

Containers provide operating-system-level virtualization through a virtual environment that has its own process and network space, which usually imposes little to no overhead compared with creating a full-fledged VM. Each container is isolated, but all containers must share the same host OS and, where appropriate, libs/bins.

The separation can provide improved security, hardware independence, and added resource management features [16].

However, operating-system-level virtualization is not as flexible as other virtualization approaches. For example, on a Linux host, different Linux distributions can be hosted, but other operating systems such as Windows cannot [17].

Note: The Internet Engineering Task Force (IETF) has an ongoing analysis of the challenges of using VMs for NFV workloads and of how containers can potentially address these challenges [18].


7.3 Know the performance curve

In the world of networking there is an old adage: "If you have a network problem, throw bandwidth at it." This statement is usually true in that more bandwidth will make a network problem go away. A similar saying exists for compute environments: increase CPU (processing power) if there are application issues. When applying this to VNF performance, it is important to allocate the right amount of resources for the desired performance.

Most VNF vendors provide guidelines on performance from a minimum to a maximum resource allocation.

FIGURE 11 : CPS VS VCPU CORES PERFORMANCE CURVE

Using Ixia's IxLoad to test HTTP connections per second (CPS), increasing from 2 vCPU to 6 vCPU achieves significant performance improvements. When moving to 8 vCPU or beyond, the performance flattens or can even decrease.
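One way to act on such a curve is to stop adding vCPUs once the relative gain of the next step drops below a threshold. The sketch below assumes a 10 % minimum gain, and the connections-per-second figures are invented for illustration; they are not the values measured in Figure 11.

```python
def pick_vcpus(curve, min_gain=0.10):
    """Return the vCPU count after which the next step gains less than min_gain."""
    points = sorted(curve.items())
    best_vcpus, best_perf = points[0]
    for vcpus, perf in points[1:]:
        if perf < best_perf * (1 + min_gain):
            break  # the curve has flattened or turned downwards
        best_vcpus, best_perf = vcpus, perf
    return best_vcpus


# Hypothetical measurements: vCPU count -> HTTP connections per second.
measured = {2: 20000, 4: 38000, 6: 52000, 8: 53000, 10: 50000}
recommended = pick_vcpus(measured)
```

With these invented numbers the function settles on 6 vCPUs, mirroring the shape of the curve described above; a real dimensioning exercise would use the vendor's guidelines and measured data.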


8 Real-time challenges in cloud computing

This section will identify some of the real-time challenges in cloud computing.

8.1 Terminology mapping

In essence, both the real-time and the cloud computing communities have the goal of guaranteeing the assigned resources or levels of contracted services between users and the execution platform, or between VMs and the platform. However, what this means to a cloud computing person (typically coming from the fields of networking, distributed systems, or operating systems) may be somewhat different from the idea conceived from a real-time systems point of view. Therefore, a clarification of concepts and a terminology mapping are proposed in this section, as shown in Table 2.

TABLE 2 - TERMINOLOGY MAPPING FOR THE DOMAINS OF CLOUD COMPUTING AND REAL-TIME.

| Cloud terminology | Description | Real-time concern | Description |
|---|---|---|---|
| Multi-tenancy | Avoidance of interference of multiple workloads in the same platform | Spatial and temporal isolation | Applications must not interfere either on the processor resource (to avoid deadline misses) or on the usage of memory (to avoid synchronization delays that may cause deadline misses) |
| Dynamic provisioning | Autonomous configuration, allocation, and deployment of virtual cluster resources | Dynamic resource management | Resource managers are typically implemented close to (and even as part of) the real-time operating system. They include the needed policies to temporarily scale vertical (up/down) resource assignments if it does not cause any temporal or spatial conflict |
| Service Level Agreements (SLA) | Guaranteed QoS levels for applications that include the computational resources and networking performance | Resource contracts and budget enforcement | Applications negotiate with the resource managers their required resource assignments. Resource managers have admission policies that are able to determine whether a specific assignment can be fulfilled. If the contract is set, the system will guarantee it at all times |
| QoS guarantees | Quality of service with respect to network performance parameters that guarantee the level of service for multiple users | Temporal guarantees | Timeliness of the execution is required in real-time systems. Results, i.e., either operations or communications, must fulfill specific deadlines. QoS is also considered as trading off assigned resources for the quality of the output |
| | | | To ensure redundant infrastructures are in different locations. This enables a VNF instance to be implemented on different physical resources, e.g. compute resources and hypervisors, and/or be geographically dispersed as long as its overall end-to-end service performance and other policy constraints are met |
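The "resource contracts and budget enforcement" row of Table 2 describes an admission policy: a contract is accepted only if it can be guaranteed together with all contracts already accepted. A minimal sketch follows, assuming a simple utilization bound on one CPU; real admission tests depend on the scheduler in use, and the names `Contract` and `ResourceManager` are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Contract:
    name: str
    budget_ms: float   # CPU time guaranteed in each period
    period_ms: float

    @property
    def utilization(self):
        return self.budget_ms / self.period_ms


@dataclass
class ResourceManager:
    capacity: float = 1.0   # fraction of one CPU available for contracts
    admitted: list = field(default_factory=list)

    def admit(self, contract):
        used = sum(c.utilization for c in self.admitted)
        if used + contract.utilization > self.capacity + 1e-9:
            return False    # would break guarantees already given
        self.admitted.append(contract)
        return True


manager = ResourceManager(capacity=0.9)
ok_charging = manager.admit(Contract("charging", budget_ms=20, period_ms=100))
ok_rating = manager.admit(Contract("rating", budget_ms=50, period_ms=100))
ok_reporting = manager.admit(Contract("reporting", budget_ms=30, period_ms=100))
```

Here the third request is rejected because it would push the total above the assumed capacity, which is the behaviour the table describes: once a contract is set, it is guaranteed, so new contracts that endanger it are refused.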

In this line, one may think of the increasing importance of network functions virtualization, which we will take a closer look at in the next section. Still, there is an increasing need to control the temporal behavior of virtualized software, making its behavior more predictable.


9 Network Functions Virtualization

This section offers an overview of the technology and architecture for network function virtualization.

In the telecommunication industry, there is a major shift from hardware-based provisioning of network functions to a software-based provisioning paradigm where virtualized network functions are deployed in private or hybrid clouds of the CSP [2].

Network function virtualization (NFV) technology has been developed by the European Telecommunications Standards Institute (ETSI) and was revealed in 2012. ETSI NFV aims to define an architecture and a set of interfaces so that physical network functions, like routers, firewalls, content distribution networks (CDNs) and Telco applications, can be transformed from software applications designed to run on specific dedicated hardware into decoupled applications, called virtual network functions (VNFs), deployed on VMs or containers on commercial off-the-shelf (COTS) equipment.

The VMs are deployed on high-volume servers which can be located in datacenters, at network nodes, and in end-user facilities. Most VMs provide on-demand computing resources using the cloud. Cloud-computing services are offered in various formats [19]: IaaS, PaaS, Software-as-a-Service (SaaS), and Network-as-a-Service (NaaS). There is no agreement on a standard definition of NaaS; however, it is often considered to be provided under IaaS. The NFV technology takes advantage of infrastructure and networking services (IaaS and NaaS), see Figure 12, to form the network function virtualization infrastructure (NFVI) [20], also called the Telco-cloud.

(Labels in the figure: NaaS, IaaS, NFVIaaS.)

FIGURE 12 : MAPPING IAAS AND NAAS WITHIN THE NFV INFRASTRUCTURE

As mentioned above, there are different service offerings in cloud computing. Where the cloud stack is split between tenant and provider defines the type of service offering (X-as-a-Service). For traditional IT systems the whole stack is managed by the owner, whereas in e.g. PaaS the tenant is responsible for the top layers (data and application) and the PaaS provider manages everything below. In the Telco-cloud (IaaS), by comparison, the tenant (for a VNF) is responsible for the OS layer and above, and the IaaS provider manages everything below. Figure 13 below illustrates how the different offerings of cloud computing services are divided and managed.

This can vary; in some cases the OS layer is split into two, as in the figure below.


FIGURE 13 MANAGEMENT OF DIFFERENT CLOUD COMPUTING SERVICE OFFERINGS

9.1 NFV framework

The ETSI NFV group has defined the NFV architectural framework at the functional level using

functional entities and reference points, without any indication of a specific implementation.

The functional entities of the architectural framework and the reference points are listed and

defined in [21] and shown in Figure 14.

FIGURE 14: NFV REFERENCE ARCHITECTURAL FRAMEWORK

9.2 VNF

A Virtualized Network Function (VNF) is a functional element of the NFV architecture framework, as represented in Figure 14 above. A VNF could, for example, be a Telco application such as an OCS or IMS component.

Note : When designing and developing the software that provides the VNF, VNF Providers may

structure that software into software components (implementation view of software architecture) and package those components into one or more images (deployment view of software

architecture). In the following, these VNF Provider defined software components are called VNF

Components (VNFCs).


The VNF design patterns and properties are described in [22], and are briefly described next. The goal is to capture all practically relevant points in the design space.

9.2.1 VNF Internal Structure

VNFs are implemented with one or more VNFCs, and it is assumed, without loss of generality, that VNFC instances map 1:1 (Figure 15) to the NFVI Virtualized Container interface (Vn-Nf, see Figure 14). A VNFC in this case is a software entity deployed in a virtualization container, see Figure 16. A VNF realized by a set of one or more VNFCs appears to the outside as a single, integrated system, illustrated as circles in the figure.

FIGURE 15 : VNF INSTANTIATION

Parallelizable: instantiated multiple times per VNF instance, but with constraints on the number of instances.

Non-parallelizable: instantiated once per VNF instance.

FIGURE 16 : VNF COMPOSITION

Remark: Virtualization containers in a VNF context are not necessarily the same as the containers described in section 7.2.4.

9.2.2 VNF states

Each VNFC of a VNF could either be stateless or stateful.

Statefulness creates another level of complexity: for example, session (transaction) consistency has to be preserved and has to be taken into account in procedures such as load balancing.

The data repository holding the externalized state may itself be a stateful VNFC in the

same VNF.

The data repository holding the externalized state may be an external VNF.
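Externalizing state means that any VNFC instance can serve any request of a session, because the session state lives in a shared repository rather than in the instance. A minimal sketch follows, with an in-memory dictionary standing in for the data repository; the class names and the `used_units` field are hypothetical.

```python
class StateRepository:
    """Stands in for the data repository holding externalized session state."""

    def __init__(self):
        self._sessions = {}

    def load(self, session_id):
        return self._sessions.get(session_id, {"used_units": 0})

    def store(self, session_id, state):
        self._sessions[session_id] = state


class StatelessVnfc:
    """Keeps no session state between calls; everything goes via the repository."""

    def __init__(self, name, repository):
        self.name = name
        self.repository = repository

    def handle(self, session_id, units):
        state = self.repository.load(session_id)
        state = {"used_units": state["used_units"] + units}
        self.repository.store(session_id, state)
        return state["used_units"]


repository = StateRepository()
instance_a = StatelessVnfc("vnfc-a", repository)
instance_b = StatelessVnfc("vnfc-b", repository)

first = instance_a.handle("session-1", 10)
second = instance_b.handle("session-1", 5)   # a different instance continues the session
```

The trade-off is that the repository itself becomes the stateful element, whether it is a VNFC in the same VNF or an external VNF, and it must then meet the consistency and availability requirements.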


9.2.3 VNF Scaling

A VNF can be scaled by scaling one or more of its VNFCs, depending on the type of workload. Three basic models of VNF scaling have been identified:

Auto scaling: The VNFM triggers the scaling of the VNF according to the rules defined for the VNF in the VNFM. For the VNFM to trigger the scaling, the VNF instance's state has to be monitored by tracking its events at infrastructure level and/or VNF level. Infrastructure-level events are generated by the VIM. VNF-level events may be generated by the VNF instance or its EM. Auto scaling supports both horizontal and vertical scaling.

On-demand scaling: The VNFM triggers the scaling of the VNF on explicit request from the VNF or its EM, based on monitoring of the states of the VNF's VNFCs. On-demand scaling supports both horizontal and vertical scaling.

Scaling based on management request: Manually triggered scaling. The OSS/BSS triggers scaling based on rules in the NFVO, or a human operator triggers scaling through the VNFM.

Horizontal scaling (out/in) allows adding/removing VNFC instance(s) that belong to the VNF.

Vertical scaling (up/down) allows dynamically adding/removing resources to/from existing VNFC instances that belong to the VNF.
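A rule of the kind a VNFM could evaluate for auto scaling can be sketched as a function from monitored state to a scaling action. The load thresholds, the preference for adding instances before adding resources, and all names below are assumptions made for illustration; they are not taken from the ETSI specifications.

```python
from dataclasses import dataclass


@dataclass
class VnfcState:
    instances: int
    max_instances: int        # 1 for a non-parallelizable VNFC
    vcpus_per_instance: int
    max_vcpus: int
    cpu_load: float           # average load, 0.0 to 1.0


def scaling_action(state, high=0.80, low=0.30):
    """Decide on a scaling action from the monitored load (thresholds assumed)."""
    if state.cpu_load > high:
        if state.instances < state.max_instances:
            return "scale-out"    # add a VNFC instance
        if state.vcpus_per_instance < state.max_vcpus:
            return "scale-up"     # add resources to the existing instances
        return "none"             # already at both limits
    if state.cpu_load < low and state.instances > 1:
        return "scale-in"         # remove a VNFC instance
    return "none"


busy_parallel = scaling_action(VnfcState(2, 4, 2, 8, cpu_load=0.90))
busy_single = scaling_action(VnfcState(1, 1, 2, 8, cpu_load=0.90))
```

For a parallelizable VNFC the rule adds an instance, while for a non-parallelizable one (`max_instances=1`) it can only add resources to the existing instance.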

9.2.4 VNF Load balancing

There are different types of load balancing (LB):

VNF-internal LB

The Peer NF sees the VNF instance as one logical NF. The VNF has at least one VNFC that can be replicated and an internal LB (which is also a VNFC) that directs flows between the different VNFC instances, see Figure 17.


FIGURE 17 : VNF INTERNAL LOAD BALANCER

VNF-external LB

The Peer NF sees the many VNF instances as one logical NF. A load balancer external to the VNF (which may be a VNF itself) directs flows between the different VNF instances (important: not the VNFCs), see Figure 18.

FIGURE 18 : VNF EXTERNAL LOAD BALANCER

End-to-End Load Balancing

The Peer NF sees the many VNF instances as many logical NFs. In this case the Peer NF itself

contains load balancing functionality to balance between the different logical interfaces.

FIGURE 19 : VNF E2E LOAD BALANCING
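Whichever of the three models is used, a balancer in front of stateful instances has to send all messages of one session to the same instance. A minimal sketch of such session affinity follows, assuming a hash of the session identifier is taken modulo the number of instances; the class name and the instance names are hypothetical.

```python
import hashlib


class SessionAffinityBalancer:
    """Maps every session id to one fixed instance from a static list."""

    def __init__(self, instances):
        self.instances = list(instances)

    def pick(self, session_id):
        digest = hashlib.sha256(session_id.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.instances)
        return self.instances[index]


balancer = SessionAffinityBalancer(["vnfc-1", "vnfc-2", "vnfc-3"])
target_first = balancer.pick("session-42")
target_again = balancer.pick("session-42")   # same session, same instance
```

A limitation of this simple scheme is that changing the number of instances remaps most sessions, so scaling a stateful VNFC would need either consistent hashing or externalized state.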

9.3 NFV Management and Orchestration

The NFV Management and Orchestration (NFV-MANO), called MANO from now on, acts as the heart and brain of the NFV architecture. The NFV decoupling of Network Functions from the physical infrastructure results in a new set of management functions. Their handling requires a new and different set of management and orchestration functions. MANO has the role of managing the NFVI and orchestrating the allocation of resources needed by the VNFs. Such coordination is necessary because of the decoupling of the Network Functions software from the NFVI.

Traditional network functions need just one management system, i.e. an FMS, at most supported by an OSS. The NFV network, on the other hand, needs multiple managers, i.e. a VIM, a VNF Manager and an Orchestrator.

See Appendix C: MANO architecture for more details.


10 NFV challenges

This section identifies some of the VNF challenges in NFV, with suggested solutions and requirements.

Although NFV is a promising solution for CSPs, it faces certain challenges that could degrade its performance and hinder its implementation in the telecommunications industry. In this section, some of the NFV requirements and challenges, together with proposed solutions, are discussed. Table 3 summarizes this section.

TABLE 3 : NFV CHALLENGES AND REQUIREMENTS

| Challenges | Description | Solution and Requirements |
|---|---|---|
| Security | Virtualization security risks according to functional domains: 1) Virtualization environment domain (Hypervisor): unauthorized access or data leakage. 2) Computing domain: shared computing resources (CPU, memory, disk, etc.). 3) Infrastructure networking domain: shared logical-networking layer (vSwitches); shared physical NICs; network isolation for tenants and applications. | Security implementations according to functional domains: 1) Virtualization environment domain (Hypervisor): isolation of the served virtual-machine space, with access provided only with authentication controls; use virtual data centers (VDCs) that serve as resource containers and zones of isolation. 2) Computing domain: private and shared disk/memory allocations should be erased before their re-allocation, and the erasure should be verified; lawful encryption: data should be used and stored in an encrypted manner by which exclusive access is provided only to the VNF. 3) Infrastructure domain (networking): usage of secured networking techniques (TLS, IPSec, or SSH). |
| Computing performance | The VNF should provide comparable performance to an NF running on proprietary hardware equipment. | VNF software could achieve high performance using the following techniques: multithreading to be executed over multiple cores, or scaling over different hosts; independent memory structures to avoid operating-system deadlocks; the VNF should implement its own network stack; processor affinity techniques should be implemented; it is important to allocate the proper amount of vCPU to each VM used by the VNF, since over-provisioning a VNF with too much CPU may be wasteful or even degrade the performance. |
| VNF interconnection | A virtualized environment has different approaches from classical network function interconnection. | VNFs should take advantage of accelerated vSwitches and use NICs that are single-root I/O virtualization (SR-IOV) [14] compliant. Caveat: this breaks live migration of VMs. |
| Portability | VNFs should be decoupled from any underlying hardware and software. VNFs should be deployable on different virtual environments to take advantage of virtualization techniques like live migration. | The VNF development should be based on a cross-platform virtual resource manager that ensures its portability. The NFVO should support a heterogeneous VNF environment to reduce vendor dependencies, e.g. OpenStack Tacker [23]. |
| Operation and management; existence with legacy networks; carrier-grade service assurance | VNFs should be easy to manage and migrate with existing legacy systems without losing the specification of a carrier-grade service. | To achieve the desired operation and management performance, a standard template of NFV framework entities should be well-defined. It should be able to interact with legacy management systems with minimal effects on existing networks. The NFVO must monitor network function performance almost in real time. |
| Scalability - Resource Allocation & Optimization | VNFs should be able to temporarily scale resource assignments vertically if it does not cause any temporal or spatial conflict. | To achieve automated dynamic scaling of the resources, the design patterns of Auto- or On-demand scaling should be implemented. For a more manual approach, the Scaling based on management request pattern is good enough. |
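The processor affinity technique listed in Table 3 can be tried from inside a guest with the Python standard library. The sketch below uses `os.sched_getaffinity` and `os.sched_setaffinity`, which are available on Linux only, and the helper `pin_to_cpu` is a hypothetical name. Note the assumption: this pins a process to a vCPU inside the VM, whereas the mapping of vCPUs to physical cores is decided by the infrastructure.

```python
import os


def pin_to_cpu(cpu):
    """Pin the calling process to one CPU.

    Returns the resulting affinity set, or None where the platform
    does not offer the Linux scheduling calls.
    """
    if not hasattr(os, "sched_setaffinity"):
        return None
    allowed = os.sched_getaffinity(0)      # 0 means the calling process
    if cpu not in allowed:
        raise ValueError(f"CPU {cpu} is not in the allowed set {sorted(allowed)}")
    os.sched_setaffinity(0, {cpu})
    return os.sched_getaffinity(0)
```

A latency-sensitive worker would typically call `pin_to_cpu` once at start-up, choosing a CPU from the set it is already allowed to run on.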


11 Solution architecture

This section proposes a solution architecture for OCS as a VNF in the Telco-cloud (NFVI) and identifies architectural impacts.

The OCS system has the following high-level requirements:

Front-End

- Site + Geo redundant
- Stateless (see remark in section 6.1)
- Active/active
- Communicates with BE+ELM via the internal network for charging and monitoring.
- Communicates with the Network domain (EPG/MTAS/DSC) via the signaling network (SIG).
- Operation and maintenance (OAM) network for admin and monitoring
- SAN for backups
- RTO FE-BE max 200 ms (avg. ~100 ms)

Back-End

- Site + Geo redundant
- Average latency may not exceed 10 ms between the sites.
- Stateful
- Active/Standby
- Communicates with FE+ELM via the internal network for charging and monitoring.
- Needs an OAM network connection for admin and integration with the Business Support System (BSS)
- Needs a SAN for backups
- Supports horizontal scaling of the application.
- Does not support horizontal scaling of the relational database.
- DB replication
- No single point of failure (SPOF)

Element Manager (ELM): alarm/event monitor for OCS.

- Geo redundant (2 nodes)
- Active/Standby
- Communicates with FE+BE via the internal network.
- Needs an OAM network connection for O&M and integration with the Operation Support System (OSS).
- Located on the same subnet as FE & BE to work as deployment server.

We will start by describing a generic VNF cluster architecture for OCS and later deploy the components therein.
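The numeric requirements listed in this section (FE-BE within 200 ms, inter-site latency of at most 10 ms on average, geo redundancy for the Back-End and two ELM nodes) lend themselves to an automated check against measurements from a test deployment. The sketch below treats the 200 ms FE-BE figure as a hard upper bound; the `Measurement` fields and the function name are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    fe_be_rto_ms: float            # measured FE-BE figure, compared with 200 ms
    inter_site_latency_ms: float   # average latency between the sites
    be_sites: int                  # number of sites hosting a Back-End
    elm_nodes: int                 # number of ELM nodes


def violations(m):
    """List the requirements from this section that the measurement breaks."""
    found = []
    if m.fe_be_rto_ms > 200:
        found.append("FE-BE exceeds 200 ms")
    if m.inter_site_latency_ms > 10:
        found.append("average inter-site latency exceeds 10 ms")
    if m.be_sites < 2:
        found.append("Back-End is not geo redundant")
    if m.elm_nodes < 2:
        found.append("ELM needs 2 nodes")
    return found


healthy = violations(Measurement(110, 6.5, be_sites=2, elm_nodes=2))
degraded = violations(Measurement(240, 12.0, be_sites=1, elm_nodes=2))
```

An empty list means that none of the checked requirements is violated; the qualitative requirements (stateless Front-End, no SPOF) still need an architectural review.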


11.1 Generic VNF Cluster Architecture

The OCS VNFs are built according to a Component Based Architecture (CBA), using the same component base for network connectivity. Generally, VNFs follow the same network connectivity design independently of the VNF type/function. However, there is a degree of flexibility in network connectivity, allowing adjustments, if necessary, to achieve a better overall network design.

Generally speaking, every VNF can connect to a number of different Virtual Routing Functions (VRFs) on site routers. However, in order to achieve resiliency at VNF level, a VNF must be connected with at least two interfaces to each VRF/VPN for external communication.

Figure 20 shows an example of a generic VNF cluster architecture with connectivity to multiple subnets for the signaling (SIG) VRF.

FIGURE 20 : GENERIC VNF CLUSTER ARCHITECTURE WITH CONNECTION TO MULTIPLE SIG SUBNETS

There are two distinct types of VNF in the cluster architecture: System Controller (SC) VNFs and Payload (PL) VNFs. The SC VNFs are non-traffic nodes that handle system management/control of the PL VNFs. The PL VNFs are traffic nodes and are connected to the signaling network.

The figure above shows the LB stretched over both types of VNF: SC and PL. In order to keep a homogeneous networking design for all VNFs in the NFVI, two SCs are used for communicating externally with both the VRF-enabled OM network (OM-SC) and the emergency OM network (OM-CN). OM-SC is used for communicating with the BSS domain. OM-CN is also used for some auxiliary functions, such as NTP sync.

Independently of the number of PL VNFs included in the cluster as a result of the dimensioning output, there are two dedicated PL VNFs which communicate externally through the Signaling VRF.

Independently of the VNF type, there is an internal (backplane) network for intra-cluster communication. Different types of VNF may require different numbers of internal networks for their operation. The number of internal networks may vary between one and three; however, one internal network is always present in any VNF. The backplane is also used for backup/restore via the vSAN.
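The connectivity rules stated in this section (at least two interfaces per external VRF, and between one and three internal networks) can be checked mechanically against a deployment description. The dictionary layout, the interface names and the VNF names in this sketch are assumptions made for illustration.

```python
def connectivity_problems(vnf):
    """Check one VNF description against the connectivity rules of this section."""
    problems = []
    for vrf, interfaces in vnf["external"].items():
        if len(interfaces) < 2:
            problems.append(f"{vnf['name']}: VRF {vrf} has fewer than two interfaces")
    if not 1 <= len(vnf["internal_networks"]) <= 3:
        problems.append(f"{vnf['name']}: expected one to three internal networks")
    return problems


# Hypothetical descriptions of one payload VNF and one misconfigured VNF.
payload_vnf = {
    "name": "PL-1",
    "external": {"SIG": ["eth1", "eth2"]},
    "internal_networks": ["backplane"],
}
broken_vnf = {
    "name": "PL-2",
    "external": {"SIG": ["eth1"]},
    "internal_networks": [],
}

payload_report = connectivity_problems(payload_vnf)
broken_report = connectivity_problems(broken_vnf)
```

Running such a check before instantiation catches a missing redundant interface early, instead of discovering it during a failover test.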

It is important to note that the VNF cluster architecture is not limited to this design; however, it was chosen for the following reasons:

- Homogeneous connectivity of all VNFs to external networks.
- Two VNFs of each kind are chosen in order to provide redundancy.
- Traffic dimensioning results show that two traffic VNFs for OM and Signaling are enough to cope with the traffic load.
- It is possible to increase the number of traffic VNFs for each type of traffic during runtime (horizontal scaling), although this will most likely require a cluster reboot for the changes to take effect.

Another example of network connectivity is when all PL nodes take part in sending and receiving traffic along with load balancing of processing capacity. This example, as generic as the previous one, is shown in Figure 21.

(Labels in the figure: Net: Backplane (internal); LB; four VNF (PL), each with a VRF, on Net: SIG A; two VNF (SC), each with a VRF, on Net: OM-SC and Net: OM-CN.)

FIGURE 21 : GENERIC VNF CLUSTER ARCHITECTURE WITH ALL PL VNF CONNECTED TO THE SAME SUBNET AND TAKING PART IN LOAD SHARING OF BOTH TRAFFIC AND PROCESSING CAPACITY

The BE and the ELM in OCS are not traffic nodes and will therefore not follow the generic design with separate SC and PL. They will be plain VNFs with an external load balancer and VRF.

Note: ELM in this context is not the same as element manager (EM) in the NFV.

11.2 Deploying OCS as VNFs

The OCS system components are deployed as follows:

VNFs

Front-Ends: Each site has two payload FE-VNFs, each a non-parallelizable instantiation of one VNF container with capabilities to handle real-time transactions (DCCA-server) and trigger online charging (OCF-client). Each site also has two service control FE-VNFs, each a non-parallelizable instantiation of a VNF container that handles operation and maintenance (OAM) of all front-end VNFs. Site B has the same instantiation. The payload VNFs are active at both sites to take traffic. Only one of the system control VNFs is active across all sites; the rest are standby.

Figure 22 shows a deployment view of OCS Front-End VNFs with instantiation of VNFCs with associated function components for one site.

FIGURE 22 : DEPLOYMENT VIEW OF OCS FRONT-END AS VNFS FOR ONE SITE

Back-Ends: Each site has one BE-VNF with parallelizable instantiation of three components. VNFC1 has capabilities to handle rating (RT), account balance management (ABMF) and charging (OCF-server). VNFC2 holds the database (SDP). VNFC3 acts as system manager/controller (OAM) for the back-end.

ELM: Each site has one ELM-VNF with non-parallelizable instantiation of one VNF container with capabilities for alarm and event handling (ELM), as well as management of the ELM itself (OAM).

Figure 23 shows a deployment view of OCS Back-End VNFs with instantiation of VNFCs with associated function components for one site.

FIGURE 23 : DEPLOYMENT VIEW OF OCS BACK-END AND ELM AS VNFS FOR ONE SITE
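The per-site deployment described above can be summarized as a small data model. This is only an illustrative sketch: the class names, the instance naming scheme (for example "A-FE-PL-1") and the "container" component label are hypothetical, while the component functions (DCCA-server, OCF-client, RT, ABMF, OCF-server, SDP, ELM, OAM) are taken from the text. It assumes one BE-VNF per site.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class VNFC:
    name: str
    functions: List[str]


@dataclass
class VNF:
    name: str
    role: str              # "payload", "control", "back-end" or "elm"
    parallelizable: bool
    components: List[VNFC] = field(default_factory=list)


def build_site(site: str) -> List[VNF]:
    """Build the VNF inventory for one site as described in the text."""
    fe_payload = [
        VNF(f"{site}-FE-PL-{i}", "payload", False,
            [VNFC("container", ["DCCA-server", "OCF-client"])])
        for i in (1, 2)
    ]
    fe_control = [
        VNF(f"{site}-FE-SC-{i}", "control", False,
            [VNFC("container", ["OAM"])])
        for i in (1, 2)
    ]
    back_end = VNF(f"{site}-BE-1", "back-end", True, [
        VNFC("VNFC1", ["RT", "ABMF", "OCF-server"]),
        VNFC("VNFC2", ["SDP"]),
        VNFC("VNFC3", ["OAM"]),
    ])
    elm = VNF(f"{site}-ELM-1", "elm", False,
              [VNFC("container", ["ELM", "OAM"])])
    return fe_payload + fe_control + [back_end, elm]


site_a = build_site("A")
```

Calling `build_site("B")` yields the same structure for the second site, matching the statement that Site B has the same instantiation.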

The reason for having two BE-VNFs on each site is to provide redundancy (no SPOF). Most likely just one BE-VNF per site is enough, but it is unclear to me whether the virtual routers can be configured redundantly for a single VNF at this point. Further research is needed.

In principle, a cluster is split by keeping the primary database on one site and moving the standby database to another site. Furthermore, the traffic handling nodes (FE) are divided between the sites. The external VNF load balancer for ELM and BE shall direct flows to the active VNF that has the appropriate configured/learned state. Replication is done between the active and standby databases. Figure 24 shows the geographically split cluster following a high availability (HA)/disaster recovery (DR) model. Note: the example has one BE-VNF per site.

FIGURE 24 : GEOGRAPHICALLY SPLIT CLUSTER OVERVIEW
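The active/standby behaviour of the geographically split cluster can be sketched as follows. This is a simplified illustration, not the actual load balancer logic: the class and method names are hypothetical, health is reduced to a boolean flag, and the assumption that the load balancer itself promotes the standby is made only to keep the example self-contained.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class VnfInstance:
    site: str
    state: str            # "active" or "standby"
    healthy: bool = True


class ExternalLoadBalancer:
    """Directs flows to the single active VNF instance."""

    def __init__(self, instances: Dict[str, VnfInstance]) -> None:
        self.instances = instances

    def target(self) -> str:
        """Return the name of the healthy active instance."""
        for name, inst in self.instances.items():
            if inst.state == "active" and inst.healthy:
                return name
        raise RuntimeError("no healthy active instance")

    def fail_over(self) -> str:
        """Promote a healthy standby if the active instance is lost."""
        for name, inst in self.instances.items():
            if inst.state == "active" and inst.healthy:
                return name  # active is fine, nothing to do
        for inst in self.instances.values():
            if inst.state == "active":
                inst.state = "standby"  # demote the failed instance
        for name, inst in self.instances.items():
            if inst.state == "standby" and inst.healthy:
                inst.state = "active"
                return name
        raise RuntimeError("no healthy standby to promote")


lb = ExternalLoadBalancer({
    "BE-A": VnfInstance(site="A", state="active"),
    "BE-B": VnfInstance(site="B", state="standby"),
})
```

In this sketch, marking "BE-A" as unhealthy and calling `lb.fail_over()` makes the load balancer point to "BE-B" on site B. Database replication between the sites is not modelled.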

The BE-VNF and FE-VNF are deployed in a single-tenant virtual data center (vDC) on each site to isolate the served VM space, with access granted only through authentication controls, as shown in Figure 25. The VNFs in the vDC should be able to temporarily scale their resource assignments vertically, provided this does not cause any temporal or spatial conflict. The placement of Allocation Models in Provider vDCs must be planned carefully [24]. The average latency between site A and site B must not exceed 10 ms during normal operation.

FIGURE 25 : TENANTS VIEW OF VIRTUAL DATA CENTERS EXECUTION WITH OCS VNFS (see footnote 10)

Footnote 10: Note that the figure is simplified, e.g. missing load balancer for the OAM network.

The business support system (BSS) communication via the OAM network should be encrypted. The VNFs should take advantage of accelerated vSwitches and use NICs that support single-root I/O virtualization (SR-IOV). A VNFC VM should not have more than 6 vCPUs; if more capacity is needed, it should be scaled horizontally.
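The two numeric constraints stated in this section (an average inter-site latency of at most 10 ms and at most 6 vCPUs per VNFC VM) can be expressed as simple checks. The function names and the sample values are hypothetical; only the two limits come from the text.

```python
import math
from statistics import mean
from typing import Sequence

MAX_VCPU_PER_VM = 6         # limit stated in the text
MAX_AVG_LATENCY_MS = 10.0   # inter-site limit stated in the text


def vm_instances_needed(total_vcpu: int,
                        max_vcpu: int = MAX_VCPU_PER_VM) -> int:
    """Number of VMs needed so that no VM exceeds max_vcpu vCPUs."""
    if total_vcpu <= 0:
        raise ValueError("total_vcpu must be positive")
    return math.ceil(total_vcpu / max_vcpu)


def latency_within_limit(samples_ms: Sequence[float],
                         limit_ms: float = MAX_AVG_LATENCY_MS) -> bool:
    """True if the average of the latency samples is within the limit."""
    return mean(samples_ms) <= limit_ms
```

For example, a hypothetical demand of 14 vCPUs would be spread over three VMs rather than placed on one larger VM.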

11.2.1 Scaling and fault handling

The ELM component monitors all of the OCS VNFs and can trigger on-demand scaling towards the VNFM. Horizontal scaling is supported by the FE-VNF, by adding payload VNFs. The BE-VNF will only support vertical scaling.

ELM also sends alarms related to application, middleware and OS to the fault management system on the OSS side. No EMs are introduced at this point.

In case of a site failover for the BE-VNF, the external VNF LB must be made aware of the failover and point to the active VNF. The same goes for the system control FE-VNF.
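The scaling rules above can be sketched as a small decision function of the kind the ELM might evaluate before sending a request to the VNFM. The function name, the utilization metric and the 0.8 threshold are assumptions for illustration; the text only states which scaling direction each VNF type supports.

```python
from enum import Enum


class ScalingAction(Enum):
    NONE = "none"
    SCALE_OUT = "scale-out"  # horizontal: add payload VNFs
    SCALE_UP = "scale-up"    # vertical: add resources to the existing VNF


def scaling_request(vnf_type: str, utilization: float,
                    threshold: float = 0.8) -> ScalingAction:
    """Pick the scaling action to request for a monitored VNF.

    vnf_type is "FE" or "BE"; utilization is a hypothetical load
    figure between 0.0 and 1.0.
    """
    if vnf_type not in ("FE", "BE"):
        raise ValueError(f"unknown VNF type: {vnf_type}")
    if utilization < threshold:
        return ScalingAction.NONE
    if vnf_type == "FE":
        return ScalingAction.SCALE_OUT
    return ScalingAction.SCALE_UP
```

The asymmetry between the two branches is the point of the sketch: an overloaded FE-VNF gets more payload instances, while an overloaded BE-VNF can only be given more resources.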

11.3 Analysis of the Solution architecture

I will not draw any conclusions as to whether the OCS architecture is compliant with the Telco-cloud based on this theoretical study. Further analysis and a suggested proof of concept are needed before that. From a theoretical point of view, however, and bearing in mind that the investigation time has been very short, I have not found any major obstacles that would prevent a deployment. The lack of an API between EM and VNFM has not been identified as critical, as OCS is a fairly static system. My only concern, from a scalability perspective, is that the Back-End does not have the capability of horizontal scaling with its current architecture. Based on the performance curve, there is an obvious risk of capacity shortage as vertical scaling reaches its limits.

One alternative to support horizontal scaling of the Back-End is to introduce a new component, the Account Finder (AF). The AF should hold the location information of which Back-End holds which subscriber's account balance state. Another alternative could be customer segmentation. How this affects the rest of the OCS architecture is out of scope for this paper, as further research is needed.
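The two alternatives can be contrasted with a minimal sketch. Both parts are illustrative assumptions: the AccountFinder class is a plain in-memory lookup table, and the hash-based function is only a stand-in for whatever segmentation rule an operator would actually choose. Subscriber identifiers and Back-End names are hypothetical.

```python
import hashlib
from typing import Dict, List


class AccountFinder:
    """Lookup of which Back-End holds a subscriber's balance state."""

    def __init__(self) -> None:
        self._location: Dict[str, str] = {}

    def register(self, subscriber_id: str, back_end: str) -> None:
        self._location[subscriber_id] = back_end

    def locate(self, subscriber_id: str) -> str:
        # Raises KeyError if the subscriber is unknown.
        return self._location[subscriber_id]


def segment_back_end(subscriber_id: str, back_ends: List[str]) -> str:
    """Static segmentation: map a subscriber to a Back-End by hash."""
    if not back_ends:
        raise ValueError("back_ends must not be empty")
    digest = hashlib.sha256(subscriber_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(back_ends)
    return back_ends[index]


af = AccountFinder()
af.register("46700000001", "BE-1")
```

The design trade-off is that the lookup table allows accounts to be moved between Back-Ends at the cost of an extra component on the charging path, while a static rule needs no lookup but makes later rebalancing harder.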

12 Future directions

This section points out open areas of research related to NFV. They are additional to the ones identified earlier in this paper and were encountered during the research but not highlighted.

Network virtualization for cloud computing focuses on the different networking layers (layer 3, 2, or 1). For example, Layer 3 VPNs or Virtual Private Networks (L3VPN) are distinguished by their use of layer 3 protocols (e.g., IP or MPLS) in the VPN backbone to carry data between the distributed end points, possibly with heterogeneous tunneling techniques. QoS with respect to network performance has to be guaranteed even when multiple users are sharing a specific infrastructure simultaneously. Some studies (such as [25]) have shown that performance can improve significantly if virtual machines are interconnected via a high-speed cluster interconnect. There are some implementations that back up this idea based on the usage of InfiniBand [26], providing improved network latencies compared to IaaS solutions based on Ethernet. However, merging networking research with real-time design and scheduling techniques is an open area of research.

The VNFM (Appendix C: MANO architecture) is attracting a lot of interest from all directions, while the ETSI standards are not yet set in stone, and neither are the companies' MANO strategies and products [27]. As the NFV initiative has developed over the past several years, the focus of attention in NFV management has shifted perceptibly. Initially, the VIM (e.g., OpenStack, VMware, etc.) at the bottom of the stack was the issue; then, the special requirements and complexities of Telco network orchestration kicked in and attention shifted up to the NFVO at the top. So now it is the turn of the VNFM, which, notwithstanding the awesome responsibilities of the NFVO, is the bit in the middle that often turns out to be the most contentious.

Put very simply, the VNFM is responsible for the lifecycle management of the VNF under the control of the NFVO, which it achieves by instructing the VIM. However, this is the big question: who is best placed to supply the VNFM? Vendors are taking different approaches to VNFM development; they will need to be harmonized if carriers are to realize multi-vendor NFV MANO. Is the mark of a good VNF supplier one that also provides its own VNFM? Is the mark of a good MANO supplier one that can accommodate a VNF without a VNFM? Is the mark of a good NFVI platform vendor one that takes away the need for a VNF supplier to even develop a VNFM? There are likely many more angles to explore around the VNFM, but from a CSP's perspective, how to reduce the risk of multi-vendor NFV implementations is an open area of research.

13 Conclusions

Although it has been a reality for some years, cloud computing is a fairly new paradigm for dynamically provisioning computing services (X-as-a-service). These services are located in data centers and use virtualization technology that allows server consolidation and efficient resource usage in general. In the last decades, important advances, mainly at the machine virtualization, network, and storage levels, have contributed to the widespread usage and adoption of this paradigm in different domains.

Real-time application domains are still behind in the full adoption of cloud computing, due to

their strong timing requirements and needed predictability guarantees. Merging cloud computing with real-time is a complex problem that requires real-time virtualization technology to

consolidate the predictability capabilities.

This paper has analyzed some of the problems and challenges for achieving real-time cloud computing as a first step towards presenting an abstract map of the situation today, identifying the elements needed on different levels to make it happen. The presented concerns range from the hypervisor structure and role, the different possible types of virtualization and their respective performance, and general resource management concerns, to the important role of the network in the overall picture of virtualization technology. For the latter, this paper has described the OCS architecture, the different components and how they interact, and the real-time challenges that appear therein. A terminology mapping between the cloud and real-time systems domains has been established in order to connect both areas.

Furthermore, an overview of the technology and architecture for NFV, which aims to revolutionize the telecommunication industry by decoupling network functions from the underlying proprietary hardware, has been presented. Although NFV is a promising solution for CSPs, it faces certain challenges that could degrade its performance and hinder its implementation in the telecommunications industry. Some of the NFV challenges and proposed solutions have been discussed. Lastly, a proposed solution architecture for OCS as VNFs in the Telco-cloud (NFVI)