The Eigen-Decomposition:

Eigenvalues and Eigenvectors

Hervé Abdi¹

1 Overview

Eigenvalues and eigenvectors are numbers and vectors associated with square matrices; together they provide the eigen-decomposition of a matrix, which analyzes the structure of this matrix. Even though the eigen-decomposition does not exist for all square matrices, it has a particularly simple expression for a class of matrices often used in multivariate analysis, such as correlation, covariance, or cross-product matrices. The eigen-decomposition of this type of matrix is important in statistics because it is used to find the maximum (or minimum) of functions involving these matrices. For example, principal component analysis is obtained from the eigen-decomposition of a covariance matrix and gives the least-squares estimate of the original data matrix.

Eigenvectors and eigenvalues are also referred to as characteristic vectors and latent roots (or roots of the characteristic equation); in German, "eigen" means "specific of" or "characteristic of". The set of eigenvalues of a matrix is also called its spectrum.

¹ In: Neil Salkind (Ed.) (2007). Encyclopedia of Measurement and Statistics. Thousand Oaks (CA): Sage.

Address correspondence to: Hervé Abdi, Program in Cognition and Neurosciences, MS: Gr.4.1, The University of Texas at Dallas, Richardson, TX 75083-0688, USA.

E-mail: herve@utdallas.edu http://www.utd.edu/herve

Hervé Abdi: The Eigen-Decomposition

Au 1

8

u1

u2

1

3

-1

12

Au 2

-1

a

b

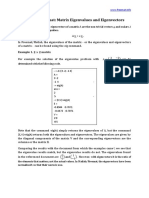

Figure 1: Two eigenvectors of a matrix.

2 Notations and definition

There are several ways to define eigenvectors and eigenvalues. The most common approach defines an eigenvector of the matrix A as a non-zero vector u that satisfies the following equation:

$$\mathbf{A}\mathbf{u} = \lambda\mathbf{u} . \tag{1}$$

When rewritten, the equation becomes:

$$(\mathbf{A} - \lambda\mathbf{I})\mathbf{u} = \mathbf{0} , \tag{2}$$

where λ is a scalar called the eigenvalue associated with the eigenvector.

In a similar manner, we can also say that a vector u is an eigenvector of a matrix A if multiplying it by A changes only its length: the vector stays on the same line, although a negative eigenvalue reverses its orientation.

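For example, the matrix:

$$\mathbf{A} = \begin{bmatrix} 2 & 3 \\ 2 & 1 \end{bmatrix} \tag{3}$$

has the eigenvectors:

$$\mathbf{u}_1 = \begin{bmatrix} 3 \\ 2 \end{bmatrix} \quad \text{with eigenvalue} \quad \lambda_1 = 4 \tag{4}$$

and

$$\mathbf{u}_2 = \begin{bmatrix} -1 \\ 1 \end{bmatrix} \quad \text{with eigenvalue} \quad \lambda_2 = -1 \tag{5}$$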

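We can verify (as illustrated in Figure 1) that only the length of u1 and u2 is changed when one of these two vectors is multiplied by the matrix A:

$$\begin{bmatrix} 2 & 3 \\ 2 & 1 \end{bmatrix} \begin{bmatrix} 3 \\ 2 \end{bmatrix} = 4 \begin{bmatrix} 3 \\ 2 \end{bmatrix} = \begin{bmatrix} 12 \\ 8 \end{bmatrix} \tag{6}$$

and

$$\begin{bmatrix} 2 & 3 \\ 2 & 1 \end{bmatrix} \begin{bmatrix} -1 \\ 1 \end{bmatrix} = -1 \begin{bmatrix} -1 \\ 1 \end{bmatrix} = \begin{bmatrix} 1 \\ -1 \end{bmatrix} . \tag{7}$$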
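The check in Equations 6 and 7 can also be done numerically. The following is a minimal sketch in plain Python (no third-party library); the helper names `eigenvalues_2x2` and `matvec` are illustrative choices, not part of the original text. It obtains the eigenvalues of a 2 × 2 matrix as the roots of det(A − λI) = λ² − trace{A}λ + |A| = 0, then multiplies A by each eigenvector.

```python
import math

# Example matrix from Equation 3.
A = [[2.0, 3.0],
     [2.0, 1.0]]

def eigenvalues_2x2(m):
    """Real roots of lam^2 - trace*lam + det = 0, largest first."""
    trace = m[0][0] + m[1][1]
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    disc = trace * trace - 4.0 * det
    if disc < 0:
        raise ValueError("complex eigenvalues are not handled in this sketch")
    root = math.sqrt(disc)
    return (trace + root) / 2.0, (trace - root) / 2.0

def matvec(m, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(a * x for a, x in zip(row, v)) for row in m]

lam1, lam2 = eigenvalues_2x2(A)
u1, u2 = [3.0, 2.0], [-1.0, 1.0]

print(lam1, lam2)        # 4.0 -1.0
print(matvec(A, u1))     # [12.0, 8.0], i.e. 4 * u1
print(matvec(A, u2))     # [1.0, -1.0], i.e. -1 * u2
```

The quadratic formula only works for 2 × 2 matrices; larger matrices need an iterative numerical method.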

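For most applications we normalize the eigenvectors (i.e., transform them such that their length is equal to one):

$$\mathbf{u}^{\mathsf T}\mathbf{u} = 1 . \tag{8}$$

For the previous example we obtain:

$$\mathbf{u}_1 = \begin{bmatrix} .8321 \\ .5547 \end{bmatrix} . \tag{9}$$

We can check that:

$$\begin{bmatrix} 2 & 3 \\ 2 & 1 \end{bmatrix} \begin{bmatrix} .8321 \\ .5547 \end{bmatrix} = 4 \begin{bmatrix} .8321 \\ .5547 \end{bmatrix} = \begin{bmatrix} 3.3282 \\ 2.2188 \end{bmatrix} \tag{10}$$

and

$$\begin{bmatrix} 2 & 3 \\ 2 & 1 \end{bmatrix} \begin{bmatrix} -.7071 \\ .7071 \end{bmatrix} = -1 \begin{bmatrix} -.7071 \\ .7071 \end{bmatrix} = \begin{bmatrix} .7071 \\ -.7071 \end{bmatrix} . \tag{11}$$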
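Normalization amounts to dividing a vector by its Euclidean length. A small sketch, assuming plain Python lists for vectors (the function name `normalize` is hypothetical):

```python
import math

def normalize(v):
    """Rescale v to unit length, so that v^T v = 1."""
    length = math.sqrt(sum(x * x for x in v))
    if length == 0:
        raise ValueError("the zero vector cannot be normalized")
    return [x / length for x in v]

u1 = normalize([3.0, 2.0])    # length of (3, 2) is sqrt(13)
u2 = normalize([-1.0, 1.0])   # length of (-1, 1) is sqrt(2)

print([round(x, 4) for x in u1])   # [0.8321, 0.5547]
print([round(x, 4) for x in u2])   # [-0.7071, 0.7071]
print(sum(x * x for x in u1))      # 1 up to rounding error
```

Because an eigenvector is only defined up to a scale factor, normalizing does not change the associated eigenvalue.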

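Traditionally, we put together the set of eigenvectors of A in a matrix denoted U. Each column of U is an eigenvector of A. The eigenvalues are stored in a diagonal matrix (denoted Λ), where the diagonal elements give the eigenvalues (and all the other values are zeros). We can rewrite the first equation as:

$$\mathbf{A}\mathbf{U} = \mathbf{U}\boldsymbol{\Lambda} ; \tag{12}$$

or also as:

$$\mathbf{A} = \mathbf{U}\boldsymbol{\Lambda}\mathbf{U}^{-1} . \tag{13}$$

For the previous example we obtain:

$$\mathbf{A} = \mathbf{U}\boldsymbol{\Lambda}\mathbf{U}^{-1} = \begin{bmatrix} 3 & -1 \\ 2 & 1 \end{bmatrix} \begin{bmatrix} 4 & 0 \\ 0 & -1 \end{bmatrix} \begin{bmatrix} .2 & .2 \\ -.4 & .6 \end{bmatrix} = \begin{bmatrix} 2 & 3 \\ 2 & 1 \end{bmatrix} . \tag{14}$$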
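The product in Equation 14 can be reproduced exactly with rational arithmetic. This is a sketch under the assumption that matrices are stored as lists of rows; `matmul` and `inverse_2x2` are illustrative helpers written for this example.

```python
from fractions import Fraction

def matmul(a, b):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def inverse_2x2(m):
    """Inverse of a 2x2 matrix via the adjugate formula."""
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    if det == 0:
        raise ValueError("matrix is singular")
    return [[ m[1][1] / det, -m[0][1] / det],
            [-m[1][0] / det,  m[0][0] / det]]

# Eigenvectors as columns of U, eigenvalues on the diagonal of Lam.
U = [[Fraction(3), Fraction(-1)],
     [Fraction(2), Fraction(1)]]
Lam = [[Fraction(4), Fraction(0)],
       [Fraction(0), Fraction(-1)]]

U_inv = inverse_2x2(U)
A_rebuilt = matmul(matmul(U, Lam), U_inv)

print([[str(x) for x in row] for row in U_inv])      # [['1/5', '1/5'], ['-2/5', '3/5']]
print([[int(x) for x in row] for row in A_rebuilt])  # [[2, 3], [2, 1]]
```

Using `Fraction` avoids floating-point rounding, so the rebuilt matrix equals A exactly rather than approximately.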

It is important to note that not all matrices have an eigen-decomposition. For example, the matrix

$$\begin{bmatrix} 0 & 1 \\ 0 & 0 \end{bmatrix}$$

does not have one: its only eigenvalue is 0, and all of its eigenvectors are proportional to a single vector, so they cannot form an invertible matrix U. Even when a matrix has eigenvalues and eigenvectors, finding them requires a large number of computations and is therefore better performed by computers.
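To give an idea of the kind of repetitive computation involved, here is a minimal sketch of power iteration, one simple numerical method (chosen here for illustration; the text does not prescribe an algorithm). It approximates only the eigenvalue of largest absolute value and its eigenvector, and it assumes that this dominant eigenvalue is real and unique. The names `power_iteration` and `matvec` are hypothetical.

```python
import math

def matvec(m, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(a * x for a, x in zip(row, v)) for row in m]

def power_iteration(m, iterations=50):
    """Approximate the dominant eigenpair of m by repeated multiplication."""
    v = [1.0] * len(m)                       # arbitrary non-zero start vector
    for _ in range(iterations):
        w = matvec(m, v)
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]            # renormalize to keep the length at one
    w = matvec(m, v)
    lam = sum(a * b for a, b in zip(v, w))   # v^T m v, with v of unit length
    return lam, v

A = [[2.0, 3.0],
     [2.0, 1.0]]
lam, v = power_iteration(A)

print(round(lam, 6))                 # 4.0
print([round(x, 4) for x in v])      # [0.8321, 0.5547]
```

Production code would normally call a tested linear algebra library rather than a hand-written loop like this one.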

2.1 Digression: An infinity of eigenvectors for one eigenvalue

It is only through a slight abuse of language that we can talk about the eigenvector associated with one given eigenvalue. Strictly speaking, there is an infinity of eigenvectors associated with each eigenvalue of a matrix. Because any non-zero scalar multiple of an eigenvector is still an eigenvector, there is, in fact, an (infinite) family of eigenvectors for each eigenvalue, but they are all proportional to each other. For example,

$$\begin{bmatrix} -1 \\ 1 \end{bmatrix} \tag{15}$$

is an eigenvector of the matrix A:

$$\begin{bmatrix} 2 & 3 \\ 2 & 1 \end{bmatrix} . \tag{16}$$

Therefore:

$$2 \times \begin{bmatrix} -1 \\ 1 \end{bmatrix} = \begin{bmatrix} -2 \\ 2 \end{bmatrix} \tag{17}$$

is also an eigenvector of A:

$$\begin{bmatrix} 2 & 3 \\ 2 & 1 \end{bmatrix} \begin{bmatrix} -2 \\ 2 \end{bmatrix} = \begin{bmatrix} 2 \\ -2 \end{bmatrix} = -1 \times 2 \begin{bmatrix} -1 \\ 1 \end{bmatrix} . \tag{18}$$
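The proportionality argument suggests a test that does not need the eigenvalue: a non-zero vector v is an eigenvector exactly when Av is parallel to v. The sketch below is restricted to two dimensions, where "parallel" means a vanishing 2-D cross product; `is_eigenvector_2d` is a hypothetical helper name.

```python
from fractions import Fraction

A = [[2, 3],
     [2, 1]]

def matvec(m, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(a * x for a, x in zip(row, v)) for row in m]

def is_eigenvector_2d(m, v):
    """True when m*v is parallel to the non-zero 2-D vector v."""
    if v[0] == 0 and v[1] == 0:
        return False                         # the zero vector is excluded by convention
    w = matvec(m, v)
    return w[0] * v[1] - w[1] * v[0] == 0    # 2-D cross product vanishes

u2 = [-1, 1]
scales = [2, -3, Fraction(1, 2), 10]
results = [is_eigenvector_2d(A, [c * x for x in u2]) for c in scales]

print(results)                        # [True, True, True, True]
print(is_eigenvector_2d(A, [1, 0]))   # False
```

Integer and `Fraction` inputs keep the comparison with zero exact; with floating-point inputs a small tolerance would be needed instead.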

3 Positive (semi-)definite matrices

A type of matrix used very often in statistics is called positive semi-definite. The eigen-decomposition of these matrices always exists, and has a particularly convenient form. A matrix is said to be positive semi-definite when it can be obtained as the product of a matrix by its transpose. This implies that a positive semi-definite matrix is always symmetric. So, formally, the matrix A is positive semi-definite if it can be obtained as:

$$\mathbf{A} = \mathbf{X}\mathbf{X}^{\mathsf T} \tag{19}$$

for a certain matrix X (containing real numbers). Positive semi-definite matrices of special relevance for multivariate analysis include correlation, covariance, and cross-product matrices.

The important properties of a positive semi-definite matrix are that its eigenvalues are always positive or null, and that its eigenvectors are pairwise orthogonal when their eigenvalues are different. The eigenvectors are also composed of real values (these last two properties are a consequence of the symmetry of the matrix; for proofs see, e.g., Strang, 2003; or Abdi & Valentin, 2006). Because eigenvectors corresponding to different eigenvalues are orthogonal, it is possible to store all the eigenvectors in an orthogonal matrix (recall that a matrix is orthogonal when the product of this matrix by its transpose is a diagonal matrix). When the eigenvectors are also normalized, this product is the identity matrix.

This implies the following equality:

$$\mathbf{U}^{-1} = \mathbf{U}^{\mathsf T} . \tag{20}$$

We can, therefore, express the positive semi-definite matrix A as:

$$\mathbf{A} = \mathbf{U}\boldsymbol{\Lambda}\mathbf{U}^{\mathsf T} \tag{21}$$

where the columns of U are the normalized eigenvectors, so that UᵀU = I; if they are not normalized then UᵀU is a diagonal matrix.

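For example, the matrix:

$$\mathbf{A} = \begin{bmatrix} 3 & 1 \\ 1 & 3 \end{bmatrix} \tag{22}$$

can be decomposed as:

$$\mathbf{A} = \mathbf{U}\boldsymbol{\Lambda}\mathbf{U}^{\mathsf T} = \begin{bmatrix} \sqrt{\tfrac{1}{2}} & \sqrt{\tfrac{1}{2}} \\ \sqrt{\tfrac{1}{2}} & -\sqrt{\tfrac{1}{2}} \end{bmatrix} \begin{bmatrix} 4 & 0 \\ 0 & 2 \end{bmatrix} \begin{bmatrix} \sqrt{\tfrac{1}{2}} & \sqrt{\tfrac{1}{2}} \\ \sqrt{\tfrac{1}{2}} & -\sqrt{\tfrac{1}{2}} \end{bmatrix} = \begin{bmatrix} 3 & 1 \\ 1 & 3 \end{bmatrix} , \tag{23}$$

with

$$\begin{bmatrix} \sqrt{\tfrac{1}{2}} & \sqrt{\tfrac{1}{2}} \\ \sqrt{\tfrac{1}{2}} & -\sqrt{\tfrac{1}{2}} \end{bmatrix} \begin{bmatrix} \sqrt{\tfrac{1}{2}} & \sqrt{\tfrac{1}{2}} \\ \sqrt{\tfrac{1}{2}} & -\sqrt{\tfrac{1}{2}} \end{bmatrix} = \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} . \tag{24}$$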

3.1 Diagonalization

When a matrix is positive semi-definite we can rewrite Equation 21 as

$$\mathbf{A} = \mathbf{U}\boldsymbol{\Lambda}\mathbf{U}^{\mathsf T} \iff \boldsymbol{\Lambda} = \mathbf{U}^{\mathsf T}\mathbf{A}\mathbf{U} . \tag{25}$$

This shows that we can transform the matrix A into an equivalent diagonal matrix. As a consequence, the eigen-decomposition of a positive semi-definite matrix is often referred to as its diagonalization.
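Both directions of the diagonalization can be checked on the 2 × 2 example of this section. This is a minimal sketch in plain Python with floating-point numbers, so equality is tested up to a small tolerance; the helpers `matmul`, `transpose`, and `close` are illustrative names.

```python
import math

def matmul(a, b):
    """Product of two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    """Swap rows and columns."""
    return [list(col) for col in zip(*m)]

def close(a, b, tol=1e-12):
    """Element-wise comparison of two matrices up to a tolerance."""
    return all(abs(x - y) <= tol for ra, rb in zip(a, b) for x, y in zip(ra, rb))

s = math.sqrt(0.5)
A = [[3.0, 1.0],
     [1.0, 3.0]]
U = [[s,  s],
     [s, -s]]           # normalized eigenvectors as columns
Lam = [[4.0, 0.0],
       [0.0, 2.0]]      # eigenvalues on the diagonal

UtU = matmul(transpose(U), U)                       # should be the identity
UtAU = matmul(matmul(transpose(U), A), U)           # should be Lam
A_rebuilt = matmul(matmul(U, Lam), transpose(U))    # should be A

print(close(UtU, [[1.0, 0.0], [0.0, 1.0]]))   # True
print(close(UtAU, Lam))                       # True
print(close(A_rebuilt, A))                    # True
```

No matrix inverse is computed here: for normalized eigenvectors of a symmetric matrix the transpose plays that role.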

3.2 Another definition for positive semi-definite matrices

A matrix A is said to be positive semi-definite if we observe the following relationship for any non-zero vector x:

$$\mathbf{x}^{\mathsf T}\mathbf{A}\mathbf{x} \ge 0 \qquad \forall \mathbf{x} . \tag{26}$$

(when the relationship is ≤ 0 we say that the matrix is negative semi-definite).

When all the eigenvalues of a symmetric matrix are positive, we say that the matrix is positive definite. In that case, Equation 26 becomes:

$$\mathbf{x}^{\mathsf T}\mathbf{A}\mathbf{x} > 0 \qquad \forall \mathbf{x} . \tag{27}$$
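The link between this definition and the one given in Equation 19 can be made explicit with a short derivation. It is a sketch of the standard argument, assuming A = XXᵀ with X real: the first line shows that such a matrix satisfies Equation 26, and the second line shows why its eigenvalues cannot be negative (u denotes any eigenvector of A, which is non-zero by definition).

```latex
\begin{aligned}
\mathbf{x}^{\mathsf T}\mathbf{A}\mathbf{x}
  &= \mathbf{x}^{\mathsf T}\mathbf{X}\mathbf{X}^{\mathsf T}\mathbf{x}
   = (\mathbf{X}^{\mathsf T}\mathbf{x})^{\mathsf T}(\mathbf{X}^{\mathsf T}\mathbf{x})
   = \lVert \mathbf{X}^{\mathsf T}\mathbf{x} \rVert^{2} \ge 0 , \\
\mathbf{A}\mathbf{u} = \lambda\mathbf{u}
  \;&\Longrightarrow\;
  \mathbf{u}^{\mathsf T}\mathbf{A}\mathbf{u} = \lambda\,\mathbf{u}^{\mathsf T}\mathbf{u}
  \;\Longrightarrow\;
  \lambda = \frac{\mathbf{u}^{\mathsf T}\mathbf{A}\mathbf{u}}{\mathbf{u}^{\mathsf T}\mathbf{u}} \ge 0 .
\end{aligned}
```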

4 Trace, Determinant, etc.

The eigenvalues of a matrix are closely related to three important numbers associated with a square matrix, namely its trace, its determinant, and its rank.

4.1 Trace

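The trace of a matrix A is denoted trace{A} and is equal to the sum of its diagonal elements. For example, with the matrix:

$$\mathbf{A} = \begin{bmatrix} 1 & 2 & 3 \\ 4 & 5 & 6 \\ 7 & 8 & 9 \end{bmatrix} \tag{28}$$

we obtain:

$$\operatorname{trace}\{\mathbf{A}\} = 1 + 5 + 9 = 15 . \tag{29}$$

The trace of a matrix is also equal to the sum of its eigenvalues:

$$\operatorname{trace}\{\mathbf{A}\} = \sum_{\ell} \lambda_{\ell} = \operatorname{trace}\{\boldsymbol{\Lambda}\} \tag{30}$$

with Λ being the matrix of the eigenvalues of A. For the previous example, we have:

$$\boldsymbol{\Lambda} = \operatorname{diag}\{16.1168, -1.1168, 0\} . \tag{31}$$

We can verify that:

$$\operatorname{trace}\{\mathbf{A}\} = \sum_{\ell} \lambda_{\ell} = 16.1168 + (-1.1168) = 15 \tag{32}$$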
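The eigenvalues quoted above can be checked without a numerical library. For this particular matrix, det(A − λI) = −λ(λ² − 15λ − 18), so one eigenvalue is 0 and the other two come from the quadratic formula. The sketch below relies on that factorization (it is specific to this example); `trace`, `det3`, and `char_poly` are illustrative helper names.

```python
import math

A = [[1, 2, 3],
     [4, 5, 6],
     [7, 8, 9]]

def trace(m):
    """Sum of the diagonal elements."""
    return sum(m[i][i] for i in range(len(m)))

def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def char_poly(m, lam):
    """Value of det(m - lam*I) for a 3x3 matrix."""
    shifted = [[m[i][j] - (lam if i == j else 0.0) for j in range(3)]
               for i in range(3)]
    return det3(shifted)

# Roots of lam^2 - 15*lam - 18 = 0, plus the root lam = 0.
root = math.sqrt(15 ** 2 + 4 * 18)
eigenvalues = [(15 + root) / 2, (15 - root) / 2, 0.0]

print(trace(A))                              # 15
print([round(x, 4) for x in eigenvalues])    # [16.1168, -1.1168, 0.0]
print(round(sum(eigenvalues), 10))           # 15.0
```

Each value in `eigenvalues` makes `char_poly(A, lam)` vanish up to rounding error, which is what being an eigenvalue means.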

4.2 Determinant and rank

Another classic quantity associated with a square matrix is its determinant. This concept of determinant, which was originally defined as a combinatoric notion, plays an important role in computing the inverse of a matrix and in finding the solution of systems of linear equations (the term determinant is used because this quantity determines the existence of a solution in systems of linear equations). The determinant of a matrix is also equal to the product of its eigenvalues. Formally, if |A| is the determinant of A, we have:

$$|\mathbf{A}| = \prod_{\ell} \lambda_{\ell} \quad \text{with } \lambda_{\ell} \text{ being the } \ell\text{-th eigenvalue of } \mathbf{A} . \tag{33}$$

For example, the determinant of matrix A (from the previous section) is equal to:

$$|\mathbf{A}| = 16.1168 \times -1.1168 \times 0 = 0 . \tag{34}$$

Finally, the rank of a matrix can be defined as being the number of non-zero eigenvalues of the matrix (this definition holds for matrices that have an eigen-decomposition). For our example:

$$\operatorname{rank}\{\mathbf{A}\} = 2 . \tag{35}$$

For a positive semi-definite matrix, the rank corresponds to the dimensionality of the Euclidean space which can be used to represent the matrix. A matrix whose rank is equal to its dimensions is called a full rank matrix. When the rank of a matrix is smaller than its dimensions, the matrix is called rank-deficient, singular, or multicollinear. Only full rank matrices have an inverse.
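The determinant and rank of the 3 × 3 example can be cross-checked independently of the eigenvalues. In this sketch the determinant comes from a cofactor expansion and the rank from Gaussian elimination on exact fractions (a substitute technique used here for verification, not the eigenvalue-based definition given above); `det3` and `rank` are hypothetical helper names, and the eigenvalues are the rounded values from Equation 31.

```python
from fractions import Fraction

A = [[1, 2, 3],
     [4, 5, 6],
     [7, 8, 9]]

def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def rank(m):
    """Number of pivots found by Gaussian elimination on exact fractions."""
    rows = [[Fraction(x) for x in row] for row in m]
    n_rows, n_cols = len(rows), len(rows[0])
    r = 0
    for col in range(n_cols):
        pivot = next((i for i in range(r, n_rows) if rows[i][col] != 0), None)
        if pivot is None:
            continue                          # no pivot in this column
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, n_rows):
            factor = rows[i][col] / rows[r][col]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
        if r == n_rows:
            break
    return r

eigenvalues = [16.1168, -1.1168, 0.0]         # rounded values from Equation 31
product = eigenvalues[0] * eigenvalues[1] * eigenvalues[2]
nonzero = sum(1 for lam in eigenvalues if lam != 0)

print(det3(A))      # 0
print(rank(A))      # 2
print(nonzero)      # 2
```

A zero determinant and a rank smaller than 3 both say the same thing: this matrix is singular and has no inverse.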

5 Statistical properties of the eigen-decomposition

The eigen-decomposition is important because it is involved in problems of optimization. For example, in principal component analysis, we want to analyze an I × J matrix X where the rows are observations and the columns are variables describing these observations. The goal of the analysis is to find row factor scores, such that these factor scores explain as much of the variance of X as possible, and such that the sets of factor scores are pairwise orthogonal. This amounts to defining the factor score matrix as

$$\mathbf{F} = \mathbf{X}\mathbf{P} , \tag{36}$$

under the constraints that

$$\mathbf{F}^{\mathsf T}\mathbf{F} = \mathbf{P}^{\mathsf T}\mathbf{X}^{\mathsf T}\mathbf{X}\mathbf{P} \tag{37}$$

is a diagonal matrix (i.e., F is an orthogonal matrix) and that

$$\mathbf{P}^{\mathsf T}\mathbf{P} = \mathbf{I} \tag{38}$$

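(i.e., P is an orthonormal matrix). There are several ways of obtaining the solution of this problem. One possible approach is to use the technique of the Lagrangian multipliers where the constraint from Equation 38 is expressed as the multiplication with a diagonal matrix of Lagrangian multipliers denoted Λ in order to give the following expression

$$\boldsymbol{\Lambda}\left(\mathbf{P}^{\mathsf T}\mathbf{P} - \mathbf{I}\right) \tag{39}$$

(see Harris, 2001; and Abdi & Valentin, 2006; for details). This amounts to defining the following equation

$$\mathcal{L} = \mathbf{F}^{\mathsf T}\mathbf{F} - \boldsymbol{\Lambda}\left(\mathbf{P}^{\mathsf T}\mathbf{P} - \mathbf{I}\right) = \mathbf{P}^{\mathsf T}\mathbf{X}^{\mathsf T}\mathbf{X}\mathbf{P} - \boldsymbol{\Lambda}\left(\mathbf{P}^{\mathsf T}\mathbf{P} - \mathbf{I}\right) . \tag{40}$$

In order to find the values of P which give the maximum values of L, we first compute the derivative of L relative to P:

$$\frac{\partial \mathcal{L}}{\partial \mathbf{P}} = 2\mathbf{X}^{\mathsf T}\mathbf{X}\mathbf{P} - 2\mathbf{P}\boldsymbol{\Lambda} , \tag{41}$$

and then set this derivative to zero:

$$\mathbf{X}^{\mathsf T}\mathbf{X}\mathbf{P} - \mathbf{P}\boldsymbol{\Lambda} = \mathbf{0} \iff \mathbf{X}^{\mathsf T}\mathbf{X}\mathbf{P} = \mathbf{P}\boldsymbol{\Lambda} . \tag{42}$$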

Because Λ is diagonal, this is clearly an eigen-decomposition problem, and this indicates that Λ is the matrix of eigenvalues of the positive semi-definite matrix XᵀX ordered from the largest to the smallest and that P is the matrix of eigenvectors of XᵀX associated to Λ. Finally, we find that the factor matrix has the form

$$\mathbf{F} = \mathbf{P}\boldsymbol{\Lambda}^{\frac{1}{2}} . \tag{43}$$

The variance of the factor scores is equal to the eigenvalues:

$$\mathbf{F}^{\mathsf T}\mathbf{F} = \boldsymbol{\Lambda}^{\frac{1}{2}}\mathbf{P}^{\mathsf T}\mathbf{P}\boldsymbol{\Lambda}^{\frac{1}{2}} = \boldsymbol{\Lambda} . \tag{44}$$

Taking into account that the sum of the eigenvalues is equal to the trace of XᵀX, this shows that the first factor scores extract as much of the variance of the original data as possible, that the second factor scores extract as much as possible of the variance left unexplained by the first factor, and so on for the remaining factors. Incidentally, the diagonal elements of the matrix $\boldsymbol{\Lambda}^{\frac{1}{2}}$, which are the standard deviations of the factor scores, are called the singular values of matrix X (see entry on singular value decomposition).
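The whole procedure can be traced on a toy data set. The sketch below makes several assumptions that are not in the text: the 4 × 2 matrix `X` is made-up illustrative data with centered columns, the factor scores are computed as F = XP (Equation 36), and the closed-form solver `eigh_2x2` only handles a symmetric 2 × 2 matrix with a non-zero off-diagonal entry. All helper names are hypothetical.

```python
import math

def transpose(m):
    """Swap rows and columns."""
    return [list(col) for col in zip(*m)]

def matmul(a, b):
    """Product of two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def eigh_2x2(s):
    """Eigenvalues (largest first) and unit eigenvectors (as columns) of a
    symmetric 2x2 matrix whose off-diagonal entry is non-zero."""
    a, b, d = s[0][0], s[0][1], s[1][1]
    if b == 0:
        raise ValueError("matrix is already diagonal; not handled in this sketch")
    root = math.sqrt((a - d) ** 2 + 4.0 * b * b)
    lams = [(a + d + root) / 2.0, (a + d - root) / 2.0]
    vectors = []
    for lam in lams:
        v = [b, lam - a]                      # solves (a - lam) * v0 + b * v1 = 0
        norm = math.hypot(v[0], v[1])
        vectors.append([v[0] / norm, v[1] / norm])
    return lams, transpose(vectors)           # eigenvectors stored as columns

# Made-up data: 4 observations (rows), 2 variables (columns), columns centered.
X = [[ 2.0,  1.0],
     [ 0.0,  1.0],
     [-1.0, -1.0],
     [-1.0, -1.0]]

S = matmul(transpose(X), X)        # cross-product matrix X^T X = [[6, 4], [4, 4]]
lams, P = eigh_2x2(S)
F = matmul(X, P)                   # factor scores, Equation 36
FtF = matmul(transpose(F), F)      # should be diag(lams)

print([round(x, 4) for x in lams])                    # [9.1231, 0.8769]
print([[round(x, 4) for x in row] for row in FtF])
print(round(sum(lams), 10))                           # 10.0, the trace of X^T X
```

In this example the first factor carries about 91% of the total (9.1231 out of 10), which illustrates how the eigenvalues split the trace of XᵀX among the factors.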

References

[1] Abdi, H. (2003). Multivariate analysis. In M. Lewis-Beck, A. Bryman, & T. Futing (Eds.), Encyclopedia for research methods for the social sciences. Thousand Oaks: Sage.

[2] Abdi, H., & Valentin, D. (2006). Mathématiques pour les sciences cognitives (Mathematics for cognitive sciences). Grenoble: PUG.

[3] Harris, R.J. (2001). A primer of multivariate statistics. Mahwah (NJ): Erlbaum.

[4] Strang, G. (2003). Introduction to linear algebra. Cambridge (MA): Wellesley-Cambridge Press.
