International Journal of Engineering Research & Science (IJOER)

[Vol-1, Issue-4, July- 2015]

A Key Approach To Maintain Security Through Log Management For Data Sharing In Cloud

Shriram Vaidya1, Aruna Gupta2

1 Department of Computer Engineering, Savitribai Phule Pune University, Pune, India

2 Department of Computer Engineering, Savitribai Phule Pune University, Pune, India

Abstract: Cloud computing makes highly scalable services readily available over the Internet. In the cloud, users' data are usually processed remotely on unknown machines that the users do not own. While enjoying the convenience of this emerging technology, users worry about losing control of their own data, particularly in the financial and health sectors. This concern is becoming a significant barrier to the wide use of cloud services. To address it, we propose a novel, highly decentralized information accountability framework that keeps track of the actual usage of users' data in the cloud. It takes an object-centered approach that encloses our logging mechanism together with the users' data and policies. We leverage the programmable capabilities of JAR files both to create a dynamic, traveling object and to ensure that any access to users' data triggers authentication and automated logging local to the JARs. In addition, the framework provides distributed auditing mechanisms and sends notification of data access via SMS (Short Message Service) to the data owner's mobile phone.

Keywords: cloud computing, accountability, data sharing, auditing, log management.

I. INTRODUCTION

Cloud computing delivers services to users over the Internet. It is a technology that uses the Internet and remote servers to store data and applications and provides on-demand services [2]. It also supplements the current consumption and delivery of Internet-based services. While enjoying the convenience brought by this new technology, cloud users worry about losing control of their own data. Data processed in the cloud are generally outsourced, which leads to a number of accountability issues, including the handling of personally identifiable information. These issues are becoming barriers to the wide adoption of cloud services [3] [4], and the proposed work aims to address them. In the cloud, a Cloud Service Provider may delegate work to other entities; hence, data sharing goes through a complex and dynamic service chain.

To solve this problem, the concept of CIA (Cloud Information Accountability) is proposed. It is based on information accountability, which focuses on keeping data usage transparent and traceable [2] [3]. CIA provides end-to-end accountability. It maintains lightweight yet powerful accountability by combining aspects of access control, usage control and authentication through JAR (Java Archive) files, whose programmable capability is used in the proposed work. CIA provides two distinct auditing modes: push mode and pull mode. In push mode, logs are periodically sent to the data owner or stakeholder; in pull mode, the user (or another authorized party) retrieves the logs as needed. JAR files automatically log the usage of the users' data by any entity in the cloud, which keeps the log reliable and suits a highly decentralized infrastructure. The data owner receives the log files and can use them to detect who is using the data. This ensures distributed accountability for shared data in the cloud.

a. Accountability

Accountability is a fundamental concept in the cloud that helps build trust in cloud computing. The term refers to a contracted and precise requirement that is met through reporting and reviewing mechanisms. Accountability is the agreement to act as an authority that protects personal information from others [2]. It serves security by protecting against the use of information beyond legal boundaries and by holding the responsible party accountable for any misuse of that information. Here, the users' data, along with any policies they want to enforce, such as access control policies and logging policies, are encapsulated in a JAR, and the user sends that JAR to the cloud service providers [1][3]. Any access to the data triggers an automated and authenticated logging mechanism local to the JARs; we call this strong binding. Moreover, the JARs send error correction information, which makes it possible to monitor the loss of any logs from any of the JARs. To evaluate performance, the proposed work focuses on image, PDF and SWF files, which are common content types for end users. In the proposed work, we send log files to the user's mobile phone as notification of who is accessing the data. The architecture is platform independent and highly decentralized, and it does not require any dedicated authentication or storage system to be in place.
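The idea of binding data, policy and log into one traveling object can be illustrated with a minimal Python sketch. This is not the JAR-based implementation described in the paper: the class name `LoggedContainer`, the policy format (a mapping from entity ID to permitted actions) and the log fields are all assumptions made for illustration, and no encryption or authentication is performed.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class LoggedContainer:
    """Hypothetical stand-in for the JAR: data, policy and log travel together."""
    data: bytes
    allowed_actions: dict          # assumed policy format: entity id -> set of actions
    log: list = field(default_factory=list)

    def access(self, entity_id: str, action: str):
        """Return the data if the policy allows it; log the attempt either way."""
        granted = action in self.allowed_actions.get(entity_id, set())
        self.log.append({
            "entity": entity_id,
            "action": action,
            "granted": granted,
            "time": datetime.now(timezone.utc).isoformat(),
        })
        return self.data if granted else None


container = LoggedContainer(b"report", {"csp-1": {"view"}})
container.access("csp-1", "view")       # permitted, returns the data
container.access("csp-2", "download")   # denied, returns None
print(len(container.log))               # both attempts are recorded
```

Because the only path to the data goes through `access`, every attempt leaves a log entry, which is the property the text calls strong binding.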

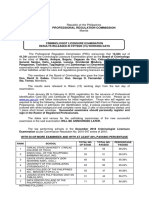

FIG.1. CLOUD DATA STORAGE ARCHITECTURE THAT IS USED TO SHARE DATA ON CLOUD

II. RELATED WORK

Cloud computing has raised a number of privacy and security issues [4]. Such issues arise because cloud service providers outsource data to third parties. Because the cloud stores large amounts of users' data, well-established security is very important. Data owners are not aware of where their data are stored and have no control over where the data are placed. Pearson et al. proposed accountability to address the privacy concerns of end users [4] and then developed a privacy manager [5] [9]. Attribute-based encryption (ABE): Sahai and Waters proposed the notion of ABE, which was introduced as a new method for fuzzy identity-based encryption [5]. The first drawback of the scheme is that its threshold semantics lack expressibility, and several later efforts in the literature try to solve this problem. In an ABE scheme, ciphertexts are not encrypted to one particular user as in traditional public-key cryptography. Rather, both ciphertexts and users' decryption keys are associated with a set of attributes or a policy over attributes. A user can decrypt a ciphertext only if his decryption key matches the ciphertext. ABE schemes are classified into key-policy attribute-based encryption (KP-ABE) [5] and ciphertext-policy attribute-based encryption (CP-ABE), depending on how attributes and policies are associated with ciphertexts and users' decryption keys. Jagadeesan et al. proposed a logic for designing accountability-based distributed systems in "Towards a Theory of Accountability and Audit". That work describes the usage of policies attached to the data and presents a logic for accountability of data in distributed settings. The few existing systems are partial and restricted to a single domain [4][5].
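The threshold semantics mentioned above can be made concrete with a small Python sketch. It models only the access rule (a key matches a ciphertext when the two attribute sets share at least a threshold number of attributes) and performs no cryptography; the function name `can_decrypt` and the example attributes are hypothetical.

```python
def can_decrypt(key_attributes: set, ciphertext_attributes: set, threshold: int) -> bool:
    """Threshold rule: at least `threshold` attributes must be shared."""
    return len(key_attributes & ciphertext_attributes) >= threshold


record_attrs = {"cardiology", "hospital-a", "2015"}

doctor_key = {"doctor", "cardiology", "hospital-a"}
print(can_decrypt(doctor_key, record_attrs, 2))                  # two shared attributes

nurse_key = {"nurse", "hospital-b"}
print(can_decrypt(nurse_key, record_attrs, 2))                   # no shared attribute
```

A rule of this form can only count overlapping attributes. It cannot state a policy such as "cardiology AND (doctor OR auditor)", which is the kind of expressibility that KP-ABE and CP-ABE add.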

Other Techniques

With respect to Java-based security techniques, our methods are related to self-defending objects (SDOs), proposed by J.W. Holford. SDOs are an extension of the object-oriented programming paradigm in which software objects that offer sensitive functions or hold sensitive data are responsible for protecting those functions and data. The key difference between the implementations is that the SDO authors still rely on a centralized database to maintain the access records, while the items being protected are held as separate files. Previous work provided a Java-based approach to prevent privacy leakage from indexing, which could be integrated with the CIA framework proposed in this work since the two build on related architectures. In this paper we do not cover issues related to data storage security, which are a complementary aspect of the privacy issues.

III. IMPLEMENTATION

A technique is needed that can audit data given the widespread use of the cloud. On the basis of accountability, we propose a mechanism that keeps the use of data transparent, meaning that the data owner receives information about how his data are used. This mechanism supports accountability in a distributed environment. The data owner should not have to worry about his data: he can know that the data are handled according to the service level agreement and are safe in the cloud. In the proposed work, we consider three kinds of files: image files, SWF files for video, and PDF documents. The logger component consists of a Java JAR file that stores a user's data items and the corresponding log files.


FIG.2-ARCHITECTURE

The above diagram shows the model architecture, which specifies how data flow from one entity to another. As shown in the figure, the user first creates a public and private key pair based on IBE (Identity-Based Encryption) [3]. Using the generated keys, the user creates the logger component, which is a JAR file, to store the data items. The JAR includes a set of simple access control rules that specify how other entities are authorized to access the contents. The user then sends the JAR file to the Cloud Service Provider (CSP) that he/she subscribes to. OpenSSL-based certificates are used to authenticate the CSP to the JAR, wherein a trusted certificate authority certifies the CSP (steps 3-5). When a user requests access, we employ SAML-based authentication, in which a trusted identity provider issues certificates verifying the user's identity based on his username [7]. Once authentication is complete, the CSP or the user is allowed to access the data enclosed in the JAR. The JAR provides usage control together with logging, depending on the configuration settings defined at the time of JAR creation. Whenever the data are accessed, the JAR automatically generates a log record [13][3]. The log file is encrypted with the public key distributed by the user and is stored along with the data (step 6 in the figure). Encryption protects the log files against unauthorized changes. Some error correction information is sent to the log harmonizer to handle possible log file corruption. Individual records are hashed together to create a chain structure that makes it possible to quickly detect errors or missing records. The data owner and other stakeholders can access the data at the time of auditing with the aid of the log harmonizer.
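The chain structure over log records can be sketched in Python as follows. This is an illustration under stated assumptions rather than the paper's implementation: the hash function (SHA-256), the all-zero starting value `GENESIS` and the function names are choices made here, since the text does not specify them.

```python
import hashlib

GENESIS = b"\x00" * 32  # assumed starting value for the chain


def chain_records(records):
    """Return (record, digest) pairs where each digest also covers the previous digest."""
    chained, prev = [], GENESIS
    for rec in records:
        digest = hashlib.sha256(prev + rec).digest()
        chained.append((rec, digest))
        prev = digest
    return chained


def first_broken_index(chained):
    """Return the index of the first entry that fails verification, or -1 if all pass."""
    prev = GENESIS
    for i, (rec, digest) in enumerate(chained):
        if hashlib.sha256(prev + rec).digest() != digest:
            return i
        prev = digest
    return -1


log = chain_records([b"r1", b"r2", b"r3"])
print(first_broken_index(log))   # intact chain verifies completely
del log[1]                       # simulate a missing record
print(first_broken_index(log))   # verification now fails right after the gap
```

Because each digest depends on the one before it, removing or altering a record invalidates every later entry, so a single pass over the log locates the first point of damage.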

FIG.3-ACCOUNTABILITY MECHANISM


Because the logger does not need to be installed on any system and does not require any special support from the server, it is not very intrusive in its actions. The logger is also responsible for generating the error correction information for each log record and sending it to the log harmonizer. The error correction information, combined with the encryption and authentication mechanisms, provides a robust and reliable recovery mechanism, thereby meeting the requirement.

The logger component consists of a Java JAR file that stores a user's data items and the corresponding log files. Log records are automatically generated by the logger component. Logging occurs on every access to the data in the JAR, and new log entries are appended sequentially. Each record ri is encrypted individually and appended to the log file. Here, ri indicates that an entity identified by ID has performed an action Act on the user's data at a particular time. For each generated log record, a notification is sent as a short message service (SMS) message to the data owner's mobile phone. The SMS contains the IP address of the user who accessed the data, the access type, and the date and time.
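As a rough illustration, a log record and the corresponding notification text might be modelled in Python as shown here. The field names, the message wording and the example values are assumptions; the text only states which pieces of information the SMS carries, and the sketch does not send anything.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRecord:
    entity_id: str     # who accessed the data (ID in the text)
    ip_address: str
    access_type: str   # the action Act, for example "view" or "download"
    date: str
    time: str


def sms_text(rec: AccessRecord) -> str:
    """Format the fields the text lists for the SMS notification."""
    return (f"Data access from {rec.ip_address}: {rec.access_type} "
            f"on {rec.date} at {rec.time}")


example = AccessRecord("csp-1", "192.0.2.10", "view", "2015-07-01", "10:15:00")
print(sms_text(example))
```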

Algorithm for Push and Pull mode

size: maximum size of the log file, specified by the data owner
time: maximum time allowed to elapse before the log file is dumped
tbeg: timestamp at which the last dump occurred
log: current log file
pull: indicates whether a command from the data owner has been received

 1. Let TS(NTP) be the network time protocol timestamp
 2. pull := 0
 3. rec := {UID, OID, AccessType, Result, Time, Date}
 4. curtime := TS(NTP)
 5. lsize := sizeof(log)  // current size of log
 6. if ((curtime - tbeg) < time) && (lsize < size) && (pull == 0) then
 7.     log := log + ENCRYPT(rec)  // encryption function used to encrypt the record
 8.     PING to CJAR  // send ping to harmonizer to check if it is alive
 9.     if PING-CJAR then
10.         PUSH RS(rec)  // write error correcting bits
11.     else
12.         EXIT(1)  // error if no PING is received
13.     end if
14. end if
15. if ((curtime - tbeg) >= time) || (lsize >= size) || (pull != 0) then
16.     // check if PING is received
17.     if PING-CJAR then
18.         PUSH log  // write the log file to harmonizer
19.         RS(log) := NULL  // reset the error correcting records
20.         tbeg := TS(NTP)  // reset the tbeg variable
21.         pull := 0
22.     else
23.         EXIT(1)  // error if no PING is received
24.     end if
25. end if

In the proposed work, the algorithm above presents the logging and synchronization steps with the harmonizer in push mode. First, the algorithm checks whether the size of the JAR has exceeded a fixed size or the normal time between two consecutive dumps has elapsed. The size and time thresholds for a dump are specified by the data owner at the time of creation of the JAR. It also checks whether the data owner has requested a dump of the log files. If none of these events has occurred, it proceeds to encrypt the record and write the error correction information to the harmonizer. Communication with the harmonizer begins with a simple handshake. If no response is received, the log file records an error. If the JAR is configured to send error notifications, the data owner is then alerted. Once the handshake is completed, communication with the harmonizer proceeds over TCP/IP [3][13]. If the log file has been requested, or the size or time threshold has been exceeded, the JAR simply dumps the log file and resets all the variables to make space for new records. For the access log (pull mode), the algorithm is modified by adding an additional check after step 6. Specifically, the access log (pull mode) checks whether the CSP accessing the log satisfies all the conditions specified in the policies pertaining to it. If the conditions are satisfied, access is granted; otherwise, access is denied. Irrespective of the access control outcome, the attempted access to the data in the JAR file is logged.
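The push and pull logic described above can be sketched in Python as follows. This is a simplified illustration, not the paper's Java implementation. The class names `Logger` and `InMemoryHarmonizer`, the injected clock, the identity "encryption" default and the use of a plain list in place of the RS(...) error correction data are all assumptions. Two deliberate simplifications are also made here: a failed ping raises an exception instead of calling EXIT(1), and when a dump is due the sketch dumps first and then appends the new record, so that no record is skipped.

```python
import time


class InMemoryHarmonizer:
    """Hypothetical harmonizer stub that just stores what it receives."""

    def __init__(self, alive=True):
        self.alive = alive
        self.dumps = []   # log files received via PUSH log
        self.ecc = []     # stand-in for the error correction data

    def ping(self):
        return self.alive

    def push_error_correction(self, record):
        self.ecc.append(record)

    def push_log(self, log):
        self.dumps.append(list(log))
        self.ecc.clear()  # corresponds to RS(log) := NULL


class Logger:
    def __init__(self, max_size, max_age, harmonizer,
                 encrypt=lambda rec: rec, clock=time.time):
        self.max_size = max_size    # "size": byte limit set by the data owner
        self.max_age = max_age      # "time": seconds allowed between dumps
        self.harmonizer = harmonizer
        self.encrypt = encrypt      # identity by default; a real logger would encrypt
        self.clock = clock
        self.log = []
        self.tbeg = clock()         # time of the last dump
        self.pull = False           # set when the owner requests the log

    def request_pull(self):
        self.pull = True

    def record(self, rec: bytes):
        if not self.harmonizer.ping():
            raise ConnectionError("harmonizer did not answer the ping")
        lsize = sum(len(r) for r in self.log)
        dump_due = (self.clock() - self.tbeg >= self.max_age
                    or lsize >= self.max_size
                    or self.pull)
        if dump_due:
            self.harmonizer.push_log(self.log)
            self.log = []
            self.tbeg = self.clock()
            self.pull = False
        encrypted = self.encrypt(rec)
        self.log.append(encrypted)
        self.harmonizer.push_error_correction(encrypted)


harmonizer = InMemoryHarmonizer()
logger = Logger(max_size=10, max_age=60, harmonizer=harmonizer, clock=lambda: 0.0)
for rec in (b"aaaa", b"bbbb", b"cccc", b"dddd"):
    logger.record(rec)
print(harmonizer.dumps)   # one dump, triggered when the fourth record arrived
print(logger.log)         # the fourth record starts the new log file
```

Calling `request_pull()` before the next `record(...)` forces a dump regardless of size or elapsed time, which corresponds to the owner-initiated request in the text. The policy check that pull mode adds after step 6 is not modelled here.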

IV. CONCLUSION AND FUTURE SCOPE

This paper presents an effective mechanism that automatically authenticates users and creates a log record of each data access. The data owner can audit his content in the cloud and obtain confirmation that his data are safe there. The data owner can also learn of modifications made to the data without his knowledge. With this mechanism, data usage is transparent and the data owner need not worry about his data in the cloud. At present, the security covers only image files, which are common content in the cloud; in the future, it can be extended to all types of files shared in the cloud. This would yield a better approach that provides authentication, accountability and auditing features. Encryption algorithms could also be used to strengthen security, but they increase cost and time. Notifications can also be sent to devices the data owner routinely uses, such as mobile phones or iPhones. In the future, we would like to develop a cloud on which we install the JRE and JVM to authenticate the JAR. The proposed work aims to improve the security of stored data and to reduce log record generation time.

V. ACKNOWLEDGMENT

I wish to express my sincere thanks and deep gratitude to Dr. M.G. Jadhav [Principal, JSCOE] and to my guide, Prof. Aruna Gupta, for her guidance, valuable suggestions and constant encouragement in all phases. I am highly indebted to her for her help in resolving the difficulties that arose throughout the work on this paper. I also extend my sincere thanks to the respected Head of the Department, Prof. S. M. Shinde, to Prof. M. D. Ingle [P.G. Coordinator], and to all the staff members for their kind support and encouragement. I thank the coordinators of the organization for providing us with a platform. Last but not least, I wish to thank my friends.

REFERENCES

[1] Yathi Raja Kumar Gottapu and A. Raja Gopal, "Optimal Storage Services in Cloud Computing Ensuring Security," International Journal of Advanced Research in IT and Engineering, ISSN: 2278-6244.

[2] G. Ateniese, R. Burns, R. Curtmola, J. Herring, L. Kissner, Z. Peterson, and D. Song, "Provable Data Possession at Untrusted Stores," Proc. ACM Conf. Computer and Comm. Security, pp. 598-609, 2007.

[3] Smitha Sundareswaran, Anna C. Squicciarini, and Dan Lin, "Ensuring Distributed Accountability for Data Sharing in the Cloud," IEEE Transactions on Dependable and Secure Computing, vol. 9, no. 4, pp. 556-568, 2012.

[4] S. Pearson and A. Charlesworth, "Accountability as a Way Forward for Privacy Protection in the Cloud," Proc. First Int'l Conf. Cloud Computing, 2009.

[5] D. Boneh and M.K. Franklin, "Identity-Based Encryption from the Weil Pairing," Proc. Int'l Cryptology Conf. Advances in Cryptology, pp. 213-229, 2001.

[6] B. Chun and A.C. Bavier, "Decentralized Trust Management and Accountability in Federated Systems," Proc. Ann. Hawaii Int'l Conf. System Sciences (HICSS), 2004.

[7] OASIS Security Services Technical Committee, "Security Assertion Markup Language (SAML) 2.0," http://www.oasis-open.org/committees/tc_home.php?wg_abbrev=security, 2012.

[8] S. Pearson, Y. Shen, and M. Mowbray, "A Privacy Manager for Cloud Computing," Proc. Int'l Conf. Cloud Computing (CloudCom), pp. 90-106, 2009.

[9] S. Pearson and A. Charlesworth, "Accountability as a Way Forward for Privacy Protection in the Cloud," Proc. First Int'l Conf. Cloud Computing, 2009.

[10] Flickr, http://www.flickr.com/, 2012.

[11] S. Sundareswaran, A. Squicciarini, D. Lin, and S. Huang, "Promoting Distributed Accountability in the Cloud," Proc. IEEE Int'l Conf. Cloud Computing, 2011.

[12] Ashwini N S and Tamilarasi T, "Distributed Accountability and Auditing in Cloud," International Journal of Internet Computing (IJIC), ISSN: 2231-6965, vol. 2, iss. 1, 2013.
