
International Journal on Recent and Innovation Trends in Computing and Communication

Volume: 4 Issue: 3

ISSN: 2321-8169

400 - 403

_______________________________________________________________________________________

A Survey of Effective Techniques for Subjective Test Assessment

Ankita Patil
Department of Computer Science Engineering
G.H. Raisoni College of Engineering
Nagpur, India.
Email: ankitapatil999@gmail.com

Prof. Achamma Thomas
Department of Master of Computer Application
G.H. Raisoni College of Engineering
Nagpur, India.
Email: achamma.thomas@raisoni.net

Abstract: Subjective tests are rarely used in online examinations. Objective tests are already widely available online, but subjective tests, which are considered the best way to assess understanding and knowledge, are still needed. This paper presents a survey of effective techniques for subjective test assessment. In such tests the answers are unstructured data that have to be evaluated, and the evaluation is based on the semantic similarity between the model answer and the user answer. Different techniques are compared, and a new approach is proposed to evaluate subjective answers written as text.

Keywords: Subjective test assessment; Online examinations; Semantic Similarity; Evaluation.

__________________________________________________*****_________________________________________________

I. INTRODUCTION

Although assessment is a tough job, computerizing it can help. Examinations are normally of two types: objective, such as multiple choice questions (MCQs), and subjective, such as descriptive answers. Most online examinations held today, such as bank exams, GRE, GMAT and AIEEE, consist of MCQs, in which the answer is selected from the given options. Multiple choice is a form of assessment in which respondents are asked to select the best possible answer (or answers) from a list of choices. A respondent who guesses has a 25 percent chance of answering a four-choice question correctly. Finding the right answer among multiple choices can even be automated using multiple choice question answering systems. The multiple choice format is most frequently used in educational testing, in market research, and in elections, when a person chooses between multiple candidates, parties, or policies. However, multiple choice tests have several disadvantages. First, the types of knowledge they can assess are limited: multiple choice tests are best adapted to testing well-defined or lower-order skills, whereas problem-solving and higher-order reasoning skills are better assessed through short-answer and essay tests.

Another disadvantage of multiple choice tests lies in the examinee's interpretation of the item. Failing to interpret information as the test maker intended can result in an "incorrect" response, even if the student's response is potentially valid. In addition, even if students have some knowledge of a question, they receive no credit for it if they select the wrong answer. Conversely, a student who is incapable of answering a particular question can simply select a random answer and still have a chance of receiving a mark for it. It is common practice for students with no time left to give random answers to all remaining questions in the hope that at least some of them will be right. Formula scoring counters this: the score is reduced by the number of wrong answers divided by the average number of possible answers per question minus one.
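Formula scoring can be sketched as follows, assuming every question has the same number of choices C, so that R right and W wrong answers give the score R - W/(C - 1); omitted questions neither add nor subtract. The function names are illustrative. The second function shows why the correction works: under pure guessing, the expected score is zero.

```python
from fractions import Fraction

def formula_score(right: int, wrong: int, choices: int) -> Fraction:
    """Formula score R - W / (C - 1); omitted questions are neither rewarded nor penalized."""
    if choices < 2:
        raise ValueError("a question needs at least two choices")
    return Fraction(right) - Fraction(wrong, choices - 1)

def expected_guess_score(questions: int, choices: int) -> Fraction:
    """Expected formula score when every question is answered by pure guessing."""
    p_right = Fraction(1, choices)           # e.g. 1/4, the 25 percent chance on a four-choice item
    expected_right = questions * p_right
    expected_wrong = questions * (1 - p_right)
    return expected_right - expected_wrong / (choices - 1)

print(formula_score(right=30, wrong=12, choices=4))   # 26
print(expected_guess_score(questions=20, choices=4))  # 0
```

Exact fractions are used so that the zero expectation is not blurred by floating-point rounding.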

For many teachers, evaluating and scoring answers is a difficult task. Marks are awarded based on the observations, understanding and explanation given in the answer and on the essential terminology specified by the teacher. During major assessments, teachers are overloaded with a large number of answer sheets, which makes assessment difficult, causes stress and strain, and reduces the consistency of grading.

With recent advances in technology, there have been many innovations in natural language processing and information extraction, and these now make it feasible to score specific categories of free-text questions in automated tests. The advantages of computerized test scoring include savings in time and cost and improved consistency of grading. Compared with the objective type, the descriptive pattern is more reliable: students write their own answers, which lets them express their thoughts, respond to the questions in their own words and state their own assumptions. This can develop the capabilities and talents of the student. Computerized evaluation of such subjective text is difficult, but many algorithms can be used to evaluate these answers.

II. LITERATURE SURVEY

The proposed approach is divided into two parts: the first is keyword extraction and the second is semantic similarity of words. This paper surveys the different techniques for keyword extraction and semantic similarity and identifies the methods best suited to the proposed work.

A. Keyword Extraction of words

Keyword extraction is an information retrieval task that automatically identifies the best terms in a given document. These terms can be key phrases, key terms or single keywords; in this approach, single keywords are extracted. Keywords are easy to define, as they are widely used in information retrieval (IR).

IJRITCC | March 2016, Available @ http://www.ijritcc.org

Example: Clustering is the process of grouping the data into classes or clusters.

The keywords of this example are clustering, process, grouping, data, classes and clusters. Keyword extraction also helps characterize the content of a document: the words occurring in the document are analyzed to select the most representative ones. The techniques below are surveyed for extracting keywords from large text documents.
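The keyword list in the example above can be reproduced by the simplest possible baseline: drop stop words and keep the remaining words. This is a sketch for illustration, not one of the surveyed techniques, and the short stop-word list is a hypothetical assumption; practical systems use far larger lists plus the weighting or labeling methods described next.

```python
import re

# Hypothetical minimal stop-word list; real systems use a much larger one.
STOP_WORDS = {"a", "an", "and", "are", "in", "into", "is", "of", "or", "the", "to"}

def extract_keywords(text: str) -> list[str]:
    """Return content words in order of first appearance, lower-cased, without duplicates."""
    tokens = re.findall(r"[a-z]+", text.lower())
    keywords: list[str] = []
    for token in tokens:
        if token not in STOP_WORDS and token not in keywords:
            keywords.append(token)
    return keywords

sentence = "Clustering is the process of grouping the data into classes or clusters."
print(extract_keywords(sentence))
# ['clustering', 'process', 'grouping', 'data', 'classes', 'clusters']
```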

a. Term Frequency-Inverse Document Frequency (TF-IDF)

TF-IDF is a weighting factor used in information retrieval and text mining. It evaluates how important a word is to a document within a large text corpus. Term frequency (TF) is the number of times the word appears in the document, and inverse document frequency (IDF) is a weight that measures the importance of the term across the documents of the corpus: terms that occur in many documents receive a low IDF. The weight is obtained by multiplying TF by IDF, which filters out common terms. It can be calculated as

$$\mathrm{tfidf}(t, d, D) = \mathrm{tf}(t, d) \cdot \mathrm{idf}(t, D) \qquad (1)$$

Menaka S and Radha N [1] classified text using keyword extraction. The keywords are extracted using TF-IDF and WordNet: the TF-IDF algorithm selects candidate words, and WordNet, a lexical database of English, is used to find the similarity among them. In their work, the words with the highest similarity are selected as keywords. Sungjick Lee and Han-joon Kim [2] proposed keyword extraction based on the conventional TF-IDF model, which involves cross-domain filtering and table term frequency (TTF). Ari Aulia Hakim, Alva Erwin, Kho I Eng, Maulahikmah Galinium and Wahyu Muliady [3] used the TF-IDF algorithm to create a classifier for online news articles. Stephen Robertson [4] explains the theoretical arguments underlying IDF.
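Equation (1) can be sketched in a few lines of Python. The variant below is an assumption: raw counts for tf and the unsmoothed idf(t, D) = log(N / n_t), where N is the number of documents and n_t the number of documents containing t; libraries often use smoothed or normalized variants. The three-document corpus is invented for illustration.

```python
import math
from collections import Counter

def tf(term: str, doc: list[str]) -> int:
    """Raw term frequency: how many times `term` occurs in `doc`."""
    return Counter(doc)[term]

def idf(term: str, corpus: list[list[str]]) -> float:
    """Inverse document frequency log(N / n_t); 0.0 for a term that occurs nowhere."""
    n_t = sum(1 for doc in corpus if term in doc)
    return math.log(len(corpus) / n_t) if n_t else 0.0

def tfidf(term: str, doc: list[str], corpus: list[list[str]]) -> float:
    """Equation (1): tfidf(t, d, D) = tf(t, d) * idf(t, D)."""
    return tf(term, doc) * idf(term, corpus)

corpus = [
    "clustering groups the data into clusters".split(),
    "the data is stored in the database".split(),
    "the student answer is compared with the model answer".split(),
]
answer = corpus[2]
for term in sorted(set(answer)):
    print(f"{term:10s} {tfidf(term, answer, corpus):.3f}")
```

Because "the" occurs in every document its weight is zero, while "answer", which occurs twice in the last document and nowhere else, gets the highest weight: this is the filtering of common terms described above.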

b. Conditional Random Fields (CRF)

CRF is a probabilistic framework for labeling and segmenting structured data. The basic idea of a conditional random field is to model the conditional distribution of a label sequence given the observed sequence. Jasmeen Kaur and Vishal Gupta [5] present the CRF model as a suitable and efficient model for keyword extraction. Feng Yu, Hong-wei Xuan and De-quan Zheng [6] use a CRF model to extract key phrases and an SVM to build the classification model; their experimental results show that the method performs better than other machine learning approaches. Chengzhi Zhang, Huilin Wang, Yao Liu, Dan Wu, Yi Liao and Bo Wang [7] proposed and implemented a CRF model to extract keywords.
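Keyword extraction with a CRF treats each word as a position in a sequence and assigns it a label such as KEY or O (other). The sketch below shows only the decoding step of a linear-chain CRF: Viterbi search for the highest-scoring label sequence, which is also the most probable one because the CRF normalizer does not depend on the labels. The features and weights are hypothetical and hand-set; a real CRF, as in [5]-[7], learns its weights from labeled training data, and that training step is omitted here.

```python
# Hypothetical hand-set weights; a trained CRF would learn these from labeled answers.
LABELS = ("O", "KEY")
STOP_WORDS = {"a", "an", "and", "into", "is", "of", "or", "the"}
STATE_WEIGHTS = {
    ("stopword", "O"): 2.0, ("stopword", "KEY"): -2.0,
    ("long_word", "KEY"): 1.0,   # words of 4+ letters lean towards KEY
    ("short_word", "O"): 0.5,
}
TRANSITION_WEIGHTS = {("O", "O"): 0.2, ("O", "KEY"): 0.0, ("KEY", "O"): 0.0, ("KEY", "KEY"): 0.3}

def features(word):
    """Observation features of one token (a tiny, hand-picked feature set)."""
    feats = ["stopword"] if word in STOP_WORDS else []
    feats.append("long_word" if len(word) >= 4 else "short_word")
    return feats

def state_score(word, label):
    return sum(STATE_WEIGHTS.get((f, label), 0.0) for f in features(word))

def viterbi(words):
    """Most probable label sequence under a linear-chain CRF with the weights above."""
    if not words:
        return []
    # best[i][y]: highest score of any labeling of words[0..i] that ends in label y.
    best = [{y: state_score(words[0], y) for y in LABELS}]
    back = [{}]
    for i in range(1, len(words)):
        best.append({})
        back.append({})
        for y in LABELS:
            prev = max(LABELS, key=lambda p: best[i - 1][p] + TRANSITION_WEIGHTS[(p, y)])
            best[i][y] = best[i - 1][prev] + TRANSITION_WEIGHTS[(prev, y)] + state_score(words[i], y)
            back[i][y] = prev
    # Follow the back-pointers from the best final label.
    path = [max(LABELS, key=lambda y: best[-1][y])]
    for i in range(len(words) - 1, 0, -1):
        path.append(back[i][path[-1]])
    return path[::-1]

words = "clustering is the process of grouping the data into classes or clusters".split()
labels = viterbi(words)
print([w for w, y in zip(words, labels) if y == "KEY"])
# ['clustering', 'process', 'grouping', 'data', 'classes', 'clusters']
```

The transition weights let the label of one word influence its neighbors, which is what distinguishes sequence labeling from scoring each word in isolation.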

c. Query-Focused Keyword Extraction

In query-focused keyword extraction, keywords are chosen by their correlation with the query sentences: query-related features are computed to identify the important words. A query of length k is represented by its words w1, w2, ..., wk. The method first processes the query and prunes the sentences, then computes the query-related features, and finally extracts the keywords. Liang Ma, Tingting He, Fang Li, Zhuomin Gui and Jinguang Chen [8] proposed a query-focused multi-document summarization strategy based on keyword extraction. Massih R. Amini and Nicolas Usunier [9] present an approach that expands the query keywords with the terms of their respective clusters; each sentence is characterized by features and compared for similarity with the current sentence. Claudio Carpineto and Giovanni Romano (Fondazione Ugo Bordoni) [10] surveyed automatic query expansion for information retrieval.
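A much-simplified sketch of the pipeline described above: sentences are pruned by how many query words they share, and keywords are then ranked by their frequency in the surviving sentences. The overlap rule, the stop-word list, the function names and the example sentences are all hypothetical; the systems in [8] and [9] use richer query-related features and term clustering.

```python
import re
from collections import Counter

# Hypothetical stop-word list for the sketch.
STOP_WORDS = {"a", "an", "and", "between", "by", "in", "into", "is", "of", "the", "to", "with"}

def tokens(text):
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS]

def query_focused_keywords(sentences, query, top_sentences=2, top_keywords=3):
    """Prune sentences by overlap with the query, then rank the remaining words by frequency."""
    query_words = set(tokens(query))
    overlaps = [(len(query_words & set(tokens(s))), i) for i, s in enumerate(sentences)]
    # Sentence pruning: drop sentences with no query word, keep the best-overlapping ones.
    ranked = sorted((p for p in overlaps if p[0] > 0), key=lambda p: (-p[0], p[1]))
    kept = [i for _, i in ranked[:top_sentences]]
    # Query-related keywords: frequent non-query words of the kept sentences.
    counts = Counter(w for i in kept for w in tokens(sentences[i]) if w not in query_words)
    return [w for w, _ in counts.most_common(top_keywords)]

sentences = [
    "Clustering groups similar points into clusters.",
    "Clustering measures the distance between points.",
    "Classification assigns labels with a trained model.",
]
print(query_focused_keywords(sentences, "clustering"))
```

Only the two sentences that mention the query word contribute keywords, so "points", which occurs in both, ranks first and nothing from the classification sentence is returned.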

B. Semantic Similarity of words

Semantic similarity is a metric defined over a set of documents or terms, where the distance between two terms is based on their meaning. Two terms are semantically similar if their meanings are close or if the concepts or objects they represent share common attributes.

Example 1: Google and Microsoft.

Google and Microsoft are similar because both are software companies. Semantic similarity is often confused with semantic relatedness, which covers any relation between two terms.

Example 2: Apple and selling.

These terms are not semantically similar, because an apple is a fruit and selling is an activity; they are nevertheless related, since apples can be the object of selling. Semantic similarity is harder to model than semantic relatedness [11]. To evaluate the semantic similarity between two terms, the following techniques are discussed, applied to the extracted keywords.

a. Latent Semantic Analysis (LSA)

LSA is based on singular value decomposition (SVD), a mathematical matrix decomposition technique closely related to factor analysis, applied to text corpora. LSA produces word-word, word-passage and passage-passage relations. It represents any set of words, such as a sentence, paragraph or essay, whether taken from the original corpus or new, in a very high-dimensional semantic space. LSA starts from a term-document matrix that describes the occurrences of terms in documents.

Example: X is a matrix whose element (i, j) describes the occurrence of term i in document j.

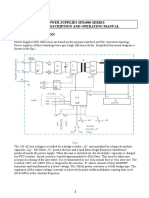

Fig: Example of LSA [23]
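The term-document matrix X and its SVD can be sketched with NumPy. The six terms, four documents and counts are invented for illustration, and keeping k = 2 latent dimensions is an arbitrary choice; documents are then compared by cosine similarity in the reduced space, a common but not the only option.

```python
import numpy as np

# Invented term-document matrix X: element (i, j) is the count of term i in document j.
X = np.array([
    [2, 1, 0, 0],   # data
    [1, 2, 0, 0],   # cluster
    [1, 1, 0, 0],   # group
    [0, 0, 3, 1],   # answer
    [0, 0, 1, 1],   # model
    [0, 0, 1, 2],   # student
], dtype=float)

# Singular value decomposition X = U diag(s) Vt; keep the k largest singular values.
k = 2
U, s, Vt = np.linalg.svd(X, full_matrices=False)
doc_vectors = (np.diag(s[:k]) @ Vt[:k, :]).T   # row j: document j in the k-dim latent space

def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

print(round(cosine(doc_vectors[0], doc_vectors[1]), 3))  # documents 1 and 2 share a topic
print(round(cosine(doc_vectors[0], doc_vectors[2]), 3))  # documents 1 and 3 do not
```

Documents 1 and 2 use the same terms in different proportions yet collapse onto the same latent direction, while documents on the other topic end up orthogonal to them.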

Shaymaa E. Sorour, Kazumasa Goda and Tsunenori Mine [12] identify the hidden meaning of textual information from the occurrence and co-occurrence of words using LSA. Ashwini Deshmukh and Gayatri Hegde [13] present an information retrieval technique called latent semantic indexing. Shuchu Xiong and Yihui Luo [14] use LSA to evaluate subsets of sentences that reproduce the terms of multiple documents, for multi-document summarization. Zongli Jiang and Changdong Lu [15] study the semantic relations between words and documents using LSA: the category attributes of words are obtained from a search engine with the latent semantic method, and useless attributes are removed.

LSA can serve as the algorithm for comparing the model answer and the user answer, since it represents word-word and word-passage relations in matrix form.

b. Ontology Method

Ontologies are data models that describe entities and the interactions between them. An ontology is a set of concepts, objects, relations and other entities, together with the relationships among them. An ontology can be defined in the form

$$O = [C, P, R_C, R_P, A, I] \qquad (2)$$

where $C$ is the set of concepts, $P$ the set of properties, $R_C$ and $R_P$ the relations between concepts and between properties, respectively, $A$ the set of axioms and $I$ the set of instances. V. Senthil Kumaran and A. Sankar [16] proposed an automated system to assess short answers using ontology mapping: the mapping is done between two different ontologies, O1 and O2, by finding the similarity between their concepts. S. Bloehdorn, P. Cimiano, A. Hotho and S. Staab [17] discussed an ontology-based framework for text mining that describes different architectures of ontology mapping.

Badar Sami, Huda Yasin and Mohsin Mohammad Yasin [18] presented the evaluation of unstructured text using ontology: the answers are collected and compared with the model answer. Chin Pang Cheng, Gloria T. Lau, Jiayi Pan and Kincho H. Law [19] proposed domain-specific ontology mapping, in which two vector-based similarity algorithms are used to compare similarity and compute the results.
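One standard way to measure similarity between concepts of an ontology is Wu-Palmer similarity, 2 * depth(lcs) / (depth(a) + depth(b)), where lcs is the deepest concept that subsumes both. It is swapped in here as an illustration; it is not claimed to be the measure used in [16]-[19]. The small is-a hierarchy is hypothetical and echoes the Google, Microsoft and apple examples above.

```python
# Hypothetical is-a hierarchy (child -> parent); "entity" is the root.
PARENT = {
    "organization": "entity",
    "company": "organization",
    "software_company": "company",
    "google": "software_company",
    "microsoft": "software_company",
    "fruit": "entity",
    "apple": "fruit",
}

def path_to_root(concept):
    path = [concept]
    while path[-1] in PARENT:
        path.append(PARENT[path[-1]])
    return path

def depth(concept):
    # The root has depth 1, so the similarity below never divides by zero.
    return len(path_to_root(concept))

def lowest_common_subsumer(a, b):
    ancestors_of_a = set(path_to_root(a))
    for node in path_to_root(b):
        if node in ancestors_of_a:
            return node
    raise ValueError("concepts are not in the same hierarchy")

def wu_palmer(a, b):
    """Wu-Palmer similarity: 2 * depth(lcs) / (depth(a) + depth(b))."""
    lcs = lowest_common_subsumer(a, b)
    return 2 * depth(lcs) / (depth(a) + depth(b))

print(wu_palmer("google", "microsoft"))  # 0.8
print(wu_palmer("google", "apple"))      # 0.25
```

Google and Microsoft meet at "software_company", deep in the hierarchy, so they score high; Google and apple only meet at the root.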

c. Context-Based Method

Context-based methods calculate the similarity between words from their contexts in a large corpus. Yue Wang, Hongsong Li, Haixun Wang and Kenny Q. Zhu [20] present a concept-based web search method in which the framework classifies web queries into different patterns and the concepts they contain; answers to these queries are then produced from a knowledge base. Vesile Evrim and Dennis McLeod [21] proposed a context-based approach that finds relevant information on the web: the information provided in documents is measured semantically, and an information retrieval method examines the context to return relevant results. Eneko Agirre, Enrique Alfonseca, Keith Hall, Jana Kravalova, Marius Pasca and Aitor Soroa [22] describe context-based approaches to calculating word similarity from different aspects.
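The core idea of context-based (distributional) similarity can be sketched as follows: each word is represented by counts of the words appearing within a fixed window around it, and two words are compared by the cosine of these context vectors. The four-sentence corpus and the window of two words are hypothetical; the methods in [20]-[22] work on far larger corpora with more elaborate context models.

```python
import math
from collections import Counter, defaultdict

def context_vectors(sentences, window=2):
    """Map each word to a Counter of the words seen within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for i, w in enumerate(words):
            lo, hi = max(0, i - window), min(len(words), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[w][words[j]] += 1
    return vectors

def cosine(u, v):
    dot = sum(u[k] * v[k] for k in u.keys() & v.keys())
    norm = math.sqrt(sum(x * x for x in u.values())) * math.sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0

corpus = [
    "google develops software products",
    "microsoft develops software products",
    "farmers grow apple trees",
    "farmers sell apple juice",
]
vecs = context_vectors(corpus)
print(round(cosine(vecs["google"], vecs["microsoft"]), 3))  # same contexts
print(round(cosine(vecs["google"], vecs["apple"]), 3))      # no shared context
```

"google" and "microsoft" never co-occur, yet they appear in identical contexts and so come out as similar, which is exactly what a context-based method exploits.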

III. PROPOSED WORK

The subjective assessment of text is divided into two parts. The first part extracts keywords from the model answer and from the student answer, and the second part compares the two answers. The inputs are the answers, which are preprocessed by a keyword extraction algorithm that extracts their keywords. The CRF model is best suited for keyword extraction, since it selects keywords by labeling the word sequence. For the comparison part, semantic similarity of words is used: the keywords of the model answer and of the student answer are compared to measure their semantic similarity. To evaluate the correctness of the answer, a semantic similarity algorithm is used.

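Fig: The flowchart of the proposed work (model answer and student answer → keyword extraction algorithm → extracted keywords → comparison of model answer and student answer using semantic similarity algorithm → output (score)).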
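The two-stage pipeline in the flowchart can be sketched end to end. This is a toy under explicit assumptions: stop-word filtering stands in for the CRF keyword extractor, a hypothetical synonym table stands in for LSA-based semantic similarity, and the score is the fraction of model-answer keywords matched by the student answer, scaled to a hypothetical 10-mark maximum.

```python
import re

# Hypothetical stop-word list and synonym groups, standing in for CRF extraction and LSA.
STOP_WORDS = {"a", "an", "and", "are", "by", "in", "into", "is", "it", "of", "or", "the", "to"}
SYNONYMS = [{"grouping", "partitioning", "dividing"}, {"classes", "groups", "categories"}]

def keywords(text):
    """Stand-in for the keyword extraction step: the set of content words."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS}

def similar(a, b):
    """1.0 for identical or synonymous words, else 0.0 (a crude semantic similarity)."""
    return 1.0 if a == b or any(a in g and b in g for g in SYNONYMS) else 0.0

def score_answer(model_answer, student_answer, max_marks=10):
    model_kw, student_kw = keywords(model_answer), keywords(student_answer)
    if not model_kw:
        return 0.0
    # Each model keyword counts once, using its best match among the student's keywords.
    matched = sum(max((similar(m, s) for s in student_kw), default=0.0) for m in model_kw)
    return round(max_marks * matched / len(model_kw), 2)

model = "Clustering is the process of grouping the data into classes or clusters."
student = "Clustering is partitioning data into groups."
print(score_answer(model, student))  # 6.67
```

Four of the six model keywords are matched (clustering, data, and the synonyms partitioning and groups), giving 10 * 4/6 marks; swapping `similar` for a graded measure such as LSA cosine similarity would award partial credit instead of all-or-nothing matches.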

IV. CONCLUSION

Subjective assessment is used to evaluate a student's understanding of concepts through descriptive answers. This assessment can be automated, and several methods are available to assess online subjective text. With online subjective assessment, the student's perspective can be assessed thoroughly. In this paper, we have discussed different techniques for keyword extraction, namely TF-IDF, the CRF model and the query-focused method, and for semantic similarity, namely LSA, the ontology method and the context-based method. By comparing these techniques, we conclude that the CRF model and LSA are effective methods for keyword extraction and for the assessment of subjective text, respectively.

REFERENCES

[1] Menaka S and Radha N, Text Classification using Keyword Extraction Technique, International Journal of Advanced Research in Computer Science and Software Engineering, Volume 3, Issue 12, December 2013.

[2] Sungjick Lee and Han-joon Kim, News Keyword Extraction for Topic Tracking, Fourth International Conference on Networked Computing and Advanced Information Management, 2008.

[3] Ari Aulia Hakim, Alva Erwin, Kho I Eng, Maulahikmah Galinium and Wahyu Muliady, Automated Document Classification for News Article in Bahasa Indonesia based on Term Frequency Inverse Document Frequency (TF-IDF) Approach, 6th International Conference on Information Technology and Electrical Engineering (ICITEE), Yogyakarta, Indonesia, 2014.

[4] Stephen Robertson, Understanding inverse document frequency: on theoretical arguments for IDF, Journal of Documentation, Vol. 60, No. 5, pp. 503-520, 2004.

[5] Jasmeen Kaur and Vishal Gupta, Effective Approaches For Extraction Of Keywords, IJCSI International Journal of Computer Science Issues, Vol. 7, Issue 6, November 2010.

[6] Feng Yu, Hong-wei Xuan and De-quan Zheng, Key-Phrase Extraction Based on a Combination of CRF Model with Document Structure, Eighth International Conference on Computational Intelligence and Security, 2012.

[7] Chengzhi Zhang, Huilin Wang, Yao Liu, Dan Wu, Yi Liao and Bo Wang, Automatic Keyword Extraction from Documents Using Conditional Random Fields, Journal of Computational Information Systems, March 2008.

[8] Liang Ma, Tingting He, Fang Li, Zhuomin Gui and Jinguang Chen, Query-focused Multi-document Summarization Using Keyword Extraction, International Conference on Computer Science and Software Engineering, 2008.

[9] Massih R. Amini and Nicolas Usunier, A Contextual Query Expansion Approach by Term Clustering for Robust Text Summarization, Proceedings of DUC, 2007.

[10] Claudio Carpineto and Giovanni Romano, Fondazione Ugo Bordoni, A Survey of Automatic Query Expansion in Information Retrieval, ACM Computing Surveys, Vol. 44, No. 1, Article 1, January 2012.

[11] Peipei Li, Haixun Wang, Kenny Q. Zhu, Zhongyuan Wang, Xuegang Hu and Xindong Wu, A Large Probabilistic Semantic Network based Approach to Compute Term Similarity, IEEE Transactions on Knowledge and Data Engineering, 2015.

[12] Shaymaa E. Sorour, Kazumasa Goda and Tsunenori Mine, Correlation of Topic Model and Student Grades Using Comment Data Mining, International Conference on Learning Analytics and Knowledge, 2011.

[13] Ashwini Deshmukh and Gayatri Hegde, A Literature Survey on Latent Semantic Indexing, International Journal of Engineering Inventions, Volume 1, Issue 4, pp. 01-05, September 2012.

[14] Shuchu Xiong and Yihui Luo, A New Approach for Multi-Document Summarization based on Latent Semantic Analysis, Seventh International Symposium on Computational Intelligence and Design, 2014.

[15] Zongli Jiang and Changdong Lu, A Latent Semantic Analysis Based Method of Getting the Category Attribute of Words, International Conference on Electronic Computer Technology, 2009.

[16] V. Senthil Kumaran and A. Sankar, Towards an automated system for short-answer assessment using ontology mapping, International Arab Journal of e-Technology, Vol. 4, No. 1, January 2015.

[17] S. Bloehdorn, P. Cimiano, A. Hotho and S. Staab, An Ontology-based Framework for Text Mining, LDV Forum, kde.cs.uni-kassel.de, 2005.

[18] Badar Sami, Huda Yasin and Mohsin Mohammad Yasin, Automated Score Evaluation of Unstructured Text using Ontology, International Journal of Computer Applications, Vol. 39, No. 18, February 2012.

[19] Chin Pang Cheng, Gloria T. Lau, Jiayi Pan and Kincho H. Law, Domain-Specific Ontology Mapping by Corpus-Based Semantic Similarity, Proceedings NSF CMMI Engineering Research and Innovation Conference, Knoxville, Tennessee, 2008.

[20] Yue Wang, Hongsong Li, Haixun Wang and Kenny Q. Zhu, Concept-Based Web Search, Springer 31st International Conference ER 2012 Proceedings, Vol. 7532, pp. 449-462, Florence, Italy, October 2012.

[21] Vesile Evrim and Dennis McLeod, Context-based information analysis for the Web Environment, International Journal Knowledge and Information Systems, March 2013.

[22] Eneko Agirre, Enrique Alfonseca, Keith Hall, Jana Kravalova, Marius Pasca and Aitor Soroa, A Study on Similarity and Relatedness Using Distributional and WordNet-based Approaches, ACL Conference on Human Language Technologies, pp. 19-27, 2009.

[23] Wikipedia, https://en.wikipedia.org
