
International Journal of Database and Theory and Application

Vol.9, No.2 (2016), pp.183-192

http://dx.doi.org/10.14257/ijdta.2016.9.2.20

ISSN: 1975-0080 IJDTA, Copyright 2016 SERSC

Hyperspectral Image Classification by Fusion of Multiple

Classifiers

Yanbin Peng¹, Zhigang Pan¹, Zhijun Zheng¹, Xiaoyong Li¹

¹School of Information and Electronic Engineering, Zhejiang University of Science and Technology, Hangzhou 310023, China

E-mail: pyb2010@126.com

Abstract

Hyperspectral images typically contain very large amounts of data, which makes the subsequent classification task computationally expensive. First, to reduce the computational complexity, a spectral clustering algorithm is used to select efficient bands for the subsequent classification task. Second, because labeled training samples are scarce, this paper proposes a new algorithm that combines support vector machines and a Bayesian classifier to create a discriminative/generative hyperspectral image classification method using the selected features. Experimental results on a real hyperspectral image show that the proposed method achieves higher classification precision than the compared methods.

Keywords: hyperspectral image, band selection, classification, support vector machine,

Bayesian.

1. Introduction

Hyperspectral sensors record reflectance and emittance information in hundreds of narrowly spaced spectral bands, with wavelengths ranging from the visible spectrum to the infrared region. In a hyperspectral image, each pixel is represented by a feature vector whose entries correspond to the reflectance in the various bands. The resulting three-dimensional image cube contains a large amount of discriminative information for hyperspectral image classification.

Considerable research on hyperspectral image classification using machine learning algorithms has been carried out over the past decade. Melgani and Bruzzone [1] present a theoretical discussion and experimental analysis aimed at understanding and assessing the potential of SVM classifiers in hyper-dimensional feature spaces, and study the potentially critical issue of applying binary SVMs to multi-class problems in hyperspectral data. Chen et al. [2] sparsely represent a test sample in terms of all the training samples in a feature space induced by a kernel function; the recovered sparse representation vector is then used directly to determine the class label of the test pixel. Li et al. [3] construct a new family of generalized composite kernels that exhibit great flexibility when combining the spectral and spatial information contained in hyperspectral data, and then propose a multinomial logistic regression classifier for hyperspectral image classification. Liu et al. [4] present a post-processing algorithm for a kernel sparse representation based hyperspectral image classifier, which integrates spatial and spectral information. Qian et al. [5] propose a hyperspectral feature extraction and pixel classification method based on structured sparse logistic regression and three-dimensional discrete wavelet transform texture features. Chang et al. [6] propose a nearest feature line embedding transformation for the dimension reduction of a hyperspectral image. Three factors, namely class separability, neighborhood structure preservation, and feature line embedding measurement, are considered simultaneously to determine an effective and discriminating transformation in the eigenspaces for land


cover classification. Ye et al. [7] propose a fusion-classification system to alleviate ill-conditioned distributions in hyperspectral image classification. This method combines a windowed 3-D discrete wavelet transform with a feature grouping algorithm to extract and select spectral-spatial features from the hyperspectral image dataset, and then employs a multi-classifier decision-fusion approach for the final classification.

However, there are two major problems in hyperspectral image classification and recognition. On the one hand, the computational cost of classifying pixels is too high when all bands are used. Because spectral bands are highly correlated, a band selection algorithm should be used to remove redundant bands that do not contribute to the classification task. Since the relation between the band data of a hyperspectral image is non-linear, spectral clustering can be used to cluster the bands and select effective ones for classification. In the spectral clustering based band selection algorithm, a neighbor graph and a similarity matrix are generated from the histograms of the band images, and the spectral bands are then divided into k clusters by the spectral clustering algorithm. Finally, k representative bands are selected for the subsequent classification and recognition task. On the other hand, labeling hyperspectral images is costly, which results in a lack of labeled training samples. A new classification algorithm is therefore needed to improve classification accuracy with small sample sets.

Building on our earlier work [8-11], this paper proposes a new framework for hyperspectral image classification. First, spectral clustering is used to select effective bands for the subsequent classification task. Second, a new algorithm is proposed that combines support vector machines and a Bayesian classifier to create a discriminative/generative hyperspectral image classification method using the selected features. Experimental results on a real hyperspectral image show that the proposed method achieves higher classification precision than the compared methods.

2. Band Selection Algorithm

As shown in Figure 1, a hyperspectral image is a three-dimensional array with dimensions I, J, and K. The I and J dimensions correspond to the spatial width and length, and the K dimension corresponds to the spectral dimension. H denotes the whole image array, in which every band $H_k$ is an image matrix. Each band in the image cube can therefore be regarded as a data point with $I \times J$ dimensions. In the spectral clustering based band selection algorithm, highly correlated bands are grouped into a cluster, after which a representative band is selected from each cluster. Finally, the selected bands stand in for all bands in the subsequent classification task.
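The view of each band as a single data point can be made concrete with a small sketch. It assumes the cube is held as a NumPy array of shape (I, J, K); the function name `cube_to_band_points` and the toy sizes are illustrative and do not come from the paper.

```python
import numpy as np

def cube_to_band_points(cube):
    """Turn an (I, J, K) image cube into K data points of dimension I*J."""
    i_dim, j_dim, k_dim = cube.shape
    # Flatten the two spatial axes, then put the band axis first.
    return cube.reshape(i_dim * j_dim, k_dim).T

# Toy cube: 4 x 5 pixels and 3 bands (sizes chosen only for illustration).
cube = np.arange(4 * 5 * 3, dtype=float).reshape(4, 5, 3)
band_points = cube_to_band_points(cube)
print(band_points.shape)
```

Row k of `band_points` holds every pixel of band $H_k$, which is the representation the clustering step operates on.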


Figure 1. Hyperspectral Image Cube H (the figure labels the spatial axis I and a single band image $H_k$)

Spectral clustering starts with the construction of a similarity matrix $W=\{w_{ij}\} \in \mathbb{R}^{K \times K}$, in which the component $w_{ij}$ measures how similar band i is to band j. Several measures can be used to quantify the similarity between band images, such as the Mahalanobis distance, the Euclidean distance, and mutual information based distances. However, these measures are not suitable for direct use in the spectral clustering algorithm, so a KL divergence based measure [12, 13] is used instead. Let $q_i(c)$ and $q_j(c)$ be the probability distributions of the images of spectral bands i and j, respectively. The (symmetric) KL divergence between $q_i(c)$ and $q_j(c)$ is defined as:

$$KL(i,j) = \sum_{c} q_i(c)\log\frac{q_i(c)}{q_j(c)} + \sum_{c} q_j(c)\log\frac{q_j(c)}{q_i(c)}$$

The divergence describes the difference between two bands, so the similarity between $q_i(c)$ and $q_j(c)$ is defined as $w_{ij} = 1 - KL(i,j)/2$. When $i=j$, $KL(i,j)=0$ and $w_{ij}=1$, which means that a band has the largest similarity with itself. Based on this definition, the spectral clustering based band selection algorithm is as follows [14, 15]:

Algorithm 1: Spectral clustering based band selection algorithm

Input: band images $H_1, H_2, \dots, H_K$.

1) Compute the histogram (probability distribution) $q_1(c), q_2(c), \dots, q_K(c)$ of every band.

2) Compute the similarity between bands using the KL divergence formula, obtaining the similarity matrix $W$.

3) Compute the unnormalized Laplacian matrix $L$ of $W$ ($L = D - W$, where $D$ is the diagonal degree matrix of $W$).

4) Compute the first m eigenvectors $z_1, z_2, \dots, z_m$ of $L$ (those with the m smallest eigenvalues).

5) Let $Z \in \mathbb{R}^{n \times m}$ be the matrix containing the vectors $z_1, z_2, \dots, z_m$ as columns, where n is the number of bands.

6) For $i = 1, 2, \dots, n$, let $x_i \in \mathbb{R}^m$ be the vector corresponding to the i-th row of $Z$.

7) Cluster the points $x_1, x_2, \dots, x_n$ with the k-means algorithm into m clusters $B_1, B_2, \dots, B_m$.

8) Randomly select one band from every cluster, obtaining m representative bands.

Output: m representative bands.
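The steps of Algorithm 1 can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the histogram bin count, the small smoothing constant `eps` (added to avoid taking the logarithm of zero), the plain Lloyd-style k-means loop, and all function names are choices made here for the example.

```python
import numpy as np

def band_histograms(cube, bins=16, eps=1e-6):
    """Step 1: one normalized histogram q_k(c) per band of an (I, J, K) cube."""
    lo, hi = float(cube.min()), float(cube.max())
    hists = []
    for k in range(cube.shape[2]):
        counts, _ = np.histogram(cube[:, :, k], bins=bins, range=(lo, hi))
        q = counts + eps              # assumption: smoothing so log(q) is finite
        hists.append(q / q.sum())
    return np.array(hists)

def similarity_matrix(hists):
    """Step 2: w_ij = 1 - KL(i, j) / 2 with the symmetric KL divergence."""
    log_q = np.log(hists)
    self_term = np.sum(hists * log_q, axis=1)   # sum_c q_i(c) log q_i(c)
    cross_term = hists @ log_q.T                # sum_c q_i(c) log q_j(c)
    kl = self_term[:, None] - cross_term        # one-sided KL(q_i || q_j)
    return 1.0 - (kl + kl.T) / 2.0

def select_bands(cube, m, bins=16, n_iter=50, seed=0):
    """Steps 3-8: Laplacian, eigenvectors, k-means, one random band per cluster."""
    rng = np.random.default_rng(seed)
    W = similarity_matrix(band_histograms(cube, bins))
    L = np.diag(W.sum(axis=1)) - W              # step 3: unnormalized Laplacian
    _, vecs = np.linalg.eigh(L)                 # eigenvalues in ascending order
    X = vecs[:, :m]                             # steps 4-6: rows are the x_i
    centers = X[rng.choice(len(X), size=m, replace=False)].copy()
    for _ in range(n_iter):                     # step 7: Lloyd's k-means
        dist = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        for c in range(m):
            if np.any(labels == c):
                centers[c] = X[labels == c].mean(axis=0)
    dist = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    labels = dist.argmin(axis=1)
    picks = [int(rng.choice(np.flatnonzero(labels == c)))
             for c in range(m) if np.any(labels == c)]   # step 8
    return sorted(picks), labels

# Toy data: a random 8 x 8 cube with 12 bands (illustrative only).
rng = np.random.default_rng(1)
cube = rng.random((8, 8, 12))
W = similarity_matrix(band_histograms(cube))
bands, labels = select_bands(cube, m=3)
print(bands)
```

Note that $w_{ij}$ as defined can become negative when the divergence exceeds 2, and that an empty k-means cluster yields fewer than m bands; the sketch leaves both cases as they are rather than guessing how the paper handles them.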


3. Fusion of SVM and Bayesian

3.1. Hyperspectral Image Classifier based on SVM

The SVM, introduced by Vapnik and colleagues in the 1990s, combines linear modeling and instance-based learning [16]. An SVM model selects a small number of support vectors (instances on the class boundary) from every class and builds a linear discriminant function that separates the training set with as wide a margin as possible. When the data are not linearly separable, a kernel function (such as the RBF kernel) is used to project the training instances into a high-dimensional space in which they become linearly separable. With small training sets, SVMs often achieve better prediction precision than other classifiers on classification and regression problems. In this paper, the labeled training sample set is $Samples=\{(O_1,p_1),(O_2,p_2),\dots,(O_l,p_l)\}$, where $O_i \in \mathbb{R}^m$ and $p_i \in \{+1,-1\}$ is the class label: +1 means that the sample point belongs to the class, and -1 means that it does not. The SVM algorithm finds the classification hyperplane that maximizes the classification margin on the training sample set, and then uses this hyperplane to classify new sample points. The algorithm is as follows:

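1) Assume that we have the training sample set $Samples=\{(O_1,p_1),(O_2,p_2),\dots,(O_l,p_l)\}$, where $O_i \in \mathbb{R}^n$, $p_i \in \{+1,-1\}$, $i=1,2,\dots,l$;

2) Select a kernel function $K(O,O')$ and a penalty parameter $C$, then construct and solve the optimization problem

$$\min_{\alpha}\ \frac{1}{2}\sum_{i=1}^{l}\sum_{j=1}^{l} p_i p_j \alpha_i \alpha_j K(O_i,O_j) - \sum_{j=1}^{l}\alpha_j$$

$$\text{s.t.}\quad \sum_{i=1}^{l} p_i \alpha_i = 0,\qquad 0 \le \alpha_i \le C,\quad i=1,\dots,l$$

to obtain the optimal solution $\alpha^* = (\alpha_1^*,\dots,\alpha_l^*)^T$;

3) Select a positive component $\alpha_j^*$ of $\alpha^*$ that is less than $C$, and use it to calculate

$$b^* = p_j - \sum_{i=1}^{l} p_i \alpha_i^* K(O_i,O_j);$$

4) The decision function is

$$f(O) = \mathrm{sgn}\Big\{\sum_{i=1}^{l} \alpha_i^* p_i K(O_i,O) + b^*\Big\}$$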

In the optimal solution $\alpha^*$, the samples corresponding to nonzero components are the support vectors. Only support vectors influence the decision function, so it can be rewritten as follows:

$$f(O) = \mathrm{sgn}\Big\{\sum_{O_i \in SV} \alpha_i^* p_i K(O_i,O) + b^*\Big\} = \mathrm{sgn}\Big\{\sum_{j}\sum_{O_i \in SV_j} \alpha_i^* p_i K(O_i,O) + b^*\Big\}$$

where $SV$ is the set of support vectors; $SV_j$ is the set of support vectors in class j, $j \in \{+1,-1\}$; $O_i$ is a support vector; $p_i$ is the class label of sample $O_i$; $\alpha_i^*$ is the weight of sample $O_i$; $b^*$ is the bias term; and $K(\cdot,\cdot)$ is the kernel function. When the data are not linearly separable, the feature space is mapped into a high-dimensional space through the kernel function, in which the training samples are linearly separable.
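To illustrate how the decision function is evaluated once training is done, the following sketch computes $f(O)$ with an RBF kernel. The support vectors, weights, bias, and kernel parameter below are hypothetical values chosen for the example, not the result of solving the optimization problem, and the function names are assumptions.

```python
import math

def rbf_kernel(x, y, gamma):
    """RBF kernel K(x, y) = exp(-gamma * ||x - y||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y)))

def svm_decision(o, support_vectors, labels, alphas, bias, gamma):
    """Return (sign, score) where score = sum_i alpha_i p_i K(O_i, O) + b."""
    score = bias
    for sv, p, alpha in zip(support_vectors, labels, alphas):
        score += alpha * p * rbf_kernel(sv, o, gamma)
    return (1 if score >= 0 else -1), score

# Hypothetical model with one support vector per class.
support_vectors = [(0.0, 0.0), (2.0, 0.0)]
labels = [+1, -1]
alphas = [1.0, 1.0]

near_positive = svm_decision((0.1, 0.0), support_vectors, labels, alphas, 0.0, 1.0)
near_negative = svm_decision((1.9, 0.0), support_vectors, labels, alphas, 0.0, 1.0)
print(near_positive[0], near_negative[0])
```

Only the support vectors enter the sum, which is why points that are not support vectors have no effect on the prediction.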


The support vector machine is essentially a two-class classifier, whereas our framework faces a multiclass problem. Various algorithms have been proposed for combining multiple two-class SVMs into a multiclass classifier. One commonly used method is to construct M separate SVMs, in which the m-th classification function $f_m(O)$ is trained using the data from class $C_m$ as the positive training samples and the data from the other M-1 classes as the negative training samples. However, this method can assign one pixel to multiple classes simultaneously. This is resolved by predicting the class of a new sample O as the one whose classifier gives the largest output: $f(O) = \arg\max_m f_m(O)$.
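The one-versus-rest rule can be sketched as follows. The per-class scoring functions here are stand-ins built from a single RBF term with a hypothetical center and bias each; in practice each $f_m$ would be the real-valued output of a trained SVM. The class names are borrowed from the data set described later purely as labels.

```python
import math

def one_vs_rest_predict(o, scorers):
    """Pick the class whose scoring function f_m gives the largest output."""
    scores = {label: f(o) for label, f in scorers.items()}
    best = max(scores, key=scores.get)
    return best, scores

def make_scorer(center, gamma=1.0, bias=-0.5):
    """Hypothetical stand-in for a trained two-class SVM output f_m(O)."""
    def score(o):
        d2 = sum((a - b) ** 2 for a, b in zip(o, center))
        return math.exp(-gamma * d2) + bias
    return score

scorers = {
    "water": make_scorer((0.0, 0.0)),
    "vegetation": make_scorer((1.0, 0.0)),
    "shadow": make_scorer((0.0, 1.0)),
}
label, scores = one_vs_rest_predict((0.6, 0.1), scorers)
positive = [name for name, s in scores.items() if s > 0]
print(label, positive)
```

In this toy case two of the three classifiers return a positive score, which is the ambiguity described above; taking the largest output resolves it.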

3.2. Hyperspectral Image Classification based on Bayesian

In machine learning, Bayesian classifiers are a family of generative classifiers based on applying Bayes' theorem with strong independence assumptions between the features. A Bayesian classifier minimizes the classification error rate by choosing the class with the maximum a posteriori (MAP) probability. Bayesian classifiers are highly scalable, requiring a number of parameters that is linear in the number of features of a learning problem. Maximum-likelihood training can be done by evaluating a closed-form expression, which takes linear time, rather than by the expensive iterative approximation used for many other classifiers. The Bayesian classification function for a hyperspectral image is as follows:

$$f(O) = \arg\max_{j \in \{+1,-1\}} \{P(O \mid j)\,P(j)\}$$

$$P(O \mid j) = \sum_{i=1}^{num_j} P(O \mid i,j)\,P(i \mid j) = \sum_{i=1}^{num_j} N(O \mid \mu_{ji}, \Sigma_{ji})\,P(i \mid j)$$

Here $P(j)$ is the prior probability, which we approximate by the proportion of sample points in each class, $P(j) = |sample_j| / |sample|$, where $sample_j$ is the sample set of class j and $sample$ is the whole sample set. $P(O \mid j)$ is the likelihood function, and $f(O)$ is the maximum a posteriori estimate, that is, the most probable class given the current sample value O. The j-th class is modeled as a Gaussian mixture distribution with $num_j$ clusters. $P(i \mid j)$ is the weight of the i-th cluster in the j-th class, and $N(O \mid \mu_{ji}, \Sigma_{ji})$ is the distribution of the i-th cluster, where $\mu_{ji}$ is the mean and $\Sigma_{ji}$ the covariance matrix. The parameters of the Gaussian mixture distribution, $(P(i \mid j), \mu_{ji}, \Sigma_{ji})$, are estimated by Expectation Maximization (EM). In the EM algorithm we add the constraint $\Sigma_{ji} = \sigma^2 I$, where $\sigma^2$ is the variance and $I$ is the identity matrix. When the sample points are not uniformly distributed, $\Sigma_{ji} = \sigma^2 I$ can be ensured through down-sampling and up-sampling. This constraint is added to enable the classifier fusion described in the next section.
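A minimal sketch of this classification rule, assuming the mixture parameters are already known, is given below. The EM fitting step is omitted, and the cluster means, mixture weights, and variance are hypothetical values; the function names are likewise illustrative.

```python
import math

def isotropic_gaussian(o, mean, sigma2):
    """Density N(O | mean, sigma2 * I) for an n-dimensional point."""
    n = len(o)
    d2 = sum((a - b) ** 2 for a, b in zip(o, mean))
    return math.exp(-d2 / (2 * sigma2)) / (2 * math.pi * sigma2) ** (n / 2)

def class_priors(labels):
    """P(j) approximated by the proportion of samples in each class."""
    return {j: labels.count(j) / len(labels) for j in set(labels)}

def bayes_predict(o, priors, mixtures, sigma2):
    """mixtures[j] is a list of (P(i|j), mu_ji); returns (class, scores)."""
    scores = {}
    for j, components in mixtures.items():
        likelihood = sum(w * isotropic_gaussian(o, mu, sigma2)
                         for w, mu in components)
        scores[j] = priors[j] * likelihood
    return max(scores, key=scores.get), scores

# Hypothetical parameters: class +1 has two clusters, class -1 has one.
priors = class_priors([+1, +1, +1, -1])
mixtures = {
    +1: [(0.5, (0.0, 0.0)), (0.5, (1.0, 1.0))],
    -1: [(1.0, (3.0, 3.0))],
}
pred_a, _ = bayes_predict((0.2, 0.1), priors, mixtures, sigma2=0.5)
pred_b, _ = bayes_predict((3.1, 2.9), priors, mixtures, sigma2=0.5)
print(pred_a, pred_b)
```

With the shared isotropic covariance, each cluster contributes a term of the form weight times $\exp(-\lVert O-\mu_{ji}\rVert^2 / 2\sigma^2)$, which has the same shape as an RBF kernel evaluation.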

3.3. Classifier Fusion

As mentioned above, the SVM is a discriminative classifier and the Bayesian classifier is a generative one. A discriminative classifier needs only a small number of training sample points, but lacks robustness to noise and is prone to overfitting. A generative classifier is not sensitive to noise, but needs a large number of training sample points to reach good classification precision. This paper proposes fusing the two kinds of classifier to combine their advantages, yielding a classifier that is not sensitive to noise and needs fewer training sample points.

The decision function of the SVM classifier with an RBF kernel is as follows:


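$$f(O) = \arg\max_{j \in \{+1,-1\}} \Big\{\sum_{O_i \in SV_j} \alpha_i^* p_i K(O_i,O) + b^*\Big\} = \arg\max_{j \in \{+1,-1\}} \Big\{\sum_{O_i \in SV_j} \alpha_i^* p_i \exp(-\gamma \lVert O - O_i \rVert^2)\Big\} \qquad (1)$$

where $SV_j$ is the set of support vectors of the j-th class, $\alpha_i^*$ is the weight of the corresponding support vector, $\gamma$ is the parameter of the kernel function, and the bias $b^*$ is treated as a support vector $O_0$ with weight $\alpha_0^*$.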

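The decision function of the Bayesian classifier is as follows:

$$f(O) = \arg\max_{j \in \{+1,-1\}} \Big\{\sum_{i=1}^{num_j} P(j)\,P(i \mid j)\,\frac{1}{(2\pi\sigma^2)^{n/2}} \exp\Big(-\frac{\lVert O - \mu_{ji} \rVert^2}{2\sigma^2}\Big)\Big\} \qquad (2)$$

Comparing formulas (1) and (2), we find that if we set:

$$\alpha_i^* p_i = P(j)\,P(i \mid j)\,\frac{1}{(2\pi\sigma^2)^{n/2}} \qquad (3)$$

$$\gamma = \frac{1}{2\sigma^2} \qquad (4)$$

$$O_i = \mu_{ji}, \quad \text{for all } O_i \in SV_j \qquad (5)$$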

Then the two formulas take the same form, so the two kinds of classifier can be converted into each other. The mean of a cluster in the Bayesian classifier corresponds to a support vector in the SVM; the variance of the cluster determines the RBF kernel parameter $\gamma$ associated with that support vector; and $P(j)\,P(i \mid j)\,\frac{1}{(2\pi\sigma^2)^{n/2}}$ determines the weight $\alpha$ associated with that support vector. As formula (1) shows, an SVM classifier is defined by its set of support vectors $(O_{S1},O_{S2},\dots,O_{Sn})$, the set of support vector weights $(\alpha_{S1},\alpha_{S2},\dots,\alpha_{Sn})$, and the set of kernel parameters $(\gamma_{S1},\gamma_{S2},\dots,\gamma_{Sn})$, and can therefore be represented as:

$$SVM = \{(O_{S1},O_{S2},\dots,O_{Sn}),\ (\alpha_{S1},\alpha_{S2},\dots,\alpha_{Sn}),\ (\gamma_{S1},\gamma_{S2},\dots,\gamma_{Sn})\}$$

According to the correspondence between the Bayesian and SVM classifiers, the Bayesian classifier can be expressed in the same style:

$$Bayes = \{(O_{B1},O_{B2},\dots,O_{Bm}),\ (\alpha_{B1},\alpha_{B2},\dots,\alpha_{Bm}),\ (\gamma_{B1},\gamma_{B2},\dots,\gamma_{Bm})\}$$

Given a scale factor $\lambda \in [0,1]$, the fused classifier is:

$$SVM/Bayes = \{(O_{B1},\dots,O_{Bm},O_{S1},\dots,O_{Sn}),\ (\lambda\alpha_{B1},\dots,\lambda\alpha_{Bm},(1-\lambda)\alpha_{S1},\dots,(1-\lambda)\alpha_{Sn}),\ (\gamma_{B1},\dots,\gamma_{Bm},\gamma_{S1},\dots,\gamma_{Sn})\}$$

$\lambda=0$ and $\lambda=1$ correspond to the plain SVM and the plain Bayesian classifier, respectively.
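The fusion step can be sketched as below, under several assumptions: each classifier is stored per class as a list of (center, weight, kernel parameter) triples; the SVM weights are taken as the non-negative magnitudes of the support vector weights within each class; the bias is ignored; and `lam` is the name used here for the scale factor. The support vectors and mixture parameters are hypothetical.

```python
import math

def bayes_to_kernel_terms(priors, mixtures, sigma2, n):
    """Convert a Gaussian mixture into SVM-style terms via Eqs. (3)-(5)."""
    norm = (2 * math.pi * sigma2) ** (n / 2)
    gamma = 1.0 / (2 * sigma2)                        # Eq. (4)
    return {j: [(mu, priors[j] * w / norm, gamma)     # Eqs. (5) and (3)
                for w, mu in components]
            for j, components in mixtures.items()}

def fuse(svm_terms, bayes_terms, lam):
    """Scale Bayesian weights by lam and SVM weights by (1 - lam), then merge."""
    fused = {}
    for j in set(svm_terms) | set(bayes_terms):
        fused[j] = ([(c, lam * w, g) for c, w, g in bayes_terms.get(j, [])] +
                    [(c, (1 - lam) * w, g) for c, w, g in svm_terms.get(j, [])])
    return fused

def predict(o, terms):
    """Class with the largest sum of weight * exp(-gamma * ||O - center||^2)."""
    scores = {}
    for j, items in terms.items():
        scores[j] = sum(
            w * math.exp(-g * sum((a - b) ** 2 for a, b in zip(o, c)))
            for c, w, g in items)
    return max(scores, key=scores.get), scores

# Hypothetical SVM (one support vector per class) and Bayesian model.
svm_terms = {+1: [((1.0, 1.0), 0.8, 1.0)], -1: [((2.0, 2.0), 0.8, 1.0)]}
bayes_terms = bayes_to_kernel_terms(
    priors={+1: 0.5, -1: 0.5},
    mixtures={+1: [(1.0, (0.0, 0.0))], -1: [(1.0, (3.0, 3.0))]},
    sigma2=0.5, n=2)

point = (1.2, 1.2)
svm_only = predict(point, fuse(svm_terms, bayes_terms, lam=0.0))
bayes_only = predict(point, fuse(svm_terms, bayes_terms, lam=1.0))
fused = predict(point, fuse(svm_terms, bayes_terms, lam=0.5))
print(svm_only[0], bayes_only[0], fused[0])
```

Setting the scale factor to 0 or 1 in this sketch reproduces the scores of the SVM-only or Bayesian-only model, matching the two limiting cases described above.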

The fused classifier converts middle point of cluster in the Bayesian into support

vector in SVM. In the new set of support vectors (OB1,OB2,,OBm,OS1 ,OS2 ,,OSn),

predicting the class label for new pixels using SVM prediction algorithm. As

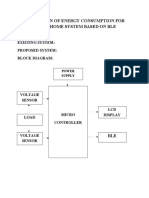

described in figure 2, solid circular points and hollow circular points represent two

classes of samples. HP is separating hyper plane. The points in HP1 and HP2 are

support vectors generated by SVM training algorithm. In the process of predicting,

SVM only consider support vector, which declines the predicting precision when

support vector is noised. Bayesian consider every class as mixture Gaussian

distribution, and calculating mean value and covariance of every Gaussian


distribution using the EM algorithm. The resulting Gaussian distributions are shown as ellipses in Figure 2. Classifier fusion expands the support vector set with the means of the Gaussian distributions. The expanded support vector set is then used to predict the class of a new pixel, which is expected to improve classification performance.

Figure 2. The Schematic Diagram of Classifier Fusion (the figure labels the separating hyperplane HP and the margin hyperplanes HP1 and HP2)

4. Experiments and Results

Having presented the method for fusing the SVM and Bayesian classifiers (SB for short) in the previous sections, we now demonstrate its effect through several comparative experiments. The experiments are carried out on a real-world hyperspectral data set, a section of the scene taken over the Washington DC Mall by the HYDICE (Hyperspectral Digital Imagery Collection Experiment) sensor. This data set has 500 × 307 pixels, 210 spectral bands, and seven classes, including water, vegetation, man-made structures, and shadow. Figure 3 shows the 60th band image of the Washington DC Mall data set.

To assess the performance of the proposed method, we choose two models for comparison: 1) the ID algorithm proposed in [17] with an SVM classifier; 2) the MVPCA algorithm proposed in [18] with a Bayesian classifier.

Figure 3. Image of the 60th Band in the Washington DC Mall Data Set

4.1. The Comparison of Average Classification Precision in Noiseless Environment

The experimental results are as follows; the scale factor that weights the SVM and Bayesian components is set to 0.5.


Table 1. Comparison Table of Average Classification Precision in Noiseless Environment (entries are average classification precision)

| Number of training samples | ID+SVM | MVPCA+Bayesian | SB |
|---|---|---|---|
| 20 | 0.721 | 0.591 | 0.736 |
| 40 | 0.757 | 0.662 | 0.843 |
| 60 | 0.779 | 0.731 | 0.889 |
| 80 | 0.792 | 0.780 | 0.912 |
| 100 | 0.796 | 0.825 | 0.924 |

As Table 1 shows, the average classification precision of all three algorithms rises as the number of training samples increases, because additional sample points improve the accuracy of the classifiers. ID+SVM achieves good classification precision (0.721) with a small sample set (20 sample points), because the SVM is a discriminative classifier that performs well on small sample sets. As the number of sample points grows, however, ID+SVM improves only modestly (to 0.796 at 100 samples), because the new sample points are not taken as support vectors, and only support vectors influence the final classification. MVPCA+Bayesian has poor classification precision (0.591) with a small sample set (20 sample points), because the Bayesian classifier is generative: it classifies samples according to the statistical regularities of the sample points, which a small sample set cannot reflect. As the number of sample points increases, the performance of the Bayesian classifier improves, which in turn raises the classification precision of pixels (to 0.825 at 100 samples). Because it fuses the SVM and Bayesian classifiers, the SB algorithm has the merits of both. With a small sample set (20 sample points), SB achieves good classification precision (0.736), and SB achieves higher precision than the other two algorithms at every training-set size in Table 1.

4.2. The Comparison of Average Classification Precision in Noise Environment

To validate the robustness of the new method in a noisy environment, we randomly choose 5 percent of the sample points and change their labels. The results of the repeated experiment are as follows:

Table 2. Comparison Table of Average Classification Precision in Noise Environment (entries are average classification precision)

| Number of training samples | ID+SVM | MVPCA+Bayesian | SB |
|---|---|---|---|
| 20 | 0.506 | 0.561 | 0.696 |
| 40 | 0.621 | 0.629 | 0.789 |
| 60 | 0.698 | 0.708 | 0.856 |
| 80 | 0.748 | 0.764 | 0.897 |
| 100 | 0.763 | 0.812 | 0.919 |
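The label-noise procedure described for this experiment can be sketched as follows. The paper only states that 5 percent of the sample points have their labels changed; drawing the replacement label uniformly from the other classes, the seed handling, and the function name `flip_labels` are assumptions made for this illustration.

```python
import random

def flip_labels(labels, classes, fraction=0.05, seed=0):
    """Return a copy of labels with a random fraction reassigned to another class."""
    rng = random.Random(seed)
    noisy = list(labels)
    n_flip = round(fraction * len(noisy))
    for idx in rng.sample(range(len(noisy)), n_flip):
        # Assumption: the new label is drawn uniformly from the other classes.
        alternatives = [c for c in classes if c != noisy[idx]]
        noisy[idx] = rng.choice(alternatives)
    return noisy

# Toy labels for 100 samples spread over seven classes (illustrative only).
classes = list(range(7))
clean = [i % 7 for i in range(100)]
noisy = flip_labels(clean, classes)
changed = sum(a != b for a, b in zip(clean, noisy))
print(changed)
```

Because the replacement label is always different from the original one, exactly the selected fraction of labels ends up corrupted.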

As Table 2 shows, with a small sample set (20 sample points) the classification precision of ID+SVM falls sharply (from 0.721 to 0.506), because a discriminative classifier is sensitive to noise and, when the sample set is this small, noisy points have a great influence on the result. As the number of sample points


increases, the influence of noisy points on the result decreases, but the classification precision remains lower than in the noiseless environment. MVPCA+Bayesian is less affected by noisy points than ID+SVM: its classification precision is only slightly lower than in the noiseless environment (for example, 0.561 versus 0.591 at 20 samples). This is because the Bayesian classifier is generative: it classifies samples according to statistical parameters and is not sensitive to individual noisy points. The SB algorithm has the merits of both classifiers, showing good performance with small sample sets and in the noisy environment.
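The effect of noise can be read off directly by subtracting the noisy precision from the noiseless precision for each method and training-set size. The short script below does this arithmetic on the values reported in Tables 1 and 2; the variable and function names are illustrative.

```python
METHODS = ("ID+SVM", "MVPCA+Bayesian", "SB")

# Average classification precision from Table 1 (noiseless) and Table 2 (noisy).
NOISELESS = {20: (0.721, 0.591, 0.736), 40: (0.757, 0.662, 0.843),
             60: (0.779, 0.731, 0.889), 80: (0.792, 0.780, 0.912),
             100: (0.796, 0.825, 0.924)}
NOISY = {20: (0.506, 0.561, 0.696), 40: (0.621, 0.629, 0.789),
         60: (0.698, 0.708, 0.856), 80: (0.748, 0.764, 0.897),
         100: (0.763, 0.812, 0.919)}

def precision_drop(noiseless, noisy):
    """Per-method drop in precision caused by label noise, rounded to 3 places."""
    return {n: {name: round(noiseless[n][k] - noisy[n][k], 3)
                for k, name in enumerate(METHODS)}
            for n in noiseless}

drops = precision_drop(NOISELESS, NOISY)
for n, row in drops.items():
    print(n, row)
```

At 20 training samples the drop is 0.215 for ID+SVM, 0.030 for MVPCA+Bayesian, and 0.040 for SB, and ID+SVM shows the largest drop at every training-set size, consistent with the discussion above.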

5. Conclusions

In this paper, we present an approach that fuses SVM and Bayesian classifiers for hyperspectral data classification. First, since the relation between the band data of a hyperspectral image is non-linear, spectral clustering is used to cluster the bands and select effective ones for the subsequent classification task. Second, to address the problem of small training sample sets, a new algorithm is proposed that combines support vector machines and a Bayesian classifier to create a discriminative/generative hyperspectral image classification method using the selected features. Experimental results on a real hyperspectral image show that the proposed method achieves higher classification precision than the compared methods, in both the noiseless and the noisy environment.

Acknowledgements

Project supported by the Zhejiang Provincial Natural Science Foundation of China (No.

LQ13F020015).

References

[1] F. Melgani and L. Bruzzone, Classification of hyper-spectral remote sensing images with support

vector machines, IEEE Transactions on Geoscience and Remote Sensing, vol. 42, no. 8, (2004), pp.

1778-1790.

[2] Y. Chen, N. M. Nasrabadi and T. D. Tran, Hyper-spectral image classification via kernel sparse

representation, IEEE Transactions on Geoscience and Remote Sensing, vol. 51, no. 1, (2013), pp. 217-

231.

[3] J. Li, P. R. Marpu and A. Plaza, Generalized composite kernel framework for hyper-spectral image

classification, IEEE Transactions on Geoscience and Remote Sensing, vol. 51, no. 9, (2013), pp. 4816-

4829.

[4] J. Liu, Z. Wu and L. Sun, Hyper-spectral Image Classification Using Kernel Sparse Representation and Semi-local Spatial Graph Regularization, IEEE Geoscience and Remote Sensing Letters, vol. 11, no. 8, (2014), pp. 1320-1324.

[5] Y. Qian, M. Ye and J. Zhou, Hyper-spectral image classification based on structured sparse logistic

regression and three-dimensional wavelet texture features, IEEE Transactions on Geoscience and

Remote Sensing, vol. 51, no. 4, (2013), pp. 2276-2291.

[6] Y. L. Chang, J. N. Liu and C. C. Han, Hyper-spectral image classification using nearest feature line

embedding approach, IEEE Transactions on Geoscience and Remote Sensing, vol. 52, no. 1, (2014), pp.

278-287.

[7] Z. Ye, S. Prasad and W. Li, Classification based on 3-D DWT and decision fusion for hyper-spectral image analysis, IEEE Geoscience and Remote Sensing Letters, vol. 11, no. 1, (2014), pp. 173-177.

[8] Z. Zheng and Y. Peng, Semi-Supervised Based Hyper-spectral Imagery Classification, Sensors and

Transducers, vol. 156, no. 9, (2013), pp. 298-303.

[9] P. Y. Bin and A. I. J. Qing, Hyper-spectral Imagery Classification Based on Spectral Clustering Band

Selection, Opto-Electronic Engineering, vol. 39, no. 2, (2012), pp.63-67.

[10] J. Li, S. Jia and Y. Peng, Hyper-spectral Data Classification with Spectral and Texture Features by Co-

training Algorithm, Opto-Electronic Engineering, vol. 39, no. 11, (2012), pp.88-94.

[11] Y. Peng, Z. Zheng and C. Yu, Automated negotiation decision model based on classifier fusion,

Journal of Shanghai Jiaotong University, vol. 47, no. 4, (2013), pp. 644-649.

[12] J. R. Hershey and P. A. Olsen, Approximating the Kullback-Leibler Divergence Between Gaussian Mixture Models, ICASSP, no. 4, (2007), pp. 317-320.

[13] R. Neher and A. Srivastava, A Bayesian MRF framework for labeling terrain using hyper-spectral

imaging, IEEE Transactions on Geoscience and Remote Sensing, vol. 43, no. 6, (2005), pp.1363-1374.


[14] A. Y. Ng, M. I. Jordan and Y. Weiss, On spectral clustering: Analysis and an algorithm, Advances in

neural information processing systems, (2002), pp. 849-856.

[15] U. V. Luxburg, A tutorial on spectral clustering, Statistics and computing, vol. 17, no. 4, (2007),

pp.395-416.

[16] C. M. Bishop, Pattern recognition and machine learning, New York: Springer, (2006).

[17] C. I. Chang and S. Wang, Constrained band selection for hyper-spectral imagery, IEEE Transactions

on Geoscience and Remote Sensing, vol. 44, no. 6, (2006), pp. 1575-1585.

[18] C. I. Chang, Q. Du and T. L. Sun, A joint band prioritization and band-decor-relation approach to band

selection for hyper-spectral image classification, IEEE Transactions on Geoscience and Remote

Sensing, vol. 37, no. 6, (1999), pp. 2631-2641.

192 Copyright 2016 SERSC

Vous aimerez peut-être aussi

- ABSTRACTDocument4 pagesABSTRACTBrightworld ProjectsPas encore d'évaluation

- ABSTRACTDocument3 pagesABSTRACTBrightworld ProjectsPas encore d'évaluation

- ABSTRACTDocument4 pagesABSTRACTBrightworld ProjectsPas encore d'évaluation

- ABSTRACTDocument4 pagesABSTRACTBrightworld ProjectsPas encore d'évaluation

- ABSTRACTDocument4 pagesABSTRACTBrightworld ProjectsPas encore d'évaluation

- ABSTRACTDocument4 pagesABSTRACTBrightworld ProjectsPas encore d'évaluation

- ABSTRACTDocument4 pagesABSTRACTBrightworld ProjectsPas encore d'évaluation

- ABSTARCTDocument4 pagesABSTARCTBrightworld ProjectsPas encore d'évaluation

- Automated Detection of Human RBC in Diagnosing Sickle Cell Anemia With Laplacian of Gaussian FilterDocument4 pagesAutomated Detection of Human RBC in Diagnosing Sickle Cell Anemia With Laplacian of Gaussian FilterBrightworld ProjectsPas encore d'évaluation

- Identification of Abnormal Red Blood Cells and Diagnosing Specific Types of Anemia Using Image Processing and Support Vector MachineDocument6 pagesIdentification of Abnormal Red Blood Cells and Diagnosing Specific Types of Anemia Using Image Processing and Support Vector MachineBrightworld ProjectsPas encore d'évaluation

- OutputDocument1 pageOutputBrightworld ProjectsPas encore d'évaluation

- AbstractDocument1 pageAbstractBrightworld ProjectsPas encore d'évaluation

- Analysis of Anemia Using Data Mining Techniques With Risk Factors SpecificationDocument5 pagesAnalysis of Anemia Using Data Mining Techniques With Risk Factors SpecificationBrightworld ProjectsPas encore d'évaluation

- Anemia Prediction Based On Rule Classification: Sahar J. Mohammed MOHAMMED Amjad A. Ahmed Arshed A. AhmadDocument5 pagesAnemia Prediction Based On Rule Classification: Sahar J. Mohammed MOHAMMED Amjad A. Ahmed Arshed A. AhmadBrightworld ProjectsPas encore d'évaluation

- Grid-Voltage-Oriented Sliding Mode Control For Dfig Under Balanced and Unbalanced Grid FaultsDocument3 pagesGrid-Voltage-Oriented Sliding Mode Control For Dfig Under Balanced and Unbalanced Grid FaultsBrightworld ProjectsPas encore d'évaluation

- Synchronization and Reactive Current Support of PMSG Based Wind Farm During Severe Grid FaultDocument4 pagesSynchronization and Reactive Current Support of PMSG Based Wind Farm During Severe Grid FaultBrightworld ProjectsPas encore d'évaluation

- 118Document3 pages118Brightworld ProjectsPas encore d'évaluation
