Confidential

AppLabs Performance Testing Process Whitepaper

AppLabs Technologies

1601 Market Street, Suite 395, Philadelphia, PA 19103

Phone: 215.569.9976 Fax: 215.569.9956

Performance Testing AppLabs

Load, Stress, & Capacity Technologies Page 1 of 11


Table of Contents

1 Introduction
2 Tools Used
    2.1 AppMeter Feature Details
3 General Summary
    3.1 Types of Performance Testing
    3.2 Reporting Metrics
    3.3 Keys to Accuracy
    3.4 Load Scalability
4 Process Steps
    4.1 Conducting a System Analysis
    4.2 Usage Patterns – Understanding the Nature of the Load
    4.3 Usage Patterns – Determine User Session Variables / Page Request Distribution
    4.4 Determine Range & Distribution of values for these variables
    4.5 Estimating Target Load Levels
    4.6 Develop Base Test Scripts – Load Test Design
    4.7 Determine Frequency of Execution
    4.8 Execute Test Scripts
    4.9 Monitor Execution
    4.10 Collect & Examine Reports
    4.11 Provide Reports and Suggestions


1 Introduction

AppLabs Technologies provides offshore and on-site quality assurance services.

We have a dedicated staff of QA professionals to perform test plan creation, script

development for automated testing, manual and automated test plan execution, and stress,

load and capacity testing.

As part of our suggested QA guidelines, AppLabs encourages and supports stress, load and performance testing. The elements covered by these tests are critical to the sustained success of any client/server application, including web-based applications.

Load testing is done by creating a set of virtual users that simulate a load on a real application. It increases confidence in, and knowledge of, response times, performance, reliability and scalability. Without proper load tests, it is difficult or impossible to know how many users an application can effectively support. Load testing increases uptime and customer satisfaction, and decreases both real and potential costs of hardware, software, and development resources. It can also identify bottlenecks and defects that cannot be found with conventional methods of testing.

2 Tools Used

A variety of tools are available for generating performance tests on web-based and client-server applications. Mercury Interactive’s LoadRunner® or RadView’s WebLoad® is recommended in most scenarios; however, some applications require custom-developed tools. AppLabs has developed AppMeter, a load testing tool based on the open source Java tool JMeter. AppLabs can also develop a custom tool solution, known as a “Test Harness”, if the application under test requires it.

2.1 AppMeter Feature Details

• 100% pure Java

    o It is a 100% pure Java desktop application designed to load test functional behavior and measure performance.

    o Complete portability across different platforms.

    o Fully Swing-based application and thus lightweight component support.

• Multithreaded Framework: Full multithreading framework allows concurrent sampling by many threads and simultaneous sampling of different functions by separate thread groups.

• Unlimited virtual users: Other tools charge on a per-virtual-user basis. With AppMeter, we can use an unlimited number of virtual users to create any size load that is needed without increasing costs or overhead.

• Saving results into CSV file: As the test results are saved into a CSV file (comma delimited/separated values), the statistical analysis of the test results is quickly and easily done with Excel or other CSV-compatible software.

• Statistical friendly test results: The tool provides all the parameters required for statistical analysis, so finding the statistical significance of the test results is effortless.


• Built-in log analyzers: AppMeter comes with built-in tools such as an exception analyzer and word analyzers, which are instrumental in analyzing the log files of different servers.

• Conditional Branching Support: Not every load test follows a predetermined sequence of events. When user actions depend on previous actions (or on correct but different results from the same actions), conditional branching is an absolute necessity. AppMeter supports conditional branching and allows the flexibility to test today’s dynamic applications.

• Regular Expressions: Using regular expressions, AppMeter can quickly and easily find patterns and errors and perform other text-intensive operations. Regular expressions are very handy for determining whether a request failed, based on its response. Another advantage of this feature is the ease of capturing required values from a response; AppMeter can then use these values for conditional navigation/branching or as input for the next request.

• HTTP HEAD Request Support: This feature checks for the availability of a particular resource (say, images) instead of getting its content. It is well suited to situations where we have to check that a resource is available but do not need to download it.

• SSL Support: The tool supports the HTTPS protocol as well as many other protocols, including HTTP, FTP, JDBC, and SOAP.

• Variety of Timers: The most common timers used in load testing are the constant timer, the uniform random timer and the Gaussian random timer. The tool has all of these timers built in.

• Many other Features: Other available features include counters, assertions, a cookie manager, and a header manager.
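The regular-expression and conditional-branching features above can be illustrated with a minimal sketch. It is written in plain Python rather than in AppMeter, whose configuration is not shown in this paper. The response bodies, the `session_id` field, the URL paths, and both patterns are hypothetical.

```python
import re

# Hypothetical patterns: neither comes from AppMeter or from a real application.
ERROR_PATTERN = re.compile(r"(Exception|Internal Server Error)", re.IGNORECASE)
SESSION_PATTERN = re.compile(r'name="session_id"\s+value="([^"]+)"')


def response_failed(body: str) -> bool:
    """Return True when the response body contains a known error marker."""
    return ERROR_PATTERN.search(body) is not None


def extract_session_id(body: str):
    """Return the captured session id, or None if the field is absent."""
    match = SESSION_PATTERN.search(body)
    return match.group(1) if match else None


def next_request_path(body: str) -> str:
    """Conditional branching: choose the next request from the last response."""
    if response_failed(body):
        return "/error"
    session_id = extract_session_id(body)
    if session_id is None:
        return "/login"
    # The captured value becomes an input of the next request.
    return f"/cart?session_id={session_id}"
```

A response that returns HTTP 200 can still carry an error page, which is why the failure check looks at the body rather than only at the status code.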

3 General Summary

3.1 Types of Performance Testing

Load: Testing an application against a requested number of users. The

objective is to determine whether the site can sustain this requested

number of users with acceptable response times.

Stress: Load testing over an extended period of time. The objective is to

validate an application’s stability and reliability.

Capacity: Testing to determine the maximum number of concurrent users

an application can manage. The objective is to benchmark the maximum

loads of concurrent users a site can sustain before experiencing system

failure.


3.2 Reporting Metrics

Load size: The number of concurrent Virtual Clients trying to access the site.

Throughput: The average number of bytes per second transmitted from the ABT (Application Being Tested) to the Virtual Clients running this Agenda during the last reporting interval.

Round Time: The average time it took the Virtual Clients to finish one complete iteration of the Agenda during the last reporting interval.

Transaction Time: The time it takes to complete a successful request, in seconds. (For a web-based application, each request for each gif, jpeg, html file, etc. is a single transaction.) The time of a transaction is the sum of the Connect Time, Send Time, Response Time, and Process Time.

Connect Time: The time it takes for a Virtual Client to connect to the ABT.

Send Time: The time it takes the Virtual Clients to write a request to the ABT, in seconds.

Response Time: The time it takes the ABT to send the object of a request back to a Virtual Client, in seconds. In other words, the time from the end of the request until the Virtual Client has received the complete item it requested.

Process Time: The time it takes to parse a response from the ABT and then populate the document-object model (the DOM), in seconds.

Wait Time (Average Latency): The time it takes from when a request is sent until the first byte is received.

Receive Time: The elapsed time between receiving the first byte and the last byte.
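How these timing metrics combine can be sketched as follows. The class and function names are illustrative only. One assumption goes beyond the definitions above: Response Time is taken to be Wait Time plus Receive Time, which follows from reading the definitions together but is not stated in this paper.

```python
from dataclasses import dataclass


@dataclass
class TransactionTiming:
    """Per-request timings in seconds, named after the metrics above."""
    connect_time: float
    send_time: float
    wait_time: float      # request sent until first byte received
    receive_time: float   # first byte until last byte received
    process_time: float

    @property
    def response_time(self) -> float:
        # Assumption: end of request until the complete item has arrived
        # is treated as wait time plus receive time.
        return self.wait_time + self.receive_time

    @property
    def transaction_time(self) -> float:
        # Stated in the text: connect + send + response + process.
        return (self.connect_time + self.send_time
                + self.response_time + self.process_time)


def throughput_bytes_per_sec(total_bytes: int, interval_seconds: float) -> float:
    """Average bytes per second over one reporting interval."""
    return total_bytes / interval_seconds
```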

3.3 Keys to Accuracy

• Recording ability against a real client application

• Capturing protocol-level communication between client application and

rest of system

• Providing flexibility and ability to define user behavior

• Verifying that all requested content returns to the browser to ensure a

successful transaction

• Showing detailed performance results that can easily be understood and

analyzed to quickly pinpoint the root cause of problems

• Measuring end-to-end response time

• Using real-life data

• Synchronizing virtual users to generate peak loads

• Monitoring different tiers of the system with minimal intrusion


3.4 Load Scalability

• Generating the maximum number of virtual users that can be run on a

single machine before exceeding the machine’s capacity

• Generating the maximum number of hits per second against a

Web/application server

• Managing thousands of virtual users

• Increasing the number of virtual users in a controlled fashion.

4 Process Steps

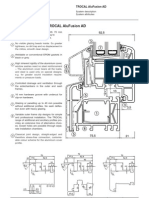

Diagram 1: Explained in Detail Below

[Flow diagram: System Analysis / Determining Load Nature → Determine User Session Variables → Determine Range & Distribution of values → Estimate Target Load Levels → Develop Base Test Scripts → Create Load-testing Scripts combining scripts in a logical ... → Determine frequency of execution → Execute Test Scripts → Monitor Execution → Collect & Examine Reports → Provide Reports and Suggestions to Client]

4.1 Conducting a System Analysis

During this phase, the entire system is analyzed and broken down into specific

components. Any one component can have a dramatic effect on the performance

of the system. This step is critical to simulating and understanding the load and

potential problem areas. Furthermore, it can later aid in making suggestions on

how to resolve bottlenecks and improve performance. Some example tasks

include:

• Identify all hardware components

• Identify all software components

• Identify all unrelated processes and services that may affect system

components

4.2 Usage Patterns – Understanding the Nature of the Load

Understanding where and when the load comes from is necessary to correctly

simulate the load. With this information, minimum, maximum, and average loads


can be determined, as well as the distribution of the load. Some data that is

collected includes:

• Visitors’ IP addresses

• Date & time each page was visited

• Size of the file or page accessed

• Code indicating whether access succeeded or failed

• Visitor's browser & OS / client-side configuration
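The fields listed above are typically available in web server access logs. The sketch below extracts them from one log line, assuming the server writes the widely used Combined Log Format; this paper does not say which format a given client uses. The function name, the sample line in the usage note, and the rule that status codes below 400 count as success are assumptions.

```python
import re
from datetime import datetime

# Assumed input: one line in Combined Log Format.
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def parse_line(line: str):
    """Return the usage-pattern fields of one log line, or None if unparsable."""
    m = LOG_LINE.match(line)
    if m is None:
        return None
    status = int(m["status"])
    return {
        "ip": m["ip"],                                   # visitor's IP address
        "timestamp": datetime.strptime(m["time"], "%d/%b/%Y:%H:%M:%S %z"),
        "path": m["path"],                               # page or file accessed
        "size": 0 if m["size"] == "-" else int(m["size"]),
        "status": status,
        "succeeded": status < 400,                       # assumed success rule
        "agent": m["agent"],                             # browser / OS string
    }
```

For example, `parse_line('192.0.2.10 - - [10/Oct/2000:13:55:36 -0700] "GET /index.html HTTP/1.0" 200 2326 "-" "Mozilla/4.08"')` yields a record whose `ip`, `status`, and `size` fields can then be aggregated per hour or per visitor.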

4.3 Usage Patterns – Determine User Session Variables / Page

Request Distribution

Knowing which processes the load triggers is necessary to simulate the load correctly. Some of the information collected is as follows:

• Length of the session (measured in pages)

• Duration of the session (measured in minutes and seconds)

• Type of pages that were visited during the session, and what processes are

triggered for each

Examples:

o “Home page”

o Product info page

o Credit Card Info page

• Varying User Activities from page types.

Examples:

o Uploading information

o Downloading information

o Purchasing

• Page Rendering Issues

Examples:

o Dynamic pages (such as time sensitive Auction/trade pages)

o Static pages

• New users vs. Existing users

4.4 Determine Range & Distribution of values for these variables

From the data gathered in the previous two phases, statistical figures can be derived. Some of the distribution figures that are computed are the following:

• Average (e.g., 4 page views per session)

• Standard deviation

• Discrete distribution
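The three figures above can be computed with Python's standard library, as in the minimal sketch below. The function name is illustrative, and the input is assumed to be a list holding the number of page views in each observed session.

```python
from collections import Counter
from statistics import mean, pstdev


def summarize(pages_per_session):
    """Return the average, standard deviation, and discrete distribution."""
    counts = Counter(pages_per_session)
    total = len(pages_per_session)
    # Discrete distribution: share of sessions for each observed session length.
    distribution = {pages: n / total for pages, n in sorted(counts.items())}
    return {
        "average": mean(pages_per_session),
        "std_dev": pstdev(pages_per_session),  # population standard deviation
        "distribution": distribution,
    }
```

`pstdev` treats the sessions as the whole population; when the log covers only a sample of the traffic, `statistics.stdev` is the alternative.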

4.5 Estimating Target Load Levels

With the guidance of the client, and reflecting on the data previously collected,

target load levels, or goals, can be established. Such goals often include:

• Overall traffic growth (historic data vs. sales/marketing expectations)

• Peak Load Level (day of week, time of day, or after specific event)

• How long the peak load level is expected to last

• Options on getting these numbers

    o Concurrent users

    o Page views

    o User sessions per unit of time
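The three ways of expressing a target load can be converted into one another. The sketch below assumes steady-state traffic, so that concurrent users equal the session arrival rate multiplied by the average session duration (Little's law); this relationship is an added assumption and is not stated in this paper. The function names and the figures in the usage note are illustrative.

```python
def concurrent_users(sessions_per_hour: float, avg_session_minutes: float) -> float:
    """Average number of simultaneously active users at steady state."""
    return sessions_per_hour * avg_session_minutes / 60.0


def page_views_per_hour(sessions_per_hour: float, pages_per_session: float) -> float:
    """Page-view rate implied by a session rate and an average session length."""
    return sessions_per_hour * pages_per_session


def sessions_per_hour_from_users(users: float, avg_session_minutes: float) -> float:
    """Inverse conversion: session rate that keeps `users` active on average."""
    return users * 60.0 / avg_session_minutes
```

For instance, under these assumptions 12,000 sessions per hour with a hypothetical average session of 3 minutes and 4 page views corresponds to 600 concurrent users and 48,000 page views per hour.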

4.6 Develop Base Test Scripts – Load Test Design

During this phase the base scripts that will be used to generate the load are

determined. The steps and some examples follow.

a. Test Objective

SAMPLE: The objective of this load test is to determine if the Web site, as currently configured, will be able to handle the peak load level of 12,000 sessions/hr anticipated for the coming holiday season. If the system fails to scale as anticipated, the results will be analyzed to identify the bottlenecks, and the test will be run again after the suspected bottlenecks have been addressed.

b. Pass / Fail Criteria

SAMPLE: The load test will be considered a success if the Web site

will handle the target load of 12,000 sessions/hr while maintaining the

average page response times defined below. The page response time

will be measured over T1 lines and will represent the elapsed time

between a page request and the time the last byte is received.

c. Script Labels and Distribution

• Type of scripts (keeping Page Request Distribution as the main concept here)

• Number of Scripts (usually 10-15)

• Naming of Scripts

SAMPLE:

1. BrowseNoBuy: Home >> Product Information(1) >> Product Information(2) >> Exit

2. View Calendar: Home >> Event Calendars Page >> Next 30 days >> Back >> Exit

• Calculate the target page distribution based on the types of pages

hit by each script, and the relative frequency with which each

script will be executed.

4.7 Determine Frequency of Execution

When and how often the load test is run can have an effect on the results. Other processes and unrelated loads may impact the performance of the application under test. During this stage, it is determined which tests will run at specific points in time or for a specific number of iterations.

4.8 Execute Test Scripts

Combine Scripts to create load-testing Scenario

a. Scripts Executed


b. Percentages in which those scripts are run

c. Description of how load will be ramped up
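A scenario of this kind can be sketched as data plus two small helpers: one that splits the virtual users across scripts by percentage, and one that describes a linear ramp-up. The script names and percentages are taken from Sample Table 2 in this section. The function names, the linear ramp shape, and the step sizes are assumptions, not the way any particular tool defines a scenario.

```python
# Percentages in which the scripts are run (from Sample Table 2).
SCRIPT_MIX = {"Home Only": 55, "BrowseNoBuy": 34, "BrowseAndBuy": 11}


def allocate_users(total_users: int, mix: dict) -> dict:
    """Split virtual users across scripts by percentage (largest remainder)."""
    exact = {name: total_users * pct / 100 for name, pct in mix.items()}
    allocation = {name: int(share) for name, share in exact.items()}
    leftover = total_users - sum(allocation.values())
    # Hand remaining users to the scripts with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda n: exact[n] - allocation[n],
                          reverse=True)
    for name in by_remainder[:leftover]:
        allocation[name] += 1
    return allocation


def ramp_up_schedule(total_users: int, steps: int, step_seconds: int):
    """Return (elapsed_seconds, active_users) pairs for a linear ramp-up."""
    return [(i * step_seconds, round(total_users * i / steps))
            for i in range(1, steps + 1)]
```

Ramping in equal steps makes it easier to relate a change in response time to the user count at which it first appeared.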

Sample Table 1: Script Table for static eCommerce site

| Script Name | Basic Description |
|---|---|
| Home Only | Home → Exit |
| BrowseNoBuy | Home → Product Information(1) → Product Information(2) → Exit |
| BrowseAndBuy | Home → Product Information(1) → Product Information(2) → Order Entry → Credit Card Validation → Order Confirmation → Exit |

Sample Table 2: Spreadsheet (script table vs. user frequency)

| Script Name | Relative Frequency* | Home | Product Info | Order Entry | Credit Card | Order Confirmation | Total |
|---|---|---|---|---|---|---|---|
| Home Only | 55% | 1 | | | | | |
| BrowseNoBuy | 34% | 1 | 2 | | | | |
| BrowseAndBuy | 11% | 1 | 2 | 1 | 1 | 1 | |
| Relative pg views | | 1 | .9 | .11 | .11 | .11 | 2.23 |
| Resulting pg view distribution | | 45% | 40% | 5% | 5% | 5% | 100% |
| Target pg view distribution | | 48% | 37% | 6% | 5% | 4% | 100% |
| Difference** | | 3% | -3% | 1% | 0% | -1% | |

* Obtained from historic log files or created from projected user patterns

** Target Difference should be < 5% for non-transaction pages and < 2% for transaction pages.
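The arithmetic behind Sample Table 2 can be reproduced with a short sketch. Each script's relative frequency is multiplied by the number of times it hits each page type, the products are summed per page type, and each sum is divided by the grand total. The figures are those of the table; the function names are illustrative, and treating the three order pages as the "transaction pages" is an assumption. The difference is computed as target minus resulting.

```python
SCRIPTS = {
    # script name: (relative frequency, {page type: views per run})
    "Home Only": (0.55, {"Home": 1}),
    "BrowseNoBuy": (0.34, {"Home": 1, "Product Info": 2}),
    "BrowseAndBuy": (0.11, {"Home": 1, "Product Info": 2, "Order Entry": 1,
                            "Credit Card": 1, "Order Confirmation": 1}),
}
TARGET = {"Home": 48, "Product Info": 37, "Order Entry": 6,
          "Credit Card": 5, "Order Confirmation": 4}
# Assumption: these page types count as transaction pages.
TRANSACTION_PAGES = {"Order Entry", "Credit Card", "Order Confirmation"}


def relative_page_views(scripts):
    """Sum frequency * views per page type across all scripts."""
    views = {}
    for frequency, pages in scripts.values():
        for page, count in pages.items():
            views[page] = views.get(page, 0.0) + frequency * count
    return views


def page_view_distribution(scripts):
    """Resulting page view distribution, in percent."""
    views = relative_page_views(scripts)
    total = sum(views.values())
    return {page: 100.0 * v / total for page, v in views.items()}


def within_tolerance(scripts, target):
    """Check the < 5% / < 2% rule from the table footnote."""
    dist = page_view_distribution(scripts)
    for page, goal in target.items():
        limit = 2.0 if page in TRANSACTION_PAGES else 5.0
        if abs(goal - dist[page]) >= limit:
            return False
    return True
```

If the check fails, the relative frequencies of the scripts are adjusted and the distribution is recomputed until it falls within tolerance.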

4.9 Monitor Execution

During execution, systems will be automatically logged and monitored.

At times, systems may also be manually monitored for immediate

problems. Data gathered in this phase will be used to generate reports that

will be used to judge the performance of the system and each system

component.

4.10 Collect & Examine Reports

Graphs: AppLabs utilizes a suite of graphs from LoadRunner, WebLoad, AppMeter, or another customized performance testing tool to provide the client with easy-to-use, relevant information.


Sample Graph 1: Transaction Summary

This performance graph displays the number of transactions that passed, failed, aborted or ended with errors. For example, these results show that the Submit_Search business process passed approximately 96% of its transactions.

Sample Graph 2: Throughput

This web graph displays the amount of throughput (in bytes) on the Web server during

load testing. This graph helps testers evaluate the amount of load X users generate in

terms of server throughput. For example, this graph reveals a total throughput of over 7

million bytes per second.

Additional Report Info Available during & after Scenario Execution

The following reporting metrics are available in addition to the items mentioned in the ‘definitions’ section.

a. Rendezvous – Indicates when and how virtual users are released at

each point.

b. Transactions/sec (passed) – The number of completed, successful

transactions performed per second. (see sample table 2)

c. Transactions/sec (failed) – The number of incomplete, failed

transactions per second. (see sample table 2)

d. Percentile – Analyzes percentage of transactions that were performed

within a given time range. (see sample table 2)

e. Performance Under Load – Transaction times relative to the number

of virtual users running at any given point during the scenario.


f. Transaction Performance – Average time taken to perform

transactions during each second of scenario.

g. Transaction Performance Summary – Minimum, maximum, and

average performance times for all transactions in scenario.

h. Transaction Performance by Virtual User – Time taken by an

individual virtual user to perform transactions during the scenario.

i. Transaction Distribution – The distribution of the time taken to

perform a transaction.

j. Connections per Second – Shows the number of connections made to

the Web server by virtual users during each second of the scenario run.
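Several of the metrics above can be derived from a list of recorded transaction times. The sketch below covers items b, c, d, and g. It uses the nearest-rank definition of a percentile, which is one common convention and not necessarily the one used by LoadRunner, WebLoad, or AppMeter. All function names are illustrative.

```python
import math


def summary(times):
    """Minimum, maximum, and average transaction time (item g)."""
    return {"min": min(times), "max": max(times),
            "avg": sum(times) / len(times)}


def percentile(times, pct):
    """Nearest-rank percentile: smallest time covering pct% of transactions."""
    ordered = sorted(times)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def percent_within(times, limit_seconds):
    """Percentage of transactions completed within a time limit (item d)."""
    return 100.0 * sum(1 for t in times if t <= limit_seconds) / len(times)


def transactions_per_second(passed, failed, duration_seconds):
    """Passed and failed transaction rates over the run (items b and c)."""
    return passed / duration_seconds, failed / duration_seconds
```

Percentiles complement the average: a small number of very slow transactions can leave the average acceptable while the 90th percentile reveals the problem.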

4.11 Provide Reports and Suggestions

Summary reports of the performance tests will be provided with respect to the metrics above, or the metrics the customer requires. After analyzing these reports, AppLabs will recommend courses of action to resolve bottlenecks, increase scalability, and improve performance.

