Best Poster Award Poster

Yi-Ting Tsai, University of Hong Kong; Lauren Diana Liao, University of California, San Diego

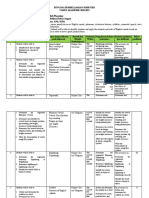

INTRODUCTION

A cochlear implant (CI) electrically stimulates the auditory nerve to help people with severe hearing loss. Under noisy backgrounds, however, speech perception remains difficult for CI users. Speech enhancement (SE) is therefore a critical component for improving speech perception across different noise scenarios. In this study, we use deep learning and focus on a fully convolutional network (FCN) model to extract clear speech from non-stationary background noise made up of human conversations. We further compare its performance with a log power spectrum (LPS) based deep neural network (DNN) model by conducting a hearing test on enhanced speech processed to simulate what a CI user hears.

OBJECTIVES

Propose a raw-waveform-based FCN model for denoising speech corrupted by human conversation noise that performs as well as or better than the LPS-based DNN in both the quantitative results and the hearing test results.

PROCEDURE

1. Given the data set, train the FCN/DNN model.
2. Generate the testing data set.
3. Transfer the enhanced speech result into vocoded speech.
4. Conduct the hearing test and evaluate.

[Flow diagram: noisy speech → DNN/FCN model → enhanced speech → vocoder → vocoded speech → hearing test → evaluation]
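The baseline DNN takes log power spectrum (LPS) features as input, whereas the FCN works directly on the raw waveform. As a rough illustration of what an LPS feature vector is, the sketch below computes it for a single frame using a naive DFT. The frame length (64 samples), the periodic Hann window, and the natural logarithm are assumptions made for this example; the poster does not state the feature-extraction settings, and `log_power_spectrum` is a hypothetical helper name.

```python
import cmath
import math


def log_power_spectrum(frame, eps=1e-12):
    """Return log(|DFT|^2) for the non-negative frequency bins of one frame.

    Assumed settings (not from the poster): periodic Hann window,
    natural logarithm, and a small eps to avoid log(0).
    """
    n = len(frame)
    # Periodic Hann window to reduce spectral leakage.
    windowed = [
        x * (0.5 - 0.5 * math.cos(2 * math.pi * i / n))
        for i, x in enumerate(frame)
    ]
    features = []
    for k in range(n // 2 + 1):
        # Naive DFT coefficient for frequency bin k.
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * i / n)
            for i, x in enumerate(windowed)
        )
        features.append(math.log(abs(coeff) ** 2 + eps))
    return features


# Toy frame: a sine wave with exactly 8 cycles in 64 samples.
frame = [math.sin(2 * math.pi * 8 * i / 64) for i in range(64)]
features = log_power_spectrum(frame)
peak_bin = max(range(len(features)), key=features.__getitem__)
print(len(features), peak_bin)
```

A real front end would use an FFT over overlapping frames. The point here is only that the DNN sees a per-frame spectral vector, while the FCN sees the samples themselves.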

METHODS

A. Models:

Log power spectrum based DNN. A fully connected network with 5 hidden layers.

[Figure: DNN with an input layer, hidden layers 1 to 5, and an output layer]

Raw waveform based FCN. Encoder-decoder architecture; the encoder and the decoder have 10 convolutional layers each.

[Figure: FCN mapping noisy speech to enhanced speech through two stacks of 10 Conv+ReLU layers]

B. Training dataset:

200 utterances were artificially mixed with 9 different noise types at 5 different signal-to-noise ratios (SNRs) (-10 dB, -5 dB, 0 dB, 5 dB, 10 dB), resulting in a total of 200 x 9 x 5 = 9,000 utterances.

C. Testing dataset:

Two noise types not seen in the training set were used: two girls talking and four mixed-gender talkers. In total, 120 clean utterances were mixed with these 2 noise types at SNRs of -3 dB and -6 dB.

D. Vocoded speech:

A vocoder (voice encoder) is a voice processing system that can simulate the voice heard by CI users. The enhanced testing dataset was vocoded and used in the hearing test.

E. Hearing test:

Subjects: 8 native Mandarin speakers with normal hearing who were not familiar with the utterances.

Utterances: 120 utterances of 3-4 seconds each, spoken by native Mandarin speakers.

Ten utterances each were randomly picked from the DNN-enhanced, FCN-enhanced, and original noisy speech and played to the subject; the responses were then analyzed for correctness.
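Mixing clean speech with noise at a target SNR, as done for the training and testing sets, usually comes down to scaling the noise so that the clean-to-noise power ratio matches the target. The sketch below shows one common way to do this. The function names, the toy sine and random signals, and the 16 kHz rate are assumptions for illustration only, not the authors' data pipeline.

```python
import math
import random


def power(signal):
    """Mean squared amplitude of a sequence of samples."""
    return sum(x * x for x in signal) / len(signal)


def mix_at_snr(clean, noise, snr_db):
    """Scale `noise` so that 10*log10(P_clean / P_noise) == snr_db, then add it."""
    if len(noise) < len(clean):
        raise ValueError("noise must be at least as long as the clean speech")
    noise = noise[:len(clean)]
    # Required noise power: P_clean / 10^(SNR/10).
    target_noise_power = power(clean) / (10 ** (snr_db / 10))
    gain = math.sqrt(target_noise_power / power(noise))
    scaled = [gain * n for n in noise]
    mixture = [c + n for c, n in zip(clean, scaled)]
    return mixture, scaled


def measured_snr_db(clean, noise):
    """SNR in dB between a clean signal and the (scaled) noise added to it."""
    return 10 * math.log10(power(clean) / power(noise))


# Training-set size stated on the poster: 200 utterances x 9 noises x 5 SNRs.
TRAIN_SNRS_DB = [-10, -5, 0, 5, 10]
n_training_mixtures = 200 * 9 * len(TRAIN_SNRS_DB)

# Toy signals standing in for real recordings (assumed 16 kHz, 0.1 s).
rng = random.Random(0)
clean = [math.sin(2 * math.pi * 440 * t / 16000) for t in range(1600)]
noise = [rng.uniform(-1.0, 1.0) for _ in range(1600)]

for snr in TRAIN_SNRS_DB:
    mixture, scaled = mix_at_snr(clean, noise, snr)
    print(snr, round(measured_snr_db(clean, scaled), 6))
print(n_training_mixtures)
```

The same function applies to the test conditions by passing -3 or -6 as `snr_db`.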

RESULTS

A. Quantitative result

To evaluate the performance of the proposed models, the perceptual evaluation of speech quality (PESQ) and the short-time objective intelligibility (STOI) scores were used to measure speech quality and intelligibility, respectively.

| SNR (dB) | DNN (LPS) STOI | DNN (LPS) PESQ | FCN (Waveform) STOI | FCN (Waveform) PESQ |
|---|---|---|---|---|
| -3 | 0.6169 | 1.1604 | 0.6870 | 1.3020 |
| -6 | 0.5781 | 1.1301 | 0.6426 | 1.2401 |
| Average | 0.5975 | 1.1453 | 0.6648 | 1.2710 |

Since the fully connected layers were removed, the number of weights in the FCN was only approximately 10% of that in the DNN.

B. Hearing test result

The figures in the table are the median numbers of characters that subjects heard and answered correctly during the hearing test. The full score for each noise type at each SNR is 50 characters, since we randomly chose 5 Mandarin utterances for each noise type and each utterance includes 10 characters. The bar chart takes the noisy speech score as the baseline and plots the score for DNN- or FCN-enhanced speech minus the noisy score. This provides a fair comparison between the models.

| Noise type and SNR | i. Noisy speech | ii. Enhanced speech by DNN | iii. Enhanced speech by FCN |
|---|---|---|---|
| 2 girls, -3 dB | 11.5 | 24.5 | 27 |
| 2 girls, -6 dB | 11 | 20.5 | 16 |
| 4 talkers, -3 dB | 7 | 11 | 24.5 |
| 4 talkers, -6 dB | 10 | 10.5 | 17.5 |

[Bar chart: number of correctly answered characters (speech from DNN/FCN minus noisy), 0 to 20, for DNN and FCN under 2 girls at -3 dB and -6 dB and 4 talkers at -3 dB and -6 dB]
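The values behind the bar chart can be recomputed from the hearing test table by subtracting the noisy-speech median from each model's median. The short sketch below does that with the numbers reported on the poster; the dictionary layout and the helper name `gain_over_noisy` are illustrative choices, not part of the original analysis.

```python
FULL_SCORE = 50  # 5 utterances x 10 characters per condition

# Median correct characters per condition: (noisy, DNN-enhanced, FCN-enhanced).
median_scores = {
    ("2 girls", -3): (11.5, 24.5, 27.0),
    ("2 girls", -6): (11.0, 20.5, 16.0),
    ("4 talkers", -3): (7.0, 11.0, 24.5),
    ("4 talkers", -6): (10.0, 10.5, 17.5),
}


def gain_over_noisy(scores):
    """Improvement of each model over the noisy baseline, in characters."""
    noisy, dnn, fcn = scores
    return {"DNN": dnn - noisy, "FCN": fcn - noisy}


gains = {cond: gain_over_noisy(s) for cond, s in median_scores.items()}

for (noise_type, snr_db), g in gains.items():
    print(f"{noise_type} at {snr_db} dB: DNN +{g['DNN']}, FCN +{g['FCN']} "
          f"(out of {FULL_SCORE})")
```

These are differences of medians over 8 subjects, so they summarize the chart but say nothing on their own about statistical significance.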

CONCLUSIONS

• The FCN has higher PESQ and STOI scores than the LPS-based DNN in terms of standardized quantitative evaluation.
• Moreover, the number of parameters in the network is dramatically reduced in the FCN model, which could benefit CI users through both faster computation and less storage space.
• Given its good quantitative results, the FCN model can still be considered the better model even where the hearing test results are similar.
• For the noise type of two girls talking at -3 dB and -6 dB, the FCN and DNN models produced similar results, as they did for four talkers at -6 dB. However, for four talkers at -3 dB, the FCN model is statistically significantly better than the DNN model.

When denoising human talking, a non-stationary background noise, the FCN model shows an improvement and in this case could be considered to outperform the DNN. For future work, we can optimize the FCN and conduct further testing with more subjects.

REFERENCES

Y.-H. Lai, F. Chen, S.-S. Wang, X. Lu, Y. Tsao, and C.-H. Lee, "A deep denoising autoencoder approach to improving the intelligibility of vocoded speech in cochlear implant simulation," 2016.

S.P, A.B, J.S, "SEGAN: Speech enhancement generative adversarial network."

S.-W. Fu, Y. Tsao, X. L, H. K, "Raw waveform-based speech enhancement by fully convolutional networks," 2017.
