Case-Based Behavior Recognition in Beyond Visual Range Air Combat

Hayley Borck¹, Justin Karneeb¹, Ron Alford² & David W. Aha³

¹ Knexus Research Corporation; Springfield, VA; USA
² ASEE Postdoctoral Fellow; Naval Research Laboratory (Code 5514); Washington, DC; USA
³ Navy Center for Applied Research in Artificial Intelligence; Naval Research Laboratory (Code 5514); Washington, DC; USA

{first.last}@knexusresearch.com | {ronald.alford.ctr, david.aha}@nrl.navy.mil

Abstract

An unmanned air vehicle (UAV) can operate as a capable team member in mixed human-robot teams if it is controlled by an agent that can intelligently plan. However, planning effectively in a beyond-visual-range air combat scenario requires understanding the behaviors of hostile agents, which is challenging in partially observable environments such as the one we study. In particular, unobserved hostile behaviors in our domain may alter the world state. Countering hostile behaviors effectively requires recognizing and predicting them. We present a Case-Based Behavior Recognition (CBBR) algorithm that annotates an agent's behaviors using a discrete feature set derived from a continuous spatio-temporal world state. These behaviors are then given as input to an air combat simulation, along with the UAV's plan, to predict hostile actions and estimate the effectiveness of the given plan. We describe an implementation and evaluation of our CBBR algorithm in the context of a goal reasoning agent designed to control a UAV, and report an empirical study that shows CBBR outperforms a baseline algorithm. Our study also indicates that using features that model an agent's prior behaviors can increase behavior recognition accuracy.

1. Introduction

We are studying the use of intelligent agents for controlling an unmanned air vehicle (UAV) in a team of piloted and unmanned aircraft in simulated beyond-visual-range (BVR) air combat scenarios. In our work, a wingman is a UAV that is given a mission to complete and may optionally also receive orders from a human pilot. In situations where the UAV's agent does not receive explicit orders, it must create a plan for itself. Although UAVs can perform well in these scenarios (Nielsen et al. 2006), planning may be ineffective if the behaviors of the other agents operating in the scenario are unknown. To effectively account for hostile and allied agents, we use a Case-Based Behavior Recognition (CBBR) algorithm to recognize their behaviors so that, in combination with a predictive planner, UAV plans can be evaluated in real time.

We define a behavior as a tendency or policy of the agent over a given amount of time. A behavior consists of a set of unordered actions (e.g., 'fly to target', 'fire missile') taken in relation to other agents in the scenario. This differs from a plan in that the agent is not following a set of ordered actions; rather, it is taking actions that are indicative of certain behaviors.

BVR air combat involves executing precise tactics at large distances, where little data about the hostiles is available. What is available is only partially observable. Yet if the UAV can identify a hostile agent's behavior or plan, it can use that information when reasoning about its own actions.

We hypothesize that Case-Based Reasoning (CBR) techniques can effectively recognize behavior in domains such as ours, where information on hostile agents is scarce. Additionally, we hypothesize that representing and leveraging a memory of a hostile agent's behaviors during CBR will improve behavior recognition. To assess this, we encode discrete state information over time in cases, and compare the performance of CBBR using this information versus an ablation that lacks this memory.

We summarize related work in Section 2 and describe our CBBR algorithm in Section 3. In Section 4, we describe its application in 2 vs 2 scenarios (i.e., two 'friendly' aircraft versus two 'hostile' aircraft), where we found that (1) CBBR outperformed baseline algorithms and (2) using features that model the past behavior of other agents increases recognition accuracy. Finally, we discuss the implications of our results and conclude with future work directions in Section 5.

Copyright © 2015, Association for the Advancement of Artificial Intelligence (www.aaai.org). All rights reserved.

2. Related Research

Our behavior recognition component, which lies within a larger goal reasoning (GR) agent called the Tactical Battle Manager (TBM), is designed to help determine if a UAV wingman's plan is effective (Borck et al. 2014). In this paper we extend the CBBR algorithm's recognition abilities by (1) employing a confidence factor $C_q$ for a query $q$ and (2) using features to model other agents' past behaviors. We also present the first empirical evaluation of our CBBR system; we test it against a baseline algorithm and assess our hypothesis that these new features improve CBBR performance.

In recent years, CBR has been used in several GR agents. For example, Weber et al. (2010) use a case base to formulate new goals for an agent, and Jaidee et al. (2013) use CBR techniques for goal selection and reinforcement learning (RL) for goal-specific policy selection. In contrast, our system uses CBR to recognize the behavior of other agents, so that we can predict their responses to our agent's actions.

CBR researchers have investigated methods for combating adversaries in other types of real-time simulations. For example, Aha et al. (2005) employ a case base to select a sub-plan for an agent at each state, where cases record the performance of tested sub-plans. Auslander et al. (2008) instead use case-based RL to overcome slow learning, where cases are action policies indexed by current game state features. Unlike our work, neither approach performs opponent behavior recognition.

Opponent agents can be recognized as a team or as a single agent. Team composition can be dynamic (Sukthankar and Sycara 2006), resulting in a more complex version of the plan recognition problem (Laviers et al. 2009; Sukthankar and Sycara 2011). Kabanza et al. (2014) use a plan library to recognize opponent behavior in real-time strategy games. By recognizing the plan the team is enacting, they are able to recognize the intent of the opponent or team leader. Similarly, our algorithm uses a case base of previously observed behaviors to recognize the current opponents' behaviors. Our approach, however, attempts to recognize each agent in the opposing team as a separate entity. Another approach to coordinating team behaviors involves setting multiagent planning parameters (Auslander et al. 2014), which can then be given to a plan generator. Recognizing high-level behaviors, which is our focus, should also help to recognize team behaviors. For example, two hostile agents categorized as 'All Out Aggressive' by our system could execute a pincer maneuver (in which two agents attack both flanks of an opponent).

Some researchers describe approaches for selecting behaviors in air combat simulations. For example, Rao and Murray (1994) describe a formalism for plan recognition that stores the mental states of adversarial agents in air combat scenarios (i.e., representing their beliefs, desires, and intentions), but did not evaluate it. Smith et al. (2000) use a genetic algorithm (GA) to learn effective tactics for their agents in a two-sided experiment, but assumed perfect observability and focused on visual-range air combat. In contrast, we assume partial observability and focus on BVR air combat, and we are not aware of prior work by other groups on this task that uses CBR techniques.

3. Case-Based Behavior Recognition

The following subsections describe our CBBR algorithm. In particular, we describe its operating context, our case representation, its retrieval function, and details on how cases are pruned during and after case library acquisition.

3.1 CBBR in a BVR Air Combat Context

Our CBBR implementation serves as a component in the TBM, a system we are collaboratively developing for pilot-UAV interaction and autonomous UAV control. The CBBR component takes as input an incomplete world state and outputs behaviors that are used to predict the effectiveness of a UAV's plan. The TBM maintains a world model that contains each known agent's capabilities, past observed states, currently recognized behaviors, and predicted future states. A complete state contains, for each time step in the simulation, the position and actions for each known agent. The actions that our agent can infer from the available information are Pursuit (an agent flies directly at another agent), Drag (an agent tries to kinematically avoid a missile by flying away from it), and Crank (an agent flies at the maximum offset but tries to keep its target in radar). For the UAV and its allies the past states are complete. However, any hostile agent's position for a given time is known only if the hostile agent appears on the UAV's radar. Also, a hostile agent's actions are never known and must be inferred from the potentially incomplete prior states. We infer these actions by discretizing the position and heading of each agent into the features of our cases. We currently assume that the capabilities of each hostile aircraft are known, though in future work they will be inferred through observations.

The CBBR component revises the world model with recognized behaviors. Afterward, we use an instance of the Analytic Framework for Simulation, Integration, & Modeling (AFSIM), a mature air combat simulation that is used by the USAF (and defense organizations in several other countries), to simulate the execution of the plans for the UAV and the other agents in a scenario. AFSIM projects all the agents' recognized behaviors to determine the effectiveness of the UAV's plan. Thus, the accuracy of these predictions depends on the ability of the CBBR
component to accurately recognize and update the behaviors of the other agents in the world model.

Figure 1: Case Representation

3.2 Case Representation

A case in our system describes an agent's behavior and its response to a given situation, including a memory and tendencies. Cases are represented as (problem, solution) pairs, where the problem is represented by a set of discrete features and the solution is the agent's response behavior. The feature set contains two feature types: global features and time step features (Figure 1). Global features act as a memory and represent overarching tendencies about how the agent has acted in the past. Time step features represent features that affect the agent for the duration of the time step. To keep the cases lean, we merge time steps that have the same features and sum their durations. The features we model are listed below.

Time Step Features:

- CLOSINGONOPPOSINGTEAM – This agent is closing on an opposing agent.
- FACINGOPPOSINGTEAM – This agent is facing an opposing agent.
- INOPPOSINGTEAMSRADAR – This agent is in an opposing agent's radar cone.
- INOPPOSINGTEAMSWEAPONRANGE – This agent is in an opposing agent's weapon range.
- INDANGER – This agent is in an opposing agent's radar cone and in missile range.

Global Features:

- HASSEENOPPOSINGTEAM – This agent has observed an opposing agent.
- HASAGGRESSIVETENDENCIES – This agent has behaved aggressively.
- HASSELFPRESERVATIONTENDENCIES – This agent has avoided an opposing agent.
- HASDISENGAGED – This agent has reacted to an opposing agent by disengaging.
- HASINTERESTINOPPOSINGTEAM – This agent has reacted to an opposing agent.

Features have Boolean or percentage values. For example, the HASSEENOPPOSINGTEAM global feature is 'true' if the UAV observes an opposing team member in its radar. Conversely, FACINGOPPOSINGTEAM is a value that represents how much an opposing agent is facing this agent, calculated via relative bearing. This value is averaged over all opposing agents in the agent's radar that are also facing it. In the current system the global features are all Boolean while the time step features have percentage values. The set of behaviors includes All Out Aggressive (actions that imply a desire to destroy opposing team members regardless of the agent's own safety), Safety Aggressive (the same as All Out Aggressive except that the agent also considers its own safety), Passive (actions that do not engage with opposing team members but keep them in radar range), and Oblivious (actions that imply the agent does not know the hostile team exists).

Table 1: Feature Specific Weights

| Global Feature | Weight | Time Step Feature | Weight |
|---|---|---|---|
| Seen Opposing | 0.1 | Closing on Hostiles | 0.1 |
| Aggressive Tendencies | 0.3 | Is Facing Hostiles | 0.3 |
| Preservation Tendencies | 0.2 | In Radar Range | 0.1 |
| Has Disengaged | 0.2 | In Weapon Range | 0.2 |
| Interest in Opposing Team | 0.2 | In Danger | 0.3 |

3.3 Case Retrieval

To calculate the similarity between a query $q$ and a case $c$'s problem descriptions, we compute a weighted average from the sum of the distances between their matching global and time step features. Equation 1 displays the function for computing similarity, where $\sigma(w_f, q_f, c_f)$ is the weighted distance between two values for feature $f$, $N$ is the set of time step features, and $M$ is the set of global features.

$$\mathrm{sim}(q, c) = -\alpha \frac{\sum_{f \in N} \sigma(w_f, q_f, c_f)}{|N|} - \beta \frac{\sum_{f \in M} \sigma(w_f, q_f, c_f)}{|M|} \qquad (1)$$

We use a weight of $\alpha$ for time step features and $\beta$ for global features, where $\alpha \geq 0$, $\beta \geq 0$, and $\alpha + \beta = 1$. We also weighted individual features based on intuition and on feedback from initial experiments, as shown in Table 1. These feature weights remain static throughout the experiments in this paper.

In addition, we calculate a confidence factor $C_q$ for each query $q$. For a query $q$, all cases whose similarity exceeds a threshold $\tau_s$ are retrieved from the case base $\mathcal{L}$, and $C_q$ is then computed as a percentage of these retrieved cases.
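
The retrieval step described in this section can be sketched in Python. This is a minimal illustration under stated assumptions, not the TBM implementation: the paper does not define $\sigma$, so the sketch assumes it is the feature weight times the absolute difference of the two values; the pairing of the Table 1 weights with the feature identifiers of Section 3.2 is inferred from their names; and `Case`, `sigma`, `sim`, `retrieve`, and `recognize` are hypothetical names. Under this distance, Equation 1 as written yields non-positive scores (0 for identical problems), so any thresholds passed to the sketch are on that scale. The confidence computed in `recognize` follows Equation 2.

```python
from dataclasses import dataclass

# Per-feature weights from Table 1, keyed by the feature identifiers of
# Section 3.2 (the name pairing is an assumption of this sketch).
TIME_STEP_WEIGHTS = {
    "CLOSINGONOPPOSINGTEAM": 0.1,
    "FACINGOPPOSINGTEAM": 0.3,
    "INOPPOSINGTEAMSRADAR": 0.1,
    "INOPPOSINGTEAMSWEAPONRANGE": 0.2,
    "INDANGER": 0.3,
}
GLOBAL_WEIGHTS = {
    "HASSEENOPPOSINGTEAM": 0.1,
    "HASAGGRESSIVETENDENCIES": 0.3,
    "HASSELFPRESERVATIONTENDENCIES": 0.2,
    "HASDISENGAGED": 0.2,
    "HASINTERESTINOPPOSINGTEAM": 0.2,
}


@dataclass
class Case:
    time_step: dict     # time step feature name -> value in [0, 1]
    global_: dict       # global feature name -> 0.0 or 1.0
    solution: str = ""  # behavior label; left empty for a query


def sigma(w, q, c):
    # Assumed weighted distance: weight times absolute difference.
    return w * abs(q - c)


def sim(query, case, alpha=0.5, beta=0.5):
    # Equation 1 as written: negated, weighted mean feature distances.
    ts = sum(sigma(w, query.time_step[f], case.time_step[f])
             for f, w in TIME_STEP_WEIGHTS.items())
    gl = sum(sigma(w, query.global_[f], case.global_[f])
             for f, w in GLOBAL_WEIGHTS.items())
    return (-alpha * ts / len(TIME_STEP_WEIGHTS)
            - beta * gl / len(GLOBAL_WEIGHTS))


def retrieve(query, case_base, tau_s, alpha=0.5, beta=0.5):
    # (similarity, case) pairs with similarity above tau_s, best first.
    scored = [(sim(query, c, alpha, beta), c) for c in case_base]
    kept = [pair for pair in scored if pair[0] > tau_s]
    return sorted(kept, key=lambda pair: pair[0], reverse=True)


def recognize(query, case_base, tau_s, tau_c, alpha=0.5, beta=0.5):
    # Returns (behavior, confidence). The behavior is "unknown" when no
    # case is retrieved or the confidence (Equation 2) is below tau_c.
    retrieved = retrieve(query, case_base, tau_s, alpha, beta)
    if not retrieved:
        return "unknown", 0.0
    best = retrieved[0][1].solution
    agree = sum(1 for _, c in retrieved if c.solution == best)
    confidence = agree / len(retrieved)
    return (best if confidence >= tau_c else "unknown"), confidence
```

A call such as `recognize(q, case_base, tau_s=-0.05, tau_c=0.1)` returns the behavior of the most similar case together with the share of retrieved cases that agree with it; the threshold values in this call are illustrative only and are not the settings used in the experiments.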

The confidence factor $C_q$ is the percentage of the retrieved cases whose solution is the same as that of the most similar case $c_1$ (denoted $c_1.s$):

$$C_q = \frac{|\{c \in \mathcal{L} \mid \mathrm{sim}(q, c) > \tau_s \wedge c.s = c_1.s\}|}{|\{c \in \mathcal{L} \mid \mathrm{sim}(q, c) > \tau_s\}|} \qquad (2)$$

If no cases are retrieved or the $C_q$ of the most similar case is below a confidence threshold $\tau_c$, then the solution is labeled as unknown. We set $\tau_c$ low (see Section 4.1) so that a solution is returned even when the CBBR is not confident. The confidence factor is used only in the retrieval process in the current CBBR system. In future work, we will extend the system to reason over the confidence factor.

3.4 Case Acquisition

Cases are acquired by running a BVR simulation with CBBR in acquisition mode. The simulation is run using the same pool of experimental scenarios as the empirical study but with different random trials (see Section 4.1). During a run, the acquisition system receives perfect state information as well as each agent's actual behavior. Unfortunately, these behavior labels are not necessarily accurate for the whole duration of the simulation. For example, most behaviors are similar to All Out Aggressive until the agent performs an identifying action that differentiates it (e.g., a drag). This is a limitation of the current acquisition system, as it can create cases with incorrectly labeled behaviors. We address this issue during case pruning in Section 3.5.

3.5 Case Pruning

To constrain the size of the case base, and to perform case base maintenance, cases are pruned from $\mathcal{L}$ after all cases are constructed (Smyth and Keane 1995). We prune $\mathcal{L}$ by removing (1) pairs of cases that have similar problems but distinct solutions and (2) redundant cases. Similarity is computed using Equation 1. If the problem $c.p$ of a case $c \in \mathcal{L}$ is used as query $q$, and any cases $c' \in C' \subseteq \mathcal{L}$ are retrieved such that $c.s = c'.s$ and $\mathrm{sim}(c.p, c'.p) > \tau_b$ (for a similarity threshold $\tau_b$), then a representative case is randomly selected and retained from $C'$ and the rest are removed from $\mathcal{L}$. In the future we plan to retain the most common case instead of a random case from $C'$. If instead any case $c' \in \mathcal{L}$ has a different solution than $c.s$ (i.e., $c.s \neq c'.s$), then both cases $c'$ and $c$ are removed if $\mathrm{sim}(c.p, c'.p) > \tau_d$.

As discussed in Section 3.4, the pruning algorithm must take into account the limitations of the acquisition system, which generally results in All Out Aggressive cases being mislabeled. When pruning cases with different solutions we check a final threshold $\tau_a$. If the similarity of a retrieved case $c'$ is such that $\mathrm{sim}(c.p, c'.p) > \tau_a$ and the solution of one of the cases is All Out Aggressive, then that case is retained in $\mathcal{L}$ while the other is pruned.

4. Empirical Study

4.1 Experimental Design

Our design focuses on two hypotheses:

H1: CBBR's recognition accuracy will exceed that of the baseline.

H2: CBBR's recognition accuracy is higher than that of an ablation that does not use global features.

To test our hypotheses we compared algorithms using recognition accuracy. Recognition accuracy is the fraction of time the algorithm recognized the correct behavior during the fair duration, which is the period of time in which it is possible to differentiate two behaviors. We use fair duration rather than total duration because it is not always possible to recognize an agent's behavior before it completes an action. One example of this is that Safety Aggressive acts exactly like All Out Aggressive until the agent performs a drag action. Thus, in this example, we should not assess performance until the observed agent has performed a drag action. To calculate the fair duration, defining actions were identified for each behavior. Defining actions were then logged during each trial when an agent performed that action.

We tested the CBBR component with eleven settings of $\alpha$ and $\beta$, each summing to 1, and pruned the case base for each CBBR variant using its corresponding weights. We compare against the baseline behavior recognizer Random, which randomly selects a behavior every 60 seconds of simulation time.

We ran our experiments with 10 randomized test trials drawn from each of the 3 base scenarios shown in Figure 2. In these scenarios one of the blue agents is the UAV while the other is running one of the behaviors. These scenarios reflect different tactics described by subject matter experts, and are representative of real-world BVR scenarios. In Scenario 1 the hostiles and friendlies fly directly at each other. In Scenarios 2 and 3 the hostiles instead perform an offset maneuver.

Figure 2: Prototypes for the Empirical Study's Scenarios
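
The recognition accuracy measure of this section can be sketched as follows. This is a minimal illustration under stated assumptions rather than the evaluation code used in the study: the recognizer's output is taken to be a time-sorted list of `(time, label)` pairs in which each label holds until the next entry, and the fair duration is taken to run from the time the agent's defining action was logged (`fair_start`) to the end of the trial. The function and parameter names are hypothetical.

```python
def recognition_accuracy(predictions, true_behavior, fair_start, trial_end):
    """Fraction of the fair duration during which the label was correct.

    predictions: list of (time, label) pairs sorted by time; each label
    is assumed to hold until the next entry (or until trial_end).
    """
    fair_duration = trial_end - fair_start
    if fair_duration <= 0:
        raise ValueError("the fair duration must be positive")

    correct_time = 0.0
    for i, (start, label) in enumerate(predictions):
        # A label holds until the next prediction or the end of the trial.
        if i + 1 < len(predictions):
            end = predictions[i + 1][0]
        else:
            end = trial_end
        # Clip the segment to the fair window [fair_start, trial_end].
        lo = max(start, fair_start)
        hi = min(end, trial_end)
        if hi > lo and label == true_behavior:
            correct_time += hi - lo
    return correct_time / fair_duration
```

For instance, with labels changing at 0 s, 120 s, and 300 s, a true behavior of Safety Aggressive recognized only between 120 s and 300 s, a drag logged at 100 s, and a 500 s trial, the correct label is held for 180 of the 400 fair seconds, giving an accuracy of 0.45. Time before `fair_start` is ignored, which mirrors the point that Safety Aggressive cannot be told apart from All Out Aggressive before the drag.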

Figure 3: CBBR's average recognition accuracies per behavior for each combination of weight settings tested (x-axis: CBBR (α, β) from (1, 0) to (0, 1) in steps of 0.1; y-axis: recognition accuracy; series: ALL_OUT_AGGRESSIVE, SAFETY_AGGRESSIVE, PASSIVE, OBLIVIOUS)

The offset maneuver in Scenarios 2 and 3 is a flanking maneuver from the right and left of the friendlies, respectively. For each trial the starting position, starting heading, and behavior of the agents were randomized within bounds to ensure valid scenarios, which are scenarios where the hostile agents nearly always enter radar range of the UAV. (Agents operating outside of the UAV's radar range cannot be sensed by the UAV, which prevents behavior recognition.) During the case acquisition process we created case bases from a pool using the same base scenarios used during the experiment but with different randomized trials.

We set the pruning thresholds as follows: $\tau_b = 0.97$, $\tau_d = 0.973$, and $\tau_a = 0.99$. The thresholds for the similarity calculation were set at $\tau_c = 0.1$ and $\tau_s = 0.8$. These values were hand-tuned based on insight from subject matter experts and experimentation. In the future we plan to tune these weights using an optimization algorithm.

Figure 4: Comparing the average recognition accuracies of CBBR, using equal global weights, with the Random baseline (panel title: CBBR 0.5, 0.5 vs Baseline; categories: All Out Aggressive, Safety Aggressive, Passive, Oblivious; series: CBBR, Random)

4.2 Results

We hypothesized that the CBBR algorithm would increase recognition performance in comparison to the baseline (H1) and that recognition performance should be higher than an ablation that does not use global features (H2). Figure 3 displays the results for recognition accuracy. When testing CBBR with a variety of global weight settings, its best performance was obtained when $\alpha = 0.5$ and $\beta = 0.5$, which has an average recognition accuracy of 0.55 over all behaviors (Figure 4), whereas this average is only 0.50 when $\alpha = 1.0$ and $\beta = 0.0$. Figure 4 also shows that CBBR with $\alpha = 0.5$ and $\beta = 0.5$ outperforms Random for behavior recognition accuracy. Another observation (from Figure 3) is that the Safety Aggressive behavior clearly relies on time step features.

Figure 5: Average recognition accuracy (with standard error bars) for all behaviors versus simulation time (panel title: Recognition Accuracy for All Behaviors; x-axis: 0 to 600; y-axis: 0 to 1)

The experimental results support H1; for all values of $\alpha$ and $\beta$, CBBR had better recognition accuracy than Random on a paired t-test ($p < 0.08$). In particular, CBBR with weights $\alpha = 0.5$ and $\beta = 0.5$ significantly outperformed Random on a paired t-test ($p < 0.01$) for all behaviors.

The results also provide some support for H2. In particular, we found that the average recognition accuracy of CBBR when $\alpha = 0.5$ and $\beta = 0.5$ is significantly higher than when $\alpha = 1.0$ and $\beta = 0.0$ ($p < 0.04$). This suggests that the inclusion of global features, when weighted appropriately, increases recognition performance.

Figure 5 displays CBBR's ($\alpha = 0.5$ and $\beta = 0.5$) average recognition accuracy with standard error bars (across all behaviors) as the fair recognition time increases. This showcases how recognition accuracy varies with more observation time, and in particular demonstrates the impact of global features, given that their values accrue over time (unlike the time step features). In more detail, recognition accuracy starts out the same as would be expected of a random guess among the four behaviors (0.25), increases
over time, and finally becomes erratic due to the spurious "triggering" of the global features. For example, an agent that is Safety Aggressive may, during its execution, be within radar range and missile range of another agent while actively trying to disengage (i.e., without meaning to be), and will then be categorized as All Out Aggressive because it triggered the INDANGER feature.

5. Conclusion

We presented a case-based behavior recognition (CBBR) algorithm for the real-time recognition of agent behaviors in simulations of Beyond Visual Range (BVR) air combat. In an initial empirical study, we found that CBBR outperforms a baseline strategy, and that memory of agent behavior can increase its performance.

Our CBBR algorithm has two main limitations. First, it does not perform online learning. For our domain, this may be appropriate until we can provide guarantees on the learned behavior, which is a topic for future research. Second, CBBR cannot recognize behaviors it has not previously encountered. To partially address this, we plan to conduct further interviews with subject matter experts to identify other likely behaviors that may arise during BVR combat scenarios.

Our future work includes completing an integration of our CBBR algorithm as a component within a larger goal reasoning (Aha et al. 2013) system, where it will be used to provide a UAV's agent with state expectations that will be compared against its state observations. Whenever expectation violations arise, the GR system will be triggered to react, perhaps by setting a new goal for the UAV to pursue. We plan to test the CBBR's contributions in this context in the near future.

Acknowledgements

Thanks to OSD ASD (R&E) for sponsoring this research. The views and opinions contained in this paper are those of the authors and should not be interpreted as representing the official views or policies, either expressed or implied, of NRL or OSD. Additional thanks to Josh Darrow and Michael Floyd for their insightful conversations/feedback.

References

Aha, D.W., Molineaux, M., & Ponsen, M. (2005). Learning to win: Case-based plan selection in a real-time strategy game. Proceedings of the Sixth International Conference on Case-Based Reasoning (pp. 5-20). Chicago, IL: Springer.

Aha, D.W., Cox, M.T., & Muñoz-Avila, H. (Eds.) (2013). Goal reasoning: Papers from the ACS Workshop (Technical Report CS-TR-5029). College Park, MD: University of Maryland, Department of Computer Science.

Auslander, B., Apker, T., & Aha, D.W. (2014). Case-based parameter selection for plans: Coordinating autonomous vehicle teams. Proceedings of the Twenty-Second International Conference on Case-Based Reasoning (pp. 189-203). Cork, Ireland: Springer.

Auslander, B., Lee-Urban, S., Hogg, C., & Muñoz-Avila, H. (2008). Recognizing the enemy: Combining reinforcement learning with strategy selection using case-based reasoning. Proceedings of the Ninth European Conference on Case-Based Reasoning (pp. 59-73). Trier, Germany: Springer.

Borck, H., Karneeb, J., Alford, R., & Aha, D.W. (2014). Case-based behavior recognition to facilitate planning in unmanned air vehicles. In S. Vattam & D.W. Aha (Eds.) Case-Based Agents: Papers from the ICCBR Workshop (Technical Report). Cork, Ireland: University College Cork.

Jaidee, U., Muñoz-Avila, H., & Aha, D.W. (2013). Case-based goal-driven coordination of multiple learning agents. Proceedings of the Twenty-First International Conference on Case-Based Reasoning (pp. 164-178). Saratoga Springs, NY: Springer.

Kabanza, F., Bellefeuille, P., Bisson, F., Benaskeur, A.R., & Irandoust, H. (2014). Opponent behavior recognition for real-time strategy games. In G. Sukthankar, R.P. Goldman, C. Geib, D.V. Pynadath, & H.H. Bui (Eds.) Plan, Activity, and Intent Recognition. Amsterdam, The Netherlands: Elsevier.

Laviers, K., Sukthankar, G., Klenk, M., Aha, D.W., & Molineaux, M. (2009). Opponent modeling and spatial similarity to retrieve and reuse superior plays. In S.J. Delany (Ed.) Case-Based Reasoning for Computer Games: Papers from the ICCBR Workshop (Technical Report 7/2009). Tacoma, WA: University of Washington Tacoma, Institute of Technology.

Nielsen, P., Smoot, D., & Dennison, J.D. (2006). Participation of TacAir-Soar in RoadRunner and Coyote exercises at Air Force Research Lab, Mesa AZ (Technical Report). Ann Arbor, MI: Soar Technology Inc.

Rao, A.S., & Murray, G. (1994). Multi-agent mental-state recognition and its application to air-combat modelling. In M. Klein (Ed.) Distributed Artificial Intelligence: Papers from the AAAI Workshop (Technical Report WS-94-02). Menlo Park, CA: AAAI Press.

Smith, R.E., Dike, B.A., Mehra, R.K., Ravichandran, B., & El-Fallah, A. (2000). Classifier systems in combat: Two-sided learning of maneuvers for advanced fighter aircraft. Computer Methods in Applied Mechanics and Engineering, 186(2), 421-437.

Smyth, B., & Keane, M.T. (1995). Remembering to forget. Proceedings of the Fourteenth International Joint Conference on Artificial Intelligence (pp. 377-382). Montreal, Quebec: AAAI Press.

Sukthankar, G., & Sycara, K. (2006). Simultaneous team assignment and behavior recognition from spatio-temporal agent traces. Proceedings of the Twenty-First National Conference on Artificial Intelligence (pp. 716-721). Boston, MA: AAAI Press.

Sukthankar, G., & Sycara, K.P. (2011). Activity recognition for dynamic multi-agent teams. ACM Transactions on Intelligent Systems Technology, 18, 1-24.

Weber, B.G., Mateas, M., & Jhala, A. (2010). Case-based goal formulation. In D.W. Aha, M. Klenk, H. Muñoz-Avila, A. Ram, & D. Shapiro (Eds.) Goal-Directed Autonomy: Notes from the AAAI Workshop (W4). Atlanta, GA: AAAI Press.


- Kim Object Tracking in A Video SequenceDocument5 pagesKim Object Tracking in A Video Sequencespylife48Pas encore d'évaluation

- Learning Driven Coarse-to-Fine Articulated Robot TrackingDocument7 pagesLearning Driven Coarse-to-Fine Articulated Robot TrackingArun KumarPas encore d'évaluation

- Poster SampleDocument1 pagePoster SampleAnoop R KrishnanPas encore d'évaluation

- Particle Swarm OptimisationDocument16 pagesParticle Swarm OptimisationdoraemonPas encore d'évaluation

- Artificial Intelligence: COMP-241, Level-6Document10 pagesArtificial Intelligence: COMP-241, Level-6ابراهيم آلحبشيPas encore d'évaluation

- Estimating Pilots' Cognitive Load From Ocular ParametersDocument16 pagesEstimating Pilots' Cognitive Load From Ocular Parametersyifan hePas encore d'évaluation

- Ramezani 2020Document7 pagesRamezani 2020Nguyễn PhúcPas encore d'évaluation

- Requirements For Laser Countermeasures Against Imaging SeekersDocument11 pagesRequirements For Laser Countermeasures Against Imaging SeekersBernie CanecattivoPas encore d'évaluation

- ROS Based SLAM Implementation For Autonomous Navigation Using TurtlebotDocument5 pagesROS Based SLAM Implementation For Autonomous Navigation Using TurtlebotMohammed El SisiPas encore d'évaluation

- Cooperative Multi-Robot Estimation and Control For Radio Source LocalizationDocument12 pagesCooperative Multi-Robot Estimation and Control For Radio Source LocalizationMarinelPas encore d'évaluation

- KPCA+LDA IEEE PapersDocument5 pagesKPCA+LDA IEEE PapersArunadevi RajmohanPas encore d'évaluation

- Towards Explainability in Modular Autonomous Vehicle SoftwareDocument8 pagesTowards Explainability in Modular Autonomous Vehicle SoftwarevadavaskiPas encore d'évaluation

- IROS 2020 Robust, Perception Based Control With QuadrotorsDocument7 pagesIROS 2020 Robust, Perception Based Control With QuadrotorsFouad YacefPas encore d'évaluation

- 2023 CapDocument9 pages2023 Capg4w85z29fnPas encore d'évaluation

- A Reactive Trajectory Controller For Object Manipulation in Human Robot InteractionDocument11 pagesA Reactive Trajectory Controller For Object Manipulation in Human Robot InteractionChristy PollyPas encore d'évaluation

- Inteligence Agents ArchitectureDocument12 pagesInteligence Agents ArchitectureReshma DharmarajPas encore d'évaluation

- Kshitij SynopsisDocument8 pagesKshitij SynopsisJADHAV KUNALPas encore d'évaluation

- Rotation-Invariant Infrared Aerial Target Identification Based On SRCDocument9 pagesRotation-Invariant Infrared Aerial Target Identification Based On SRCapplebao1022Pas encore d'évaluation

- DR Track: Towards Real-Time Visual Tracking For UAV Via Distractor Repressed Dynamic RegressionDocument8 pagesDR Track: Towards Real-Time Visual Tracking For UAV Via Distractor Repressed Dynamic RegressionYiming LiPas encore d'évaluation

- Decentralized Coordinated Tracking With Mixed Discrete-Continuous Decisions Rob.21471Document24 pagesDecentralized Coordinated Tracking With Mixed Discrete-Continuous Decisions Rob.21471Mehmed BrkićPas encore d'évaluation

- 10 3390@drones3030058Document14 pages10 3390@drones3030058XxgametrollerxXPas encore d'évaluation

- Technology and The SpiritDocument156 pagesTechnology and The SpiritAmitabh PrasadPas encore d'évaluation

- Magic and Medieval SocietyDocument182 pagesMagic and Medieval SocietyAmitabh PrasadPas encore d'évaluation

- Magic and Medieval SocietyDocument182 pagesMagic and Medieval SocietyAmitabh PrasadPas encore d'évaluation

- Guide To The PsychologyDocument2 pagesGuide To The PsychologyAmitabh PrasadPas encore d'évaluation

- Broadway Song CompanionDocument332 pagesBroadway Song CompanionAmitabh Prasad67% (3)

- Air To Air ReportDocument76 pagesAir To Air ReportAvi DasPas encore d'évaluation

- WhatsApp Encryption Overview Technical White PaperDocument12 pagesWhatsApp Encryption Overview Technical White Paperjuanperez23Pas encore d'évaluation

- Censorship in Fascist Italy, 1922-43 Policies, Procedures and ProtagonistsDocument261 pagesCensorship in Fascist Italy, 1922-43 Policies, Procedures and ProtagonistsAmitabh PrasadPas encore d'évaluation

- 21 ST Century Air-To-Air Short Range Weapon RequirementsDocument38 pages21 ST Century Air-To-Air Short Range Weapon RequirementsAmitabh PrasadPas encore d'évaluation

- Iskcon TemplesDocument1 pageIskcon TemplesAmitabh PrasadPas encore d'évaluation

- Analytical Modeling of Beyond Visual Range Air CombatDocument13 pagesAnalytical Modeling of Beyond Visual Range Air CombatAmitabh PrasadPas encore d'évaluation

- Advances in Algebraic Geometry Codes PDFDocument453 pagesAdvances in Algebraic Geometry Codes PDFAmitabh Prasad100% (1)

- Case-Based Behavior Recognition in Beyond Visual Range Air CombatDocument6 pagesCase-Based Behavior Recognition in Beyond Visual Range Air CombatAmitabh PrasadPas encore d'évaluation

- Promise and Reality... Beyond Visual Range (BVR) Air-To-Air CombatDocument21 pagesPromise and Reality... Beyond Visual Range (BVR) Air-To-Air CombatManuel SolisPas encore d'évaluation

- Bourdieu Piere Art RulesDocument225 pagesBourdieu Piere Art RulesFuensanta Donaji100% (1)

- Dropout: A Simple Way To Prevent Neural Networks From Over TtingDocument30 pagesDropout: A Simple Way To Prevent Neural Networks From Over TtingJosephPas encore d'évaluation

- Pango SVC Manual EngDocument35 pagesPango SVC Manual EngYeow YeowoeyPas encore d'évaluation

- Guitar Fretboard MapDocument2 pagesGuitar Fretboard MapAmitabh PrasadPas encore d'évaluation

- Learning Every Note On The Guitar: A System For Building Your Own Mental Map of The Guitar FretboardDocument15 pagesLearning Every Note On The Guitar: A System For Building Your Own Mental Map of The Guitar Fretboardnaveenmanuel8879Pas encore d'évaluation

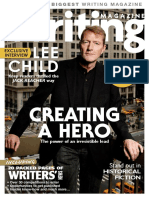

- Writing Magazine - January 2017 PDFDocument111 pagesWriting Magazine - January 2017 PDFAmitabh PrasadPas encore d'évaluation

- Lessons On PresentationDocument33 pagesLessons On PresentationSarfaraj AhmedPas encore d'évaluation

- Guitar Blank Fretboard Charts 15 Frets With Inlays PDFDocument1 pageGuitar Blank Fretboard Charts 15 Frets With Inlays PDFAmitabh PrasadPas encore d'évaluation

- NCERT Hindi Class 5 HindiDocument147 pagesNCERT Hindi Class 5 HindiVijay Kumar100% (1)

- Tutorial 11Document6 pagesTutorial 11sumendersinghPas encore d'évaluation

- Dropout: A Simple Way To Prevent Neural Networks From Over TtingDocument30 pagesDropout: A Simple Way To Prevent Neural Networks From Over TtingJosephPas encore d'évaluation

- Writing Magazine - January 2017 PDFDocument111 pagesWriting Magazine - January 2017 PDFAmitabh PrasadPas encore d'évaluation

- Notes On The FretboardDocument1 pageNotes On The FretboardAmitabh PrasadPas encore d'évaluation

- Understanding by Design Handbook PDFDocument302 pagesUnderstanding by Design Handbook PDFAmitabh Prasad100% (10)
