
Volume 4, Issue 4, April – 2019 International Journal of Innovative Science and Research Technology

ISSN No:-2456-2165

Image Captioning and Image Retrieval

Ankit Kumar, Kinshuk Kumar, Meenu Garg (Assistant Professor)

Department of Information Technology

Maharaja Agrasen Institute of Technology (GGSIPU)

Delhi, India

Abstract:- Most images do not come with a description. Humans can largely understand them without detailed captions, but a machine needs some form of description of the image. In image captioning, a textual description of an image is generated. These captions can be used for various purposes such as automatic image indexing. Image indexing is an important part of Content-Based Image Retrieval (CBIR) and hence can be applied to many areas, including biomedicine, commerce, the military, education, digital libraries, and web searching. We have divided the task into two parts: an image-based model, which extracts the content of the image and for which we have used a CNN, and a language model, which translates the extracted features into sentences and for which we have used an RNN (LSTM). The applicative part of this model is image retrieval, both text-based (TBIR) and content-based (CBIR). In TBIR, a keyword (text) is used to perform the image search, whereas in CBIR an image is used as the search object. We used the Flickr8k dataset, which consists of 8,000 images along with their captions, to train our model. For evaluation we used the BLEU metric. BLEU (Bilingual Evaluation Understudy) is a metric used to measure the quality of machine-generated text.

Keywords:- CNN, RNN, LSTM, TBIR, CBIR, BLEU.

I. INTRODUCTION

Image captioning means generating a description of an image in the form of text. Image captioning requires understanding and recognition of objects. It needs a computer vision model, or encoder, to understand the content of the image, and a language model, or decoder, from natural language processing to convert that understanding into words in the correct order. What is most impressive about these methods is that a single end-to-end model can be defined to predict a caption, given a photo, instead of requiring complicated data preparation. In deep learning based techniques, features are learned automatically from the training data, and large and diverse sets of images can be handled. We have used the pre-trained VGG-16 model, a top-performing entry in the ImageNet (ILSVRC 2014) challenge. The vanilla RNN suffers from the vanishing gradient problem, so we have used an LSTM instead. The LSTM is capable of keeping track of the objects that have already been described in the text. While generating captions, the image information is included in the initial state of the LSTM. Each next word is generated based on the previous hidden state and the input at the current time step. This process is repeated until the model produces the ending token of the sentence. We have used the Flickr8k dataset for training our model because it is small enough to be loaded completely into RAM in one go. BLEU (Bilingual Evaluation Understudy) is a metric used to measure the quality of machine-generated text. The central idea behind BLEU is to measure how close the text generated by the machine is to that of a human translator. However, grammatical correctness is not taken into account. In the image retrieval part, images are searched on the basis of the captions generated by the image captioning model. In text-based image retrieval, the user inputs a keyword and the search operation is performed on the image database, whereas in content-based image retrieval an image is used for performing the search operation.

II. RELATED WORK

Krizhevsky et al. [1] proposed a neural network that uses non-saturating neurons and a very efficient GPU implementation of the convolution operation. They employed a regularization technique called dropout, which reduces overfitting on the training dataset. Deng et al. [2] proposed a new database which they called ImageNet. ImageNet is an extensive collection of images that organizes the different classes of images in a densely populated semantic hierarchy. Karpathy and Fei-Fei [3] made use of datasets of images and their textual descriptions in order to learn the correspondences between visual data and language. They described a Multimodal Recurrent Neural Network architecture that employs the inferred alignments of features in order to learn how to generate novel descriptions of images. Yang et al. [4] implemented a system for the automatic generation of a natural language description of an image, which helps greatly in image understanding. The proposed multimodal neural network, consisting of object detection and localization modules, is very similar to the human visual system and is able to learn how to describe the content of images automatically. Aneja et al. [5] proposed a convolutional neural network model, inspired by work on machine translation and conditional image generation, in order to address the complexity of LSTMs. Xu et al. [6] proposed an attention-based model that learned to describe image regions automatically. The model was trained using backpropagation techniques. It was able to recognize object boundaries and at the same time generate an accurate description. Flickr8k [7] is a widely used dataset that consists of about 8,000 images taken from Flickr.com. The dataset consists of 8,092 photographs in JPEG format and each image in the dataset has 5 corresponding captions

IJISRT19AP690 www.ijisrt.com 909


annotated by humans. The dataset is divided into three disjoint sets: a pre-defined training dataset containing 6,000 images, and a development dataset and a test dataset containing 1,000 images each. The BLEU (bilingual evaluation understudy) [8] metric is used for evaluating text that has been translated by a machine from one language to another. The central idea behind BLEU is to measure how close the text generated by the machine is to that of a human translator. In estimating the overall quality of the generated text, the computed scores are averaged. However, grammatical correctness is not taken into account. The BLEU metric ranges from 0 to 1. Sepp Hochreiter and Jürgen Schmidhuber [9] describe the LSTM, a type of recurrent neural network that has special hidden units in addition to standard units. An LSTM unit is a memory cell that can preserve information in memory for long durations; our LSTM layer consists of 256 hidden states. The LSTM solves the vanishing gradient problem of the vanilla RNN. Huang and Dai [10] proposed a texture-based image retrieval system which combines the wavelet decomposition and the gradient vector. Lin et al. [11] proposed a method using colour-texture and colour-histogram features for image retrieval. The model uses three distinct features, namely image colour, image texture and colour distribution. Hiremath and Pujari [12] proposed a content-based image retrieval system that utilises colour, texture and shape features and divides the image into a matrix. Zhang [13] proposed a method in which colour and texture features are computed for every image in the database. For any query image these features are calculated, the images are ranked according to the colour features, and the top-ranked images are then ranked again according to the texture features.

III. DATASET

Various datasets are used for training, testing, and evaluation of image captioning methods. The datasets differ in various respects such as the number of images, the number of captions per image, the format of the captions, and the image size. Some of the popular datasets used for training are Flickr8k, Flickr30k, MS COCO, the Instagram dataset, Stock3M, and FlickrStyle10k.

We have used the Flickr8k dataset for training our model because it is small enough to be loaded completely into RAM in one go. The dataset consists of 8,092 photographs in JPEG format and each image in the dataset has 5 corresponding captions annotated by humans. The dataset is divided into three disjoint sets: a pre-defined training dataset containing 6,000 images, and a development dataset and a test dataset containing 1,000 images each.

IV. EVALUATION METRIC

The BLEU (bilingual evaluation understudy) metric is used for evaluating text that has been translated by a machine from one language to another. The central idea behind BLEU is to measure how close the text generated by the machine is to that of a human translator. In estimating the overall quality of the generated text, the computed scores are averaged. However, grammatical correctness is not taken into account. The performance of the BLEU metric varies depending on the number of reference translations and the size of the generated text. The BLEU metric ranges from 0 to 1. A score closer to 1 means that the machine-generated and human-annotated texts are similar, with 1 being a perfect match, and a score of 0 is a complete mismatch. This evaluation method was proposed by Papineni et al. [8]. Since it is inexpensive to calculate and language independent, it is widely accepted and used.

The following equation, given here in its standard form from [8], is used to calculate the BLEU score:

$$\mathrm{BLEU} = BP \cdot \exp\left(\sum_{n=1}^{N} w_n \log p_n\right), \qquad BP = \begin{cases} 1 & \text{if } c > r \\ e^{1 - r/c} & \text{if } c \le r \end{cases}$$

where $p_n$ is the modified n-gram precision, $w_n$ are positive weights that sum to 1, $c$ is the length of the candidate text, and $r$ is the effective reference length.

V. ENCODER-DECODER ARCHITECTURE

Our model is a neural network-based image captioning model which works in an end-to-end manner. Image features are extracted by the VGG-16 model, which is a 16-layer model, and then fed into an LSTM to generate a sequence of words, which are later used to form meaningful sentences. We describe the model in three parts:

A. Photo Feature Extractor
This is a 16-layer VGG model pre-trained on the ImageNet dataset. We have pre-processed the photos with the VGG model. We have removed the last layer, as we are using the network for feature extraction and not for classification. The features extracted by the model are later fed to the LSTM. These features can be used as an interpretation of a given image in the dataset. The extracted features form a 4,096-element vector, which is then processed by a Dense layer to produce a 256-element representation of the photo.

B. Sequence Processor
This is a word embedding layer followed by an LSTM recurrent neural network layer with 256 memory units. The function of the sequence processor is to handle the text input. The embedding layer maps the words of the text to feature vectors and uses a mask to ignore padded values. This is followed by an LSTM layer containing 256 hidden states. A dropout layer is included to reduce overfitting on the training dataset.

C. Decoder
The final phase of the model merges the outputs of the feature extractor and the sequence processor, which are later processed by a 256-neuron layer to make a final prediction. The networks are merged simply by adding the two vectors of size 256. The result is then fed to two Dense layers: a 256-neuron layer and a final output layer that makes a softmax prediction over the entire output vocabulary in order to predict the next word in the sequence.
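As a rough illustration of the decoder step in Section V-C, the sketch below merges a 256-element photo vector and a 256-element sequence vector by addition and applies a softmax over a toy vocabulary. It is a minimal pure-Python sketch, not the authors' implementation: the function names, the random weights, the five-word vocabulary, and the omission of the intermediate 256-neuron Dense layer are all assumptions made for brevity.

```python
import math
import random


def add_vectors(a, b):
    """Merge step: element-wise sum of two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return [x + y for x, y in zip(a, b)]


def dense(vec, weights, bias):
    """Fully connected layer: one output value per row of `weights`."""
    return [sum(w * x for w, x in zip(row, vec)) + b
            for row, b in zip(weights, bias)]


def softmax(logits):
    """Numerically stable softmax over a list of scores."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_next_word(photo_vec, seq_vec, weights, bias, vocab):
    """Add the two branches, score every vocabulary word, pick the best."""
    merged = add_vectors(photo_vec, seq_vec)
    probs = softmax(dense(merged, weights, bias))
    best = max(range(len(vocab)), key=probs.__getitem__)
    return vocab[best], probs


# Toy inputs (hypothetical): random vectors stand in for trained layers.
rng = random.Random(0)
vocab = ["startseq", "dog", "running", "grass", "endseq"]
photo_vec = [rng.uniform(-1, 1) for _ in range(256)]
seq_vec = [rng.uniform(-1, 1) for _ in range(256)]
weights = [[rng.uniform(-0.1, 0.1) for _ in range(256)] for _ in vocab]
bias = [0.0] * len(vocab)

word, probs = predict_next_word(photo_vec, seq_vec, weights, bias, vocab)
print(word, round(sum(probs), 6))
```

Because the weights are random, the predicted word carries no meaning here; the point is only the data flow of merge, Dense layer, and softmax.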

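The word-by-word generation described in the Introduction, which repeats until the ending token is produced, can be sketched as a greedy loop. This is an illustrative sketch under stated assumptions: `generate_caption`, `toy_predict_next`, and the `max_length` cut-off are hypothetical names and values, and the scripted predictor merely stands in for the trained model. The 'startseq' and 'endseq' markers are the ones the implementation uses to delimit captions.

```python
def generate_caption(predict_next, photo_features, max_length=20):
    """Greedy decoding: ask for one word at a time until 'endseq'.

    `predict_next(photo_features, words)` is assumed to return the most
    likely next word given the photo and the words generated so far.
    """
    words = ["startseq"]
    for _ in range(max_length):
        next_word = predict_next(photo_features, words)
        words.append(next_word)
        if next_word == "endseq":
            break
    # Strip the marker tokens before returning the caption.
    return " ".join(w for w in words if w not in ("startseq", "endseq"))


def toy_predict_next(photo_features, words):
    """Stand-in for the trained model: replays a fixed caption."""
    script = ["dog", "is", "running", "through", "the", "grass", "endseq"]
    position = len(words) - 1  # number of words generated so far
    return script[position] if position < len(script) else "endseq"


caption = generate_caption(toy_predict_next, photo_features=None)
print(caption)
```

The `max_length` guard matters in practice: a model that never emits the end marker would otherwise loop forever.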


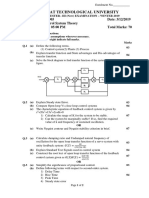

VI. IMPLEMENTATION

A. Image Captioning
First, the “image features” are pre-computed using the pre-trained model and saved to a file. These features are then loaded and fed into the model as an interpretation of each photo in the dataset. So instead of running every photo through the full VGG model, we use the pre-computed image features, which gives the same result. This makes the model faster and reduces its memory consumption. The last layer of the CNN model was removed since classification is not required in image captioning. The outputs of the remaining layers are the “features” that the model has extracted from the photo. The following steps were taken for description cleaning:

- All the words were converted to lowercase.
- All punctuation was removed.
- All words that are one character or less in length were removed.
- All words containing numbers were removed.

The model was trained on the photos and captions present in the dataset. The model we have developed generates a caption for a given photo. We used the strings 'startseq' and 'endseq' to mark the start and end of each caption. The model was then evaluated using the BLEU score and finally used to generate new captions.

B. Retrieval of Image

Database and Description Generation

All the images on which the search queries are to be processed are placed in a separate folder. This folder serves as the database for the search query. The directory of the folder is specified and captions are generated for each image using the image captioning model. These captions are stored in the description.txt file, which is used for retrieving images as per the search queries. This description.txt file contains the textual description of each image along with the filename of the image.

Text based Image Retrieval

First, the user enters the keyword for the search. This keyword is then searched for in the descriptions stored in the file description.txt. If any match is found, all the images corresponding to the keyword are shown as the result using the Matplotlib library.

Content based Image Retrieval

In CBIR, instead of using a keyword entered by the user, an image is used for performing the search operation. The search image is passed through the image description model, which generates a textual description of the image. All the stopwords are removed from the generated description and only the main keywords are used. These keywords are then matched against the description.txt file. If any match is found, all the images corresponding to the keywords are shown as the result using the Matplotlib library.

VII. RESULTS

We implemented the model and it was able to generate captions successfully. Fig 1 shows the input image along with the predicted caption, "dog is running through the grass". Fig 2 shows a test image given to the model in order to retrieve similar images; the model extracts the features, generates keywords like "dog" and "water", and returns images on that basis. Fig 3 and Fig 4 are the retrieved images. Fig 5 shows the evaluated BLEU score of our model. Fig 6 shows a graph of accuracy and validation loss.

Fig 1:- Input Image

Fig 2:- Test Image

Fig 3:- Retrieved Image
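The four description-cleaning steps listed in Section VI-A, together with the 'startseq' and 'endseq' markers, can be sketched with the Python standard library as follows. The function names are hypothetical and the example sentence is invented for illustration; this is one plausible reading of the steps, not the authors' code.

```python
import string

# Translation table that deletes every ASCII punctuation character.
_PUNCT_TABLE = str.maketrans("", "", string.punctuation)


def clean_description(text):
    """Apply the four cleaning steps to one caption."""
    words = text.lower().split()                         # 1. lowercase
    words = [w.translate(_PUNCT_TABLE) for w in words]   # 2. strip punctuation
    words = [w for w in words if len(w) > 1]             # 3. drop 0/1-char words
    words = [w for w in words if w.isalpha()]            # 4. drop words with digits
    return " ".join(words)


def add_markers(caption):
    """Wrap a cleaned caption in the start and end marker strings."""
    return "startseq " + caption + " endseq"


cleaned = clean_description("A dog is running through the grass, chasing 2 balls!")
print(add_markers(cleaned))
```

Note that step 4 uses `str.isalpha`, which keeps only purely alphabetic tokens; after punctuation removal this amounts to dropping any word that contains a digit.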

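The text-based and content-based retrieval steps of Section VI-B can be sketched as a keyword lookup over the stored descriptions. Several details here are assumptions: the paper does not give the layout of description.txt, so a tab-separated `filename<TAB>caption` line is assumed; the stopword list is a small illustrative one; the query caption is passed in as a string rather than produced by the captioning model; and display with Matplotlib is left out.

```python
# Small illustrative stopword list (an assumption, not the authors' list).
STOPWORDS = {"a", "an", "the", "is", "are", "in", "on", "of",
             "and", "with", "at", "to", "through"}


def parse_descriptions(lines):
    """Build {filename: caption words} from 'filename<TAB>caption' lines."""
    index = {}
    for line in lines:
        line = line.strip()
        if not line:
            continue
        filename, _, caption = line.partition("\t")
        index[filename] = caption.lower().split()
    return index


def text_based_retrieval(keyword, index):
    """TBIR: return every image whose caption contains the keyword."""
    keyword = keyword.lower()
    return [name for name, words in index.items() if keyword in words]


def content_based_retrieval(query_caption, index):
    """CBIR: drop stopwords from the query image's caption, then
    return every image whose caption shares at least one keyword."""
    keywords = [w for w in query_caption.lower().split() if w not in STOPWORDS]
    return [name for name, words in index.items()
            if any(k in words for k in keywords)]


lines = [
    "img1.jpg\tdog is running through the grass",
    "img2.jpg\ttwo children play in the water",
    "img3.jpg\tdog swims in the water",
]
index = parse_descriptions(lines)
print(text_based_retrieval("dog", index))
print(content_based_retrieval("the dog is in the water", index))
```

Matching on any shared keyword mirrors the "if any match is found" wording; requiring all keywords to match would give stricter, smaller result sets.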

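To make the BLEU score reported in the results more concrete, here is a minimal sentence-level sketch of the metric as defined by Papineni et al. [8]: clipped n-gram precisions, a uniform geometric mean, and a brevity penalty. The paper does not say which tool computed its score, so this is not a reproduction of the authors' evaluation; the function names are hypothetical and no smoothing is applied.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count the n-grams of a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate, references, n):
    """Clipped n-gram precision: each candidate n-gram counts at most
    as often as it appears in any single reference."""
    cand_counts = ngrams(candidate, n)
    if not cand_counts:
        return 0.0
    max_ref = Counter()
    for ref in references:
        for gram, count in ngrams(ref, n).items():
            max_ref[gram] = max(max_ref[gram], count)
    clipped = sum(min(count, max_ref[gram]) for gram, count in cand_counts.items())
    return clipped / sum(cand_counts.values())


def brevity_penalty(candidate, references):
    """Penalise candidates shorter than the closest reference length."""
    c = len(candidate)
    if c == 0:
        return 0.0
    r = min((abs(len(ref) - c), len(ref)) for ref in references)[1]
    return 1.0 if c > r else math.exp(1 - r / c)


def bleu(candidate, references, max_n=4):
    """Sentence-level BLEU with uniform weights and no smoothing."""
    precisions = [modified_precision(candidate, references, n)
                  for n in range(1, max_n + 1)]
    if min(precisions) == 0:
        return 0.0
    log_mean = sum(math.log(p) for p in precisions) / max_n
    return brevity_penalty(candidate, references) * math.exp(log_mean)


references = ["dog is running through the grass".split(),
              "a dog runs across the grass".split()]
print(bleu("dog is running through the grass".split(), references))
print(bleu("dog is running on the grass".split(), references, max_n=2))
```

Without smoothing, a single missing n-gram order drives the score to 0, which is why corpus-level or smoothed variants are usually preferred for short captions.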

Fig 4:- Retrieved Image

Fig 5:- BLEU Score

Fig 6:- Accuracy and Validation Loss

VIII. CONCLUSION

In this paper we have developed an image captioning model using a deep learning approach, with the VGG-16 model as the feature extractor and an LSTM for generating descriptive sentences that are syntactically and semantically correct, and we have achieved a BLEU score of 0.50. Text-based and content-based image retrieval are also performed.

REFERENCES

[1]. Alex Krizhevsky, Ilya Sutskever, and Geoffrey E. Hinton, “ImageNet Classification with Deep Convolutional Neural Networks”, Volume 60, Issue 6, Pages 84-90, June 2017.

[2]. Jia Deng, Wei Dong, Richard Socher, Li-Jia Li, Kai Li and Li Fei-Fei, “ImageNet: A Large-Scale Hierarchical Image Database”, IEEE Conference on Computer Vision and Pattern Recognition, 2009.

[3]. Andrej Karpathy, Li Fei-Fei, “Deep Visual Semantic Alignments for Generating Image Descriptions”, IEEE Transactions on Pattern Analysis and Machine Intelligence, Volume 39, Issue 4, Pages 664-676, April 2017.

[4]. Zhongliang Yang, Yu-Jin Zhang, Sadaqat ur Rehman, Yongfeng Huang, “Image Captioning with Object Detection and Localization”, arXiv:1706.02430, 2017.

[5]. Jyoti Aneja, Aditya Deshpande, Alexander Schwing, “Convolutional Image Captioning”, arXiv:1711.09151, 2017.

[6]. Kelvin Xu, Jimmy Lei Ba, Ryan Kiros, Kyunghyun Cho, Aaron Courville, Ruslan Salakhutdinov, Richard S. Zemel, Yoshua Bengio, “Show, Attend and Tell: Neural Image Caption Generation with Visual Attention”, International Machine Learning Society, Volume 37, Pages 2048-2057, 2015.

[7]. Micah Hodosh, Peter Young, and Julia Hockenmaier, “Framing image description as a ranking task: Data, models and evaluation metrics”, Journal of Artificial Intelligence Research 47, 853–899, 2013.

[8]. Kishore Papineni, Salim Roukos, Todd Ward, and Wei-Jing Zhu, “BLEU: a method for automatic evaluation of machine translation”, In Proceedings of the 40th Annual Meeting on Association for Computational Linguistics, Association for Computational Linguistics, 311–318, 2002.

[9]. Sepp Hochreiter and Jürgen Schmidhuber, “Long short-term memory”, Neural Computation 9(8), 1735–1780, 1997.

[10]. P. W. Huang and S. K. Dai, “Image retrieval by texture similarity,” Pattern Recognit., vol. 36, no. 3, pp. 665–679, 2003.

[11]. C.-H. Lin, R.-T. Chen, and Y.-K. Chan, “A smart content-based image retrieval system based on color and texture feature,” Image Vis. Comput., vol. 27, no. 6, pp. 658–665, 2009.

[12]. P. S. Hiremath and J. Pujari, “Content Based Image Retrieval based on Color, Texture and Shape features using Image and its complement,” Int. J. Comput. Sci. Secur., vol. 1, no. 4, pp. 25–35, 2007.

[13]. Zhang, “Improving Image Retrieval Performance by Using Both Color and Texture Features,” Third Int. Conf. Image Graph., pp. 172–175, 2004.
