
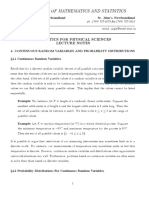

Probability and Statistics

B Madhav Reddy

madhav.b@srmap.edu.in

B Madhav Reddy Probability and Statistics 1 / 15

Recall..

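Discrete case: conditional probability mass function

$$p_{X\mid Y}(x\mid b) = \frac{p(x,b)}{p_Y(b)}$$

$$P\{X \in A \mid Y = b\} = \sum_{x \in A} p_{X\mid Y}(x\mid b)$$

$$F_{X\mid Y}(a\mid b) = \sum_{x \le a} p_{X\mid Y}(x\mid b)$$

Continuous case: conditional probability density function

$$f_{X\mid Y}(x\mid b) = \frac{f(x,b)}{f_Y(b)}$$

$$P\{X \in A \mid Y = b\} = \int_{A} f_{X\mid Y}(x\mid b)\,dx$$

$$F_{X\mid Y}(a\mid b) = \int_{-\infty}^{a} f_{X\mid Y}(x\mid b)\,dx$$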

☀ If X and Y are independent,

● Discrete case: pX ∣Y (x∣b) = pX (x)

● Continuous case: fX ∣Y (x∣b) = fX (x)

⟹ If X and Y are independent, it is enough to work with marginal
functions instead of conditional functions.
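As a minimal sketch of the discrete case, the snippet below builds a small joint pmf and checks that the conditional pmf coincides with the marginal when the variables are independent. The joint table (two fair coin flips) and the helper names `marginal_x`, `marginal_y` and `conditional_x_given_y` are illustrative assumptions, not part of the lecture.

```python
from fractions import Fraction

# Hypothetical joint pmf p(x, y): two independent fair coin flips (illustrative data).
joint = {(x, y): Fraction(1, 4) for x in (0, 1) for y in (0, 1)}


def marginal_x(joint, x):
    """p_X(x): sum the joint pmf over all y."""
    return sum(p for (xi, _), p in joint.items() if xi == x)


def marginal_y(joint, y):
    """p_Y(y): sum the joint pmf over all x."""
    return sum(p for (_, yi), p in joint.items() if yi == y)


def conditional_x_given_y(joint, x, b):
    """p_{X|Y}(x|b) = p(x, b) / p_Y(b), defined only when p_Y(b) > 0."""
    p_b = marginal_y(joint, b)
    if p_b == 0:
        raise ValueError("cannot condition on an event of probability zero")
    return joint.get((x, b), Fraction(0)) / p_b


# For independent X and Y the last two columns printed on each row agree.
for x in (0, 1):
    for b in (0, 1):
        print(x, b, conditional_x_given_y(joint, x, b), marginal_x(joint, x))
```

Exact fractions are used so that the equality between conditional and marginal values is not blurred by floating-point rounding.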


Recall..

Definition

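$$E[h(X)\,g(Y)] = \begin{cases} \displaystyle\sum_{x}\sum_{y} h(x)\,g(y)\,p(x,y), & \text{if } X \text{ and } Y \text{ are discrete} \\[8pt] \displaystyle\int_{-\infty}^{\infty}\int_{-\infty}^{\infty} h(x)\,g(y)\,f(x,y)\,dx\,dy, & \text{if } X \text{ and } Y \text{ are continuous} \end{cases}$$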

Fact 12. If X and Y are independent, then, for any functions h and g,

E [h(X )g(Y )] = E [h(X )] E [g(Y )]

In particular, for independent random variables X and Y ,

E [XY ] = E [X ] E [Y ]
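Fact 12 can be checked exactly on a small discrete example. The sketch below assumes a fair die X and a fair coin Y, with h(x) = x² and g(y) = 2y + 1; these choices are hypothetical and serve only to make the double sum concrete.

```python
from fractions import Fraction

# Hypothetical marginals: X is a fair die, Y is a fair coin taking values 0 and 1.
p_x = {x: Fraction(1, 6) for x in range(1, 7)}
p_y = {0: Fraction(1, 2), 1: Fraction(1, 2)}

# Independence means the joint pmf factorises: p(x, y) = p_X(x) * p_Y(y).
joint = {(x, y): p_x[x] * p_y[y] for x in p_x for y in p_y}


def h(x):
    return x * x


def g(y):
    return 2 * y + 1


# Left-hand side: the double sum from the definition of E[h(X)g(Y)].
lhs = sum(h(x) * g(y) * p for (x, y), p in joint.items())

# Right-hand side: product of the two one-dimensional expectations.
e_h = sum(h(x) * p for x, p in p_x.items())
e_g = sum(g(y) * p for y, p in p_y.items())
rhs = e_h * e_g

print(lhs, rhs)
```

Both quantities come out equal because the joint pmf was built as a product of marginals; with a dependent joint table the two sides would generally differ.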


Recall..

Definition

The covariance between any two random variables X and Y, denoted by

Cov (X , Y ), is defined by

Cov (X , Y ) = E [(X − E [X ]) (Y − E [Y ])]

Fact 13. Cov (X , Y ) = E [XY ] − E [X ] E [Y ]

☀ If X and Y are independent, then by Fact 12, Cov (X , Y ) = 0
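Fact 13 follows by expanding the product in the definition and using linearity of expectation. In the sketch below, $\mu_X = E[X]$ and $\mu_Y = E[Y]$ are shorthand introduced here for readability:

$$\begin{aligned}
\operatorname{Cov}(X,Y) &= E[(X-\mu_X)(Y-\mu_Y)] \\
&= E[XY - \mu_Y X - \mu_X Y + \mu_X\mu_Y] \\
&= E[XY] - \mu_Y E[X] - \mu_X E[Y] + \mu_X\mu_Y \\
&= E[XY] - E[X]\,E[Y]
\end{aligned}$$

When X and Y are independent, Fact 12 gives $E[XY] = E[X]\,E[Y]$, so the last line is zero.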


Fact 14.

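1 Cov(X, Y) = Cov(Y, X)

2 Cov(X, X) = Var(X)

3 Cov(aX, Y) = a Cov(X, Y)

4 $\operatorname{Cov}\Big(\sum_{i=1}^{n} X_i,\ \sum_{j=1}^{m} Y_j\Big) = \sum_{i=1}^{n}\sum_{j=1}^{m} \operatorname{Cov}(X_i, Y_j)$

Now,

$$\begin{aligned}
\operatorname{Var}\Big(\sum_{i=1}^{n} X_i\Big) &= \operatorname{Cov}\Big(\sum_{i=1}^{n} X_i,\ \sum_{j=1}^{n} X_j\Big) \\
&= \sum_{i=1}^{n}\sum_{j=1}^{n} \operatorname{Cov}(X_i, X_j) \\
&= \mathop{\sum\sum}_{i=j} \operatorname{Cov}(X_i, X_j) + \mathop{\sum\sum}_{i\neq j} \operatorname{Cov}(X_i, X_j) \\
&= \sum_{i=1}^{n} \operatorname{Var}(X_i) + 2\mathop{\sum\sum}_{i<j} \operatorname{Cov}(X_i, X_j)
\end{aligned}$$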


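Fact 15.

$$\operatorname{Var}\Big(\sum_{i=1}^{n} X_i\Big) = \sum_{i=1}^{n} \operatorname{Var}(X_i) + 2\mathop{\sum\sum}_{i<j} \operatorname{Cov}(X_i, X_j)$$

● If X1, X2, ..., Xn are pairwise independent, then

$$\operatorname{Var}\Big(\sum_{i=1}^{n} X_i\Big) = \sum_{i=1}^{n} \operatorname{Var}(X_i)$$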
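For n = 2 the variance formula reads Var(X + Y) = Var(X) + Var(Y) + 2 Cov(X, Y). The sketch below checks this exactly on a hypothetical joint pmf of two dependent 0/1 variables; the probabilities and helper names (`expect`, `cov`) are made up for illustration.

```python
from fractions import Fraction

# Hypothetical joint pmf of two dependent 0/1 variables (illustrative numbers).
joint = {
    (0, 0): Fraction(2, 5),
    (0, 1): Fraction(1, 10),
    (1, 0): Fraction(1, 10),
    (1, 1): Fraction(2, 5),
}


def expect(func):
    """E[func(X, Y)] as a sum over the joint pmf."""
    return sum(func(x, y) * p for (x, y), p in joint.items())


def cov(u, v):
    """Cov(U, V) = E[UV] - E[U]E[V], where U and V are functions of (X, Y)."""
    return expect(lambda x, y: u(x, y) * v(x, y)) - expect(u) * expect(v)


def X(x, y):
    return x


def Y(x, y):
    return y


def S(x, y):
    return x + y


# Var(X + Y) computed directly as Cov(X + Y, X + Y) ...
var_sum_direct = cov(S, S)
# ... and via the decomposition into variances plus twice the covariance.
var_sum_formula = cov(X, X) + cov(Y, Y) + 2 * cov(X, Y)

print(var_sum_direct, var_sum_formula, cov(X, Y))
```

Because Cov(X, Y) is nonzero in this table, dropping the covariance term would give the wrong variance; the shortcut Var(X + Y) = Var(X) + Var(Y) is only justified when the covariance vanishes.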

Definition

The correlation coefficient of two random variables X and Y, denoted
by ρ(X, Y), is defined, as long as Var(X) and Var(Y) are positive, by

$$\rho(X,Y) = \frac{\operatorname{Cov}(X,Y)}{\sqrt{\operatorname{Var}(X)}\,\sqrt{\operatorname{Var}(Y)}}$$

Fact 16. −1 ≤ ρ(X , Y ) ≤ 1, for any two random variables X and Y


Exercise: The random variables X and Y have a joint density function
given by

$$f(x,y) = \begin{cases} \dfrac{1}{y}\,e^{-(y + x/y)}, & x > 0,\ y > 0 \\[8pt] 0, & \text{otherwise} \end{cases}$$

Find the correlation coefficient, ρ (X , Y ), of X and Y .

Procedure:

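Step-1: Calculate the marginal densities

$$f_X(x) = \int_{-\infty}^{\infty} f(x,y)\,dy \quad\text{and}\quad f_Y(y) = \int_{-\infty}^{\infty} f(x,y)\,dx$$

Step-2: Calculate the expectations

$$E[X] = \int_{-\infty}^{\infty} x\,f_X(x)\,dx \qquad E[Y] = \int_{-\infty}^{\infty} y\,f_Y(y)\,dy$$

$$E[X^2] = \int_{-\infty}^{\infty} x^2 f_X(x)\,dx \qquad E[Y^2] = \int_{-\infty}^{\infty} y^2 f_Y(y)\,dy$$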


Step-3: Calculate the joint expectation

$$E[XY] = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} x\,y\,f(x,y)\,dx\,dy$$

Step-4: Calculate Cov(X, Y), Var(X) and Var(Y)

$$\operatorname{Cov}(X,Y) = E[XY] - E[X]\,E[Y]$$

$$\operatorname{Var}(X) = E[X^2] - (E[X])^2 \quad\text{and}\quad \operatorname{Var}(Y) = E[Y^2] - (E[Y])^2$$

Step-5: Finally,

$$\rho(X,Y) = \frac{\operatorname{Cov}(X,Y)}{\sqrt{\operatorname{Var}(X)}\,\sqrt{\operatorname{Var}(Y)}}$$

☀ −1 ≤ ρ(X , Y ) ≤ 1
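One way to sanity-check a hand calculation for this exercise is a Monte Carlo estimate. The sketch below relies on the observation that the density factorises as $e^{-y}\cdot\frac{1}{y}e^{-x/y}$, so Y can be drawn as an exponential with rate 1 and, given Y = y, X as an exponential with mean y. Steps 2 to 5 are then carried out with sample averages in place of integrals. The function names, sample size and seed are arbitrary choices for illustration. Working the steps by hand should give ρ(X, Y) = 1/√3 ≈ 0.577, so the estimate is expected to land near that value.

```python
import math
import random


def sample_pair(rng):
    """Draw (X, Y) from f(x, y) = (1/y) e^{-(y + x/y)}, x > 0, y > 0.

    Uses the factorisation f(x, y) = e^{-y} * (1/y) e^{-x/y}:
    Y ~ Exponential(rate 1) and, given Y = y, X ~ Exponential(mean y).
    """
    y = rng.expovariate(1.0)
    while y == 0.0:  # guard against an (extremely unlikely) zero draw
        y = rng.expovariate(1.0)
    x = rng.expovariate(1.0 / y)  # rate 1/y corresponds to mean y
    return x, y


def estimate_correlation(n, seed=0):
    """Estimate rho(X, Y) by replacing each expectation with a sample mean."""
    rng = random.Random(seed)
    pairs = [sample_pair(rng) for _ in range(n)]
    e_x = sum(x for x, _ in pairs) / n
    e_y = sum(y for _, y in pairs) / n
    e_x2 = sum(x * x for x, _ in pairs) / n
    e_y2 = sum(y * y for _, y in pairs) / n
    e_xy = sum(x * y for x, y in pairs) / n
    cov_xy = e_xy - e_x * e_y            # Step 4
    var_x = e_x2 - e_x ** 2
    var_y = e_y2 - e_y ** 2
    return cov_xy / math.sqrt(var_x * var_y)  # Step 5


rho_hat = estimate_correlation(200_000)
print(round(rho_hat, 3))
```

The estimate fluctuates with the seed and sample size, so it only corroborates the analytic answer; it does not replace the integrals in Steps 1 to 5.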


The correlation coefficient is a measure of the degree of linearity

between X and Y

A positive value of ρ(X , Y ) indicates that Y tends to increase when

X does, whereas a negative value indicates that Y tends to decrease

when X increases

If ρ(X , Y ) = 0, then X and Y are said to be uncorrelated
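A small exact computation can make these statements concrete. The sketch below assumes X is uniform on {-1, 0, 1} (a hypothetical choice) and evaluates ρ for three relationships: an increasing linear one, a decreasing linear one, and Y = X². The last case gives ρ = 0 even though Y is completely determined by X, which illustrates that ρ measures linear association only.

```python
import math
from fractions import Fraction


def correlation(pmf):
    """rho(X, Y) for a joint pmf given as {(x, y): probability}."""
    e_x = sum(x * p for (x, _), p in pmf.items())
    e_y = sum(y * p for (_, y), p in pmf.items())
    e_x2 = sum(x * x * p for (x, _), p in pmf.items())
    e_y2 = sum(y * y * p for (_, y), p in pmf.items())
    e_xy = sum(x * y * p for (x, y), p in pmf.items())
    cov_xy = e_xy - e_x * e_y
    var_x = e_x2 - e_x ** 2
    var_y = e_y2 - e_y ** 2
    return float(cov_xy) / math.sqrt(float(var_x * var_y))


# Hypothetical setup: X is uniform on {-1, 0, 1}.
values = (-1, 0, 1)
third = Fraction(1, 3)

increasing = {(x, 2 * x + 1): third for x in values}  # Y = 2X + 1
decreasing = {(x, -3 * x): third for x in values}     # Y = -3X
parabola = {(x, x * x): third for x in values}        # Y = X^2

print(correlation(increasing), correlation(decreasing), correlation(parabola))
```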

Definition

Random variables X1, X2, ... are said to be identically distributed if
every Xi has exactly the same distribution. That is,

$$F_{X_i}(x) = F_{X_j}(x) \quad \text{for every } x \text{ and all } i, j$$


Theorem (Central limit theorem)

Let X1, X2, ... be a sequence of independent and identically distributed
random variables, each having mean µ and variance σ². Then the
distribution of

$$\frac{X_1 + X_2 + \cdots + X_n - n\mu}{\sigma\sqrt{n}}$$

tends to the standard normal as n → ∞.

That is, if $Y_n = X_1 + X_2 + \cdots + X_n$ and $Z_n = \dfrac{Y_n - n\mu}{\sigma\sqrt{n}}$, then

$$F_{Z_n}(a) = P\left\{\frac{Y_n - n\mu}{\sigma\sqrt{n}} \le a\right\} \longrightarrow \Phi(a) = \frac{1}{\sqrt{2\pi}}\int_{-\infty}^{a} e^{-x^2/2}\,dx$$

as n → ∞

Eventually, everything will be normal!


This theorem is very powerful because it can be applied to random
variables X1, X2, ... having virtually any conceivable distribution with
finite expectation and variance.

As long as n is large (the rule of thumb is n > 30), one can use the
normal distribution to compute probabilities about the sum Yn
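The approximation can be observed in a quick simulation. The sketch below assumes the Xi are Uniform(0, 1), which is an arbitrary non-normal choice with mean 1/2 and variance 1/12, takes n = 30, and compares the empirical value of P{Zn ≤ a} with Φ(a). The function names, number of trials and seed are illustrative.

```python
import math
import random
from statistics import NormalDist


def standardized_sum(n, rng):
    """Z_n = (Y_n - n*mu) / (sigma*sqrt(n)) for Y_n a sum of n Uniform(0, 1) draws."""
    mu = 0.5                     # mean of Uniform(0, 1)
    sigma = math.sqrt(1 / 12)    # standard deviation of Uniform(0, 1)
    y_n = sum(rng.random() for _ in range(n))
    return (y_n - n * mu) / (sigma * math.sqrt(n))


def empirical_cdf_at(a, n, trials, seed=0):
    """Fraction of simulated Z_n values that are at most a."""
    rng = random.Random(seed)
    hits = sum(standardized_sum(n, rng) <= a for _ in range(trials))
    return hits / trials


a = 1.0
estimate = empirical_cdf_at(a, n=30, trials=20_000)
print(estimate, NormalDist().cdf(a))
```

The two printed numbers should be close; how close depends on the underlying distribution, n, and the number of trials, so the n > 30 guideline is a rule of thumb rather than a guarantee.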


Example: A disk has free space of 330 megabytes. Is it likely to be

sufficient for 300 independent images, if each image has expected size of 1

megabyte with a standard deviation of 0.5 megabytes?

Solution: Let Xi denote the size of the i-th image in megabytes.

We are given that X1, X2, ... are independent and identically distributed
with mean 1 and standard deviation 0.5.

If we denote Yn = X1 + X2 + ⋯ + Xn, then we need to find P{Yn ≤ 330} for
n = 300.

Since n is large (300 in our case), we use the central limit theorem.


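$$\begin{aligned}
P\{Y_{300} \le 330\} &= P\left\{\frac{Y_{300} - 300(1)}{(0.5)\sqrt{300}} \le \frac{330 - 300(1)}{(0.5)\sqrt{300}}\right\} \\
&= P\{Z_{300} \le 3.46\} \\
&\approx \Phi(3.46) \quad \text{(by central limit theorem)} \\
&\approx 0.9997
\end{aligned}$$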

This probability is very high, hence, the available disk space is very likely

to be sufficient.
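The arithmetic in this example can be reproduced with the standard library. The helper `clt_prob_sum_at_most` below is a hypothetical name; it standardizes the limit and evaluates the standard normal CDF through `statistics.NormalDist`.

```python
import math
from statistics import NormalDist


def clt_prob_sum_at_most(limit, n, mu, sigma):
    """Normal approximation to P{Y_n <= limit} for a sum of n i.i.d. terms.

    Returns the standardized value z and Phi(z).
    """
    z = (limit - n * mu) / (sigma * math.sqrt(n))
    return z, NormalDist().cdf(z)


# Disk example: 300 images, mean 1 MB, standard deviation 0.5 MB, 330 MB free.
z, prob = clt_prob_sum_at_most(limit=330, n=300, mu=1.0, sigma=0.5)
print(f"z = {z:.2f}, P(Y_300 <= 330) ~ {prob:.4f}")
```

Changing `limit` shows how sensitive the conclusion is to the available space: at exactly 300 MB, for instance, z is 0 and the probability drops to one half.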


Example: You wait for an elevator, whose capacity is 2000 lbs. The

elevator comes with ten adult passengers. Suppose your own weight is 150

lbs, and you heard that human weights are normally distributed with the

mean of 165 lbs and the standard deviation of 20 lbs. Would you board

this elevator or wait for the next one? In other words, is overload likely?

Solution: Let Xi denote the weight of the i-th person in the elevator and

Yn = X1 + X2 + ⋯ + Xn

Overload happens if Y10 + 150 > 2000. Hence we need to find

P{Y10 + 150 > 2000}


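$$\begin{aligned}
P\{Y_{10} + 150 > 2000\} &= P\{Y_{10} > 1850\} \\
&= P\left\{\frac{Y_{10} - 165(10)}{20\sqrt{10}} > \frac{1850 - 165(10)}{20\sqrt{10}}\right\} \\
&= P\{Z_{10} > 3.16\} \\
&= 1 - P\{Z_{10} \le 3.16\} \\
&\approx 1 - \Phi(3.16) \quad \text{(by central limit theorem)} \\
&\approx 1 - 0.9992 = 0.0008
\end{aligned}$$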

Thus, there is only a 0.08% chance of overload. Hence it is safe to take the
elevator!
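The same calculation can be sketched in a few lines. The variable names below are illustrative. One remark on the assumptions: n = 10 is below the n > 30 rule of thumb, but because the individual weights are taken to be normally distributed (and are assumed independent here), their sum is itself normal, so the normal calculation is appropriate for this example.

```python
import math
from statistics import NormalDist

capacity = 2000      # elevator capacity in lbs
own_weight = 150     # your weight in lbs
n = 10               # passengers already inside
mu, sigma = 165, 20  # mean and standard deviation of one passenger's weight

# Overload occurs when Y_10 + 150 > 2000, i.e. Y_10 > 1850.
threshold = capacity - own_weight
z = (threshold - n * mu) / (sigma * math.sqrt(n))
p_overload = 1 - NormalDist().cdf(z)

print(f"z = {z:.2f}, P(overload) ~ {p_overload:.4f}")
```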
