
(19) United States

(12) Patent Application Publication

(10) Pub. No.: US 2020/0257317 A1

Musk et al.

(43) Pub. Date: Aug. 13, 2020

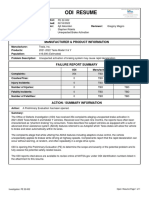

(54) AUTONOMOUS AND USER CONTROLLED VEHICLE SUMMON TO A TARGET

(71) Applicant: Tesla, Inc., Palo Alto, CA (US)

(72) Inventors: Elon Musk, Los Angeles, CA (US); Kate Park, Los Altos, CA (US); Nenad Uzunovic, San Carlos, CA (US); Christopher Coleman Moore, San Francisco, CA (US); Francis Havlak, Half Moon Bay, CA (US); Stuart Bowers, …, CA (US); Andrej Karpathy, San Francisco, CA (US); Arvind Ramanandan, Sunnyvale, CA (US); Ashima Kapur Sud, San Jose, CA (US); Paul Chen, Woodside, CA (US); Paril Jain, Mountain View, CA (US); Alexander Hertzberg, Berkeley, CA (US); Jason Kong, San Francisco, CA (US); Li Wang, Cupertino, CA (US); Oktay Arslan, Fremont, CA (US); Nicklas Gustafsson, Menlo Park, CA (US); Charles Shieh, Cupertino, CA (US); David Seelig, Stanford, CA (US)

(21) Appl. No.: 16/272,273

(22) Filed: Feb. 1, 2019

Publication Classification

(51) Int. Cl.: … (2006.01); … (2006.01); G06N 3/08 (2006.01); G06N 20/00 (2006.01)

(52) U.S. Cl.: CPC G05D 1/12 (2013.01); G05D 1/0033 (2013.01); G05D 1/… (2013.01); G05D 2201/0213 (2013.01); G06N 3/08 (2013.01); G06N 20/00 (2019.01); G05D 1/0221 (2013.01)

(57) ABSTRACT

A processor coupled to memory is configured to receive an identification of a geographical location associated with a target specified by a user remote from a vehicle. A machine learning model is utilized to generate a representation of at least a portion of an environment surrounding the vehicle using sensor data from one or more sensors of the vehicle. At least a portion of a path to a target location corresponding to the received geographical location is calculated using the representation of the at least portion of the environment surrounding the vehicle. At least one command is provided to automatically navigate the vehicle based on the determined path and updated sensor data from at least a portion of the one or more sensors of the vehicle.


FIG. 1 (Sheet 1 of 9): 101 Receive Destination → 103 Determine Path To Destination → 105 Navigate To Destination → 107 Complete Summon

FIG. 2 (Sheet 2 of 9): 201 Receive Destination Location or 203 Determine User Location → 205 Validate Destination → 207 Provide Destination To Path Planner Module

FIG. 3 (Sheet 3 of 9): 301 Receive Destination → 303 Receive Vision Data → 305 Determine Drivable Space → 307 Generate Occupancy Grid → 309 Determine Path Goal → 311 Navigate To Path Goal → 313 Arrived At Destination? → 315 Complete Summon

FIG. 4 (Sheet 4 of 9): 401 Prepare Training Data → 403 Train Machine Learning Model → 405 Deploy Trained Machine Learning Model → 407 Receive Sensor Data → 409 Apply Trained Machine Learning Model → 411 Generate Occupancy Grid

FIG. 5 (Sheet 5 of 9): 501 Load Saved Occupancy Grid → 503 Receive Drivable Space → 505 Receive Auxiliary Data → 507 Update Occupancy Grid

FIG. 6 (Sheet 6 of 9): 601 Determine Vehicle Adjustments → 603 Adjust Vehicle → 605 Monitor and Operate Vehicle → 609 Contact Lost? (Yes → 611 Override Navigation)

FIG. 7 (Sheet 7 of 9): block diagram of an autonomous vehicle system, including path planner module 705.

FIG. 8 (Sheet 8 of 9): user interface 800, with elements 801 and 803.

FIG. 9 (Sheet 9 of 9): user interface 900, with elements 901 and 903.

AUTONOMOUS AND USER CONTROLLED VEHICLE SUMMON TO A TARGET

BACKGROUND OF THE INVENTION

[0001] Human drivers are typically required to operate vehicles, and the driving tasks performed by them are often complex and can be exhausting. For example, retrieving or summoning a parked car from a crowded parking lot or garage can be inconvenient and tedious. The maneuver often involves walking towards one's vehicle, three-point turns, edging out of tight spots without touching neighboring vehicles or walls, and driving towards a location that one previously came from. Although some vehicles are capable of being remotely operated, the route driven by the vehicle is typically limited to a single straight-line path in either the forwards or reverse direction, with limited steering angle and no intelligence in navigating the vehicle along its own path.

BRIEF DESCRIPTION OF THE DRAWINGS

[0002] Various embodiments of the invention are disclosed in the following detailed description and the accompanying drawings.

[0003] FIG. 1 is a flow diagram illustrating an embodiment of a process for automatically navigating a vehicle to a destination target.

[0004] FIG. 2 is a flow diagram illustrating an embodiment of a process for receiving a target destination.

[0005] FIG. 3 is a flow diagram illustrating an embodiment of a process for automatically navigating a vehicle to a destination target.

[0006] FIG. 4 is a flow diagram illustrating an embodiment of a process for training and applying a machine learning model to generate a representation of an environment surrounding a vehicle.

[0007] FIG. 5 is a flow diagram illustrating an embodiment of a process for generating an occupancy grid.

[0008] FIG. 6 is a flow diagram illustrating an embodiment of a process for automatically navigating to a destination target.

[0009] FIG. 7 is a block diagram illustrating an embodiment of an autonomous vehicle system for automatically navigating a vehicle to a destination target.

[0010] FIG. 8 is a diagram illustrating an embodiment of a user interface for automatically navigating a vehicle to a destination target.

[0011] FIG. 9 is a diagram illustrating an embodiment of a user interface for automatically navigating a vehicle to a destination target.

DETAILED DESCRIPTION

[0012] The invention can be implemented in numerous ways, including as a process; an apparatus; a system; a composition of matter; a computer program product embodied on a computer readable storage medium; and/or a processor, such as a processor configured to execute instructions stored on and/or provided by a memory coupled to the processor. In this specification, these implementations, or any other form that the invention may take, may be referred to as techniques. In general, the order of the steps of disclosed processes may be altered within the scope of the invention. Unless stated otherwise, a component such as a processor or a memory described as being configured to perform a task may be implemented as a general component that is temporarily configured to perform the task at a given time or a specific component that is manufactured to perform the task. As used herein, the term “processor” refers to one or more devices, circuits, and/or processing cores configured to process data, such as computer program instructions.

[0013] A detailed description of one or more embodiments of the invention is provided below along with accompanying figures that illustrate the principles of the invention. The invention is described in connection with such embodiments, but the invention is not limited to any embodiment. The scope of the invention is limited only by the claims and the invention encompasses numerous alternatives, modifications and equivalents. Numerous specific details are set forth in the following description in order to provide a thorough understanding of the invention. These details are provided for the purpose of example and the invention may be practiced according to the claims without some or all of these specific details. For the purpose of clarity, technical material that is known in the technical fields related to the invention has not been described in detail so that the invention is not unnecessarily obscured.

[0014] A technique to autonomously summon a vehicle to a destination is disclosed. A target geographical location is provided and a vehicle automatically navigates to the target location. For example, a user provides a location by dropping a pin on a graphical map user interface at the destination location. As another example, a user summons the vehicle to the user's location by specifying the user's location as the destination location. The user may also select a destination location based on viable paths detected for the vehicle. The destination location may update (for example, if the user moves around), leading the car to update its path to the destination location. Using the specified destination location, the vehicle navigates by utilizing sensor data such as vision data captured by cameras to generate a representation of the environment surrounding the vehicle. In some embodiments, the representation is an occupancy grid detailing drivable and non-drivable space. In some embodiments, the occupancy grid is generated using a neural network from camera sensor data. The representation of the environment may be further augmented with auxiliary data such as additional sensor data (including radar data), map data, or other inputs. Using the generated representation, a path is planned from the vehicle's current location towards the destination location. In some embodiments, the path is generated based on the vehicle's operating parameters such as the model's turning radius, vehicle width, vehicle length, etc. An optimal path is selected based on selection parameters such as distance, speed, number of changes in gear, etc. As the vehicle automatically navigates using the selected path, the representation of the environment is continuously updated. For example, as the vehicle travels to the destination, new sensor data is captured and the occupancy grid is updated. Safety checks are continuously performed that can override or modify the automatic navigation. For example, ultrasonic sensors may be utilized to detect potential collisions. A user can monitor the navigation and cancel the summoned vehicle at any time. In some embodiments, the vehicle must continually receive a virtual heartbeat signal from the user for the vehicle to continue navigating. The virtual heartbeat may be used to indicate that the user is actively monitoring the progress of the vehicle. In some embodiments, the user selects the path and the user remotely controls the vehicle. For example, the user can control the steering angle, direction, and/or speed of the vehicle to remotely control the vehicle. As the user remotely controls the vehicle, safety checks are continuously performed that can override and/or modify the user controls. For example, the vehicle can be stopped if an object is detected.
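The virtual heartbeat described above behaves like a watchdog: navigation continues only while heartbeats keep arriving within some time window. The sketch below illustrates that idea under stated assumptions; the `HeartbeatWatchdog` class, the 1-second timeout, and the injectable clock are hypothetical choices, not details taken from the application.

```python
import time
from typing import Callable, Optional


class HeartbeatWatchdog:
    """Hypothetical watchdog: navigation is allowed only while the user's
    heartbeat keeps arriving within `timeout_s` seconds."""

    def __init__(self, timeout_s: float = 1.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.timeout_s = timeout_s        # assumed value, not from the source
        self.clock = clock                # injectable so behavior is testable
        self.last_beat: Optional[float] = None

    def beat(self) -> None:
        """Record a heartbeat received from the user's device."""
        self.last_beat = self.clock()

    def may_navigate(self) -> bool:
        """True while the most recent heartbeat is within the timeout."""
        if self.last_beat is None:
            return False  # no heartbeat yet: do not start moving
        return self.clock() - self.last_beat <= self.timeout_s


# Usage with a fake clock so the behavior is deterministic.
now = [0.0]
dog = HeartbeatWatchdog(timeout_s=1.0, clock=lambda: now[0])
print(dog.may_navigate())  # False: no heartbeat yet
dog.beat()
now[0] = 0.5
print(dog.may_navigate())  # True: heartbeat is 0.5 s old
now[0] = 2.0
print(dog.may_navigate())  # False: heartbeat lost, vehicle should stop
```

Using a monotonic clock avoids false timeouts when the wall-clock time is adjusted.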

[0015] In some embodiments, a system comprises a processor configured to receive an identification of a geographical location associated with a target specified by a user remote from a vehicle. For example, a user waiting at a pickup location specifies her or his geographical location as a …

… provided by a user. The destination target is associated with a geographical location and used as a goal for automatically navigating a vehicle. In the example shown, the process of receiving a target destination can be initiated from more than one start point. The two start points of FIG. 2 are two examples of initiating the receipt of a destination. Additional methods are possible as well. In some embodiments, the process of FIG. 2 is performed at 101 of FIG. 1.

[0028] At 201, a destination location is received. In some embodiments, the destination is provided as a geographical location. For example, longitude and latitude values are provided. In some embodiments, an altitude is provided as well. For example, an altitude associated with a particular floor of a parking garage is provided. In various embodiments, the destination location is provided from a user via a smartphone device, via the media console of a vehicle, or by another means. In some embodiments, the location is received along with a time associated with departing or arriving at the destination. In some embodiments, one or more destinations are received. For example, in some scenarios, a multi-step destination is received that includes more than one stop. In some embodiments, the destination location includes an orientation or heading. For example, the heading indicates the direction the vehicle should face once it has arrived at the destination.
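The destination fields listed above (latitude and longitude, an optional altitude, a heading, a time, and possibly several stops) could be carried in a small record type. This is a minimal sketch; the `Stop` and `Destination` names, the units, and the example coordinates are illustrative assumptions rather than a format defined in the application.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List, Optional


@dataclass
class Stop:
    """One stop of a destination (hypothetical record type)."""
    latitude: float                       # degrees
    longitude: float                      # degrees
    altitude_m: Optional[float] = None    # e.g. a particular garage floor
    heading_deg: Optional[float] = None   # direction to face on arrival
    time: Optional[datetime] = None       # departure or arrival time


@dataclass
class Destination:
    """A possibly multi-step destination: an ordered list of stops."""
    stops: List[Stop] = field(default_factory=list)

    def final_stop(self) -> Stop:
        if not self.stops:
            raise ValueError("destination has no stops")
        return self.stops[-1]

    def is_multi_step(self) -> bool:
        return len(self.stops) > 1


# Made-up coordinates: a two-stop destination whose final stop
# specifies a garage level and the heading to face on arrival.
dest = Destination([
    Stop(37.3947, -122.1503),
    Stop(37.3950, -122.1499, altitude_m=12.0, heading_deg=90.0),
])
print(dest.is_multi_step(), dest.final_stop().heading_deg)  # True 90.0
```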

[0029] At 203, a user location is determined. For example, the location of the user is determined using a global positioning system or another location-aware technique. In some embodiments, the user's location is approximated by the location of a key fob, a smartphone device of the user, or another device in the user's control. In some embodiments, the user's location is received as a geographical location. Similar to 201, the location may be a longitude and latitude pair and, in some embodiments, may include an altitude. In some embodiments, the user's location is dynamic and is continuously updated or updated at certain intervals. For example, the user can move to a new location and the location received is updated. In some embodiments, the destination location includes an orientation or heading. For example, the heading indicates the direction the vehicle should face once it has arrived at the destination.

[0030] At 205, a destination is validated. For example, the destination is validated to confirm that the destination is reachable. In some embodiments, the destination must be within a certain distance from the vehicle's originating location. For example, certain regulations may require that a vehicle be limited to automatically navigating no more than 50 meters. In various embodiments, an invalid destination may require the user to supply a new location. In some embodiments, an alternative destination is suggested to the user. For example, in the event a user selects the wrong orientation, a suggested orientation is provided. As another example, in the event a user selects a no-stopping area, a valid stopping area, such as the closest valid stopping area, is suggested. Once validated, the selected destination is provided to step 207.
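The validation step can be sketched as a range check plus a nearest-alternative suggestion. This is a minimal sketch under stated assumptions: locations are (latitude, longitude) pairs in degrees, distance is the haversine great-circle distance, the 50 m default mirrors the example regulation above, and valid stopping areas are a hypothetical list of points matched within a 3 m tolerance. The function names and thresholds are illustrative only.

```python
import math
from typing import List, Optional, Tuple

LatLon = Tuple[float, float]
EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def haversine_m(a: LatLon, b: LatLon) -> float:
    """Great-circle distance in meters between two (lat, lon) points in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


def validate_destination(vehicle: LatLon, dest: LatLon,
                         stopping_areas: List[LatLon],
                         max_range_m: float = 50.0,
                         tolerance_m: float = 3.0) -> Tuple[bool, Optional[LatLon]]:
    """Return (is_valid, suggestion).

    The destination is valid if it is within `max_range_m` of the vehicle
    and within `tolerance_m` of an allowed stopping area. Otherwise the
    allowed stopping area closest to the requested destination (among
    those in range of the vehicle) is suggested, or None if none exists.
    """
    near_stop = any(haversine_m(dest, s) <= tolerance_m for s in stopping_areas)
    if haversine_m(vehicle, dest) <= max_range_m and near_stop:
        return True, None
    reachable = [s for s in stopping_areas
                 if haversine_m(vehicle, s) <= max_range_m]
    if not reachable:
        return False, None
    return False, min(reachable, key=lambda s: haversine_m(dest, s))


vehicle = (37.0, -122.0)
stops = [(37.0002, -122.0), (37.0003, -122.0)]  # roughly 22 m and 33 m north
print(validate_destination(vehicle, (37.0002, -122.0), stops))  # (True, None)
print(validate_destination(vehicle, (37.001, -122.0), stops))   # (False, (37.0003, -122.0))
```

The second request is about 111 m away, so it is rejected and the in-range stopping area nearest to it is offered instead.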

[0031] At 207, the destination is provided to a path planner module. In some embodiments, a path planner module is used to determine a route for the vehicle. At 207, the validated destination is provided to the path planner module. In some embodiments, the destination is provided as one or more locations, for example, a destination with multiple stops. The destination may include a two-dimensional location, such as a latitude and longitude location. Alternatively, in some embodiments, a destination includes an altitude. For example, certain drivable areas, such as multilevel parking garages, bridges, etc., have multiple drivable planes for the same two-dimensional location. The destination may also include a heading to specify the orientation the vehicle should face when arriving at the destination. In some embodiments, the path planner module is path planner module 705 of FIG. 7.

[0032] FIG. 3 is a flow diagram illustrating an embodiment of a process for automatically navigating a vehicle to a destination target. For example, using the process of FIG. 3, a representation of an environment surrounding a vehicle is generated and used to determine one or more paths to a destination. A vehicle automatically navigates using the determined path(s). As additional sensor data is received, the representation of the surrounding environment is updated. In some embodiments, the process of FIG. 3 is performed using the autonomous vehicle system of FIG. 7. In some embodiments, the step of 301 is performed at 101 of FIG. 1, the steps of 303, 305, 307, and/or 309 are performed at 103 of FIG. 1, the steps of 311 and/or 313 are performed at 105 of FIG. 1, and/or the step of 315 is performed at 107 of FIG. 1. In some embodiments, a neural network is used to direct the path the vehicle follows. For example, using a machine learning network, steering and/or acceleration values are predicted to navigate the vehicle to follow a path to the destination target.
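The loop of FIG. 3 (sense, build a representation, plan toward a path goal, move, and check for arrival) can be sketched on a toy grid world. This is a minimal sketch: the `summon` function, the `sense` and `plan` callbacks, and the breadth-first planner are hypothetical stand-ins for the vision pipeline, occupancy grid, and path planner of the application. Replanning after every step is what would let newly sensed obstacles change the route.

```python
from collections import deque
from typing import Callable, List, Set, Tuple

Cell = Tuple[int, int]


def summon(start: Cell, destination: Cell,
           sense: Callable[[Cell], Set[Cell]],
           plan: Callable[[Cell, Cell, Set[Cell]], List[Cell]],
           max_steps: int = 1000) -> List[Cell]:
    """Skeleton of the FIG. 3 loop on a grid world (hypothetical names)."""
    position = start
    trajectory = [position]
    for _ in range(max_steps):
        if position == destination:                  # 313: arrived?
            return trajectory                        # 315: complete summon
        blocked = sense(position)                    # 303-307: sense, build grid
        path = plan(position, destination, blocked)  # 309: determine path goal
        if len(path) < 2:
            raise RuntimeError("no drivable path to the destination")
        position = path[1]                           # 311: navigate to path goal
        trajectory.append(position)
    raise RuntimeError("step budget exhausted before arrival")


def bfs_plan(src: Cell, dst: Cell, blocked: Set[Cell], size: int = 5) -> List[Cell]:
    """Shortest 4-connected path on a size x size grid, or [] if none."""
    prev = {src: src}
    queue = deque([src])
    while queue:
        cur = queue.popleft()
        if cur == dst:
            break
        r, c = cur
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            in_bounds = 0 <= nxt[0] < size and 0 <= nxt[1] < size
            if in_bounds and nxt not in blocked and nxt not in prev:
                prev[nxt] = cur
                queue.append(nxt)
    if dst not in prev:
        return []
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return path[::-1]


# A wall across row 1 forces a detour through the only gap at column 4.
wall = {(1, 0), (1, 1), (1, 2), (1, 3)}
trajectory = summon((0, 0), (2, 0), sense=lambda pos: wall, plan=bfs_plan)
print(trajectory[-1], len(trajectory) - 1)  # (2, 0) 10
```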

[0033] At 301, a destination is received. For example, a geographical location is received via a mobile app, a key fob, via a control center of the vehicle, or another appropriate device. In some embodiments, the destination is a location and an orientation. In some embodiments, the destination includes an altitude and/or a time. In various embodiments, the destination is received using the process of FIG. 2. In some embodiments, the destination is dynamic and a new destination may be received as appropriate. For example, in the event a user selects a “find me” feature, the destination is updated to follow the location of the user. Essentially, the vehicle can follow the user like a pet.
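One way to realize the dynamic "find me" destination is to track the user's reported location but re-issue the destination only when the user has moved noticeably, so the planner is not triggered by location jitter. This is a sketch under assumptions: the `FollowUserTarget` class, the local planar frame in meters, and the 2 m replanning threshold are all hypothetical.

```python
import math
from typing import Optional, Tuple

Point = Tuple[float, float]  # local x, y in meters (assumed frame)


class FollowUserTarget:
    """Hypothetical 'find me' helper: the destination tracks the user's
    reported location and changes only when the user has moved more
    than `replan_m` meters from the current destination."""

    def __init__(self, replan_m: float = 2.0) -> None:
        self.replan_m = replan_m
        self.destination: Optional[Point] = None

    def update(self, user_location: Point) -> bool:
        """Feed a new user location; return True if the destination changed."""
        if (self.destination is None
                or math.dist(self.destination, user_location) > self.replan_m):
            self.destination = user_location
            return True
        return False


target = FollowUserTarget(replan_m=2.0)
print(target.update((0.0, 0.0)))  # True: first fix sets the destination
print(target.update((0.5, 0.5)))  # False: small jitter, keep current path
print(target.update((5.0, 0.0)))  # True: user walked away, replan
```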

[0034] At 303, vision data is received. For example, using one or more camera sensors affixed to the vehicle, camera image data is received. In some embodiments, the image data is received from sensors covering the environment surrounding the vehicle. The vision data may be preprocessed to improve the usefulness of the data for analysis. For example, one or more filters may be applied to reduce noise of the vision data. In various embodiments, the vision data is continuously captured to update the environment surrounding the vehicle.
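The text only says that one or more filters may be applied to reduce noise. As one concrete example of such a filter (an illustrative choice, not necessarily the one used), a 3x3 median filter suppresses isolated noisy pixels. The sketch assumes a grayscale image stored as a list of rows.

```python
from statistics import median
from typing import List

Image = List[List[float]]


def median_filter_3x3(img: Image) -> Image:
    """Apply a 3x3 median filter to a grayscale image (list of rows).

    Border pixels use only the neighbors that exist, so the output has
    the same shape as the input.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            window = [img[rr][cc]
                      for rr in range(max(0, r - 1), min(h, r + 2))
                      for cc in range(max(0, c - 1), min(w, c + 2))]
            out[r][c] = median(window)
    return out


# A single "salt" noise pixel in a flat image is removed.
noisy = [[10, 10, 10],
         [10, 255, 10],
         [10, 10, 10]]
clean = median_filter_3x3(noisy)
print(clean)  # every pixel becomes 10
```

A median filter preserves sharp edges better than a mean filter, which matters when later stages look for the boundary between drivable and non-drivable space.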

[0035] At 305, drivable space is determined. In some embodiments, the drivable space is determined using a neural network by applying inference on the vision data received at 303. For example, a convolutional neural network (CNN) is applied using the vision data to determine drivable and non-drivable space for the environment surrounding the vehicle. Drivable space includes areas that the vehicle can travel. In various embodiments, the drivable space is free of obstacles such that the vehicle can travel using a path through the determined drivable space. In various embodiments, a machine learning model is trained to determine drivable space and deployed on the vehicle to automatically analyze and determine the drivable space from image data.

[0036] In some embodiments, the vision data is supplemented with additional data such as additional sensor data. Additional sensor data may include ultrasonic, radar, lidar, audio, or other appropriate sensor data. Additional data may also include annotated data, such as map data. For example, an annotated map may annotate vehicle lanes, speed limits, intersections, and/or other driving metadata. The additional data may be used as input to a machine learning model or consumed downstream when creating the occupancy grid to improve the results for determining drivable space.

[0037] At 307, an occupancy grid is generated. Using the drivable space determined at 305, an occupancy grid representing the environment of the vehicle is generated. In some embodiments, the occupancy grid is a two-dimensional occupancy grid representing the entire plane (e.g., 360 degrees along longitude and latitude axes) the vehicle resides in. In some embodiments, the occupancy grid includes a third dimension to account for altitude. For example, a region with multiple drivable paths at different altitudes, such as a multi-floor parking structure, an overpass, etc., can be represented by a three-dimensional occupancy grid.
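The 2-D and 3-D variants can share one data structure if each cell is keyed by an altitude level as well as a row and column; a flat lot then simply uses level 0. A minimal sketch follows; the sparse dictionary layout, the 0.5 m cell size, and the 0.5 prior for unobserved cells are assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

Key = Tuple[int, int, int]  # (level, row, col)


@dataclass
class OccupancyGrid:
    """Sparse occupancy grid indexed by (level, row, col).

    Each cell stores a drivability probability in [0, 1]. Cells that
    have never been observed return `unknown` (an assumed neutral prior).
    """
    cell_size_m: float = 0.5
    unknown: float = 0.5
    cells: Dict[Key, float] = field(default_factory=dict)

    def set_drivability(self, level: int, row: int, col: int, p: float) -> None:
        if not 0.0 <= p <= 1.0:
            raise ValueError("drivability must be a probability in [0, 1]")
        self.cells[(level, row, col)] = p

    def drivability(self, level: int, row: int, col: int) -> float:
        return self.cells.get((level, row, col), self.unknown)

    def index_of(self, x_m: float, y_m: float, level: int = 0) -> Key:
        """Map vehicle-centered coordinates in meters to a cell key."""
        return (level, int(y_m // self.cell_size_m), int(x_m // self.cell_size_m))


grid = OccupancyGrid()
grid.set_drivability(0, 4, 4, 1.0)  # ground floor: open lane
grid.set_drivability(1, 4, 4, 0.0)  # level above, same x/y: a pillar
print(grid.drivability(0, 4, 4), grid.drivability(1, 4, 4), grid.drivability(0, 0, 0))
```

A sparse mapping accepts negative indices, so the grid can extend in every direction around the vehicle without preallocating a fixed array.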

[0038] In various embodiments, the occupancy grid contains drivability values at each grid location that corresponds to a location in the surrounding environment. The drivability value at each location may be a probability that the location is drivable. For example, a sidewalk may be designated to have a zero drivability value while gravel has a 0.5 drivability value. The drivability value may be a normalized probability having a range between 0 and 1 and is based on the drivable space determined at 305. In some embodiments, each location of the grid includes a cost metric associated with the cost (or penalty/reward) of traversing through that location. The cost value of each grid location in the occupancy grid is based on the drivability value. The cost value may further depend on additional data such as preference data. For example, path preferences can be configured to avoid toll roads, carpool lanes, school zones, etc. In various embodiments, the path preference data can be learned via a machine learning model and is determined at 305 as part of drivable space. In some embodiments, the path preference data is configured by the user and/or an operator to optimize the path taken for navigation to the destination received at 301. For example, the path preferences can be optimized to improve safety, convenience, travel time, and/or comfort, among other goals. In various embodiments, the preferences are additional weights used to determine the cost value of each grid location.
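Per-cell costs of this kind can drive a standard shortest-path search. The sketch below uses Dijkstra's algorithm as a stand-in planner (the application does not name a search algorithm). The cost formula, the 0.2 blocking threshold, the 4.0 drivability penalty, and the tag names such as `"toll"` are hypothetical choices that show how drivability and preference weights could combine into one cost per cell.

```python
import heapq
from typing import Dict, List, Optional, Set, Tuple

Cell = Tuple[int, int]


def cell_cost(drivability: float, tags: Set[str],
              preference_weights: Dict[str, float],
              min_drivability: float = 0.2) -> Optional[float]:
    """Cost of entering a cell, or None if the cell is treated as blocked.

    Lower drivability means a higher cost; each tag on the cell (for
    example "toll" or "school_zone") adds its configured preference weight.
    """
    if drivability < min_drivability:
        return None
    cost = 1.0 + 4.0 * (1.0 - drivability)  # assumed drivability penalty
    cost += sum(preference_weights.get(t, 0.0) for t in tags)
    return cost


def cheapest_path(drivability: List[List[float]],
                  tags: Dict[Cell, Set[str]],
                  weights: Dict[str, float],
                  start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Dijkstra's algorithm over a 4-connected grid of cell costs."""
    rows, cols = len(drivability), len(drivability[0])
    best: Dict[Cell, float] = {start: 0.0}
    prev: Dict[Cell, Cell] = {}
    heap = [(0.0, start)]
    while heap:
        d, cur = heapq.heappop(heap)
        if cur == goal:
            path = [cur]
            while path[-1] != start:
                path.append(prev[path[-1]])
            return path[::-1]
        if d > best[cur]:
            continue  # stale queue entry
        r, c = cur
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols):
                continue
            step = cell_cost(drivability[nxt[0]][nxt[1]],
                             tags.get(nxt, set()), weights)
            if step is None:
                continue  # blocked cell, e.g. a sidewalk
            nd = d + step
            if nd < best.get(nxt, float("inf")):
                best[nxt] = nd
                prev[nxt] = cur
                heapq.heappush(heap, (nd, nxt))
    return None


# A toll cell between start and goal is avoided once it carries a weight.
open_lot = [[1.0] * 3 for _ in range(3)]
print(cheapest_path(open_lot, {(0, 1): {"toll"}}, {"toll": 10.0}, (0, 0), (0, 2)))
```

With the toll weight, the two-step direct route costs 12 while the four-step detour through row 1 costs 4, so the detour is returned.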

[0039] In some embodiments, the occupancy grid is updated with auxiliary data such as additional sensor data. For example, ultrasonic sensor data capturing nearby objects is used to update the occupancy grid. Other sensor data such as lidar, radar, audio, etc. may be used as well. In various embodiments, an annotated map may be used in part to generate the occupancy grid. For example, roads and their properties (speed limit, lanes, etc.) can be used to augment the vision data for generating the occupancy grid. As another example, occupancy data from other vehicles can be used to update the occupancy grid. For example, a neighboring vehicle with similarly equipped functionality can share sensor data and/or occupancy grid results.
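A simple way to fold auxiliary sources into the grid is a conservative rule: a cell is never considered more drivable than the most cautious source reports. This is a sketch under assumptions: grids are sparse dictionaries of drivability probabilities, ultrasonic detections arrive already mapped to grid cells, and min-fusion is an illustrative choice rather than the method described in the application.

```python
from typing import Dict, Iterable, Tuple

Cell = Tuple[int, int]
Grid = Dict[Cell, float]  # cell -> drivability probability in [0, 1]


def fuse_occupancy(vision: Grid,
                   ultrasonic_hits: Iterable[Cell],
                   shared: Iterable[Grid] = ()) -> Grid:
    """Conservatively fuse auxiliary data into a vision-based grid.

    - For grids shared by neighboring vehicles, each cell keeps the
      lowest (most cautious) drivability reported by any source.
    - Cells where an ultrasonic sensor reports an object become 0.0.
    """
    fused = dict(vision)
    for other in shared:
        for cell, p in other.items():
            fused[cell] = min(p, fused.get(cell, p))
    for cell in ultrasonic_hits:
        fused[cell] = 0.0
    return fused


vision = {(0, 0): 1.0, (0, 1): 0.9, (0, 2): 0.8}
neighbor = {(0, 1): 0.3, (0, 3): 0.7}
fused = fuse_occupancy(vision, ultrasonic_hits=[(0, 2)], shared=[neighbor])
print(fused)  # {(0, 0): 1.0, (0, 1): 0.3, (0, 2): 0.0, (0, 3): 0.7}
```

Min-fusion favors safety over reachability; a probabilistic update such as log-odds accumulation would be a less conservative alternative.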

[0040] In some embodiments, the occupancy data is initialized with the last generated occupancy grid. For example, the last occupancy grid is saved when a vehicle is no longer capturing new data and/or is parked or turned off. When the occupancy grid is needed, for example, when a vehicle is summoned, the last generated occupancy grid is loaded and used as an initial occupancy grid. This optimization significantly improves the accuracy of the
